Rooted in 85 years of research

Research by Schmidt and Hunter has shaped our understanding of selection methods, showing that tests predict job performance better than traditional indicators. Their analysis of decades of studies provides strong evidence that structured testing is both more efficient and more predictive than conventional methods such as unstructured interviews and resume screening, and it has changed how employers approach talent acquisition and evaluation.

GMA and work sample tests have the highest validity

In the realm of hiring, the combination of General Mental Ability (GMA) tests and work sample tests stands out as a robust predictor of job performance. The data underscores a compelling narrative: GMA tests, with a validity of .51, offer a broad measure of a candidate's cognitive abilities, reflecting their capacity to learn, adapt, and solve problems. When paired with work sample tests, which have a validity of .54, employers gain a direct observation of a candidate's competencies in action, providing a realistic preview of their potential job performance.

Together, these measures eclipse traditional hiring practices, which often rely on less predictive factors like unstructured interviews and years of education. By focusing on what truly matters—actual skills and cognitive ability—employers using GMA and work sample tests can make more informed, evidence-based hiring decisions.

Multi-measure Tests Outperform Traditional Interviews in Predictive Power

Simple assessments, such as GMA tests with a validity of .51, outshine traditional interviews in forecasting job performance. Unlike unstructured interviews, which hold a lower validity of .38, these streamlined tests cut through the noise, offering a clear and quantifiable measure of a candidate's problem-solving prowess and cognitive capacity. By leveraging such objective assessments, hiring processes become more efficient and predictive, ensuring that talent selection is based on concrete data rather than subjective impressions.

The Predictive Precision of Assessments Over Experience and Education

The numbers point to a clear conclusion for talent acquisition: assessment tests such as GMA and work sample tests, with validity scores of .51 and .54 respectively, outperform traditional resume screening. These tests offer not only higher predictive validity but also reliability, which can be quantified with measures like Cronbach's alpha. Experience and educational qualifications, once the bedrocks of candidate evaluation, show much lower validity at .18 and .10 respectively. This gap supports a move from relying on a resume's narrative to a data-driven approach that prioritizes cognitive aptitude and practical skills. With these assessment tests, employers get a clearer view of how a candidate is likely to perform on the job and can base decisions on predictive accuracy rather than on historical achievements alone.
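As a rough illustration of the reliability measure mentioned above, Cronbach's alpha can be computed from per-question scores. The sketch below uses the standard formula, alpha = k/(k-1) * (1 - sum of item variances / variance of total scores). The function name `cronbach_alpha` and the sample data are assumptions made for this example and do not describe Adaface's actual scoring pipeline.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a score matrix.

    rows: one list per candidate, each holding that candidate's score
    on every question (all rows must have the same length).
    """
    k = len(rows[0])
    if k < 2 or len(rows) < 2:
        raise ValueError("need at least 2 questions and 2 candidates")

    # Variance of each question's scores across candidates.
    item_variances = [variance(column) for column in zip(*rows)]

    # Variance of each candidate's total score.
    total_variance = variance([sum(row) for row in rows])
    if total_variance == 0:
        raise ValueError("total scores have zero variance")

    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical data: 4 candidates, 3 questions scored 0 or 1.
sample = [
    [1, 1, 1],
    [0, 0, 0],
    [1, 1, 1],
    [0, 0, 0],
]
alpha = cronbach_alpha(sample)
```

In this made-up sample every question agrees perfectly with the others, so alpha comes out at its maximum of 1. Real assessments land lower, and values closer to 1 indicate that the questions measure the same underlying skill more consistently.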

Bringing the research into 2024

Over the past six years, we have built on the research of Schmidt and Hunter to develop valid and reliable tests. Adaface assessments measure the on-the-job skills of candidates, giving employers a robust tool for screening potential hires.

No puzzles. No niche algorithms.

Traditional assessment tools screen candidates with trick questions and puzzles, which frustrates candidates who have to sit through assessments that are irrelevant to the job.

The main reason we started Adaface is that traditional pre-employment assessment platforms are not a fair way for companies to evaluate candidates. At Adaface, our mission is to help companies find great candidates by assessing on-the-job skills required for a role.

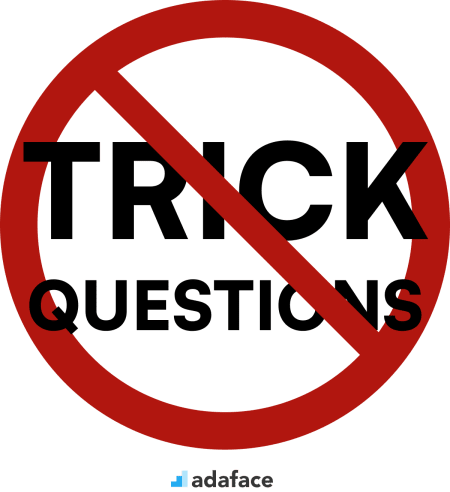

Optimal score distribution

In an optimal scenario, a valid and reliable skills assessment should yield a score distribution that closely follows a normal, or Gaussian, distribution, commonly known as a bell curve. This distribution is characterized by a few key features:

Central Peak: The majority of scores cluster around the mean, indicating an average skill level for most candidates.

Symmetry: The distribution is symmetrical around the mean. This symmetry suggests an equal representation of scores on both sides, meaning there are roughly as many candidates scoring above the average as there are below it.

Tails: The curve extends with tails on both ends, indicating fewer candidates with exceptionally high or low scores.

Such a distribution indicates a well-calibrated assessment that is neither too easy nor too hard. It also demonstrates the test's capacity to differentiate between various skill levels among candidates.
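One simple way to check the symmetry property described above is to compute the skewness of the observed scores. A value near 0 suggests a symmetric distribution, a clearly positive value suggests the test was too hard (scores bunch at the low end), and a clearly negative value suggests it was too easy. The following is a minimal sketch using only the Python standard library. The function name `skewness` and the sample scores are hypothetical.

```python
def skewness(scores):
    """Population skewness: third central moment / (second central moment ** 1.5)."""
    n = len(scores)
    if n < 2:
        raise ValueError("need at least 2 scores")
    mean = sum(scores) / n
    m2 = sum((x - mean) ** 2 for x in scores) / n
    m3 = sum((x - mean) ** 3 for x in scores) / n
    if m2 == 0:
        raise ValueError("all scores are identical")
    return m3 / m2 ** 1.5


# Hypothetical score sets (percent correct).
balanced = [30, 40, 50, 60, 70]   # symmetric around 50
too_hard = [10, 10, 10, 20, 100]  # most candidates score low

balanced_skew = skewness(balanced)
too_hard_skew = skewness(too_hard)
```

Skewness only captures symmetry. A fuller check would also look at the spread of scores and the weight of the tails, for example with a histogram.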

40 min tests for high completion rates

Studies have shown that tests that take 40 minutes or less have a 75% completion rate. By comparison, tests that take between 41 minutes and an hour have only a 66% completion rate. By limiting tests to 40 minutes or less, you can reduce the number of candidates who fail to complete the process because of time constraints.

Designed for elimination, not selection

Adaface's pre-employment screening test adopts an elimination-focused design, a cornerstone of its effectiveness as a screening instrument. This methodology primarily filters out candidates lacking essential competencies and skills pertinent to the job role. By emphasizing elimination over selection, the test streamlines the candidate pool, allowing only those meeting the stringent criteria to advance. This approach not only refines the recruitment process but also aligns with best practices in talent assessment, ensuring a more targeted and efficient selection of potential hires.

Multi-measure tests for technical and non-technical roles

Adaface's multi-measure tests, tailored for both technical and non-technical roles, are crafted to evaluate a wide range of skills and competencies, encompassing domain-specific knowledge, problem-solving abilities, and soft skills such as communication. By integrating various types of assessments (including scenario-based questions, technical exercises, and problem solving), Adaface provides a holistic view of each candidate's capabilities. This comprehensive approach gives a more accurate assessment of the candidate's fit for the role and matches the multifaceted nature of modern job requirements, which makes these tests effective in identifying suitable candidates for diverse roles.

Easier programming questions provide more data than harder or niche questions

Simpler questions can effectively gauge a candidate's fundamental coding skills and problem-solving abilities, providing a clear baseline of competence. By focusing on these foundational aspects, Adaface's tests can efficiently differentiate between candidates who possess the essential technical skills and those who do not. This design philosophy ensures that the screening process is not only accessible but also relevant and practical, allowing for a more accurate assessment of a candidate's potential performance in real-world job scenarios. Consequently, the tests are tailored to capture the core competencies required for the role, making them more effective for initial screening purposes.

Adaface questions: the heart of the perfect tests

Adaface questions are created through a systematic process led by subject matter experts who identify essential skills for specific roles and rank topics by importance. Questions are designed to evaluate practical understanding and critical thinking, adhering to criteria of clarity, fairness, and lack of bias. Following design, questions undergo peer review, error checks for language accuracy, and cultural sensitivity. They are then beta tested to ensure they effectively discriminate between candidates' competencies. Only questions that successfully differentiate candidate abilities during beta testing are incorporated into the live assessments. Our questions are designed keeping the Equal Employment Opportunity Commission (EEOC) guidelines in mind to remove unconscious bias from the hiring process.
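The page does not say which statistic is used to decide whether a question discriminates well during beta testing. One common approach from classical test theory is the upper-lower discrimination index: rank candidates by total score, then compare how often the top group and the bottom group answered the question correctly. The sketch below illustrates that idea only. The function name `discrimination_index`, the 27% group size, and the sample data are all assumptions, not Adaface's documented method.

```python
def discrimination_index(total_scores, item_correct, fraction=0.27):
    """Upper-lower discrimination index for one question.

    total_scores: each candidate's overall test score.
    item_correct: 1 if that candidate answered this question correctly, else 0.
    fraction:     share of candidates placed in the top and bottom groups
                  (27% is a conventional choice, assumed here).
    Returns a value in [-1, 1]; higher means the question separates
    strong and weak candidates more clearly.
    """
    n = len(total_scores)
    if n != len(item_correct) or n < 2:
        raise ValueError("need matching lists with at least 2 candidates")

    # Cap the group size so the top and bottom groups never overlap.
    group_size = min(max(1, round(n * fraction)), n // 2)

    # Candidate indices ordered from lowest to highest total score.
    order = sorted(range(n), key=lambda i: total_scores[i])
    lower, upper = order[:group_size], order[-group_size:]

    p_upper = sum(item_correct[i] for i in upper) / group_size
    p_lower = sum(item_correct[i] for i in lower) / group_size
    return p_upper - p_lower


# Hypothetical beta-test data for 10 candidates.
totals = [10, 20, 30, 40, 50, 60, 70, 80, 90, 100]
answered_correctly = [0, 0, 0, 0, 1, 0, 1, 1, 1, 1]

d = discrimination_index(totals, answered_correctly)
```

A question that strong and weak candidates answer correctly at the same rate scores close to 0 and would be a candidate for removal under this kind of rule. A negative value means weaker candidates did better on it, which usually signals a flawed question.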

A glimpse into the process

Adaface tests are trusted by recruitment teams globally