Hiring the right data modeler is crucial for organizations dealing with complex data structures and relationships. Asking the right interview questions helps you assess candidates' skills, experience, and problem-solving abilities effectively.

This blog post provides a comprehensive list of data modeling interview questions categorized for different purposes and skill levels. We cover introductory questions, junior-level assessments, conceptual queries, and design pattern discussions to help you evaluate candidates thoroughly.

By using these questions, you can identify top talent and make informed hiring decisions. Consider complementing your interviews with a data modeling skills assessment to get a complete picture of candidates' abilities.

Table of contents

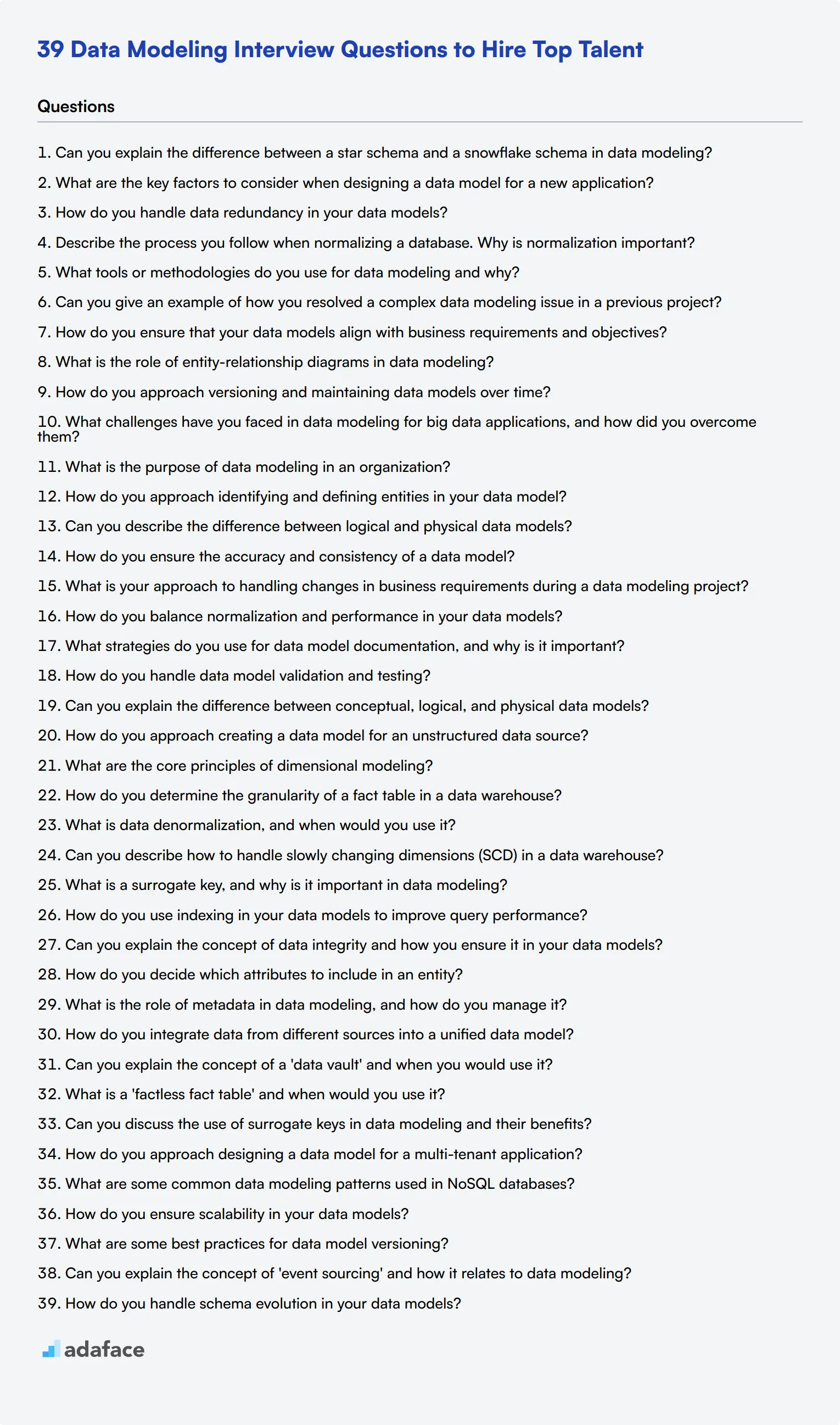

10 Data Modeling interview questions to initiate the interview

To effectively evaluate your candidates' understanding of data modeling concepts and practices, consider using this list of targeted questions. These inquiries will help you gauge their technical knowledge and problem-solving skills, ensuring you find the right fit for your data-related roles like data architect.

- Can you explain the difference between a star schema and a snowflake schema in data modeling?

- What are the key factors to consider when designing a data model for a new application?

- How do you handle data redundancy in your data models?

- Describe the process you follow when normalizing a database. Why is normalization important?

- What tools or methodologies do you use for data modeling and why?

- Can you give an example of how you resolved a complex data modeling issue in a previous project?

- How do you ensure that your data models align with business requirements and objectives?

- What is the role of entity-relationship diagrams in data modeling?

- How do you approach versioning and maintaining data models over time?

- What challenges have you faced in data modeling for big data applications, and how did you overcome them?

8 Data Modeling interview questions and answers to evaluate junior Data Modelers

To assess the capabilities of junior Data Modelers, this list of interview questions will help you gauge their fundamental understanding and practical knowledge. Use these questions during your interview process to identify candidates with the right skills and mindset for your data modeling needs.

1. What is the purpose of data modeling in an organization?

Data modeling serves as a blueprint for designing databases, ensuring that data is stored, managed, and used efficiently. It helps in structuring data according to business requirements and improves data quality by eliminating redundancy and ensuring consistency.

Look for candidates who can articulate the importance of data modeling in aligning with business goals and enhancing data governance. Follow up by asking for examples of how they have used data modeling in their work.

2. How do you approach identifying and defining entities in your data model?

Identifying and defining entities involves understanding the business requirements and the data to be stored. I start by discussing with stakeholders to gather requirements, then I identify the different objects or entities involved in the process. Each entity should represent a real-world object or concept with distinct attributes.

Ideal candidates will mention their methods for involving stakeholders and ensuring that the entities accurately reflect business needs. They should also be able to explain how they determine relationships between entities.

3. Can you describe the difference between logical and physical data models?

A logical data model outlines the structure of the data elements and their relationships without considering how they will be physically implemented in the database. It focuses on business requirements and data organization. A physical data model, on the other hand, translates the logical data model into a technical blueprint for implementing the database, including details like tables, columns, data types, and indexes.

Candidates should demonstrate an understanding of both models and their respective roles in the data modeling process. Look for explanations that highlight how logical models focus on the 'what' and physical models focus on the 'how.'

4. How do you ensure the accuracy and consistency of a data model?

Ensuring accuracy and consistency involves thorough validation and verification of the data model. This includes checking for redundancy, ensuring that relationships are correctly defined, and validating the model against business requirements. Regular reviews and updates based on feedback from stakeholders also play a crucial role.

Strong candidates will mention specific techniques they use for validation, such as peer reviews or using modeling tools. They should also show an understanding of the importance of stakeholder communication in maintaining model accuracy.

5. What is your approach to handling changes in business requirements during a data modeling project?

Changes in business requirements are inevitable, and my approach involves maintaining flexibility and open communication throughout the project. I use an iterative model development process, which allows for regular reviews and adjustments. Effective documentation and stakeholder involvement are key to managing changes smoothly.

Look for candidates who exhibit adaptability and have strategies for incorporating feedback without compromising the integrity of the data model. Ask for examples of how they have handled changes in past projects.

6. How do you balance normalization and performance in your data models?

Balancing normalization and performance is a critical aspect of data modeling. While normalization reduces redundancy and improves data integrity, it can sometimes impact performance. I aim for a level of normalization that ensures data integrity but also consider denormalization in performance-critical areas, especially for read-heavy systems.

Candidates should demonstrate an understanding of the trade-offs between normalization and performance. Look for specific strategies or examples from their experience where they had to make such trade-offs.

7. What strategies do you use for data model documentation, and why is it important?

Data model documentation is essential for ensuring that everyone involved in the project understands the data structures and their intended use. I use detailed entity-relationship diagrams, data dictionaries, and annotations within data modeling tools. Regular updates and clear explanations help maintain the relevance and usability of the documentation.

Ideal responses should emphasize the importance of documentation in facilitating communication, training, and maintenance. Candidates should also mention specific tools or methods they use for documentation.

8. How do you handle data model validation and testing?

Data model validation and testing involve multiple steps, including checking for logical consistency, ensuring that the model meets business requirements, and running test queries to verify data integrity and performance. I also involve stakeholders in the validation process to get their feedback and make necessary adjustments.

Look for candidates who have a structured approach to validation and testing. They should mention techniques such as test cases, peer reviews, or validation tools that they use to ensure the model is robust and meets business needs.

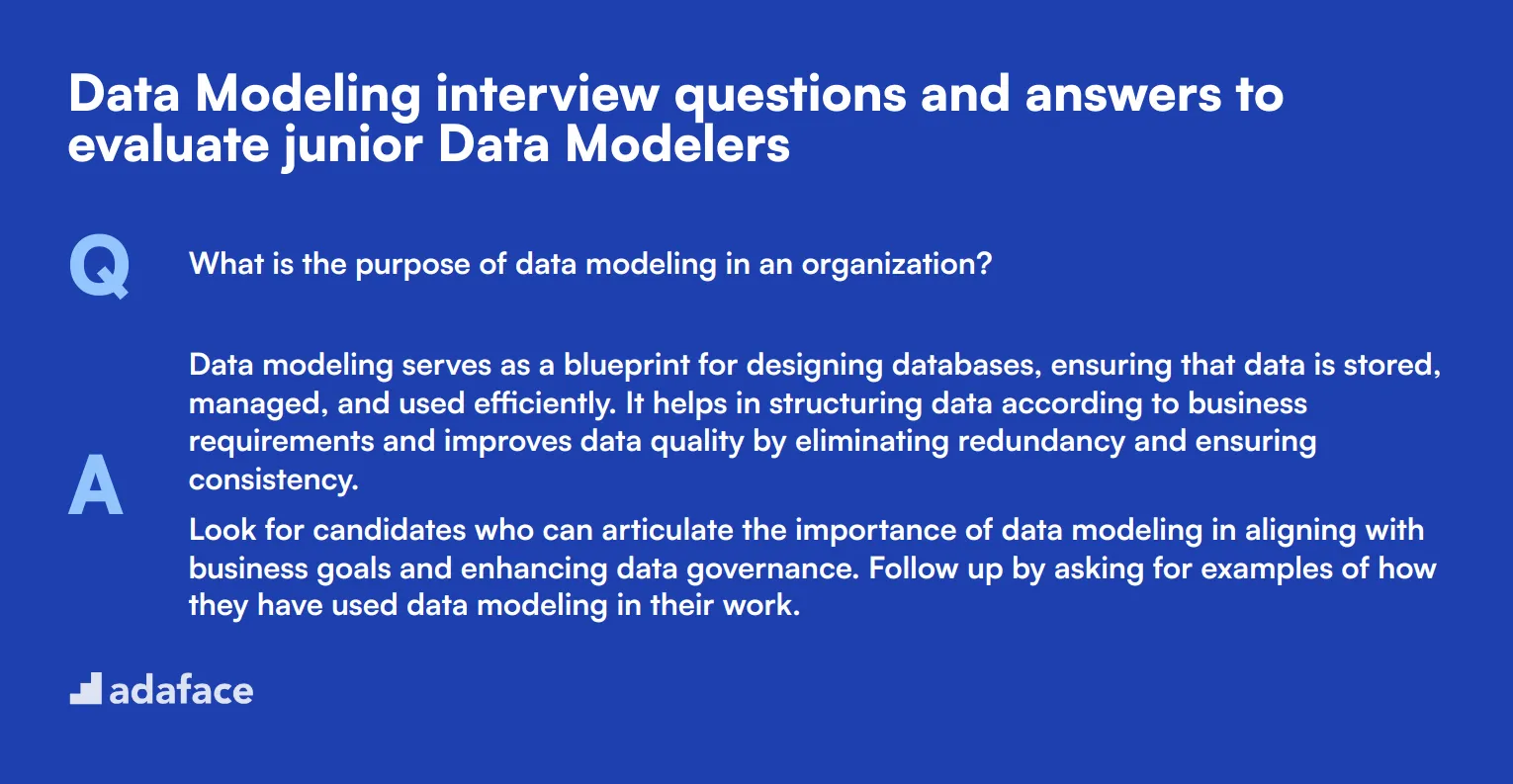

12 Data Modeling interview questions about concepts and methodologies

To assess whether candidates have a solid grasp of data modeling concepts and methodologies, consider asking some of these focused interview questions. This list will help you gauge their technical proficiency and practical experience, ensuring you select the most qualified data modeler for your team. For more detailed job descriptions, check out our resources.

- Can you explain the difference between conceptual, logical, and physical data models?

- How do you approach creating a data model for an unstructured data source?

- What are the core principles of dimensional modeling?

- How do you determine the granularity of a fact table in a data warehouse?

- What is data denormalization, and when would you use it?

- Can you describe how to handle slowly changing dimensions (SCD) in a data warehouse?

- What is a surrogate key, and why is it important in data modeling?

- How do you use indexing in your data models to improve query performance?

- Can you explain the concept of data integrity and how you ensure it in your data models?

- How do you decide which attributes to include in an entity?

- What is the role of metadata in data modeling, and how do you manage it?

- How do you integrate data from different sources into a unified data model?

9 Data Modeling interview questions and answers related to design patterns

To ensure your candidates have a solid grasp of data modeling, particularly with design patterns, consider these interview questions. These questions will help you gauge their understanding of crucial design concepts and their ability to apply them in real-world scenarios.

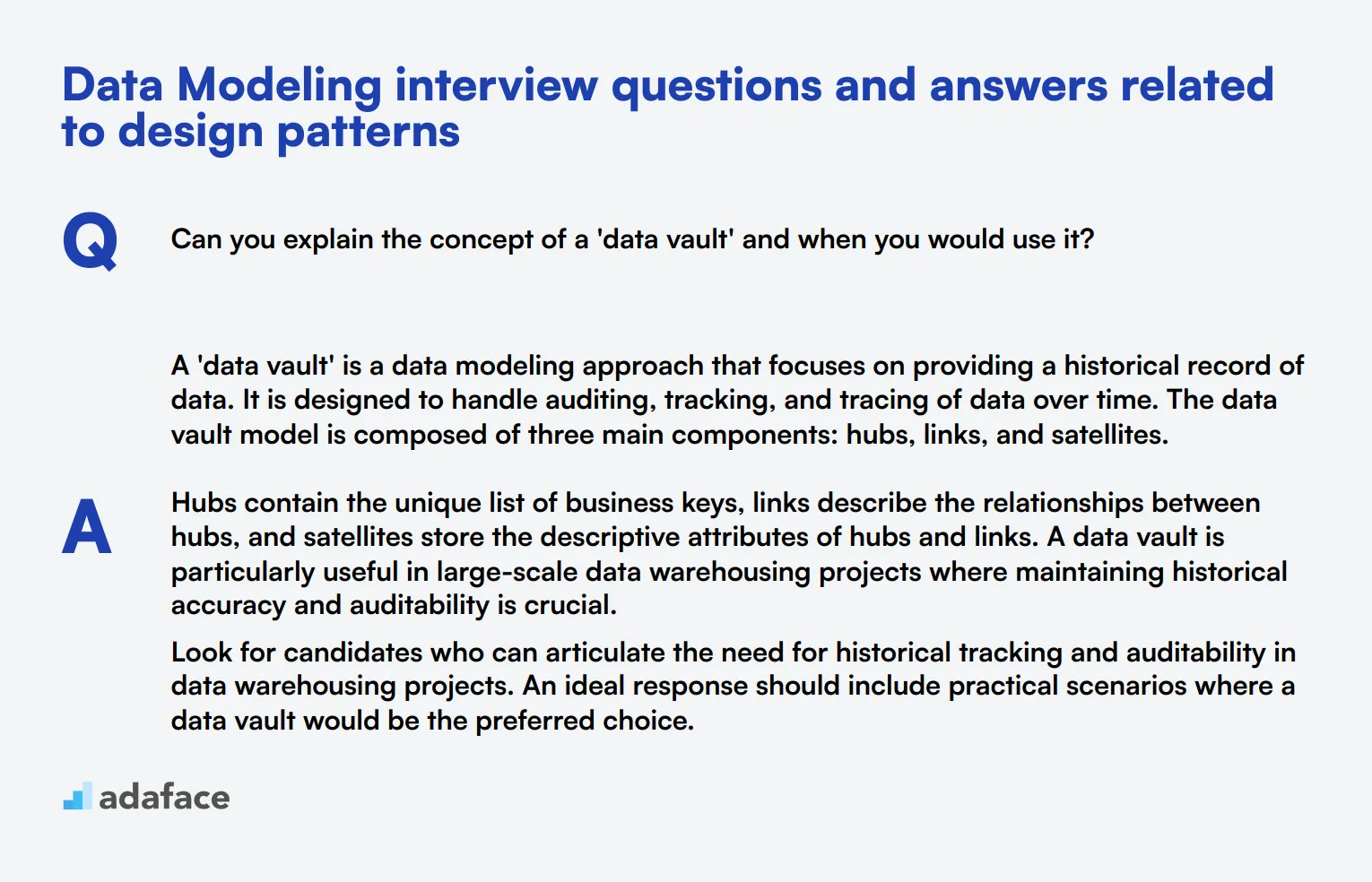

1. Can you explain the concept of a 'data vault' and when you would use it?

A 'data vault' is a data modeling approach that focuses on providing a historical record of data. It is designed to handle auditing, tracking, and tracing of data over time. The data vault model is composed of three main components: hubs, links, and satellites.

Hubs contain the unique list of business keys, links describe the relationships between hubs, and satellites store the descriptive attributes of hubs and links. A data vault is particularly useful in large-scale data warehousing projects where maintaining historical accuracy and auditability is crucial.

Look for candidates who can articulate the need for historical tracking and auditability in data warehousing projects. An ideal response should include practical scenarios where a data vault would be the preferred choice.

2. What is a 'factless fact table' and when would you use it?

A 'factless fact table' is a fact table that does not have any measures or facts. It typically captures events or activities, such as student attendance or employee sick days, where the mere occurrence of an event is what is important.

Factless fact tables are used when you need to track the occurrence of events and their related dimensions, without any quantitative data associated with the event.

Candidates should demonstrate an understanding of how these tables help in tracking events and provide examples of scenarios where they have used or would use a factless fact table.

3. Can you discuss the use of surrogate keys in data modeling and their benefits?

Surrogate keys are artificial keys used as unique identifiers for entities in a data model. They are typically integer values that are automatically incremented.

The benefits of using surrogate keys include: ensuring uniqueness, decoupling from business logic, and improving performance. Surrogate keys also make it easier to manage changes in business keys without affecting the relationships in your data model.

A strong candidate should explain the advantages of surrogate keys in terms of performance and maintenance, and provide examples of how they have implemented them in their previous projects.

4. How do you approach designing a data model for a multi-tenant application?

Designing a data model for a multi-tenant application involves ensuring data isolation and security between tenants while optimizing performance. There are generally three main approaches: shared database with shared schema, shared database with separate schemas, and separate databases for each tenant.

Each approach has its pros and cons. For example, a shared database with a shared schema is cost-effective but may pose data security risks, while separate databases offer the highest data isolation but can be costly and complex to manage.

Look for candidates who can discuss these approaches, their trade-offs, and provide examples of how they have addressed multi-tenancy in their past projects.

5. What are some common data modeling patterns used in NoSQL databases?

Common data modeling patterns for NoSQL databases include key-value, document, column-family, and graph models. Each of these patterns is suited to different types of data and use cases.

For example, key-value models are simple and fast for lookups, document models are flexible and can store complex nested data, column-family models are efficient for read-heavy workloads, and graph models excel at handling highly interconnected data.

Candidates should be able to explain these patterns and discuss scenarios where each would be appropriate. They should also demonstrate an understanding of the trade-offs involved in choosing a particular pattern.

6. How do you ensure scalability in your data models?

Ensuring scalability in data models involves designing for both vertical and horizontal scalability. This can be achieved through strategies such as sharding, partitioning, and indexing.

Sharding involves splitting a large dataset into smaller, more manageable pieces, while partitioning helps distribute data across different storage units. Indexing improves query performance by optimizing data retrieval.

An ideal candidate should discuss these strategies and provide examples of how they have implemented them in their previous projects. Look for practical insights and real-world experience in scaling data models.

7. What are some best practices for data model versioning?

Best practices for data model versioning include maintaining a clear version history, using version control systems, and documenting changes comprehensively.

It's important to ensure backward compatibility where possible and to communicate changes clearly to all stakeholders. Automated testing can also help in identifying issues early in the versioning process.

Candidates should demonstrate an understanding of these best practices and provide examples of how they have managed data model versioning in their projects. Look for a systematic approach to versioning that minimizes disruptions.

8. Can you explain the concept of 'event sourcing' and how it relates to data modeling?

Event sourcing is a pattern where state changes are logged as a sequence of events. Instead of storing the current state directly, the system reconstructs the state by replaying these events.

This approach ensures that all changes are auditable and that the system can be easily restored to any previous state. Event sourcing is particularly useful in systems where maintaining a detailed history of changes is crucial.

Look for candidates who can explain the benefits of event sourcing, such as improved auditability and flexibility. An ideal response should include practical examples of where they have applied event sourcing in their data models.

9. How do you handle schema evolution in your data models?

Handling schema evolution involves planning for changes to the data model over time. This can be achieved through techniques such as backward and forward compatibility, versioning, and using flexible data types.

Backward compatibility ensures that older versions of the application can still work with the new schema, while forward compatibility allows newer versions to handle old data. Using flexible data types like JSON can also help in accommodating changes without breaking the schema.

Candidates should discuss their approach to schema evolution and provide examples of how they have managed schema changes in their projects. Look for a proactive approach that minimizes disruptions and maintains data integrity.

Which Data Modeling skills should you evaluate during the interview phase?

Conducting a comprehensive interview for a Data Modeler can be challenging. While it's impossible to assess every aspect of a candidate's capabilities in a single interview, there are certain core skills that are essential to evaluate for this role.

Normalization

Normalization is a key concept in data modeling that involves organizing data to reduce redundancy and improve data integrity. It's important because it ensures that the database is efficient and scalable.

You can use an assessment test like the Data Modeling Test that includes MCQs on normalization to filter candidates who have a strong grasp of this concept.

You can also ask targeted interview questions specifically to judge the candidate's understanding of normalization.

Can you explain the different normal forms and provide an example for each?

Look for a candidate who can clearly explain the different normal forms (1NF, 2NF, 3NF, etc.) and provide relevant examples. This will demonstrate their depth of knowledge and practical understanding.

Entity-Relationship Modeling

Entity-Relationship (ER) modeling is foundational for representing data objects and their relationships. Understanding ER modeling is crucial for building a logical structure of the database.

An assessment test such as the Data Modeling Test with MCQs focused on ER diagrams can effectively evaluate this skill.

To judge the candidate's proficiency in ER modeling, you can ask targeted questions during the interview.

Can you design an ER diagram to model a university's database system, including entities like Students, Courses, and Enrollments?

The candidate should be able to create a clear and logical ER diagram that includes all necessary entities and their relationships. This will show their ability to visualize and structure data effectively.

SQL Proficiency

Proficiency in SQL is essential for implementing and querying databases based on data models. It enables Data Modelers to manipulate and retrieve data efficiently.

You can use a specialized SQL Coding Test like the SQL Coding Test to assess a candidate’s practical SQL skills.

In the interview, you can ask direct questions to evaluate the candidate's SQL proficiency.

How would you write a SQL query to find all students who have enrolled in more than three courses?

Look for a candidate who can write a clear and correct SQL query, demonstrating their ability to handle complex database queries and their proficiency in SQL.

Effective Strategies for Utilizing Data Modeling Interview Questions

As you prepare to apply the insights from this guide, here are some strategic tips to enhance the effectiveness of your interviewing process for data modelers.

1. Incorporate Skill Tests Before Interviews

Skill tests are a powerful tool to assess candidates before progressing to the interview stage. By evaluating their technical abilities early, you can ensure that only the most capable individuals proceed to the costly and time-consuming interview phase.

For data modeling roles, consider incorporating tests like the Data Modeling Test, SAP PowerDesigner Test, or the UML Online Test. Each of these assessments can help you gauge the practical skills and theoretical knowledge of your candidates.

Utilizing these tests streamlines the interviewing process by focusing on top contenders and providing a clear benchmark of the required skills. This approach not only saves time but also enhances the overall quality of your hiring decisions.

2. Strategically Select and Compile Interview Questions

Interview time is limited, making it imperative to choose questions that accurately assess the crucial skills needed for the role. Focus on selecting a balanced mix of questions that evaluate both technical prowess and problem-solving capabilities.

Explore other relevant interview question resources to broaden your assessment criteria. For example, questions from the Entity Framework interview questions or System Design interview topics can complement your data modeling interviews by evaluating adjacent skills that contribute to a candidate's overall expertise.

Carefully compiled questions ensure that you effectively evaluate important aspects of a candidate’s abilities, maximizing the success of your hiring process.

3. Emphasize the Importance of Follow-Up Questions

Relying solely on standard interview questions may not always reveal a candidate's full potential or fit for the role. Follow-up questions are essential for digging deeper into a candidate's responses and assessing their true understanding.

For instance, if a candidate describes their approach to normalization in data modeling, a good follow-up might be, 'Can you explain a situation where you chose not to use normalization and why?' This question helps elucidate their decision-making process and adaptability to different scenarios.

Hire skilled Data Modelers with targeted interview questions and assessments

When hiring someone with Data Modeling skills, it's important to accurately assess their abilities. Using skill tests is an effective way to do this. The Data Modeling Test can help you evaluate candidates' proficiency in this area.

After using the test to shortlist top applicants, you can invite them for interviews. To streamline your hiring process and find the best Data Modeling talent, consider exploring our Online Assessment Platform for a complete solution.

Data Modeling Skills Test

Download Data Modeling interview questions template in multiple formats

Data Modeling Interview Questions FAQs

A good data modeler should have strong analytical skills, knowledge of database management systems, understanding of business processes, and proficiency in data modeling tools and techniques.

Ask for specific examples of projects they've worked on, challenges they've faced, and solutions they've implemented. You can also present real-world scenarios and ask how they would approach them.

Key concepts include entity-relationship modeling, normalization, data warehousing concepts, dimensional modeling, and understanding of various data modeling methodologies like ER, UML, and star schema.

While tool knowledge is useful, it's more important to assess the candidate's understanding of core data modeling principles. Tools can be learned, but a solid grasp of fundamentals is harder to teach.

40 min skill tests.

No trick questions.

Accurate shortlisting.

We make it easy for you to find the best candidates in your pipeline with a 40 min skills test.

Try for freeRelated posts

Free resources