Hiring the right TensorFlow developer is critical as it ensures your machine learning projects are built on a solid foundation. Asking insightful interview questions can help you identify the best candidates who possess both the technical skills and problem-solving abilities needed for the role.

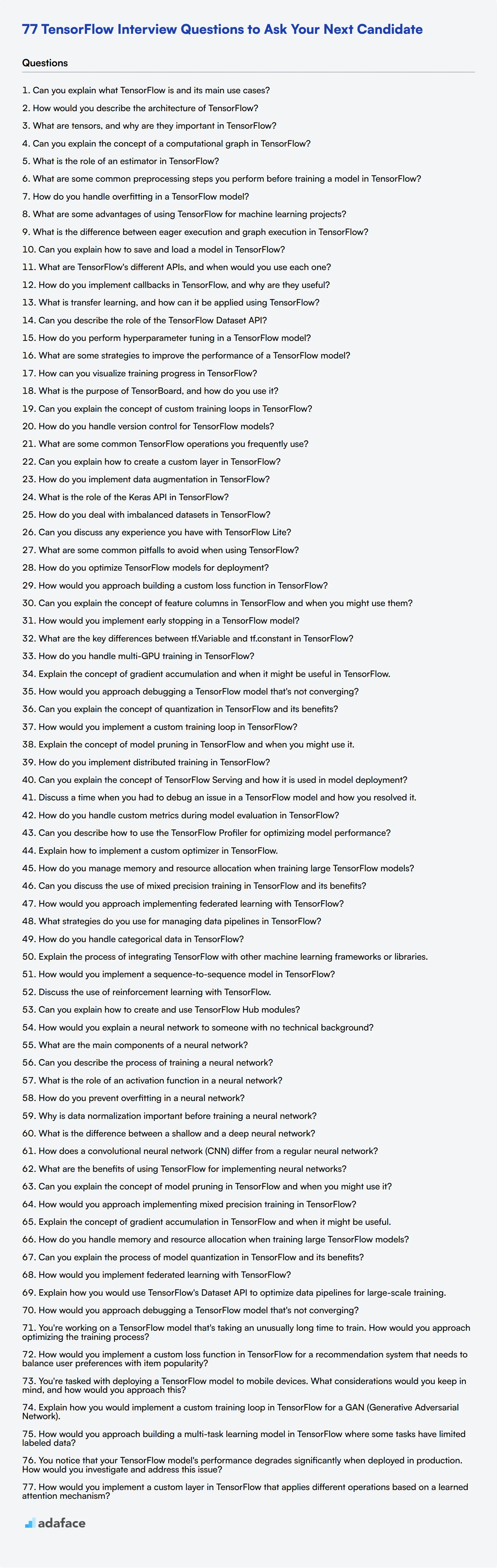

This blog post covers a comprehensive list of TensorFlow interview questions tailored for various experience levels, from junior to senior developers. It also includes questions related to neural networks, model optimization, and situational challenges to help you gauge a candidate's depth of knowledge.

By using these questions, you can streamline your interview process and make informed hiring decisions. Additionally, consider complementing these questions with our machine learning online test to further assess candidate skills before the interview stage.


8 general TensorFlow interview questions and answers

To determine whether your applicants have the right understanding and skills to use TensorFlow effectively, ask them some of these 8 general TensorFlow interview questions. This list will help you gauge their practical knowledge and problem-solving abilities, ensuring you hire the best fit for your team.

1. Can you explain what TensorFlow is and its main use cases?

TensorFlow is an open-source machine learning framework developed by Google. It represents computations as data flow graphs: structures that describe how data moves through a series of processing nodes. Each node represents a mathematical operation, and each edge carries a multidimensional data array (a tensor) between operations. In TensorFlow 2.x, operations run eagerly by default, and graphs are built when code is wrapped in tf.function.

The main use cases for TensorFlow include building machine learning and deep learning models, natural language processing, image and video recognition, and more. It is versatile and can be used in various industries like healthcare, finance, and automotive for tasks such as predictive analytics and autonomous driving.

Look for candidates who can clearly articulate what TensorFlow is and provide specific examples of its applications. This indicates a strong foundational understanding.

2. How would you describe the architecture of TensorFlow?

A practical way to describe TensorFlow's architecture is through the workflow it supports, which has three main parts: pre-processing the data, creating the model, and training and evaluating the model.

- Pre-processing: This involves preparing the data for model training. It's a crucial step to ensure the data is in the correct format.

- Model creation: Using TensorFlow's layers and operations, you can create a computational graph that represents your model.

- Training and evaluation: This part involves feeding the prepared data into the model, adjusting the model's parameters to minimize the error in its predictions, and measuring performance on held-out data.
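The three stages can be illustrated with a minimal Keras sketch. The synthetic dataset, layer sizes, and epoch count are arbitrary assumptions chosen only to keep the example small; a real project would substitute its own data and architecture.

```python
import numpy as np
import tensorflow as tf

# 1. Pre-processing: synthetic regression data fed through a tf.data pipeline.
rng = np.random.default_rng(0)
features = rng.normal(size=(256, 4)).astype("float32")
targets = features.sum(axis=1, keepdims=True)  # simple linear target
dataset = (
    tf.data.Dataset.from_tensor_slices((features, targets))
    .shuffle(256, seed=0)
    .batch(32)
)

# 2. Model creation: a small fully connected network built from Keras layers.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(16, activation="relu"),
    tf.keras.layers.Dense(1),
])
model.compile(optimizer="adam", loss="mse")

# 3. Training and evaluation: fit on the pipeline, then measure the loss.
history = model.fit(dataset, epochs=3, verbose=0)
eval_loss = model.evaluate(dataset, verbose=0)
print(history.history["loss"], eval_loss)
```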

Candidates should be able to explain these steps clearly and might describe how they have applied this architecture in previous projects. Their explanation should reflect a practical understanding of TensorFlow’s workflow.

3. What are tensors, and why are they important in TensorFlow?

Tensors are the core data structures in TensorFlow. They are multidimensional arrays used to represent the data being processed by the model. Tensors can range from simple scalars (single numbers) to complex multi-dimensional arrays (like matrices or higher-dimensional arrays).

Tensors are pivotal because they allow TensorFlow to handle large-scale computations efficiently. They enable the flexibility and scalability needed to build complex machine learning models. For instance, in image recognition, a tensor can represent an image as a 3D array of pixel values.
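A short sketch of tensors of increasing rank may help make this concrete. The shapes (for example 224x224 RGB images in batches of 32) are illustrative assumptions, not requirements.

```python
import tensorflow as tf

scalar = tf.constant(3.0)                 # rank 0: a single number
vector = tf.constant([1.0, 2.0, 3.0])     # rank 1: shape (3,)
matrix = tf.constant([[1, 2], [3, 4]])    # rank 2: shape (2, 2), int32
image = tf.zeros((224, 224, 3))           # rank 3: height, width, RGB channels
batch = tf.zeros((32, 224, 224, 3))       # rank 4: a batch of 32 images

for name, t in [("scalar", scalar), ("vector", vector), ("matrix", matrix),
                ("image", image), ("batch", batch)]:
    print(name, t.shape.rank, t.shape, t.dtype.name)
```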

Look for candidates who can describe tensors in simple terms and explain their importance in handling and processing data within TensorFlow. This shows they grasp fundamental concepts deeply.

4. Can you explain the concept of a computational graph in TensorFlow?

A computational graph in TensorFlow is a data structure that represents the mathematical operations and data flow in a machine learning model (TensorBoard can display it visually). It consists of nodes (operations) and edges (tensors). Each node performs a specific operation, like addition or multiplication, and the edges carry data between these operations.

The computational graph allows TensorFlow to optimize computations by parallelizing operations and managing memory efficiently. This makes it possible to handle large datasets and complex models more effectively.
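In TensorFlow 2.x, a graph is typically produced by decorating a Python function with tf.function. The sketch below (the function name and shapes are hypothetical) traces a small affine function and lists the operation nodes in the resulting graph.

```python
import tensorflow as tf

@tf.function
def affine(x, w, b):
    # Traced into a graph containing MatMul and AddV2 nodes.
    return tf.matmul(x, w) + b

x = tf.ones((2, 3))
w = tf.ones((3, 4))
b = tf.zeros((4,))

result = affine(x, w, b)
print(result.numpy())  # every entry is 3.0

# Inspect the traced graph: each operation is a node.
concrete = affine.get_concrete_function(x, w, b)
op_types = [op.type for op in concrete.graph.get_operations()]
print(op_types)
```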

Candidates should be able to explain the structure and purpose of computational graphs. Their understanding of how these graphs optimize and parallelize operations can indicate their proficiency with TensorFlow.

5. What is the role of an estimator in TensorFlow?

In TensorFlow, an estimator is a high-level API that simplifies the process of creating, training, and evaluating machine learning models. Estimators encapsulate the entire model, including the training and evaluation logic, which makes it easier to implement complex models with less code.

Estimators provide built-in training loops, evaluation metrics, and serve as the bridge between your data and the model. They handle the intricacies of model training, allowing developers to focus more on model design and less on boilerplate code.

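Look for candidates who can articulate the benefits of using estimators, such as ease of use and efficiency. Their response should highlight their familiarity with TensorFlow's high-level APIs, including the fact that Estimators are deprecated in TensorFlow 2.x and that Keras is the recommended high-level API for new code.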

6. What are some common preprocessing steps you perform before training a model in TensorFlow?

Common preprocessing steps in TensorFlow include data cleaning, normalization, and augmentation. Data cleaning involves handling missing values, removing duplicates, and correcting errors. Normalization scales the data to a standard range, which usually speeds up training and can improve model accuracy.

Data augmentation is particularly useful in image processing and involves techniques like rotation, flipping, and cropping to generate more training examples from the existing data. This makes the model more robust and better at generalizing to unseen data.
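As a sketch of flipping, rotation, and cropping in a tf.data pipeline, the example below uses core tf.image ops. The 32x32x3 image size, the 4-pixel padding, and the restriction to 90-degree rotations are assumptions made for simplicity; augmentation like this is normally applied to the training split only.

```python
import tensorflow as tf

def augment(image, label):
    """Random flip, 90-degree rotation, and crop for one 32x32x3 image."""
    image = tf.image.random_flip_left_right(image)
    k = tf.random.uniform([], minval=0, maxval=4, dtype=tf.int32)
    image = tf.image.rot90(image, k=k)                       # rotate by k * 90 degrees
    image = tf.image.resize_with_crop_or_pad(image, 36, 36)  # pad to 36x36
    image = tf.image.random_crop(image, size=[32, 32, 3])    # random 32x32 crop
    return image, label

images = tf.random.uniform((8, 32, 32, 3))
labels = tf.range(8)
train_ds = (
    tf.data.Dataset.from_tensor_slices((images, labels))
    .map(augment, num_parallel_calls=tf.data.AUTOTUNE)
    .batch(4)
)

batch_images, batch_labels = next(iter(train_ds))
print(batch_images.shape)  # (4, 32, 32, 3)
```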

Candidates should discuss specific preprocessing techniques they have used and explain why these steps are important. This will demonstrate their practical experience and attention to detail.

7. How do you handle overfitting in a TensorFlow model?

Overfitting occurs when a model performs well on training data but poorly on unseen test data. To handle overfitting, you can use techniques like regularization, dropout, and data augmentation. Regularization adds a penalty for large weights to the loss, discouraging overly complex models. Dropout randomly zeroes a fraction of a layer's outputs during training, preventing the model from becoming too reliant on specific paths.

Cross-validation does not prevent overfitting by itself, but splitting the data into multiple folds and training and evaluating on each gives a more reliable estimate of how well the model generalizes, which helps in choosing model complexity and hyperparameters. Early stopping, where training is halted once performance on a validation set stops improving, is also useful.
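A minimal sketch of L2 regularization and dropout in Keras follows; the layer sizes, the 1e-3 penalty, and the 0.5 dropout rate are illustrative assumptions. It also shows that dropout is active only when training=True.

```python
import numpy as np
import tensorflow as tf

model = tf.keras.Sequential([
    tf.keras.Input(shape=(10,)),
    # L2 regularization adds 1e-3 * sum(kernel ** 2) to the training loss.
    tf.keras.layers.Dense(32, activation="relu",
                          kernel_regularizer=tf.keras.regularizers.L2(1e-3)),
    # Dropout zeroes 50% of the activations, but only during training.
    tf.keras.layers.Dropout(0.5),
    tf.keras.layers.Dense(1),
])
model.compile(optimizer="adam", loss="mse")

# The regularization penalty is tracked separately from the data loss.
print("regularization terms:", len(model.losses))

# At inference dropout is a no-op; in training, survivors are scaled by 1 / (1 - rate).
dropout = tf.keras.layers.Dropout(0.5)
ones = tf.ones((1, 1000))
inference_out = dropout(ones, training=False).numpy()
training_out = dropout(ones, training=True).numpy()
print(np.unique(training_out))
```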

Candidates should mention multiple strategies and provide examples of how they have applied these techniques in their projects. This shows they understand the practical implications of overfitting and know how to mitigate it.

8. What are some advantages of using TensorFlow for machine learning projects?

TensorFlow offers several advantages for machine learning projects, including flexibility, scalability, and a rich ecosystem. Its flexibility allows developers to build and experiment with complex models, while its scalability ensures that these models can be deployed on various platforms, from mobile devices to distributed computing environments.

TensorFlow's ecosystem includes a plethora of tools and libraries like TensorFlow Lite for mobile and embedded devices, and TensorFlow Extended (TFX) for end-to-end machine learning pipelines. The extensive community support and comprehensive documentation also make it easier to troubleshoot and innovate.

Look for candidates who can highlight specific advantages and relate them to their past projects. Their ability to connect these benefits to practical use cases indicates a strong understanding of TensorFlow’s strengths.

20 TensorFlow interview questions to ask junior developers

To identify whether candidates have the necessary foundational skills in TensorFlow, consider asking these 20 interview questions tailored for junior developers. This list will help you assess their understanding and practical knowledge, ensuring they fit well within your team. For additional insights into candidate qualifications, check out our machine learning engineer job description.

- What is the difference between eager execution and graph execution in TensorFlow?

- Can you explain how to save and load a model in TensorFlow?

- What are TensorFlow's different APIs, and when would you use each one?

- How do you implement callbacks in TensorFlow, and why are they useful?

- What is transfer learning, and how can it be applied using TensorFlow?

- Can you describe the role of the TensorFlow Dataset API?

- How do you perform hyperparameter tuning in a TensorFlow model?

- What are some strategies to improve the performance of a TensorFlow model?

- How can you visualize training progress in TensorFlow?

- What is the purpose of TensorBoard, and how do you use it?

- Can you explain the concept of custom training loops in TensorFlow?

- How do you handle version control for TensorFlow models?

- What are some common TensorFlow operations you frequently use?

- Can you explain how to create a custom layer in TensorFlow?

- How do you implement data augmentation in TensorFlow?

- What is the role of the Keras API in TensorFlow?

- How do you deal with imbalanced datasets in TensorFlow?

- Can you discuss any experience you have with TensorFlow Lite?

- What are some common pitfalls to avoid when using TensorFlow?

- How do you optimize TensorFlow models for deployment?

10 intermediate TensorFlow interview questions and answers to ask mid-tier developers

Ready to level up your TensorFlow interviews? These 10 intermediate questions are perfect for assessing mid-tier developers. They'll help you gauge a candidate's practical knowledge and problem-solving skills without diving too deep into the technical weeds. Use these questions to spark insightful discussions and uncover the true potential of your TensorFlow candidates.

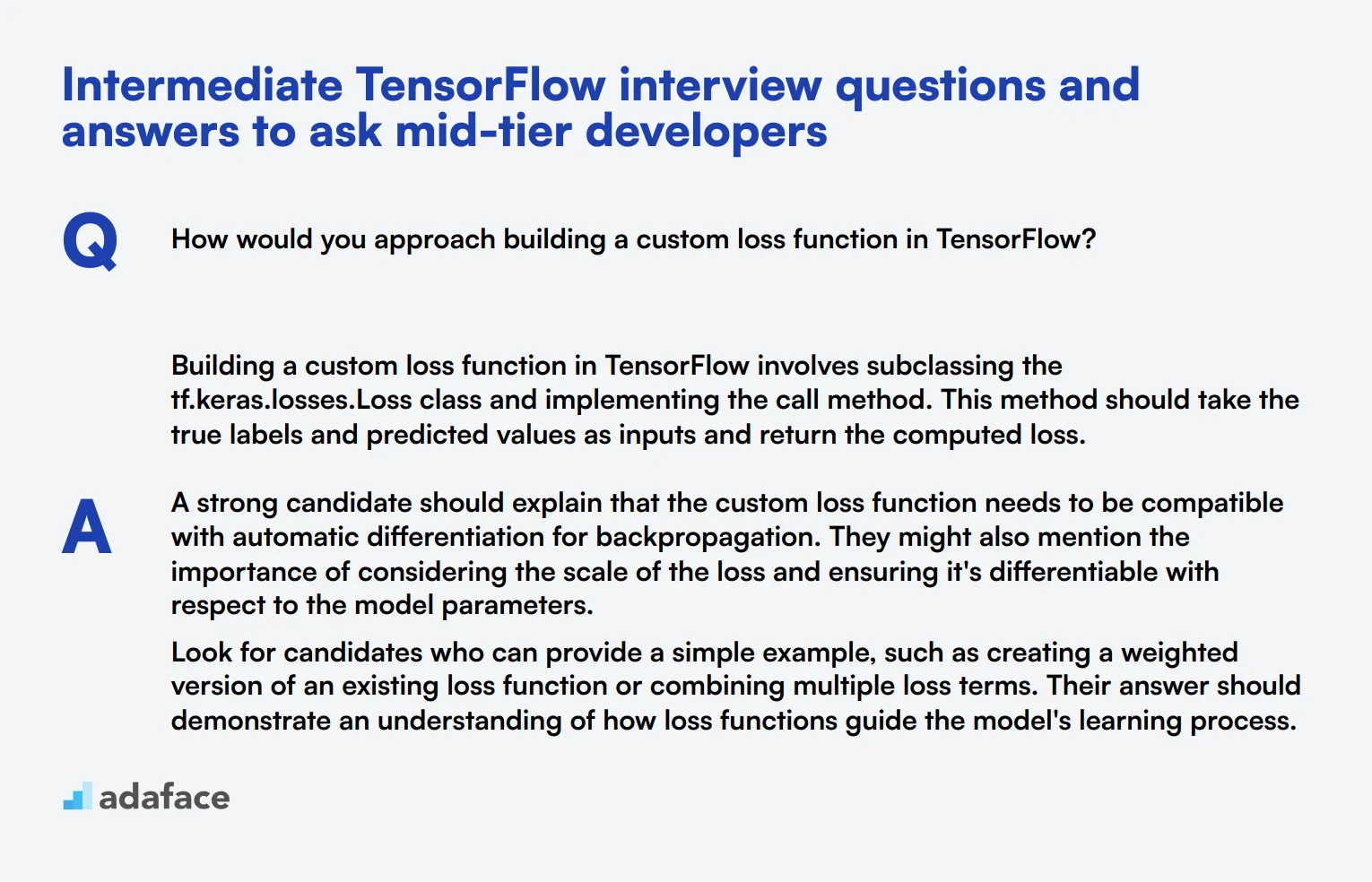

1. How would you approach building a custom loss function in TensorFlow?

Building a custom loss function in TensorFlow involves subclassing the tf.keras.losses.Loss class and implementing the call method. This method should take the true labels and predicted values as inputs and return the computed loss.

A strong candidate should explain that the custom loss function needs to be compatible with automatic differentiation for backpropagation. They might also mention the importance of considering the scale of the loss and ensuring it's differentiable with respect to the model parameters.

Look for candidates who can provide a simple example, such as creating a weighted version of an existing loss function or combining multiple loss terms. Their answer should demonstrate an understanding of how loss functions guide the model's learning process.
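A weighted loss of the kind described above might look like the sketch below. The WeightedMSE class and its positive_weight parameter are hypothetical names for illustration, not built-in TensorFlow losses.

```python
import tensorflow as tf

class WeightedMSE(tf.keras.losses.Loss):
    """Mean squared error that up-weights errors on positive targets (hypothetical)."""

    def __init__(self, positive_weight=2.0, name="weighted_mse", **kwargs):
        super().__init__(name=name, **kwargs)
        self.positive_weight = positive_weight

    def call(self, y_true, y_pred):
        y_true = tf.cast(y_true, y_pred.dtype)
        # Built only from differentiable TensorFlow ops, so gradients flow to y_pred.
        weights = tf.where(y_true > 0, self.positive_weight, 1.0)
        return tf.reduce_mean(weights * tf.square(y_true - y_pred), axis=-1)

    def get_config(self):
        # Lets the loss be re-created when a saved model is loaded.
        config = super().get_config()
        config.update({"positive_weight": self.positive_weight})
        return config

loss_fn = WeightedMSE(positive_weight=2.0)
value = loss_fn(tf.constant([[1.0, 0.0]]), tf.constant([[0.0, 0.0]]))
print(float(value))  # (2 * 1 + 1 * 0) / 2 = 1.0
```

It can then be passed to model.compile(loss=WeightedMSE()) like any built-in loss.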

2. Can you explain the concept of feature columns in TensorFlow and when you might use them?

Feature columns in TensorFlow are a way to bridge the gap between raw data and the format expected by machine learning models. They act as an abstraction that allows you to transform and preprocess data before feeding it into your model.

Candidates should mention that feature columns are particularly useful when working with structured data, such as data from databases or CSV files. They help in handling various data types (numeric, categorical, etc.) and performing common preprocessing tasks like normalization, one-hot encoding, or bucketizing.

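Look for answers that highlight the flexibility of feature columns in creating complex feature interactions or embedding layers for categorical variables. A strong candidate might also discuss how feature columns can be used with the tf.data API for efficient data pipelines, and should know that tf.feature_column is deprecated in recent TensorFlow releases in favor of Keras preprocessing layers such as Normalization, StringLookup, and Discretization.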
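Because tf.feature_column is deprecated, a sketch of the same bucketizing and one-hot encoding ideas with Keras preprocessing layers may be more useful in practice. The bin boundaries and the color vocabulary below are made-up values for illustration.

```python
import numpy as np
import tensorflow as tf

# Bucketize a numeric feature into ranges (-inf, 0), [0, 1), [1, 2), [2, inf).
bucketizer = tf.keras.layers.Discretization(bin_boundaries=[0.0, 1.0, 2.0])
buckets = bucketizer(np.array([[-1.5, 1.0, 3.4, 0.5]])).numpy()
print(buckets)  # [[0 2 3 1]]

# One-hot encode a categorical string feature.
# Index 0 is reserved for out-of-vocabulary values such as "purple".
color_lookup = tf.keras.layers.StringLookup(
    vocabulary=["red", "green", "blue"], output_mode="one_hot")
one_hot = color_lookup(tf.constant(["green", "purple"])).numpy()
print(one_hot)
```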

3. How would you implement early stopping in a TensorFlow model?

Implementing early stopping in TensorFlow involves using the EarlyStopping callback during model training. This callback monitors a specified metric (usually validation loss) and stops training when the metric stops improving.

A good answer should include the following steps:

- Import the EarlyStopping callback from tf.keras.callbacks

- Create an instance of EarlyStopping, specifying parameters like 'monitor' (the metric to watch), 'patience' (number of epochs with no improvement before stopping), and 'restore_best_weights' (to keep the best model weights)

- Include the EarlyStopping instance in the callbacks list when calling model.fit()
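The steps above can be sketched as follows. The synthetic data, the 20% validation split, and patience=3 are assumptions for illustration; appropriate values depend on the dataset.

```python
import numpy as np
import tensorflow as tf

rng = np.random.default_rng(0)
x = rng.normal(size=(512, 8)).astype("float32")
y = (3.0 * x[:, :1] + rng.normal(scale=0.1, size=(512, 1))).astype("float32")

model = tf.keras.Sequential([
    tf.keras.Input(shape=(8,)),
    tf.keras.layers.Dense(32, activation="relu"),
    tf.keras.layers.Dense(1),
])
model.compile(optimizer="adam", loss="mse")

early_stop = tf.keras.callbacks.EarlyStopping(
    monitor="val_loss",         # metric to watch
    patience=3,                 # epochs with no improvement before stopping
    restore_best_weights=True,  # roll back to the best epoch's weights
)
history = model.fit(x, y, validation_split=0.2, epochs=100,
                    callbacks=[early_stop], verbose=0)
print("epochs run:", len(history.history["loss"]))
```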

Look for candidates who understand the purpose of early stopping in preventing overfitting and reducing training time. They should also be able to discuss how to choose appropriate values for patience and the monitored metric based on the specific problem and dataset.

4. What are the key differences between tf.Variable and tf.constant in TensorFlow?

tf.Variable and tf.constant are both used to represent tensors in TensorFlow, but they have crucial differences:

- Mutability: tf.Variable is mutable and can be updated during model training, while tf.constant is immutable.

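- Storage: In graph mode, a variable's value lives in a resource that persists across multiple executions of the graph, whereas a constant's value is embedded in the graph definition itself.

- Initialization: In TensorFlow 1.x, variables required explicit initialization (for example with tf.compat.v1.global_variables_initializer()), while constants were initialized when defined. In TensorFlow 2.x, a variable is initialized as soon as it is created.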

- Use cases: Variables are typically used for model parameters that need to be updated during training, while constants are used for fixed values or hyperparameters.
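A small sketch contrasting the two: a variable can be updated in place and is watched automatically by tf.GradientTape, while a constant cannot be modified and is not watched unless requested. The values are arbitrary.

```python
import tensorflow as tf

v = tf.Variable([1.0, 2.0], name="weights")  # mutable, trainable by default
c = tf.constant([1.0, 2.0])                  # immutable

v.assign_add([0.5, 0.5])  # in-place update, as an optimizer would do
print(v.numpy())          # [1.5 2.5]

try:
    c[0] = 5.0            # tensors do not support item assignment
except TypeError as err:
    print("constant is immutable:", type(err).__name__)

# GradientTape watches trainable variables automatically, but not constants.
with tf.GradientTape() as tape:
    y = tf.reduce_sum(v * c)
grad_v, grad_c = tape.gradient(y, [v, c])
print(grad_v.numpy(), grad_c)  # [1. 2.] None
```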

A strong candidate should be able to explain these differences and provide examples of when to use each. They might also mention that large fixed arrays are better held in variables than embedded as constants, because constants are serialized into the graph definition and can bloat it (a GraphDef protocol buffer is limited to 2 GB).

5. How do you handle multi-GPU training in TensorFlow?

Handling multi-GPU training in TensorFlow involves using the tf.distribute.Strategy API. This API provides a high-level interface for distributing training across multiple GPUs or even multiple machines.

A good answer should outline the following steps:

- Choose an appropriate distribution strategy (e.g., MirroredStrategy for single-machine multi-GPU training)

- Create the strategy object

- Use the strategy's scope to create and compile the model

- Scale the batch size according to the number of GPUs

- Use the strategy's run method or fit the model as usual
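The outline above can be sketched with MirroredStrategy and Model.fit. The per-replica batch size of 32 and the toy model are assumptions; on a machine without GPUs the strategy runs with a single CPU replica, so the sketch still executes.

```python
import numpy as np
import tensorflow as tf

strategy = tf.distribute.MirroredStrategy()  # uses all visible GPUs
print("replicas:", strategy.num_replicas_in_sync)

# Scale the global batch so each replica still sees 32 examples per step.
per_replica_batch = 32
global_batch = per_replica_batch * strategy.num_replicas_in_sync

with strategy.scope():
    # Variables created inside the scope are mirrored on every replica.
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(8,)),
        tf.keras.layers.Dense(16, activation="relu"),
        tf.keras.layers.Dense(1),
    ])
    model.compile(optimizer="adam", loss="mse")

rng = np.random.default_rng(0)
x = rng.normal(size=(256, 8)).astype("float32")
y = x.sum(axis=1, keepdims=True).astype("float32")
dataset = tf.data.Dataset.from_tensor_slices((x, y)).batch(global_batch)

# fit() splits each global batch across the replicas.
history = model.fit(dataset, epochs=2, verbose=0)
```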

Look for candidates who understand the benefits of multi-GPU training, such as reduced training time and the ability to handle larger models or datasets. They should also be aware of potential challenges, like increased memory usage and the need for careful batch size adjustment.

6. Explain the concept of gradient accumulation and when it might be useful in TensorFlow.

Gradient accumulation is a technique where gradients are computed and accumulated over multiple small batches before performing a weight update. This allows for effectively increasing the batch size without increasing memory usage.

Candidates should explain that gradient accumulation is particularly useful when:

- Working with large models that don't fit in GPU memory with the desired batch size

- Training on machines with limited memory

- Trying to simulate larger batch sizes for better convergence

A strong answer would include how to implement gradient accumulation in TensorFlow, typically using custom training loops and tf.GradientTape. Look for candidates who understand the trade-offs: each weight update requires several forward and backward passes, so there are fewer updates per epoch, and layers such as batch normalization still compute statistics over the small micro-batches rather than over the effective batch.

7. How would you approach debugging a TensorFlow model that's not converging?

Debugging a non-converging TensorFlow model requires a systematic approach. A strong candidate should outline a process that includes:

- Checking the learning rate: Ensure it's not too high or too low

- Inspecting the loss function: Verify it's appropriate for the problem and implemented correctly

- Examining the data: Look for issues like incorrect normalization or data leakage

- Monitoring gradients: Use tf.summary to track gradient magnitudes and check for vanishing or exploding gradients

- Simplifying the model: Start with a simpler architecture and gradually increase complexity

- Visualizing intermediate activations: Use TensorBoard to inspect layer outputs

- Verifying the optimizer: Ensure it's suitable for the problem and configured correctly

Look for candidates who emphasize the importance of systematic experimentation and proper logging. They should also mention tools like TensorBoard for visualization and tf.debugging for runtime checks. A comprehensive answer might include discussing common pitfalls like incorrect data preprocessing or unsuitable hyperparameters.

8. Can you explain the concept of quantization in TensorFlow and its benefits?

Quantization in TensorFlow is the process of reducing the precision of the numbers used to represent a model's parameters (and often its activations). This typically involves converting 32-bit floating-point numbers to 8-bit integers, which cuts the storage needed for those values by a factor of four.
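To show what converting float32 values to int8 means numerically, here is a framework-independent sketch of symmetric per-tensor quantization. It is a simplified illustration of the idea, not the exact scheme TensorFlow Lite uses.

```python
import numpy as np

def quantize_int8(w):
    """Symmetric per-tensor quantization: w is approximated by scale * q, q in [-127, 127]."""
    scale = np.max(np.abs(w)) / 127.0
    q = np.clip(np.round(w / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q, scale):
    return q.astype(np.float32) * scale

rng = np.random.default_rng(0)
weights = rng.normal(size=(256, 256)).astype(np.float32)
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

print(weights.nbytes, q.nbytes)            # 262144 65536
print(np.max(np.abs(weights - restored)))  # at most about scale / 2
```

The rounding error per value is bounded by half a quantization step, which is why accuracy usually drops only slightly.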

The main benefits of quantization include:

- Reduced model size: Smaller models are easier to deploy, especially on mobile or edge devices

- Faster inference: Integer operations are generally faster than floating-point operations

- Lower power consumption: Particularly important for mobile and IoT devices

- Potential for specialized hardware acceleration: Some hardware is optimized for low-precision computations

A strong candidate should be able to discuss different quantization techniques available in TensorFlow, such as post-training quantization and quantization-aware training. They should also be aware of the potential trade-offs, like a possible slight decrease in model accuracy, and how to mitigate these issues.

9. How would you implement a custom training loop in TensorFlow?

Implementing a custom training loop in TensorFlow gives you fine-grained control over the training process. A good answer should outline the following steps:

- Define the model architecture

- Choose an optimizer and loss function

- Prepare the training data using tf.data.Dataset

- Define the training step function, typically using @tf.function for better performance

- Inside the training step, use tf.GradientTape to record operations for automatic differentiation

- Compute the loss and gradients

- Apply the gradients to the model's trainable variables using the optimizer

- Implement the main training loop, iterating over epochs and batches
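The steps above map onto a short loop like the following sketch. The synthetic data, model size, learning rate, and epoch count are assumptions chosen so that it runs quickly.

```python
import numpy as np
import tensorflow as tf

tf.random.set_seed(0)
rng = np.random.default_rng(0)

# Prepare the training data with tf.data.Dataset.
x = rng.normal(size=(256, 4)).astype("float32")
y = x.sum(axis=1, keepdims=True).astype("float32")
dataset = tf.data.Dataset.from_tensor_slices((x, y)).shuffle(256, seed=0).batch(32)

# Model architecture, optimizer, and loss function.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(16, activation="relu"),
    tf.keras.layers.Dense(1),
])
optimizer = tf.keras.optimizers.Adam(learning_rate=1e-2)
loss_fn = tf.keras.losses.MeanSquaredError()

@tf.function  # compile the step into a graph for better performance
def train_step(xb, yb):
    with tf.GradientTape() as tape:  # record ops for automatic differentiation
        loss = loss_fn(yb, model(xb, training=True))
    grads = tape.gradient(loss, model.trainable_variables)
    optimizer.apply_gradients(zip(grads, model.trainable_variables))
    return loss

initial_loss = float(loss_fn(y, model(x)))
for epoch in range(20):          # main loop over epochs...
    for xb, yb in dataset:       # ...and batches
        batch_loss = train_step(xb, yb)
final_loss = float(loss_fn(y, model(x)))
print(f"loss: {initial_loss:.3f} -> {final_loss:.3f}")
```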

Look for candidates who understand the benefits of custom training loops, such as implementing complex training schemes or fine-tuning the optimization process. They should also be aware of performance considerations, like using tf.function for graph execution mode.

10. Explain the concept of model pruning in TensorFlow and when you might use it.

Model pruning in TensorFlow is a technique used to reduce the size and complexity of neural networks by removing unnecessary weights or connections. This process typically involves setting small or insignificant weights to zero, effectively removing them from the model.
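The core idea of magnitude-based pruning can be sketched by hand: zero out the smallest-magnitude weights of a layer. This manual version is only an illustration; in practice the TensorFlow Model Optimization Toolkit's tfmot.sparsity.keras.prune_low_magnitude wraps layers and keeps the mask applied during fine-tuning. The 50% sparsity target and layer sizes are assumptions.

```python
import numpy as np
import tensorflow as tf

def magnitude_prune(weights, sparsity):
    """Zero out the `sparsity` fraction of entries with the smallest magnitude."""
    threshold = np.quantile(np.abs(weights), sparsity)
    mask = np.abs(weights) > threshold
    return weights * mask, mask

layer = tf.keras.layers.Dense(64)
layer.build((None, 32))
kernel, bias = layer.get_weights()

pruned_kernel, mask = magnitude_prune(kernel, sparsity=0.5)
layer.set_weights([pruned_kernel, bias])

# Note: the mask would need to be re-applied after every fine-tuning step,
# otherwise gradient updates make the pruned weights non-zero again.
print("fraction of zero weights:", 1.0 - mask.mean())
```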

Candidates should mention that pruning is useful when:

- Deploying models to resource-constrained environments, like mobile devices

- Reducing inference time and power consumption

- Decreasing the risk of overfitting by reducing model complexity

A strong answer would include an explanation of different pruning techniques, such as magnitude-based pruning or structured pruning. Look for candidates who understand that pruning often requires fine-tuning the model after removing weights to maintain performance. They might also mention the TensorFlow Model Optimization Toolkit, which provides tools for pruning.

15 advanced TensorFlow interview questions to ask senior developers

To effectively gauge the suitability of senior developers for your team, it's important to ask advanced TensorFlow interview questions. This list offers targeted questions that help you assess their deep understanding and practical experience with TensorFlow, ensuring you pick the right talent for your projects.

- How do you implement distributed training in TensorFlow?

- Can you explain the concept of TensorFlow Serving and how it is used in model deployment?

- Discuss a time when you had to debug an issue in a TensorFlow model and how you resolved it.

- How do you handle custom metrics during model evaluation in TensorFlow?

- Can you describe how to use the TensorFlow Profiler for optimizing model performance?

- Explain how to implement a custom optimizer in TensorFlow.

- How do you manage memory and resource allocation when training large TensorFlow models?

- Can you discuss the use of mixed precision training in TensorFlow and its benefits?

- How would you approach implementing federated learning with TensorFlow?

- What strategies do you use for managing data pipelines in TensorFlow?

- How do you handle categorical data in TensorFlow?

- Explain the process of integrating TensorFlow with other machine learning frameworks or libraries.

- How would you implement a sequence-to-sequence model in TensorFlow?

- Discuss the use of reinforcement learning with TensorFlow.

- Can you explain how to create and use TensorFlow Hub modules?

9 TensorFlow interview questions and answers related to neural networks

Got an upcoming interview for a machine learning role? Use these TensorFlow neural network questions to dig deep into a candidate’s understanding and expertise. Perfect for assessing how well they grasp the intricacies of neural networks within the TensorFlow framework.

1. How would you explain a neural network to someone with no technical background?

A neural network is like a web of interconnected nodes, loosely inspired by the neurons in the human brain. Each node processes a small piece of information and passes its result on, and together the nodes can solve complex problems.

An ideal candidate should simplify the concept without jargon, indicating their ability to communicate complex ideas clearly. Look for analogies and simple explanations that make it accessible to non-technical audiences.

2. What are the main components of a neural network?

The main components of a neural network are neurons (or nodes), layers, weights, biases, and activation functions. Neurons are organized into layers: input, hidden, and output layers. Each neuron multiplies its inputs by weights, adds a bias, and passes the result through an activation function, which determines the neuron's output.
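A worked example of a single layer may help: with hand-picked (hypothetical) weights and biases, a Keras Dense layer computes exactly ReLU(xW + b).

```python
import numpy as np
import tensorflow as tf

x = np.array([[1.0, 2.0, 3.0]], dtype="float32")    # one example, 3 input features
W = np.array([[0.1, -0.2],
              [0.0, 0.5],
              [0.3, 0.1]], dtype="float32")          # weights: 3 inputs -> 2 neurons
b = np.array([0.05, -0.1], dtype="float32")         # one bias per neuron

# xW = [1.0, 1.1]; adding b gives [1.05, 1.0]; ReLU leaves both unchanged.
manual = np.maximum(x @ W + b, 0.0)

layer = tf.keras.layers.Dense(2, activation="relu")
layer.build((None, 3))
layer.set_weights([W, b])
keras_out = layer(x).numpy()
print(manual, keras_out)
```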

Candidates should be able to explain each component clearly, showing their understanding of how these elements work together to form a neural network. Follow up by asking for examples to assess their depth of knowledge.

3. Can you describe the process of training a neural network?

Training a neural network involves feeding it data, making predictions, comparing those predictions to the actual outcomes, and adjusting the weights and biases to minimize errors. This process is repeated over multiple iterations until the network's performance stabilizes.

Look for candidates who mention key steps like forward propagation, loss function calculation, backpropagation, and optimization. They should also highlight the importance of data quality and the number of training iterations.

4. What is the role of an activation function in a neural network?

An activation function determines the output of a neuron based on its input. It introduces non-linearity into the network, enabling it to learn and model complex data patterns. Common activation functions include ReLU, Sigmoid, and Tanh.
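The sketch below evaluates the three activations mentioned on a few inputs and shows why non-linearity matters: without it, two stacked linear layers reduce to a single matrix multiplication. The random matrices are arbitrary.

```python
import numpy as np
import tensorflow as tf

x = tf.constant([-2.0, 0.0, 2.0])
print(tf.nn.relu(x).numpy())   # [0. 0. 2.]
print(tf.sigmoid(x).numpy())   # approximately [0.119 0.5 0.881]
print(tf.tanh(x).numpy())      # approximately [-0.964 0. 0.964]

# Without an activation, two linear layers collapse into one: (xW1)W2 == x(W1W2).
rng = np.random.default_rng(0)
inputs = rng.normal(size=(5, 3))
w1 = rng.normal(size=(3, 4))
w2 = rng.normal(size=(4, 2))
two_layers = (inputs @ w1) @ w2
one_layer = inputs @ (w1 @ w2)
print(np.allclose(two_layers, one_layer))  # True

# Inserting a ReLU between the layers breaks this equivalence in general.
with_relu = np.maximum(inputs @ w1, 0.0) @ w2
```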

Candidates should be able to explain why non-linearity is crucial for neural networks and discuss different types of activation functions and their use cases. An ideal response would also touch on potential pitfalls like vanishing gradients.

5. How do you prevent overfitting in a neural network?

Overfitting occurs when a model performs well on training data but poorly on unseen data, indicating it has learned noise rather than signal. Common preventive techniques include regularization (L1, L2), dropout, and data augmentation, while cross-validation helps detect overfitting and choose an appropriate model complexity.

Strong candidates should discuss various strategies and why they are effective. They might also mention monitoring validation loss and adjusting model complexity. Look for practical examples from their past experiences.

6. Why is data normalization important before training a neural network?

Data normalization scales input features to a common range, improving training stability and convergence speed. It helps in avoiding issues where some features dominate others due to their scale, leading to better model performance.

Candidates should explain the impact of unnormalized data on training and how normalization techniques like min-max scaling or z-score standardization can be applied. Look for their understanding of how normalization affects optimization algorithms.
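Both techniques can be written in a few lines. The sketch below uses a made-up two-feature array on very different scales, and checks that the Keras Normalization layer, after adapt(), produces the same z-scores as the manual formula.

```python
import numpy as np
import tensorflow as tf

# Two features on very different scales.
data = np.array([[1.0, 100.0], [2.0, 200.0], [3.0, 300.0], [4.0, 400.0]],
                dtype="float32")

# Min-max scaling to [0, 1] per feature.
min_max = (data - data.min(axis=0)) / (data.max(axis=0) - data.min(axis=0))

# Z-score standardization: zero mean and unit variance per feature.
z_score = (data - data.mean(axis=0)) / data.std(axis=0)

# The Keras Normalization layer learns per-feature mean and variance via adapt().
norm = tf.keras.layers.Normalization(axis=-1)
norm.adapt(data)
keras_z = norm(data).numpy()
print(min_max)
print(keras_z)
```

After scaling, both features span the same range, so neither dominates the gradient updates simply because of its units.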

7. What is the difference between a shallow and a deep neural network?

A shallow neural network has one or very few hidden layers, while a deep neural network has multiple hidden layers. Deep networks can capture more complex patterns but also require more data and computational power to train effectively.

Candidates should articulate the trade-offs between shallow and deep networks, such as complexity, training time, and risk of overfitting. They should also discuss scenarios where one might be preferred over the other.

8. How does a convolutional neural network (CNN) differ from a regular neural network?

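A CNN is specifically designed for processing grid-like data, such as images. Its convolutional layers slide small learned filters across the input and reuse the same weights at every position, so the network learns a hierarchy of spatial features (edges, then textures, then object parts) with far fewer parameters than a fully connected network. This makes it highly effective for tasks like image recognition.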

Look for candidates who can explain the unique components of CNNs, such as convolutional layers, pooling layers, and fully connected layers. They should also discuss the advantages of CNNs over traditional neural networks for image-related tasks.
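Weight sharing is easy to quantify. The sketch assumes 28x28 grayscale inputs (as in MNIST) and compares the parameter count of one convolutional layer with a dense layer of the same width, then builds a small CNN with convolution, pooling, and fully connected layers.

```python
import tensorflow as tf

# A conv layer shares one 3x3 kernel per filter across every image position...
conv_model = tf.keras.Sequential([
    tf.keras.Input(shape=(28, 28, 1)),
    tf.keras.layers.Conv2D(16, kernel_size=3),  # 3*3*1*16 weights + 16 biases = 160
])
# ...while a dense layer on the flattened image needs a weight per pixel per unit.
dense_model = tf.keras.Sequential([
    tf.keras.Input(shape=(28, 28, 1)),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(16),                  # 784*16 weights + 16 biases = 12560
])
print(conv_model.count_params(), dense_model.count_params())

# A small CNN: convolution + pooling blocks followed by a fully connected classifier.
cnn = tf.keras.Sequential([
    tf.keras.Input(shape=(28, 28, 1)),
    tf.keras.layers.Conv2D(32, 3, activation="relu"),  # 26x26x32
    tf.keras.layers.MaxPooling2D(),                    # 13x13x32
    tf.keras.layers.Conv2D(64, 3, activation="relu"),  # 11x11x64
    tf.keras.layers.MaxPooling2D(),                    # 5x5x64
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(10, activation="softmax"),
])
probs = cnn(tf.zeros((1, 28, 28, 1)))
```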

9. What are the benefits of using TensorFlow for implementing neural networks?

TensorFlow provides a robust framework for building and training neural networks with extensive libraries and tools. It supports both high-level and low-level programming, offers scalability, and integrates well with other machine learning and big data tools.

Candidates should emphasize TensorFlow's flexibility, performance optimization features, and community support. A strong answer might also highlight use cases and personal experiences with TensorFlow, showcasing their practical familiarity with the framework.

8 TensorFlow interview questions and answers related to model optimization

When optimizing TensorFlow models, it's crucial to ask the right questions to gauge a candidate's expertise. These 8 TensorFlow interview questions focus on model optimization techniques, helping you identify candidates who can create efficient and high-performing machine learning models. Use these questions to assess a candidate's understanding of TensorFlow's optimization capabilities and their ability to apply them effectively.

1. Can you explain the concept of model pruning in TensorFlow and when you might use it?

Model pruning in TensorFlow is a technique used to reduce the size and complexity of neural networks by removing unnecessary weights or connections. It's based on the observation that many weights in a trained network are close to zero and don't significantly contribute to the model's output.

Pruning is typically used when:

- You need to deploy models on resource-constrained devices

- You want to reduce the model's memory footprint

- You aim to improve inference speed without significant loss in accuracy

- You're looking to reduce overfitting in large models

Look for candidates who can explain the trade-offs between model size and accuracy, and who understand the iterative nature of pruning (prune, retrain, repeat). They should also be aware of TensorFlow's built-in pruning APIs and how to use them effectively.

2. How would you approach implementing mixed precision training in TensorFlow?

Mixed precision training in TensorFlow involves using a combination of 16-bit and 32-bit floating-point types to speed up training and reduce memory usage. The key steps to implement mixed precision training are:

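- Import the mixed precision module (tf.keras.mixed_precision)

- Set the global policy to mixed precision ('mixed_float16' on GPUs, 'mixed_bfloat16' on TPUs)

- Wrap the optimizer in a LossScaleOptimizer to keep small float16 gradients from underflowing (Model.compile does this automatically under the 'mixed_float16' policy, but custom training loops must do it explicitly)

- Ensure the model's last layer uses float32 for stability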
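A minimal sketch of these steps with the Keras mixed precision API follows. The layer sizes are arbitrary; on a CPU-only machine the code still runs but TensorFlow warns that it will be slow, since the speedups require suitable GPUs.

```python
import tensorflow as tf

# Compute in float16 while keeping variables in float32.
tf.keras.mixed_precision.set_global_policy("mixed_float16")

inputs = tf.keras.Input(shape=(20,))
hidden = tf.keras.layers.Dense(64, activation="relu")
x = hidden(inputs)
x = tf.keras.layers.Dense(10)(x)
# Keep the final activation in float32 for numerically stable outputs.
outputs = tf.keras.layers.Activation("softmax", dtype="float32")(x)
model = tf.keras.Model(inputs, outputs)

# Under this policy, compile() wraps the optimizer for loss scaling automatically;
# a custom loop would use tf.keras.mixed_precision.LossScaleOptimizer explicitly.
model.compile(optimizer="adam", loss="sparse_categorical_crossentropy")

out = model(tf.zeros((2, 20)))
print(hidden.compute_dtype, hidden.variable_dtype, out.dtype)

# Restore the default policy so later code is unaffected.
tf.keras.mixed_precision.set_global_policy("float32")
```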

Candidates should mention that mixed precision is particularly beneficial for models with a large memory footprint or when training on GPUs with hardware support for fast float16 math, such as NVIDIA GPUs with Tensor Cores. They should also be aware of potential numerical stability issues and how to address them.

Look for answers that demonstrate an understanding of the performance benefits and potential pitfalls of mixed precision training. Strong candidates will also mention the need to benchmark and compare results with full precision training to ensure accuracy is maintained.

3. Explain the concept of gradient accumulation in TensorFlow and when it might be useful.

Gradient accumulation is a technique used to simulate larger batch sizes when training deep learning models. It involves accumulating gradients over several mini-batches before updating the model's weights. This approach is particularly useful when:

- Working with limited GPU memory that can't accommodate large batch sizes

- Training very large models where increasing the batch size improves convergence

- Training on inputs whose size varies widely (for example, very long sequences), where only small batches fit in memory

To implement gradient accumulation in TensorFlow, you typically:

- Compute gradients for each mini-batch without applying them

- Accumulate these gradients over a set number of steps

- Average the accumulated gradients

- Apply the averaged gradients to update the model's weights
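These four steps can be sketched with tf.GradientTape as below. ACCUM_STEPS, the micro-batch size of 8, and the toy model are assumptions. Dividing by ACCUM_STEPS matches a single large batch only when every micro-batch has the same size and the loss is a per-batch mean.

```python
import numpy as np
import tensorflow as tf

ACCUM_STEPS = 4  # micro-batches per weight update (hypothetical value)

rng = np.random.default_rng(0)
x = rng.normal(size=(64, 8)).astype("float32")
y = x.sum(axis=1, keepdims=True).astype("float32")
dataset = tf.data.Dataset.from_tensor_slices((x, y)).batch(8)  # 8 micro-batches

model = tf.keras.Sequential([tf.keras.Input(shape=(8,)), tf.keras.layers.Dense(1)])
optimizer = tf.keras.optimizers.SGD(learning_rate=0.05)
loss_fn = tf.keras.losses.MeanSquaredError()

accumulated = None
updates = 0
for step, (xb, yb) in enumerate(dataset):
    # Compute gradients for this micro-batch without applying them.
    with tf.GradientTape() as tape:
        loss = loss_fn(yb, model(xb, training=True))
    grads = tape.gradient(loss, model.trainable_variables)
    # Accumulate them into a running sum.
    if accumulated is None:
        accumulated = grads
    else:
        accumulated = [a + g for a, g in zip(accumulated, grads)]
    if (step + 1) % ACCUM_STEPS == 0:
        # Average the accumulated gradients and apply one update.
        averaged = [a / ACCUM_STEPS for a in accumulated]
        optimizer.apply_gradients(zip(averaged, model.trainable_variables))
        accumulated = None
        updates += 1

print("weight updates:", updates)  # 8 micro-batches / 4 = 2
```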

Look for candidates who can explain how gradient accumulation affects the training process and optimization algorithms. They should also be able to discuss the trade-offs between using gradient accumulation and simply reducing the batch size or using distributed training strategies.

4. How do you handle memory and resource allocation when training large TensorFlow models?

Handling memory and resource allocation for large TensorFlow models involves several strategies:

- Using tf.data for efficient data pipelines to reduce memory usage

- Implementing gradient checkpointing to trade computation for memory

- Utilizing mixed precision training to reduce memory footprint

- Employing model parallelism to distribute the model across multiple devices

- Implementing efficient batch sizes and potentially using gradient accumulation

- Choosing optimizers with smaller state, for example SGD with momentum or Adafactor instead of Adam, which stores two extra values per parameter

Candidates should also mention the importance of profiling tools like TensorFlow Profiler to identify memory bottlenecks and optimize resource usage. They might discuss strategies like pruning or quantization to reduce model size.

Look for answers that demonstrate a comprehensive understanding of both software and hardware considerations. Strong candidates will discuss the trade-offs between different approaches and how to choose the right strategy based on the specific model and hardware constraints.

5. Can you explain the process of model quantization in TensorFlow and its benefits?

Model quantization in TensorFlow is the process of converting a model's weights and activations from floating-point to lower-precision formats, such as 8-bit integers. The main steps in the quantization process are:

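- Optionally, training or fine-tuning the model with quantization awareness (quantization-aware training)

- Converting the model to a quantized format, typically with the TensorFlow Lite converter

- For full-integer post-training quantization, calibrating activation ranges using representative data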
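A minimal post-training quantization sketch with the TensorFlow Lite converter follows. TinyModel and representative_dataset are hypothetical stand-ins: a real project would convert a trained network and calibrate with samples drawn from real training data. A plain tf.Module keeps the example small. Quantization-aware training, by contrast, is usually done with the separate TensorFlow Model Optimization Toolkit before this conversion step.

```python
import numpy as np
import tensorflow as tf


class TinyModel(tf.Module):
    """Hypothetical stand-in for a trained float32 model (one dense layer)."""

    def __init__(self):
        super().__init__()
        self.w = tf.Variable(tf.random.normal([32, 10], seed=0))
        self.b = tf.Variable(tf.zeros([10]))

    @tf.function(input_signature=[tf.TensorSpec([1, 32], tf.float32)])
    def __call__(self, x):
        return tf.nn.relu(tf.matmul(x, self.w) + self.b)


def representative_dataset():
    # Calibration inputs used to estimate activation ranges. Real code should
    # yield ~100 samples drawn from actual training data, not random noise.
    for _ in range(100):
        yield [np.random.rand(1, 32).astype(np.float32)]


model = TinyModel()
converter = tf.lite.TFLiteConverter.from_concrete_functions(
    [model.__call__.get_concrete_function()], model
)
# Optimize.DEFAULT quantizes weights; the representative dataset lets the
# converter quantize activations too (full integer where ops support it).
converter.optimizations = [tf.lite.Optimize.DEFAULT]
converter.representative_dataset = representative_dataset
tflite_model = converter.convert()  # serialized FlatBuffer (bytes)
```

The resulting bytes can be written to a .tflite file and run with the TensorFlow Lite interpreter. Inputs and outputs stay float32 by default, so calling code does not change.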

The benefits of quantization include:

- Reduced model size, making it easier to deploy on edge devices

- Faster inference times, especially on hardware with dedicated int8 operations

- Lower power consumption, which is crucial for mobile and IoT devices

- Potential for improved cache utilization due to smaller memory footprint

Look for candidates who understand the different types of quantization (post-training, quantization-aware training) and their trade-offs. They should be able to discuss potential challenges like accuracy degradation and how to mitigate them. Strong candidates will also mention TensorFlow's built-in quantization APIs and tools for evaluating quantized models.

6. How would you implement federated learning with TensorFlow?

Federated learning in TensorFlow allows training models on decentralized data without sharing the raw data. The key steps to implement federated learning are:

- Define a model architecture suitable for federated learning

- Implement a federated averaging algorithm to aggregate model updates

- Set up a central server to coordinate the training process

- Develop client-side code to train on local data and send updates

- Implement secure aggregation protocols to protect privacy
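The aggregation idea at the core of these steps, federated averaging (FedAvg), can be simulated without any framework. The sketch below is plain NumPy, not the TensorFlow Federated API. The linear model, learning rate, and round counts are illustrative assumptions, and it omits secure aggregation. Each client trains locally from the current global weights, and the server averages the returned weights in proportion to each client's data size. Raw data never leaves local_update.

```python
import numpy as np


def local_update(weights, X, y, lr=0.1, epochs=5):
    """Client side: a few epochs of full-batch gradient descent on local data only."""
    w = weights.copy()
    for _ in range(epochs):
        grad = 2.0 * X.T @ (X @ w - y) / len(y)  # gradient of mean squared error
        w -= lr * grad
    return w


def federated_averaging(clients, dim, rounds=50):
    """Server side: broadcast weights, collect client models, average by data size."""
    global_w = np.zeros(dim)
    sizes = np.array([len(y) for _, y in clients], dtype=float)
    for _ in range(rounds):
        client_ws = [local_update(global_w, X, y) for X, y in clients]
        global_w = np.average(client_ws, axis=0, weights=sizes)
    return global_w
```

Here every client shares the same underlying relationship, so the averaged model converges to it. With non-IID client data, local models drift apart between rounds, which is one of the challenges candidates should be able to discuss.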

Candidates should mention TensorFlow Federated (TFF), a framework specifically designed for federated learning. They should be able to discuss the challenges of federated learning, such as dealing with non-IID data, communication efficiency, and privacy concerns.

Look for answers that demonstrate an understanding of the trade-offs between centralized and federated learning. Strong candidates will discuss how to evaluate federated models, handle stragglers (slow clients), and apply differential privacy techniques to strengthen privacy guarantees.

7. Explain how you would use TensorFlow's Dataset API to optimize data pipelines for large-scale training.

TensorFlow's Dataset API is crucial for creating efficient data pipelines, especially for large-scale training. Key optimization strategies include:

- Using tf.data.TFRecordDataset for efficient data loading (tf.data.Dataset.from_generator also works, but it runs Python code and is usually slower)

- Implementing prefetch to overlap data preprocessing and model execution

- Utilizing cache for small datasets that fit in memory

- Applying map with num_parallel_calls for parallel data preprocessing

- Using batch with appropriate batch sizes for efficient GPU utilization

- Implementing shuffle with a sufficiently large buffer for randomization
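Several of these strategies combined in one pipeline might look like the following sketch. It assumes an in-memory NumPy array of uint8 images, so from_tensor_slices stands in for TFRecordDataset. The names preprocess and make_pipeline are illustrative.

```python
import tensorflow as tf

AUTOTUNE = tf.data.AUTOTUNE


def preprocess(image, label):
    # Example per-element transform: scale uint8 pixels to [0, 1].
    return tf.cast(image, tf.float32) / 255.0, label


def make_pipeline(images, labels, batch_size=32, training=True):
    ds = tf.data.Dataset.from_tensor_slices((images, labels))
    # Parallel preprocessing; AUTOTUNE picks the degree of parallelism.
    ds = ds.map(preprocess, num_parallel_calls=AUTOTUNE)
    # Cache after the deterministic map so preprocessing runs only once
    # (only sensible when the processed data fits in memory).
    ds = ds.cache()
    if training:
        # Shuffle after caching so each epoch sees a different order.
        ds = ds.shuffle(buffer_size=10_000)
    ds = ds.batch(batch_size)
    # Overlap preparing the next batch with training on the current one.
    return ds.prefetch(AUTOTUNE)
```

Order matters here: caching before the map would store raw data and redo preprocessing every epoch, and shuffling before the cache would freeze a single order into it.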

Candidates should also mention the importance of using tf.data.AUTOTUNE (formerly tf.data.experimental.AUTOTUNE) for automatic tuning of parallel calls and buffer sizes. They might discuss strategies for handling variable-length sequences or implementing custom parsing functions.

Look for answers that demonstrate an understanding of how the data pipeline can become a bottleneck in training. Strong candidates will discuss how to profile and benchmark data pipelines, and how to balance CPU and GPU utilization for optimal performance.

8. How would you approach debugging a TensorFlow model that's not converging?

Debugging a non-converging TensorFlow model requires a systematic approach:

- Check the loss function and ensure it's appropriate for the task

- Verify the data pipeline for correctness and potential issues such as misaligned features and labels, unnormalized inputs, or NaN values

- Inspect learning rate and consider implementing learning rate schedules

- Analyze gradient flow using tf.GradientTape or TensorBoard's histogram feature

- Implement gradient clipping to prevent exploding gradients

- Use TensorBoard to visualize training metrics and model architecture

- Start with a simpler model and gradually increase complexity

- Check for class imbalance and consider adjusting sample weights
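Several of these checks (gradient inspection, clipping, and NaN detection) fit into a single eager training step. This is a sketch assuming a Keras model, a loss object, and an optimizer. The function name and the clip_norm default are hypothetical choices. Running it repeatedly on one small batch is also a quick sanity test: a healthy model should be able to drive that loss down.

```python
import tensorflow as tf


def train_step_with_diagnostics(model, loss_fn, optimizer, x, y, clip_norm=1.0):
    """One eager training step that also reports gradient statistics (hypothetical helper)."""
    with tf.GradientTape() as tape:
        preds = model(x, training=True)
        loss = loss_fn(y, preds)
    # Fail fast if the loss has become NaN or Inf.
    tf.debugging.check_numerics(loss, "loss is NaN or Inf")
    grads = tape.gradient(loss, model.trainable_variables)
    # Per-variable gradient norms: near-zero values suggest vanishing gradients,
    # very large ones suggest exploding gradients.
    per_var_norms = [float(tf.norm(g)) for g in grads if g is not None]
    # Clip by global norm; global_norm is the norm *before* clipping.
    clipped, global_norm = tf.clip_by_global_norm(grads, clip_norm)
    optimizer.apply_gradients(zip(clipped, model.trainable_variables))
    return float(loss), float(global_norm), per_var_norms
```

Logging per_var_norms over time (for example with tf.summary.scalar to TensorBoard) shows which layers stop learning.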

Candidates should also mention the importance of proper initialization, regularization techniques, and potentially using a pre-trained model as a starting point.

Look for answers that demonstrate a methodical approach to debugging. Strong candidates will discuss TensorFlow's debugging tools, such as the tf.debugging module, and mention strategies for isolating the problem, such as testing on a small subset of data or using synthetic data.

7 situational TensorFlow interview questions with answers for hiring top developers

Ready to dive into the world of TensorFlow interviews? These situational questions will help you assess candidates' practical knowledge and problem-solving skills. Use them to uncover how potential hires apply TensorFlow concepts in real-world scenarios, and get a better feel for their hands-on experience.

1. You're working on a TensorFlow model that's taking an unusually long time to train. How would you approach optimizing the training process?

When optimizing a TensorFlow model's training process, I would consider several approaches:

- Profile the model: Use TensorFlow Profiler to identify performance bottlenecks before changing anything.

- Analyze the input pipeline: Ensure data loading isn't a bottleneck by using tf.data for efficient data loading and preprocessing.

- Use mixed precision training: Implement float16 computations where possible to speed up training on compatible hardware.

- Leverage distributed training: Utilize multiple GPUs or TPUs if available.

- Optimize model architecture: Simplify the model if possible, or use more efficient layer types.

Look for candidates who demonstrate a systematic approach to optimization and familiarity with TensorFlow's performance-enhancing features. Strong answers will prioritize data pipeline efficiency and hardware utilization before considering model architecture changes.

2. How would you implement a custom loss function in TensorFlow for a recommendation system that needs to balance user preferences with item popularity?

To implement a custom loss function for this scenario, I would:

- Define a class that inherits from tf.keras.losses.Loss

- Override the call method to compute the loss

- Incorporate both user preference error and item popularity bias

- Use TensorFlow operations to ensure the function is differentiable

- Add weights to balance the importance of preferences vs. popularity
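A sketch of such a loss follows. The formulation is a hypothetical one chosen for illustration: y_true packs two columns, [rating, item_popularity], and the loss adds a popularity-weighted penalty on the predicted score to the squared preference error. This discourages the model from simply ranking popular items highly. The class name and popularity_weight are assumptions, not a standard Keras loss.

```python
import tensorflow as tf


class PopularityAwareLoss(tf.keras.losses.Loss):
    """Squared preference error plus a popularity penalty (hypothetical formulation).

    Expects y_true with shape (batch, 2): [rating, item_popularity in 0..1],
    and y_pred with shape (batch, 1): the predicted preference score.
    """

    def __init__(self, popularity_weight=0.1, name="popularity_aware_loss"):
        super().__init__(name=name)
        self.popularity_weight = popularity_weight

    def call(self, y_true, y_pred):
        rating = y_true[:, 0]
        popularity = y_true[:, 1]
        score = tf.squeeze(y_pred, axis=-1)
        preference_error = tf.square(rating - score)  # fit user preferences
        popularity_penalty = popularity * score       # discourage popularity bias
        # Per-example loss; the Loss base class averages it over the batch.
        return preference_error + self.popularity_weight * popularity_penalty

    def get_config(self):
        config = super().get_config()
        config.update({"popularity_weight": self.popularity_weight})
        return config
```

Every operation is a differentiable TensorFlow op, so the loss can be passed straight to model.compile(loss=PopularityAwareLoss(0.1)). popularity_weight is the knob that trades accuracy on observed ratings against exposure for less popular items.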

An ideal response should demonstrate understanding of TensorFlow's custom loss implementation and the ability to translate business requirements into mathematical formulations. Look for candidates who discuss the importance of keeping the function differentiable and mention potential challenges in balancing different aspects of the loss.

3. You're tasked with deploying a TensorFlow model to mobile devices. What considerations would you keep in mind, and how would you approach this?

When deploying a TensorFlow model to mobile devices, key considerations include:

- Model size: Optimize the model for mobile by pruning, quantization, and potentially using TensorFlow Lite.

- Inference speed: Ensure the model can run efficiently on mobile processors.

- Platform compatibility: Consider using TensorFlow Lite for Android and iOS deployments.

- Memory usage: Minimize RAM requirements during inference.

- Power consumption: Optimize to reduce battery drain during model execution.

Look for candidates who mention TensorFlow Lite and demonstrate awareness of mobile-specific challenges. Strong answers might also touch on the potential use of on-device training or transfer learning to personalize models on user devices.

4. Explain how you would implement a custom training loop in TensorFlow for a GAN (Generative Adversarial Network).

Implementing a custom training loop for a GAN in TensorFlow involves several steps:

- Define separate generator and discriminator models

- Create optimizers for both models

- Implement the training step using tf.GradientTape

- Alternate between training the discriminator and generator

- Use custom metrics to track progress

- Implement a training loop that calls the training step and logs results
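A compact sketch of these steps on toy 2-D data follows. The tiny dense networks, latent size, and learning rates are illustrative assumptions, and the training loop logs losses with a print statement rather than dedicated metric objects. The training step is wrapped in tf.function so it runs as a graph.

```python
import tensorflow as tf

LATENT_DIM = 8  # size of the generator's noise input (illustrative)
DATA_DIM = 2    # dimensionality of the "real" samples (illustrative)

generator = tf.keras.Sequential([
    tf.keras.Input(shape=(LATENT_DIM,)),
    tf.keras.layers.Dense(16, activation="relu"),
    tf.keras.layers.Dense(DATA_DIM),
])
discriminator = tf.keras.Sequential([
    tf.keras.Input(shape=(DATA_DIM,)),
    tf.keras.layers.Dense(16, activation="relu"),
    tf.keras.layers.Dense(1),  # raw logit: real vs. fake
])

bce = tf.keras.losses.BinaryCrossentropy(from_logits=True)
g_optimizer = tf.keras.optimizers.Adam(1e-3)
d_optimizer = tf.keras.optimizers.Adam(1e-3)


@tf.function  # compile the step into a graph for speed
def train_step(real):
    batch_size = tf.shape(real)[0]

    # 1) Discriminator update: label real samples 1, generated samples 0.
    noise = tf.random.normal((batch_size, LATENT_DIM))
    with tf.GradientTape() as d_tape:
        fake = generator(noise, training=True)
        real_logits = discriminator(real, training=True)
        fake_logits = discriminator(fake, training=True)
        d_loss = (bce(tf.ones_like(real_logits), real_logits)
                  + bce(tf.zeros_like(fake_logits), fake_logits))
    d_grads = d_tape.gradient(d_loss, discriminator.trainable_variables)
    d_optimizer.apply_gradients(zip(d_grads, discriminator.trainable_variables))

    # 2) Generator update: try to make the discriminator output 1 on fakes.
    noise = tf.random.normal((batch_size, LATENT_DIM))
    with tf.GradientTape() as g_tape:
        fake_logits = discriminator(generator(noise, training=True), training=True)
        g_loss = bce(tf.ones_like(fake_logits), fake_logits)
    g_grads = g_tape.gradient(g_loss, generator.trainable_variables)
    g_optimizer.apply_gradients(zip(g_grads, generator.trainable_variables))
    return d_loss, g_loss


def train(dataset, epochs=1):
    """Outer loop: one D step and one G step per batch; log losses per epoch."""
    for epoch in range(epochs):
        for real_batch in dataset:
            d_loss, g_loss = train_step(real_batch)
        print(f"epoch {epoch}: d_loss={float(d_loss):.4f} g_loss={float(g_loss):.4f}")
    return float(d_loss), float(g_loss)
```

Each tape only updates its own model's variables, which is what keeps the two players separate. Balancing them (for example, several discriminator steps per generator step) is a change to the loop, not to the tapes.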

An ideal response should demonstrate understanding of GANs and TensorFlow's low-level APIs. Look for candidates who mention the importance of balancing generator and discriminator training, and who can explain how to use tf.function for performance optimization.

5. How would you approach building a multi-task learning model in TensorFlow where some tasks have limited labeled data?

Building a multi-task learning model with limited labeled data for some tasks requires careful consideration:

- Use a shared base network with task-specific output layers

- Implement data augmentation for tasks with limited data

- Utilize transfer learning by pre-training on related tasks

- Employ regularization techniques like dropout or L1/L2 regularization

- Implement a custom loss function that balances the importance of each task

- Consider semi-supervised learning approaches for tasks with limited labels
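A minimal Keras sketch of the shared-trunk design follows. It assumes two tasks: task_a is a regression task with plentiful labels, and task_b is a 3-class task where missing labels are encoded as -1. That encoding, the layer sizes, and the loss weights are all illustrative assumptions. The masked loss lets every example train the shared layers through task_a while task_b learns only from its labeled examples.

```python
import tensorflow as tf

NUM_CLASSES_B = 3


def masked_sparse_ce(y_true, y_pred):
    """Sparse categorical cross-entropy that ignores examples labelled -1."""
    labels = tf.reshape(tf.cast(y_true, tf.int32), [-1])
    mask = tf.cast(labels >= 0, tf.float32)
    safe_labels = tf.maximum(labels, 0)  # any valid index; masked out below
    per_example = tf.keras.losses.sparse_categorical_crossentropy(safe_labels, y_pred)
    # Average only over labelled examples (avoid dividing by zero).
    return tf.reduce_sum(per_example * mask) / tf.maximum(tf.reduce_sum(mask), 1.0)


def build_multitask_model(input_dim=16):
    inputs = tf.keras.Input(shape=(input_dim,))
    # Shared trunk learns features useful to both tasks.
    shared = tf.keras.layers.Dense(32, activation="relu")(inputs)
    shared = tf.keras.layers.Dropout(0.2)(shared)
    # Task-specific heads.
    task_a = tf.keras.layers.Dense(1, name="task_a")(shared)
    task_b = tf.keras.layers.Dense(NUM_CLASSES_B, activation="softmax", name="task_b")(shared)
    model = tf.keras.Model(inputs, {"task_a": task_a, "task_b": task_b})
    model.compile(
        optimizer="adam",
        loss={"task_a": "mse", "task_b": masked_sparse_ce},
        # Relative task importance; tune per problem.
        loss_weights={"task_a": 1.0, "task_b": 0.5},
    )
    return model
```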

Look for candidates who demonstrate understanding of multi-task learning architectures and strategies for dealing with imbalanced data availability across tasks. Strong answers might also discuss the potential benefits of multi-task learning in improving model generalization.

6. You notice that your TensorFlow model's performance degrades significantly when deployed in production. How would you investigate and address this issue?

To investigate and address performance degradation in production, I would follow these steps:

- Compare training and production data distributions

- Check for data drift or concept drift

- Analyze model inputs and outputs in production

- Implement monitoring for model performance metrics

- Investigate potential issues with data preprocessing pipeline

- Consider retraining the model with production data

- Evaluate if the model architecture is suitable for production constraints
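The first two steps, comparing distributions and checking for drift, can be made concrete per feature. One common metric is the Population Stability Index (PSI). The NumPy sketch below uses PSI as an example technique, not something the question prescribes. The 0.1 and 0.25 thresholds in the docstring are widely used rules of thumb, not hard limits.

```python
import numpy as np


def population_stability_index(expected, actual, n_bins=10, eps=1e-6):
    """PSI between a reference sample (e.g. training data) and a production sample.

    Bins are the quantiles of `expected`. Rule of thumb: PSI < 0.1 is stable,
    0.1 to 0.25 is a moderate shift, > 0.25 is a significant shift.
    """
    expected = np.asarray(expected, dtype=float)
    actual = np.asarray(actual, dtype=float)
    # Interior bin edges taken from the reference distribution's quantiles.
    edges = np.quantile(expected, np.linspace(0.0, 1.0, n_bins + 1)[1:-1])
    exp_counts = np.bincount(np.searchsorted(edges, expected, side="right"), minlength=n_bins)
    act_counts = np.bincount(np.searchsorted(edges, actual, side="right"), minlength=n_bins)
    # Convert to proportions; clip to avoid log(0) for empty bins.
    exp_frac = np.clip(exp_counts / len(expected), eps, None)
    act_frac = np.clip(act_counts / len(actual), eps, None)
    return float(np.sum((act_frac - exp_frac) * np.log(act_frac / exp_frac)))
```

Running this on each input feature, and on the model's output scores, over a rolling production window gives a simple monitoring signal that can trigger the deeper investigation or retraining steps listed above.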

Look for candidates who demonstrate a systematic approach to debugging and mention the importance of monitoring in production environments. Strong answers might also discuss strategies for continuous learning or model updating to adapt to changing data patterns.

7. How would you implement a custom layer in TensorFlow that applies different operations based on a learned attention mechanism?

To implement a custom layer with a learned attention mechanism in TensorFlow:

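- Subclass tf.keras.layers.Layer

- Implement the build method to create the learnable attention weights (and any weights the individual operations need)

- Implement the call method to compute attention scores, normalize them (for example with a softmax), and use them to weight the outputs of the different operations

- Use differentiable TensorFlow operations throughout so gradients reach the attention weights

- Implement get_config for serialization, and override from_config only if the constructor arguments need custom handling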
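One possible design is sketched below. The layer computes three candidate operations (ReLU, tanh, and linear projections of the input), then mixes them per example using softmax attention weights computed from the input itself. The class name, the choice of three branches, and the initializers are assumptions made for illustration.

```python
import tensorflow as tf

NUM_BRANCHES = 3  # relu, tanh, and linear projections (illustrative choice)


class AttentiveBranchMix(tf.keras.layers.Layer):
    """Mixes several candidate operations with learned, input-dependent attention."""

    def __init__(self, units, **kwargs):
        super().__init__(**kwargs)
        self.units = units

    def build(self, input_shape):
        dim = int(input_shape[-1])
        # One projection kernel per candidate operation.
        self.branch_kernels = self.add_weight(
            name="branch_kernels", shape=(NUM_BRANCHES, dim, self.units),
            initializer="glorot_uniform", trainable=True)
        # Projects the input to one attention score per branch.
        self.attn_kernel = self.add_weight(
            name="attn_kernel", shape=(dim, NUM_BRANCHES),
            initializer="glorot_uniform", trainable=True)
        super().build(input_shape)

    def call(self, inputs):
        # (batch, branches, units): every branch's projection of the input.
        proj = tf.einsum("bd,kdu->bku", inputs, self.branch_kernels)
        branches = tf.stack(
            [tf.nn.relu(proj[:, 0]), tf.tanh(proj[:, 1]), proj[:, 2]], axis=1)
        # (batch, branches): softmax attention over the candidate operations.
        weights = tf.nn.softmax(tf.matmul(inputs, self.attn_kernel), axis=-1)
        return tf.reduce_sum(branches * weights[:, :, None], axis=1)

    def get_config(self):
        config = super().get_config()
        config.update({"units": self.units})
        return config
```

Because get_config returns every constructor argument, the inherited from_config can rebuild the layer, so it does not need to be overridden here.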

An ideal response should demonstrate understanding of TensorFlow's custom layer API and attention mechanisms. Look for candidates who discuss how to make the layer trainable and compatible with the rest of the TensorFlow ecosystem.

Which TensorFlow skills should you evaluate during the interview phase?

While it's impossible to assess every aspect of a candidate's TensorFlow proficiency in a single interview, focusing on key skills can provide valuable insights. The following core competencies are particularly important when evaluating TensorFlow expertise.

Python

Python is the primary language for TensorFlow development. A strong foundation in Python is essential for effectively using TensorFlow's APIs and building complex models.

To evaluate Python skills, consider using a Python online test with relevant MCQs. This can help filter candidates based on their Python proficiency.

During the interview, ask targeted questions to assess Python knowledge in the context of TensorFlow. Here's an example:

Can you explain how you would use Python list comprehensions to preprocess a dataset for a TensorFlow model?

Look for answers that demonstrate understanding of both Python list comprehensions and data preprocessing concepts. A good response should include examples of how to efficiently transform or filter data using list comprehensions.
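As a reference point for evaluating answers, a short example of the kind of preprocessing a candidate might describe is shown below. The record format and cleaning rules are hypothetical. List comprehensions suit small, in-memory datasets like this one; for large datasets, transformations expressed with tf.data (Dataset.map and Dataset.filter) scale better.

```python
import tensorflow as tf

# Hypothetical raw records: free text plus a binary label.
raw_records = [
    {"text": "  Great product ", "label": 1},
    {"text": "", "label": 0},  # empty text: filtered out below
    {"text": "Terrible SUPPORT", "label": 0},
]

# Filter out empty examples, then normalize the text.
clean = [r for r in raw_records if r["text"].strip()]
texts = [r["text"].strip().lower() for r in clean]
labels = [r["label"] for r in clean]

# Hand the cleaned Python lists to TensorFlow.
dataset = tf.data.Dataset.from_tensor_slices((texts, labels)).batch(2)
```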

Machine Learning Fundamentals

A solid grasp of machine learning concepts is crucial for effective use of TensorFlow. This includes understanding various algorithms, model architectures, and evaluation metrics.

To assess machine learning knowledge, you can use a machine learning online test that covers fundamental concepts and their applications.

During the interview, ask questions that reveal the candidate's understanding of machine learning principles in relation to TensorFlow. For example:

How would you approach building a classification model using TensorFlow, and what considerations would you keep in mind for model evaluation?

Look for answers that discuss model architecture selection, data preprocessing, training process, and evaluation metrics like accuracy, precision, and recall. A good response should also mention techniques like cross-validation and handling class imbalance.
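A strong answer often reduces to something like the following sketch: a small binary classifier that tracks precision and recall alongside accuracy, plus inverse-frequency class weights for imbalanced labels. The architecture and the helper names build_classifier and balanced_class_weights are illustrative assumptions.

```python
import numpy as np
import tensorflow as tf


def build_classifier(input_dim):
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(input_dim,)),
        tf.keras.layers.Dense(32, activation="relu"),
        tf.keras.layers.Dense(1, activation="sigmoid"),
    ])
    model.compile(
        optimizer="adam",
        loss="binary_crossentropy",
        # Accuracy alone is misleading on imbalanced data; track precision/recall too.
        metrics=["accuracy",
                 tf.keras.metrics.Precision(name="precision"),
                 tf.keras.metrics.Recall(name="recall")],
    )
    return model


def balanced_class_weights(labels):
    """Weight each class inversely to its frequency: n / (k * count_c)."""
    classes, counts = np.unique(labels, return_counts=True)
    return {int(c): len(labels) / (len(classes) * n) for c, n in zip(classes, counts)}
```

Passing the result as model.fit(..., class_weight=balanced_class_weights(y_train)) makes errors on the rare class cost more. With 90 negatives and 10 positives, each positive example is weighted 5.0 and each negative about 0.56.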

TensorFlow API and Ecosystem

Familiarity with TensorFlow's API and ecosystem is key for efficient development. This includes knowledge of core modules, high-level APIs like Keras, and tools for model deployment and optimization.

To assess TensorFlow-specific knowledge, consider asking questions about its features and best practices. Here's an example:

Can you explain the difference between eager execution and graph execution in TensorFlow, and when you would use each?

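Look for answers that demonstrate understanding of TensorFlow's execution models. A good response should explain that eager execution, the default in TensorFlow 2, runs operations immediately and is easier to debug. Graph execution, usually enabled with tf.function, traces Python code into a graph that TensorFlow can optimize, run with less Python overhead, and export for deployment, which makes it the better fit for performance-critical training and serving.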
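The difference can be demonstrated in a few lines. The function dense_relu below is a hypothetical example. Called directly, it runs eagerly; wrapped in tf.function, it is traced into a graph whose operations can be inspected (and later optimized or exported), while producing the same numbers.

```python
import tensorflow as tf


def dense_relu(x, w, b):
    return tf.nn.relu(tf.matmul(x, w) + b)


# Eager: runs op by op in Python, easy to step through and print.
# tf.function: traced into a graph once per input signature.
graph_dense_relu = tf.function(dense_relu)

x = tf.random.normal((4, 3))
w = tf.random.normal((3, 2))
b = tf.zeros((2,))

eager_out = dense_relu(x, w, b)
graph_out = graph_dense_relu(x, w, b)

# Inspect the traced graph's operations.
concrete = graph_dense_relu.get_concrete_function(x, w, b)
op_types = {op.type for op in concrete.graph.get_operations()}
```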

Hire top talent with TensorFlow skills tests and the right interview questions

When seeking to hire candidates with TensorFlow skills, it is important to verify that they possess the necessary expertise. This ensures that you are bringing in individuals who can contribute effectively to your projects.

One of the most accurate ways to assess these skills is by using skill tests. Consider using our Deep Learning Online Test or the Neural Networks Test for precise evaluations.

After administering these tests, you can efficiently shortlist the best applicants and invite them for interviews. This focused approach helps streamline your hiring process.

To get started, sign up on our assessment platform and explore more tailored tests from our test library. This will ensure you find the right TensorFlow experts for your team.


TensorFlow Interview Questions FAQs

General questions cover TensorFlow architecture, basic operations, and its applications in machine learning.

For junior developers, focus on fundamental concepts such as TensorFlow basics, data preprocessing, and simple model building.

For mid-level developers, ask about intermediate topics like custom model training, optimization techniques, and TensorFlow's ecosystem tools.

For senior developers, use advanced questions about model deployment, distributed training, and TensorFlow Extended (TFX).

Situational questions help you understand how candidates approach problem-solving and real-world scenarios using TensorFlow.

Model optimization questions evaluate a candidate's ability to improve model performance and efficiency.
