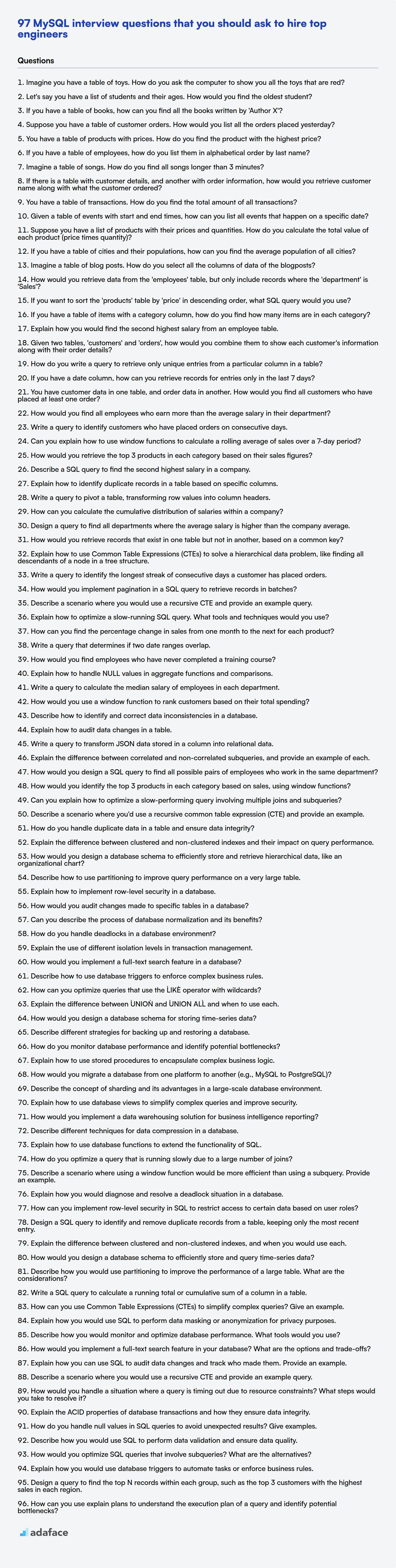

SQL skills are foundational across many technical roles, from Data Analysts to Database Administrators. Effectively assessing a candidate's SQL abilities is therefore, key to ensuring they can meet the demands of the role; just like identifying the right skills for a data engineer.

This blog post provides a range of SQL interview questions, categorized by skill level: basic, intermediate, advanced, and expert, in addition to MCQs. You will find carefully curated questions designed to evaluate candidates' SQL proficiency and problem-solving abilities.

By using these questions, you will confidently assess candidates' SQL knowledge and practical skills, and reduce time to hire by using Adaface's SQL assessments before the interview.

Table of contents

Basic SQL Queries interview questions

1. Imagine you have a table of toys. How do you ask the computer to show you all the toys that are red?

Assuming we're using SQL, you'd use a SELECT statement with a WHERE clause to filter by color. It would look something like this:

SELECT * FROM toys WHERE color = 'red';

This SQL query selects all columns (*) from the toys table, but only includes rows where the color column has the value 'red'.

2. Let's say you have a list of students and their ages. How would you find the oldest student?

To find the oldest student, I would iterate through the list, keeping track of the oldest student found so far and their age. For each student, I would compare their age to the age of the current oldest student. If the current student is older, I would update the oldest student. At the end of the iteration, the variable would hold the oldest student.

In Python, this can be done using a loop:

students = [{"name": "Alice", "age": 20}, {"name": "Bob", "age": 22}, {"name": "Charlie", "age": 19}]

oldest_student = students[0]

for student in students:

if student["age"] > oldest_student["age"]:

oldest_student = student

print(oldest_student)

3. If you have a table of books, how can you find all the books written by 'Author X'?

To find all books written by 'Author X' from a table of books, you would use a SQL query with a WHERE clause. Assuming your table is named books and has a column named author, the query would look like this:

SELECT * FROM books WHERE author = 'Author X';

This query selects all columns (*) from the books table where the value in the author column is equal to 'Author X'. The result will be a list of all books written by that author.

4. Suppose you have a table of customer orders. How would you list all the orders placed yesterday?

To list all orders placed yesterday, you would use a SQL query similar to the following:

SELECT *

FROM Orders

WHERE OrderDate = CURRENT_DATE - INTERVAL '1 day';

This query assumes that OrderDate is the column storing the date of the order. The CURRENT_DATE - INTERVAL '1 day' portion calculates yesterday's date. If the database system uses a different date function (e.g., DATE() in MySQL or GETDATE() in SQL Server), the query would need to be adjusted accordingly. Also the above query returns just the date, but you might need to adjust the format of date-time column to just date for comparison, depends on your database system.

5. You have a table of products with prices. How do you find the product with the highest price?

To find the product with the highest price, you'd typically use SQL. Assuming your table is named products and has columns product_name and price, the query would look like this:

SELECT product_name, price

FROM products

ORDER BY price DESC

LIMIT 1;

This query orders the table by the price column in descending order and then limits the result to the first row, which contains the product with the highest price. Some databases might offer alternative ways to achieve the same result, such as using a MAX() function in a subquery, but the above approach is generally efficient and readable.

6. If you have a table of employees, how do you list them in alphabetical order by last name?

Assuming your employee table has columns like first_name and last_name, you can use an SQL query like this:

SELECT * FROM employees ORDER BY last_name ASC;

This query selects all columns (*) from the employees table and orders the results alphabetically in ascending order (ASC) based on the values in the last_name column.

7. Imagine a table of songs. How do you find all songs longer than 3 minutes?

Assuming the table is named songs and has a column duration_seconds representing the song's length in seconds, the following SQL query would retrieve all songs longer than 3 minutes (180 seconds):

SELECT * FROM songs WHERE duration_seconds > 180;

This query selects all columns (*) from the songs table where the duration_seconds column is greater than 180.

8. If there is a table with customer details, and another with order information, how would you retrieve customer name along with what the customer ordered?

To retrieve customer name along with order details from two tables, one with customer details and another with order information, you would typically use a SQL JOIN operation. Assuming the tables are named Customers and Orders, and they share a common column such as CustomerID, the query would look like this:

SELECT c.CustomerName, o.OrderDetails

FROM Customers c

JOIN Orders o ON c.CustomerID = o.CustomerID;

This query joins the Customers table (aliased as c) with the Orders table (aliased as o) based on the CustomerID. It then selects the CustomerName from the Customers table and OrderDetails from the Orders table for each matching row.

9. You have a table of transactions. How do you find the total amount of all transactions?

To find the total amount of all transactions in a table, you would use the SUM() aggregate function in SQL.

SELECT SUM(transaction_amount) AS total_amount

FROM transactions;

Replace transaction_amount with the actual column name that holds the transaction amounts in your transactions table. The AS total_amount part is optional; it just gives a name to the resulting sum.

10. Given a table of events with start and end times, how can you list all events that happen on a specific date?

To list all events that happen on a specific date, you need to query the table and filter the results based on the event's start and end times. Assuming your table has columns start_time and end_time (both with appropriate datetime datatypes), and you want to find events happening on a date, say '2024-03-15', you can use the following SQL-like logic:

SELECT * FROM events

WHERE start_time <= '2024-03-15 23:59:59' AND end_time >= '2024-03-15 00:00:00';

This query selects all events where the start_time is before the end of the given date, and the end_time is after the beginning of the given date. This ensures that events that start before, end after, or occur entirely within the specified date are included in the results. Replace '2024-03-15' with the desired date.

11. Suppose you have a list of products with their prices and quantities. How do you calculate the total value of each product (price times quantity)?

To calculate the total value of each product, you simply multiply the price of the product by its quantity. For example, if a product has a price of $10 and a quantity of 5, the total value is $10 * 5 = $50.

If you are working with a list or array of products, you would iterate through the list and apply this calculation to each product. In Python, this could look like:

products = [{"name": "A", "price": 10, "quantity": 5}, {"name": "B", "price": 20, "quantity": 2}]

for product in products:

total_value = product["price"] * product["quantity"]

print(f"Total value of {product['name']}: ${total_value}")

12. If you have a table of cities and their populations, how can you find the average population of all cities?

To find the average population of all cities, you would sum the population of each city and then divide by the total number of cities. This can be done using SQL with the following steps:

- Use the

SUM()aggregate function to calculate the total population of all cities. - Use the

COUNT(*)aggregate function to count the total number of cities. - Divide the total population by the total number of cities to get the average. For example:

SELECT SUM(population) / COUNT(*) AS average_population

FROM cities;

13. Imagine a table of blog posts. How do you select all the columns of data of the blogposts?

To select all columns from a table named blogposts, you would use the following SQL query:

SELECT * FROM blogposts;

The * symbol is a wildcard that represents all columns in the table. This query retrieves every column (e.g., id, title, content, author, date_published) for each row in the blogposts table.

14. How would you retrieve data from the 'employees' table, but only include records where the 'department' is 'Sales'?

To retrieve data from the 'employees' table where the 'department' is 'Sales', you would use a SQL query with a WHERE clause. Here's an example:

SELECT * FROM employees WHERE department = 'Sales';

This query selects all columns (*) from the 'employees' table, but filters the results to only include rows where the value in the 'department' column is equal to 'Sales'.

15. If you want to sort the 'products' table by 'price' in descending order, what SQL query would you use?

To sort the 'products' table by 'price' in descending order, you would use the following SQL query:

SELECT * FROM products ORDER BY price DESC;

The ORDER BY clause is used to sort the result set. DESC keyword specifies that the sorting should be in descending order. If you wanted ascending order (which is the default), you would use ASC or omit the keyword.

16. If you have a table of items with a category column, how do you find how many items are in each category?

To find how many items are in each category in a table, you would use a GROUP BY clause in SQL. This clause groups rows that have the same value in a specified column into summary rows, like counting the occurrences of each category.

For example, if your table is named items and has a column named category, the query would look like this:

SELECT category, COUNT(*) AS item_count

FROM items

GROUP BY category;

This query selects the category and the count of items (COUNT(*)) for each category. The GROUP BY category clause ensures that the count is calculated separately for each distinct category.

17. Explain how you would find the second highest salary from an employee table.

To find the second highest salary from an employee table, you can use SQL. One common approach involves using a subquery or window functions. Using a subquery, you'd first find the maximum salary, and then select the maximum salary that is less than the maximum salary.

SELECT MAX(salary) FROM employee WHERE salary < (SELECT MAX(salary) FROM employee);

Alternatively, using window functions:

SELECT salary FROM (SELECT salary, DENSE_RANK() OVER (ORDER BY salary DESC) as rank_num FROM employee) AS ranked_salaries WHERE rank_num = 2 LIMIT 1;

This approach ranks the salaries in descending order and selects the salary with rank 2.

18. Given two tables, 'customers' and 'orders', how would you combine them to show each customer's information along with their order details?

To combine the customers and orders tables and show customer information along with order details, you'd typically use a JOIN operation in SQL. Specifically, a LEFT JOIN or INNER JOIN are common choices depending on the desired result. An INNER JOIN will only return rows where there's a match in both tables. A LEFT JOIN will return all rows from the customers table and the matching rows from the orders table. If there's no matching order for a customer, the order details columns will contain NULL values.

Here's an example SQL query assuming both tables have a common column named customer_id:

SELECT

customers.*,

orders.*

FROM

customers

LEFT JOIN

orders ON customers.customer_id = orders.customer_id;

19. How do you write a query to retrieve only unique entries from a particular column in a table?

To retrieve only unique entries from a particular column in a table, you can use the DISTINCT keyword in SQL. The basic syntax is:

SELECT DISTINCT column_name FROM table_name;

This query will return a list of all the distinct values present in the specified column, effectively removing any duplicate entries. For example, if you have a table named 'Customers' and you want to retrieve all the unique cities from the 'City' column, the query would be SELECT DISTINCT City FROM Customers;

20. If you have a date column, how can you retrieve records for entries only in the last 7 days?

To retrieve records from a date column for entries only in the last 7 days, you would typically use a WHERE clause in your SQL query that compares the date column to the current date minus 7 days.

For example, assuming your date column is named date_column and your table is named your_table, the SQL query would look like this:

SELECT * FROM your_table WHERE date_column >= DATE('now', '-7 days');

This query selects all records from your_table where the date_column is within the last 7 days, including today.

21. You have customer data in one table, and order data in another. How would you find all customers who have placed at least one order?

To find all customers who have placed at least one order, I would use a JOIN operation between the customer and order tables. Assuming the customer table has a customer_id and the order table has a customer_id referencing the customer, a SQL query would look like this:

SELECT DISTINCT c.customer_id

FROM customers c

JOIN orders o ON c.customer_id = o.customer_id;

This query joins the two tables based on the customer_id. The DISTINCT keyword ensures that each customer is only listed once, even if they have placed multiple orders. If you don't have SQL you can also use pandas/spark or any other data processing tool to achieve the same result using similar logic.

Intermediate SQL Queries interview questions

1. How would you find all employees who earn more than the average salary in their department?

To find all employees who earn more than the average salary in their department, you can use SQL. The general approach involves using a subquery or a window function.

Here's an example using a subquery:

SELECT employee_name, salary, department

FROM employees e

WHERE salary > (

SELECT AVG(salary)

FROM employees

WHERE department = e.department

);

Alternatively, using a window function:

SELECT employee_name, salary, department

FROM (

SELECT employee_name, salary, department, AVG(salary) OVER (PARTITION BY department) as avg_dept_salary

FROM employees

) AS subquery

WHERE salary > avg_dept_salary;

Both queries achieve the same goal, but the window function approach can sometimes be more efficient for larger datasets.

2. Write a query to identify customers who have placed orders on consecutive days.

To identify customers who have placed orders on consecutive days, you can use a self-join or window functions. Here's an example using SQL with window functions:

SELECT DISTINCT customer_id

FROM (

SELECT

customer_id,

order_date,

LAG(order_date, 1, order_date) OVER (PARTITION BY customer_id ORDER BY order_date) as prev_order_date

FROM

orders

) sub

WHERE DATEDIFF(order_date, prev_order_date) = 1;

This query uses the LAG window function to get the previous order date for each customer. Then, it filters for rows where the difference between the current order date and the previous order date is exactly 1 day. The outer SELECT DISTINCT ensures each customer is only listed once.

3. Can you explain how to use window functions to calculate a rolling average of sales over a 7-day period?

To calculate a rolling 7-day average of sales using window functions, you would typically use SQL. The key is the AVG() aggregate function combined with the OVER() clause to define the window. Within OVER(), you specify ORDER BY to define the order and ROWS BETWEEN to define the window frame, in this case, 6 preceding days and the current day.

Here's an example SQL query:

SELECT

sale_date,

sales_amount,

AVG(sales_amount) OVER (ORDER BY sale_date ASC ROWS BETWEEN 6 PRECEDING AND CURRENT ROW) AS rolling_avg

FROM

sales_table;

This query calculates the average sales_amount for each sale_date, considering the current day and the 6 days before it.

4. How would you retrieve the top 3 products in each category based on their sales figures?

To retrieve the top 3 products in each category based on sales figures, I'd typically use a window function in SQL. Assuming you have a table named products with columns like category_id, product_id, and sales, the query would look like this:

SELECT category_id, product_id, sales

FROM (

SELECT category_id, product_id, sales,

RANK() OVER (PARTITION BY category_id ORDER BY sales DESC) as sales_rank

FROM products

) ranked_products

WHERE sales_rank <= 3;

This query first ranks products within each category based on sales in descending order using the RANK() window function. Then, it selects only those products where the rank is less than or equal to 3, effectively giving you the top 3 products per category. The PARTITION BY clause ensures that the ranking is done separately for each category.

5. Describe a SQL query to find the second highest salary in a company.

To find the second highest salary, you can use a subquery or window functions.

Using a subquery:

SELECT MAX(salary)

FROM employee

WHERE salary < (SELECT MAX(salary) FROM employee);

Using window functions (more robust for handling edge cases like ties):

SELECT salary

FROM (

SELECT salary, DENSE_RANK() OVER (ORDER BY salary DESC) as salary_rank

FROM employee

) AS ranked_salaries

WHERE salary_rank = 2;

The window function approach is generally preferred because it can handle scenarios where multiple employees might have the same highest salary, ensuring that you still retrieve the second distinct salary.

6. Explain how to identify duplicate records in a table based on specific columns.

To identify duplicate records in a table based on specific columns, you can use SQL queries. The basic idea is to group the data by the columns you want to check for duplicates and then count the occurrences of each group. If any group has a count greater than 1, it indicates duplicate records.

Here's a general SQL approach:

SELECT column1, column2, COUNT(*)

FROM table_name

GROUP BY column1, column2

HAVING COUNT(*) > 1;

Replace column1, column2 with the names of the columns you want to check for duplicates. The HAVING COUNT(*) > 1 clause filters the results to show only those groups (combinations of column1 and column2 values) that appear more than once in the table.

7. Write a query to pivot a table, transforming row values into column headers.

Pivoting data transforms row values into column headers. The exact syntax varies depending on the database system.

For example, in SQL Server, you could use the PIVOT operator:

SELECT * FROM

(

SELECT category, product, sales FROM SalesTable

) AS SourceTable

PIVOT

(

SUM(sales)

FOR category IN ([Category1], [Category2], [Category3]) -- specify all possible categories

) AS PivotTable;

In other databases like MySQL, you might need to use conditional aggregation:

SELECT

product,

SUM(CASE WHEN category = 'Category1' THEN sales ELSE 0 END) AS Category1,

SUM(CASE WHEN category = 'Category2' THEN sales ELSE 0 END) AS Category2,

SUM(CASE WHEN category = 'Category3' THEN sales ELSE 0 END) AS Category3

FROM

SalesTable

GROUP BY product;

8. How can you calculate the cumulative distribution of salaries within a company?

To calculate the cumulative distribution of salaries, you need a dataset of all salaries. First, sort the salaries in ascending order. Then, for each salary, calculate the cumulative probability by dividing its rank (position in the sorted list) by the total number of salaries. This result represents the proportion of employees earning at or below that salary. The result is the cumulative distribution.

For example, if you have 100 salaries, the employee with the lowest salary has a cumulative probability of 1/100 = 0.01, meaning 1% of employees earn at or below that salary. The employee in the middle (50th position) has a cumulative probability of 50/100 = 0.5, or 50%. The highest paid employee would have a cumulative probability of 1.0 (100%). This can be easily visualized with a cumulative distribution function (CDF) plot.

9. Design a query to find all departments where the average salary is higher than the company average.

SELECT department

FROM employees

GROUP BY department

HAVING AVG(salary) > (SELECT AVG(salary) FROM employees);

This query first calculates the average salary for each department using GROUP BY and AVG(salary). Then, the HAVING clause filters these departments, keeping only those where the average salary is greater than the overall company's average salary (calculated by the subquery SELECT AVG(salary) FROM employees).

10. How would you retrieve records that exist in one table but not in another, based on a common key?

To retrieve records that exist in one table (Table A) but not in another (Table B) based on a common key, you can use a LEFT JOIN or a NOT EXISTS clause in SQL. A LEFT JOIN includes all rows from the left table (Table A) and the matching rows from the right table (Table B). By filtering for rows where the right table's join column is NULL, you can identify records present only in Table A.

Alternatively, the NOT EXISTS clause checks for the absence of matching records in Table B for each record in Table A. This approach is often more performant, especially with proper indexing.

Example using LEFT JOIN:

SELECT A.*

FROM TableA A

LEFT JOIN TableB B ON A.CommonKey = B.CommonKey

WHERE B.CommonKey IS NULL;

Example using NOT EXISTS:

SELECT A.*

FROM TableA A

WHERE NOT EXISTS (SELECT 1 FROM TableB B WHERE A.CommonKey = B.CommonKey);

11. Explain how to use Common Table Expressions (CTEs) to solve a hierarchical data problem, like finding all descendants of a node in a tree structure.

CTEs provide a clean and efficient way to traverse hierarchical data. For finding all descendants of a node, a recursive CTE can be used. The CTE consists of two parts: an anchor member and a recursive member. The anchor member selects the initial node (the root node for finding all descendants). The recursive member then joins the CTE with the original table to find the children of the previously selected nodes.

Here's a general example:

WITH RECURSIVE Descendants AS (

SELECT id, parent_id, name

FROM your_table

WHERE id = 'target_node_id' -- Anchor member: start from the target node

UNION ALL

SELECT t.id, t.parent_id, t.name

FROM your_table t

INNER JOIN Descendants d ON t.parent_id = d.id -- Recursive member: find children

)

SELECT * FROM Descendants;

your_table contains the hierarchical data, id is the node identifier, and parent_id represents the parent of a given node. The initial SELECT statement fetches the target node. The UNION ALL combines the initial selection with the results of the recursive step. The recursive step joins your_table with the CTE (Descendants) to find child nodes. The recursion continues until no more descendants are found.

12. Write a query to identify the longest streak of consecutive days a customer has placed orders.

To identify the longest streak of consecutive days a customer has placed orders, you can use SQL. Here's a general approach:

WITH RankedOrders AS (

SELECT

customer_id,

order_date,

ROW_NUMBER() OVER (PARTITION BY customer_id ORDER BY order_date) AS rn

FROM

orders

GROUP BY customer_id, order_date -- handles multiple orders on the same day

),

ConsecutiveDays AS (

SELECT

customer_id,

order_date,

DATE(order_date, '-' || (rn - 1) || ' days') AS date_group

FROM

RankedOrders

),

Streaks AS (

SELECT

customer_id,

date_group,

COUNT(*) AS streak_length

FROM

ConsecutiveDays

GROUP BY

customer_id, date_group

)

SELECT

customer_id,

MAX(streak_length) AS longest_streak

FROM

Streaks

GROUP BY customer_id

ORDER BY longest_streak DESC;

First, we assign a rank to each order date for each customer. Then, we calculate a date_group by subtracting the rank minus one from the order date. Consecutive dates will have the same date_group. Finally, we group by customer_id and date_group to calculate the streak_length and find the maximum streak for each customer.

13. How would you implement pagination in a SQL query to retrieve records in batches?

Pagination in SQL is typically implemented using the LIMIT and OFFSET clauses. LIMIT specifies the maximum number of rows to return, while OFFSET specifies the number of rows to skip from the beginning of the result set.

For example, to retrieve records 11-20 (a batch of 10 records starting from the 11th record), the query would look like this:

SELECT * FROM table_name ORDER BY id LIMIT 10 OFFSET 10;

In this query LIMIT 10 restricts the number of returned rows to 10, and OFFSET 10 skips the first 10 rows. This is suitable for a single database. Use a keyset pagination method if you want to scale to multiple DB shards.

14. Describe a scenario where you would use a recursive CTE and provide an example query.

A recursive CTE is useful when you need to query hierarchical or tree-structured data. For example, imagine an employee table where each employee can report to another employee (forming a management hierarchy). If you need to find all subordinates of a given manager, a recursive CTE is an efficient approach.

WITH RECURSIVE EmployeeHierarchy AS (

SELECT id, name, manager_id, 0 AS level

FROM Employees

WHERE id = 'specific_manager_id' -- Start with the specific manager

UNION ALL

SELECT e.id, e.name, e.manager_id, eh.level + 1

FROM Employees e

INNER JOIN EmployeeHierarchy eh ON e.manager_id = eh.id -- Find subordinates

)

SELECT id, name, level FROM EmployeeHierarchy;

This query starts with a specific manager and then recursively joins the Employees table with the CTE to find all employees who report to someone already in the CTE, effectively traversing the hierarchy downwards. The level column indicates the depth of each employee in the hierarchy relative to the initial manager. The query will continue until it reaches the bottom of the hierarchy (employees with no subordinates).

15. Explain how to optimize a slow-running SQL query. What tools and techniques would you use?

To optimize a slow-running SQL query, I'd start by identifying the bottleneck. I'd use tools like the database's query execution plan analyzer (e.g., EXPLAIN in MySQL or PostgreSQL) to understand how the database is executing the query and pinpoint slow operations, such as full table scans or inefficient joins. Common techniques include:

- Index Optimization: Ensuring appropriate indexes exist on columns used in

WHEREclauses,JOINconditions, andORDER BYclauses. Adding, removing, or modifying indexes based on the query's execution plan can significantly improve performance. - Query Rewriting: Restructuring the query to be more efficient. This might involve simplifying complex

WHEREclauses, avoidingSELECT *, usingJOINs instead of subqueries (or vice-versa depending on the context), and optimizingGROUP BYandORDER BYoperations. - Data Partitioning: For large tables, partitioning data can reduce the amount of data scanned by the query.

- Caching: Implementing caching mechanisms (e.g., using database-level caching or an external caching layer like Redis) to store frequently accessed data.

- Hardware Upgrades: If the database server is resource-constrained (CPU, memory, disk I/O), upgrading the hardware can improve overall performance.

- Statistics Updates: Ensuring that the database optimizer has up-to-date statistics about the data distribution in tables. These statistics are used to generate efficient execution plans.

- Analyze Query Patterns: Identifying common slow queries and optimizing them or re-designing related functionality.

I would also use database monitoring tools to observe query performance over time and identify any recurring issues. I would profile the query with the execution plan frequently to observe differences as I implemented new indexes or re-wrote the query.

16. How can you find the percentage change in sales from one month to the next for each product?

To find the percentage change in sales from one month to the next for each product, you can use the following formula:

Percentage Change = ((Current Month Sales - Previous Month Sales) / Previous Month Sales) * 100

You would apply this formula to each product individually. For example, if Product A had sales of 100 in January and 120 in February, the percentage change would be ((120 - 100) / 100) * 100 = 20%. This calculation would be repeated for each product and each month-to-month comparison.

17. Write a query that determines if two date ranges overlap.

To determine if two date ranges overlap, you can use the following logic. Let's say we have two ranges, Range A (start_a, end_a) and Range B (start_b, end_b). The ranges overlap if and only if: start_a <= end_b AND start_b <= end_a. This condition verifies that the starting point of either range falls before the ending point of the other range.

Here's an example SQL query illustrating this:

SELECT

CASE

WHEN start_a <= end_b AND start_b <= end_a THEN 'Overlap'

ELSE 'No Overlap'

END AS OverlapStatus

FROM

YourTable;

18. How would you find employees who have never completed a training course?

To find employees who haven't completed a specific training course, you'd typically query a database with employee and training information. Assuming you have an employees table and a training_completions table, you can use a LEFT JOIN and check for NULL values in the training_completions table.

For example, in SQL:

SELECT e.employee_id, e.employee_name

FROM employees e

LEFT JOIN training_completions tc ON e.employee_id = tc.employee_id AND tc.course_id = 'specific_course_id'

WHERE tc.employee_id IS NULL;

This query retrieves all employees from the employees table and attempts to match them with records in the training_completions table for the specified course. The WHERE clause filters for cases where there's no matching record in training_completions (i.e., tc.employee_id IS NULL), indicating the employee hasn't completed the course.

19. Explain how to handle NULL values in aggregate functions and comparisons.

Aggregate functions (like SUM, AVG, COUNT, MIN, MAX) typically handle NULL values by excluding them from the calculation. For example, SUM(column_with_nulls) will sum only the non-NULL values in that column. COUNT(*) counts all rows, including those with NULLs, while COUNT(column_name) counts only rows where column_name is not NULL.

Comparisons with NULL always evaluate to UNKNOWN (which is treated as neither true nor false in SQL). Therefore, you cannot use =, !=, <, >, etc. to directly compare with NULL. Instead, use IS NULL and IS NOT NULL to check for NULL values. For example, WHERE column_name IS NULL will select rows where column_name contains a NULL value.

20. Write a query to calculate the median salary of employees in each department.

Calculating the median salary requires different approaches depending on the database system. Here's a general outline using SQL and some common approaches:

WITH DepartmentSalaries AS (

SELECT

department_id,

salary,

ROW_NUMBER() OVER (PARTITION BY department_id ORDER BY salary) AS row_num,

COUNT(*) OVER (PARTITION BY department_id) AS total_employees

FROM

employees

),

MedianCalculator AS (

SELECT

department_id,

AVG(salary) AS median_salary

FROM

DepartmentSalaries

WHERE

row_num BETWEEN (total_employees + 1) / 2 AND (total_employees + 2) / 2

GROUP BY

department_id

)

SELECT

mc.department_id,

mc.median_salary

FROM

MedianCalculator mc;

This SQL code calculates the median salary for each department by first assigning a row number to each employee within their department based on salary. Then, it calculates the median by averaging the salary of the middle one or two employees (depending on whether the total number of employees is odd or even). This approach provides a general solution that can be adapted to various SQL databases.

21. How would you use a window function to rank customers based on their total spending?

To rank customers based on their total spending using a window function, you'd typically use the RANK(), DENSE_RANK(), or ROW_NUMBER() functions in SQL. These functions allow you to assign a rank to each row within a partition of your result set, without grouping the rows.

For example, assuming you have a table named orders with columns customer_id and order_amount, and you want to rank customers based on their total spending, you could use the following SQL query:

SELECT

customer_id,

SUM(order_amount) AS total_spending,

RANK() OVER (ORDER BY SUM(order_amount) DESC) AS spending_rank

FROM

orders

GROUP BY

customer_id;

This query calculates the sum of order_amount for each customer_id, and then the RANK() function assigns a rank based on the total spending, with the customer having the highest spending getting rank 1. DENSE_RANK() would assign consecutive ranks without gaps, even if there are ties in total_spending. ROW_NUMBER() assigns a unique rank to each row.

22. Describe how to identify and correct data inconsistencies in a database.

Identifying and correcting data inconsistencies involves several steps. First, data profiling helps understand data patterns and identify anomalies like out-of-range values or unusual distributions. Data inconsistencies can also be detected by implementing data validation rules at the application or database level (e.g., using constraints, triggers) to prevent invalid data entry. Then, perform data quality audits, by comparing data across different sources or within the same database using SQL queries, reporting tools, or dedicated data quality software. This involves looking for duplicate records, orphaned records, and conflicting values. Finally, use tools or custom scripts to perform data cleansing and transformation and correct discrepancies, such as data deduplication, standardization of formats, and resolving conflicting values based on business rules or source of truth.

Correcting inconsistencies requires choosing the right approach based on the specific issue. This might involve updating records manually, using SQL scripts to batch update data, or creating more complex data transformation pipelines. Always back up data before making any changes and document the correction process.

23. Explain how to audit data changes in a table.

Auditing data changes in a table involves tracking who made what changes and when. A common approach is to use triggers. You create a trigger that fires on INSERT, UPDATE, or DELETE operations on the table you want to audit. This trigger then inserts a record into an audit table.

The audit table typically includes the following columns:

table_name: The name of the table being audited.column_name: The name of the column changed.primary_key_value: The primary key of the affected row.old_value: The value before the change.new_value: The value after the change.changed_by: The user who made the change.changed_at: The timestamp of the change.

Here's an example of a trigger:

CREATE TRIGGER audit_employee

AFTER UPDATE ON employee

FOR EACH ROW

BEGIN

INSERT INTO employee_audit (

table_name,

column_name,

primary_key_value,

old_value,

new_value,

changed_by,

changed_at

)

VALUES (

'employee',

'salary',

OLD.id,

OLD.salary,

NEW.salary,

USER(),

NOW()

);

END;

Some databases also offer built-in auditing features that can simplify this process.

24. Write a query to transform JSON data stored in a column into relational data.

The specific query depends heavily on the database system you're using and the structure of your JSON data. However, the general approach involves using database-specific JSON functions to extract values from the JSON column and map them to relational columns.

For example, in PostgreSQL:

SELECT

json_data ->> 'field1' AS column1,

json_data ->> 'field2' AS column2

FROM

your_table;

Where json_data is the name of the column containing JSON, and field1, field2 are keys within the JSON object. The ->> operator extracts the value as text. Similar functions exist in other databases like MySQL (JSON_EXTRACT), SQL Server (JSON_VALUE), and others. Consult the documentation for your specific database for the correct functions and syntax.

25. Explain the difference between correlated and non-correlated subqueries, and provide an example of each.

A correlated subquery depends on the outer query for its values. The inner query executes once for each row processed by the outer query. In contrast, a non-correlated subquery is independent and executes only once. Its result is then used by the outer query.

Example:

- Non-correlated:

SELECT * FROM employees WHERE salary > (SELECT AVG(salary) FROM employees);- The subquery calculates the average salary once. - Correlated:

SELECT * FROM employees e WHERE salary > (SELECT AVG(salary) FROM departments WHERE department_id = e.department_id);- The subquery calculates the average salary for each employee's department.

26. Write a query to identify the top 5 customers who contributed to 80% of the total sales.

WITH CustomerSales AS (

SELECT

customer_id,

SUM(sale_amount) AS total_sales

FROM

sales_table

GROUP BY

customer_id

),

RankedSales AS (

SELECT

customer_id,

total_sales,

SUM(total_sales) OVER (ORDER BY total_sales DESC) AS cumulative_sales,

SUM(total_sales) OVER () AS total_overall_sales

FROM

CustomerSales

),

PercentageSales AS (

SELECT

customer_id,

total_sales,

cumulative_sales,

total_overall_sales,

(cumulative_sales * 1.0 / total_overall_sales) * 100 AS cumulative_percentage

FROM

RankedSales

)

SELECT

customer_id,

total_sales

FROM

PercentageSales

WHERE

cumulative_percentage <= 80

ORDER BY

total_sales DESC

LIMIT 5;

This SQL query identifies the top 5 customers contributing to 80% of total sales. It calculates each customer's total sales, then cumulatively sums the sales in descending order to determine the percentage contribution. Finally, it filters for customers whose cumulative percentage is less than or equal to 80% and selects the top 5 by total sales.

27. How would you design a SQL query to find all possible pairs of employees who work in the same department?

To find all possible pairs of employees who work in the same department, you can use a self-join. Here's the SQL query:

SELECT e1.employee_id, e2.employee_id

FROM employees e1

JOIN employees e2 ON e1.department_id = e2.department_id AND e1.employee_id < e2.employee_id;

This query joins the employees table to itself (e1 and e2) based on the condition that their department_id values are equal. The e1.employee_id < e2.employee_id condition prevents duplicate pairs (e.g., (A, B) and (B, A)) and also avoids pairing an employee with themselves.

Advanced SQL Queries interview questions

1. How would you identify the top 3 products in each category based on sales, using window functions?

To identify the top 3 products in each category based on sales using window functions in SQL, you can use the RANK() or DENSE_RANK() function. First, partition the data by category. Then, order the products within each category by sales in descending order. Assign a rank to each product based on its sales within its category. Finally, filter the result to include only those products with a rank less than or equal to 3.

For example, if you're using SQL:

WITH RankedSales AS (

SELECT

category,

product_name,

sales,

RANK() OVER (PARTITION BY category ORDER BY sales DESC) AS sales_rank

FROM

sales_table

)

SELECT

category,

product_name,

sales

FROM

RankedSales

WHERE

sales_rank <= 3;

2. Can you explain how to optimize a slow-performing query involving multiple joins and subqueries?

To optimize a slow query with multiple joins and subqueries, start by analyzing the query execution plan using tools like EXPLAIN. Identify the most expensive operations, such as full table scans or poorly indexed joins. Focus on optimizing the worst offenders first. Index relevant columns used in JOIN conditions and WHERE clauses. Rewrite subqueries as joins where possible or consider using temporary tables to store intermediate results. Check statistics and update them to ensure the query optimizer has the best information.

Further optimizations may include rewriting the query to minimize the amount of data processed, filtering data as early as possible, or using appropriate data types. Partitioning large tables can also improve performance. Finally, consider denormalizing the database schema if read performance is critical and write performance is less of a concern. For example if using MySQL, check slow_query_log and also performance_schema for bottlenecks and specific query insights.

3. Describe a scenario where you'd use a recursive common table expression (CTE) and provide an example.

A recursive CTE is useful when you need to traverse a hierarchical data structure, such as an organizational chart, a bill of materials, or a graph of relationships. For example, imagine you have a table Employee with columns EmployeeID and ManagerID. You want to find all employees who report, directly or indirectly, to a specific manager.

Here's an example:

WITH RECURSIVE EmployeeHierarchy AS (

-- Anchor member: select the initial manager

SELECT EmployeeID, ManagerID, EmployeeID AS RootManagerID

FROM Employee

WHERE ManagerID IS NULL -- Assuming top manager's ManagerID is NULL

UNION ALL

-- Recursive member: find subordinates of the current level

SELECT e.EmployeeID, e.ManagerID, eh.RootManagerID

FROM Employee e

INNER JOIN EmployeeHierarchy eh ON e.ManagerID = eh.EmployeeID

)

SELECT EmployeeID FROM EmployeeHierarchy WHERE RootManagerID = 123; --Example:Find all under ManagerID 123

4. How do you handle duplicate data in a table and ensure data integrity?

To handle duplicate data and ensure integrity, I'd first identify the duplicates. This can be done using SQL queries with GROUP BY and HAVING COUNT(*) > 1 clauses to find rows with identical values in key columns. Then, I'd choose a strategy for dealing with them. Options include:

- Removing duplicates: Use

ROW_NUMBER()and a common table expression (CTE) to partition the data, assign a unique number to each row within the partition, and then delete rows withrow_number > 1. - Preventing duplicates: Implement unique constraints or indexes on the relevant columns during table creation or alteration. This will prevent future insertion of duplicate rows.

- Data validation: Implement validation rules in the application layer or using database triggers to catch and handle potential duplicates before they are inserted. Another approach is to implement

MERGEstatements for upsert logic where existing records are updated or new ones are created.

5. Explain the difference between clustered and non-clustered indexes and their impact on query performance.

Clustered indexes determine the physical order of data in a table. A table can only have one clustered index. Because the data is physically sorted according to the clustered index, queries that use the clustered index to retrieve data are generally very fast. Think of it like a phone book, where the data is physically ordered by last name.

Non-clustered indexes, on the other hand, are like a separate lookup table that contains a pointer to the actual data rows. A table can have multiple non-clustered indexes. When a query uses a non-clustered index, the database engine first finds the row in the index, and then uses the pointer to retrieve the actual data from the table. This can be slower than using a clustered index because of the extra step required to retrieve the data. However, non-clustered indexes can still significantly improve query performance, especially for queries that only need to retrieve a few columns of data. For example, imagine a library catalog; it can quickly point you to the location of a book without having to scan every book on the shelves.

6. How would you design a database schema to efficiently store and retrieve hierarchical data, like an organizational chart?

There are a few common ways to model hierarchical data in a relational database. An adjacency list model uses a simple self-referencing foreign key. A table would contain columns like id, name, and parent_id. parent_id would reference the id of the parent node, or be null for the root. Retrieval is straightforward for direct children, but becomes complex for deeper hierarchies requiring recursive queries or stored procedures.

Alternatively, a materialized path model stores the entire path to a node as a string (e.g., '1/5/12'). This allows for efficient retrieval of descendants using LIKE clauses. However, updates (insertions/deletions) require updating many rows to maintain path integrity. Another approach is the nested set model which uses left and right values to represent the tree structure. Retrieving descendants is efficient (e.g., WHERE left BETWEEN node.left AND node.right), but insertions/deletions are complex and computationally expensive. The optimal choice depends on the frequency of reads versus writes and the depth of the hierarchy.

7. Describe how to use partitioning to improve query performance on a very large table.

Partitioning a large table divides it into smaller, more manageable pieces based on a chosen partitioning key (e.g., date, region). This improves query performance by allowing the database to scan only the relevant partitions instead of the entire table. This drastically reduces I/O operations and CPU usage. For example, a table of customer orders partitioned by order date enables queries for orders in a specific month to only scan that month's partition.

Different partitioning strategies exist (range, list, hash), each suited for different use cases. Range partitioning (e.g., by date) is common for time-series data. Proper partition key selection is critical; frequently queried columns are good candidates. Query predicates should include the partition key to achieve optimal performance benefits. Also remember to consider maintenance (adding/removing partitions) which may impact performance.

8. Explain how to implement row-level security in a database.

Row-level security (RLS) restricts data access at the row level, enabling users to see only the data relevant to them. Implementation typically involves creating security policies associated with database tables. These policies define filter predicates that determine which rows a user can access based on attributes like user ID, group membership, or other relevant criteria.

Common methods include using database views with WHERE clauses based on user context (less secure, harder to manage), or implementing dedicated RLS features (if available) like in PostgreSQL with CREATE POLICY. RLS policies are generally more efficient and robust since the filtering is handled by the database engine during query execution. For example, in PostgreSQL:

CREATE POLICY user_policy ON my_table

FOR SELECT

TO app_user

USING (user_id = current_user);

9. How would you audit changes made to specific tables in a database?

To audit changes to specific tables, several approaches can be used. One common method is to use database triggers. A trigger can be set up to automatically execute a function whenever data is inserted, updated, or deleted in the target table. This trigger function captures the old and new values of the changed row and inserts them into an audit table. The audit table typically includes columns for the table name, the type of operation (insert, update, delete), the timestamp of the change, the user who made the change, and the changed data itself.

Another method involves using database-specific audit features. Many modern databases offer built-in audit capabilities that allow you to track changes to data and database objects. For example, in PostgreSQL, you can use extensions like pgaudit. These features offer more robust and efficient auditing compared to triggers. Choosing between triggers and native auditing depends on the specific database system, the level of detail required, and the performance considerations.

10. Can you describe the process of database normalization and its benefits?

Database normalization is the process of organizing data in a database to reduce redundancy and improve data integrity. It typically involves dividing databases into two or more tables and defining relationships between the tables. This is achieved by following a series of normal forms (1NF, 2NF, 3NF, etc.), each with specific rules to eliminate different types of redundancy.

The benefits of normalization include reduced data redundancy, improved data consistency, easier data modification, and faster query performance in some cases. By minimizing redundant data, updates only need to be made in one place, preventing inconsistencies. This also leads to a smaller database size and improved data integrity, as data is stored logically and consistently across the database.

11. How do you handle deadlocks in a database environment?

Deadlocks occur when two or more transactions are blocked indefinitely, waiting for each other to release the resources that they hold. We can handle deadlocks using several strategies including:

- Deadlock prevention: This involves designing the system to prevent deadlocks from occurring in the first place. This can include ordering resource access, acquiring all necessary locks at once, or using timeouts to release locks.

- Deadlock detection and recovery: This approach allows deadlocks to occur, but then detects them and takes action to resolve them. Databases often have a deadlock detection mechanism that identifies cycles in the 'waits-for' graph. Once a deadlock is detected, one of the transactions involved (the 'victim') is rolled back, releasing its locks and allowing other transactions to proceed. The victim transaction may then be retried. This approach can lead to 'livelock' where a transaction is repeatedly rolled back and retried. In practice, databases typically employ this detection and recovery because prevention can be restrictive and impact performance. Setting appropriate lock timeouts is also vital.

12. Explain the use of different isolation levels in transaction management.

Transaction isolation levels control the degree to which transactions are isolated from one another's modifications. Lower isolation levels permit higher concurrency but increase the risk of phenomena like dirty reads, non-repeatable reads, and phantom reads. Common isolation levels include: Read Uncommitted (lowest level, allows dirty reads), Read Committed (prevents dirty reads), Repeatable Read (prevents dirty reads and non-repeatable reads), and Serializable (highest level, prevents all the mentioned phenomena by essentially serializing transactions).

13. How would you implement a full-text search feature in a database?

To implement full-text search, I would typically use a dedicated full-text search engine like Elasticsearch or Solr alongside the database. These engines index the text data, allowing for efficient searching based on keywords, stemming, and other text analysis techniques. The database would then be used to store the core data, with the search engine handling text-based queries. When a search query is received, it's sent to the search engine, which returns the relevant document IDs. These IDs are then used to retrieve the full records from the database.

Alternatively, some databases offer built-in full-text search capabilities. For instance, PostgreSQL has tsvector and tsquery types along with functions for indexing and querying text data. MySQL has FULLTEXT indexes. While simpler to set up initially, these built-in features might not offer the same level of performance or advanced features as dedicated search engines for large datasets or complex search requirements.

14. Describe how to use database triggers to enforce complex business rules.

Database triggers can be used to enforce complex business rules by automatically executing SQL code in response to specific database events such as INSERT, UPDATE, or DELETE operations on a table. For example, you might use a trigger to prevent an order from being created if the customer's credit limit is exceeded, or to automatically update an inventory count when a new order is placed.

To implement a complex rule, you would typically define a trigger that fires before the relevant data modification. The trigger logic then checks if the proposed change violates the business rule. If it does, the trigger can ROLLBACK the transaction, preventing the change. SQL code example:

CREATE TRIGGER check_credit_limit

BEFORE INSERT ON Orders

FOR EACH ROW

BEGIN

IF (SELECT credit_limit FROM Customers WHERE customer_id = NEW.customer_id) < NEW.order_total THEN

SIGNAL SQLSTATE '45000' SET MESSAGE_TEXT = 'Credit limit exceeded';

END IF;

END;

15. How can you optimize queries that use the `LIKE` operator with wildcards?

Optimizing LIKE queries with wildcards depends on the wildcard's position. Leading wildcards (%value) prevent index usage, requiring a full table scan, which is inefficient.

To optimize:

- Avoid leading wildcards: If possible, restructure the query to avoid

%at the beginning. For example, instead ofLIKE '%value', considerLIKE 'value%'which can use an index. - Full-text search: For complex wildcard searches, consider using full-text search capabilities offered by your database (e.g., MySQL's

MATCH AGAINST, PostgreSQL's full-text search). These are optimized for text searching. - Database-specific optimizations: Explore database-specific features and indexing strategies. For example, some databases offer trigram indexes or other techniques that can improve

LIKEperformance, regardless of wildcard position. - Limit result set: Add

LIMITclause to reduce the number of rows searched/returned, when only a limited number of result is sufficient. - Use

CONTAINSinstead ofLIKEin certain scenarios (e.g., SQL Server providesCONTAINSwhich can be more efficient for full-text searches if properly configured).

16. Explain the difference between `UNION` and `UNION ALL` and when to use each.

The UNION and UNION ALL operators in SQL are used to combine the result sets of two or more SELECT statements. The key difference is that UNION removes duplicate rows from the combined result set, while UNION ALL includes all rows, including duplicates.

Use UNION when you need a distinct set of rows from multiple sources and duplicate rows are not desired. Use UNION ALL when you want to combine all rows from multiple sources, regardless of duplicates, and performance is a concern, as removing duplicates can be a costly operation. For example, if you are archiving data and want to add one table's values into another table to create an archive, then UNION ALL should be used.

17. How would you design a database schema for storing time-series data?

A common approach is to use a wide-column store or a relational database with a specific schema. For relational databases, a simple schema could include a table with columns for timestamp, metric_name, and value. Partitioning the table by metric_name and/or time ranges (e.g., monthly) significantly improves query performance. Indexing timestamp is also crucial. For a larger scale implementation, consider using specialized time-series databases like InfluxDB or TimescaleDB, which offer optimized storage and querying capabilities for time-series data.

An alternative, often more scalable, approach involves a wide-column store, such as Cassandra or HBase. The row key would consist of the metric_name and timestamp, and the column value would hold the actual data. This allows for efficient retrieval of data for a specific metric over a time range. Data aggregation can also be performed using map-reduce jobs or other distributed computing frameworks if needed.

18. Describe different strategies for backing up and restoring a database.

Database backup and restoration are crucial for data protection and disaster recovery. Common backup strategies include: 1. Full backups: Copy the entire database. They are simple but time-consuming. 2. Differential backups: Back up only the changes since the last full backup. Restoration requires the last full and the latest differential. 3. Incremental backups: Back up only the changes since the last backup (full or incremental). Restoration requires the last full and all subsequent incremental backups in order. 4. Transaction log backups: Capture all transaction logs since the last full, differential, or transaction log backup. Used for point-in-time recovery. Restoration often involves restoring the full backup and then replaying the transaction logs. 5. Snapshot backups: Create a point-in-time copy using storage system capabilities; quick but might not be transactionally consistent.

Restoration strategies depend on the backup type and the desired recovery point. Full backups offer the simplest restore, while differential and incremental backups require restoring multiple backup sets. Transaction log backups allow for precise point-in-time recovery. Backup frequency depends on data change rate and acceptable data loss.

19. How do you monitor database performance and identify potential bottlenecks?

Database performance monitoring involves tracking key metrics and identifying deviations from established baselines. I typically use a combination of tools like built-in database monitoring features (e.g., Performance Monitor in SQL Server, Performance Schema in MySQL), third-party monitoring solutions (e.g., Datadog, New Relic), and custom scripts to gather data. Key metrics to monitor include CPU utilization, memory usage, disk I/O, query execution times, lock contention, and buffer cache hit ratios. I analyze slow queries using query analyzers/profilers to identify inefficient code, missing indexes, or poorly designed schemas. I would also check for deadlocks, excessive blocking, and long-running transactions which can point to bottlenecks.

20. Explain how to use stored procedures to encapsulate complex business logic.

Stored procedures are precompiled SQL statements stored within the database. They encapsulate complex business logic by providing a modular and reusable way to perform database operations. Instead of embedding SQL directly in applications, you call a stored procedure, passing parameters as needed. This approach offers several advantages.

Using stored procedures, you can centralize and maintain complex logic in one place. This simplifies application code, reduces redundancy, and improves security by controlling data access. For example, a stored procedure might handle a series of updates across multiple tables when a customer order is processed. You would create the stored procedure, for example in SQL Server:

CREATE PROCEDURE ProcessOrder (@CustomerID INT, @ProductID INT, @Quantity INT)

AS

BEGIN

-- Logic to update inventory, create order record, etc.

END

Then, your application simply calls EXEC ProcessOrder @CustomerID = 123, @ProductID = 456, @Quantity = 1.

21. How would you migrate a database from one platform to another (e.g., MySQL to PostgreSQL)?

Migrating a database involves several steps, from planning to execution and validation. First, assessment is crucial. Analyze the schemas, data types, stored procedures, and application dependencies to understand the scope and potential incompatibilities. Then, schema conversion may be needed. Tools like pgloader (for PostgreSQL) can assist in converting the schema and data, but manual adjustments are often necessary due to differences in data types or SQL syntax.

After the schema is ready, the next step is data migration. This can be achieved through logical replication, dump and restore, or ETL tools. Logical replication provides continuous synchronization, useful for minimal downtime migrations. Dump and restore involves exporting data from the source and importing it into the destination. Finally, thorough testing and validation are essential to ensure data integrity and application functionality in the new environment. Monitor performance closely after the migration and have a rollback plan in place.

22. Describe the concept of sharding and its advantages in a large-scale database environment.

Sharding is a database architecture pattern where a large database is horizontally partitioned into smaller, more manageable databases called shards. Each shard contains a subset of the overall data, and they all reside on separate database servers. This distribution enables parallel processing and reduces the load on any single server.

The advantages of sharding in a large-scale environment include improved performance due to data localization and parallel query execution, increased storage capacity as data is spread across multiple servers, and enhanced availability and fault tolerance because failure of one shard does not necessarily bring down the entire system. It also allows for easier scaling, as you can add more shards to handle increasing data volumes or user traffic.

23. Explain how to use database views to simplify complex queries and improve security.

Database views are virtual tables based on the result-set of a SQL statement. They simplify complex queries by encapsulating them into a single, named object. Instead of writing the same complex query repeatedly, you can query the view as you would a regular table. This promotes code reusability and makes queries easier to understand and maintain.

Views also enhance security. You can grant users access to a view that only shows specific columns or rows from underlying tables, without granting them direct access to the tables themselves. This restricts access to sensitive data. For example, a view might only expose customer names and order dates but not credit card information. You can create views using SQL CREATE VIEW command. For example:

CREATE VIEW customer_orders AS

SELECT customer_name, order_date

FROM customers

JOIN orders ON customers.customer_id = orders.customer_id;

24. How would you implement a data warehousing solution for business intelligence reporting?

A data warehousing solution typically involves these steps: First, identify the key business metrics and data sources. Then, extract, transform, and load (ETL) data from various sources (databases, APIs, flat files) into a staging area. Implement data cleansing and transformation logic, often using tools like Apache Spark or cloud-based ETL services. Finally, load the transformed data into a data warehouse (e.g., Snowflake, Amazon Redshift, Google BigQuery).

For reporting, I'd build a star schema or snowflake schema on top of the data warehouse. Then, use business intelligence tools like Tableau, Power BI, or Looker to create reports and dashboards. Regularly monitor data quality and refresh schedules to ensure accurate and timely insights. Considerations include scalability, security, and cost optimization across all stages.

25. Describe different techniques for data compression in a database.

Data compression in databases reduces storage space and improves I/O performance. Several techniques exist, including:

- Row/Page Level Compression: Compresses entire rows or pages of data using algorithms like Lempel-Ziv (LZ77) or Lempel-Ziv-Welch (LZW). This is often transparent to applications.

- Columnar Compression: Compresses individual columns separately, especially effective for data warehouses where similar data is stored in columns. Techniques include run-length encoding (RLE), dictionary encoding, and bit-vector encoding.

- Data Deduplication: Identifies and eliminates redundant data copies, storing only unique instances. Often used for backups and archival data.

- Dictionary Compression: Builds a dictionary of frequently occurring values and replaces them with shorter codes.

- Delta Compression: Stores only the differences (deltas) between successive versions of data. Useful for time-series data or audit trails.

26. Explain how to use database functions to extend the functionality of SQL.

Database functions extend SQL functionality by allowing you to create custom routines that perform specific tasks within the database. These functions can be written in a variety of languages, depending on the database system (e.g., PL/SQL in Oracle, T-SQL in SQL Server, PL/pgSQL in PostgreSQL). They encapsulate complex logic, calculations, or data manipulations that would be cumbersome or impossible to express directly in SQL.

To use them:

Define the function: You define a function within the database using a

CREATE FUNCTIONstatement, specifying its name, input parameters, return type, and the code to be executed.CREATE FUNCTION calculate_discount (price DECIMAL, discount_percent DECIMAL) RETURNS DECIMAL AS $$ BEGIN RETURN price * (1 - discount_percent/100); END; $$ LANGUAGE plpgsql;Call the function: Once defined, you can call the function directly in your SQL queries, just like built-in SQL functions. For example:

SELECT item_name, calculate_discount(price, 10) AS discounted_price FROM products;

Expert SQL Queries interview questions

1. How do you optimize a query that is running slowly due to a large number of joins?

To optimize a slow query with numerous joins, consider several strategies. First, ensure appropriate indexes exist on the join columns of all tables involved. This dramatically speeds up the join process by allowing the database to quickly locate matching rows. Secondly, review the join order. The order in which tables are joined can significantly impact performance; joining the smallest tables first can reduce the size of intermediate result sets. Sometimes rewriting the query to use subqueries, temporary tables, or common table expressions (CTEs) can help the database optimizer choose a more efficient execution plan. Finally, analyze the query execution plan provided by the database system (EXPLAIN PLAN in many SQL databases) to identify bottlenecks and areas for improvement, such as full table scans or inefficient join algorithms. Regularly updating table statistics also aids the query optimizer in making informed decisions.

Additionally, consider denormalization as a last resort if read performance is paramount and write performance is less critical. Reducing the number of joins by incorporating relevant data into a single table can improve query speed, but it comes with the cost of increased data redundancy and potential update anomalies. Carefully weigh the trade-offs before opting for denormalization. Review if the join types are appropriate, for example using LEFT JOIN when an INNER JOIN may be more appropriate if the joined record is required.

2. Describe a scenario where using a window function would be more efficient than using a subquery. Provide an example.

Window functions often outperform subqueries, especially for ranking and aggregation tasks within partitions of data. A common scenario is calculating a moving average or running total. Imagine you have a table of daily sales data for different stores and need to calculate a 7-day moving average of sales for each store. Using a subquery, you would need to execute a separate subquery for each row to calculate the average, which is computationally expensive. A window function can calculate this in a single pass.

Here's an example using SQL:

SELECT

store_id,

sale_date,

daily_sales,

AVG(daily_sales) OVER (PARTITION BY store_id ORDER BY sale_date ASC ROWS BETWEEN 6 PRECEDING AND CURRENT ROW) AS moving_average_7_day

FROM

sales_table;

In this example, the AVG() OVER() function calculates the average daily sales within a window of the current row and the 6 preceding rows, partitioned by store_id. This approach is typically much more efficient than achieving the same result with correlated subqueries, especially with larger datasets.

3. Explain how you would diagnose and resolve a deadlock situation in a database.

To diagnose a deadlock, I would first identify the processes involved. Most database systems offer tools or views to detect deadlocks, showing which processes are blocked and which resources they are waiting for. For example, in SQL Server, I'd use sp_who2 or query sys.dm_os_waiting_tasks. In PostgreSQL, I'd use pg_locks and pg_stat_activity. The output would reveal the conflicting transactions and the resources (e.g., tables, rows) they're contending for.

To resolve a deadlock, the most common approach is deadlock detection and automatic rollback (victim selection). The database system typically chooses one of the deadlocked transactions as a victim, rolls it back, and releases its resources, allowing the other transaction(s) to proceed. While automatic rollback is the standard solution, preventing deadlocks through careful transaction design is preferable. This involves ordering operations within transactions consistently, using appropriate locking strategies, and keeping transactions short. In applications, implementing retry logic after a rollback exception is crucial.

4. How can you implement row-level security in SQL to restrict access to certain data based on user roles?

Row-level security (RLS) in SQL restricts data access based on user roles. You typically achieve this using security policies or views. A security policy is a predicate function associated with a table that determines which rows a user can access. This predicate often checks user context (e.g., USER_NAME(), application role) against a column in the table or a separate roles table.

For example, in SQL Server, you would use CREATE SECURITY POLICY to define a filter predicate, which is then applied to the table. Alternatively, views can be created that filter data based on user roles; however, security policies are generally preferred for maintainability and performance. Another important feature is to implement data masking techniques to prevent exposing sensitive data.

5. Design a SQL query to identify and remove duplicate records from a table, keeping only the most recent entry.

To identify and remove duplicate records, keeping the most recent, you can use a combination of window functions and a common table expression (CTE). Assuming you have a table named your_table with columns like id, data, and created_at (timestamp), the following SQL query can achieve this:

WITH RankedRows AS (

SELECT

id,

data,

created_at,

ROW_NUMBER() OVER(PARTITION BY data ORDER BY created_at DESC) AS rn

FROM

your_table

)

DELETE FROM your_table

WHERE id IN (SELECT id FROM RankedRows WHERE rn > 1);

This query first assigns a rank to each row within partitions defined by the data column, ordering by created_at in descending order. The ROW_NUMBER() function assigns rank 1 to the most recent entry. Then, it deletes all records from the original table whose id is present in the RankedRows CTE with rank greater than 1 (duplicate entries). Adapt the PARTITION BY clause to match the columns that define a duplicate, and the ORDER BY clause for the timestamp column.

6. Explain the difference between clustered and non-clustered indexes, and when you would use each.

A clustered index determines the physical order of data in a table. There can only be one clustered index per table because data can only be physically sorted in one way. A non-clustered index is like an index in a book; it stores a separate structure containing the indexed columns and pointers back to the data rows in the table. There can be multiple non-clustered indexes per table.

Use a clustered index when you frequently retrieve data in a specific order or need efficient range queries. Good candidates for clustered indexes are columns that are often used in ORDER BY clauses or for retrieving data within a specific date range. Use non-clustered indexes when you need to speed up queries based on specific column values without affecting the physical order of the data, or when you have multiple columns you need to frequently search on but cannot all fit in a clustered index. They're also useful for columns that are not frequently used in range queries.

7. How would you design a database schema to efficiently store and query time-series data?

For efficient time-series data storage and querying, I'd opt for a schema optimized for write-heavy workloads and range-based queries. A common approach involves a table with columns for timestamp, metric name, and value. Indexing the timestamp column is crucial. Furthermore, consider using a columnar database or a time-series database (TSDB) like TimescaleDB or InfluxDB. These are specifically designed to handle time-series data efficiently, offering features such as data compression, retention policies, and optimized query engines. Partitioning the table by time (e.g., daily or weekly) can significantly improve query performance by reducing the amount of data scanned.

An example schema might look like this:

CREATE TABLE metrics (

time TIMESTAMPTZ NOT NULL,

metric_name TEXT NOT NULL,

value DOUBLE PRECISION NOT NULL

);

CREATE INDEX ON metrics (time);

8. Describe how you would use partitioning to improve the performance of a large table. What are the considerations?

Partitioning a large table involves dividing it into smaller, more manageable parts based on a specific key. This improves performance by allowing queries to scan only relevant partitions instead of the entire table. For example, if you have a table of sales data, you could partition it by date (e.g., monthly or yearly). When querying for sales in a specific month, the database only needs to scan that month's partition, drastically reducing I/O and improving query speed.

Considerations include choosing the appropriate partitioning key (a frequently queried column with good cardinality), the number of partitions (too many can lead to overhead, too few negates the benefits), and the type of partitioning (range, list, hash). Also, one must consider maintenance overhead like managing partitions. Data skew can affect performance if some partitions are significantly larger than others. Proper index maintenance on partitioned tables is also crucial.

9. Write a SQL query to calculate a running total or cumulative sum of a column in a table.

To calculate a running total in SQL, you can use window functions. The SUM() OVER() clause is the key. The ORDER BY clause within OVER() specifies the order in which the running total is calculated. If no partitioning is done, running total will be calculated over the whole table.

SELECT

column_to_sum,

SUM(column_to_sum) OVER (ORDER BY order_column)

AS running_total

FROM

your_table;

Replace column_to_sum with the name of the column you want to sum, order_column with the column that defines the order for the running total calculation, and your_table with the actual table name. The PARTITION BY clause can be added in OVER() to calculate running totals for each partition separately.

10. How can you use Common Table Expressions (CTEs) to simplify complex queries? Give an example.

CTEs, or Common Table Expressions, simplify complex queries by breaking them down into smaller, more manageable, named result sets. These named result sets can then be referenced within the main query, improving readability and maintainability. Essentially, a CTE acts like a temporary view that exists only for the duration of a single query.

For example, consider a scenario where you want to find employees who earn more than the average salary of their department. Without CTEs, this query can be quite complex with nested subqueries. With CTEs, you can first define a CTE to calculate the average salary per department and then join it with the employee table to filter employees. Here's an example:

WITH AvgSalaries AS (

SELECT department, AVG(salary) AS avg_salary

FROM employees

GROUP BY department

)

SELECT e.name, e.salary, a.department, a.avg_salary

FROM employees e

JOIN AvgSalaries a ON e.department = a.department

WHERE e.salary > a.avg_salary;