Hiring the right Splunk talent can greatly impact your team's ability to manage and analyze vast amounts of data. Asking the right questions during an interview can make the difference in identifying candidates who have both the technical prowess and critical thinking skills necessary for success.

In this blog post, we present a comprehensive list of Splunk interview questions tailored to different experience levels. These questions range from general inquiries to advanced scenarios, ensuring that you can gauge a candidate's expertise effectively.

Using this list will help you pinpoint the most suitable candidates for your open roles. For a more thorough evaluation, consider enhancing your recruitment process with a Splunk assessment test before the interview stage.

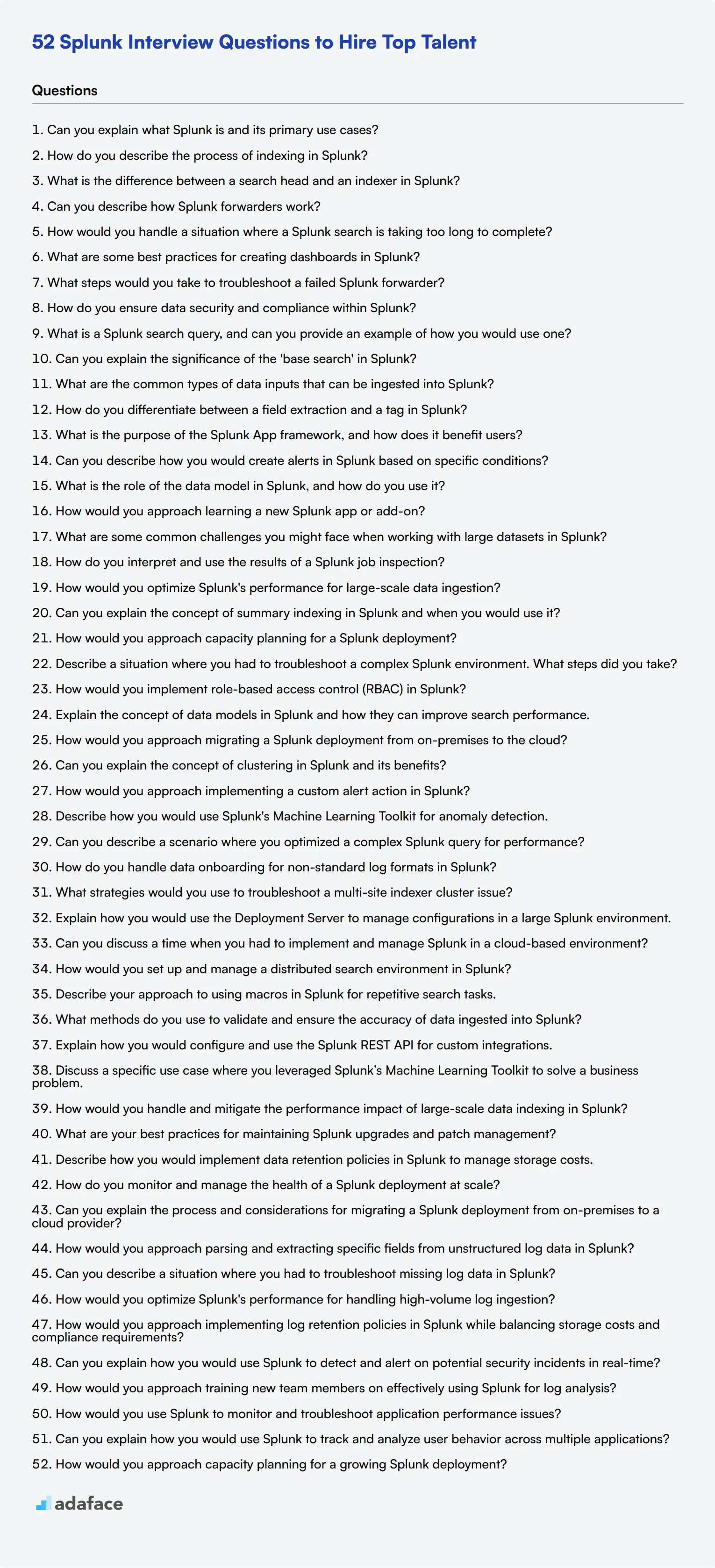

8 general Splunk interview questions and answers

To determine if your candidates possess a solid understanding of Splunk, ask them some of these 8 general Splunk interview questions. These questions are designed to gauge both their basic knowledge and their practical experience with the platform.

1. Can you explain what Splunk is and its primary use cases?

Splunk is a powerful platform for searching, monitoring, and analyzing machine-generated big data via a web-style interface. It collects, indexes, and correlates real-time data in a searchable repository from which it can generate graphs, reports, alerts, dashboards, and visualizations.

The primary use cases for Splunk include IT operations monitoring, security information and event management (SIEM), and business intelligence. It helps organizations in troubleshooting problems, detecting and preventing security threats, and monitoring infrastructure performance.

An ideal response would demonstrate a clear understanding of Splunk’s functionalities and its applications. Look for candidates who can articulate how Splunk fits into broader IT and business operations.

2. How do you describe the process of indexing in Splunk?

Indexing in Splunk refers to the process of storing data in a way that makes it searchable. When data is ingested into Splunk, it undergoes several stages: parsing, indexing, and storing. Parsing breaks down the raw data into individual events, while indexing stores these events in the index, making them available for search.

The indexed data is stored in buckets, which are directories that contain both the raw data and the index files and metadata associated with it. Buckets move through hot, warm, cold, and frozen stages as the data ages; frozen data is deleted by default unless archiving is configured.

Look for candidates who can explain this process in a detailed yet simple manner. A strong answer would reflect their familiarity with the data lifecycle within Splunk.
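To make the parsing and indexing stages concrete, here is a toy sketch in Python. It is not how Splunk stores data on disk; the sample log lines and the helper names (`parse_events`, `build_index`, `search`) are assumptions made for illustration. It only shows the idea that events are broken out of raw text, timestamped, and then indexed by token so a search does not have to scan every event.

```python
import re
from collections import defaultdict
from datetime import datetime

# Hypothetical raw data: one event per line, timestamp first.
RAW = """2024-05-01T10:00:00 host=web01 status=200 GET /index.html
2024-05-01T10:00:05 host=web02 status=500 GET /checkout
2024-05-01T10:00:09 host=web01 status=500 POST /login"""

def parse_events(raw):
    """Parsing stage: break raw text into events and extract a timestamp."""
    events = []
    for line in raw.splitlines():
        ts_text = line.split(" ", 1)[0]
        events.append({"_time": datetime.fromisoformat(ts_text), "_raw": line})
    return events

def build_index(events):
    """Indexing stage: map each lowercase token to the positions of events containing it."""
    index = defaultdict(set)
    for pos, event in enumerate(events):
        for token in re.findall(r"[\w/.=-]+", event["_raw"].lower()):
            index[token].add(pos)
    return index

def search(index, events, *terms):
    """Return events that contain every term, looked up via the token index."""
    if not terms:
        return []
    hits = set.intersection(*(index.get(t.lower(), set()) for t in terms))
    return [events[i] for i in sorted(hits)]

events = parse_events(RAW)
index = build_index(events)
errors_on_web01 = search(index, events, "status=500", "host=web01")
```

A candidate who can describe something like this in their own words, and then explain where real Splunk differs (buckets, compression, index files alongside raw data), likely understands the data lifecycle.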

3. What is the difference between a search head and an indexer in Splunk?

A search head in Splunk is a component that allows users to search, analyze, and visualize data. It distributes search requests to indexers and consolidates the results before presenting them to the user.

An indexer, on the other hand, is responsible for receiving, processing, and storing incoming data in the indexes. It performs the parsing, indexing, and storage of data, making it available for search heads to query.

A good response should highlight the distinct roles of search heads and indexers in the Splunk architecture. Look for candidates who can clearly differentiate between the two and understand their interaction.

4. Can you describe how Splunk forwarders work?

Splunk forwarders are components that collect data from various sources and send it to Splunk indexers. There are two types of forwarders: universal forwarders and heavy forwarders. Universal forwarders are lightweight and typically used to forward raw data with minimal processing. Heavy forwarders are full Splunk instances that can parse, filter, and route data before forwarding it, and can optionally index a copy locally.

Forwarders ensure that data from disparate sources is ingested into Splunk in a reliable and scalable manner. They are essential for distributing data collection workloads and maintaining Splunk’s performance.

Candidates should demonstrate an understanding of the role forwarders play in data collection and processing. Look for explanations that distinguish between universal and heavy forwarders and their respective use cases.

5. How would you handle a situation where a Splunk search is taking too long to complete?

To address a slow Splunk search, start by optimizing the search query. This means restricting the time range, specifying the index and sourcetype, and filtering on fields as early as possible so that less data is scanned. Additionally, summary indexing and accelerated data models can improve search performance by pre-processing and aggregating data.

Another approach is to review the system performance and resource allocation. Ensuring that the indexers and search heads have sufficient resources and are not overloaded can help in speeding up searches. Monitoring the health of the Splunk environment and addressing any bottlenecks is crucial.

A strong candidate response would include both query optimization techniques and infrastructure considerations. Look for a systematic approach to troubleshooting and improving search performance.
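As a rough illustration of the "filter first, transform later" idea, the Python sketch below assembles an SPL string that narrows by index, sourcetype, and time range before any aggregation runs. The helper `build_search` and the example values (`web`, `access_combined`, `-15m`) are assumptions chosen for the example, not part of any Splunk SDK.

```python
def build_search(index, sourcetype, earliest, filters, by_field):
    """Assemble an SPL string that filters events before transforming them."""
    # Cheap, selective terms go first so fewer events reach the pipeline.
    terms = [f"index={index}", f"sourcetype={sourcetype}", f"earliest={earliest}"]
    terms += [f"{field}={value}" for field, value in filters.items()]
    # Keep only the field we need, then aggregate.
    return " ".join(terms) + f" | fields {by_field} | stats count by {by_field}"

spl = build_search("web", "access_combined", "-15m", {"status": 500}, "host")
```

Here `spl` is the single search string a candidate might type into the search bar. The point to listen for is the ordering: restrictive terms up front, transforming commands such as `stats` at the end.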

6. What are some best practices for creating dashboards in Splunk?

Creating effective dashboards in Splunk involves several best practices. First, understand the audience and the key metrics they need. This ensures that the dashboard is relevant and useful. Keep the dashboard simple and focused, avoiding clutter by limiting the number of panels and visualizations.

Using consistent colors, fonts, and layouts enhances readability. It's also important to use appropriate visualization types for the data being presented. For example, use line charts for trends over time and bar charts for comparing categories. Regularly reviewing and updating the dashboards based on user feedback can also improve their effectiveness.

An ideal candidate should emphasize user-centric design and clarity. Look for practical examples of how they have implemented these best practices in their previous roles.

7. What steps would you take to troubleshoot a failed Splunk forwarder?

Troubleshooting a failed Splunk forwarder involves a systematic approach. First, check the forwarder’s log files for any error messages or warnings that can provide clues about the failure. Ensure that the forwarder service is running and that there are no network connectivity issues between the forwarder and the indexer.

Verify the configuration files for any syntax errors or misconfigurations. Confirm that the forwarder has the necessary permissions to read the data sources and send data to the indexer. Restarting the forwarder service after making any changes can help in resolving the issue.

Candidates should demonstrate a methodical troubleshooting approach. Look for detailed steps and an understanding of common issues that can cause forwarder failures.
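One of the first checks, network connectivity between forwarder and indexer, can be sketched in a few lines of Python. This is a minimal example using only the standard library; the host name in the comment is hypothetical, and 9997 is only the port conventionally used for receiving forwarded data, so substitute whatever your indexers actually listen on.

```python
import socket

def can_reach(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS failures.
        return False

# Example call (hypothetical indexer host):
# can_reach("indexer01.example.com", 9997)
```

A successful TCP connection does not prove data is flowing, but a failed one quickly rules the forwarder's own configuration in or out as the cause.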

8. How do you ensure data security and compliance within Splunk?

Ensuring data security and compliance within Splunk involves implementing role-based access control (RBAC) to restrict access to sensitive data. This means defining user roles with specific permissions and assigning them to individuals based on their job responsibilities.

Encrypting data in transit and at rest is also crucial. Splunk supports SSL/TLS for data in transit, for example between forwarders and indexers, while encryption at rest is typically handled at the storage or operating-system layer. Regularly auditing access logs and monitoring for any unauthorized access attempts can help in maintaining compliance.

Candidates should highlight their experience with security best practices and compliance frameworks. Look for a comprehensive approach to data security within the Splunk environment.

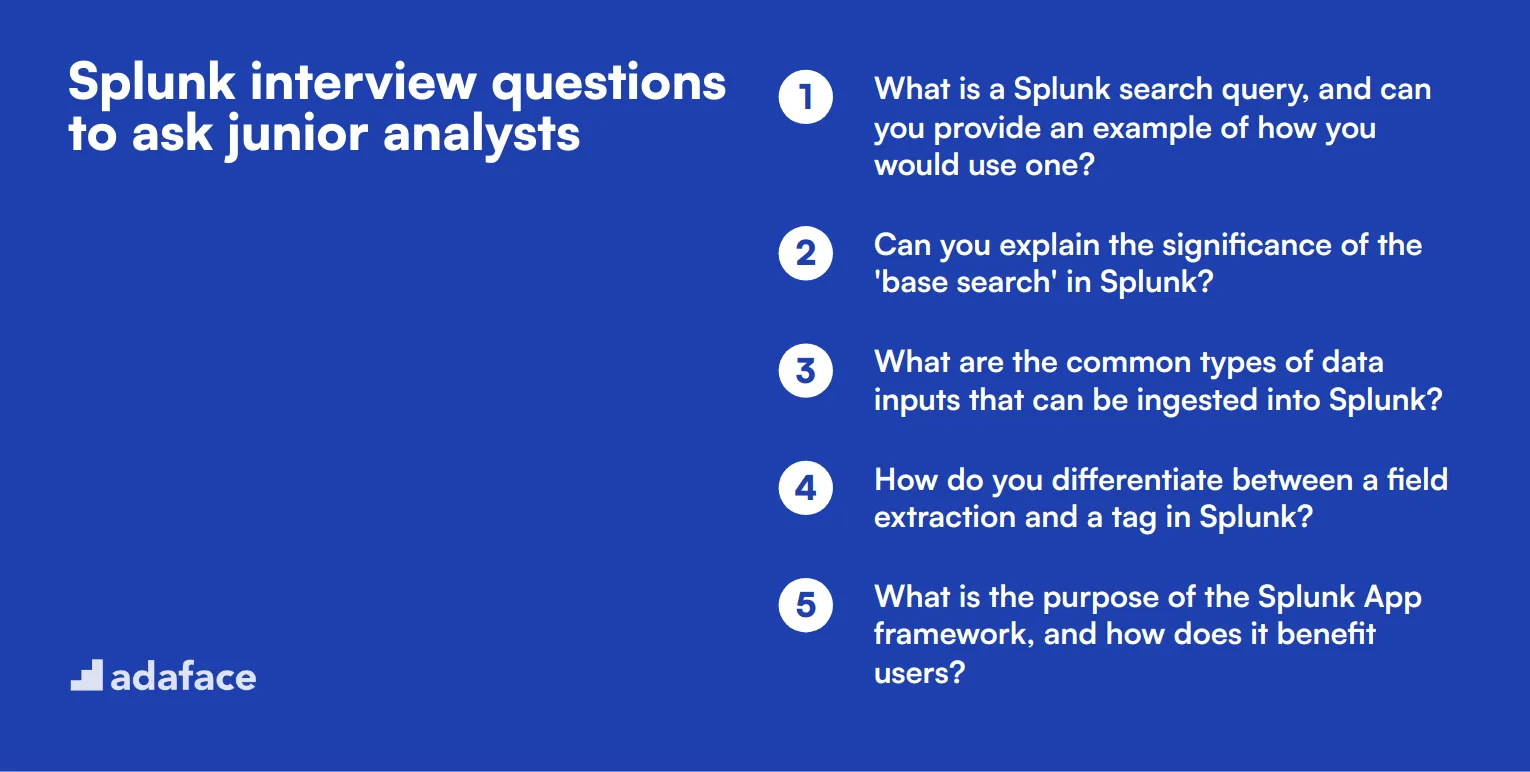

10 Splunk interview questions to ask junior analysts

To assess whether junior analysts have the foundational skills needed for success with Splunk, use this targeted list of interview questions. They will help you gauge both the technical knowledge and practical application of Splunk features. For additional insights into relevant roles, check out our data engineer job description.

- What is a Splunk search query, and can you provide an example of how you would use one?

- Can you explain the significance of the 'base search' in Splunk?

- What are the common types of data inputs that can be ingested into Splunk?

- How do you differentiate between a field extraction and a tag in Splunk?

- What is the purpose of the Splunk App framework, and how does it benefit users?

- Can you describe how you would create alerts in Splunk based on specific conditions?

- What is the role of the data model in Splunk, and how do you use it?

- How would you approach learning a new Splunk app or add-on?

- What are some common challenges you might face when working with large datasets in Splunk?

- How do you interpret and use the results of a Splunk job inspection?

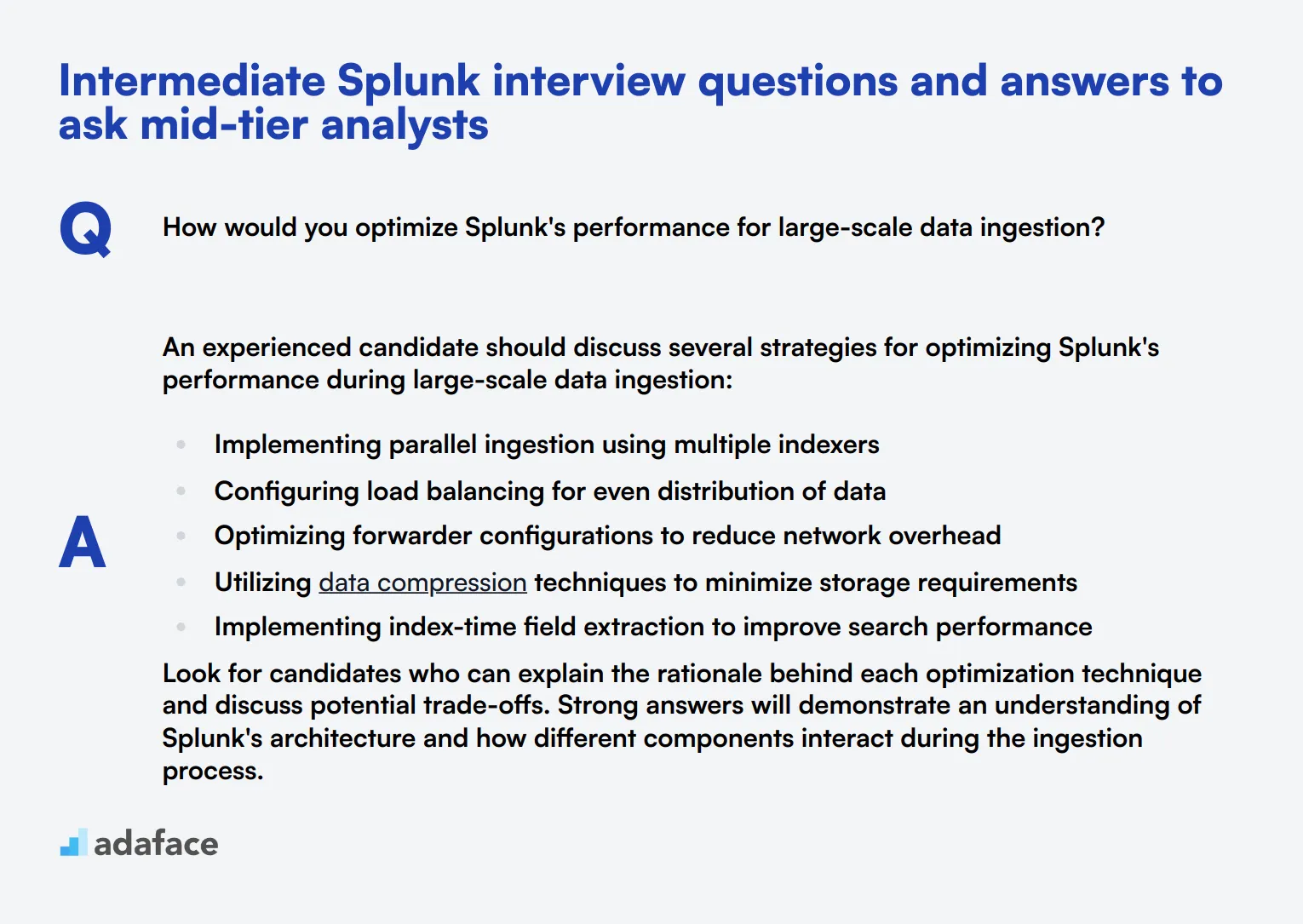

10 intermediate Splunk interview questions and answers to ask mid-tier analysts

Ready to take your Splunk interviews up a notch? These 10 intermediate questions are perfect for assessing mid-tier analysts. They'll help you gauge candidates' deeper understanding of Splunk's capabilities and their ability to apply that knowledge in real-world scenarios. Use these questions to spark insightful discussions and identify top talent.

1. How would you optimize Splunk's performance for large-scale data ingestion?

An experienced candidate should discuss several strategies for optimizing Splunk's performance during large-scale data ingestion:

- Implementing parallel ingestion using multiple indexers

- Configuring load balancing for even distribution of data

- Optimizing forwarder configurations to reduce network overhead

- Utilizing data compression techniques to minimize storage requirements

- Keeping index-time field extraction to a minimum and preferring search-time extraction, since index-time fields add indexing overhead and increase index size

Look for candidates who can explain the rationale behind each optimization technique and discuss potential trade-offs. Strong answers will demonstrate an understanding of Splunk's architecture and how different components interact during the ingestion process.

2. Can you explain the concept of summary indexing in Splunk and when you would use it?

Summary indexing is a technique used to pre-compute and store aggregated search results for faster retrieval. It's particularly useful for frequently run searches over large datasets.

Candidates should mention that summary indexing involves:

- Creating a scheduled search that runs periodically

- Storing the results in a separate summary index

- Using the summary index for subsequent searches, greatly reducing query time

Look for answers that highlight scenarios where summary indexing is beneficial, such as:

- Dashboards that need to load quickly

- Reports that are generated regularly

- Searches that involve complex calculations over large time ranges

A strong candidate will also discuss potential drawbacks, like increased storage requirements and the need to manage summary index updates.
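The mechanics can be shown with a small Python analogy. This is a sketch of the concept only, with made-up events and helper names (`summarize_hour`, `errors_from_summary`); in Splunk the same pattern would be a scheduled search writing into a summary index.

```python
from collections import Counter
from datetime import datetime, timedelta

# Hypothetical raw events; in practice there would be millions of these.
raw_events = [
    {"_time": datetime(2024, 5, 1, 10, 5), "status": 500},
    {"_time": datetime(2024, 5, 1, 10, 40), "status": 200},
    {"_time": datetime(2024, 5, 1, 11, 15), "status": 500},
    {"_time": datetime(2024, 5, 1, 11, 20), "status": 500},
]

def summarize_hour(events, hour_start):
    """Stand-in for the scheduled search: count events per status for one hour."""
    hour_end = hour_start + timedelta(hours=1)
    counts = Counter(e["status"] for e in events if hour_start <= e["_time"] < hour_end)
    return [{"_time": hour_start, "status": s, "count": c} for s, c in sorted(counts.items())]

# Each scheduled run appends a handful of small rows to the summary.
summary_index = []
for hour in (datetime(2024, 5, 1, 10), datetime(2024, 5, 1, 11)):
    summary_index.extend(summarize_hour(raw_events, hour))

def errors_from_summary(summary, status=500):
    """Later reports read the small summary instead of rescanning raw events."""
    return sum(row["count"] for row in summary if row["status"] == status)

total_errors = errors_from_summary(summary_index)
```

The trade-off is visible even at this scale: the summary is extra stored data, and a missed or late scheduled run leaves a gap that has to be backfilled.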

3. How would you approach capacity planning for a Splunk deployment?

Effective capacity planning is crucial for maintaining a high-performing Splunk environment. Candidates should outline a systematic approach that includes:

- Assessing current data ingestion rates and growth projections

- Analyzing search and reporting workloads

- Evaluating hardware resources (CPU, memory, storage, network)

- Considering scalability requirements for future expansion

- Planning for high availability and disaster recovery

Look for answers that demonstrate an understanding of Splunk's architecture and resource requirements. Strong candidates might mention tools like the Splunk Capacity Planning app or discuss methods for monitoring and adjusting the deployment based on changing needs. They should also emphasize the importance of regular review and adjustment of the capacity plan.
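A back-of-the-envelope storage estimate is a useful thing to ask a candidate to work through. The Python sketch below is one way to do the arithmetic; the function names are hypothetical, the 0.5 on-disk ratio is only a commonly quoted rule of thumb that varies widely by data type, and treating replication as a simple multiplier is a simplification.

```python
def projected_daily_ingest_gb(current_gb, monthly_growth, months):
    """Compound the current daily ingest by a monthly growth rate."""
    return current_gb * (1 + monthly_growth) ** months

def estimate_storage_gb(daily_ingest_gb, retention_days, disk_ratio=0.5, copies=1):
    """Rough disk need: ingest x retention x on-disk ratio x number of copies.

    disk_ratio and copies are assumptions; measure real ratios on your own data.
    """
    return daily_ingest_gb * retention_days * disk_ratio * copies

# 100 GB/day, 90 days of retention, two copies of the data
storage_now = estimate_storage_gb(100, 90, disk_ratio=0.5, copies=2)

# Same estimate using the ingest rate expected in 12 months at 5% monthly growth
future_ingest = projected_daily_ingest_gb(100, 0.05, 12)
storage_in_a_year = estimate_storage_gb(future_ingest, 90, disk_ratio=0.5, copies=2)
```

Good candidates will question the inputs as much as the formula, for example by asking how the on-disk ratio was measured and whether retention differs per index.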

4. Describe a situation where you had to troubleshoot a complex Splunk environment. What steps did you take?

This question assesses the candidate's problem-solving skills and practical experience with Splunk. A strong answer should outline a structured approach to troubleshooting, such as:

- Identifying the specific issue and its impact

- Gathering relevant information (error messages, logs, system metrics)

- Analyzing Splunk's internal logs (splunkd.log, metrics.log)

- Isolating the problem to specific components (indexers, search heads, forwarders)

- Formulating and testing hypotheses

- Implementing and verifying the solution

- Documenting the process and lessons learned

Look for candidates who can provide a specific example from their experience, detailing the problem they encountered, the steps they took, and the outcome. Strong answers will demonstrate analytical thinking, attention to detail, and the ability to communicate technical issues clearly.

5. How would you implement role-based access control (RBAC) in Splunk?

Implementing RBAC in Splunk involves several key steps that candidates should be familiar with:

- Defining roles based on job functions or responsibilities

- Assigning roles to users, or mapping groups from an external directory to roles

- Configuring access controls for apps, data models, and knowledge objects

- Setting up index and source-type based restrictions

- Implementing field-level security where necessary

- Using the Splunk Web interface or configuration files to manage permissions

Look for answers that emphasize the principle of least privilege and the importance of regularly auditing and updating access controls. Strong candidates might discuss the use of Splunk's built-in roles (like admin, power, user) as a starting point, and how to customize these for specific organizational needs. They should also mention the importance of integrating RBAC with existing authentication systems like LDAP or Active Directory.
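The way roles inherit from one another and restrict searchable indexes can be modeled in a few lines of Python. This is a conceptual sketch, not Splunk code: the role names and index names are invented, and the `imports` and `indexes` keys loosely mirror the role inheritance and allowed-index settings that Splunk roles provide.

```python
# Hypothetical role definitions: each role may import others and adds its own indexes.
ROLES = {
    "user": {"imports": [], "indexes": {"main"}},
    "soc_analyst": {"imports": ["user"], "indexes": {"security"}},
    "soc_lead": {"imports": ["soc_analyst"], "indexes": {"audit"}},
}

def allowed_indexes(role, roles=ROLES, _seen=None):
    """Collect the indexes a role may search, including those from imported roles."""
    seen = _seen if _seen is not None else set()
    if role in seen:  # guard against circular imports
        return set()
    seen.add(role)
    allowed = set(roles[role]["indexes"])
    for parent in roles[role]["imports"]:
        allowed |= allowed_indexes(parent, roles, seen)
    return allowed

def can_search(role, index):
    """Least privilege: deny unless the index is explicitly allowed."""
    return index in allowed_indexes(role)
```

For example, `can_search("soc_lead", "main")` is true through inheritance, while `can_search("user", "security")` is false. Asking a candidate to explain why inherited access can quietly grow over time is a good follow-up on auditing.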

6. Explain the concept of data models in Splunk and how they can improve search performance.

Data models in Splunk are hierarchical representations of datasets that define relationships between fields. They serve as a semantic layer between the raw data and the users, making it easier to create searches, reports, and visualizations.

Candidates should explain that data models:

- Accelerate searches by pre-computing summaries of data

- Provide a consistent view of data across different sources

- Enable the use of pivot tables for ad-hoc analysis

- Simplify complex searches by abstracting away the underlying SPL

Look for answers that discuss the process of creating and accelerating data models. Strong candidates will mention the trade-offs between acceleration and storage requirements, and discuss scenarios where data models are particularly beneficial, such as in creating dashboards or enabling self-service analytics for non-technical users.

7. How would you approach migrating a Splunk deployment from on-premises to the cloud?

Migrating a Splunk deployment to the cloud requires careful planning and execution. Candidates should outline a structured approach that includes:

- Assessing the current deployment (data volumes, search patterns, custom apps)

- Choosing the appropriate cloud platform (e.g., AWS, Azure, Google Cloud)

- Designing the cloud architecture (considering scalability and high availability)

- Planning for data migration (considering bandwidth and security)

- Updating data inputs and forwarders to point to the new cloud environment

- Testing the migration in a staging environment

- Executing the migration with minimal downtime

- Post-migration validation and performance tuning

Look for answers that demonstrate awareness of cloud-specific considerations, such as cost management, security implications, and the differences between on-premises and cloud infrastructures. Strong candidates might discuss the use of Splunk Cloud or the benefits of containerization for cloud deployments. They should also emphasize the importance of thorough testing and having a rollback plan.

8. Can you explain the concept of clustering in Splunk and its benefits?

Clustering in Splunk refers to configuring multiple instances (indexers or search heads) to work together, providing high availability and improved search performance. Candidates should be able to explain the main components of a Splunk cluster:

- Indexer Cluster: A group of indexers that work together to replicate data

- Search Head Cluster: Multiple search heads that distribute search load and provide failover

- Cluster Master (called the cluster manager in recent Splunk versions): Coordinates activities within the indexer cluster

Look for answers that highlight the benefits of clustering, such as:

- Improved data availability and fault tolerance

- Better search performance through parallel processing

- Simplified management of large Splunk deployments

- Ability to handle larger data volumes

Strong candidates might also discuss considerations for setting up clusters, like network requirements, storage implications, and the importance of proper sizing and configuration.

9. How would you approach implementing a custom alert action in Splunk?

Implementing a custom alert action in Splunk allows for tailored responses to specific events or conditions. Candidates should outline the general process:

- Define the alert action's purpose and functionality

- Create a new app or add to an existing app in Splunk

- Develop the alert action script (typically in Python)

- Create the necessary configuration files (alert_actions.conf, setup.xml)

- Design the user interface for configuring the alert action

- Test the custom alert action thoroughly

- Package and deploy the app containing the custom alert action

Look for answers that demonstrate familiarity with Splunk's app structure and the Alert Actions Framework. Strong candidates might discuss best practices like error handling, logging, and making the alert action configurable. They should also mention the importance of considering performance implications, especially for alerts that might trigger frequently.
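The script step might look roughly like the Python sketch below. It assumes the action is configured to receive a JSON payload on standard input and to be invoked with an `--execute` argument; the payload keys used here (`configuration`, `result`, `search_name`) and the `prefix` parameter are assumptions for illustration, so check them against the payload your Splunk version actually sends.

```python
import json
import sys

def build_message(payload):
    """Turn the alert payload into a one-line notification message."""
    config = payload.get("configuration", {})   # user-supplied action settings
    result = payload.get("result", {})          # first result row of the search
    prefix = config.get("prefix", "ALERT")      # hypothetical parameter
    search_name = payload.get("search_name", "unknown search")
    return f"{prefix}: {search_name} fired for host={result.get('host', 'n/a')}"

def main(argv, stdin):
    """Entry point: validate the execution mode, read the payload, emit the message."""
    if len(argv) < 2 or argv[1] != "--execute":
        print("FATAL unsupported execution mode (expected --execute)", file=sys.stderr)
        return 1
    payload = json.load(stdin)
    # A real action would call a ticketing or chat API here; this sketch only logs.
    print(build_message(payload), file=sys.stderr)
    return 0

# In a deployed script, finish with: sys.exit(main(sys.argv, sys.stdin))
```

Keeping the message-building logic in its own function makes it easy to unit test without a running Splunk instance, which is the kind of practice a strong candidate tends to mention unprompted.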

10. Describe how you would use Splunk's Machine Learning Toolkit for anomaly detection.

Using Splunk's Machine Learning Toolkit (MLTK) for anomaly detection involves leveraging built-in algorithms to identify unusual patterns in data. Candidates should outline a general approach:

- Prepare the data by selecting relevant fields and time range

- Choose an appropriate algorithm (e.g., DensityFunction for a single numeric field, or LocalOutlierFactor or OneClassSVM for multivariate data)

- Train the model using historical data

- Apply the model to new data to detect anomalies

- Tune the model parameters to optimize performance

- Set up alerts or visualizations to highlight detected anomalies

Look for answers that demonstrate understanding of different types of anomalies (point, contextual, collective) and the strengths of various algorithms. Strong candidates might discuss the importance of feature selection, handling of seasonality, and the need for ongoing model evaluation and retraining. They should also mention potential use cases, such as detecting security threats, identifying system failures, or spotting unusual business transactions.
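The train, apply, and tune steps can be illustrated outside Splunk with a deliberately simple stand-in. The Python sketch below uses a z-score threshold rather than any MLTK algorithm; the sample values, function names, and the threshold of 3 standard deviations are all assumptions for the example.

```python
from statistics import mean, stdev

def fit(values):
    """'Train' on historical data by summarizing its distribution."""
    return {"mean": mean(values), "std": stdev(values)}

def is_anomaly(model, value, threshold=3.0):
    """Flag a value that lies more than `threshold` standard deviations from the mean."""
    if model["std"] == 0:
        return value != model["mean"]
    return abs(value - model["mean"]) / model["std"] > threshold

# Hypothetical history: response times in milliseconds
history = [120, 118, 125, 122, 119, 121, 123, 120]
model = fit(history)

normal_point = is_anomaly(model, 124)
spike = is_anomaly(model, 200)
```

Lowering `threshold` catches more anomalies at the cost of more false positives, which is the same tuning conversation a candidate should be able to have about any real detection model.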

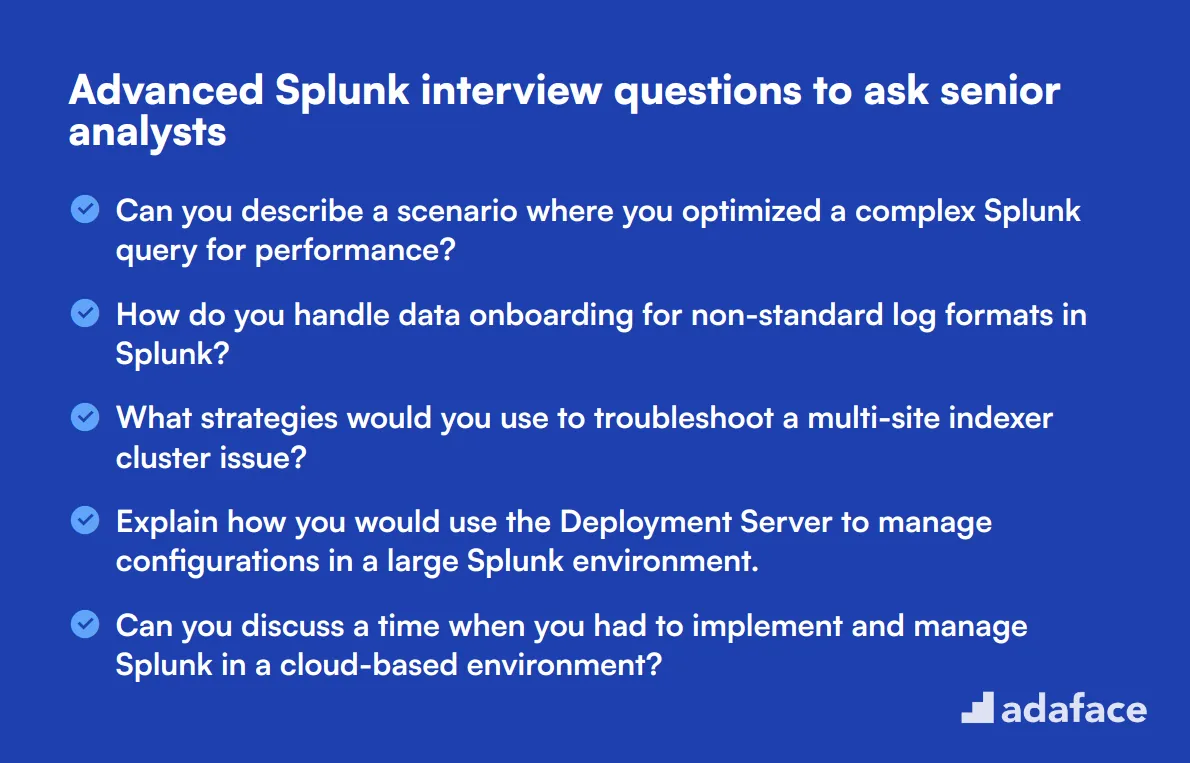

15 advanced Splunk interview questions to ask senior analysts

To ensure your candidates possess advanced Splunk skills, use these expert-level interview questions tailored for senior analysts. These questions will help you assess their deep technical understanding and problem-solving abilities, ensuring you select the best fit for your team. Consider referencing our data scientist job description for further insights on technical roles.

- Can you describe a scenario where you optimized a complex Splunk query for performance?

- How do you handle data onboarding for non-standard log formats in Splunk?

- What strategies would you use to troubleshoot a multi-site indexer cluster issue?

- Explain how you would use the Deployment Server to manage configurations in a large Splunk environment.

- Can you discuss a time when you had to implement and manage Splunk in a cloud-based environment?

- How would you set up and manage a distributed search environment in Splunk?

- Describe your approach to using macros in Splunk for repetitive search tasks.

- What methods do you use to validate and ensure the accuracy of data ingested into Splunk?

- Explain how you would configure and use the Splunk REST API for custom integrations.

- Discuss a specific use case where you leveraged Splunk’s Machine Learning Toolkit to solve a business problem.

- How would you handle and mitigate the performance impact of large-scale data indexing in Splunk?

- What are your best practices for maintaining Splunk upgrades and patch management?

- Describe how you would implement data retention policies in Splunk to manage storage costs.

- How do you monitor and manage the health of a Splunk deployment at scale?

- Can you explain the process and considerations for migrating a Splunk deployment from on-premises to a cloud provider?

9 Splunk interview questions and answers related to log management

When it comes to log management in Splunk, asking the right questions can help you identify candidates who truly understand the nuances of handling and analyzing log data. These questions will help you gauge a candidate's ability to troubleshoot, optimize, and extract valuable insights from logs. Use this list to uncover the log wizards among your applicants!

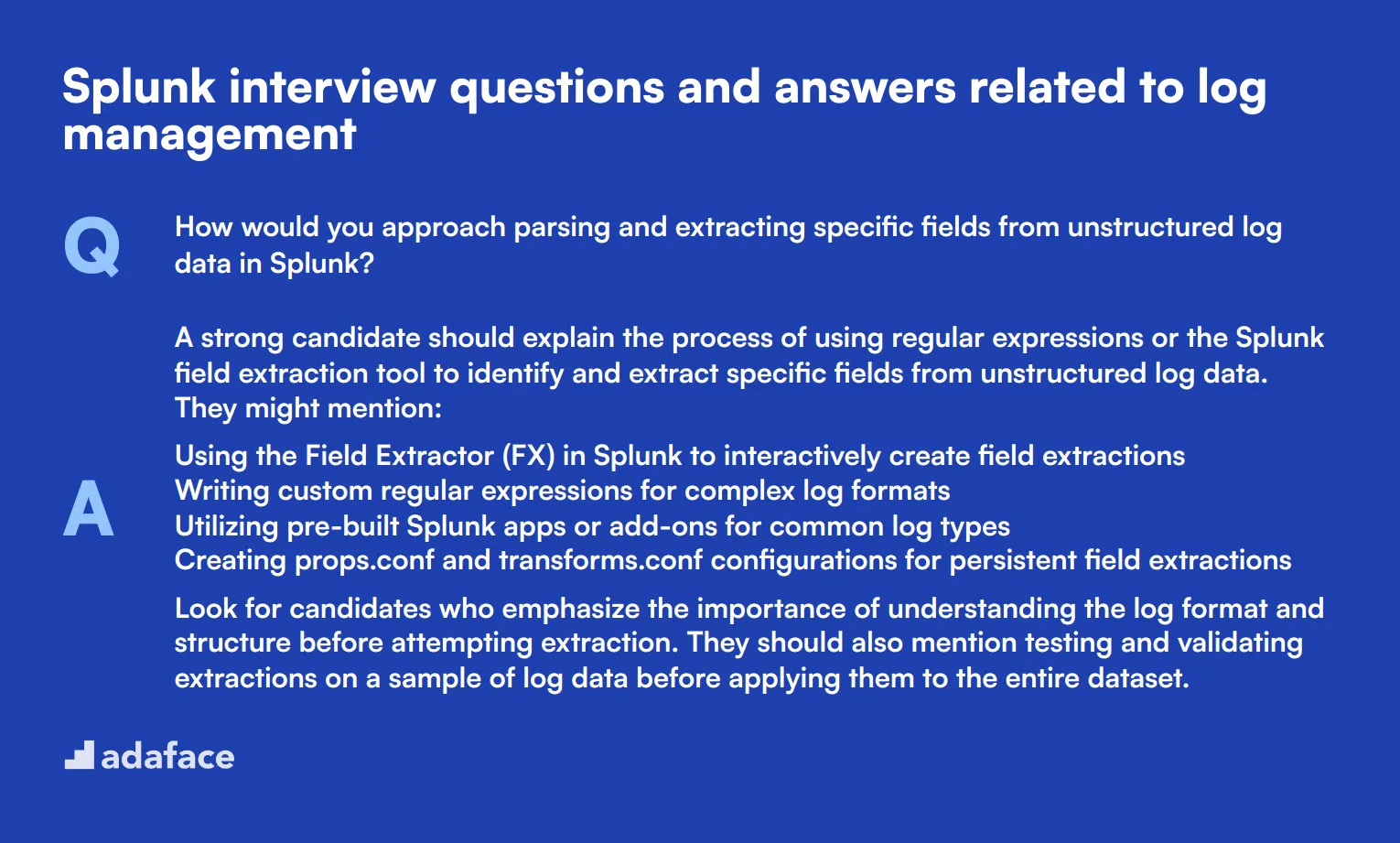

1. How would you approach parsing and extracting specific fields from unstructured log data in Splunk?

A strong candidate should explain the process of using regular expressions or the Splunk field extraction tool to identify and extract specific fields from unstructured log data. They might mention:

- Using the Field Extractor (FX) in Splunk to interactively create field extractions

- Writing custom regular expressions for complex log formats

- Utilizing pre-built Splunk apps or add-ons for common log types

- Creating props.conf and transforms.conf configurations for persistent field extractions

Look for candidates who emphasize the importance of understanding the log format and structure before attempting extraction. They should also mention testing and validating extractions on a sample of log data before applying them to the entire dataset.
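A regular expression with named capture groups is the core of most custom extractions. The Python sketch below shows the idea on a made-up access-log line; the field names and the pattern are assumptions for this example, and a production extraction would live in Splunk configuration rather than in a script.

```python
import re

# Hypothetical web access log line
LOG_LINE = '203.0.113.7 - alice [01/May/2024:10:00:05 +0000] "GET /checkout HTTP/1.1" 500 1532'

# Each named group becomes a field, much like a named capture in a Splunk extraction.
PATTERN = re.compile(
    r'(?P<clientip>\S+) \S+ (?P<user>\S+) \[(?P<timestamp>[^\]]+)\] '
    r'"(?P<method>[A-Z]+) (?P<uri>\S+) [^"]*" (?P<status>\d{3}) (?P<bytes>\d+|-)'
)

def extract_fields(line):
    """Return a dict of extracted fields, or None if the line does not match."""
    match = PATTERN.match(line)
    return match.groupdict() if match else None

fields = extract_fields(LOG_LINE)
```

Testing the pattern against lines that should not match is as important as testing the ones that should, since a pattern that silently fails leaves events without fields.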

2. Can you describe a situation where you had to troubleshoot missing log data in Splunk?

An experienced candidate should outline a systematic approach to troubleshooting missing log data, such as:

- Verifying the data source is still generating logs

- Checking Splunk forwarder configurations and connectivity

- Examining indexer logs for any ingestion errors

- Reviewing search head configurations and time ranges

- Investigating any recent changes to the Splunk environment or data sources

Look for candidates who demonstrate a methodical problem-solving approach and mention the importance of communication with both the data source owners and Splunk administrators during the troubleshooting process. They should also emphasize the need to document findings and implement preventive measures for future occurrences.

3. How would you optimize Splunk's performance for handling high-volume log ingestion?

A knowledgeable candidate should discuss various strategies for optimizing Splunk's performance during high-volume log ingestion, including:

- Implementing parallel parsing to distribute the workload across multiple cores

- Utilizing heavy forwarders for pre-processing and filtering of logs before indexing

- Limiting index-time field extractions to the few fields that genuinely need them, since they add indexing overhead

- Implementing data model acceleration for frequently used searches

- Tuning indexer cluster configurations for optimal performance

Pay attention to candidates who mention the importance of monitoring system resources and Splunk metrics to identify bottlenecks. They should also discuss the need for capacity planning and potentially scaling the Splunk infrastructure to handle increased log volumes.

4. How would you approach implementing log retention policies in Splunk while balancing storage costs and compliance requirements?

A thoughtful candidate should discuss a multi-faceted approach to implementing log retention policies:

- Analyzing compliance requirements and business needs to determine retention periods

- Utilizing Splunk's built-in data retention features, such as cold-to-frozen archiving

- Implementing summary indexing for long-term storage of aggregated data

- Using data roll-up techniques to reduce granularity of older data

- Exploring cloud storage options for cost-effective long-term archiving

Look for candidates who emphasize the importance of regular policy reviews and the need to balance compliance requirements with storage costs. They should also mention the importance of documenting the retention policies and ensuring all stakeholders are aware of the implemented strategies.
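Retention periods usually end up expressed in seconds in index configuration (the `frozenTimePeriodInSecs` setting), so it helps if a candidate can do the conversion and reason about when data becomes eligible to freeze. The Python sketch below is a simplified model: it assumes a bucket freezes once its newest event is older than the retention period, and it ignores size-based limits, which can also trigger freezing.

```python
from datetime import datetime, timedelta, timezone

SECONDS_PER_DAY = 86400

def retention_seconds(days):
    """Convert a retention period in days to the seconds value used in configuration."""
    return days * SECONDS_PER_DAY

def eligible_to_freeze(newest_event_time, now, frozen_time_period_secs):
    """A bucket qualifies once even its newest event is older than the retention period."""
    return (now - newest_event_time).total_seconds() > frozen_time_period_secs

now = datetime(2024, 6, 1, tzinfo=timezone.utc)
ninety_days = retention_seconds(90)
old_bucket = eligible_to_freeze(now - timedelta(days=91), now, ninety_days)
recent_bucket = eligible_to_freeze(now - timedelta(days=30), now, ninety_days)
```

Because eligibility depends on the newest event in a bucket, a bucket spanning a long time range can keep old events well past the nominal retention period, a subtlety worth probing in compliance discussions.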

5. Can you explain how you would use Splunk to detect and alert on potential security incidents in real-time?

A security-minded candidate should outline a comprehensive approach to using Splunk for real-time security incident detection:

- Creating correlation searches to identify patterns indicative of security threats

- Utilizing Splunk's Machine Learning Toolkit for anomaly detection

- Implementing real-time alerting based on predefined thresholds and conditions

- Integrating threat intelligence feeds to enhance detection capabilities

- Developing custom dashboards for security operations center (SOC) monitoring

Evaluate candidates based on their understanding of both Splunk's capabilities and common security threats. Look for those who mention the importance of continuously tuning and updating detection rules to adapt to evolving threats and reducing false positives.
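The logic behind a typical threshold-based detection, such as repeated failed logins from one source, can be sketched in Python. The event tuples, the threshold of 5 failures, and the 60-second window are assumptions for the example; in Splunk this would be expressed as a scheduled or real-time search.

```python
from collections import defaultdict, deque

def detect_bruteforce(events, threshold=5, window_secs=60):
    """Return (timestamp, source) pairs where failures within the window reach the threshold.

    events: iterable of (epoch_seconds, source_ip, action) tuples.
    """
    recent = defaultdict(deque)  # per-source timestamps of recent failures
    alerts = []
    for ts, src, action in sorted(events):
        if action != "failure":
            continue
        window = recent[src]
        window.append(ts)
        # Drop failures that have fallen out of the sliding window.
        while window and ts - window[0] > window_secs:
            window.popleft()
        if len(window) >= threshold:
            alerts.append((ts, src))
    return alerts

# Hypothetical authentication events
events = [
    (0, "10.0.0.5", "failure"), (10, "10.0.0.5", "failure"),
    (20, "10.0.0.5", "failure"), (30, "10.0.0.5", "failure"),
    (40, "10.0.0.5", "failure"),
    (15, "10.0.0.9", "failure"), (35, "10.0.0.9", "success"),
    (45, "10.0.0.9", "failure"),
]
alerts = detect_bruteforce(events)
```

Adjusting `threshold` and `window_secs` is exactly the tuning exercise described above: too strict and the SOC drowns in false positives, too loose and slow attacks slip through.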

6. How would you approach training new team members on effectively using Splunk for log analysis?

A candidate with training experience might suggest a structured approach such as:

- Starting with Splunk fundamentals and basic search syntax

- Providing hands-on exercises with real-world log data

- Teaching advanced search techniques and SPL (Search Processing Language)

- Introducing dashboard and alert creation

- Covering best practices for performance optimization and data management

Look for candidates who emphasize the importance of tailoring the training to the team's specific use cases and skill levels. They should also mention the value of ongoing learning resources, such as Splunk's documentation, community forums, and official certification programs.

7. How would you use Splunk to monitor and troubleshoot application performance issues?

An experienced candidate should describe a comprehensive approach to using Splunk for application performance monitoring:

- Ingesting application logs, metrics, and transaction data into Splunk

- Creating dashboards to visualize key performance indicators (KPIs)

- Setting up alerts for performance thresholds and anomalies

- Utilizing Splunk's Machine Learning Toolkit for predictive analytics

- Correlating application performance data with infrastructure metrics

Look for candidates who emphasize the importance of establishing performance baselines and trending over time. They should also mention the value of integrating Splunk with APM (Application Performance Management) tools for a more comprehensive view of application health.

8. Can you explain how you would use Splunk to track and analyze user behavior across multiple applications?

A candidate with experience in user behavior analysis might describe the following approach:

- Implementing consistent user identification across all applications

- Creating a data model to normalize user activity data from different sources

- Utilizing Splunk's transaction command to stitch together user sessions

- Developing custom searches and reports to identify patterns and trends

- Creating user journey maps and funnel analysis visualizations

Evaluate candidates based on their understanding of data correlation techniques and their ability to extract meaningful insights from complex datasets. Look for those who mention the importance of privacy considerations and data anonymization when dealing with user behavior data.
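Session stitching is the least obvious step, so here is a small Python sketch of the idea. It groups events per user and starts a new session whenever the gap between consecutive events exceeds a maximum pause, similar in spirit to the `maxpause` option of the transaction command. The event data and the 30-minute default are assumptions for the example.

```python
def stitch_sessions(events, max_pause=1800):
    """Group (epoch_seconds, user, action) events into per-user sessions.

    A new session starts when a user's next event arrives more than
    max_pause seconds after their previous one.
    """
    sessions = []
    open_session = {}  # user -> that user's most recent session
    for ts, user, action in sorted(events):
        current = open_session.get(user)
        if current is None or ts - current["end"] > max_pause:
            current = {"user": user, "start": ts, "end": ts, "actions": []}
            open_session[user] = current
            sessions.append(current)
        current["end"] = ts
        current["actions"].append(action)
    return sessions

# Hypothetical activity from two applications
events = [
    (0, "alice", "login"), (100, "alice", "view_cart"),
    (5000, "alice", "login"), (50, "bob", "login"),
]
sessions = stitch_sessions(events)
```

This only works if the `user` value is consistent across applications, which is why consistent user identification comes first in the list above.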

9. How would you approach capacity planning for a growing Splunk deployment?

A candidate with experience in Splunk administration should outline a systematic approach to capacity planning:

- Analyzing current usage patterns and growth trends

- Forecasting future data ingestion rates and storage requirements

- Assessing current hardware utilization and performance metrics

- Considering factors like retention policies and compliance requirements

- Planning for scaling options (vertical vs. horizontal) based on the deployment architecture

Look for candidates who emphasize the importance of regular capacity reviews and the need to align with business growth projections. They should also mention the value of Splunk's built-in monitoring tools and third-party capacity planning resources available in the Splunk community.

Which Splunk skills should you evaluate during the interview phase?

In the recruitment process, it's important to remember that a single interview cannot provide a complete picture of a candidate's capabilities. However, certain skills are particularly important for working with Splunk, and these should be a priority during the assessment phase. Evaluating these core skills will help you identify candidates who can effectively utilize Splunk's powerful features.

Data Analysis

To assess data analysis skills, consider using a relevant assessment test that includes multiple-choice questions focused on data interpretation and analysis techniques. You can find a suitable assessment for this skill in our library: Splunk Test.

In addition to tests, targeted interview questions can help gauge a candidate's data analysis expertise. One effective question to ask is:

Can you explain a time when you used Splunk to analyze data and how your findings impacted decision-making?

When asking this question, look for candidates who can articulate a clear process, including the data they analyzed, the tools they used within Splunk, and the insights they gained from the analysis. Their ability to connect their analysis to business outcomes will demonstrate their practical understanding of Splunk.

Log Management

To further assess log management skills, an assessment test with relevant MCQs can provide valuable insights. Consider utilizing our Splunk Test for this purpose.

You can also ask targeted questions to evaluate a candidate's grasp of log management. For example:

How do you ensure the integrity and security of log data in Splunk?

When posed with this question, listen for answers that demonstrate an understanding of best practices for log retention, security measures, and compliance standards. Candidates should show awareness of the importance of maintaining log data integrity.

Search Processing Language (SPL)

To evaluate a candidate's SPL knowledge, consider incorporating an assessment test that focuses on its syntax and usage. Our Splunk Test contains relevant questions that can help filter candidates effectively.

In addition to assessments, it's valuable to ask specific SPL-related questions during the interview. One effective question is:

Can you describe a complex search query you created using SPL and the insights it generated?

When candidates respond, look for clarity in their explanation of the SPL query structure, the logic behind their choice of commands, and how the results contributed to their analysis. Their ability to explain the query's purpose and outcome will showcase their expertise.

3 Effective Tips for Utilizing Splunk Interview Questions

Before you start implementing what you've learned, here are some tips to enhance your interview process.

1. Incorporate Skills Tests Prior to Interviews

Using skills tests before interviews helps gauge a candidate's technical abilities and ensures they meet the role's requirements. For Splunk roles, consider tests like the Splunk online assessment to evaluate candidates’ proficiency in data analysis and log management.

These tests can provide you with insights into a candidate's practical skills, helping you focus on those who genuinely fit the role. By filtering candidates based on test results, you can invite only the most qualified individuals to the interview stage, making your process more streamlined.

Once you've identified strong candidates through skills assessments, the next step is to prepare for the interviews with focused and relevant questions.

2. Compile Relevant Interview Questions

Time is limited during interviews, so it's important to select a balanced number of targeted questions. By doing this, you maximize your chances of evaluating candidates effectively across essential aspects of their skills and experiences.

Make sure to include questions that address their technical capabilities, as well as soft skills such as communication. Additionally, consider asking questions related to other areas that are relevant for the role, such as data analysis or cloud computing.

This strategic approach will foster meaningful conversations, allowing you to assess candidates more thoroughly and potentially identify strong fits for your team.

3. Ask Thoughtful Follow-Up Questions

Simply relying on prepared questions may not provide the depth needed to understand a candidate's true capabilities. Follow-up questions are essential for clarifying responses and probing deeper into a candidate's thought process.

For example, if a candidate states they have experience with Splunk queries, a good follow-up could be, 'Can you explain a complex query you've created and what it achieved?' This helps reveal the depth of their experience and can highlight their problem-solving skills.

Leverage Splunk Skills Tests to Identify Top Talent

When hiring for roles requiring Splunk expertise, confirming candidates' proficiency is key. A reliable way to assess these skills is through targeted skills tests. Consider utilizing our Splunk Online Test to accurately gauge the abilities of potential hires.

After assessing candidates with the skills test, you can shortlist the top performers for interviews. For the next steps in your hiring process, sign up on our online assessment platform for a streamlined recruitment experience.

Splunk Interview Questions FAQs

Ask a mix of general, junior, intermediate, and advanced questions based on the candidate's experience level and the role requirements.

Tailor questions to the role, listen for detailed explanations, and use follow-up questions to gauge depth of knowledge.

Yes, combining Splunk skills tests with interviews provides a more thorough assessment of a candidate's abilities.

The number varies based on interview length, but aim for 10-15 questions covering key areas relevant to the position.
