In the world of big data, Apache Spark stands out as a powerful engine for data processing and analytics, so recruiters need a reliable way to find people who actually know Spark. As companies race to harness data for insights, identifying top Spark talent becomes increasingly important.

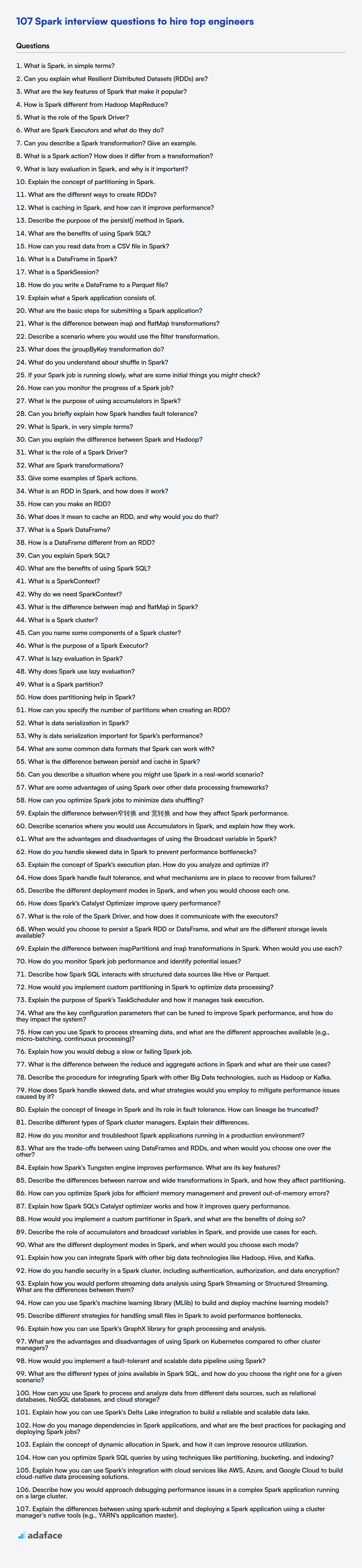

This post brings you a curated list of Spark interview questions, suitable for candidates with varying levels of expertise from freshers to experienced professionals, as well as a selection of multiple-choice questions (MCQs). Expect questions on Spark architecture, data transformations, performance tuning, and real-world applications.

By using these questions, you can better evaluate candidates and ensure they possess the skills to drive your data initiatives. Before investing in interviews, consider using Adaface's online Spark test to quickly screen candidates.

Spark interview questions for freshers

1. What is Spark, in simple terms?

Spark is a fast and general-purpose distributed processing engine for large datasets. Think of it as a super-fast, in-memory engine that can process massive amounts of data much faster than traditional disk-based systems like Hadoop MapReduce.

It provides high-level APIs in languages like Java, Scala, Python, and R, making it easier for developers to write parallel computations. Spark also includes libraries for SQL, machine learning (MLlib), graph processing (GraphX), and stream processing (Structured Streaming), enabling a wide range of data analytics tasks.

2. Can you explain what Resilient Distributed Datasets (RDDs) are?

Resilient Distributed Datasets (RDDs) are the fundamental data structure of Apache Spark. They are an immutable, distributed collection of data that is partitioned across a cluster of machines, enabling parallel processing. RDDs are resilient because if a partition of data is lost, it can be recomputed from the lineage graph (the sequence of transformations that created the RDD).

Key characteristics of RDDs include:

- Immutability: Once created, RDDs cannot be changed. New RDDs are created through transformations.

- Distributed: Data is spread across multiple nodes in a cluster.

- Resilient: Fault-tolerant; data loss is handled automatically.

- Support for various data types, and operations like `map`, `filter`, and `reduce` for data processing.

3. What are the key features of Spark that make it popular?

Spark's popularity stems from several key features. Primarily, its speed is a major draw, achieved through in-memory computation and optimized execution plans. It's significantly faster than traditional MapReduce for many workloads.

Furthermore, Spark offers ease of use, with APIs available in Scala, Java, Python, and R. Its unified engine supports various data processing tasks, including batch processing, stream processing, machine learning (MLlib), and graph processing (GraphX). Spark also boasts fault tolerance, automatically recovering from failures. Finally, it integrates well with various data sources and formats (HDFS, AWS S3, Cassandra, etc.).

4. How is Spark different from Hadoop MapReduce?

Spark and Hadoop MapReduce are both distributed processing frameworks, but they differ significantly in how they handle data processing. MapReduce is disk-based; it reads data from disk, processes it, and writes the output back to disk at each stage of a job. This makes it fault-tolerant but slower.

Spark, on the other hand, is an in-memory processing engine. It tries to keep data in memory as much as possible, reducing the number of disk I/O operations. This makes Spark much faster than MapReduce for iterative algorithms and data mining tasks. Spark also offers richer APIs for data manipulation (e.g., DataFrames, Datasets) and supports real-time processing, which MapReduce lacks natively. Here are key differences:

- Speed: Spark is generally faster due to in-memory processing.

- Data Storage: MapReduce relies heavily on disk I/O; Spark minimizes disk I/O.

- APIs: Spark provides richer and more user-friendly APIs (Scala, Python, Java, R).

- Real-time Processing: Spark supports real-time processing; MapReduce is primarily for batch processing.

5. What is the role of the Spark Driver?

The Spark Driver is the central coordinator of a Spark application. Its primary roles include:

- Maintaining Application State: The driver process keeps track of the state of the Spark application throughout its lifecycle.

- Creating SparkContext: It creates the `SparkContext`, which represents the connection to the Spark cluster.

- Resource Negotiation: The driver negotiates with the cluster manager (e.g., YARN, Mesos, Standalone) to allocate resources (executors) for the application.

- Task Scheduling: The driver divides the application into tasks and schedules them to run on the executors. It analyzes the DAG (Directed Acyclic Graph) of operations and optimizes the execution plan.

- Task Monitoring: It monitors the execution of tasks on the executors and handles any failures or retries.

- Executor Communication: The driver communicates with the executors to send them tasks and receive the results.

- User Code Execution: Ultimately the driver process executes the user's application code that defines the Spark jobs.

6. What are Spark Executors and what do they do?

Spark Executors are processes launched on the worker nodes of a Spark cluster that execute tasks assigned to them by the Spark Driver. Each executor runs in its own Java Virtual Machine (JVM). Executors are responsible for:

- Executing tasks: They run the actual code for a Spark job. These tasks are the smallest unit of work in Spark.

- Caching data: Executors can cache data in memory or on disk, which speeds up subsequent operations.

- Reporting status: Executors report the status of tasks back to the Spark Driver.

- Data Storage: Executors may also store data when instructed to do so through `persist()` or `cache()` operations.

7. Can you describe a Spark transformation? Give an example.

A Spark transformation is a function that creates a new RDD from an existing RDD. Transformations are lazy, meaning they are not executed immediately. Instead, Spark keeps track of the transformations applied to an RDD and executes them only when an action is called. This allows Spark to optimize the execution plan and avoid unnecessary computations.

For example, map() is a transformation. It applies a function to each element of an RDD and returns a new RDD containing the results. Here's a simple example:

```python
# sc is an existing SparkContext
rdd = sc.parallelize([1, 2, 3, 4])
squared_rdd = rdd.map(lambda x: x * x)

# Nothing has been computed yet. The transformation is applied only when an action
# such as squared_rdd.collect() is called, which returns [1, 4, 9, 16].
```

8. What is a Spark action? How does it differ from a transformation?

A Spark action triggers computation on a Spark RDD (Resilient Distributed Dataset). It forces the execution of the previously defined transformations. Actions return a value to the driver program or write data to external storage.

Actions differ from transformations in that transformations are lazy operations that create a new RDD from an existing one but don't execute immediately. They only define a plan of what needs to be done. Actions, on the other hand, are the catalyst that initiates this plan. Common examples of actions include collect(), count(), first(), take(), reduce(), and saveAsTextFile().

9. What is lazy evaluation in Spark, and why is it important?

Lazy evaluation in Spark means that Spark delays the execution of transformations until an action is called. Instead of executing transformations immediately, Spark builds a Directed Acyclic Graph (DAG) of operations. This DAG represents the entire workflow. The transformations are only triggered when an action (like collect(), count(), saveAsTextFile()) requires Spark to compute a result.

This is important because it allows Spark to optimize the entire execution plan. Spark can reorder transformations, combine them, or even skip unnecessary steps to improve efficiency. It also allows Spark to avoid processing data until it's absolutely needed, saving computation resources. For example, consider two transformations: map() followed by filter(). With lazy evaluation, Spark might be able to combine these into a single step during actual execution, rather than performing them separately.
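To make the idea concrete, here is a minimal plain-Python sketch of lazy evaluation. It does not use Spark: Python's built-in lazy `map` and `filter` stand in for transformations, `list()` stands in for an action, and the `calls` log is a hypothetical helper added only to show when work actually happens.

```python
# Plain-Python analogy for lazy evaluation (not Spark's implementation).
calls = []  # records every time a function actually runs


def square(x):
    calls.append(("square", x))
    return x * x


def is_even(x):
    calls.append(("is_even", x))
    return x % 2 == 0


data = [1, 2, 3, 4]

# "Transformations": building the pipeline runs nothing yet.
squared = map(square, data)
evens = filter(is_even, squared)
calls_before_action = len(calls)  # still 0

# "Action": consuming the pipeline triggers the work. Each element flows through
# square and is_even in turn, so the two steps run as one pass over the data.
result = list(evens)

print(calls_before_action)  # 0
print(result)               # [4, 16]
print(calls[:2])            # [('square', 1), ('is_even', 1)]
```

The interleaved call log is the point: the two steps are fused into a single pass, which is roughly what Spark does when it pipelines narrow transformations within a stage.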

10. Explain the concept of partitioning in Spark.

Partitioning in Spark is the logical division of the data in an RDD (Resilient Distributed Dataset) into smaller chunks. Each partition resides on a node in the cluster, enabling parallel processing. Spark operations are executed on each partition concurrently, enhancing performance and scalability.

Key aspects of partitioning:

- Data Locality: Spark strives to place partitions close to the data source to minimize data transfer.

- Parallelism: Enables computations on different partitions simultaneously.

- Performance Tuning: Proper partitioning is crucial for optimizing Spark application performance. `repartition()` performs a full shuffle and can increase or decrease the number of partitions, while `coalesce()` reduces the number of partitions and avoids a full shuffle where possible.
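As a rough illustration of how keyed records can be split into partitions and then processed independently, the sketch below hash-partitions a small list in plain Python. It is not Spark code: the function names are hypothetical, and `zlib.crc32` is an assumed stand-in for whatever hash function a real partitioner uses.

```python
import zlib


def partition_for(key, num_partitions):
    """Pick a partition from a stable hash of the key (crc32 is an illustrative choice)."""
    return zlib.crc32(str(key).encode("utf-8")) % num_partitions


def hash_partition(records, num_partitions):
    """Distribute (key, value) records so that equal keys share a partition."""
    partitions = [[] for _ in range(num_partitions)]
    for key, value in records:
        partitions[partition_for(key, num_partitions)].append((key, value))
    return partitions


records = [("a", 1), ("b", 2), ("a", 3), ("c", 4), ("b", 5)]
parts = hash_partition(records, 3)

# Each partition can be processed on its own, for example summed separately,
# and the partial results combined afterwards.
partial_sums = [sum(value for _, value in part) for part in parts]
total = sum(partial_sums)
print(total)  # 15
```

Because every record with the same key lands in the same partition, per-key work can proceed without looking at other partitions, which is the property key-based operations rely on.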

11. What are the different ways to create RDDs?

RDDs (Resilient Distributed Datasets) can be created in Spark using several methods:

- From an existing collection: You can parallelize an existing collection in your driver program using `sparkContext.parallelize(collection)`. This is useful for testing or creating small RDDs.

- From external datasets: You can create RDDs from data stored in external storage systems like HDFS, S3, databases or other file formats. `sparkContext.textFile(path)` is commonly used to read text files. There are other specific methods for other file types.

- Transforming existing RDDs: Using transformations like `map`, `filter`, `reduceByKey`, etc., you can create new RDDs from existing ones. This is the most common way to build data processing pipelines. For example: `existing_rdd.map(lambda x: x * 2)`

12. What is caching in Spark, and how can it improve performance?

Caching in Spark is a mechanism to store intermediate data (RDDs, DataFrames, or Datasets) in memory or on disk across operations. This avoids recomputing the same data multiple times, which can be a significant bottleneck in iterative algorithms or when data is reused in multiple stages of a Spark application.

Caching improves performance by:

- Reducing computation time: Data is retrieved from the cache instead of being recomputed.

- Reducing disk I/O: Data can be kept in memory, avoiding costly disk reads.

- Enabling faster iterative algorithms: Algorithms like machine learning models that iteratively refine their parameters benefit greatly from caching the training data.

Data can be cached by using functions such as `.cache()` or `.persist()`. For example: `val cachedData = someRDD.cache()`
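The effect of caching on recomputation can be sketched with a toy class in plain Python. `LazyDataset` and its methods are hypothetical names invented for this illustration, not part of Spark; the class only mimics the behavior of recomputing on every action unless the data has been marked for caching.

```python
class LazyDataset:
    """Toy stand-in for an RDD: recomputes from its source unless cached."""

    def __init__(self, source, func):
        self.source = source
        self.func = func
        self.compute_count = 0  # how many times the full computation ran
        self._use_cache = False
        self._cached = None

    def cache(self):
        # Only marks the dataset; data is stored when the first action runs.
        self._use_cache = True
        return self

    def _materialize(self):
        if self._use_cache and self._cached is not None:
            return self._cached
        self.compute_count += 1
        result = [self.func(x) for x in self.source]
        if self._use_cache:
            self._cached = result
        return result

    # Two "actions" that both need the computed data.
    def count(self):
        return len(self._materialize())

    def total(self):
        return sum(self._materialize())


uncached = LazyDataset(range(5), lambda x: x * x)
uncached_results = (uncached.count(), uncached.total())

cached = LazyDataset(range(5), lambda x: x * x).cache()
cached_results = (cached.count(), cached.total())

print(uncached.compute_count)  # 2: each action recomputed the data
print(cached.compute_count)    # 1: the second action reused the cached data
```

Both versions return the same results; only the amount of repeated work differs, which is why caching pays off mainly when a dataset is reused.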

13. Describe the purpose of the `persist()` method in Spark.

The persist() method in Spark is used for caching RDDs (Resilient Distributed Datasets) or DataFrames in memory or on disk. By default, Spark computes RDDs/DataFrames each time an action is called on them. This can be inefficient if the same RDD/DataFrame is used multiple times. persist() avoids recomputation by storing the RDD/DataFrame after its first computation.

Calling `persist()` marks the RDD/DataFrame for reuse; the data is actually stored the first time an action computes it, and later operations read it from the cache. You can specify a storage level using the StorageLevel class (e.g., MEMORY_ONLY, DISK_ONLY, MEMORY_AND_DISK). If there's not enough memory, the behavior depends on the storage level chosen: with MEMORY_AND_DISK, partitions that don't fit are spilled to disk, while with MEMORY_ONLY they are not cached and are recomputed when needed. `unpersist()` can be used to manually remove the RDD/DataFrame from cache.

14. What are the benefits of using Spark SQL?

Spark SQL offers several benefits, including:

- Unified Data Access: Provides a single interface to query various data sources like Hive, Parquet, JSON, and JDBC databases. This simplifies data integration and analysis.

- SQL Compatibility: Allows users familiar with SQL to easily interact with Spark data using standard SQL syntax or DataFrame API. This reduces the learning curve.

- Performance Optimization: Leverages Spark's distributed processing capabilities and the Catalyst optimizer to plan and execute queries efficiently. It includes features like cost-based optimization and code generation.

- Integration with Spark Ecosystem: Seamlessly integrates with other Spark components like Spark Streaming and MLlib, enabling building comprehensive data pipelines.

- Scalability: Scales easily to handle large datasets and complex queries across a cluster of machines.

- DataFrame API: Offers a programmatic way to interact with data and perform complex data transformations. Compile-time type safety is available through the typed Dataset API in Scala and Java.

- User Defined Functions (UDFs): Supports creating custom functions that can be used in SQL queries, extending the functionality of Spark SQL.

15. How can you read data from a CSV file in Spark?

In Spark, you can read data from a CSV file using the spark.read.csv() method. This method returns a DataFrame, which is a distributed collection of data organized into named columns.

To read a CSV file, you can use the following code:

```python
from pyspark.sql import SparkSession

# Create a SparkSession
spark = SparkSession.builder.appName("ReadCSV").getOrCreate()

# Read the CSV file into a DataFrame
df = spark.read.csv("path/to/your/file.csv", header=True, inferSchema=True)

# Show the DataFrame
df.show()
```

- `path/to/your/file.csv`: Replace this with the actual path to your CSV file. It can be a local path or a path on a distributed file system like HDFS.

- `header=True`: Specifies that the first row of the CSV file contains the column names.

- `inferSchema=True`: Enables Spark to automatically infer the data types of the columns based on the data in the CSV file.

While convenient, for production workloads, it's better to define the schema explicitly for performance and consistency. You can define a schema like this:

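```python
from pyspark.sql.types import StructType, StructField, StringType, IntegerType

# Column names and types; the third argument marks each field as nullable
schema = StructType([
    StructField("name", StringType(), True),
    StructField("age", IntegerType(), True)
])

# header=True is kept so the header row is skipped rather than parsed as data
df = spark.read.csv("path/to/your/file.csv", header=True, schema=schema)
```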

16. What is a DataFrame in Spark?

In Spark, a DataFrame is a distributed collection of data organized into named columns. It's conceptually similar to a table in a relational database or a DataFrame in Pandas (Python) or R. DataFrames allow you to structure your data, enabling operations like filtering, grouping, and joining using Spark's SQL engine.

Key features of Spark DataFrames include: schema inference, optimization through Catalyst, and support for various data sources like JSON, CSV, Parquet, and more. DataFrames provide a high-level API that simplifies data manipulation and analysis in a distributed environment, supporting languages like Scala, Java, Python, and R.

17. What is a SparkSession?

A SparkSession is the entry point to Spark functionality. It's the unified interface for interacting with Spark's various components. It provides a way to create DataFrames, register DataFrames as tables, execute SQL queries, access SparkContext, and interact with various data sources.

Think of it as the master object you need to begin any Spark application. It essentially combines SparkContext, SQLContext, and HiveContext (where available) into a single object. To create a SparkSession, you typically use the SparkSession.builder API.

18. How do you write a DataFrame to a Parquet file?

To write a DataFrame to a Parquet file in Spark, use the DataFrameWriter returned by the DataFrame's `write` attribute. Here's a general example:

```python
dataframe.write.parquet("path/to/parquet/file")
```

This command saves the DataFrame to the specified path in Parquet format. You can also specify options like compression codecs. For example, dataframe.write.option("compression", "snappy").parquet("path/to/parquet/file") will use Snappy compression.

19. Explain what a Spark application consists of.

A Spark application consists of a Driver program and a set of Executor processes. The Driver program maintains the SparkContext, which coordinates the application's execution. It defines the transformations and actions on data. The Executor processes run on worker nodes in the cluster and are responsible for executing tasks assigned by the Driver.

Specifically, it involves these components:

- SparkContext: The entry point to Spark functionality. It represents the connection to a Spark cluster.

- RDDs (Resilient Distributed Datasets): Fundamental data structure of Spark. Immutable, distributed collection of data.

- Transformations: Operations that create new RDDs from existing ones (e.g., `map`, `filter`). They are lazy.

- Actions: Operations that trigger computation and return a value to the Driver program (e.g., `count`, `collect`).

- Executors: Distributed agents that run tasks. Each executor runs in its own JVM.

20. What are the basic steps for submitting a Spark application?

Submitting a Spark application generally involves these steps:

- Package your application: Bundle your code and dependencies into a JAR file (for Java/Scala) or a `.py` file/zip (for Python).

- Prepare the Spark environment: Ensure that Spark is installed and configured correctly on the cluster or local machine where you intend to run the application.

- Use `spark-submit`: This is the primary tool for launching Spark applications. You'll specify various parameters like the application JAR/Python file, master URL, deployment mode (cluster or client), executor memory, number of cores, and any application-specific arguments.

- Monitor the application: After submission, Spark provides web UIs to monitor the progress and resource usage of your application. Check the logs for any errors or performance bottlenecks.

21. What is the difference between `map` and `flatMap` transformations?

Both map and flatMap are transformations used to apply a function to each element of a collection. The key difference lies in how they handle the results of the function application.

map applies a function to each element and returns a new collection containing the results. If the function returns a collection itself, map will result in a collection of collections. In contrast, flatMap flattens the resulting collection of collections into a single collection. In essence, flatMap applies a function that returns a collection and then concatenates all the resulting collections into one. flatMap is commonly used to avoid nested collections when working with transformations that produce multiple values for each input value.
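The difference is easy to see in a small plain-Python sketch that mirrors the semantics without needing a cluster. The `split_words` helper and the sample lines are made up for illustration; in PySpark the corresponding calls would be `rdd.map(split_words)` and `rdd.flatMap(split_words)`.

```python
from itertools import chain

lines = ["hello world", "apache spark"]


def split_words(line):
    """Return the words of one line as a list."""
    return line.split(" ")


# map semantics: one output element per input element, so the result is nested.
mapped = [split_words(line) for line in lines]

# flatMap semantics: apply the same function, then flatten one level.
flat_mapped = list(chain.from_iterable(split_words(line) for line in lines))

print(mapped)       # [['hello', 'world'], ['apache', 'spark']]
print(flat_mapped)  # ['hello', 'world', 'apache', 'spark']
```

A word count is the typical case for `flatMap`: each line yields several words, and the later steps want a flat collection of words rather than a list per line.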

22. Describe a scenario where you would use the `filter` transformation.

I would use the filter transformation when I need to selectively process elements from a collection based on a specific condition. For example, imagine I have a list of customer objects and I want to create a new list containing only customers who have made a purchase in the last month.

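```python
import datetime

customers = [{'name': 'Alice', 'last_purchase': '2024-01-15'},
             {'name': 'Bob', 'last_purchase': '2024-03-20'},
             {'name': 'Charlie', 'last_purchase': '2024-03-25'}]

# Fixed reference date keeps the result reproducible; use datetime.date.today() in practice.
reference_date = datetime.date(2024, 4, 1)
cutoff_date = reference_date - datetime.timedelta(days=30)  # 2024-03-02

# Plain-Python filter; with a Spark RDD the same predicate would be passed to rdd.filter(...).
recent_customers = filter(lambda c: datetime.datetime.strptime(c['last_purchase'], '%Y-%m-%d').date() >= cutoff_date, customers)
recent_customers_list = list(recent_customers)

print(recent_customers_list)
# Output: [{'name': 'Bob', 'last_purchase': '2024-03-20'}, {'name': 'Charlie', 'last_purchase': '2024-03-25'}]
```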

In this scenario, filter allows me to efficiently create a subset of the original customer list that meets my criteria, without modifying the original list.

23. What does the `groupByKey` transformation do?

The groupByKey transformation in Spark groups the elements of an RDD based on the key. It takes an RDD of key-value pairs (K, V) and returns a new RDD of (K, Iterable<V>) pairs, where each key is associated with all of its values.

Important considerations:

- `groupByKey` can be expensive due to shuffling all data across the network.

- It can lead to out-of-memory errors if a key has a very large number of values.

- Alternatives like `reduceByKey` or `aggregateByKey` are often preferred for better performance, as they perform some aggregation on the mapper side before shuffling.
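The considerations above can be made concrete with a plain-Python simulation that counts how many records would cross the network in each approach. This is a simplified model rather than Spark's shuffle: the two input partitions and the function names are hypothetical, and "shuffled" is just a list standing in for data sent between executors.

```python
from collections import defaultdict

# Two hypothetical input partitions of (word, 1) pairs.
partitions = [
    [("a", 1), ("b", 1), ("a", 1), ("a", 1)],
    [("b", 1), ("a", 1), ("b", 1)],
]


def group_by_key(parts):
    """Shuffle every record, then collect the values for each key."""
    shuffled = [pair for part in parts for pair in part]
    grouped = defaultdict(list)
    for key, value in shuffled:
        grouped[key].append(value)
    return dict(grouped), len(shuffled)


def reduce_by_key(parts, func):
    """Combine within each partition first, then shuffle only the partial results."""
    shuffled = []
    for part in parts:
        local = {}
        for key, value in part:
            local[key] = func(local[key], value) if key in local else value
        shuffled.extend(local.items())
    merged = {}
    for key, value in shuffled:
        merged[key] = func(merged[key], value) if key in merged else value
    return merged, len(shuffled)


grouped, grouped_shuffled = group_by_key(partitions)
counts, reduced_shuffled = reduce_by_key(partitions, lambda x, y: x + y)

print(grouped_shuffled)  # 7 records shuffled
print(reduced_shuffled)  # 4 records shuffled
print(counts)            # {'a': 4, 'b': 3}
```

The gap widens as keys repeat more often within a partition, which is why pre-aggregating alternatives tend to be the safer default for sums and counts.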

24. What do you understand about shuffle in Spark?

Shuffle in Spark is a process of redistributing data across partitions in a distributed manner. This occurs when data from different input partitions needs to be combined or aggregated together, such as in operations like groupByKey, reduceByKey, join, and repartition. The shuffle operation involves transferring data across the network between executor nodes, making it one of the most expensive operations in Spark in terms of performance.

During a shuffle, data is first written to disk on the mapper side (the task that produces the data). Then, executors on the reducer side (the task that consumes the data) fetch the necessary data from the mappers. This process involves serialization, deserialization, and network I/O. Because of its overhead, it's crucial to optimize shuffle operations by minimizing the amount of data shuffled. Techniques like using mapPartitions and broadcast variables can help to reduce or avoid shuffles where possible.

25. If your Spark job is running slowly, what are some initial things you might check?

If my Spark job is running slowly, some initial checks would include:

- Data Skew: Uneven data distribution across partitions can lead to some tasks taking significantly longer than others. Check for skew by examining task durations in the Spark UI. Repartitioning, salting, or using techniques like `reduceByKey` with custom partitioners can help mitigate this.

- Insufficient Resources: Make sure the Spark cluster has enough CPU and memory resources allocated for the job. Monitor resource utilization in the Spark UI or cluster management tools (e.g., YARN, Kubernetes) and adjust the number of executors, cores per executor, and executor memory as needed.

- Inefficient Code: Examine the Spark application code for potential performance bottlenecks. Look for unnecessary shuffles, large aggregations, or operations that could be optimized. Use `explain()` to analyze the query execution plan. Caching frequently accessed DataFrames/Datasets using `.cache()` or `.persist()` can also improve performance.

- Serialization: Check the serialization method being used. Kryo serialization is typically faster and more compact than Java serialization. Configure Spark to use Kryo serialization using `spark.serializer=org.apache.spark.serializer.KryoSerializer`.

- Too Many Small Files: If the input data consists of a large number of small files, it can lead to excessive overhead. Consolidate small files into larger ones before processing.

- Spark Configuration: Review other Spark configuration parameters like `spark.default.parallelism` to make sure they are appropriately set for the cluster size and workload.

26. How can you monitor the progress of a Spark job?

You can monitor the progress of a Spark job using several methods:

- Spark UI: This web interface (typically accessible on port 4040 of the driver node) provides detailed information about the job's execution, including stages, tasks, executors, storage, and environment. It is the most common and comprehensive way to monitor Spark jobs.

- Spark History Server: For completed jobs, the Spark History Server provides a persistent view of the Spark UI. This is useful for analyzing past job performance.

- Metrics System: Spark exposes metrics that can be collected and monitored using external monitoring systems like Prometheus, Graphite, or Ganglia. This allows for real-time monitoring and alerting.

- Logging: Spark logs detailed information about the job's execution, which can be useful for debugging and troubleshooting. You can configure the logging level and output destination using `log4j.properties` (`log4j2.properties` in Spark 3.3 and later).

- Programmatic Monitoring: You can use the Spark API to programmatically monitor the job's progress. For instance, you can listen for events like `SparkListenerJobStart`, `SparkListenerJobEnd`, `SparkListenerStageCompleted`, etc., and then display progress information.

27. What is the purpose of using accumulators in Spark?

Accumulators in Spark are shared variables that tasks can only add to, which lets them be updated efficiently in parallel across worker nodes. They are specifically designed for accumulating values, such as counters or sums, during the execution of Spark jobs. This allows for tracking global statistics or aggregating information that would be difficult or inefficient to gather otherwise. Tasks running on executors cannot read an accumulator; only the driver program can access its value.

Essentially, accumulators help in debugging and understanding the behavior of a distributed computation by providing insight into how data is being processed across the cluster. They are particularly useful for implementing counters (similar to MapReduce counters) or for accumulating sums of values distributed across the executors in the Spark cluster. Example use case: val myCounter = spark.sparkContext.longAccumulator("My Counter").

28. Can you briefly explain how Spark handles fault tolerance?

Spark achieves fault tolerance primarily through Resilient Distributed Datasets (RDDs) and their lineage. RDDs track the sequence of transformations applied to create them. If a partition of an RDD is lost (due to node failure), Spark can reconstruct it by replaying the transformations in the lineage graph, starting from the original data. This process is known as data recomputation. Checkpointing can also be used to truncate the lineage graph by saving intermediate RDDs to stable storage, reducing recomputation time.

In addition, Spark can replicate persisted data: storage levels such as MEMORY_ONLY_2 keep copies of each RDD partition on two nodes, so a lost partition does not always have to be recomputed. Depending on the cluster manager and deploy mode, the driver can also be restarted after a failure (for example, with the `--supervise` flag in standalone cluster mode), further improving the resilience of Spark applications. During job execution, the DAGScheduler resubmits stages whose output was lost, and failed tasks are retried.
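The lineage-based recovery described above can be sketched in plain Python. `LineageDataset` is a hypothetical toy class, not Spark's RDD implementation: each dataset remembers its parent and the function that derives it, so a missing partition can be rebuilt on demand.

```python
class LineageDataset:
    """Toy dataset that remembers how it was derived (its lineage)."""

    def __init__(self, parent=None, func=None, source=None):
        self.parent = parent
        self.func = func
        self.source = source   # list of partitions, for a root dataset only
        self.partitions = {}   # partition index -> computed data

    def map(self, func):
        return LineageDataset(parent=self, func=func)

    def compute(self, index):
        """Return partition `index`, rebuilding it from the lineage if it is missing."""
        if index not in self.partitions:
            if self.parent is None:
                data = list(self.source[index])
            else:
                data = [self.func(x) for x in self.parent.compute(index)]
            self.partitions[index] = data
        return self.partitions[index]


root = LineageDataset(source=[[1, 2], [3, 4]])
doubled = root.map(lambda x: x * 2)
plus_one = doubled.map(lambda x: x + 1)

before = [plus_one.compute(i) for i in range(2)]

# Simulate a node failure that loses partition 1 of both derived datasets.
del plus_one.partitions[1]
del doubled.partitions[1]

# Only the lost partition is rebuilt, by replaying the recorded transformations.
recovered = plus_one.compute(1)

print(before)     # [[3, 5], [7, 9]]
print(recovered)  # [7, 9]
```

Partition 0 is never touched during recovery, which mirrors why lineage-based recomputation is usually cheaper than replicating every intermediate result.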

Spark interview questions for juniors

1. What is Spark, in very simple terms?

Spark is a fast, general-purpose distributed processing engine for large datasets. Think of it as a supercharged version of MapReduce. It lets you process huge amounts of data much faster than traditional methods.

Instead of writing data to disk after each step (like MapReduce), Spark keeps data in memory, which makes it much quicker for iterative algorithms and complex data transformations. It offers APIs in languages like Python, Scala, Java, and R, making it accessible to a wide range of developers. Spark is commonly used for big data processing, machine learning, and real-time data analytics.

2. Can you explain the difference between Spark and Hadoop?

Spark and Hadoop are both big data processing frameworks, but they differ in their approach. Hadoop primarily uses MapReduce, which involves writing data to disk after each stage of processing, making it suitable for large datasets and batch processing. Spark, on the other hand, performs in-memory data processing, leading to significantly faster execution, especially for iterative algorithms and real-time analytics. However, Spark's in-memory processing can be limited by available RAM.

Essentially, Hadoop is a distributed storage (HDFS) and processing (MapReduce) system. Spark can run on top of Hadoop (using YARN for resource management and HDFS for storage) or independently. Spark offers a richer set of tools including Spark SQL, Spark Streaming, MLlib (machine learning), and GraphX (graph processing), whereas Hadoop's core is MapReduce. Spark is often preferred for speed and ease of use, while Hadoop is better for very large datasets where cost-effectiveness and fault tolerance are critical.

3. What is the role of a Spark Driver?

The Spark Driver is the main process in a Spark application. Its primary roles include:

- Coordinating and managing the execution of Spark jobs: It's responsible for translating user code into Spark tasks and distributing these tasks to the Spark executors. It communicates with the cluster manager (e.g., YARN, Mesos, or Spark's standalone cluster manager) to allocate resources for the executors.

- Maintaining application state: The Driver keeps track of the state of the Spark application, including the DAG (Directed Acyclic Graph) of operations, the location of data partitions, and the status of executors.

- Providing the SparkContext: The `SparkContext` is created in the Driver and provides the entry point to all Spark functionality. It allows you to create RDDs (Resilient Distributed Datasets), which are the fundamental data abstraction in Spark.

4. What are Spark transformations?

Spark transformations are operations on RDDs (Resilient Distributed Datasets) that create new RDDs. Transformations are lazy, meaning they are not executed immediately. Instead, Spark remembers the sequence of transformations applied to an RDD, and they are only executed when an action is called.

Some common Spark transformations include:

- `map()`: Applies a function to each element of the RDD.

- `filter()`: Returns a new RDD containing only the elements that satisfy a given condition.

- `flatMap()`: Similar to `map()`, but each input item can be mapped to zero or more output items (by returning a sequence).

- `groupByKey()`: Groups the values for each key in the RDD into a single sequence.

- `reduceByKey()`: Merges the values for each key using a specified reduce function.

- `union()`: Returns a new RDD containing all elements from both RDDs.

- `join()`: Performs an inner join between two RDDs.

For example:

```python
rdd = sc.parallelize([1, 2, 3, 4, 5])
squared_rdd = rdd.map(lambda x: x * x)  # transformation
result = squared_rdd.collect()  # action
print(result)
```

In this example, map is a transformation. The collect function is an action that triggers the execution of the map transformation.

5. Give some examples of Spark actions.

Spark actions trigger the execution of a Spark RDD lineage graph (DAG) to return a value to the driver program or write data to external storage. Some common examples include:

- `collect()`: Returns all elements of the RDD as an array to the driver program. Use with caution on large datasets, as it can cause memory issues on the driver.

- `count()`: Returns the number of elements in the RDD.

- `first()`: Returns the first element of the RDD.

- `take(n)`: Returns the first n elements of the RDD.

- `reduce(func)`: Aggregates the elements of the RDD using a function func (which takes two arguments and returns one).

- `saveAsTextFile(path)`: Saves the RDD to a text file in a given directory.

6. What is an RDD in Spark, and how does it work?

An RDD (Resilient Distributed Dataset) is the fundamental data structure of Spark. It is an immutable, distributed collection of data that is partitioned across multiple nodes in a cluster, allowing for parallel processing. RDDs support two types of operations:

- Transformations: These operations create new RDDs from existing ones (e.g., `map`, `filter`, `reduceByKey`). Transformations are lazy, meaning they are not executed immediately. Instead, Spark builds a lineage graph of transformations.

- Actions: These operations trigger the computation of the RDD and return a value to the driver program (e.g., `count`, `collect`, `saveAsTextFile`). When an action is called, Spark traverses the lineage graph, optimizes the execution plan, and executes the necessary transformations in parallel across the cluster.

7. How can you make an RDD?

RDDs (Resilient Distributed Datasets) can be created in several ways in Spark. The two most common methods are:

- From an existing collection: You can create an RDD from a collection (like a list) already present in your driver program using `sparkContext.parallelize(collection)`. This distributes the data across the cluster.

- From an external dataset: You can create an RDD from data stored in external sources such as a file system (e.g., HDFS, local file system), a database, or an API. `sparkContext.textFile(path)` reads a text file and creates an RDD where each line is an element.

For example:

    # From a list
    data = [1, 2, 3, 4, 5]
    rdd_from_list = sparkContext.parallelize(data)

    # From a text file
    rdd_from_file = sparkContext.textFile("path/to/your/file.txt")

8. What does it mean to cache an RDD, and why would you do that?

Caching an RDD means storing it in memory or on disk after its initial computation. This avoids recomputing the RDD each time it's used in subsequent operations. Spark's lazy evaluation means transformations aren't executed until an action is called. Without caching, the lineage graph from the original data source would be re-evaluated for every action performed on the RDD. You cache an RDD by calling rdd.cache() or rdd.persist(StorageLevel.MEMORY_ONLY).
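The effect of caching can be modeled in plain Python, without a cluster. In the sketch below, `build_dataset` is a hypothetical stand-in for an RDD's lineage, and each "action" either re-runs it or reuses a stored copy. It is an analogy only and does not reflect how Spark stores cached blocks.

```python
compute_log = []  # one entry per evaluation of the "lineage"


def build_dataset():
    """Hypothetical stand-in for an expensive chain of transformations."""
    compute_log.append("computed")
    return [x * x for x in range(1, 6)]


# Without caching: every action re-runs the whole lineage.
total = sum(build_dataset())      # compare rdd.sum()
largest = max(build_dataset())    # compare rdd.max()
uncached_runs = len(compute_log)

# With caching: compute once, then reuse the stored result.
compute_log.clear()
cached = build_dataset()          # compare rdd.cache() plus the first action
total_cached = sum(cached)
largest_cached = max(cached)
cached_runs = len(compute_log)

print(uncached_runs, cached_runs)
```

Two actions cost two evaluations without the stored copy and one with it, which is why caching pays off mainly for datasets that are reused.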

9. What is a Spark DataFrame?

A Spark DataFrame is a distributed collection of data organized into named columns. It's conceptually similar to a table in a relational database or a DataFrame in Python's pandas. DataFrames allow you to structure your data and then perform operations on it using SQL-like queries or DataFrame API functions.

Key characteristics include: schema enforcement, meaning each column has a specified data type; distributed processing across a cluster; and optimization through Spark's Catalyst optimizer, which automatically improves query performance. The ability to handle structured and semi-structured data sets makes DataFrames a powerful tool in Spark. You can create DataFrames from various sources, including structured data files, tables in Hive, external databases, or existing RDDs (Resilient Distributed Datasets).

10. How is a DataFrame different from an RDD?

RDDs (Resilient Distributed Datasets) are the fundamental building blocks of Spark, representing an immutable, distributed collection of data. They offer fine-grained control and flexibility, particularly for complex data transformations.

DataFrames, on the other hand, are built on top of RDDs and provide a higher-level abstraction, similar to tables in relational databases. Key differences include:

- Schema: DataFrames have a schema, providing structure and enabling optimized query execution through Spark's Catalyst optimizer.

- Optimization: DataFrames benefit from automatic query optimization, leading to significant performance improvements in many common data processing tasks.

- Data Types: DataFrames handle structured and semi-structured data more efficiently, offering better support for various data types and built-in functions.

- API: DataFrames offer a user-friendly API with SQL-like syntax, making them easier to use for data analysis and manipulation.

11. Can you explain Spark SQL?

Spark SQL is a Spark module for structured data processing. It provides a programming abstraction called the DataFrame, a distributed collection of data organized into named columns. Spark SQL allows you to query data using SQL or the DataFrame API in Python, Java, Scala, and R.

Key features include:

- DataFrame API: Enables you to work with data in a more structured way.

- SQL Interface: Lets you query data using SQL statements.

- Data Source Connectivity: Supports reading and writing data from various sources like Hive, JDBC databases, Parquet, JSON, and more.

- Optimized Execution: Utilizes Spark's Catalyst optimizer to improve query performance.

- Integration with Spark Ecosystem: Seamlessly integrates with other Spark components like Spark Streaming and MLlib.

12. What are the benefits of using Spark SQL?

Spark SQL offers several key benefits. It provides a unified interface for querying data from various sources like Parquet, JSON, Hive, and relational databases using SQL or DataFrame API. This abstraction simplifies data access and integration. Spark SQL optimizes queries using the Catalyst optimizer, which can significantly improve performance compared to traditional MapReduce approaches.

Furthermore, it enables seamless integration with other Spark components like Spark Streaming and MLlib, allowing you to build end-to-end data pipelines for real-time analytics and machine learning. Its ability to process structured and semi-structured data efficiently makes it a versatile tool for data warehousing, data science, and business intelligence applications. Using a common SQL interface also lowers the barrier to entry for data analysts already familiar with SQL.

13. What is a SparkContext?

A SparkContext is the entry point to any Spark functionality. It represents a connection to a Spark cluster and can be used to create RDDs, accumulators, and broadcast variables. Think of it as the driver program's handle to the Spark cluster; it coordinates the application's execution.

It uses the SparkConf object to get parameters required for submitting the Spark Application to the cluster. Only one SparkContext may be active per JVM. You must stop() the active SparkContext before creating a new one.

14. Why do we need SparkContext?

SparkContext is the entry point to any Spark functionality. It represents the connection to a Spark cluster and can be used to create RDDs, accumulators, and broadcast variables. Think of it as the driver program's handle to the Spark cluster.

Essentially, it's needed for:

- Connecting to the Spark cluster manager (e.g., Standalone, YARN, Mesos).

- Coordinating the execution of Spark jobs across the cluster.

- Providing access to Spark's API (e.g., `sc.textFile()`, `sc.parallelize()`).

15. What is the difference between `map` and `flatMap` in Spark?

Both map and flatMap are transformations in Spark that apply a function to each element of an RDD. The key difference lies in the output of the function.

map applies a function to each element and returns a new RDD with the results. The function's output corresponds directly to a single element in the new RDD. In contrast, flatMap applies a function to each element, but the function can return a sequence (e.g., a list or array) of zero, one, or multiple elements. flatMap then flattens this sequence into a single RDD. This is useful when you want to transform each input element into multiple output elements. Here's a code snippet in pyspark:

    rdd = sc.parallelize(["hello world", "how are you"])

    map_rdd = rdd.map(lambda x: x.split())
    # Result: [['hello', 'world'], ['how', 'are', 'you']]

    flatmap_rdd = rdd.flatMap(lambda x: x.split())
    # Result: ['hello', 'world', 'how', 'are', 'you']

16. What is a Spark cluster?

A Spark cluster is a distributed computing system that allows you to process large datasets in parallel. It consists of a driver process that coordinates the application, a cluster manager that allocates resources, and worker nodes that run the tasks. The worker nodes are the compute resources where the actual data processing happens.

Spark clusters can be deployed in various ways, including standalone mode, YARN, Kubernetes, and Mesos. Each deployment option has different resource management capabilities. The driver program divides the application into stages and tasks, and schedules them across the worker nodes for parallel execution, enabling faster data processing than single-machine approaches.

17. Can you name some components of a Spark cluster?

A Spark cluster comprises several key components that work together to process data in parallel. These components include:

- Driver Program: The main application that coordinates the execution of Spark jobs.

- Cluster Manager: Allocates resources to the Spark application. Common cluster managers are Standalone, YARN, Kubernetes, and Mesos.

- Worker Nodes: Machines in the cluster that execute tasks assigned by the driver. Each worker node has executors.

- Executors: Processes that run on worker nodes and execute the tasks. They provide in-memory storage for caching data.

- SparkContext: Represents the connection to a Spark cluster and can be used to create RDDs, accumulators and broadcast variables on that cluster.

- RDD (Resilient Distributed Dataset): Fundamental data structure of Spark; an immutable distributed collection of data.

18. What is the purpose of a Spark Executor?

A Spark Executor is a worker process that runs computations and stores data on a cluster node. Its primary purpose is to execute tasks assigned by the Spark Driver. Think of it as the muscle of the Spark application.

Specifically, an Executor:

- Executes tasks (units of work) on data partitions.

- Stores computed results in memory or on disk when an RDD or DataFrame is cached or persisted.

- Reports task status (success, failure) back to the Driver.

- Runs in its own JVM process.

19. What is lazy evaluation in Spark?

Lazy evaluation in Spark is an optimization technique where Spark delays the execution of transformations until an action is called. Instead of immediately executing transformations, Spark creates a Directed Acyclic Graph (DAG) of transformations. This DAG represents the entire workflow.

The benefit of lazy evaluation is that Spark can optimize the entire workflow before execution. This includes combining multiple transformations into a single stage, filtering data early in the process to reduce the amount of data processed, and choosing the most efficient execution plan. Essentially, Spark only computes the result when it is absolutely necessary (when an action is triggered), potentially saving significant processing time and resources.
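Python's built-in `map` and `filter` are also lazy, so they make a convenient small-scale analogy for this behavior. The sketch below is plain Python rather than Spark code; the `executed` list is a hypothetical probe that records when work actually happens.

```python
executed = []  # records every element the mapping function actually touches


def double(x):
    executed.append(x)
    return x * 2


numbers = range(1, 6)

# "Transformations": these only describe the work, nothing runs yet.
doubled = map(double, numbers)             # compare rdd.map(double)
large = filter(lambda v: v > 4, doubled)   # compare rdd.filter(...)
touched_before_action = list(executed)     # still empty at this point

# "Action": consuming the pipeline finally triggers the computation.
result = list(large)                       # compare rdd.collect()

print(touched_before_action, result)
```

Nothing is computed while the pipeline is being described; the work happens only when the final result is requested, which is the point at which Spark would plan and run the job.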

20. Why does Spark use lazy evaluation?

Spark employs lazy evaluation primarily for optimization. By delaying the execution of transformations until an action is called, Spark can analyze the entire transformation graph and optimize it. This allows for techniques like pipelining transformations (e.g., combining multiple map operations) and avoiding unnecessary data processing. For example, if you filter a dataset and then call take(10), Spark can stop as soon as it has found ten matching rows instead of filtering the entire dataset first.

Specifically, lazy evaluation enables:

- Optimization: Spark can reorder and combine operations for efficiency.

- Reduced Computation: Operations are only performed when their results are needed.

- Fault Tolerance: The lineage graph that Spark records for lazy transformations also lets it recompute lost partitions, ensuring data recovery in case of failures.

21. What is a Spark partition?

A Spark partition is the smallest unit of data distribution in Spark. It represents a chunk of data within a Spark RDD (Resilient Distributed Dataset) or DataFrame that resides on a single node in the cluster. Spark distributes data across partitions to enable parallel processing.

Each partition is processed by a single task, so the number of partitions determines the level of parallelism achieved during computations. By default, Spark derives the partition count from the input (for example, file splits) or from spark.default.parallelism; a common guideline from the Spark tuning guide is two to three tasks per CPU core in the cluster. You can control the number of partitions using methods like repartition() or coalesce().

22. How does partitioning help in Spark?

Partitioning in Spark is a crucial optimization technique. It involves dividing the data into smaller, more manageable chunks called partitions. These partitions are then distributed across different nodes in the Spark cluster, enabling parallel processing. This dramatically speeds up computations, especially for large datasets, because different nodes can work on different partitions simultaneously and no single node becomes a bottleneck.

Benefits include:

- Parallelism: Tasks are executed concurrently on different partitions.

- Fault Tolerance: If a partition fails, only that partition needs to be recomputed, not the entire dataset.

- Improved Performance: Reduces data transfer overhead and allows efficient data processing by processing data closer to where it is stored (data locality).

23. How can you specify the number of partitions when creating an RDD?

You can specify the number of partitions when creating an RDD in several ways:

- When creating an RDD from a file using `textFile()`, `hadoopFile()`, or similar methods, you can pass an optional `minPartitions` argument to specify the minimum number of partitions. Spark may create more partitions than specified, but it will not create fewer.

- When creating an RDD from an existing collection using `sc.parallelize()`, you can pass a second argument specifying the number of partitions. For example: `data = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]` followed by `rdd = sc.parallelize(data, numSlices=4)`. Here, `numSlices` determines the number of partitions.

- You can repartition an existing RDD using the `repartition(numPartitions)` or `coalesce(numPartitions)` methods. `repartition` shuffles the data, ensuring even distribution across partitions, while `coalesce` attempts to minimize data movement and should be used when decreasing the number of partitions.
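As a rough mental model of what the partition count means for a collection, the plain-Python sketch below splits a list into contiguous, near-equal chunks. The helper `slice_evenly` is hypothetical and deliberately simplified; Spark's own slicing logic may place individual elements differently.

```python
def slice_evenly(items, num_slices):
    """Split a list into num_slices contiguous chunks of near-equal size."""
    n = len(items)
    return [
        items[(i * n) // num_slices:((i + 1) * n) // num_slices]
        for i in range(num_slices)
    ]


data = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
partitions = slice_evenly(data, 4)

# Each chunk would be processed by its own task.
partition_sizes = [len(p) for p in partitions]

print(partitions)
print(partition_sizes)
```

On a real RDD, `rdd.getNumPartitions()` reports the partition count and `rdd.glom().collect()` shows the contents of each partition, which is a quick way to check the actual layout.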

24. What is data serialization in Spark?

Data serialization in Spark is the process of converting data objects into a format that can be easily stored or transmitted across a network. This is crucial because Spark operates in a distributed environment where data needs to be moved between different nodes. Serialization ensures that data can be efficiently transferred and reconstructed on the receiving end.

Spark uses serialization to optimize data storage and transfer during various operations, such as shuffling data between executors, caching RDDs/DataFrames in memory or on disk, and persisting data to storage systems. Choosing the right serialization method can significantly impact the performance of Spark applications. Common serialization libraries used include Java serialization, Kryo serialization, and custom serialization strategies. Kryo is often preferred over Java serialization due to its speed and efficiency.

25. Why is data serialization important for Spark's performance?

Data serialization is crucial for Spark's performance because Spark operates on distributed data across a cluster. Serialization is the process of converting data objects into a format that can be easily transmitted over the network and stored on disk. Without efficient serialization, Spark would suffer from significant overhead during data shuffling and persistence.

Specifically, it impacts these areas:

- Network transfer: Smaller serialized data reduces network bandwidth usage during shuffles (e.g., `groupByKey`, `reduceByKey`).

- Disk I/O: Smaller serialized data results in faster read/write operations when persisting RDDs to disk (e.g., using `persist(MEMORY_AND_DISK_SER)`).

- Memory usage: Compact serialized data allows Spark to fit more data in memory, reducing the likelihood of spilling to disk, which is much slower.

Spark typically uses Java serialization or Kryo serialization. Kryo is faster and more compact, but it does not support every serializable type, and for best performance you should register your classes with it.

26. What are some common data formats that Spark can work with?

Spark can work with a variety of data formats. Some common ones include:

- Text files: Plain text data, often processed line by line.

- JSON: A widely used format for structured data.

- CSV: Comma-separated values, a common format for tabular data.

- Parquet: A columnar storage format optimized for querying large datasets.

- ORC: Another columnar storage format, similar to Parquet, designed for Hadoop.

- Avro: A row-oriented data serialization system.

- SequenceFile: A Hadoop-specific binary file format.

- Relational databases: Spark can connect to and read data from databases like MySQL, PostgreSQL, etc., using JDBC.

Spark provides built-in support for many of these formats, and you can use external libraries or connectors to work with others. For example, reading a CSV file:

df = spark.read.csv("path/to/file.csv", header=True, inferSchema=True)

27. What is the difference between `persist` and `cache` in Spark?

cache() and persist() in Spark are both used for storing RDDs, DataFrames, or Datasets in memory or on disk to speed up subsequent computations. cache() is shorthand for persist() with the default storage level, which is MEMORY_ONLY for RDDs and MEMORY_AND_DISK for DataFrames and Datasets. With MEMORY_ONLY, partitions that do not fit in memory are not stored, and Spark recomputes them as needed.

persist() offers more control as it allows specifying different storage levels using StorageLevel class. This includes options like MEMORY_ONLY, DISK_ONLY, MEMORY_AND_DISK, with and without serialization, and with different replication factors. persist() gives flexibility to choose the storage strategy based on data size, memory constraints, and fault tolerance requirements.

28. Can you describe a situation where you might use Spark in a real-world scenario?

Imagine a large e-commerce company wanting to analyze customer purchase patterns to improve recommendations and marketing campaigns. They have terabytes of transaction data stored across a distributed system. Spark would be ideal for this.

We could use Spark to process this massive dataset in parallel. For example, we could calculate:

- Frequently bought together items: Using association rule mining algorithms available in Spark's MLlib library.

- Customer segmentation: Clustering customers based on their purchasing behavior using k-means or other clustering algorithms.

- Predictive analytics: Building models to predict future purchases using Spark's machine learning capabilities.

The results of this analysis can improve the customer experience and feed targeted marketing campaigns and inventory management.

29. What are some advantages of using Spark over other data processing frameworks?

Spark offers several advantages over other data processing frameworks. Primarily, its in-memory processing capabilities lead to significantly faster execution speeds, especially for iterative algorithms and complex transformations. It also supports multiple programming languages like Python, Java, Scala, and R, providing flexibility for developers with different backgrounds. Spark's unified engine handles batch processing, stream processing, machine learning, and graph processing, simplifying the development of complex data pipelines.

Furthermore, Spark's ability to run on various cluster managers like Hadoop YARN, Apache Mesos, and Kubernetes makes it easily integrable into existing data infrastructure. Its fault tolerance, achieved through Resilient Distributed Datasets (RDDs), ensures data recovery in case of failures. Additionally, Spark SQL allows you to query structured data using SQL or HiveQL, broadening access for data analysts comfortable with SQL.

Spark intermediate interview questions

1. How can you optimize Spark jobs to minimize data shuffling?

Minimizing data shuffling in Spark jobs is crucial for performance. Several techniques can be employed.

- Use broadcast variables: Broadcast smaller datasets to all worker nodes to avoid sending the larger dataset across the network during joins or transformations.

- Use partitioning effectively: Ensure data is partitioned appropriately based on the keys used in joins or aggregations. Use `repartition()` or `coalesce()` cautiously, understanding their impact. If data is already partitioned correctly at the source, avoid repartitioning. Consider using `bucketBy` to pre-partition and sort data on disk.

- Use `mapPartitions()`: Instead of `map()`, which processes data row by row, `mapPartitions()` allows you to work with an entire partition at once, reducing the number of operations and potentially improving efficiency.

- Optimize join operations: If possible, use a broadcast hash join (Spark might choose one automatically for small tables). Ensure join keys are of the same data type.

- Filter early: Apply filters as early as possible in the data pipeline to reduce the amount of data that needs to be shuffled.

- Use accumulators: Accumulators are useful for updating values from executors without shuffling.

- Caching: If the same data is used repeatedly, cache the RDD or DataFrame using `cache()` or `persist()`. However, be mindful of memory usage.

- Avoid unnecessary `groupByKey()`: Prefer `reduceByKey()` or `aggregateByKey()`, as they perform pre-aggregation on each partition before shuffling data.
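The last point, pre-aggregation before the shuffle, can be illustrated with a plain-Python model. The partitions and the helper `combine_locally` below are hypothetical; the sketch only counts how many records would cross the network under each strategy and is not Spark's shuffle implementation.

```python
from collections import defaultdict

# Two hypothetical partitions of (word, 1) pairs.
partitions = [
    [("a", 1), ("b", 1), ("a", 1), ("a", 1)],
    [("b", 1), ("a", 1), ("b", 1)],
]


def combine_locally(partition):
    """Pre-aggregate one partition, as reduceByKey does before the shuffle."""
    sums = defaultdict(int)
    for key, value in partition:
        sums[key] += value
    return list(sums.items())


# groupByKey style: every record crosses the network.
shuffled_without_combine = sum(len(p) for p in partitions)

# reduceByKey style: at most one record per key per partition is shuffled.
combined = [combine_locally(p) for p in partitions]
shuffled_with_combine = sum(len(p) for p in combined)

# The reduce side merges the partial sums and gets the same totals either way.
totals = defaultdict(int)
for partition in combined:
    for key, value in partition:
        totals[key] += value
word_counts = dict(totals)

print(shuffled_without_combine, shuffled_with_combine, word_counts)
```

The saving grows with the number of repeated keys per partition, so it is largest for low-cardinality keys on large inputs.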

2. Explain the difference between narrow transformations and wide transformations and how they affect Spark performance.

Narrow transformations in Spark are those where each partition of the RDD depends only on a single partition of the parent RDD. Examples include map, filter, and union. Narrow transformations are very efficient because they allow Spark to perform pipelined execution, meaning transformations can be executed in a single stage without shuffling data across the network. This also allows for optimal fault tolerance, as a lost partition can be reconstructed from a single parent partition.

Wide transformations, on the other hand, require data from multiple partitions of the parent RDD to compute partitions of the child RDD. groupByKey, reduceByKey, and join are examples. These transformations involve shuffling data across the network, which is an expensive operation in terms of time and resources. Furthermore, wide transformations introduce shuffle dependencies, which mark stage boundaries in Spark's execution plan. If shuffle output is lost because of a node failure, Spark must rerun the tasks of the parent stage that produced it, which can hurt performance significantly.

3. Describe scenarios where you would use Accumulators in Spark, and explain how they work.

Accumulators in Spark are useful for aggregating values across executors and back to the driver program in a fault-tolerant manner. Scenarios include:

- Counters: Tracking the number of events, like errors, processed records, or skipped items during data processing. For example, counting malformed records while parsing a log file.

- Debugging: Observing the progress of a Spark job by incrementing an accumulator at various stages of a transformation pipeline. This allows tracking how many records pass through each stage.

- Custom Metrics: Collecting custom statistics beyond what Spark provides by default, such as calculating the sum of specific values that meet certain conditions during processing.

Accumulators are write-only from executors; only the driver can read their final value after the Spark job completes. Executors perform updates locally, and Spark handles aggregating these updates during the job. Example (Python):

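    from pyspark import SparkContext

    sc = SparkContext.getOrCreate()
    error_count = sc.accumulator(0)

    # Hypothetical input: two of these records are not valid integers
    rdd = sc.parallelize(["1", "2", "oops", "4", ""])

    def process_record(record):
        try:
            int(record)  # stand-in for the real processing logic
        except ValueError:
            error_count.add(1)  # executors can only add to the accumulator

    rdd.foreach(process_record)  # foreach is an action, so the updates are applied
    print(f"Number of errors: {error_count.value}")  # value is read on the driver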

4. What are the advantages and disadvantages of using the Broadcast variable in Spark?

Broadcast variables in Spark offer performance improvements by allowing read-only variables to be cached on each machine rather than being shipped with tasks. This reduces network traffic and serialization costs, especially for large datasets needed by multiple tasks. The main advantage is reduced network traffic and memory usage on the driver node, leading to faster job execution.

However, disadvantages exist. Creating a broadcast variable involves initial serialization and distribution overhead. Furthermore, all executors store a copy of the broadcast variable, which can consume significant memory if the variable is large, potentially leading to memory issues or reducing the space available for caching data partitions. Also, since broadcast variables are read-only, updates require creating a new broadcast variable, which can be inefficient if the underlying data changes frequently. Judicious use is therefore important: broadcast variables work best for data that fits comfortably in executor memory and changes infrequently.
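The typical use case, joining a large dataset against a small lookup table without a shuffle, can be sketched in plain Python. The names `country_names` and `enrich_partition` and the sample records are hypothetical, and the partitions are ordinary lists standing in for distributed data.

```python
# Hypothetical small lookup table; every task reads the same in-memory copy.
country_names = {"US": "United States", "DE": "Germany", "IN": "India"}

# Hypothetical large dataset, already split into partitions of (user, code).
partitions = [
    [("alice", "US"), ("bob", "DE")],
    [("carol", "IN"), ("dave", "FR")],
]


def enrich_partition(records, lookup):
    """Join each record against the in-memory lookup; no shuffle is needed."""
    for user, code in records:
        yield user, lookup.get(code, "unknown")


enriched = [list(enrich_partition(p, country_names)) for p in partitions]

print(enriched)
```

In PySpark, the lookup would typically be wrapped once with `sc.broadcast(country_names)` and read inside tasks through the broadcast object's `value` attribute, so each executor receives the table once rather than with every task.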

5. How do you handle skewed data in Spark to prevent performance bottlenecks?

To handle skewed data in Spark and prevent performance bottlenecks, several strategies can be employed. Salting is a common technique. We add a random prefix or suffix to the skewed key, which distributes the data more evenly across partitions. This prevents single partitions from becoming overloaded during shuffles.

Another method involves using broadcast variables for smaller dimension tables. This avoids shuffling large amounts of data when performing joins. Additionally, employing techniques like pre-filtering and approximate aggregations can reduce the data volume before performing costly operations on the skewed data. Finally, consider adaptive query execution (AQE), which is enabled by default since Spark 3.2. AQE can detect skewed partitions in sort-merge joins at runtime and split them into smaller tasks.
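Salting is easiest to see as a two-stage aggregation. The plain-Python sketch below uses hypothetical data and an assumed salt count of 4; it assigns salts round-robin so the result is deterministic, whereas a random salt is more common in practice.

```python
from collections import Counter

NUM_SALTS = 4  # assumed number of salt buckets

# Skewed input: one hot key dominates the (key, value) pairs.
records = [("hot", 1)] * 96 + [("k1", 1), ("k2", 1), ("k3", 1), ("k4", 1)]

# Without salting, a single task has to process every "hot" record.
largest_group_before = max(Counter(key for key, _ in records).values())

# Stage 1: aggregate on (key, salt), which spreads the hot key over
# NUM_SALTS smaller groups that can be processed in parallel.
partial = Counter()
for i, (key, value) in enumerate(records):
    partial[(key, i % NUM_SALTS)] += value
largest_group_after = max(partial.values())

# Stage 2: drop the salt and combine the (much smaller) partial results.
final = Counter()
for (key, _salt), value in partial.items():
    final[key] += value

print(largest_group_before, largest_group_after, final["hot"])
```

The final totals are unchanged, but the largest unit of work in the first stage is a quarter of its original size. The cost is an extra aggregation step, so salting is worth it only when skew is the actual bottleneck.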

6. Explain the concept of Spark's execution plan. How do you analyze and optimize it?

Spark's execution plan describes the series of operations Spark will perform to execute a job. Spark breaks the high-level code into a Directed Acyclic Graph (DAG) of stages and tasks, which shows the data flow and operations involved. Analyzing the execution plan is crucial for performance tuning. You can view it using the explain() method on a DataFrame or toDebugString() on an RDD, and the Spark UI visualizes the DAG of each job.

To analyze and optimize the execution plan:

- Understand the DAG: Identify stages, shuffles (data movement), and resource usage.

- Identify bottlenecks: Look for stages with high duration or excessive data shuffling.

- Optimize transformations: Reorder operations, use more efficient functions (e.g., `reduceByKey` instead of `groupByKey`), and avoid unnecessary shuffles.

- Adjust partitioning: Increase or decrease the number of partitions to optimize parallelism.

- Cache intermediate results: Use `cache()` or `persist()` to avoid recomputing data.

- Tune Spark configuration: Adjust settings like `spark.executor.memory`, `spark.executor.cores`, and `spark.default.parallelism`.

7. How does Spark handle fault tolerance, and what mechanisms are in place to recover from failures?

Spark achieves fault tolerance primarily through Resilient Distributed Datasets (RDDs) and their lineage. RDDs track the transformations applied to them, forming a lineage graph. If a partition of an RDD is lost due to a node failure, Spark can reconstruct that partition by replaying the transformations in the lineage graph from the original data. This process is called data lineage-based recovery.

Other mechanisms include:

- Checkpointing: RDDs can be persisted to storage (e.g., HDFS) to shorten lineage graphs and speed up recovery, especially for iterative algorithms.

- Data Replication: Spark can replicate data across multiple nodes to increase data availability and reduce the impact of node failures.

- Task Retries: If a task fails, Spark automatically retries it on another node.

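- Driver Recovery: The driver program, which coordinates the Spark application, is a single point of failure. In cluster deploy mode, the cluster manager can restart a failed driver (for example, with the --supervise flag on a standalone cluster, or through application re-attempts on YARN).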
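Lineage-based recovery can be modeled in a few lines of plain Python. In this sketch, `source_partitions` stands in for data in durable storage and `lineage` for the recorded transformations; both are hypothetical, and only narrow transformations are modeled.

```python
# Source data split into partitions, as it might sit in durable storage.
source_partitions = {0: [1, 2, 3], 1: [4, 5, 6], 2: [7, 8, 9]}

# Lineage: the ordered narrow transformations that produced the derived data.
lineage = [
    lambda rows: [r * 10 for r in rows],       # compare map
    lambda rows: [r for r in rows if r > 20],  # compare filter
]


def compute_partition(index):
    """Rebuild one partition by replaying the lineage on its source data."""
    rows = source_partitions[index]
    for transform in lineage:
        rows = transform(rows)
    return rows


derived = {i: compute_partition(i) for i in source_partitions}

# Simulate losing the executor that held partition 1.
lost = derived.pop(1)

# Only the missing partition is recomputed; the others are left untouched.
derived[1] = compute_partition(1)

print(derived)
```

Because each derived partition depends on exactly one source partition here, recovery touches a third of the data. After a wide transformation the missing partition would depend on many parents, which is where checkpointing helps.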

8. Describe the different deployment modes in Spark, and when you would choose each one.

Spark offers several deployment modes, each suited for different environments and use cases:

- Local Mode: Runs Spark on a single machine, using multiple threads to simulate a cluster. Ideal for development, testing, and debugging, as it doesn't require a cluster setup. However, it's not suitable for production workloads.

- Standalone Mode: A simple cluster manager that comes bundled with Spark. Easy to set up and manage, it's a good option for small to medium-sized clusters, or when you don't need the advanced features of more robust cluster managers.

- YARN (Yet Another Resource Negotiator): Leverages Hadoop's YARN resource manager. This is the most common choice in Hadoop environments, as it allows Spark to share resources with other YARN applications. Use this when you already have a Hadoop cluster and want to integrate Spark into it.

- Mesos: Another cluster manager that can run Spark alongside other frameworks like Hadoop MapReduce, MPI, etc. Offers fine-grained resource sharing and is suitable for multi-tenant environments. Use this when you have a Mesos cluster and need to run diverse workloads. Note that Mesos support has been deprecated since Spark 3.2.

- Kubernetes: Container orchestration system that can manage Spark applications. This is becoming increasingly popular, especially in cloud environments, offering scalability, isolation, and ease of management through containerization. Use this if you are running Spark on Kubernetes or in a cloud environment that leverages Kubernetes.

9. How does Spark's Catalyst Optimizer improve query performance?

Spark's Catalyst Optimizer significantly improves query performance through a series of rule-based and cost-based optimizations. It transforms a logical query plan into a more efficient physical execution plan. Catalyst works in four phases: Analysis, Logical Optimization, Physical Planning, and Code Generation.

Some key optimizations include:

- Predicate Pushdown: Moving filter conditions closer to the data source to reduce the amount of data processed.

- Column Pruning: Removing unnecessary columns from the query plan to reduce data size.

- Cost-Based Optimization (CBO): Choosing the most efficient join order based on data statistics.

- Rule-Based Optimization (RBO): Applying a set of predefined rules to improve the query plan, such as constant folding or simplification of expressions.

- Join Reordering: Optimizing the order in which tables are joined. It uses statistics to estimate the cost of different join orders and selects the one with the lowest cost.

For example, consider the SQL query `SELECT * FROM tableA JOIN tableB ON tableA.id = tableB.id WHERE tableA.value > 10`. Catalyst might first apply predicate pushdown, filtering `tableA` to only rows where `value > 10` before performing the join, thus reducing the amount of data that needs to be processed during the join.
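The effect of predicate pushdown on the join query above can be sketched in plain Python. The rows in `table_a` and `table_b` are hypothetical, and `join` is a simple dictionary-based inner join rather than anything Catalyst generates; the point is only that both plans return the same rows while the second one feeds fewer rows into the join.

```python
# Hypothetical rows for the two tables in the query above.
table_a = [{"id": i, "value": v} for i, v in enumerate([5, 12, 8, 20, 3, 15])]
table_b = [{"id": i, "label": f"row{i}"} for i in range(6)]
b_by_id = {row["id"]: row for row in table_b}


def join(rows):
    """Inner join on id; returns the result and the number of rows probed."""
    joined = [{**a, **b_by_id[a["id"]]} for a in rows if a["id"] in b_by_id]
    return joined, len(rows)


# Plan 1: join first, filter afterwards.
joined_all, probed_late = join(table_a)
late_filter = [r for r in joined_all if r["value"] > 10]

# Plan 2: push the filter below the join.
early_rows = [a for a in table_a if a["value"] > 10]
early_filter, probed_early = join(early_rows)

print(probed_late, probed_early, late_filter == early_filter)
```

On a real DataFrame, calling `explain()` shows whether a filter was pushed below the join or into the data source scan.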

10. What is the role of the Spark Driver, and how does it communicate with the executors?

The Spark Driver is the heart of a Spark application. It's responsible for coordinating the execution of a Spark job. Its primary roles include:

- Job Management: Converting Spark application code into tasks and stages, scheduling tasks to executors.

- Resource Negotiation: Communicating with the cluster manager (e.g., YARN, Mesos, or Spark's standalone cluster manager) to request resources (CPU, memory) for executors.

- Task Distribution: Sending tasks to executors for processing.

- Result Collection: Receiving results from executors after task completion.

- Maintaining Application State: Keeping track of the state of the Spark application, including DAG (Directed Acyclic Graph) of operations and metadata.

The driver first negotiates resources with the cluster manager, which launches executors on worker nodes. Once the executors have registered, the driver communicates with them directly over the network rather than through the cluster manager. The driver serializes tasks and sends them to the executors. Executors deserialize the tasks, execute them, and send status updates and results back to the driver. Spark uses its own Netty-based RPC layer for this communication; Akka was used in early versions but was removed in Spark 2.0.

11. When would you choose to persist a Spark RDD or DataFrame, and what are the different storage levels available?

You should persist a Spark RDD or DataFrame when you plan to reuse it multiple times in your Spark application. Persisting avoids recomputation of the RDD/DataFrame each time it's accessed, which can significantly improve performance, especially for expensive transformations or iterative algorithms.

The available storage levels are:

- `MEMORY_ONLY`: Stores the RDD as deserialized Java objects in the JVM. If the RDD does not fit in memory, some partitions will not be cached and will be recomputed on the fly each time they're needed.

- `MEMORY_AND_DISK`: Stores the RDD as deserialized Java objects in the JVM. If the RDD does not fit in memory, stores the remaining partitions on disk and reads them from disk when they're needed.

- `DISK_ONLY`: Stores the RDD partitions only on disk.

- `MEMORY_ONLY_SER`: Stores the RDD as serialized Java objects (one byte array per partition). More space-efficient than `MEMORY_ONLY`, but more CPU intensive.

- `MEMORY_AND_DISK_SER`: Similar to `MEMORY_ONLY_SER`, but spills to disk if the RDD doesn't fit in memory.

- `OFF_HEAP`: Similar to `MEMORY_ONLY_SER`, but stores the data in off-heap memory, outside the JVM heap.

- `MEMORY_ONLY_2`, `MEMORY_AND_DISK_2`, etc.: Same as the levels above, but replicate each partition on two cluster nodes. Provides fault tolerance.

12. Explain the difference between `mapPartitions` and `map` transformations in Spark. When would you use each?

map and mapPartitions are transformations in Spark used to apply a function to RDD elements. map applies the provided function to each element of the RDD individually, resulting in a new RDD with the transformed elements. In contrast, mapPartitions applies the function to each partition of the RDD. The function receives an iterator of the elements within the partition and returns an iterator of the transformed elements.

You would use mapPartitions when the transformation requires performing some setup or initialization steps that can be done once per partition rather than once per element, leading to performance optimizations. Examples include opening a database connection or initializing a machine learning model. If you just need to transform individual elements without any per-partition setup, map is generally more appropriate and easier to use.
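The difference can be sketched with a formatter standing in for an expensive resource such as a database connection. This is an illustration under assumptions: the `DecimalFormat` is only a placeholder for costly setup, the accumulator `setups` exists purely to count how often that setup runs, and a local master is assumed.

```scala
import java.text.DecimalFormat
import org.apache.spark.sql.SparkSession

val spark = SparkSession.builder().master("local[2]").appName("map-partitions-sketch").getOrCreate()
val sc = spark.sparkContext

val ids = sc.parallelize(1 to 8, 4) // 8 elements spread over 4 partitions

// map: the function runs once per element, so a formatter is built for every record.
val perElement = ids.map(id => new DecimalFormat("000").format(id.toLong)).collect()

// mapPartitions: the formatter is built once per partition and reused for its elements.
val setups = sc.longAccumulator("formatter-setups")
val perPartition = ids.mapPartitions { iter =>
  setups.add(1L)                           // counts how many times the setup ran
  val formatter = new DecimalFormat("000") // stand-in for an expensive resource
  iter.map(id => formatter.format(id.toLong))
}.collect()
```

Both versions produce the same strings; the `mapPartitions` version performs the setup four times (once per partition) instead of eight.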

13. How do you monitor Spark job performance and identify potential issues?

I monitor Spark job performance using several tools and techniques. The Spark UI is my primary go-to, providing detailed information on stages, tasks, executors, and resource utilization. I look for long-running tasks, skewed data distributions, and inefficient shuffles, which are common performance bottlenecks. I also use external monitoring tools like Prometheus and Grafana to track cluster-level metrics such as CPU, memory, and network usage to identify resource constraints impacting Spark jobs.

To identify potential issues, I examine Spark logs for errors and warnings. I pay close attention to garbage collection behavior, looking for excessive GC pauses that can impact job latency. I also use profiling tools such as Java Flight Recorder or flame graphs to identify hot spots in the code. Additionally, I proactively set up alerting based on key metrics to be notified of any performance degradation or job failures. For debugging after the fact, event logging (enabled with `spark.eventLog.enabled` and `spark.eventLog.dir`) lets the Spark History Server, which reads from `spark.history.fs.logDirectory`, replay the UI of finished applications.

14. Describe how Spark SQL interacts with structured data sources like Hive or Parquet.

Spark SQL provides a unified interface to interact with various structured data sources, including Hive and Parquet. For Hive, Spark SQL connects to the Hive metastore to access schema information and table metadata, and it can use Hive SerDes and UDFs. Queries are parsed and optimized by Spark's own parser and Catalyst optimizer rather than by Hive, and are then executed as Spark jobs that read data directly from the underlying storage (like HDFS) where the Hive tables reside.

For Parquet, Spark SQL has built-in support. It can directly read and write Parquet files. Spark SQL utilizes Parquet's schema information and columnar storage format for efficient data processing. When querying Parquet files, Spark's query optimizer can take advantage of Parquet's metadata (like min/max values for columns) to perform predicate pushdown, reducing the amount of data that needs to be read.
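A minimal sketch of the Parquet path follows. It assumes a local session and writes to a temporary directory; the column names `id` and `kind` are made up for the example. Hive access would additionally require a session built with Hive support and a reachable metastore, which is not shown here.

```scala
import java.nio.file.Files
import org.apache.spark.sql.SparkSession

val spark = SparkSession.builder().master("local[2]").appName("parquet-sketch").getOrCreate()
import spark.implicits._

// Assumption: a throwaway location under the system temp directory.
val path = Files.createTempDirectory("parquet-sketch").resolve("events").toString

// Write a small DataFrame as Parquet; the schema is stored alongside the data.
Seq((1, "click"), (2, "view"), (3, "click")).toDF("id", "kind").write.parquet(path)

// Read it back; the schema is recovered from the Parquet metadata.
val events = spark.read.parquet(path)
val filtered = events.filter($"id" > 1)

filtered.explain()               // the physical plan normally lists the filters pushed to the Parquet scan
val matched = filtered.count()
```

Inspecting the output of `explain()` is a quick way to check whether a given predicate was eligible for pushdown.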

15. How would you implement custom partitioning in Spark to optimize data processing?

To implement custom partitioning in Spark, you would define a custom Partitioner class that extends org.apache.spark.Partitioner. This class needs to override two key methods: numPartitions which returns the number of partitions, and getPartition(key: Any) which determines the partition ID (an integer between 0 and numPartitions - 1) for a given key.

Once you have your custom Partitioner, you can apply it to a key-value (pair) RDD using the partitionBy() transformation, passing an instance of your Partitioner class. This redistributes the data across the cluster according to your custom logic. DataFrames do not accept a Partitioner directly; there you repartition by column expressions with repartition(), or drop down to the underlying RDD. For example, to partition data by the first letter of a string key, getPartition can derive the partition ID from the code of the first character:

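```scala
import org.apache.spark.Partitioner

// Routes each key to a partition based on its first character.
class CustomPartitioner(numParts: Int) extends Partitioner {
  override def numPartitions: Int = numParts

  override def getPartition(key: Any): Int = {
    val k = key.toString
    // Empty keys would make charAt(0) throw, so send them to partition 0.
    if (k.isEmpty) 0 else Math.abs(k.charAt(0).toInt % numPartitions)
  }
}

// partitionBy is only defined on key-value (pair) RDDs; sc is the SparkContext from spark-shell.
val data = sc.parallelize(Seq(("apple", 1), ("banana", 2), ("apricot", 3), ("blueberry", 4)))
val partitionedData = data.partitionBy(new CustomPartitioner(2))
```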
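With the DataFrame API, repartitioning is usually expressed through column expressions rather than a Partitioner class. The sketch below is one possible equivalent of the first-letter idea, assuming a local session; the column names `name`, `qty`, and `pid` are illustrative.

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.functions.{col, spark_partition_id, substring}

val spark = SparkSession.builder().master("local[2]").appName("repartition-sketch").getOrCreate()
import spark.implicits._

val fruits = Seq(("apple", 1), ("banana", 2), ("apricot", 3), ("blueberry", 4)).toDF("name", "qty")

// Hash-partition into 2 partitions by the first character of the name.
val byInitial = fruits.repartition(2, substring(col("name"), 1, 1))

// Attach the partition ID to each row to inspect where it landed.
val layout = byInitial.withColumn("pid", spark_partition_id()).collect()
```

Rows sharing an initial always end up in the same partition, although different initials may hash to the same partition as well.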

16. Explain the purpose of Spark's TaskScheduler and how it manages task execution.

The TaskScheduler in Spark is responsible for distributing tasks to worker nodes (executors) and managing their execution. It receives task sets from the DAGScheduler and schedules them across the cluster based on available resources and data locality.

The TaskScheduler performs several crucial functions:

- Resource Allocation: It works through a scheduler backend that talks to the cluster manager (e.g., YARN, Mesos, or Spark's standalone scheduler) to acquire executors, and it matches pending tasks against the resources those executors offer.

- Task Scheduling: It assigns tasks to executors, taking into account data locality preferences to minimize data transfer. Tasks are scheduled to executors that are close to the data they need.

- Fault Tolerance: It monitors task execution and retries failed tasks up to a configured number of attempts (`spark.task.maxFailures`, 4 by default). If a task exhausts its attempts, the TaskScheduler aborts the task set, which fails the corresponding stage and job.

- Task Management: It keeps track of the state of each task (e.g., running, completed, failed) and provides updates to the DAGScheduler. It also manages task cancellations if necessary.

17. What are the key configuration parameters that can be tuned to improve Spark performance, and how do they impact the system?

Several key configuration parameters can be tuned to improve Spark performance. `spark.executor.memory` controls the amount of memory allocated to each executor; increasing it can reduce disk spills and improve processing speed, but excessive allocation leaves fewer resources for other executors and applications on the cluster. `spark.executor.cores` defines the number of cores per executor; increasing it allows more tasks to run in parallel within each executor, but oversubscription can lead to contention. `spark.default.parallelism` sets the default number of partitions for RDD operations such as `parallelize`, `join`, and `reduceByKey`, while `spark.sql.shuffle.partitions` (200 by default) sets the number of partitions used when DataFrame and SQL queries shuffle data; raising either can improve parallelism for wide transformations, but too many partitions increases scheduling overhead. `spark.driver.memory` defines the memory allocated to the driver process; increasing it helps when collecting large results on the driver or broadcasting large tables. For shuffle behavior specifically, parameters such as `spark.shuffle.sort.bypassMergeThreshold` can also be relevant.

Furthermore, consider adjusting parameters related to data serialization, such as using Kryo serialization (spark.serializer and registering custom classes) to improve serialization speed and reduce data size. Setting appropriate levels for logging and monitoring is also crucial for troubleshooting. Finally, adjusting parameters for dynamic allocation, like spark.dynamicAllocation.enabled, spark.dynamicAllocation.minExecutors, and spark.dynamicAllocation.maxExecutors, can help optimize resource utilization based on the workload demands. Proper memory management (managing garbage collection overhead) for the executor and driver processes by tuning spark.executor.extraJavaOptions and spark.driver.extraJavaOptions can also lead to improved overall performance. Also, consider adjusting the persistence level (MEMORY_AND_DISK, OFF_HEAP) depending on the size and access pattern of your RDD/DataFrame.
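A few of these settings can be sketched on a session builder as follows. The values are placeholders rather than recommendations, and the `local[2]` master is an assumption for experimentation. Executor memory and cores are normally supplied at submit time, for example with `--executor-memory` and `--executor-cores` on `spark-submit`.

```scala
import org.apache.spark.sql.SparkSession

val spark = SparkSession.builder()
  .master("local[2]")                    // assumption: local run for experimentation
  .appName("tuning-sketch")
  // Kryo is usually faster and more compact than Java serialization.
  .config("spark.serializer", "org.apache.spark.serializer.KryoSerializer")
  // Number of partitions used when DataFrame and SQL queries shuffle data.
  .config("spark.sql.shuffle.partitions", "8")
  // Default partition count for RDD operations such as reduceByKey.
  .config("spark.default.parallelism", "8")
  .getOrCreate()

// SQL settings can also be read and changed on a running session.
val shufflePartitions = spark.conf.get("spark.sql.shuffle.partitions")
```

Settings such as the serializer only take effect when the underlying SparkContext is first created, so they belong in the builder or in the submit command rather than in later `spark.conf.set` calls.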

18. How can you use Spark to process streaming data, and what are the different approaches available (e.g., micro-batching, continuous processing)?

Spark processes streaming data using either the older Spark Streaming API (DStreams) or Structured Streaming. Spark Streaming uses a micro-batching approach, dividing the data stream into small batches processed at regular intervals. This provides fault tolerance and integrates well with batch processing. Structured Streaming builds on Spark SQL, allowing you to express streaming computations using SQL-like syntax and DataFrames. It uses micro-batching by default and also offers an experimental continuous processing mode.

The two main approaches are:

- Micro-batching (DStreams and Structured Streaming): Divides the stream into small batches, processed as Spark jobs. Offers high throughput and fault tolerance.

- Continuous Processing (Structured Streaming): Processes records continuously with very low latency (near real-time) using a dedicated set of long-running tasks. The mode is experimental, provides at-least-once guarantees, and supports only map-like operations such as projections and filters, so stateful aggregations still require micro-batching.
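The micro-batch approach can be sketched with Structured Streaming's built-in `rate` source and in-memory sink, both intended for experiments. The query name `rate_sketch` and the three-second run time are arbitrary choices, and a local session is assumed.

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.streaming.Trigger

val spark = SparkSession.builder().master("local[2]").appName("streaming-sketch").getOrCreate()

// The "rate" source emits (timestamp, value) rows at a fixed pace.
val ticks = spark.readStream.format("rate").option("rowsPerSecond", "5").load()

// Micro-batch trigger: a new batch is planned every second.
// For the experimental continuous mode, Trigger.Continuous("1 second") would be used instead.
val query = ticks
  .selectExpr("value", "value * 2 AS doubled")
  .writeStream
  .format("memory")            // results are kept in an in-memory table
  .queryName("rate_sketch")    // hypothetical table name
  .outputMode("append")
  .trigger(Trigger.ProcessingTime("1 second"))
  .start()

query.awaitTermination(3000L)  // let a few micro-batches run
val columns = spark.table("rate_sketch").columns.toSeq
val rowsSoFar = spark.table("rate_sketch").count()
query.stop()
```

In a real pipeline the source and sink would typically be systems such as Kafka or files, with a checkpoint location configured so the query can recover after a failure.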

19. Explain how you would debug a slow or failing Spark job.

Debugging a slow or failing Spark job involves several steps. First, I'd examine the Spark UI to identify bottlenecks. This includes checking the stages, tasks, and executors to pinpoint where time is being spent. Look for skewed data, which can cause some tasks to take significantly longer than others. Also, check for excessive shuffling, which is often a performance killer. Resource allocation should be examined; insufficient memory or cores can lead to slow processing. For errors, the Spark UI provides detailed logs for each task, helping identify exceptions and their origins. If the issue isn't apparent in the UI, consider adding logging statements to your code to track the flow of data and the values of key variables.

If issues persist, consider profiling the code using tools like Spark's built-in profiler or external profilers like Java Flight Recorder. This can expose hot spots in the code where optimization is needed. You can also adjust Spark configuration parameters such as spark.executor.memory, spark.executor.cores, and spark.default.parallelism. If the data itself is the source of the problem, work with a sample of it to reproduce the error on a smaller scale. Finally, inspecting Spark's execution plans with EXPLAIN in SQL or explain() on a DataFrame can reveal problems with how a query is structured, such as unexpected shuffles or missing filter pushdown.
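Two of these checks, looking for skew and reading the execution plan, can be sketched as below. The data set is synthetic, with one deliberately dominant key, and a local session is assumed; all names are illustrative.

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.functions.{col, desc}

val spark = SparkSession.builder().master("local[2]").appName("debug-sketch").getOrCreate()
import spark.implicits._

// Synthetic data: the key "hot" dominates on purpose.
val events = (Seq.fill(900)("hot") ++ Seq.fill(50)("warm") ++ Seq.fill(50)("cold")).toDF("key")

// Rows per key: a single dominant key is a common sign of skew.
val perKey = events.groupBy("key").count().orderBy(desc("count"))
perKey.explain()               // prints the physical plan, including shuffle (Exchange) steps
val top = perKey.first()

// Rows per partition after shuffling by key: very uneven sizes mean uneven task times.
val perPartition = events.repartition(4, col("key")).rdd
  .mapPartitionsWithIndex((idx, rows) => Iterator((idx, rows.size)))
  .collect()
```

The same imbalance shows up in the Spark UI as a few tasks in a stage running far longer and reading far more shuffle data than the rest.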

20. What is the difference between the `reduce` and `aggregate` actions in Spark and what are their use cases?

Both reduce and aggregate are Spark actions used for combining elements of an RDD, but they differ in their flexibility and use cases. reduce is simpler; it takes a function that combines two elements of the same type and returns a new element of that same type. This function must be commutative and associative to ensure consistent results in a distributed environment. A typical use case is summing numbers in an RDD.

aggregate, on the other hand, offers more flexibility. It takes a zero value, a sequence function (seqOp), and a combine function (combOp). The sequence function folds an element from the RDD into the accumulator within a partition, while the combine function merges the accumulators of two partitions. This allows the result type to differ from the element type, making it suitable for more complex aggregations like calculating the average (where you need to maintain both the sum and count).
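A short sketch of both actions on the same RDD, assuming a local session; the sample numbers and variable names are arbitrary.

```scala
import org.apache.spark.sql.SparkSession

val spark = SparkSession.builder().master("local[2]").appName("reduce-aggregate-sketch").getOrCreate()
val sc = spark.sparkContext

val readings = sc.parallelize(Seq(4, 8, 15, 16, 23, 42), 3)

// reduce: input and output share the element type (Int here).
val total = readings.reduce(_ + _)

// aggregate: the accumulator is a (sum, count) pair, a different type from the elements.
val (sum, count) = readings.aggregate((0L, 0))(
  (acc, x) => (acc._1 + x, acc._2 + 1),  // seqOp: fold one element into a partition's accumulator
  (a, b) => (a._1 + b._1, a._2 + b._2)   // combOp: merge the accumulators of two partitions
)

val mean = sum.toDouble / count
```

The zero value is applied once per partition and once more when merging, so it should be a neutral element such as `(0L, 0)`.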

21. Describe the procedure for integrating Spark with other Big Data technologies, such as Hadoop or Kafka.

Integrating Spark with Hadoop typically involves leveraging Spark's ability to read data directly from HDFS (Hadoop Distributed File System) and running Spark on YARN, Hadoop's resource manager, for resource negotiation and job scheduling. This allows Spark jobs to process data stored in HDFS using Hadoop's storage infrastructure. For example, you'd set the master to yarn (via spark.master or --master yarn) and make the cluster's Hadoop configuration, including fs.defaultFS, visible to Spark, typically by pointing HADOOP_CONF_DIR at the directory that holds the cluster's configuration files.

Integrating with Kafka involves adding a Kafka connector to your application: spark-sql-kafka-0-10 for Structured Streaming, or spark-streaming-kafka-0-10 for the older DStream API. Either one lets Spark subscribe to Kafka topics and process incoming data in near real-time. The DStream integration uses the direct approach (KafkaUtils.createDirectStream), in which Spark reads from Kafka partitions directly and tracks offsets itself, resulting in efficient and reliable consumption. The older receiver-based approach belonged to the Kafka 0.8 integration, which was removed in Spark 3.0. With Structured Streaming, you read from Kafka using spark.readStream.format("kafka") together with options such as kafka.bootstrap.servers and subscribe.

Spark interview questions for experienced

1. How does Spark handle skewed data, and what strategies would you employ to mitigate performance issues caused by it?

Spark handles skewed data poorly by default, leading to uneven task completion times and overall performance degradation. Some partitions might process significantly more data than others, causing some executors to be idle while others are overloaded.

Strategies to mitigate skewed data include:

- Salting: Add a random prefix or suffix to skewed keys to distribute them across multiple partitions.

- Broadcasting: For join operations, broadcast smaller tables to all executors to avoid shuffling the larger skewed table.

- Pre-filtering: Filter out skewed keys before a large join or aggregation.

- Custom Partitioning: Use a custom partitioning function to distribute data more evenly.

- Adaptive Query Execution: Enabling spark.sql.adaptive.enabled and spark.sql.adaptive.skewJoin.enabled lets Spark automatically detect and handle skew in join operations.
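Salting for an aggregation can be sketched in two stages: first aggregate on a salted key so the hot key is spread over several tasks, then strip the salt and aggregate again. The bucket count of 8, the synthetic data, and the variable names are assumptions for illustration, as is the local session.

```scala
import scala.util.Random
import org.apache.spark.sql.SparkSession

val spark = SparkSession.builder().master("local[2]").appName("salting-sketch").getOrCreate()
val sc = spark.sparkContext

// Synthetic skew: one key holds almost all of the records.
val skewed = sc.parallelize(Seq.fill(1000)(("hot", 1)) ++ Seq(("cold", 1), ("warm", 1)), 4)

val saltBuckets = 8 // assumption: tune to the degree of skew

// Stage 1: append a random salt so the hot key spreads across up to 8 reduce tasks.
val partial = skewed
  .map { case (key, value) => ((key, Random.nextInt(saltBuckets)), value) }
  .reduceByKey(_ + _)

// Stage 2: drop the salt and combine the (at most 8) partial results per key.
val totals = partial
  .map { case ((key, _), value) => (key, value) }
  .reduceByKey(_ + _)
  .collect()
  .toMap
```

The second stage handles only a handful of records per key, so the extra shuffle is cheap compared with funnelling every record for the hot key through a single task.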