Interviewing software testers can be challenging: finding candidates who truly understand testing principles and practices requires a solid plan. A well-prepared list of interview questions helps you thoroughly assess a candidate's knowledge and skills, as discussed in our post on the skills required for a software tester.

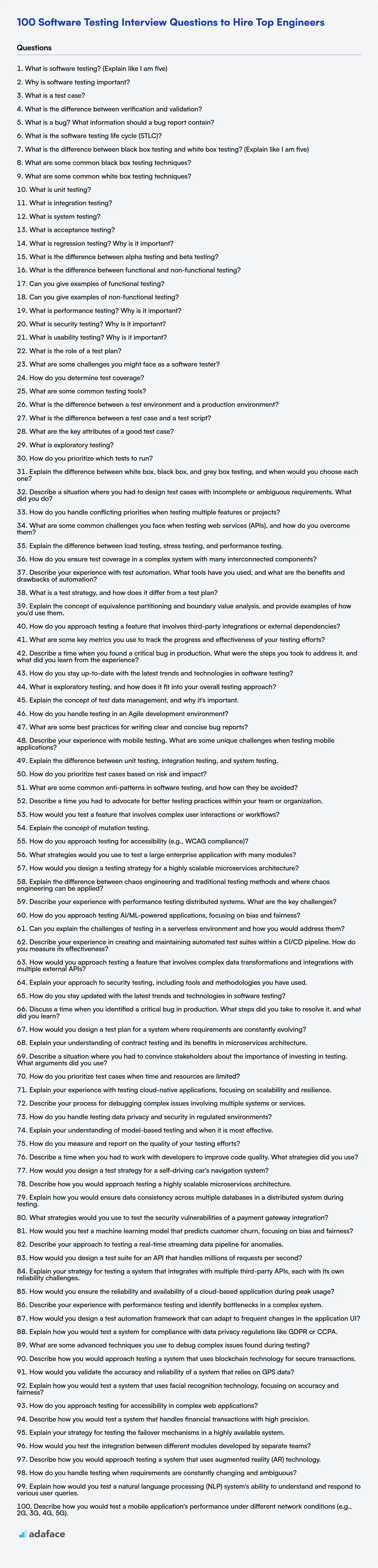

This blog post provides a curated list of Software Testing interview questions, covering basic to expert levels, along with multiple-choice questions, to help you evaluate candidates effectively. We have divided the questions into four categories - Basic, Intermediate, Advanced and Expert.

These questions will give you insight into candidates' testing ability and help you identify top talent. Before the interview, you can also use Adaface's QA Engineer Test to screen candidates quickly.

Basic Software Testing interview questions

1. What is software testing? (Explain like I am five)

Imagine you're building a really cool toy! Software testing is like playing with the toy before giving it to your friends to make sure it works. We poke it, push it, and try to break it in different ways. If it breaks, we tell the toy builder so they can fix it before anyone gets sad or frustrated playing with it. That way, everyone has lots of fun with the toy!

Testing is really important. It means we're checking if the computer program does what we want it to do. Just like checking if your shoes fit before you run a race, we are making sure everything is right before it's time to use the program. We can check if things work the right way, don't break when we do something unexpected, and are safe to use.

2. Why is software testing important?

Software testing is crucial because it helps ensure the quality, reliability, and performance of software applications. It identifies bugs, errors, and defects before the software is released to end-users, preventing potential failures, data loss, and security vulnerabilities. Early detection and resolution of issues saves time and resources, minimizing development costs and improving user satisfaction.

Ultimately, effective software testing contributes to building trust in the software and the organization behind it. Without thorough testing, the risk of releasing faulty software increases significantly, potentially leading to negative consequences such as financial losses, damage to reputation, and even legal liabilities.

3. What is a test case?

A test case is a specific set of conditions and inputs under which a tester will determine whether an application, software system or one of its features is working as expected. It documents the detailed steps, input data, preconditions, expected results, and postconditions for a specific test scenario.

In essence, each test case is a single check that validates one specific requirement. Well-written test cases keep testing methodical and thorough, and they are atomic: each case tests exactly one thing.

4. What is the difference between verification and validation?

Verification is the process of checking that the software meets the specified requirements, ensuring "Are we building the product right?". It focuses on the implementation and confirms whether the code, design, architecture, etc., fulfill the initial specifications. Activities involved are reviews, inspections, and testing. In essence, it aims to catch defects early in the development cycle.

Validation, on the other hand, confirms that the software meets the user's needs and intended use, asking "Are we building the right product?". It evaluates the software in a real-world environment to ensure it performs as expected and satisfies the customer. Acceptance testing and user feedback are crucial parts of validation. Successfully validating a product means it's fit for purpose and meets the stakeholders' expectations.

5. What is a bug? What information should a bug report contain?

A bug is a defect or flaw in a software application that causes it to produce an incorrect or unexpected result, or to behave in unintended ways. It's a deviation from the expected or required behavior.

A good bug report should contain the following information:

- Summary: A concise description of the bug.

- Steps to reproduce: Detailed steps that consistently trigger the bug.

- Expected result: What should happen if the code were working correctly.

- Actual result: What actually happened.

- Severity: How critical the bug is (e.g., critical, major, minor).

- Priority: How quickly the bug needs to be fixed (e.g., high, medium, low).

- Environment: Information about the system where the bug was found (e.g., operating system, browser, hardware).

- Version: The specific version of the software where the bug exists.

- Attachments: Any relevant files, such as screenshots, log files, or code snippets. For example, a code snippet like this:

def add(a, b): return a - b # Bug: Should be a + b

6. What is the software testing life cycle (STLC)?

The Software Testing Life Cycle (STLC) is a sequential series of activities performed to conduct software testing. It ensures that the software application meets the quality standards and business requirements. It includes various phases such as: Requirement Analysis, Test Planning, Test Case Development, Environment Setup, Test Execution, and Test Cycle Closure.

The main purpose of the STLC is to define a set of steps that can be followed to ensure that the software testing process is effective and efficient. The STLC helps testers to identify and fix defects early in the development process, which can save time and money.

7. What is the difference between black box testing and white box testing? (Explain like I am five)

Imagine you have a toy car. Black box testing is like playing with the car without knowing how it works inside. You just push it, turn the wheels, and see if it goes. You don't open it up to look at the engine or gears. White box testing is like taking the car apart and looking at all the pieces. You check if the engine is connected correctly, if the gears are spinning right, and if everything is put together the way it should be.

So, black box testing is about testing what the toy does, and white box testing is about testing how the toy does it.

8. What are some common black box testing techniques?

Common black box testing techniques include:

- Equivalence Partitioning: Dividing the input data into partitions, assuming that the system will behave similarly for all data within one partition.

- Boundary Value Analysis: Testing the boundary values of input domains, as errors often occur at the edges of these domains.

- Decision Table Testing: Creating decision tables to represent complex logic and test different combinations of conditions and actions.

- State Transition Testing: Testing the different states of a system and the transitions between those states based on various inputs or events.

- Use Case Testing: Deriving test cases directly from use cases to ensure that the system fulfills its intended functionality from a user's perspective.

- Error Guessing: A technique where testers use their experience and intuition to guess where errors might occur.
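The first two techniques can be sketched in a few lines of Python. This is only an illustration: `is_valid_age` and its 18 to 65 range are hypothetical, invented here to have something to test. One representative value is taken from each equivalence partition, and boundary value analysis adds the values on and next to each edge.

```python
def is_valid_age(age: int) -> bool:
    """Hypothetical function under test: accepts ages 18 to 65 inclusive."""
    return 18 <= age <= 65

# Equivalence partitioning: one representative value per partition.
partition_cases = [
    (10, False),  # partition: below the valid range
    (40, True),   # partition: inside the valid range
    (80, False),  # partition: above the valid range
]

# Boundary value analysis: values on and next to each boundary.
boundary_cases = [
    (17, False), (18, True), (19, True),
    (64, True), (65, True), (66, False),
]

def run_cases(cases):
    """Return the inputs whose actual result differs from the expected one."""
    return [value for value, expected in cases if is_valid_age(value) != expected]

failures = run_cases(partition_cases + boundary_cases)
print("failing inputs:", failures)
```

An off-by-one mistake such as writing `18 < age` would pass all three partition cases but fail the boundary case for 18, which is why the two techniques are usually combined.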

9. What are some common white box testing techniques?

White box testing techniques examine the internal structure and code of a software application. Some common techniques include:

- Statement Coverage: Ensures that each statement in the code is executed at least once.

- Branch Coverage: Ensures that every branch (e.g., `if/else` statements) is executed.

- Path Coverage: Ensures that every possible path through the code is executed. This is the most thorough, but also the most complex.

- Condition Coverage: Tests each logical condition in a program to determine the outcome of each. For example, in the code snippet `if (x > 0 && y < 10)`, both `x > 0` and `y < 10` conditions would be tested for true and false outcomes.

- Data Flow Testing: Examines how data moves through the system to identify issues like uninitialized variables.
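As a rough sketch of condition coverage, the compound condition from the example can be exercised in Python. The function `in_range` is hypothetical, and the bookkeeping is done by hand here; in practice a coverage tool would collect it.

```python
def in_range(x: int, y: int) -> bool:
    """Hypothetical function containing the compound condition from the text."""
    if x > 0 and y < 10:
        return True
    return False

# Each input is annotated with the outcome of the two atomic conditions.
condition_cases = [
    # (x, y, x > 0, y < 10)
    (1, 5, True, True),
    (1, 20, True, False),
    (-1, 5, False, True),
]

# Collect which outcomes each atomic condition has taken across the suite.
seen = {"x > 0": set(), "y < 10": set()}
for x, y, first, second in condition_cases:
    assert (x > 0) == first and (y < 10) == second  # annotations are accurate
    assert in_range(x, y) == (first and second)
    seen["x > 0"].add(first)
    seen["y < 10"].add(second)

# Condition coverage is reached when every condition was both True and False.
fully_covered = all(outcomes == {True, False} for outcomes in seen.values())
print("condition coverage reached:", fully_covered)
```

Because `and` short-circuits, `y < 10` is only evaluated inside the function when `x > 0` is true, so the first two cases are the ones that actually exercise the second condition.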

10. What is unit testing?

Unit testing is a software testing method where individual units or components of a software application are tested in isolation. The purpose is to validate that each unit of the software code performs as designed. A unit can be a function, method, module, or object.

During unit testing, test cases are created to verify the behavior of the unit under different conditions. These tests are typically automated and are run frequently, often as part of a continuous integration process. Benefits include early detection of bugs, easier debugging, and improved code quality. Here is a Python example using pytest:

    # your_module.py
    def add(x, y):
        return x + y

    # test_add.py
    import pytest

    from your_module import add

    def test_add_positive():
        assert add(2, 3) == 5

11. What is integration testing?

Integration testing is a type of software testing where individual units or components of a system are combined and tested as a group. The purpose is to verify that the different units interact correctly and that data is passed properly between them. It focuses on testing the interfaces between modules to ensure that they work together harmoniously.

Integration testing helps uncover issues such as data communication problems, incorrect assumptions made by developers about module interactions, and interface incompatibilities. It can be performed in several ways, including top-down, bottom-up, and big-bang approaches.
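A minimal sketch of an integration test might look like the following. Both classes are hypothetical stand-ins, not part of any real library. The point is that the two components are wired together without mocks, so the test checks the interface between them rather than either unit alone.

```python
class InMemoryUserRepository:
    """Hypothetical storage component."""

    def __init__(self):
        self._users = {}

    def save(self, email: str, name: str) -> None:
        self._users[email] = name

    def find(self, email: str):
        return self._users.get(email)


class RegistrationService:
    """Hypothetical service component that depends on the repository."""

    def __init__(self, repository: InMemoryUserRepository):
        self._repository = repository

    def register(self, email: str, name: str) -> bool:
        normalized = email.strip().lower()
        if self._repository.find(normalized) is not None:
            return False  # duplicate registration is rejected
        self._repository.save(normalized, name)
        return True


def test_registration_persists_user():
    # Both real components are wired together; nothing is mocked.
    repository = InMemoryUserRepository()
    service = RegistrationService(repository)

    assert service.register(" Ada@Example.com ", "Ada") is True
    # The interface assumption under test: the service stores the normalized email.
    assert repository.find("ada@example.com") == "Ada"
    assert service.register("ada@example.com", "Ada again") is False


test_registration_persists_user()
```

If the service and the repository disagreed about normalization (for example, one lowercasing emails and the other not), each unit could pass its own tests while this test would fail.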

12. What is system testing?

System testing is a type of software testing that validates the complete and integrated software product. It verifies that all components work together as expected and that the system meets its overall requirements. This testing is typically performed after integration testing and before acceptance testing.

System testing often involves testing the system's functionality, performance, security, and usability. Testers may use various techniques, including black-box testing (where the internal structure of the system is not known) and white-box testing (where the internal structure is known), to thoroughly evaluate the system's behavior.

13. What is acceptance testing?

Acceptance testing is a type of software testing performed to determine if a system satisfies its acceptance criteria. It focuses on validating the end-to-end functionality of the system, ensuring it meets the business requirements and is usable by the intended users or stakeholders. It's often the final testing phase conducted before releasing the software to production.

The goal is to confirm the system behaves as expected in a real-world scenario. Unlike other testing types, acceptance tests are often conducted by end-users or domain experts rather than developers or testers. Common types of acceptance testing include User Acceptance Testing (UAT), Business Acceptance Testing (BAT), and Operational Acceptance Testing (OAT).

14. What is regression testing? Why is it important?

Regression testing is re-running functional and non-functional tests to ensure that previously developed and tested software still performs after a change. Changes might include enhancements, bug fixes, configuration changes, or even migration to a different environment. Essentially, it verifies that new code doesn't adversely affect existing features.

It's crucial because it helps maintain the stability and reliability of the software. Without it, bug fixes or new features could unintentionally introduce new problems or reintroduce old ones. Re-running the same tests after every change confirms that existing functionality remains intact, minimizes the risks associated with code changes, and helps deliver a quality product.

15. What is the difference between alpha testing and beta testing?

Alpha and beta testing are both types of software testing performed before a product is released to the general public, but they differ in several key aspects. Alpha testing is typically conducted internally by the development team or a select group of internal users. The goal is to identify bugs, defects, and usability issues early in the development cycle. The environment is usually controlled, and the focus is on functionality and performance.

Beta testing, on the other hand, is conducted by a larger group of external users who are representative of the target audience. The goal is to gather feedback on the product's usability, reliability, and overall user experience in a real-world environment. Beta testers provide feedback on how the product performs under normal usage conditions, helping to identify issues that may not have been apparent during internal testing. Beta testing often occurs after alpha testing when the software is more stable.

16. What is the difference between functional and non-functional testing?

Functional testing verifies that each function of the software application operates in conformance with the requirement specification. It focuses on what the system does, by validating the actions and outputs. Examples include unit, integration, system, and acceptance testing.

Non-functional testing, on the other hand, checks aspects like performance, security, usability, and reliability. It assesses how the system works, focusing on the user experience and operational characteristics rather than specific features. Examples include performance, load, stress, security, and usability testing.

17. Can you give examples of functional testing?

Functional testing verifies that each function of a software application operates in conformance with the requirement specification. Examples include testing the login functionality of a website by entering valid and invalid credentials and verifying the system's response. Another example is testing a shopping cart's checkout process by adding items, applying discounts, and confirming the order details and payment processing work correctly.

Specifically, examples include:

- Unit Testing: Testing individual functions or modules.

- Integration Testing: Testing the interaction between different modules.

- System Testing: Testing the entire system as a whole.

- Acceptance Testing: Testing by end-users to validate requirements are met.

- Ensuring a calculator application correctly performs addition, subtraction, multiplication, and division.

- Validating that a search engine returns relevant results for given keywords.

18. Can you give examples of non-functional testing?

Non-functional testing focuses on aspects like performance, security, usability, and reliability rather than specific features. Examples include:

- Performance Testing: Checking response times, throughput, and stability under various load conditions (e.g., stress testing, load testing, endurance testing).

- Security Testing: Identifying vulnerabilities and ensuring data confidentiality, integrity, and availability (e.g., penetration testing, vulnerability scanning).

- Usability Testing: Evaluating how easy the system is to use and understand by real users. This could include A/B testing or user interviews.

- Reliability Testing: Assessing the system's ability to function without failure for a specified period (e.g., fault injection, recovery testing).

- Accessibility Testing: Verifying that the application is usable by people with disabilities, conforming to standards like WCAG.

- Scalability Testing: Determining the system's ability to handle increasing amounts of work or data.

19. What is performance testing? Why is it important?

Performance testing is evaluating the speed, stability, and scalability of a software application under various workloads. It ensures the system can handle expected user traffic, data volume, and transaction rates without unacceptable delays or errors.

It's important because it helps identify bottlenecks, prevent performance issues in production, ensure a positive user experience, and validate that the system meets defined performance requirements. This can save costs associated with downtime or customer dissatisfaction.
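As a very small sketch of the idea, response times can be measured and compared against a budget. Everything here is an assumption for illustration: `handle_request` stands in for a real operation, and the 50 ms budget is invented. Real performance tests would normally use a dedicated tool and a production-like environment.

```python
import statistics
import time

def handle_request(payload: int) -> int:
    """Hypothetical operation under test; stands in for a real request handler."""
    return sum(range(payload))

def measure_latencies(calls: int, payload: int) -> list[float]:
    """Time each call and return the latencies in milliseconds."""
    latencies = []
    for _ in range(calls):
        start = time.perf_counter()
        handle_request(payload)
        latencies.append((time.perf_counter() - start) * 1000)
    return latencies

latencies = measure_latencies(calls=200, payload=10_000)
p95 = statistics.quantiles(latencies, n=100)[94]  # 95th percentile
print(f"median: {statistics.median(latencies):.3f} ms, p95: {p95:.3f} ms")

# An assumed budget; a real threshold would come from the performance requirements.
BUDGET_MS = 50.0
print("within budget:", p95 <= BUDGET_MS)
```

Reporting a percentile alongside the median is a common choice because a few slow requests can hide behind a healthy average.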

20. What is security testing? Why is it important?

Security testing is a type of software testing that uncovers vulnerabilities, threats, and risks in a software application so they can be fixed before attackers exploit them. It helps determine whether the system protects data and maintains functionality as intended.

It is important because it helps to:

- Identify weaknesses before attackers can exploit them.

- Protect sensitive data.

- Ensure compliance with regulations.

- Maintain user trust and avoid reputational damage.

- Reduce the risk of financial losses due to security breaches.

21. What is usability testing? Why is it important?

Usability testing is a method of evaluating a product or service by testing it with representative users. During a test, participants try to complete typical tasks while observers watch, listen and take notes. The goal is to identify any usability problems, collect qualitative and quantitative data, and determine the participant's overall satisfaction with the product.

It's important because it helps ensure that a product is easy to use, efficient, and satisfying for its intended users. This can lead to increased user adoption, reduced support costs, improved customer satisfaction, and ultimately, a more successful product. Identifying usability issues early in the development process can save time and resources by preventing costly redesigns later on.

22. What is the role of a test plan?

A test plan serves as a blueprint for the testing process. It defines the scope, objectives, resources, and schedule of testing activities. Its primary role is to outline the strategy for verifying that a software product meets its requirements and is fit for purpose.

Specifically, the test plan typically covers: test objectives, scope of testing, testing approach, test environment, entry and exit criteria, resource allocation, and schedule. By documenting these aspects, the test plan helps to ensure a structured, consistent, and efficient testing effort, and it enables better communication and collaboration among stakeholders.

23. What are some challenges you might face as a software tester?

As a software tester, I anticipate facing several challenges. One key challenge is staying updated with the ever-evolving technologies, testing methodologies, and development practices. This requires continuous learning and adaptation. Another potential challenge includes working with incomplete or ambiguous requirements, which can make test case design and execution difficult. Communication and collaboration with developers, product owners, and other stakeholders can also present hurdles, particularly when resolving conflicting opinions or priorities, or when dealing with tight deadlines.

Other common challenges are maintaining a high level of objectivity and avoiding bias during testing, accurately reporting defects and providing constructive feedback, and ensuring sufficient test coverage within time and resource constraints. Prioritization of test cases, when faced with a large test suite and limited time, can be tough. Dealing with complex systems and identifying root causes of issues in such systems can be challenging. Finally, building and maintaining effective automated test suites is essential for regression testing and requires strong technical skills and careful planning.

24. How do you determine test coverage?

Test coverage is determined by measuring the extent to which the codebase has been executed by the tests. Several metrics exist to quantify this.

Common metrics include line coverage (percentage of lines executed), branch coverage (percentage of code branches taken), statement coverage (percentage of statements executed), and condition coverage (percentage of boolean sub-expressions evaluated to both true and false). Tools like JaCoCo (Java), Coverage.py (Python), or llvm-cov (C++) can automatically collect this data during test execution. The reports these tools generate highlight areas of the code that are not adequately tested, so new tests can be written to improve coverage and reduce risk.

25. What are some common testing tools?

Several testing tools are commonly used in software development. Some popular options include:

- Selenium: A widely-used tool for automating web browser testing. It supports multiple browsers and programming languages.

- JUnit: A unit testing framework for Java.

- pytest: A popular and versatile testing framework for Python.

- TestNG: A testing framework for Java, inspired by JUnit and NUnit but introducing new functionalities.

- Cypress: A modern front-end testing tool built for the web. It provides faster, easier and more reliable testing for anything that runs in a browser.

- JMeter: An Apache project that can be used as a load testing tool for analyzing and measuring the performance of web applications or a variety of services.

- Postman: A popular API testing tool used to test HTTP requests.

26. What is the difference between a test environment and a production environment?

A test environment is a replica of the production environment used for software testing before release. Its purpose is to identify bugs and ensure the application functions as expected without affecting live users. It often contains test data and may have different configurations or mock services.

Production, on the other hand, is the live environment where the application is deployed for end-users. It contains real user data and is designed for high availability, performance, and security. Changes to production are typically heavily controlled and monitored.

27. What is the difference between a test case and a test script?

A test case is a detailed set of steps, conditions, and inputs to verify a specific feature or functionality. It outlines what needs to be tested, including the expected outcome. Test cases are often written in a structured document or test management system.

On the other hand, a test script is the implementation of a test case. It's the actual code or automated instructions that execute the steps defined in the test case. Test scripts translate the 'what' into 'how' by using programming languages or automation tools to interact with the system under test and validate the results. For instance, if a test case says 'Verify login functionality,' a test script might use Selenium to automate the login process and assert that the user is successfully logged in.
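The distinction can be sketched in Python. The test case below is plain data describing what to verify, and the test script is the code that carries it out. The `login` function, the account, and the `TC-001` identifier are all hypothetical; a real script might drive a browser with Selenium instead.

```python
# The test case: a structured description of WHAT to verify.
login_test_case = {
    "id": "TC-001",  # hypothetical identifier
    "title": "Verify login with valid credentials",
    "preconditions": "User 'alice' exists",
    "input": {"username": "alice", "password": "s3cret"},
    "expected": "success",
}

def login(username: str, password: str) -> str:
    """Hypothetical system under test with a single hard-coded account."""
    accounts = {"alice": "s3cret"}
    return "success" if accounts.get(username) == password else "denied"

# The test script: executable code describing HOW the case is carried out.
def run_test_case(case: dict) -> bool:
    actual = login(**case["input"])
    return actual == case["expected"]

print(login_test_case["id"], "passed:", run_test_case(login_test_case))
```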

28. What are the key attributes of a good test case?

A good test case possesses several key attributes. Primarily, it should be clear and concise, describing the exact steps and expected outcome unambiguously. It must be repeatable, producing the same result every time it's executed, and independent of other test cases to avoid cascading failures. Furthermore, a good test case should be maintainable, easy to update as the system evolves. It should also be traceable back to requirements to ensure comprehensive coverage.

Crucially, a good test case must be complete, covering all relevant conditions, including valid and invalid inputs, boundary conditions, and error handling. Consider using techniques like equivalence partitioning and boundary value analysis to achieve completeness. Finally, it should be efficient, executing in a reasonable timeframe and not consuming excessive resources.

29. What is exploratory testing?

Exploratory testing is a software testing approach where test design and test execution occur simultaneously. Testers explore the software to learn about it, identify potential issues, and design and execute tests on the fly, rather than relying on pre-written test cases. It emphasizes the tester's skill, intuition, and experience to uncover unexpected defects and provides flexibility to adapt testing based on emerging information.

Exploratory testing is particularly useful when requirements are vague, incomplete, or changing rapidly, or when you need to quickly assess the quality of a system. It is also beneficial for finding subtle or unexpected issues that might be missed by scripted testing.

30. How do you prioritize which tests to run?

Prioritizing tests depends on the context, but generally I consider risk, impact, and frequency. I prioritize tests that cover critical functionality or frequently used features, as failures in these areas have a higher impact. Tests for recent code changes or bug fixes are also prioritized to ensure stability and prevent regressions. Finally, I weigh risk: I identify which areas of the system are most prone to failure and prioritize the tests that cover them.

Specifically I would prioritize tests in the following order:

- Smoke tests: Ensure basic functionality is working.

- Regression tests: Verify bug fixes and prevent regressions.

- Critical path tests: Focus on core business processes.

- High-risk area tests: Cover areas prone to failure.

- Exploratory tests: Uncover unexpected issues.

Test selection can also be automated via tools by tagging tests based on risk, priority, or affected components.
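A minimal sketch of tag-based ordering, assuming each test carries a single category tag. The `TaggedTest` class, the tag names, and the sample suite are hypothetical; real test runners usually offer their own tagging or marker mechanisms.

```python
from dataclasses import dataclass

@dataclass
class TaggedTest:
    name: str
    category: str  # one of the keys in CATEGORY_ORDER

# Lower number runs first; the order mirrors the list above.
CATEGORY_ORDER = {
    "smoke": 0,
    "regression": 1,
    "critical_path": 2,
    "high_risk": 3,
    "exploratory": 4,
}

def prioritize(tests: list[TaggedTest]) -> list[TaggedTest]:
    """Sort tests by category priority; sorted() is stable, so ties keep their order."""
    return sorted(tests, key=lambda test: CATEGORY_ORDER[test.category])

suite = [
    TaggedTest("checkout_total", "critical_path"),
    TaggedTest("app_starts", "smoke"),
    TaggedTest("fixed_bug_rounding", "regression"),
    TaggedTest("payment_gateway_timeout", "high_risk"),
]

for test in prioritize(suite):
    print(test.category, test.name)
```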

Intermediate Software Testing interview questions

1. Explain the difference between white box, black box, and grey box testing, and when would you choose each one?

White box testing involves testing the internal structure and code of a software application. Testers need knowledge of the code to design test cases, focusing on code coverage and logic paths. It's chosen when thorough testing of internal workings is critical, like in complex algorithms or safety-critical systems.

Black box testing, on the other hand, treats the application as a 'black box,' focusing on the inputs and outputs without knowledge of the internal implementation. Test cases are designed based on requirements and specifications. This is suitable when testing from the user's perspective or when the internal code is inaccessible or irrelevant to the testing goals. Grey box testing is a hybrid approach where testers have partial knowledge of the internal structure. This allows for more targeted testing based on architectural design, data flow diagrams, or database schemas. It's useful when some level of internal knowledge can improve testing effectiveness without the overhead of full white-box testing.

2. Describe a situation where you had to design test cases with incomplete or ambiguous requirements. What did you do?

In a previous role, I encountered a project where the requirements for a new reporting module were vaguely defined. To address this, I first prioritized clarifying the ambiguities by directly communicating with the business analysts and stakeholders. I asked targeted questions to understand the intended functionality, data sources, and expected outputs of the reports. When direct clarification wasn't immediately possible, I made informed assumptions based on my understanding of similar modules and industry best practices, documenting these assumptions clearly in the test case documentation.

Next, I designed test cases covering both positive and negative scenarios, as well as boundary conditions. Because the requirements were fluid, I created a flexible test suite that was easily adaptable. This included writing modular test cases that could be modified quickly as requirements evolved. I also focused on exploratory testing to uncover unexpected issues and used the results of exploratory sessions to refine my test cases and further clarify requirements. I continuously communicated with the development team and stakeholders about my progress, assumptions, and findings, ensuring that we were all aligned as the project progressed.

3. How do you handle conflicting priorities when testing multiple features or projects?

When faced with conflicting testing priorities, I first try to understand the business impact and urgency of each feature or project. I'd then communicate with the stakeholders, like product managers and developers, to understand their perspectives and the rationale behind the priorities. Based on the information gathered, I'd work with the team to re-evaluate and potentially re-prioritize tasks. This often involves negotiating timelines or scope, and clearly communicating any potential risks or delays associated with the adjusted plan.

If re-prioritization isn't possible, I'd focus on effective time management and resource allocation. This could involve breaking down large tasks into smaller, manageable chunks, leveraging automation where possible (e.g., automated tests), and collaborating closely with other testers to distribute the workload efficiently. Regularly communicating progress and any roadblocks to stakeholders ensures transparency and allows for timely adjustments if needed.

4. What are some common challenges you face when testing web services (APIs), and how do you overcome them?

Testing web services (APIs) presents several challenges. One common issue is dealing with data validation. Ensuring the API correctly handles various data types, boundary conditions, and invalid inputs requires thorough testing, which can be addressed by implementing comprehensive test suites with parameterized tests and fuzzing techniques. Another challenge is verifying authentication and authorization mechanisms; incorrect implementation can lead to security vulnerabilities. This can be overcome by using tools like Postman or specialized API security testing tools to simulate different user roles and permissions, validating that access controls are correctly enforced.

Other challenges include handling asynchronous requests, dealing with complex data formats (like nested JSON structures), and ensuring performance under load. Asynchronous behavior can be tested by mocking external services or by using message queues. For complex data formats, schema validation tools and libraries within test frameworks can assert the response structure. For performance, tools like JMeter or Gatling can simulate high-traffic scenarios and measure response times and throughput, which helps identify bottlenecks and guide optimization of the API.
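To illustrate the schema validation point, here is a small hand-rolled checker for a nested JSON response. It is a sketch under stated assumptions: `USER_SCHEMA`, the field names, and the canned response body are hypothetical, and a real project would more likely rely on an established schema validation library.

```python
import json

# Hypothetical expected shape: field name -> type, or a nested dict for objects.
USER_SCHEMA = {
    "id": int,
    "name": str,
    "address": {"city": str, "zip": str},
}

def schema_errors(data, schema, path="") -> list[str]:
    """Return a list of human-readable mismatches between data and schema."""
    errors = []
    for key, expected in schema.items():
        location = f"{path}.{key}" if path else key
        if not isinstance(data, dict) or key not in data:
            errors.append(f"missing field: {location}")
        elif isinstance(expected, dict):
            errors.extend(schema_errors(data[key], expected, location))
        elif not isinstance(data[key], expected):
            errors.append(f"wrong type for {location}")
    return errors

# A canned response body standing in for a real HTTP call.
response_body = '{"id": 7, "name": "Ada", "address": {"city": "London"}}'
print(schema_errors(json.loads(response_body), USER_SCHEMA))
```

For the canned body above, the checker reports the missing nested field `address.zip`, which a flat top-level assertion would not catch.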

5. Explain the difference between load testing, stress testing, and performance testing.

Load testing evaluates a system's behavior under expected conditions. It simulates the anticipated number of concurrent users and transactions to determine response times, throughput, and resource utilization. The goal is to identify bottlenecks and ensure the system can handle normal usage.

Stress testing, on the other hand, pushes the system beyond its limits by simulating extreme conditions, such as a sudden surge in user traffic or a prolonged period of high demand. The aim is to find the breaking point of the system, identify vulnerabilities, and assess its ability to recover from failures. Performance testing is a broader term that encompasses both load and stress testing, along with other tests like endurance testing and scalability testing, to evaluate various aspects of a system's performance.
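The difference between the two can be sketched with a toy service. This is an assumption-heavy illustration: the service, its capacity of 5 concurrent requests, and the user counts are all invented, and threads on one machine are only a stand-in for what tools like JMeter do at scale.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor

CAPACITY = 5  # assumed number of requests the hypothetical service handles at once
_slots = threading.BoundedSemaphore(CAPACITY)

def handle_request(_: int) -> str:
    """Hypothetical service: rejects a request when all slots are busy."""
    if not _slots.acquire(blocking=False):
        return "rejected"
    try:
        time.sleep(0.01)  # simulated work
        return "ok"
    finally:
        _slots.release()

def run_with_users(users: int) -> dict:
    """Fire `users` concurrent requests and count the outcomes."""
    with ThreadPoolExecutor(max_workers=users) as pool:
        results = list(pool.map(handle_request, range(users)))
    return {"ok": results.count("ok"), "rejected": results.count("rejected")}

# Load test: the expected level of traffic, at or below capacity.
print("load  :", run_with_users(CAPACITY))
# Stress test: far beyond capacity, to observe how the service degrades.
print("stress:", run_with_users(CAPACITY * 10))
```

At the load level no request should be rejected, while the stress run typically shows a share of rejections. The exact split depends on thread timing, so a stress test looks at how the system degrades and recovers rather than at a single fixed number.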

6. How do you ensure test coverage in a complex system with many interconnected components?

Ensuring test coverage in a complex system involves a multi-faceted approach. First, identify critical components and their interactions through techniques like dependency analysis and risk assessment. Then, prioritize testing efforts based on risk and impact.

Employ a combination of testing types: unit tests for individual components, integration tests for interactions between components, and end-to-end tests to validate the entire system's functionality. Utilize code coverage tools to measure the percentage of code executed by tests, but remember that high code coverage doesn't necessarily mean comprehensive testing. Also, incorporate techniques like property-based testing to generate various scenarios and data inputs to check edge cases. Consider using contract testing to ensure services adhere to agreed-upon contracts. Finally, implement continuous integration and continuous delivery (CI/CD) pipelines that automatically run tests upon code changes to catch regressions early.
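Property-based testing can be approximated with the standard library alone. The sketch below generates random inputs and checks a round-trip property. The run-length encoding functions are hypothetical examples, and dedicated libraries such as Hypothesis add smarter input generation and shrinking of failing cases.

```python
import random

def run_length_encode(text: str) -> list[tuple[str, int]]:
    """Hypothetical function under test."""
    encoded = []
    for char in text:
        if encoded and encoded[-1][0] == char:
            encoded[-1] = (char, encoded[-1][1] + 1)
        else:
            encoded.append((char, 1))
    return encoded

def run_length_decode(pairs: list[tuple[str, int]]) -> str:
    """Inverse of run_length_encode."""
    return "".join(char * count for char, count in pairs)

def check_round_trip(trials: int = 500, seed: int = 0) -> None:
    """Property: decoding an encoded string returns the original string."""
    rng = random.Random(seed)  # fixed seed keeps failures reproducible
    for _ in range(trials):
        length = rng.randint(0, 20)
        text = "".join(rng.choice("ab") for _ in range(length))
        assert run_length_decode(run_length_encode(text)) == text, text

check_round_trip()
print("round-trip property held for all generated inputs")
```

A two-letter alphabet is used on purpose: it makes repeated characters frequent, so the generated inputs hit the run-merging logic far more often than fully random text would.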

7. Describe your experience with test automation. What tools have you used, and what are the benefits and drawbacks of automation?

I have experience designing, implementing, and maintaining automated tests for various software applications. My experience includes automating unit, integration, and end-to-end tests. I've worked with tools like Selenium, Cypress, and Jest. I've also used CI/CD tools like Jenkins and GitHub Actions to integrate automated tests into the development pipeline. For API testing, I've utilized tools like Postman and libraries like Supertest (Node.js). The choice of tool usually depends on the project's needs and technology stack.

Benefits of test automation include increased test coverage, faster feedback loops, and reduced manual effort, ultimately leading to higher-quality software. Drawbacks include the initial investment in setting up the automation framework, the need for ongoing maintenance as the application evolves, and the potential for false positives if tests are not designed correctly. Automated tests can also be brittle, so they need careful design to avoid failing for reasons unrelated to real defects.

8. What is a test strategy, and how does it differ from a test plan?

A test strategy is a high-level document outlining the overall approach to testing for a project or product. It defines the what and why of testing, including the types of tests to be performed, the testing levels, and the overall objectives and scope. It's a guiding document that ensures testing aligns with business goals and overall project objectives.

A test plan, on the other hand, is a more detailed document that specifies how the testing will be carried out. It includes specifics like test schedules, resources, test environment setup, test data requirements, entry/exit criteria, and specific test cases or scenarios to be executed. The test plan operationalizes the test strategy, providing a concrete roadmap for the testing team.

9. Explain the concept of equivalence partitioning and boundary value analysis, and provide examples of how you'd use them.

Equivalence partitioning is a black-box testing technique that divides the input data into partitions (or classes) such that all members of a given partition are likely to be treated the same by the software. We test one value from each partition, assuming that if one value in the partition works, all others will also work. This reduces the number of test cases needed. For instance, if a field accepts ages between 18 and 65, we create three partitions: less than 18, 18-65, and greater than 65. We then test one value from each partition like 17, 30, and 70.

Boundary value analysis focuses on testing the values at the edges of the input domain. It's based on the idea that errors often occur at the boundaries of input ranges. Using the same age example, we'd test the boundary values 17, 18, 65, and 66: just below the minimum, the minimum, the maximum, and just above the maximum. Some variants also add the values just inside each boundary (19 and 64) and a nominal mid-range value. As another example, suppose a method calculateDiscount(int quantity) applies a different discount for quantities of 1-10, 11-20, and 21-30. Boundary value analysis would test it with 0, 1, 10, 11, 20, 21, 30, and 31. These values are more likely to expose errors, such as off-by-one mistakes, than randomly chosen values.
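The age example can be sketched as follows. `is_valid_age` and `boundary_values` are hypothetical helpers written for illustration, not part of any library.

```python
def is_valid_age(age: int) -> bool:
    """Hypothetical validator for the 18-65 age field from the example."""
    return 18 <= age <= 65


def boundary_values(low: int, high: int) -> list[int]:
    """Values just outside and exactly on each edge of an inclusive range."""
    return [low - 1, low, high, high + 1]


# Equivalence partitioning: one representative per partition.
partition_cases = {17: False, 30: True, 70: False}

# Boundary value analysis: the edges of the valid partition.
boundary_cases = dict(zip(boundary_values(18, 65), [False, True, True, False]))


def test_age_field() -> None:
    for age, expected in {**partition_cases, **boundary_cases}.items():
        assert is_valid_age(age) is expected, f"age={age}"
```

If the validator had been written with `18 < age`, the partition representatives would still pass, while the boundary case for 18 would fail. That is the kind of off-by-one error boundary values are meant to catch.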

10. How do you approach testing a feature that involves third-party integrations or external dependencies?

When testing features with third-party integrations, I focus on isolating and mocking the external dependencies to ensure reliable and predictable tests. I use tools like mock libraries or service virtualization to simulate the behavior of the third-party service. This allows me to test various scenarios, including success, failure, and edge cases, without actually interacting with the real service, preventing test flakiness and dependency on external systems.

My approach involves defining clear contracts or interfaces for the integration, testing the data mapping and transformation logic, and verifying the error handling mechanisms. Specifically, I would use libraries like requests-mock in Python or Mockito in Java to stub the external API calls. Furthermore, I would pay special attention to testing rate limiting, authentication, and data validation to ensure the integration behaves as expected under different conditions.
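A minimal sketch of this approach with Python's built-in `unittest.mock` is shown below. The `charge_order` function, the `PaymentGatewayError` exception, and the gateway's `charge` method are hypothetical names invented for the example; the real client interface would come from the third-party SDK in use.

```python
from unittest.mock import Mock


class PaymentGatewayError(Exception):
    """Hypothetical error raised by the third-party client."""


def charge_order(gateway, order_id: str, amount_cents: int) -> str:
    """Code under test: maps gateway outcomes to our own order states."""
    try:
        response = gateway.charge(order_id=order_id, amount=amount_cents)
    except PaymentGatewayError:
        return "retry_later"
    return "paid" if response["status"] == "approved" else "declined"


def test_success_and_decline() -> None:
    gateway = Mock()
    gateway.charge.return_value = {"status": "approved"}
    assert charge_order(gateway, "o-1", 500) == "paid"
    gateway.charge.assert_called_once_with(order_id="o-1", amount=500)

    gateway.charge.return_value = {"status": "rejected"}
    assert charge_order(gateway, "o-2", 500) == "declined"


def test_gateway_outage() -> None:
    gateway = Mock()
    gateway.charge.side_effect = PaymentGatewayError("timeout")
    assert charge_order(gateway, "o-3", 500) == "retry_later"
```

Because the gateway is injected, the failure path can be triggered on demand, something that is hard to arrange reliably against a real external service.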

11. What are some key metrics you use to track the progress and effectiveness of your testing efforts?

Key metrics for tracking testing progress and effectiveness include: Test Coverage (percentage of code or requirements covered by tests), Test Execution Rate (percentage of planned tests executed), Test Pass/Fail Rate (percentage of executed tests passing versus failing), Defect Density (number of defects found per unit of code size, such as per thousand lines of code), and Defect Removal Efficiency (defects found during testing as a percentage of all defects found, including those that escape to production).

I also track Test Cycle Time (time taken to complete a testing cycle), as well as Mean Time To Detect (MTTD) and Mean Time To Resolve (MTTR) for defects. These metrics help identify bottlenecks, improve test processes, and ultimately deliver higher-quality software faster. Tools often used to collect and calculate them include Jira, TestRail, and custom reporting scripts written in languages such as Python.
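A custom reporting script for a few of these metrics might look like the sketch below. The formulas follow common definitions (defect density per thousand lines of code, defect removal efficiency as the share of all known defects caught before release), and the figures are made up for illustration.

```python
def pass_rate(passed: int, executed: int) -> float:
    """Share of executed tests that passed, as a percentage."""
    return 100.0 * passed / executed


def defect_density(defects: int, kloc: float) -> float:
    """Defects per thousand lines of code."""
    return defects / kloc


def defect_removal_efficiency(found_in_testing: int, found_in_production: int) -> float:
    """Share of all known defects that were caught before release."""
    total = found_in_testing + found_in_production
    return 100.0 * found_in_testing / total


# Hypothetical figures for one release.
print(pass_rate(passed=188, executed=200))                   # 94.0
print(defect_density(defects=30, kloc=12.0))                 # 2.5
print(defect_removal_efficiency(found_in_testing=45,
                                found_in_production=5))      # 90.0
```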

12. Describe a time when you found a critical bug in production. What were the steps you took to address it, and what did you learn from the experience?

During a high-traffic sales event, our monitoring system alerted us to a sudden spike in failed order placements. Investigating the logs, I quickly identified a race condition in the inventory update service that caused double-selling of limited-stock items. This was critical as it directly impacted revenue and customer satisfaction.

Immediately, I alerted the on-call team and we implemented a temporary fix by disabling the problematic feature, preventing further order failures. Simultaneously, I worked on a permanent solution, introducing a distributed lock to serialize inventory updates. After thorough testing in a staging environment, the fix was deployed to production. We also issued refunds to affected customers and implemented improved monitoring and alerting to catch similar issues earlier. The key learning was the importance of rigorous concurrency testing and proactive monitoring in distributed systems.
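The kind of concurrency test that would have caught this can be sketched in a single process. Here `threading.Lock` stands in for the distributed lock mentioned above, and the `Inventory` class and `simulate_sale` helper are hypothetical; a real distributed system would need a lock or atomic update that works across service instances.

```python
import threading


class Inventory:
    """Hypothetical in-memory stock counter for one limited-stock item."""

    def __init__(self, stock: int) -> None:
        self.stock = stock
        self.sold = 0
        self._lock = threading.Lock()

    def reserve(self) -> bool:
        # The check and the decrement must happen as one atomic step;
        # without the lock, two threads could both see stock == 1.
        with self._lock:
            if self.stock > 0:
                self.stock -= 1
                self.sold += 1
                return True
            return False


def simulate_sale(stock: int, buyers: int) -> Inventory:
    """Let many buyers try to reserve the same item concurrently."""
    inventory = Inventory(stock)
    threads = [threading.Thread(target=inventory.reserve) for _ in range(buyers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return inventory


result = simulate_sale(stock=10, buyers=200)
assert result.sold == 10 and result.stock == 0  # never oversold
```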

13. How do you stay up-to-date with the latest trends and technologies in software testing?

I stay up-to-date through a variety of channels. I regularly read industry blogs and publications like Martin Fowler's blog and the Ministry of Testing. I also follow key influencers and thought leaders on platforms like LinkedIn and Twitter. Subscribing to relevant newsletters, such as those from testing tool vendors, helps me stay informed about new product features and testing methodologies.

Furthermore, I actively participate in online communities and forums like Stack Overflow and Reddit's r/softwaretesting to learn from others' experiences and discuss current trends. I also attend webinars, workshops, and conferences when possible. Finally, practical application by experimenting with new tools and techniques in personal projects or at work is crucial. For example, I recently explored using pytest with the requests library for API testing and documented my findings.

14. What is exploratory testing, and how does it fit into your overall testing approach?

Exploratory testing is a software testing approach where test design and test execution happen simultaneously. Instead of relying on pre-written test cases, testers dynamically design and execute tests based on their understanding of the system, past test results, and potential areas of risk. It emphasizes learning about the application while testing, fostering creativity and adaptability.

In my overall testing approach, exploratory testing complements more structured methods like requirements-based testing and regression testing. I use it to uncover unexpected issues and gain a deeper understanding of the application's behavior, especially in areas not covered by existing test cases. It's most effective when combined with other testing strategies to achieve comprehensive test coverage. Often it is used to generate new test cases that may be automated for future regression testing.

15. Explain the concept of test data management, and why it's important.

Test data management (TDM) encompasses the strategies and practices used to plan, design, generate, acquire, store, control, and maintain the data used for software testing. It's important because effective TDM directly impacts the quality, speed, and cost of testing. Without it, testing can be inaccurate, time-consuming, and expensive. For example, testing with unmasked production data might expose sensitive information, and without consistent data, tests aren't repeatable.

TDM ensures that the right data is available in the right format at the right time for testing. This can involve creating synthetic data, masking production data, or using data virtualization techniques. Benefits include improved test coverage, reduced risk of data breaches, and faster test execution cycles.
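Two of these techniques, masking and synthetic data generation, can be sketched with the standard library. `mask_email` and `synthetic_customers` are hypothetical helpers; a production masking pipeline would more likely use a keyed hash or a dedicated tool, since a plain hash of a guessable value can be reversed by brute force.

```python
import hashlib
import random


def mask_email(email: str) -> str:
    """Replace a real address with a stable pseudonym on a reserved domain."""
    digest = hashlib.sha256(email.lower().encode("utf-8")).hexdigest()[:12]
    return f"user_{digest}@example.com"


def synthetic_customers(count: int, seed: int = 42) -> list[dict]:
    """Generate repeatable fake records; the seed makes test runs consistent."""
    rng = random.Random(seed)
    return [
        {"id": i, "age": rng.randint(18, 90), "email": f"customer{i}@example.com"}
        for i in range(1, count + 1)
    ]
```

Deterministic masking keeps relationships between tables intact (the same input always maps to the same pseudonym), and the fixed seed means every run sees identical synthetic data, which supports repeatable tests.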

16. How do you handle testing in an Agile development environment?

In an Agile development environment, testing is a continuous and collaborative activity integrated throughout the entire software development lifecycle. We emphasize testing early and often, shifting testing left. This means involving testers from the initial stages of planning and design to identify potential issues and provide feedback on requirements. We adopt test-driven development (TDD) and behavior-driven development (BDD) where appropriate.

Our testing approach is iterative, with testing activities occurring in each sprint. We automate tests (unit, integration, end-to-end) to ensure rapid feedback and regression testing. Collaboration between developers, testers, and business stakeholders is crucial for defining acceptance criteria and ensuring that the delivered software meets the user's needs. We also value exploratory testing to uncover unexpected issues.

17. What are some best practices for writing clear and concise bug reports?

- Use a clear and descriptive title: Summarize the issue concisely. Bad: "Something is broken." Good: "Login button unresponsive on Chrome v115".

- Provide steps to reproduce: List the exact steps needed to trigger the bug. Numbered lists are helpful.

- Describe the expected and actual results: Clearly state what should happen and what actually happened.

- Include relevant environment information: Operating system, browser version, device, etc. Use a code block to show configuration files or commands used.

- Attach screenshots or videos: Visual evidence can be invaluable.

- Specify severity/priority: Indicate the impact of the bug.

- Be concise and avoid irrelevant details: Stick to the facts.

- Use a consistent format: This helps with readability and organization.

18. Describe your experience with mobile testing. What are some unique challenges when testing mobile applications?

I have experience testing mobile applications on both iOS and Android platforms. My experience includes creating and executing test plans, writing test cases, performing functional, regression, performance, and usability testing. I've used tools like Appium and Espresso for automated testing, and Charles Proxy for network traffic analysis.

Mobile testing presents unique challenges. Fragmented OS versions and device types enlarge the testing matrix and make it difficult to ensure consistency. Network conditions (2G/3G/4G/5G/WiFi) are highly variable, impacting performance and stability. Interruptions (calls, SMS, notifications) can disrupt application flow. Battery consumption and resource management are also critical and require special attention.

19. Explain the difference between unit testing, integration testing, and system testing.

Unit testing focuses on verifying individual components or functions of a software application in isolation. The goal is to ensure that each unit of code works as expected. Integration testing, on the other hand, tests the interaction between different units or modules that have already been unit tested. It verifies that these units work together correctly when combined.

System testing is the process of testing a completely integrated system to verify that it meets its specified requirements. It involves testing the entire system from end to end, simulating real-world scenarios, and validating that all components work together as expected and that the system fulfills its overall purpose.

20. How do you prioritize test cases based on risk and impact?

Prioritizing test cases based on risk and impact involves identifying areas most likely to fail (risk) and those failures causing the most significant problems (impact). Risk is typically assessed by considering the probability of failure and the potential damage it could cause. Impact considers factors such as business criticality, data integrity, and user experience. High-risk, high-impact test cases get the highest priority. For example, tests covering core functionalities like login or payment processing should be prioritized because failures there significantly impact the entire system.

Specifically, I would create a risk assessment matrix. This matrix would have 'likelihood' on one axis and 'impact' on the other. Examples of likelihood criteria include 'very likely', 'likely', 'possible', 'unlikely', 'very unlikely'. Impact criteria could be 'critical', 'major', 'moderate', 'minor', 'negligible'. Each test case is then evaluated and placed within the matrix, enabling clear prioritization from highest to lowest risk. Regression tests covering recently changed code would also be prioritized highly, because recent changes increase the risk of introducing bugs.
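One simple way to turn such a matrix into an ordering is to score each cell as likelihood times impact. The numeric weights and the sample test cases below are assumptions for illustration; teams often tune the scale or use a lookup table instead of a product.

```python
LIKELIHOOD = {"very unlikely": 1, "unlikely": 2, "possible": 3, "likely": 4, "very likely": 5}
IMPACT = {"negligible": 1, "minor": 2, "moderate": 3, "major": 4, "critical": 5}


def risk_score(likelihood: str, impact: str) -> int:
    """Score a cell of the matrix as likelihood x impact (1 to 25)."""
    return LIKELIHOOD[likelihood] * IMPACT[impact]


# Hypothetical test cases with their assessed likelihood and impact.
test_cases = [
    ("profile avatar upload", "possible", "minor"),
    ("payment processing", "likely", "critical"),
    ("login", "possible", "critical"),
    ("footer links", "unlikely", "negligible"),
]

prioritized = sorted(test_cases, key=lambda tc: risk_score(tc[1], tc[2]), reverse=True)
for name, likelihood, impact in prioritized:
    print(f"{risk_score(likelihood, impact):>2}  {name}")
```

With these inputs, payment processing (score 20) and login (15) come out ahead of avatar upload (6) and footer links (2).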

21. What are some common anti-patterns in software testing, and how can they be avoided?

Common anti-patterns in software testing include:

- Ice Cream Cone: Most testing effort is concentrated on UI testing, leaving underlying layers untested. Avoid it by shifting left and following a testing pyramid with more unit and integration tests.

- Mushroom Management: Developers are kept in the dark about testing progress. Avoid it by promoting transparency and collaboration through shared dashboards and regular communication.

- Test Isolation Neglect: Tests that are not isolated from one another or from external dependencies can be flaky. Keep them isolated using mocks and stubs.

- Ignoring Edge Cases: This leads to bugs in production. Avoid it by using boundary value analysis and equivalence partitioning when designing tests.

22. Describe a time you had to advocate for better testing practices within your team or organization.

Early in my career, I joined a team that relied heavily on manual testing and lacked automated test coverage for critical components. Recognizing the increasing risk of regressions and the time-consuming nature of manual efforts, I advocated for introducing automated unit and integration tests. I started by demonstrating the value through a proof of concept, writing unit tests for a core module that was frequently modified. I presented the results, highlighting the increased confidence in code changes and the reduced time spent on manual regression testing.

To overcome resistance, I also organized a workshop to teach team members the basics of the testing framework we were using, which helped to get buy-in. Over time, we gradually increased test coverage and shifted towards a more test-driven development approach. I also created a shared library of testing utilities for our team.

23. How would you test a feature that involves complex user interactions or workflows?

For complex user interactions, I'd focus on a mix of testing types. First, I'd break down the workflow into smaller, testable units and use unit tests to verify the logic of individual components. Then, I'd write integration tests to ensure that these components work correctly together. Finally, I'd employ end-to-end tests, simulating real user scenarios, to validate the entire workflow from start to finish. I would also take a data-driven approach, running each test against multiple sets of input data.

Additionally, I'd use exploratory testing to uncover unexpected issues or edge cases, and consider user acceptance testing (UAT) to obtain feedback from real users, especially on usability or user experience aspects. I may also automate the workflow so that it runs against a different input dataset for each interaction.
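A data-driven test can be as simple as a table of inputs and expected results fed through one test body. The `checkout_total` function and the coupon code below are hypothetical; with pytest, the same table would typically be passed to `pytest.mark.parametrize`.

```python
from typing import Optional


def checkout_total(unit_price_cents: int, quantity: int, coupon: Optional[str]) -> int:
    """Hypothetical workflow step: price a cart, applying an optional coupon."""
    if quantity <= 0:
        raise ValueError("quantity must be positive")
    total = unit_price_cents * quantity
    if coupon == "SAVE10":
        total -= total // 10
    return total


# Each row is one input dataset: (unit price, quantity, coupon, expected total).
DATASETS = [
    (1000, 1, None, 1000),
    (1000, 3, None, 3000),
    (1000, 3, "SAVE10", 2700),
    (999, 1, "SAVE10", 900),
    (1000, 2, "UNKNOWN", 2000),
]


def test_checkout_datasets() -> None:
    for price, qty, coupon, expected in DATASETS:
        assert checkout_total(price, qty, coupon) == expected, (price, qty, coupon)
```

Adding a scenario then means adding a row rather than writing a new test, which keeps coverage of input combinations cheap to extend.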

24. Explain the concept of mutation testing.

Mutation testing is a type of software testing where you introduce small, artificial errors (mutations) into the source code of a program. Each mutated version of the code is called a mutant. The goal is to determine if your existing tests can detect these mutations. If your tests fail on a mutant, it means your tests are effective at catching that type of error.

If your tests don't fail on a mutant, it indicates that either the mutation is equivalent to the original code (meaning it doesn't change the behavior), or, more importantly, that your tests are insufficient and need to be improved to cover that scenario. Common mutations include changing arithmetic operators (+ to -), relational operators (> to <), or negating conditions (if (x) to if (!x)). The mutation score is the percentage of mutants killed (those for which at least one test fails) out of all mutants generated, and it reflects the quality of the test suite.
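The idea can be demonstrated by hand on a tiny function. In this sketch the "mutants" are produced by swapping the comparison operator in an `age >= 18` check; all names are hypothetical, and real mutation testing tools generate and run mutants automatically against the actual source code.

```python
import operator
from typing import Callable


def make_is_adult(compare: Callable[[int, int], bool]) -> Callable[[int], bool]:
    """Build `age >= 18` with a swappable operator so it can be mutated."""
    return lambda age: compare(age, 18)


def suite_passes(is_adult: Callable[[int], bool], cases: dict[int, bool]) -> bool:
    """Return True if every test case passes against this implementation."""
    return all(is_adult(age) is expected for age, expected in cases.items())


def mutation_score(cases: dict[int, bool]) -> float:
    """Percentage of mutants for which at least one test fails."""
    mutants = [operator.gt, operator.le, operator.lt, operator.eq]
    killed = sum(not suite_passes(make_is_adult(m), cases) for m in mutants)
    return 100.0 * killed / len(mutants)


original = make_is_adult(operator.ge)
weak_suite = {30: True, 5: False}               # no boundary value
strong_suite = {30: True, 5: False, 18: True}   # adds the boundary

assert suite_passes(original, weak_suite) and suite_passes(original, strong_suite)
print(mutation_score(weak_suite))    # 75.0
print(mutation_score(strong_suite))  # 100.0
```

The weak suite lets the `>` mutant survive because no test exercises the value 18; adding that one boundary case kills it.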

25. How do you approach testing for accessibility (e.g., WCAG compliance)?

I approach accessibility testing by first understanding the relevant guidelines, such as WCAG (Web Content Accessibility Guidelines). Then, I employ a combination of automated and manual testing techniques. Automated tools like WAVE, axe DevTools, and Lighthouse can quickly identify common issues like missing alt text, poor color contrast, and improper heading structures.

For manual testing, I use screen readers (NVDA, VoiceOver), keyboard-only navigation, and color contrast analyzers to simulate the experience of users with disabilities. I'll also validate form labels, ARIA attributes, and ensure proper focus order. It's important to involve users with disabilities in the testing process to get direct feedback and identify issues that automated and manual tests might miss.
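As an example of what a color contrast analyzer computes, the sketch below implements the contrast ratio formula from the WCAG 2.x definitions of relative luminance and contrast ratio. The function names are my own, and the 4.5:1 threshold is the level AA requirement for normal-size text.

```python
def _linear(channel: int) -> float:
    """Convert an 8-bit sRGB channel to linear light, per the WCAG definition."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: tuple[int, int, int]) -> float:
    """Weighted sum of the linearized red, green, and blue channels."""
    r, g, b = (_linear(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(foreground: tuple[int, int, int], background: tuple[int, int, int]) -> float:
    """WCAG contrast ratio, from 1.0 (identical) to 21.0 (black on white)."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa_normal_text(foreground, background) -> bool:
    """WCAG 2.x level AA asks for at least 4.5:1 for normal-size text."""
    return contrast_ratio(foreground, background) >= 4.5
```

A check like this can run in an automated suite against a design system's color tokens, leaving manual effort for things tools cannot judge, such as whether the focus order makes sense.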

26. What strategies would you use to test a large enterprise application with many modules?

Testing a large enterprise application requires a strategic approach. I'd prioritize risk-based testing, focusing on modules with the highest impact and likelihood of failure. This involves identifying critical functionalities and integration points. A combination of testing types is necessary, including unit tests for individual components, integration tests for module interactions, system tests to validate the end-to-end functionality, and user acceptance testing (UAT) to ensure it meets business requirements. Performance testing (load and stress) is also crucial to ensure scalability.

Furthermore, a phased approach can be effective. This might involve testing in stages, such as core modules first, followed by less critical components. Test automation is essential for regression testing, especially after code changes. Utilize a test management tool for tracking test cases, results, and defects. Effective communication and collaboration between development, testing, and business teams are vital for a successful testing process. Consider using mocking frameworks like Mockito (Java) to isolate components for unit testing.

Advanced Software Testing interview questions

1. How would you design a testing strategy for a highly scalable microservices architecture?

A testing strategy for a highly scalable microservices architecture should employ a pyramid approach, prioritizing automated tests. Start with unit tests to validate individual microservice components in isolation. Then, implement integration tests to verify interactions between services, focusing on contract testing to ensure APIs adhere to defined specifications. For scalability, load and performance tests are crucial, simulating high user traffic to identify bottlenecks. Finally, end-to-end tests simulate real user scenarios across multiple services.

Monitoring and logging are also key. Incorporate chaos engineering to inject failures and test resilience. Automate deployment and testing using CI/CD pipelines to ensure faster feedback. Shift testing left by engaging development teams in testing activities early in the SDLC.

2. Explain the difference between chaos engineering and traditional testing methods. Where can chaos engineering be applied?

Chaos engineering and traditional testing differ significantly in their approach. Traditional testing aims to verify expected behavior by following predefined test cases and validating outputs against known inputs. It focuses on finding bugs under controlled conditions. Chaos engineering, on the other hand, proactively introduces real-world failures and unexpected conditions into a system to identify weaknesses and improve resilience. It’s about breaking things on purpose to learn how they react.

Chaos engineering is best applied in complex, distributed systems, such as microservices architectures or cloud-based applications, where emergent behavior and unexpected interactions are common. It's particularly valuable for testing failure recovery mechanisms, monitoring systems, and infrastructure resilience. While you still need traditional testing for functional verification, chaos engineering augments it by revealing vulnerabilities that standard tests might miss.
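A very small-scale version of a chaos experiment can be sketched in code: inject failures into a dependency and check that the surrounding resilience logic keeps the system answering. `FlakyDependency`, `price_with_fallback`, and the failure rate are hypothetical; real chaos experiments usually inject faults at the infrastructure level in a controlled production-like environment.

```python
import random
from typing import Optional


class FlakyDependency:
    """Hypothetical downstream service wrapped with injected failures."""

    def __init__(self, failure_rate: float, seed: int = 7) -> None:
        self.failure_rate = failure_rate
        self._rng = random.Random(seed)  # seeded so the experiment is repeatable

    def fetch_price(self, sku: str) -> int:
        if self._rng.random() < self.failure_rate:
            raise ConnectionError("injected fault")
        return 1999


def price_with_fallback(service: FlakyDependency, sku: str, retries: int = 2,
                        cached_price: Optional[int] = None) -> Optional[int]:
    """Resilience logic under test: retry, then fall back to a cached value."""
    for _ in range(retries + 1):
        try:
            return service.fetch_price(sku)
        except ConnectionError:
            continue
    return cached_price


def run_experiment(failure_rate: float, calls: int = 1000) -> float:
    """Return the fraction of calls that still produced a price."""
    service = FlakyDependency(failure_rate)
    answered = sum(
        price_with_fallback(service, "sku-1", cached_price=1899) is not None
        for _ in range(calls)
    )
    return answered / calls
```

The hypothesis being tested is a steady-state one: even when half of the downstream calls fail, every request should still receive a price, either fresh or cached.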

3. Describe your experience with performance testing distributed systems. What are the key challenges?

My experience with performance testing distributed systems involves using tools like JMeter, Gatling, and Locust to simulate user load and analyze system behavior under stress. I've worked on testing microservices architectures, message queues (like Kafka), and distributed databases. A typical workflow includes defining performance goals (latency, throughput), creating realistic test scenarios, executing tests, monitoring key metrics (CPU, memory, network I/O), and identifying bottlenecks.

Key challenges include:

- Complexity: Distributed systems have many moving parts, making it difficult to pinpoint the root cause of performance issues.

- Environment setup: Replicating a production-like environment for testing can be costly and complex.

- Data consistency: Ensuring data integrity across distributed nodes during high load is crucial.

- Monitoring: Collecting and analyzing metrics from various components requires robust monitoring infrastructure.

- Coordination: Coordinating tests and analyzing results across multiple teams and systems can be challenging.

- Network latency: Network issues become more pronounced when calls cross many services and nodes.

- Race conditions: Some race conditions only surface under high load and may not be apparent in low-load functional testing, so they need to be looked for explicitly.

4. How do you approach testing AI/ML-powered applications, focusing on bias and fairness?

Testing AI/ML applications for bias and fairness requires a multifaceted approach. First, data bias is a primary concern. We need to rigorously examine the training data for skewed representation, ensuring diverse and representative datasets are used. Metrics like disparate impact and statistical parity can help quantify bias in outcomes. Second, model bias should be addressed by trying different model architectures, regularization techniques, and fairness-aware algorithms designed to mitigate bias during training. Finally, during evaluation, use diverse test datasets and evaluate performance across different demographic groups, measuring relevant fairness metrics to identify and address potential biases in the model's predictions. Techniques such as A/B testing can then be used to compare how candidate models behave for different demographic groups. Document findings and iterate on the model and data.
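The two metrics named above can be computed directly from predictions grouped by a protected attribute. The sketch below uses made-up predictions and hypothetical function names; statistical parity difference is the gap in positive-outcome rates between groups, and disparate impact is their ratio (a value below roughly 0.8 is often treated as a warning sign, following the "four-fifths" rule of thumb).

```python
from collections import defaultdict


def selection_rates(records: list[tuple[str, int]]) -> dict[str, float]:
    """Positive-outcome rate per group; each record is (group, prediction 0/1)."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for group, prediction in records:
        totals[group] += 1
        positives[group] += prediction
    return {g: positives[g] / totals[g] for g in totals}


def statistical_parity_difference(rates: dict, unprivileged: str, privileged: str) -> float:
    """Difference in positive-outcome rates; 0 means parity."""
    return rates[unprivileged] - rates[privileged]


def disparate_impact(rates: dict, unprivileged: str, privileged: str) -> float:
    """Ratio of positive-outcome rates; 1 means parity."""
    return rates[unprivileged] / rates[privileged]


# Hypothetical model predictions: group A approved 6 of 10, group B 3 of 10.
predictions = [("A", 1)] * 6 + [("A", 0)] * 4 + [("B", 1)] * 3 + [("B", 0)] * 7
rates = selection_rates(predictions)
print(rates)                                                      # {'A': 0.6, 'B': 0.3}
print(round(disparate_impact(rates, "B", "A"), 2))                # 0.5
print(round(statistical_parity_difference(rates, "B", "A"), 2))   # -0.3
```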

5. Can you explain the challenges of testing in a serverless environment and how you would address them?

Testing in a serverless environment presents unique challenges due to its distributed and event-driven nature, and traditional testing methods often fall short. Key challenges include:

- Statelessness: Serverless functions are often stateless, making it difficult to track state and debug issues.

- Event-Driven Architecture: Testing event flows and ensuring proper triggering and handling of events can be complex.

- Integration Testing: Validating interactions between different serverless functions and services is crucial but challenging to set up.

- Cold Starts: The latency introduced by cold starts can impact performance and requires specific testing.

- Limited Observability: Monitoring and debugging can be difficult due to the lack of traditional server logs and metrics.

To address these challenges, I'd employ strategies like:

- Unit testing: Rigorously testing individual functions in isolation, using mocking to simulate dependencies.

- Integration testing: Using tools like AWS SAM Local or Serverless Framework to run functions locally or deploy them to a staging environment, focusing on end-to-end workflows.

- Contract testing: Verifying that functions adhere to defined contracts for input and output data.

- Performance testing: Simulating realistic workloads to identify cold start issues and performance bottlenecks.

- Monitoring and logging: Using monitoring tools that support serverless platforms (e.g., AWS CloudWatch, Datadog) to gain visibility into function behavior and performance.

- Test frameworks: Using general-purpose frameworks such as Jest or Mocha (with an assertion library like Chai) and appropriate plugins. These are not serverless-specific, but they work well because function handlers can be invoked directly with sample events.
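The unit-testing strategy can be sketched for a Lambda-style handler as follows. The handler, the event shape, and the injected `table` object are hypothetical; the `put_item(Item=...)` call is modeled on the style of a DynamoDB table client, but here it is only ever a mock.

```python
import json
from unittest.mock import Mock


def make_handler(table):
    """Build a Lambda-style handler with its data store injected."""

    def handler(event: dict, context: object) -> dict:
        body = json.loads(event.get("body") or "{}")
        if "order_id" not in body:
            return {"statusCode": 400, "body": json.dumps({"error": "order_id required"})}
        table.put_item(Item={"order_id": body["order_id"], "status": "received"})
        return {"statusCode": 201, "body": json.dumps({"order_id": body["order_id"]})}

    return handler


def test_valid_event_is_stored() -> None:
    table = Mock()
    response = make_handler(table)({"body": json.dumps({"order_id": "o-42"})}, None)
    assert response["statusCode"] == 201
    table.put_item.assert_called_once_with(Item={"order_id": "o-42", "status": "received"})


def test_missing_field_is_rejected() -> None:
    table = Mock()
    response = make_handler(table)({"body": "{}"}, None)
    assert response["statusCode"] == 400
    table.put_item.assert_not_called()
```

Injecting the data store keeps the handler free of cloud calls at import time, so these tests run in milliseconds without any deployed infrastructure.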

6. Describe your experience in creating and maintaining automated test suites within a CI/CD pipeline. How do you measure its effectiveness?

I have extensive experience creating and maintaining automated test suites integrated within CI/CD pipelines using tools like Jenkins, GitLab CI, and Azure DevOps. My approach involves defining a clear test strategy encompassing unit, integration, and end-to-end tests. I utilize testing frameworks such as JUnit, pytest, Selenium, and Cypress to write and execute these tests. The suites are designed to provide rapid feedback during the development cycle, ensuring code quality and preventing regressions. For example, a typical pipeline would trigger unit tests on every commit, integration tests on pull requests, and end-to-end tests during deployments to staging environments.

I measure the effectiveness of automated test suites through several key metrics. These include: Test Coverage (percentage of code covered by tests), Test Execution Time (how long the tests take to run), Test Pass Rate (percentage of tests that pass), Defect Detection Rate (number of defects found by the automated tests before production), and Mean Time To Detect (MTTD) for failures. Analyzing these metrics helps identify areas for improvement in the test suite, such as adding missing tests or optimizing existing ones. Code analysis tools like SonarQube are integrated to measure code quality and complexity, which further informs the test strategy.

7. How would you approach testing a feature that involves complex data transformations and integrations with multiple external APIs?

Testing a feature with complex data transformations and multiple API integrations requires a multi-faceted approach. First, I'd focus on unit testing the individual data transformation functions to ensure they handle various input scenarios correctly, including edge cases and error conditions. Mocking external API calls is crucial here, allowing me to isolate the transformation logic. For example, libraries like unittest.mock in Python can simulate API responses so that tests can verify the transformations produce the expected output.

Next, I'd implement integration tests to verify the interactions between different components and the external APIs. This involves setting up test environments that mimic the production environment as closely as possible. Tools like Postman or specialized API testing frameworks can be used to send requests to the actual APIs and validate the responses. I'd also use contract testing to ensure that the data being sent to and received from the APIs conforms to the agreed-upon schemas. Finally, end-to-end tests should cover the entire feature flow, ensuring that the system as a whole functions as expected.
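The schema-conformance part of contract testing can be illustrated with a small hand-written checker. The contract, field names, and `contract_violations` helper are hypothetical; in practice a schema library or a dedicated contract testing tool would usually replace this.

```python
EXPECTED_CONTRACT = {"id": int, "email": str, "address": {"city": str, "postcode": str}}


def contract_violations(payload: dict, contract: dict, path: str = "") -> list[str]:
    """List every field that is missing or has the wrong type."""
    problems = []
    for field, expected in contract.items():
        location = f"{path}{field}"
        if field not in payload:
            problems.append(f"{location}: missing")
        elif isinstance(expected, dict):
            # Nested object: recurse with a dotted path for readable messages.
            if isinstance(payload[field], dict):
                problems.extend(contract_violations(payload[field], expected, f"{location}."))
            else:
                problems.append(f"{location}: expected object")
        elif not isinstance(payload[field], expected):
            problems.append(f"{location}: expected {expected.__name__}")
    return problems


good = {"id": 7, "email": "a@example.com", "address": {"city": "Oslo", "postcode": "0150"}}
bad = {"id": "7", "email": "a@example.com", "address": {"city": "Oslo"}}

print(contract_violations(good, EXPECTED_CONTRACT))  # []
print(contract_violations(bad, EXPECTED_CONTRACT))
# ['id: expected int', 'address.postcode: missing']
```

Reporting every violation with its path, rather than stopping at the first one, makes a failing integration test much quicker to diagnose.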

8. Explain your approach to security testing, including tools and methodologies you have used.

My approach to security testing is multifaceted, encompassing vulnerability scanning, penetration testing, and security audits. I prioritize identifying potential weaknesses in systems and applications before they can be exploited. I typically start with vulnerability scanning using tools like Nessus or OpenVAS, along with Nmap for network discovery and port scanning, to automatically detect known vulnerabilities. This is followed by penetration testing, where I simulate real-world attacks to assess the impact of those vulnerabilities. Tools like Burp Suite, OWASP ZAP, and Metasploit are used to perform these tests. Methodologies I follow include the OWASP Testing Guide and NIST guidelines.

Beyond technical tools, I also conduct security audits, reviewing code, configurations, and access controls to ensure they adhere to security best practices. Static code analysis tools like SonarQube can be used to identify potential security flaws in the code. The overall goal is to ensure a layered approach to security, combining automated tools with manual analysis to provide comprehensive coverage. I also emphasize the importance of continuous security testing as an integral part of the software development lifecycle, to catch and fix vulnerabilities early on.

9. How do you stay updated with the latest trends and technologies in software testing?

I stay updated through a variety of channels. I actively follow industry blogs and websites like Ministry of Testing, Guru99, and relevant vendor blogs (e.g., BrowserStack, Sauce Labs). I also participate in online communities and forums such as Stack Overflow and relevant subreddits (r/softwaretesting) to learn from other professionals and understand common challenges and solutions. I subscribe to newsletters and attend webinars/conferences focused on software testing to learn about new tools, techniques, and best practices.

Specifically, I often look into areas like test automation frameworks (Selenium, Cypress, Playwright), performance testing tools (JMeter, LoadView), and emerging technologies like AI-powered testing and low-code testing platforms. When I learn something new, I try to practically apply it in a personal project or contribute to open-source projects. This helps me solidify my understanding and stay current with the evolving landscape of software testing.

10. Discuss a time when you identified a critical bug in production. What steps did you take to resolve it, and what did you learn?

During a high-traffic period, our monitoring system alerted us to a sudden spike in error rates for a critical payment processing service. I immediately checked the logs and discovered a NullPointerException occurring intermittently. The stack trace pointed to a recent code deployment that introduced a new feature related to discount calculations. It seemed that in some edge cases, a discount object was not being properly initialized, leading to the error.

To resolve this, I first alerted the on-call team and initiated a rollback to the previous stable version of the service. This immediately mitigated the error rate and stabilized the system. Then, I reproduced the bug in a staging environment, identified the root cause in the code, and implemented a fix that included proper null checks and object initialization. Before deploying the fix to production, I thoroughly tested it with different input scenarios. We then deployed the patched version with continuous monitoring to ensure stability. I learned the importance of comprehensive unit and integration tests, especially for edge cases and handling null values. Additionally, this experience reinforced the value of having robust monitoring and alerting systems to quickly identify and respond to production issues.

11. How would you design a test plan for a system where requirements are constantly evolving?

When dealing with constantly evolving requirements, my test plan would prioritize flexibility and adaptability. I'd use an agile testing approach, focusing on continuous testing and feedback loops. This includes:

- Incremental Test Design: Instead of creating a comprehensive test plan upfront, tests are designed and executed in small increments, aligned with each iteration or sprint.

- Risk-Based Testing: Prioritize testing based on the likelihood and impact of potential failures. This ensures critical functionalities are tested thoroughly even with limited time.

- Automated Testing: Heavily rely on automated tests (unit, integration, and UI where feasible) to ensure rapid feedback and regression testing. Tools and frameworks should be easily adaptable to code changes.

- Exploratory Testing: Incorporate exploratory testing to discover unexpected issues that might be missed by predefined test cases. This can uncover nuanced behaviors in rapidly changing systems.

- Communication & Collaboration: Maintain close communication with developers and product owners to understand new requirements and changes promptly. Early involvement helps in designing effective tests and reduces the chances of late discovery of defects.

- Continuous Integration/Continuous Delivery (CI/CD): Integrate tests into the CI/CD pipeline to ensure that every code change is automatically tested and validated.

12. Explain your understanding of contract testing and its benefits in microservices architecture.

Contract testing is a technique for verifying that microservices can communicate with each other as agreed. It checks that each provider service adheres to the contracts (agreements) it has with its consumers. In essence, a consumer service defines what it expects from a provider service, and the provider service verifies that it meets these expectations. This differs from end-to-end integration testing, which requires the services to be deployed and exercised together.

The benefits in a microservices architecture are significant. Contract testing allows teams to test services independently, reducing the need for complex and brittle end-to-end tests. This leads to faster feedback cycles, increased development velocity, and improved reliability. It also encourages better communication and collaboration between teams, as they need to explicitly define the contracts between their services. For example, if service A, a consumer, expects service B, a provider, to return a JSON object with fields id and name, the contract test for service B would verify that it indeed returns such an object. This ensures that when service A calls service B, it will receive the data it expects, avoiding runtime errors.
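The `id` and `name` example can be sketched in a few lines. This is an illustration only, not the API of a contract-testing tool such as Pact: `CONSUMER_A_CONTRACT` and `contract_violations` are hypothetical names, and the contract is reduced to a mapping from field name to expected type.

```python
from typing import Any, Dict, List, Mapping

# Hypothetical contract published by consumer service A: field -> expected type.
CONSUMER_A_CONTRACT: Dict[str, type] = {"id": int, "name": str}


def contract_violations(response: Mapping[str, Any],
                        contract: Mapping[str, type]) -> List[str]:
    """Return every way `response` breaks `contract` (empty list means it passes)."""
    violations = []
    for field, expected_type in contract.items():
        if field not in response:
            violations.append(f"missing field: {field}")
        elif not isinstance(response[field], expected_type):
            actual = type(response[field]).__name__
            violations.append(f"{field}: expected {expected_type.__name__}, got {actual}")
    # Extra fields are deliberately ignored: the provider may add data
    # without breaking consumers that do not read it.
    return violations
```

Service B's test suite would call `contract_violations` on a real response from its own handler and fail the build if the list is not empty, without needing service A to be running.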

13. Describe a situation where you had to convince stakeholders about the importance of investing in testing. What arguments did you use?

I once worked on a project where stakeholders were hesitant to allocate sufficient resources for testing, prioritizing feature development instead. I addressed this by highlighting the potential long-term benefits of robust testing. My arguments included:

- Reduced Development Costs: I explained that catching bugs early through testing is significantly cheaper than fixing them later in the development cycle or, worse, after deployment. Fixing bugs in production can involve emergency patches, downtime, and potentially data loss, all of which are expensive.

- Improved Product Quality and Reliability: I emphasized that thorough testing leads to a more stable and reliable product, resulting in a better user experience and increased customer satisfaction. This can lead to positive reviews, increased adoption, and ultimately, higher revenue.

- Reduced Risk: I argued that investing in testing reduces the risk of critical failures and security vulnerabilities, which could damage the company's reputation and lead to legal liabilities. I presented data from similar projects demonstrating the cost of neglecting testing versus the return on investment in testing efforts. I also used examples of production defects that would have been found earlier with more testing and the actual cost to resolve those defects.

14. How do you prioritize test cases when time and resources are limited?

When facing limited time and resources, prioritize test cases based on risk and impact. Focus on testing critical functionalities and those most likely to fail or cause significant harm to the user experience. Consider using a risk assessment matrix to categorize test cases based on their probability of failure and the potential impact of that failure.

Specific techniques include:

- Prioritize tests covering core functionality (smoke tests).

- Focus on tests related to recent code changes (regression tests).

- Prioritize tests covering high-traffic areas or frequently used features.

- Consider testing edge cases and boundary conditions, but only after core functionality is covered.

- Defer tests with low risk and impact to future testing cycles or automate them, if possible. Use exploratory testing to cover the remaining areas.
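One way to make the risk assessment concrete is to score each test as probability times impact and fill the available time with the highest scores first. The sketch below assumes 1 to 5 scales and a simple greedy selection; `PlannedTest` and `select_tests` are hypothetical names, and a real team would tune the scales to its own context.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class PlannedTest:
    name: str
    failure_probability: int  # assumed scale: 1 (unlikely) to 5 (very likely)
    impact: int               # assumed scale: 1 (cosmetic) to 5 (critical)
    minutes: int              # estimated execution time

    @property
    def risk(self) -> int:
        """Risk score as used in a probability/impact matrix."""
        return self.failure_probability * self.impact


def select_tests(cases: List[PlannedTest], budget_minutes: int) -> List[PlannedTest]:
    """Greedily pick the highest-risk tests that still fit in the time budget."""
    selected = []
    remaining = budget_minutes
    for case in sorted(cases, key=lambda c: c.risk, reverse=True):
        if case.minutes <= remaining:
            selected.append(case)
            remaining -= case.minutes
    return selected
```

Tests that do not make the cut are the candidates for deferral, automation, or exploratory coverage.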

15. Explain your experience with testing cloud-native applications, focusing on scalability and resilience.

I have experience testing cloud-native applications with a focus on scalability and resilience. For scalability, I've used tools like JMeter and Gatling to simulate user load and identify bottlenecks in the application architecture. I've also used cloud-provider-specific tools like AWS CloudWatch and Azure Monitor to track key metrics like CPU utilization, memory consumption, and network latency under load. This involved testing both horizontal scaling (adding more instances) and vertical scaling (increasing the resources of existing instances).

Regarding resilience, my experience includes testing fault tolerance by simulating failures of different components, such as databases, message queues, or individual microservices. Tools like Chaos Monkey or custom scripts were used to inject these failures. I've also worked with teams to implement and test retry mechanisms, circuit breakers, and load shedding strategies to ensure the application remains available even when parts of the system fail. Key areas included validating that the system could recover automatically from failures and maintain service levels, as well as verifying alert and monitoring systems were properly configured to detect and respond to such events. We also conducted disaster recovery exercises by simulating complete region or availability zone failures.
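To show what testing a circuit breaker involves, here is a deliberately small sketch of one. It is not taken from any particular resilience library: the class name, the parameters, and the injectable `clock` (which lets a test advance time without sleeping) are all assumptions made for illustration.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int, reset_timeout: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    def call(self, func: Callable[[], T]) -> T:
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_timeout:
                raise CircuitOpenError("circuit open; call rejected")
            # Timeout elapsed: let one trial call through (half-open state).
        try:
            result = func()
        except Exception:
            self._failures += 1
            # Open on reaching the threshold, or re-open after a failed trial.
            if self._opened_at is not None or self._failures >= self.failure_threshold:
                self._opened_at = self._clock()
            raise
        # Success closes the circuit and clears the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```

A resilience test then injects failures into the wrapped dependency and asserts three things: calls are rejected quickly once the threshold is reached, no traffic reaches the dependency while the circuit is open, and service resumes after the timeout.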

16. Describe your process for debugging complex issues involving multiple systems or services.

My process for debugging complex, multi-system issues follows a structured approach. First, I focus on isolation and reproduction: I try to narrow down the failing component and reproduce the error consistently. Next, I gather data systematically: checking logs across all involved systems, monitoring metrics (CPU, memory, network I/O), and examining any available error traces or dumps. I use correlation IDs and timestamps to follow the flow of a request across services. I then perform root cause analysis: based on the gathered data, I formulate hypotheses and test them methodically. Tools like tcpdump, strace, and debuggers become crucial at this stage. Effective communication is essential throughout: I collaborate closely with relevant teams (networking, database, etc.) to gather insights and validate assumptions. Finally, I document the problem, solution, and steps taken for future reference, and add monitoring or alerts to prevent recurrence.
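Following a request by correlation ID can be sketched as grouping log records from every service and ordering each group by timestamp. The `LogRecord` shape and the `trace_requests` helper are hypothetical; real log formats and aggregation tools vary, and this assumes the services' clocks are reasonably synchronized.

```python
from collections import defaultdict
from datetime import datetime
from typing import Dict, Iterable, List, NamedTuple


class LogRecord(NamedTuple):
    timestamp: datetime
    service: str
    correlation_id: str
    message: str


def trace_requests(records: Iterable[LogRecord]) -> Dict[str, List[LogRecord]]:
    """Group records by correlation ID and order each group chronologically."""
    traces = defaultdict(list)
    for record in records:
        traces[record.correlation_id].append(record)
    for trace in traces.values():
        trace.sort(key=lambda r: r.timestamp)
    return dict(traces)
```

Reading one trace from top to bottom shows which service handled the request last before the error appeared, which is usually the first place to look.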

17. How do you handle testing data privacy and security in regulated environments?

Testing data privacy and security in regulated environments requires a multi-faceted approach. Firstly, data masking and anonymization techniques are crucial when using production-like data in test environments. This ensures that sensitive information is not exposed during testing. Secondly, strict access controls and role-based permissions should be implemented to limit who can access test data and environments. Data encryption, both in transit and at rest, is also important.

Regular security audits and penetration testing are essential to identify vulnerabilities. These audits should specifically focus on data privacy aspects. Furthermore, it's important to maintain detailed audit logs of all data access and modifications within the test environments. Finally, compliance with relevant regulations (e.g., GDPR, HIPAA) needs to be continuously monitored and validated through testing and documentation. Automation using tools for data masking and automated security scans can streamline the process.
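As a minimal sketch of data masking, the snippet below replaces sensitive fields with keyed hashes so that the same input always maps to the same token and joins between tables still work. The function names and the 16-character truncation are assumptions for illustration. Keyed hashing is pseudonymization rather than full anonymization, so whether it is sufficient depends on the regulation in question.

```python
import hashlib
import hmac
from typing import Any, Dict, Iterable


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Return a stable, keyed token for `value` (HMAC-SHA256, truncated)."""
    digest = hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:16]


def mask_record(record: Dict[str, Any], sensitive_fields: Iterable[str],
                secret_key: bytes) -> Dict[str, Any]:
    """Copy `record`, replacing each sensitive field with its pseudonym."""
    masked = dict(record)  # never mutate the source row
    for field in sensitive_fields:
        if masked.get(field) is not None:
            masked[field] = pseudonymize(str(masked[field]), secret_key)
    return masked
```

A test of the masking step itself would assert that no original sensitive value appears in the output and that non-sensitive fields pass through unchanged.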

18. Explain your understanding of model-based testing and when it is most effective.

Model-based testing (MBT) is a software testing technique where test cases are derived from a model that describes the expected behavior of the system under test (SUT). This model can be a formal specification, a state machine diagram, or even a less formal representation like a use case diagram. Instead of manually writing test cases, the model is used to automatically generate them, increasing test coverage and efficiency. It is most effective when testing complex systems with many possible states and transitions, systems requiring high reliability or safety, or systems where requirements are well-defined and stable, allowing for a reliable model to be created. MBT is also beneficial for regression testing, as the model can be reused to generate new test cases after changes to the system.
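A small sketch makes the idea of deriving tests from a model concrete. It assumes a hypothetical login flow modeled as a state machine and enumerates every action sequence up to a fixed length. Real MBT tools use richer models and coverage criteria, so treat `LOGIN_MODEL`, `generate_paths`, and `expected_state` as illustrative names only.

```python
from typing import Dict, List, Tuple

# Hypothetical model: state -> {action: next_state}.
LOGIN_MODEL: Dict[str, Dict[str, str]] = {
    "logged_out": {"login_ok": "logged_in", "login_fail": "logged_out"},
    "logged_in": {"logout": "logged_out"},
}


def generate_paths(model: Dict[str, Dict[str, str]], start: str,
                   max_steps: int) -> List[List[str]]:
    """Enumerate every action sequence of 1..max_steps steps from `start`."""
    paths: List[List[str]] = []
    frontier: List[Tuple[str, List[str]]] = [(start, [])]
    for _ in range(max_steps):
        next_frontier = []
        for state, actions in frontier:
            for action, next_state in model[state].items():
                path = actions + [action]
                paths.append(path)
                next_frontier.append((next_state, path))
        frontier = next_frontier
    return paths


def expected_state(model: Dict[str, Dict[str, str]], start: str,
                   path: List[str]) -> str:
    """Replay `path` on the model to get the state the real system should reach."""
    state = start
    for action in path:
        state = model[state][action]
    return state
```

Each generated path becomes a test case: the harness replays the actions against the system under test and compares the resulting state with `expected_state`. When the model changes, regenerating the paths updates the regression suite.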

19. How do you measure and report on the quality of your testing efforts?

I measure and report on testing quality using a combination of quantitative and qualitative metrics. Quantitatively, I track metrics like:

- Defect Density: Number of defects found per unit of code size, for example per thousand lines of code (KLOC).

- Test Coverage: Percentage of code covered by automated tests, often measured using tools like SonarQube or JaCoCo.

- Test Execution Rate: Number of tests executed per unit time and pass/fail rate of executed tests.

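- Defect Removal Efficiency: Percentage of all known defects that were found before release, calculated as defects found before release divided by the total found before and after release. I also track Mean Time To Detect and Mean Time To Resolve for bugs found post-release.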

Qualitatively, I gather feedback from developers and stakeholders regarding the clarity and usefulness of test reports, the thoroughness of testing, and the overall impact of testing on product quality. I also focus on continuous improvement by analyzing defect trends to identify areas where the testing process can be improved, and by regularly reviewing and updating test strategies to align with evolving project needs.
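The quantitative metrics reduce to simple ratios. The sketch below assumes defect density is reported per thousand lines of code (KLOC) and defect removal efficiency is the share of all known defects caught before release. The function names are hypothetical, and teams often adapt the denominators to their own context.

```python
def defect_density(defects: int, kloc: float) -> float:
    """Defects per thousand lines of code."""
    if kloc <= 0:
        raise ValueError("kloc must be positive")
    return defects / kloc


def defect_removal_efficiency(found_before_release: int,
                              found_after_release: int) -> float:
    """Percentage of all known defects that were caught before release."""
    total = found_before_release + found_after_release
    if total == 0:
        raise ValueError("no defects recorded")
    return 100.0 * found_before_release / total


def pass_rate(passed: int, executed: int) -> float:
    """Percentage of executed tests that passed."""
    if executed <= 0:
        raise ValueError("executed must be positive")
    return 100.0 * passed / executed
```

For instance, 45 defects found before release and 5 found afterwards give a defect removal efficiency of 90%.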

20. Describe a time when you had to work with developers to improve code quality. What strategies did you use?

In a previous role, I noticed inconsistencies in the codebase that were leading to bugs and hindering maintainability. To address this, I initiated a collaborative effort with the development team focusing on improving code quality. We implemented several strategies including:

- Code Reviews: I actively participated in code reviews, providing constructive feedback on coding style, logic, and potential performance bottlenecks. I focused on identifying areas where code could be made more readable, testable, and efficient. For example, I suggested refactoring complex methods into smaller, more manageable functions.

- Static Analysis Tools: We introduced static analysis tools like SonarQube to automatically detect code smells, bugs, and security vulnerabilities. This helped us identify areas needing improvement quickly. We configured rulesets to align with our team's coding standards.

- Unit Testing: I advocated for increased unit test coverage and worked with developers to write more comprehensive tests. I emphasized the importance of testing edge cases and boundary conditions. We adopted a Test-Driven Development (TDD) approach for new features whenever possible.

- Refactoring Sessions: We scheduled dedicated refactoring sessions to address technical debt and improve the overall design of the codebase. These sessions provided a collaborative environment where developers could share knowledge and learn from each other.

Expert Software Testing interview questions

1. How would you design a test strategy for a self-driving car's navigation system?

A test strategy for a self-driving car's navigation system would involve a layered approach. First, unit tests would verify individual modules like path planning algorithms, sensor data processing, and localization accuracy. Simulation plays a critical role here: creating a diverse range of scenarios (weather conditions, road types, pedestrian behavior) in a virtual environment makes it possible to execute thousands of tests and identify edge cases and potential failures.

Then, integration tests would assess the interaction between these modules, focusing on data flow and system-level functionality. Finally, real-world testing on closed courses and public roads (with safety drivers) would validate the system's performance in complex and unpredictable environments. Across all layers, the strategy includes regression testing, performance testing (latency, resource usage), and fault injection to ensure robustness. Key metrics include accuracy of route calculation, adherence to traffic rules, safety incident rates, and recovery from unexpected events.

2. Describe how you would approach testing a highly scalable microservices architecture.