Hiring the right candidate with the appropriate Snowflake skills is paramount for any data-driven organization. Prepared interview questions can help significantly in identifying the most adept candidates for your team.

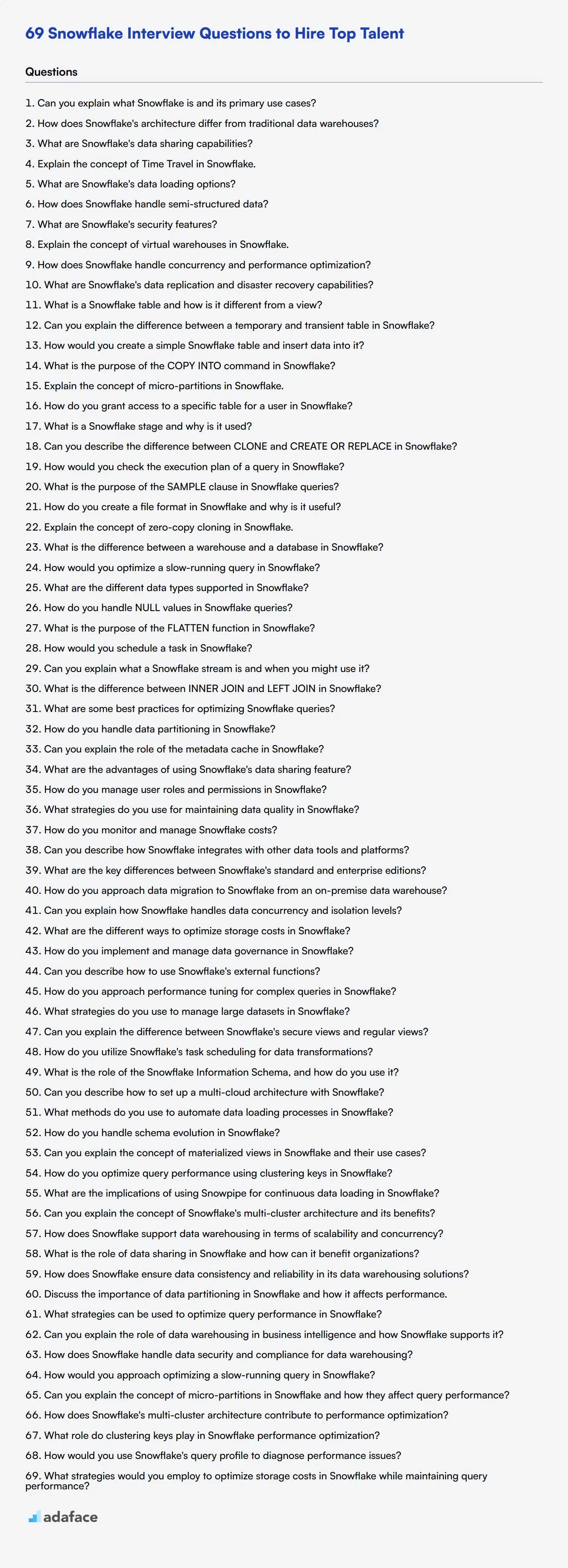

This blog post covers a comprehensive list of Snowflake interview questions segmented into basic, intermediate, and advanced levels, along with specialized sections on data warehousing and performance optimization. These questions are crafted to help you evaluate applicants at various skill tiers.

Using these questions will streamline your interview process and enhance your ability to assess candidates' Snowflake expertise. For a more thorough evaluation, consider leveraging an online Snowflake test before conducting interviews.


10 basic Snowflake interview questions and answers to assess applicants

Ready to assess your Snowflake developer candidates? These 10 basic interview questions will help you gauge their understanding of key concepts and practical applications. Use this list to kickstart your interviews and identify candidates with the right mix of technical skills and problem-solving abilities.

1. Can you explain what Snowflake is and its primary use cases?

Snowflake is a cloud-based data warehousing platform that provides a fully managed, scalable solution for storing and analyzing large volumes of structured and semi-structured data. It separates compute and storage, allowing for independent scaling of resources.

Primary use cases include data warehousing, data lakes, data engineering, data science, data application development, and secure data sharing across organizations.

Look for candidates who can articulate Snowflake's cloud-native architecture and its benefits, such as scalability, performance, and ease of use. Strong answers will also touch on real-world applications and how Snowflake differs from traditional on-premises data warehouses.

2. How does Snowflake's architecture differ from traditional data warehouses?

Snowflake's architecture is unique in its separation of compute, storage, and cloud services layers. This multi-cluster, shared data architecture allows for:

- Independent scaling of compute and storage

- Concurrent querying without resource contention

- Automatic query optimization and caching

- Near-zero maintenance, as Snowflake manages all infrastructure

Ideal responses should highlight the benefits of this architecture, such as improved performance, cost-efficiency, and flexibility. Candidates might also compare it to traditional data warehouses that typically have tightly coupled compute and storage.

3. What are Snowflake's data sharing capabilities?

Snowflake offers secure data sharing capabilities that allow organizations to share live, governed data with other Snowflake accounts without moving or copying the data. This feature enables:

- Sharing of databases, tables, or views

- Real-time access to shared data

- Granular access controls

- Creation of data marketplaces or exchanges
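As a rough illustration of what a provider runs to share a table, here is a minimal Python sketch that assembles the relevant Snowflake SQL statements as strings. You could paste them into a worksheet or send them through a client such as the Snowflake Python connector. The share, database, schema, table, and account names are hypothetical.

```python
def provider_share_sql(share: str, database: str, schema: str, table: str,
                       consumer_account: str) -> list[str]:
    """Statements a provider account runs to share one table (all names are hypothetical)."""
    return [
        f"CREATE SHARE {share};",
        # The share needs USAGE on the containing database and schema...
        f"GRANT USAGE ON DATABASE {database} TO SHARE {share};",
        f"GRANT USAGE ON SCHEMA {database}.{schema} TO SHARE {share};",
        # ...and SELECT on the object being shared.
        f"GRANT SELECT ON TABLE {database}.{schema}.{table} TO SHARE {share};",
        # Make the share visible to the consumer account (org_name.account_name form).
        f"ALTER SHARE {share} ADD ACCOUNTS = {consumer_account};",
    ]


def consumer_share_sql(local_database: str, provider_account: str, share: str) -> str:
    """Statement the consumer runs to mount the share as a read-only database; no data is copied."""
    return f"CREATE DATABASE {local_database} FROM SHARE {provider_account}.{share};"


if __name__ == "__main__":
    for stmt in provider_share_sql("sales_share", "sales", "public", "orders",
                                   "partner_org.partner_acct"):
        print(stmt)
    print(consumer_share_sql("partner_sales", "my_org.my_acct", "sales_share"))
```

The consumer queries the mounted database directly, so it always sees the provider's current data.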

Look for candidates who understand the implications of secure data sharing for business collaboration and data monetization. They should be able to explain how this feature differs from traditional data sharing methods and its potential applications in various industries.

4. Explain the concept of Time Travel in Snowflake.

Time Travel is a feature in Snowflake that allows users to access historical data at any point within a defined period. It enables:

- Query data as it existed at a specific point in the past

- Restore tables, schemas, or databases that were accidentally dropped

- Create clones of tables at specific points in time

- Analyze data changes over time
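The operations above can be sketched in Python as a helper that emits the corresponding Snowflake SQL, plus a check for whether a point in time still falls inside the retention window. The table name, query ID, and helper names are hypothetical. The 1-day default mirrors Snowflake's default retention, but an account or table may be configured differently.

```python
from datetime import datetime, timedelta, timezone


def time_travel_sql(table: str, offset_seconds: int, query_id: str) -> dict[str, str]:
    """Snowflake SQL for common Time Travel operations on `table` (hypothetical helper)."""
    if offset_seconds <= 0:
        raise ValueError("offset_seconds must be positive; it is negated in the AT clause")
    return {
        # The table as it looked offset_seconds ago.
        "query_at_offset": f"SELECT * FROM {table} AT(OFFSET => -{offset_seconds});",
        # The table as it looked just before the given statement ran.
        "query_before_statement": f"SELECT * FROM {table} BEFORE(STATEMENT => '{query_id}');",
        # Restore a dropped table while it is still within its retention period.
        "undrop": f"UNDROP TABLE {table};",
        # Zero-copy clone of the table as of the same point in time.
        "clone_at_offset": f"CREATE TABLE {table}_restored CLONE {table} AT(OFFSET => -{offset_seconds});",
    }


def within_retention(target: datetime, now: datetime, retention_days: int = 1) -> bool:
    """True if `target` still lies inside the Time Travel window ending at `now`."""
    return now - timedelta(days=retention_days) <= target <= now


if __name__ == "__main__":
    for name, sql in time_travel_sql("orders", 3600, "<query_id>").items():
        print(f"{name}: {sql}")
    now = datetime.now(timezone.utc)
    print(within_retention(now - timedelta(hours=12), now))
```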

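Strong candidates should be able to discuss the practical applications of Time Travel, such as auditing, data recovery, and historical analysis. They might also mention that the default retention period is 1 day. On Enterprise Edition and above, it can be raised to as many as 90 days for permanent objects through the DATA_RETENTION_TIME_IN_DAYS parameter.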

5. What are Snowflake's data loading options?

Snowflake provides various methods for loading data into its platform:

- Bulk loading using the COPY command

- Continuous loading with Snowpipe

- External tables for querying data in external storage

- Data loading from cloud storage services (e.g., AWS S3, Azure Blob Storage)

- Integration with ETL tools and data integration platforms
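To contrast the first two options, this Python sketch builds a bulk COPY INTO statement and a Snowpipe definition that wraps the same COPY. The table, stage, file format, and pipe names are hypothetical. AUTO_INGEST relies on event notifications from an external stage (for example, S3 event notifications), which must be configured separately.

```python
from typing import Optional


def copy_into_sql(table: str, stage: str, file_format: str,
                  on_error: Optional[str] = None) -> str:
    """Bulk load: copy staged files into `table` using a named file format."""
    sql = f"COPY INTO {table} FROM @{stage} FILE_FORMAT = (FORMAT_NAME = '{file_format}')"
    if on_error:
        # For example CONTINUE, SKIP_FILE or ABORT_STATEMENT.
        sql += f" ON_ERROR = {on_error}"
    return sql + ";"


def snowpipe_sql(pipe: str, table: str, stage: str, file_format: str) -> str:
    """Continuous load: a pipe wraps a COPY INTO and runs it as new files arrive."""
    copy = copy_into_sql(table, stage, file_format).rstrip(";")
    return f"CREATE PIPE {pipe} AUTO_INGEST = TRUE AS {copy};"


if __name__ == "__main__":
    print(copy_into_sql("raw.orders", "orders_stage", "csv_fmt", on_error="CONTINUE"))
    print(snowpipe_sql("orders_pipe", "raw.orders", "orders_stage", "csv_fmt"))
```

A scheduled COPY INTO suits periodic batch loads, while a pipe suits files that arrive continuously and should be loaded within minutes.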

Look for candidates who can explain the pros and cons of each method and provide examples of when to use each approach. They should also be familiar with data engineering concepts related to data loading and transformation.

6. How does Snowflake handle semi-structured data?

Snowflake provides native support for semi-structured data through its VARIANT data type, which can store JSON, Avro, ORC, Parquet, and XML data. Key features include:

- Automatic parsing and optimization of semi-structured data

- Ability to query nested data using path notation (for example, src:customer.name) or the FLATTEN table function

- Schema-on-read capabilities, allowing for flexible data models
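To make path access and FLATTEN concrete, here is a plain-Python analogy. `get_path` mimics src:customer.name-style traversal, and `flatten` mimics the rows LATERAL FLATTEN produces for an array: one row per element, with its INDEX and VALUE. This is a conceptual model only, not how Snowflake evaluates VARIANT data. It handles arrays only, and the sample document is invented.

```python
import json
from typing import Any


def get_path(variant: Any, path: str) -> Any:
    """Follow a dot-separated path through nested objects.
    A missing key yields None, much as Snowflake returns NULL for a missing path."""
    current = variant
    for key in path.split("."):
        if not isinstance(current, dict) or key not in current:
            return None
        current = current[key]
    return current


def flatten(variant: Any, path: str) -> list[dict[str, Any]]:
    """One row per array element, like FLATTEN's INDEX and VALUE columns (arrays only)."""
    target = get_path(variant, path)
    if not isinstance(target, list):
        return []
    return [{"INDEX": i, "VALUE": value} for i, value in enumerate(target)]


if __name__ == "__main__":
    src = json.loads('{"customer": {"name": "Ada"}, "items": [{"sku": "A1"}, {"sku": "B2"}]}')
    print(get_path(src, "customer.name"))
    for row in flatten(src, "items"):
        print(row["INDEX"], get_path(row["VALUE"], "sku"))
```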

Ideal responses should demonstrate an understanding of how this feature simplifies working with diverse data types and enables more agile data analytics. Candidates might also discuss the performance implications and best practices for querying semi-structured data in Snowflake.

7. What are Snowflake's security features?

Snowflake offers a comprehensive set of security features to protect data and ensure compliance:

- End-to-end encryption for data at rest and in transit

- Role-based access control (RBAC)

- Multi-factor authentication

- Network policies that restrict access to allowed IP addresses (IP allow lists)

- Secure data sharing

- Automatic encryption key rotation

- Column-level security

Strong candidates should be able to explain how these features work together to create a secure data environment. They might also discuss Snowflake's compliance posture (e.g., SOC 2 Type II reports and support for HIPAA and GDPR requirements) and how to implement security best practices in a Snowflake deployment.

8. Explain the concept of virtual warehouses in Snowflake.

Virtual warehouses in Snowflake are compute clusters that execute SQL queries and data loading/unloading operations. Key characteristics include:

- Independent scaling of compute resources

- Ability to create multiple warehouses for different workloads

- Automatic suspension and resumption to optimize costs

- Multiple sizes (X-Small up to 6X-Large, depending on cloud and region), with each step up doubling compute resources and credit consumption
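To make the cost trade-off concrete, here is a minimal sketch that estimates credits for one warehouse run. It assumes standard warehouses, the published credits-per-hour rates for X-Small through 4X-Large, and per-second billing with a 60-second minimum each time a warehouse starts or resumes. The dollar price per credit depends on edition, region, and contract, so it is left out.

```python
# Credits per hour for standard virtual warehouses; each size doubles the previous one.
CREDITS_PER_HOUR = {
    "X-Small": 1, "Small": 2, "Medium": 4, "Large": 8,
    "X-Large": 16, "2X-Large": 32, "3X-Large": 64, "4X-Large": 128,
}


def credits_for_run(size: str, runtime_seconds: float, minimum_seconds: int = 60) -> float:
    """Credits billed for one continuous run: per-second billing,
    with a 60-second minimum each time the warehouse starts or resumes."""
    billed_seconds = max(runtime_seconds, minimum_seconds)
    return CREDITS_PER_HOUR[size] * billed_seconds / 3600


if __name__ == "__main__":
    # If a query scaled perfectly, doubling the size would halve runtime at equal cost.
    print(credits_for_run("Medium", 600), credits_for_run("Large", 300))
    # Short runs are billed as a full minute.
    print(credits_for_run("X-Small", 30))
```

Real queries rarely scale perfectly, so a larger size is worth its cost only when it shortens runtime enough.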

Look for candidates who can explain how virtual warehouses enable workload isolation and resource management. They should also be able to discuss strategies for optimizing warehouse usage to balance performance and cost.

9. How does Snowflake handle concurrency and performance optimization?

Snowflake addresses concurrency and performance through several mechanisms:

- Multi-cluster warehouses for automatic query load balancing

- Result caching to improve query performance

- Automatic query optimization and pruning

- Resource monitors to control compute costs

- Workload management through virtual warehouses
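Result caching can be illustrated with a toy model. A result is reused only when the query text is identical and the underlying data has not changed. An unused result expires after 24 hours, and each reuse resets that window, up to 31 days from the first run. This is a simplified sketch, not Snowflake's implementation. It ignores other conditions, such as queries that use non-deterministic functions, and the table-version dictionary is a hypothetical stand-in for Snowflake's internal change tracking.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Any, Optional


@dataclass
class CachedResult:
    rows: list[Any]
    table_versions: dict[str, int]  # hypothetical: table name -> data version at execution
    first_run: datetime
    last_used: datetime


class ToyResultCache:
    TTL = timedelta(hours=24)     # unused results expire after 24 hours
    MAX_AGE = timedelta(days=31)  # reuse can extend retention up to 31 days

    def __init__(self) -> None:
        self._entries: dict[str, CachedResult] = {}

    def get(self, query_text: str, table_versions: dict[str, int],
            now: datetime) -> Optional[list[Any]]:
        entry = self._entries.get(query_text)  # keyed on the exact query text
        if entry is None:
            return None
        expired = (now - entry.last_used > self.TTL) or (now - entry.first_run > self.MAX_AGE)
        if expired or entry.table_versions != table_versions:
            del self._entries[query_text]  # stale: data changed or entry aged out
            return None
        entry.last_used = now  # each reuse resets the 24-hour window
        return entry.rows

    def put(self, query_text: str, table_versions: dict[str, int],
            rows: list[Any], now: datetime) -> None:
        self._entries[query_text] = CachedResult(rows, dict(table_versions), now, now)
```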

Strong candidates should be able to explain how these features work together to handle high concurrency scenarios and optimize query performance. They might also discuss best practices for designing efficient queries and managing resources in a Snowflake environment.

10. What are Snowflake's data replication and disaster recovery capabilities?

Snowflake provides built-in features for data replication and disaster recovery:

- Automatic data replication across availability zones

- Database replication across regions or cloud providers

- Fail-safe: a 7-day period after Time Travel ends (for permanent tables) during which only Snowflake Support can recover data

- Time Travel for point-in-time recovery

- Zero-copy cloning for creating instant backups
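Time Travel and Fail-safe windows run back to back, and a small Python sketch can make that explicit. It assumes the following:

- Permanent tables get 7 days of Fail-safe after their Time Travel retention expires.
- Transient and temporary tables get no Fail-safe and at most 1 day of Time Travel.
- Permanent tables can keep up to 90 days of Time Travel on Enterprise Edition and above.

The function and category names are hypothetical.

```python
# Fail-safe days by table type: only permanent tables get the 7-day Fail-safe period.
FAIL_SAFE_DAYS = {"permanent": 7, "transient": 0, "temporary": 0}
# Maximum Time Travel retention (90 days assumes Enterprise Edition or above).
MAX_RETENTION_DAYS = {"permanent": 90, "transient": 1, "temporary": 1}


def recovery_path(table_kind: str, retention_days: int, days_since_change: float) -> str:
    """Classify how data changed or dropped `days_since_change` days ago could be recovered."""
    if not 0 <= retention_days <= MAX_RETENTION_DAYS[table_kind]:
        raise ValueError(f"invalid retention for a {table_kind} table: {retention_days}")
    if days_since_change <= retention_days:
        return "time_travel"  # self-service: AT/BEFORE queries, UNDROP, CLONE
    if days_since_change <= retention_days + FAIL_SAFE_DAYS[table_kind]:
        return "fail_safe"  # recoverable only through Snowflake Support
    return "unrecoverable"
```

Because transient tables skip Fail-safe, they are cheaper to store. The trade-off is a shorter recovery window, which matters when you plan disaster recovery.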

Look for candidates who can explain the importance of these features in ensuring business continuity and data availability. They should be able to discuss scenarios where each capability would be useful and how to implement a comprehensive disaster recovery strategy using Snowflake.

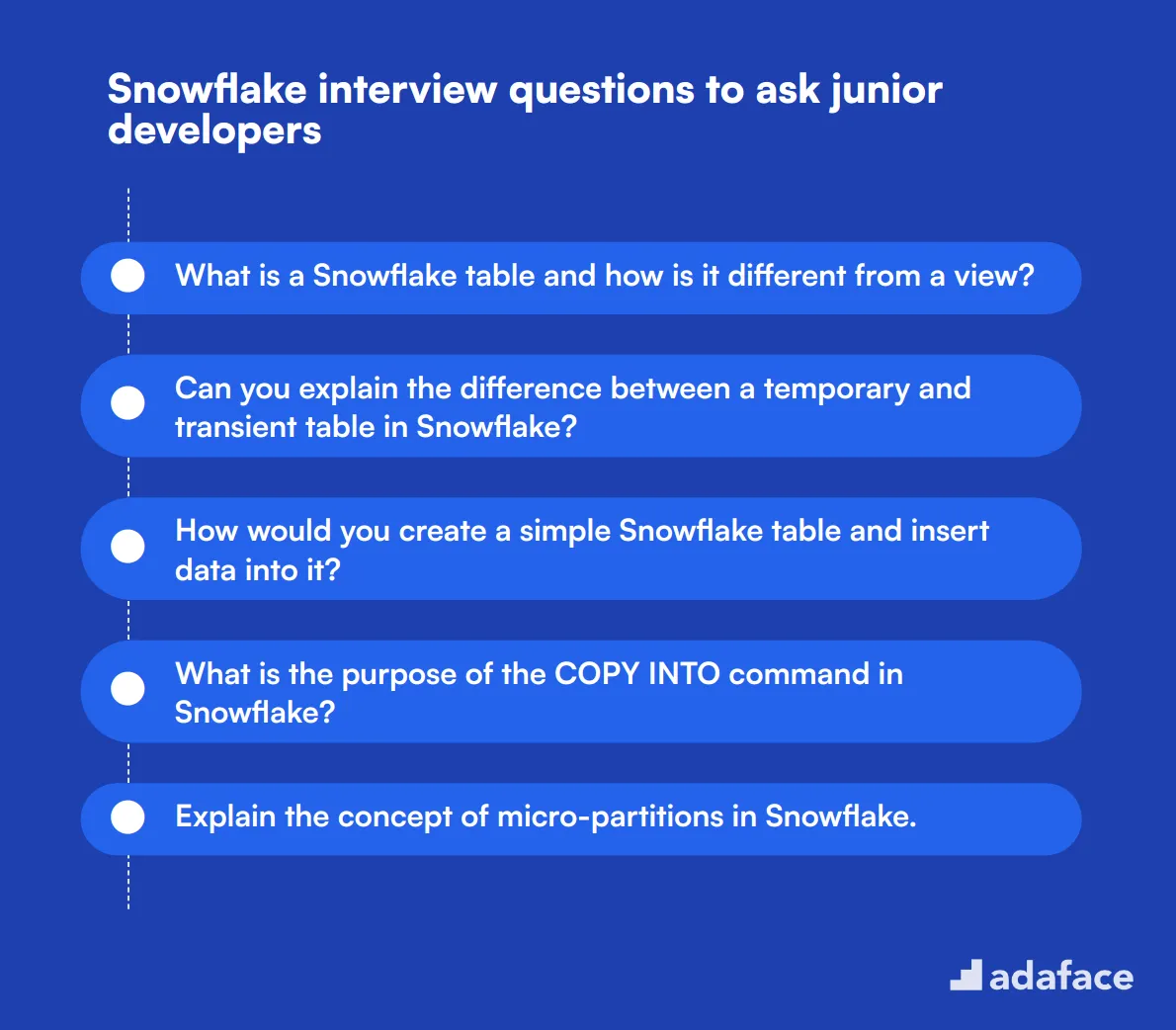

20 Snowflake interview questions to ask junior developers

To assess the foundational knowledge of junior Snowflake developers, use these 20 interview questions. They cover basic concepts and practical skills, helping you identify candidates with potential for growth in this role.

- What is a Snowflake table and how is it different from a view?

- Can you explain the difference between a temporary and transient table in Snowflake?

- How would you create a simple Snowflake table and insert data into it?

- What is the purpose of the COPY INTO command in Snowflake?

- Explain the concept of micro-partitions in Snowflake.

- How do you grant access to a specific table for a user in Snowflake?

- What is a Snowflake stage and why is it used?

- Can you describe the difference between CLONE and CREATE OR REPLACE in Snowflake?

- How would you check the execution plan of a query in Snowflake?

- What is the purpose of the SAMPLE clause in Snowflake queries?

- How do you create a file format in Snowflake and why is it useful?

- Explain the concept of zero-copy cloning in Snowflake.

- What is the difference between a warehouse and a database in Snowflake?

- How would you optimize a slow-running query in Snowflake?

- What are the different data types supported in Snowflake?

- How do you handle NULL values in Snowflake queries?

- What is the purpose of the FLATTEN function in Snowflake?

- How would you schedule a task in Snowflake?

- Can you explain what a Snowflake stream is and when you might use it?

- What is the difference between INNER JOIN and LEFT JOIN in Snowflake?

10 intermediate Snowflake interview questions and answers to ask mid-tier developers

To effectively gauge whether mid-tier Snowflake developers have the right skills for your team, ask them some of these intermediate interview questions. These questions will help you assess their ability to handle more complex tasks without diving too deep into the technical weeds.

1. What are some best practices for optimizing Snowflake queries?

Optimizing Snowflake queries involves several best practices. First, select only the columns you need rather than using SELECT *. Snowflake stores data in a columnar format, so every extra column adds to the data scanned. Trimming the column list reduces I/O and speeds up the query.

Another key practice is to use clustering keys judiciously. On very large tables, clustering on columns that appear frequently in filters or joins can significantly improve partition pruning and query performance. Keep in mind that automatic reclustering consumes credits.
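Snowflake stores each column separately within micro-partitions. As a result, the cost of SELECT * grows with every column, including wide ones the query never uses. A toy Python estimate shows the effect. It uses hypothetical column sizes and ignores compression and pruning.

```python
from typing import Optional


def estimate_bytes_scanned(column_bytes: dict[str, int],
                           selected: Optional[list[str]] = None) -> int:
    """Columnar storage reads only the referenced columns; `selected=None` models SELECT *."""
    columns = list(column_bytes) if selected is None else selected
    return sum(column_bytes[name] for name in columns)


if __name__ == "__main__":
    # Hypothetical per-column sizes for one table, in bytes.
    sizes = {"order_id": 8_000, "customer_id": 8_000, "amount": 8_000, "notes": 500_000}
    print(estimate_bytes_scanned(sizes))                          # SELECT *: 524000
    print(estimate_bytes_scanned(sizes, ["order_id", "amount"]))  # two columns: 16000
```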

Look for candidates who can discuss these best practices and provide real-world examples of how they've optimized queries in the past. Ideal responses should include specific techniques and their impact on performance.

2. How do you handle data partitioning in Snowflake?

In Snowflake, data partitioning is managed through micro-partitions, which are automatically created and maintained by the platform. These micro-partitions allow for efficient data storage and retrieval without requiring manual intervention.

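Candidates should explain that traditional data warehouses often require manual partitioning, whereas Snowflake automates the process. Each micro-partition stores per-column metadata such as value ranges, which lets queries skip partitions that cannot match their filters. Strong candidates may add that on very large tables, a clustering key can improve how related rows are co-located across micro-partitions.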

Look for candidates who understand the concept of micro-partitions and can articulate the benefits of automated data partitioning in Snowflake. An ideal answer should highlight the efficiency and cost-saving aspects.

3. Can you explain the role of the metadata cache in Snowflake?

The metadata cache in Snowflake, maintained by the cloud services layer, plays a critical role in speeding up query execution. It stores information about schemas, tables, and micro-partitions, including row counts and the minimum and maximum values of each column in every micro-partition.

The optimizer uses this metadata to prune micro-partitions that cannot match a query's filters. Some queries, such as COUNT(*) or MIN/MAX on a column, can be answered from metadata alone without scanning any data. This can significantly improve performance, especially on large tables.
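A toy model shows why this metadata matters. If every micro-partition records its row count and the minimum and maximum of a column, then COUNT(*), MIN, and MAX can be computed without reading any rows. The PartitionStats structure and sample values are hypothetical simplifications of what Snowflake tracks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PartitionStats:
    row_count: int
    min_value: int  # minimum of one column within the partition
    max_value: int  # maximum of the same column


def metadata_only_aggregates(partitions: list[PartitionStats]) -> dict[str, int]:
    """COUNT(*), MIN and MAX computed purely from per-partition metadata, with no row scans."""
    return {
        "count": sum(p.row_count for p in partitions),
        "min": min(p.min_value for p in partitions),
        "max": max(p.max_value for p in partitions),
    }
```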

Ideal candidates should demonstrate an understanding of how the metadata cache works and its importance in optimizing query performance. They should also discuss scenarios where metadata caching has made a tangible impact on their projects.

4. What are the advantages of using Snowflake's data sharing feature?

Snowflake's data sharing feature allows organizations to share live data securely and efficiently with external partners without copying it into the consumer's account. This ensures that all parties have access to the most up-to-date information while maintaining data security and governance.

Another advantage is the ease of collaboration. By sharing data directly from Snowflake, organizations can avoid the complexities and costs associated with traditional data sharing methods like FTP or email.

Candidates should highlight these advantages and provide examples of how they've utilized data sharing in their projects. Look for answers that emphasize security, efficiency, and real-world applications of this feature.

5. How do you manage user roles and permissions in Snowflake?

Managing user roles and permissions in Snowflake involves creating roles that align with job functions and assigning these roles to users. Roles can be granted specific privileges, such as access to databases, schemas, or tables, ensuring that users only have the permissions needed for their tasks.

Candidates should mention the principle of least privilege, which involves giving users the minimum level of access necessary to perform their duties. This helps maintain security and compliance.
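A least-privilege setup for a read-only analyst role might look like the statements below, generated here by a small Python helper. The role, warehouse, database, schema, and user names are hypothetical. The FUTURE grant covers tables created later, and granting the custom role to SYSADMIN follows Snowflake's recommended role hierarchy. Running these statements requires a role with the appropriate privileges, such as SECURITYADMIN.

```python
def read_only_role_sql(role: str, warehouse: str, database: str,
                       schema: str, user: str) -> list[str]:
    """Grant only what is needed to query one schema (all names are hypothetical)."""
    target = f"{database}.{schema}"
    return [
        f"CREATE ROLE IF NOT EXISTS {role};",
        f"GRANT USAGE ON WAREHOUSE {warehouse} TO ROLE {role};",  # compute to run queries
        f"GRANT USAGE ON DATABASE {database} TO ROLE {role};",    # see the database
        f"GRANT USAGE ON SCHEMA {target} TO ROLE {role};",        # see the schema
        f"GRANT SELECT ON ALL TABLES IN SCHEMA {target} TO ROLE {role};",     # existing tables
        f"GRANT SELECT ON FUTURE TABLES IN SCHEMA {target} TO ROLE {role};",  # tables created later
        f"GRANT ROLE {role} TO ROLE SYSADMIN;",  # keep custom roles under SYSADMIN
        f"GRANT ROLE {role} TO USER {user};",
    ]
```

No write, ownership, or ALL PRIVILEGES grants appear in the list, which is the point of least privilege.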

Look for candidates who can discuss their approach to setting up roles and permissions and provide examples of how they've implemented these practices in previous projects. An ideal answer should demonstrate a clear understanding of security best practices.

6. What strategies do you use for maintaining data quality in Snowflake?

Maintaining data quality in Snowflake involves several strategies, including data validation rules, automated data cleaning processes, and regular audits. These practices help ensure that the data remains accurate, consistent, and reliable.

Candidates might also mention Snowflake's built-in features, such as data metric functions for monitoring data quality and alerts that evaluate a SQL condition on a schedule and take an action when it is met.
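Rule-based validation is the common core of these strategies. In Snowflake, the checks would usually be written in SQL, for example inside a scheduled task or an alert condition. The Python sketch below only illustrates the pattern of counting rule violations per batch. The rule names, columns, and allowed currencies are hypothetical.

```python
from typing import Any, Callable

Row = dict[str, Any]
Rule = Callable[[Row], bool]

# Hypothetical rules for an orders feed: each returns True when the row is valid.
RULES: dict[str, Rule] = {
    "order_id_not_null": lambda row: row.get("order_id") is not None,
    "amount_non_negative": lambda row: isinstance(row.get("amount"), (int, float))
    and row["amount"] >= 0,
    "currency_known": lambda row: row.get("currency") in {"USD", "EUR", "GBP"},
}


def count_violations(rows: list[Row], rules: dict[str, Rule] = RULES) -> dict[str, int]:
    """Return how many rows fail each rule."""
    violations = {name: 0 for name in rules}
    for row in rows:
        for name, rule in rules.items():
            if not rule(row):
                violations[name] += 1
    return violations
```

A pipeline could then fail the batch, or raise an alert, when any count exceeds an agreed threshold.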

An ideal response should highlight a combination of automated and manual processes for maintaining data quality. Look for candidates who can provide examples of how they've implemented these strategies in their projects.

7. How do you monitor and manage Snowflake costs?

Monitoring and managing Snowflake costs involves using the platform's built-in tools to track resource usage and set up cost-control measures. This includes monitoring virtual warehouse usage, setting up resource monitors, and querying the usage views in the SNOWFLAKE.ACCOUNT_USAGE schema (for example, WAREHOUSE_METERING_HISTORY) for detailed billing and usage data.

Candidates should also mention the importance of optimizing storage and compute resources to avoid unnecessary costs. This includes cleaning up unused tables, optimizing query performance, and scaling virtual warehouses based on demand.
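Resource monitors are a concrete cost control. The Python sketch below builds a monitor with a monthly credit quota that notifies at 80 percent and suspends the warehouse at 100 percent. It also includes a simplified check of which thresholds current usage has crossed. The monitor and warehouse names and the quota are hypothetical, and creating resource monitors typically requires the ACCOUNTADMIN role.

```python
def resource_monitor_sql(name: str, credit_quota: int, warehouse: str,
                         notify_pct: int = 80, suspend_pct: int = 100) -> list[str]:
    """Create a monthly resource monitor and attach it to one warehouse."""
    return [
        (f"CREATE RESOURCE MONITOR {name} WITH CREDIT_QUOTA = {credit_quota} "
         f"FREQUENCY = MONTHLY START_TIMESTAMP = IMMEDIATELY "
         f"TRIGGERS ON {notify_pct} PERCENT DO NOTIFY "
         f"ON {suspend_pct} PERCENT DO SUSPEND;"),  # SUSPEND lets running queries finish
        f"ALTER WAREHOUSE {warehouse} SET RESOURCE_MONITOR = {name};",
    ]


def crossed_triggers(credits_used: float, credit_quota: float,
                     triggers: list[tuple[int, str]]) -> list[str]:
    """Actions whose percentage threshold the usage so far has reached (simplified)."""
    used_pct = 100 * credits_used / credit_quota
    return [action for threshold, action in sorted(triggers) if used_pct >= threshold]
```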

Look for candidates who can discuss their approach to cost management and provide examples of how they've successfully reduced costs in their previous work. An ideal answer should demonstrate a proactive approach to cost optimization.

8. Can you describe how Snowflake integrates with other data tools and platforms?

Snowflake offers robust integration capabilities with various data tools and platforms, including ETL tools, BI tools, and cloud storage services. This allows organizations to seamlessly move data in and out of Snowflake, enabling comprehensive data analysis and reporting.

Candidates should mention specific integrations they've worked with, such as connecting Snowflake with Tableau for data visualization or using Apache Kafka for real-time data streaming.

Look for candidates who can discuss their experience with different integrations and provide examples of how these integrations have benefited their projects. An ideal answer should demonstrate a solid understanding of Snowflake's interoperability.

9. What are the key differences between Snowflake's Standard and Enterprise editions?

Snowflake offers different editions, each tailored to specific needs. Standard Edition provides the core platform, including data storage, querying, Time Travel (up to 1 day), and secure data sharing. Enterprise Edition adds features such as multi-cluster warehouses, extended Time Travel (up to 90 days), materialized views, the search optimization service, and column- and row-level security policies.

Candidates may also mention that higher editions, such as Business Critical, add stronger security and compliance capabilities for regulated data (for example, HIPAA and PCI DSS). These make them suitable for larger organizations with more complex data needs.

Look for candidates who can articulate these differences and explain why an organization might choose one edition over the other. An ideal answer should demonstrate an understanding of the trade-offs between cost and features.

10. How do you approach data migration to Snowflake from an on-premises data warehouse?

Data migration to Snowflake involves several key steps, including planning, data extraction, data transformation, and data loading. It's crucial to develop a detailed migration plan that outlines each step and identifies potential challenges.

Candidates should mention the importance of data validation and testing throughout the migration process to ensure data integrity. Using Snowflake's built-in tools and third-party ETL tools can streamline the migration and minimize downtime.
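The validation step can be sketched as comparing, for each table, the row count and an order-independent checksum between source and target. This Python version assumes both sides have already been extracted into comparable Python values. Types such as decimals and timestamps would need normalizing first. The function names are hypothetical, and in practice the same idea is often expressed as aggregate queries run on each system.

```python
import hashlib
from typing import Any, Iterable, Sequence

_MASK_64 = (1 << 64) - 1


def table_fingerprint(rows: Iterable[Sequence[Any]]) -> tuple[int, int]:
    """Row count plus an order-independent checksum: the sum of per-row SHA-256 prefixes mod 2**64."""
    count, checksum = 0, 0
    for row in rows:
        digest = hashlib.sha256(repr(tuple(row)).encode("utf-8")).digest()
        checksum = (checksum + int.from_bytes(digest[:8], "big")) & _MASK_64
        count += 1
    return count, checksum


def mismatched_tables(source: dict[str, list], target: dict[str, list]) -> list[str]:
    """Names of tables whose fingerprints differ, or that exist on only one side."""
    names = sorted(set(source) | set(target))
    return [name for name in names
            if name not in source or name not in target
            or table_fingerprint(source[name]) != table_fingerprint(target[name])]
```

A sum is used instead of XOR so that duplicate rows do not cancel each other out.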

Look for candidates who can discuss their experience with data migration projects and provide examples of successful migrations. An ideal answer should highlight a structured approach and a focus on data quality and minimal disruption.

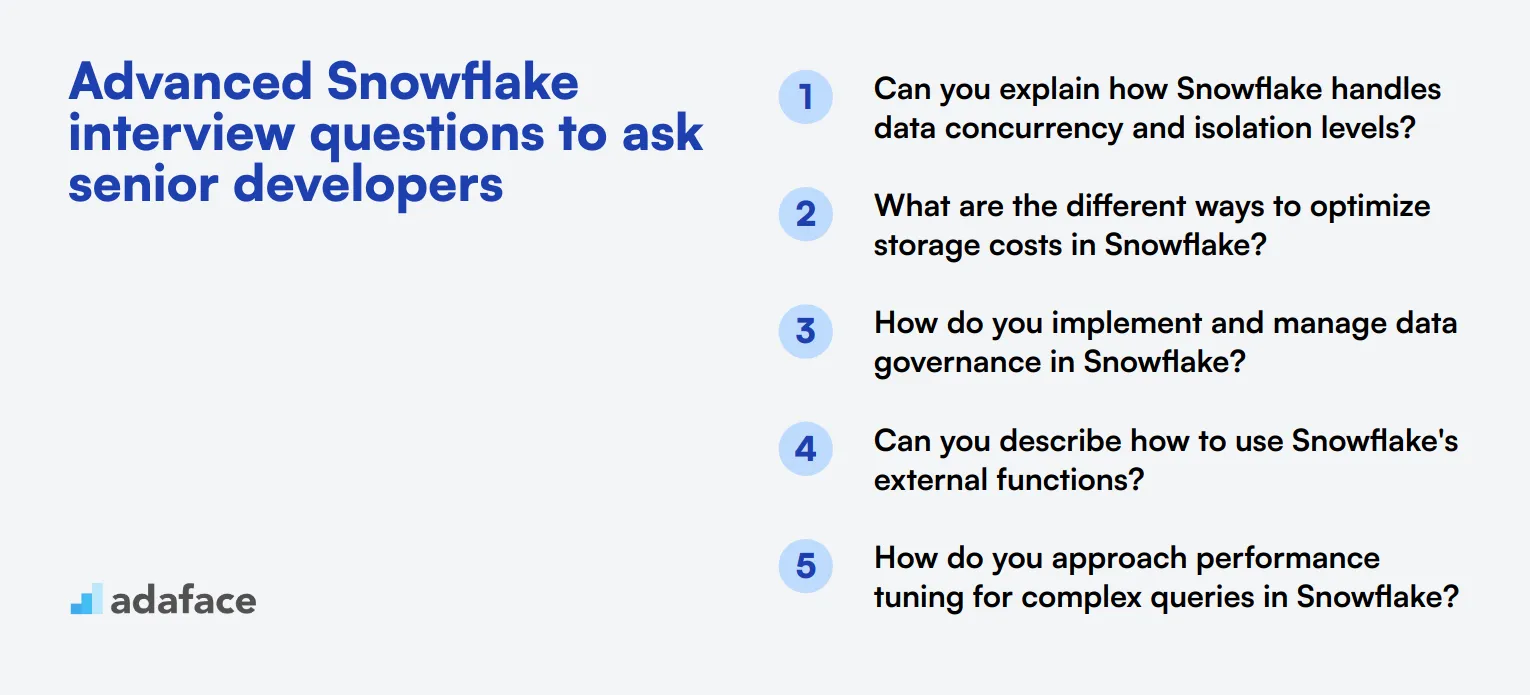

15 advanced Snowflake interview questions to ask senior developers

To assess whether your candidates have the advanced skills needed for complex Snowflake work, use these interview questions. They dig into the intricacies of Snowflake's capabilities and workflows, helping you identify top talent for a senior Snowflake developer role.

- Can you explain how Snowflake handles data concurrency and isolation levels?

- What are the different ways to optimize storage costs in Snowflake?

- How do you implement and manage data governance in Snowflake?

- Can you describe how to use Snowflake's external functions?

- How do you approach performance tuning for complex queries in Snowflake?

- What strategies do you use to manage large datasets in Snowflake?

- Can you explain the difference between Snowflake's secure views and regular views?

- How do you utilize Snowflake's task scheduling for data transformations?

- What is the role of the Snowflake Information Schema, and how do you use it?

- Can you describe how to set up a multi-cloud architecture with Snowflake?

- What methods do you use to automate data loading processes in Snowflake?

- How do you handle schema evolution in Snowflake?

- Can you explain the concept of materialized views in Snowflake and their use cases?

- How do you optimize query performance using clustering keys in Snowflake?

- What are the implications of using Snowpipe for continuous data loading in Snowflake?

8 Snowflake interview questions and answers related to data warehousing

To find out if candidates have the essential data warehousing knowledge for Snowflake, use these interview questions. They are designed to help you gauge their understanding and experience without getting too deep into technical jargon.

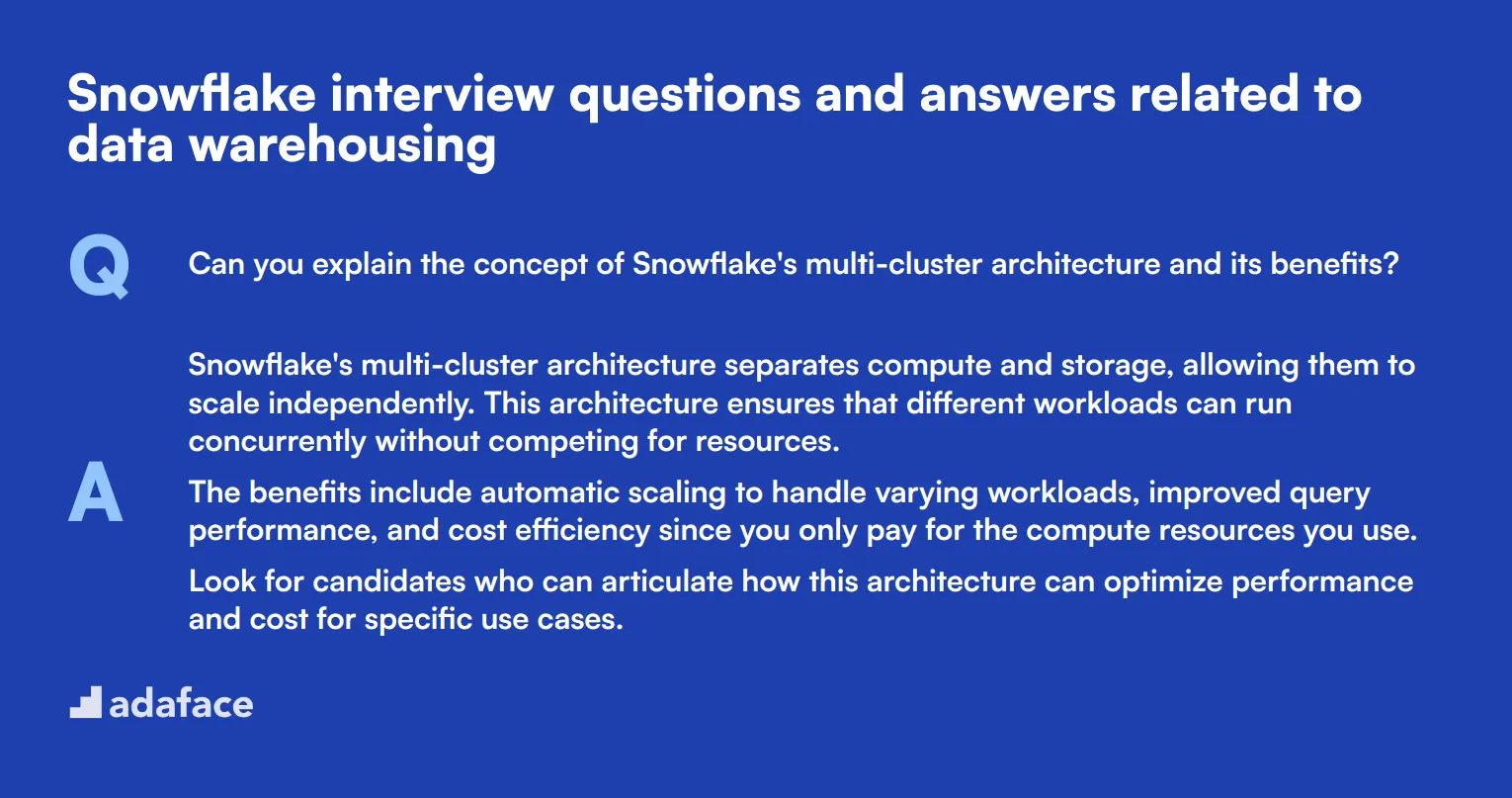

1. Can you explain the concept of Snowflake's multi-cluster architecture and its benefits?

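Snowflake's multi-cluster, shared data architecture separates compute and storage, allowing them to scale independently. Each workload can run on its own virtual warehouse against the same shared data, so different workloads run concurrently without competing for resources.

The benefits include automatic scaling to handle varying workloads, since multi-cluster warehouses can add and remove clusters on demand. Other benefits are improved query performance and cost efficiency, because compute is billed per second (with a 60-second minimum) only while warehouses are running.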

Look for candidates who can articulate how this architecture can optimize performance and cost for specific use cases.

2. How does Snowflake support data warehousing in terms of scalability and concurrency?

Snowflake supports scalability and concurrency through its multi-cluster architecture. Multi-cluster warehouses (Enterprise Edition and above) can automatically add or remove compute clusters based on the workload, keeping performance consistent.

Additionally, Snowflake's architecture allows multiple users to run queries simultaneously without affecting each other's performance, thanks to its ability to isolate workloads.

Ideal responses should show an understanding of how Snowflake's architecture provides high concurrency and scalability. Examples of real-world scenarios are a plus.

3. What is the role of data sharing in Snowflake and how can it benefit organizations?

Data sharing in Snowflake allows organizations to share live data across different accounts without the need to move or copy data. This feature is secure and ensures real-time access to shared data.

Benefits include faster data access, reduced storage costs, and the ability to share data with partners, vendors, or within different departments seamlessly.

Candidates should explain how data sharing can simplify collaboration and improve data-driven decision-making processes within an organization.

4. How does Snowflake ensure data consistency and reliability in its data warehousing solutions?

Snowflake ensures data consistency and reliability through its ACID-compliant transactions, which guarantee that all transactions are processed reliably and follow the principles of atomicity, consistency, isolation, and durability.

Additionally, Snowflake includes features such as zero-copy cloning, Time Travel, and Fail-safe, which provide data recovery and historical data analysis capabilities.

Look for candidates who understand these concepts and can explain how they contribute to maintaining data integrity and reliability.

5. Discuss the importance of data partitioning in Snowflake and how it affects performance.

Data partitioning in Snowflake is achieved through micro-partitions, which are automatically created and maintained by the system. These micro-partitions help in organizing and optimizing data storage.

Effective data partitioning leads to improved query performance because it allows Snowflake to scan only the relevant micro-partitions, reducing the amount of data processed.

Candidates should emphasize the significance of micro-partitions in performance optimization and give examples of how well-clustered data has improved query performance in their work.

6. What strategies can be used to optimize query performance in Snowflake?

Several strategies can optimize query performance in Snowflake, including proper data partitioning, using clustering keys, and optimizing SQL queries. Clustering keys help organize similar data together, which can significantly improve query performance.

Other strategies include avoiding unnecessary data transformations, leveraging materialized views, and using Snowflake's query profiling tools to identify and address performance bottlenecks.

Look for candidates who can explain these strategies and provide examples of when and how they have successfully implemented them to enhance query performance.

7. Can you explain the role of data warehousing in business intelligence and how Snowflake supports it?

Data warehousing plays a crucial role in business intelligence by providing a centralized repository for data from various sources, enabling comprehensive analysis and reporting.

Snowflake supports business intelligence by offering features like data sharing, real-time data access, and seamless integration with BI tools. Its scalable architecture ensures that analytical workloads can run efficiently without performance degradation.

Candidates should be able to discuss the connection between data warehousing and business intelligence and how Snowflake's features facilitate effective BI operations.

8. How does Snowflake handle data security and compliance for data warehousing?

Snowflake provides robust data security and compliance through features like end-to-end encryption, role-based access control, and detailed auditing capabilities. It also complies with various industry standards and regulations, such as GDPR and HIPAA.

The platform includes additional security measures like network policies, multi-factor authentication, and support for external tokenization.

Look for candidates who understand these security features and can explain their importance in maintaining data integrity and compliance in a data warehousing environment.

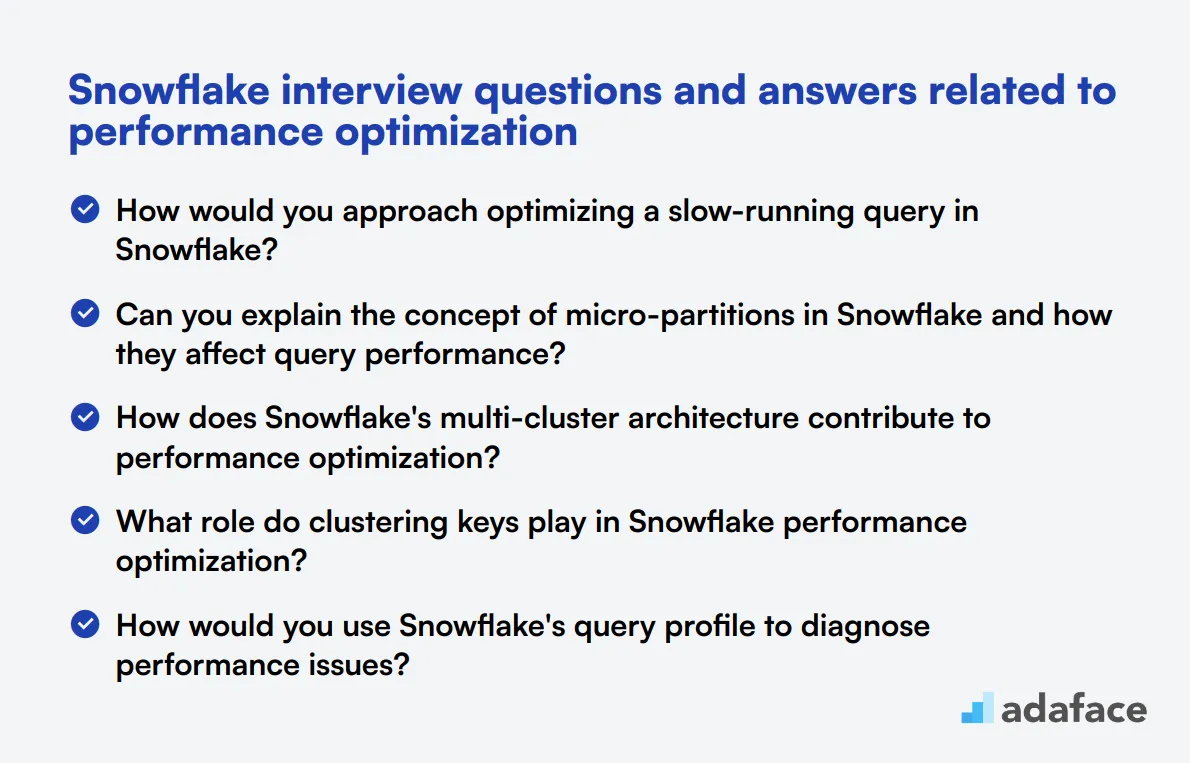

6 Snowflake interview questions and answers related to performance optimization

To assess a candidate's expertise in Snowflake performance optimization, consider asking these insightful questions. They'll help you gauge whether your applicants have the right skills to tackle complex data engineering challenges and optimize Snowflake's powerful features for maximum efficiency.

1. How would you approach optimizing a slow-running query in Snowflake?

When optimizing a slow-running query in Snowflake, I would follow these steps:

- Analyze the query execution plan using EXPLAIN or QUERY_HISTORY to identify bottlenecks.

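- Check how effectively the query prunes micro-partitions. Standard Snowflake tables have no traditional indexes, so consider an appropriate clustering key or the search optimization service instead.

- Evaluate the need for materialized views for frequently accessed data.

- Optimize JOIN operations by filtering early, joining on appropriate keys, and avoiding exploding (many-to-many) joins.

- Consider using RESULT_SCAN to reuse the result of a previous query instead of recomputing it.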

- Leverage Snowflake's automatic query optimization features.

Look for candidates who demonstrate a structured approach to query optimization. They should show familiarity with Snowflake's specific features and tools for performance tuning. Follow up by asking for examples of challenging queries they've optimized in the past.

2. Can you explain the concept of micro-partitions in Snowflake and how they affect query performance?

Micro-partitions in Snowflake are automatically created, immutable units of storage. Each micro-partition contains between 50 MB and 500 MB of uncompressed data, which is then compressed for storage. Snowflake uses these micro-partitions to optimize query performance in several ways:

- Pruning: Snowflake can quickly eliminate irrelevant micro-partitions during query execution.

- Data clustering: Related data is stored together, improving scan efficiency.

- Parallel processing: Multiple micro-partitions can be processed simultaneously.

- Metadata caching: Information about micro-partitions is cached for faster access.
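Pruning can be modeled in a few lines: keep only the micro-partitions whose stored min/max range for the filtered column overlaps the predicate, and skip the rest. This is a simplified Python sketch with hypothetical partition metadata for a single integer column and a BETWEEN-style filter. Snowflake's actual pruning handles many more predicate types.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MicroPartition:
    partition_id: int
    min_value: int  # smallest value of the filtered column in this partition
    max_value: int  # largest value of the filtered column in this partition


def partitions_to_scan(partitions: list[MicroPartition], low: int, high: int) -> list[int]:
    """IDs of partitions that may hold rows with low <= value <= high; all others are pruned."""
    return [p.partition_id for p in partitions
            if p.max_value >= low and p.min_value <= high]
```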

A strong candidate should be able to explain how micro-partitions contribute to Snowflake's performance. Look for understanding of concepts like pruning and clustering, and how these features can be leveraged in query optimization strategies.

3. How does Snowflake's multi-cluster architecture contribute to performance optimization?

Snowflake's multi-cluster architecture allows for automatic and seamless scaling of compute resources. This architecture consists of virtual warehouses that can be scaled up or out as needed:

- Scale up: Increase the size of a single warehouse for more complex queries.

- Scale out: Add clusters to a multi-cluster warehouse, or add separate warehouses, to handle more concurrent queries.

- Auto-scaling: Multi-cluster warehouses in auto-scale mode can automatically add or remove clusters based on workload.

- Resource isolation: Different workloads can be assigned to separate warehouses.
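A deliberately simplified model shows how minimum and maximum cluster settings bound scale-out. It makes three assumptions:

- Each cluster runs up to a fixed number of queries at once. The default of 8 comes from MAX_CONCURRENCY_LEVEL, which is treated here as a hard limit even though the real parameter is not one.
- The warehouse runs enough clusters for the load, within its minimum and maximum cluster counts.
- Any remaining queries wait in a queue.

Snowflake's real scaling policies (Standard and Economy) react to queuing over time and are more nuanced. The function name is hypothetical.

```python
import math


def clusters_and_queue(concurrent_queries: int, min_clusters: int, max_clusters: int,
                       max_concurrency: int = 8) -> tuple[int, int]:
    """Simplified multi-cluster model: run enough clusters for the load within
    [min_clusters, max_clusters] and report how many queries must queue."""
    needed = math.ceil(concurrent_queries / max_concurrency)
    clusters = max(min_clusters, min(max_clusters, needed))
    queued = max(0, concurrent_queries - clusters * max_concurrency)
    return clusters, queued
```

Under this model, queries queue only once the maximum cluster count is reached. That queuing is the signal that the warehouse needs a higher cap or a larger size.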

An ideal response should demonstrate understanding of how this architecture enables performance optimization. Look for candidates who can explain how to leverage multi-cluster features for different types of workloads and query patterns. Ask follow-up questions about their experience in configuring and managing virtual warehouses for optimal performance.

4. What role do clustering keys play in Snowflake performance optimization?

Clustering keys in Snowflake play a crucial role in organizing data within micro-partitions to optimize query performance. When data is clustered effectively:

- Query pruning becomes more efficient, as Snowflake can quickly identify and skip irrelevant micro-partitions.

- Data locality is improved, reducing the amount of data scanned for queries.

- Join operations can be more efficient when joining tables on clustered columns.

- Range-based queries on clustered columns perform faster.
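The effect of a clustering key can be illustrated by comparing how much micro-partition value ranges overlap before and after data is sorted on the key. The Python sketch below uses a simplified overlap metric with hypothetical values and partition sizes. It is similar in spirit to what SYSTEM$CLUSTERING_DEPTH reports but does not follow its exact definition.

```python
def partition_ranges(values: list[int], rows_per_partition: int) -> list[tuple[int, int]]:
    """Split values, in load order, into fixed-size partitions and record each one's (min, max)."""
    chunks = [values[i:i + rows_per_partition]
              for i in range(0, len(values), rows_per_partition)]
    return [(min(chunk), max(chunk)) for chunk in chunks]


def average_overlap(ranges: list[tuple[int, int]]) -> float:
    """Average number of partitions whose range overlaps each partition (itself included).
    1.0 means fully separated ranges; higher values mean more overlap and weaker pruning."""
    def overlaps(a: tuple[int, int], b: tuple[int, int]) -> bool:
        return a[0] <= b[1] and b[0] <= a[1]
    return sum(sum(overlaps(r, other) for other in ranges) for r in ranges) / len(ranges)


if __name__ == "__main__":
    loaded = [5, 25, 15, 1, 30, 10, 20, 3, 28]  # arrival order, no clustering
    print(average_overlap(partition_ranges(loaded, 3)))          # every partition overlaps
    print(average_overlap(partition_ranges(sorted(loaded), 3)))  # clustered on the key
```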

Look for candidates who understand that while Snowflake handles much of the optimization automatically, choosing appropriate clustering keys can significantly enhance performance for specific query patterns. They should be able to discuss strategies for selecting clustering keys based on common query patterns and data distribution.

5. How would you use Snowflake's query profile to diagnose performance issues?

Snowflake's query profile is a powerful tool for diagnosing performance issues. When using it, I would focus on:

- Identifying the most time-consuming operations in the query execution plan.

- Analyzing the amount of data scanned and the effectiveness of pruning.

- Checking for bytes spilled to local or remote storage, which usually indicates that the warehouse has too little memory for the operation.

- Evaluating the distribution of work across cluster nodes.

- Examining any unexpected full table scans or cartesian products.
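Several of these signals are also recorded per query in the SNOWFLAKE.ACCOUNT_USAGE.QUERY_HISTORY view, including PARTITIONS_SCANNED, PARTITIONS_TOTAL, and bytes spilled to local or remote storage. That makes it possible to automate a first pass over many queries before opening individual profiles. The Python sketch assumes rows fetched from that view as dictionaries. The thresholds and flag names are hypothetical.

```python
from typing import Any

QueryRow = dict[str, Any]


def triage(row: QueryRow, pruning_threshold: float = 0.8) -> list[str]:
    """Flag likely causes of slowness from one QUERY_HISTORY-style row."""
    flags = []
    total = row["PARTITIONS_TOTAL"]
    if total and row["PARTITIONS_SCANNED"] / total >= pruning_threshold:
        flags.append("poor_pruning")  # scanned most of the table's micro-partitions
    if row["BYTES_SPILLED_TO_REMOTE_STORAGE"] > 0:
        flags.append("remote_spill")  # most severe: consider a larger warehouse
    elif row["BYTES_SPILLED_TO_LOCAL_STORAGE"] > 0:
        flags.append("local_spill")
    return flags


def slowest(rows: list[QueryRow], n: int = 5) -> list[QueryRow]:
    """Longest-running queries first (TOTAL_ELAPSED_TIME is in milliseconds)."""
    return sorted(rows, key=lambda r: r["TOTAL_ELAPSED_TIME"], reverse=True)[:n]
```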

A strong candidate should demonstrate familiarity with interpreting query profiles and translating that information into actionable optimization strategies. Look for responses that show a methodical approach to performance analysis and the ability to correlate profile information with specific query optimizations.

6. What strategies would you employ to optimize storage costs in Snowflake while maintaining query performance?

To optimize storage costs in Snowflake while maintaining query performance, I would consider the following strategies:

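- Set Time Travel retention (DATA_RETENTION_TIME_IN_DAYS) no longer than the business actually needs, since historical data is billed as storage.

- Use transient or temporary tables for staging and intermediate data. They have no Fail-safe period, which reduces storage overhead.

- Rely on Snowflake's automatic compression. All data is compressed by default, and there is no per-column compression setting to tune.

- Apply clustering and materialized views selectively, since both improve query performance at the cost of extra credits and storage.

- Use zero-copy cloning for development and test environments instead of physically copying data.

- Regularly archive or purge unnecessary data.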

Look for candidates who can balance cost optimization with performance considerations. They should understand Snowflake's storage architecture and be able to explain how different storage strategies impact both costs and query performance. Follow up by asking about their experience in implementing such strategies in real-world scenarios.

Which Snowflake skills should you evaluate during the interview phase?

While it's impossible to assess every aspect of a candidate's Snowflake expertise in a single interview, focusing on core skills can provide valuable insights. The following key areas are particularly important when evaluating Snowflake proficiency.

SQL and Data Warehousing

SQL proficiency is fundamental for working with Snowflake. A strong grasp of SQL and data warehousing concepts enables efficient data manipulation and analysis within Snowflake's environment.

Consider using an SQL assessment test with relevant MCQs to evaluate candidates' SQL skills. This can help identify the candidates with strong foundational knowledge.

During the interview, ask targeted questions to gauge the candidate's understanding of SQL in the context of Snowflake. Here's an example:

Can you explain the difference between a Snowflake table and a view, and when you would use each?

Look for answers that demonstrate understanding of Snowflake's data storage structure, query optimization, and use cases for tables versus views. A strong candidate will explain how views can be used for data security and simplifying complex queries.

Snowflake Architecture

Understanding Snowflake's unique architecture is key to leveraging its full potential. This includes knowledge of its cloud-based, separated compute and storage model.

Utilize a Snowflake-specific assessment to evaluate candidates' understanding of Snowflake's architecture and features.

To assess this skill during the interview, consider asking:

How does Snowflake's architecture differ from traditional data warehouses, and what advantages does it offer?

Strong responses will highlight Snowflake's separation of compute and storage, its ability to scale independently, and the benefits of its cloud-native design. Look for mentions of improved performance, cost-efficiency, and concurrent querying capabilities.

Performance Optimization

Optimizing Snowflake performance is crucial for efficient data processing and cost management. This skill involves understanding Snowflake's unique features and best practices for query optimization.

To evaluate a candidate's ability to optimize Snowflake performance, you might ask:

What strategies would you use to improve the performance of a slow-running query in Snowflake?

Look for answers that include techniques such as proper clustering, materialized views, query result caching, and appropriate warehouse sizing. A strong candidate will also mention the importance of analyzing query plans and leveraging Snowflake's built-in performance optimization features.

Streamline Your Snowflake Hiring Process with Skills Tests and Targeted Interviews

When hiring for Snowflake positions, it's important to verify candidates' skills accurately. This ensures you bring on board team members who can contribute effectively to your data projects.

The most efficient way to assess Snowflake skills is through online skills tests. Consider using a Snowflake online assessment to evaluate candidates' proficiency objectively.

After candidates complete the skills test, you can shortlist the top performers for interviews. This two-step process helps you focus on the most promising applicants, saving time and resources.

Ready to improve your Snowflake hiring process? Sign up to access Adaface's comprehensive assessment platform and start finding the best Snowflake talent for your team.


Snowflake Interview Questions FAQs

The post covers basic, junior, intermediate, advanced, data warehousing, and performance optimization questions for Snowflake interviews.

The post offers 10 basic, 20 junior, 10 intermediate, and 15 advanced Snowflake interview questions.

Yes, the post includes 8 questions on data warehousing and 6 on performance optimization in Snowflake.

These questions help assess candidates' Snowflake knowledge across various levels and topics, enabling more targeted and effective interviews.
