Spark developers are responsible for processing large datasets and ensuring data flows smoothly through various stages of data pipelines. They work with Apache Spark, a powerful open-source processing engine, to handle big data tasks efficiently and effectively.

Skills required for a Spark developer include proficiency in programming languages like Scala or Python, a deep understanding of distributed computing, and experience with big data tools and frameworks. Additionally, problem-solving abilities and strong analytical skills are crucial for success in this role.

Candidates can list these skills on their resumes, but you can’t verify them without skill tests that mirror on-the-job Spark work.

In this post, we will explore 8 essential Spark Developer skills, 9 secondary skills and how to assess them so you can make informed hiring decisions.


8 fundamental Spark Developer skills and traits

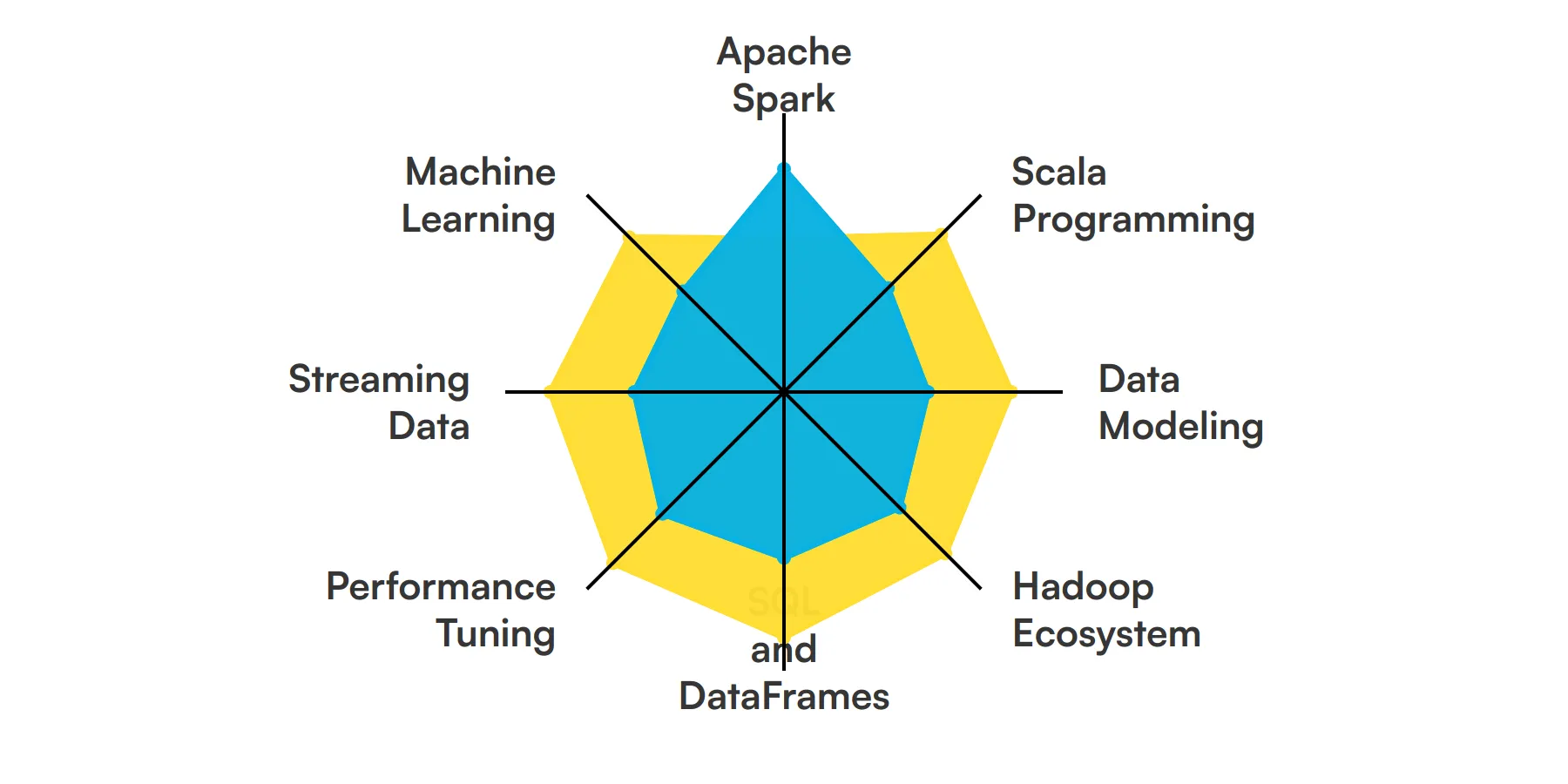

The best skills for Spark Developers include Apache Spark, Scala Programming, Data Modeling, Hadoop Ecosystem, SQL and DataFrames, Performance Tuning, Streaming Data and Machine Learning.

Let’s dive into the details by examining the 8 essential skills of a Spark Developer.

Apache Spark

Apache Spark is a unified analytics engine for large-scale data processing. A Spark developer utilizes this tool to process vast datasets quickly using Spark’s in-memory computing capabilities. This skill is fundamental for handling batch and real-time data processing tasks efficiently.

For more insights, check out our guide to writing a Spark Developer Job Description.

Scala Programming

Scala is often the language of choice for Spark applications due to its functional programming features and seamless compatibility with Spark. Developers use Scala to write concise, complex algorithms that run on Spark, leveraging its ability to handle immutable data and concurrency.

Data Modeling

Understanding data structures and designing schemas are core parts of a Spark developer's work. This skill involves organizing data in ways that optimize processing in Spark environments, which is crucial for effective data manipulation and retrieval in analytics applications.

Check out our guide for a comprehensive list of interview questions.

Hadoop Ecosystem

Knowledge of the Hadoop ecosystem, including HDFS, YARN, and MapReduce, is important as Spark often runs on top of Hadoop. This skill helps in managing data storage and resource allocation for Spark jobs, ensuring optimal performance.

SQL and DataFrames

Proficiency in SQL and using Spark DataFrames is essential for performing complex data analysis. Spark developers leverage SQL for structured data processing and DataFrames for efficient data manipulation and querying within Spark applications.

For more insights, check out our guide to writing a SQL Developer Job Description.

Performance Tuning

A Spark developer must be adept at tuning Spark applications to enhance performance. This includes optimizing configurations, managing memory usage, and fine-tuning data serialization and task partitioning to reduce processing time and resource consumption.
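As a rough illustration of the task-partitioning part of tuning, the sketch below estimates a partition count from dataset size and cluster cores. It is plain Python, not a Spark API: the function name `suggest_partitions` and its parameters are hypothetical. The 128 MiB target mirrors the default of Spark's `spark.sql.files.maxPartitionBytes` setting, and the floor of two tasks per core follows the low end of the 2-3 tasks per core suggested in Spark's tuning guide. Treat both as starting assumptions to be checked against real job metrics.

```python
import math

# Assumption: aim for about 128 MiB of data per partition, matching the
# default value of Spark's spark.sql.files.maxPartitionBytes setting.
TARGET_PARTITION_BYTES = 128 * 1024 * 1024


def suggest_partitions(dataset_bytes: int, total_cores: int,
                       tasks_per_core: int = 2,
                       target_bytes: int = TARGET_PARTITION_BYTES) -> int:
    """Suggest a partition count (a rule-of-thumb helper, not a Spark API)."""
    if dataset_bytes <= 0 or total_cores <= 0 or tasks_per_core <= 0:
        raise ValueError("all sizing inputs must be positive")
    # Enough partitions that each one holds at most target_bytes of data.
    by_size = math.ceil(dataset_bytes / target_bytes)
    # At least tasks_per_core partitions per core, so cores are kept busy.
    by_cores = total_cores * tasks_per_core
    return max(by_size, by_cores)


if __name__ == "__main__":
    fifty_gib = 50 * 1024**3
    # 50 GiB / 128 MiB = 400 partitions, which exceeds the 128-partition floor.
    print(suggest_partitions(fifty_gib, total_cores=64))
```

A candidate who understands tuning should be able to explain why a number like this is only a first guess: skewed keys, wide shuffles, and memory pressure can all call for a different partition count.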

Streaming Data

Handling streaming data using Spark Streaming or Structured Streaming is a key skill. Developers use these APIs to build scalable and fault-tolerant streaming applications that process data in real time, which is crucial for time-sensitive analytics tasks.

Machine Learning

Utilizing Spark’s MLlib for machine learning tasks is another important skill. This involves creating scalable machine learning models directly within Spark, which is essential for developers working on predictive analytics and data mining projects.

Check out our guide for a comprehensive list of interview questions.

9 secondary Spark Developer skills and traits

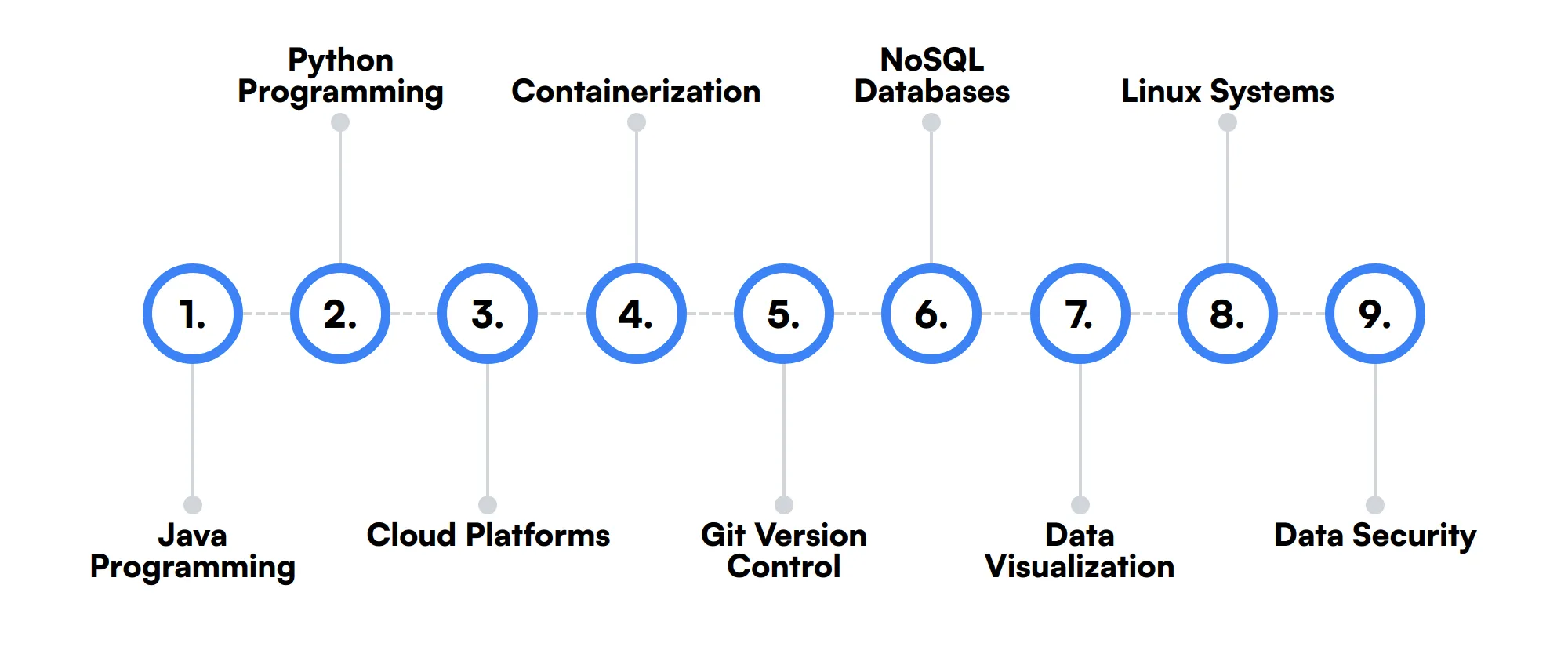

The best secondary skills for Spark Developers include Java Programming, Python Programming, Cloud Platforms, Containerization, Git Version Control, NoSQL Databases, Data Visualization, Linux Systems and Data Security.

Let’s dive into the details by examining the 9 secondary skills of a Spark Developer.

Java Programming

While Scala is preferred, Java is also commonly used with Spark. Java’s familiarity and extensive libraries make it a practical choice for Spark development in environments where Java expertise is prevalent.

Python Programming

Python is popular in the data science community and is supported by Spark through PySpark. It’s used for rapid prototyping of Spark applications and for integrating machine learning algorithms with ease.

Cloud Platforms

Familiarity with cloud services like AWS, Azure, or GCP is beneficial as many Spark deployments are moving to the cloud. This involves managing Spark clusters in cloud environments and optimizing data storage and compute resources.

Containerization

Knowledge of Docker and Kubernetes helps in deploying Spark within containers, providing scalability and ease of deployment across various environments, which is increasingly common in modern data architectures.

Git Version Control

Proficiency in Git for version control is necessary for managing Spark application codebases, especially in collaborative and continuous integration environments.

NoSQL Databases

Understanding NoSQL databases like Cassandra or MongoDB is useful for Spark developers when dealing with unstructured data or when performance and scalability beyond traditional relational databases are required.

Data Visualization

Skills in data visualization tools and libraries can enhance the ability to present data processed by Spark in a visually appealing and informative manner, which is crucial for data-driven decision making.

Linux Systems

Most Spark environments run on Linux, so familiarity with Linux operating systems and shell scripting can be very helpful for setting up and managing Spark clusters.

Data Security

Understanding data security practices relevant to Spark, such as encryption and secure data processing, is important to ensure that data analytics projects comply with legal and ethical standards.

How to assess Spark Developer skills and traits

Assessing the skills and traits of a Spark Developer involves a nuanced understanding of both technical prowess and analytical acumen. Spark Developers are integral to managing and analyzing big data efficiently, using a suite of skills that range from mastery of Apache Spark to expertise in machine learning and data modeling. Identifying the right candidate requires a deep dive into their practical and theoretical knowledge.

Traditional methods like reviewing resumes and conducting interviews might give you a glimpse into a candidate's background, but they fall short of revealing their real-time problem-solving capabilities. This is where practical assessments come into play. By incorporating skill assessments into your hiring process, you can measure a candidate's hands-on abilities in Scala programming, performance tuning, and more.

For a comprehensive evaluation, consider using Adaface assessments, which are designed to mirror the complexity and nature of Spark-related tasks. These assessments help streamline the screening process, ensuring you spend time only on the most promising candidates, potentially reducing your screening time by 85%.

Let’s look at how to assess Spark Developer skills with these 6 talent assessments.

Spark Online Test

Our Spark Online Test evaluates a candidate's ability to work with Apache Spark, a powerful open-source processing engine for big data. This test is designed to assess skills in transforming structured data, optimizing Spark jobs, and analyzing graph structures.

The test covers Spark Core fundamentals, developing and running Spark jobs in Java, Scala, and Python, RDDs, Spark SQL, DataFrames and Datasets, Spark Streaming, and running Spark on a cluster. It also includes iterative and multi-stage algorithms, tuning and troubleshooting Spark jobs, and the GraphX library for graph analysis.

Candidates who perform well demonstrate proficiency in converting big-data challenges into Spark scripts, optimizing jobs using partitioning and caching, and migrating data from various sources. They also show strong skills in real-time data processing and graph analysis.

Scala Online Test

Our Scala Online Test assesses a candidate's proficiency in the Scala programming language, which combines object-oriented and functional programming paradigms. This test is crucial for evaluating a candidate's ability to design and develop scalable applications using Scala.

The test covers Scala programming language, functional programming, object-oriented programming, collections, pattern matching, concurrency and parallelism, error handling, type inference, traits and mixins, higher-order functions, and immutable data structures. It also includes a coding question to evaluate hands-on Scala programming skills.

Successful candidates demonstrate a strong understanding of Scala's core concepts, syntax, and functional programming principles. They also show proficiency in using Scala test frameworks and designing efficient, scalable applications.

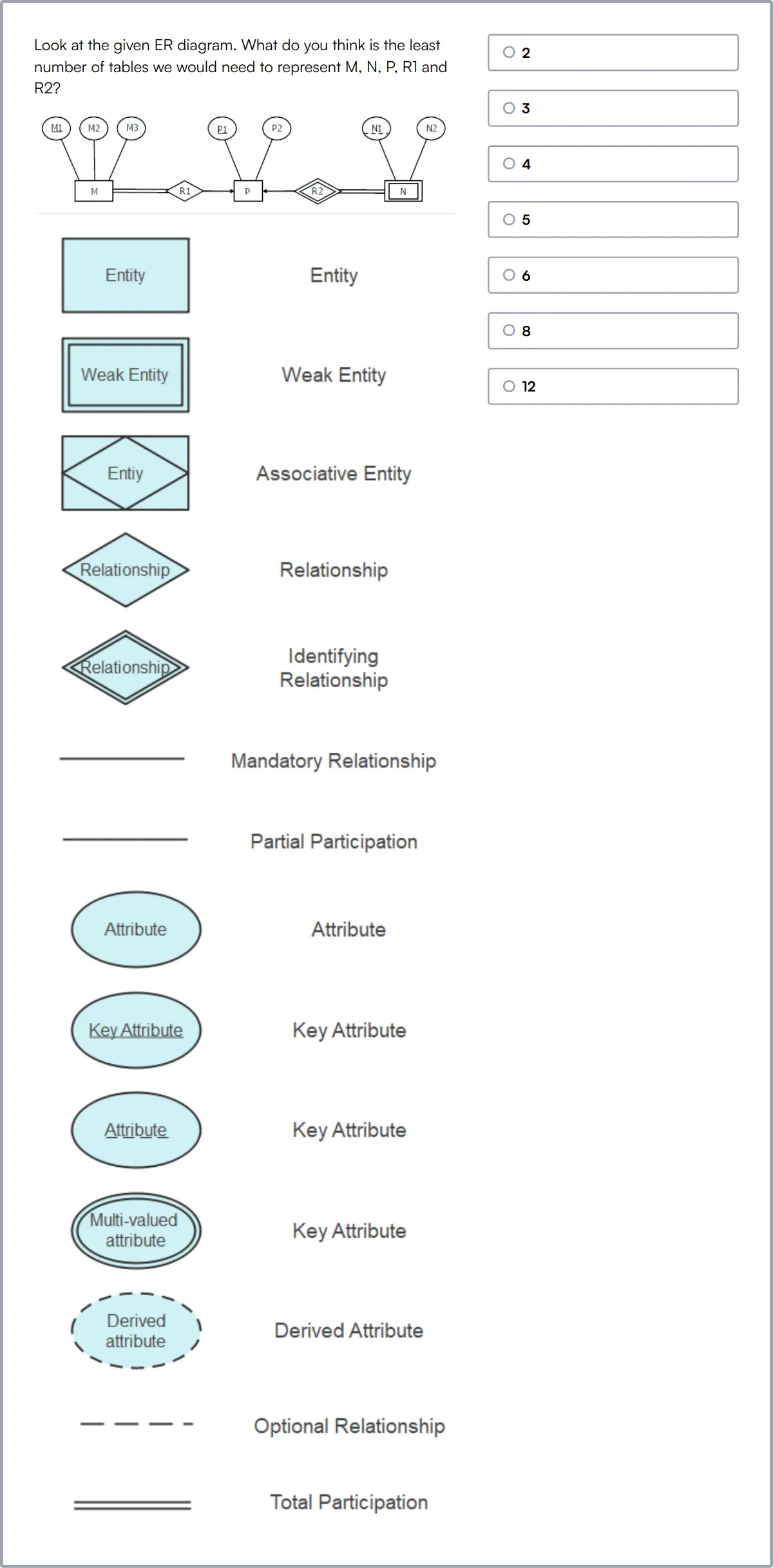

Data Modeling Skills Test

Our Data Modeling Skills Test evaluates a candidate's knowledge and abilities in database design and data modeling. This test is designed to assess skills in creating efficient and normalized database schemas.

The test covers data modeling, database design, SQL, ER diagrams, normalization, relational schema, data integrity, data mapping, data validation, and data transformation. It includes questions on both data modeling and SQL to provide a comprehensive assessment.

Candidates who perform well demonstrate proficiency in designing normalized database schemas, ensuring data integrity, and transforming data effectively. They also show strong skills in SQL and data warehousing.

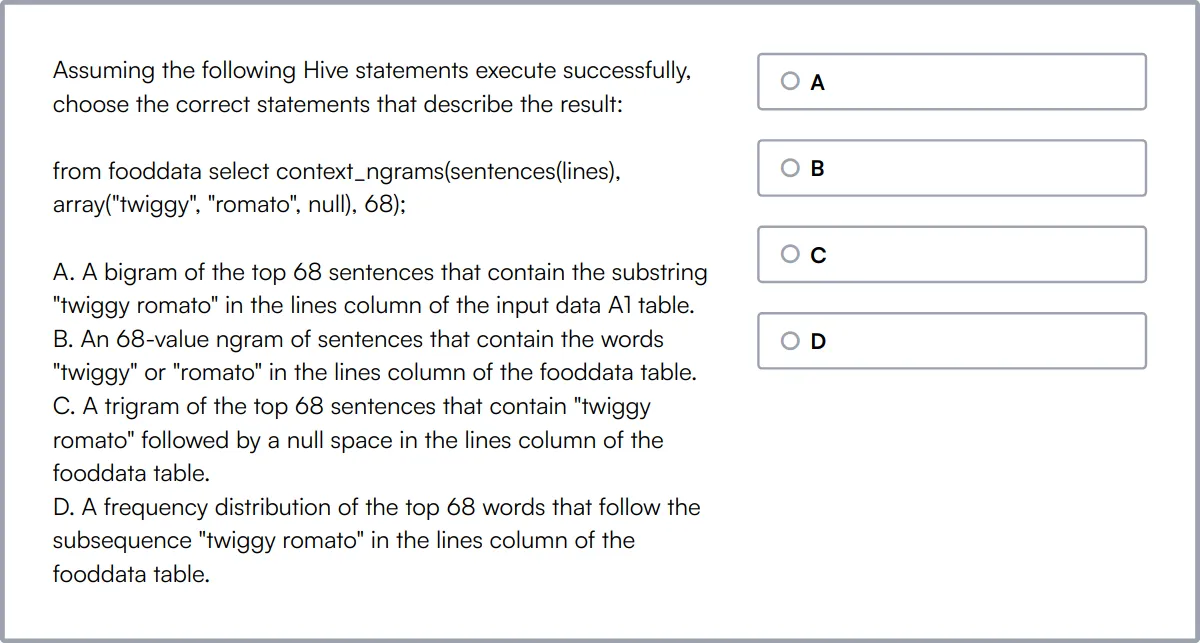

Hadoop Online Test

Our Hadoop Online Test evaluates a candidate's ability to work with the Hadoop ecosystem, a framework for distributed storage and processing of large data sets. This test is designed to assess skills in installing and configuring Hadoop clusters and running optimized MapReduce jobs.

The test covers installing and configuring Hadoop clusters, core Hadoop architecture (HDFS, YARN, MapReduce), writing efficient Hive and Pig queries, publishing data to clusters, handling streaming data, working with different file formats, and troubleshooting and monitoring.

Candidates who perform well demonstrate proficiency in managing Hadoop clusters, writing efficient data analysis queries, and handling streaming data. They also show strong skills in troubleshooting and monitoring Hadoop environments.

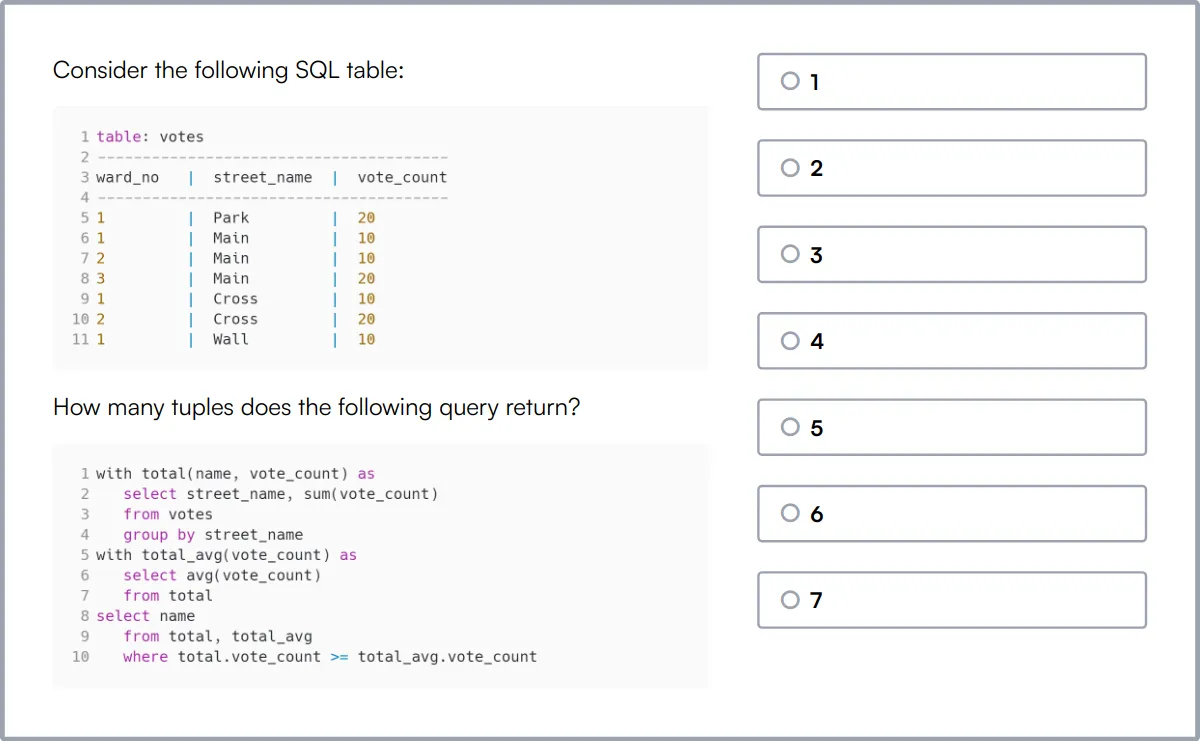

SQL Online Test

Our SQL Online Test evaluates a candidate's ability to design and build relational databases and tables from scratch. This test is designed to assess skills in writing efficient SQL queries and managing database operations.

The test covers creating databases, creating and deleting tables, CRUD operations on tables, joins and subqueries, conditional expressions and procedures, views, indexes, string functions, mathematical functions and timestamps, locks and transactions, and scale and security.

Candidates who perform well demonstrate proficiency in designing relational databases, writing efficient queries, and managing database operations. They also show strong skills in optimizing SQL queries and ensuring database security.
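To make "joins and subqueries" concrete, here is a minimal sketch of the kind of query a strong candidate should be able to write and explain. It is an illustration only, not a question taken from the test. It uses Python's built-in `sqlite3` module rather than Spark SQL so it runs anywhere, and the `customers` and `orders` tables and their rows are hypothetical.

```python
import sqlite3

# Hypothetical tables and rows, used only for illustration.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
    CREATE TABLE orders (
        id INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(id),
        amount REAL NOT NULL
    );
    INSERT INTO customers VALUES (1, 'Ada'), (2, 'Grace'), (3, 'Linus');
    INSERT INTO orders VALUES (1, 1, 120.0), (2, 1, 80.0), (3, 2, 40.0);
""")

# Join plus subquery: customers whose total spend is above the
# average amount of a single order.
query = """
    SELECT c.name, SUM(o.amount) AS total
    FROM customers AS c
    JOIN orders AS o ON o.customer_id = c.id
    GROUP BY c.id, c.name
    HAVING SUM(o.amount) > (SELECT AVG(amount) FROM orders)
    ORDER BY total DESC
"""
rows = conn.execute(query).fetchall()
conn.close()

print(rows)  # [('Ada', 200.0)]
```

Useful follow-up questions include why the customer with no orders is missing from the result (the inner join drops them) and how the query would change to include them.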

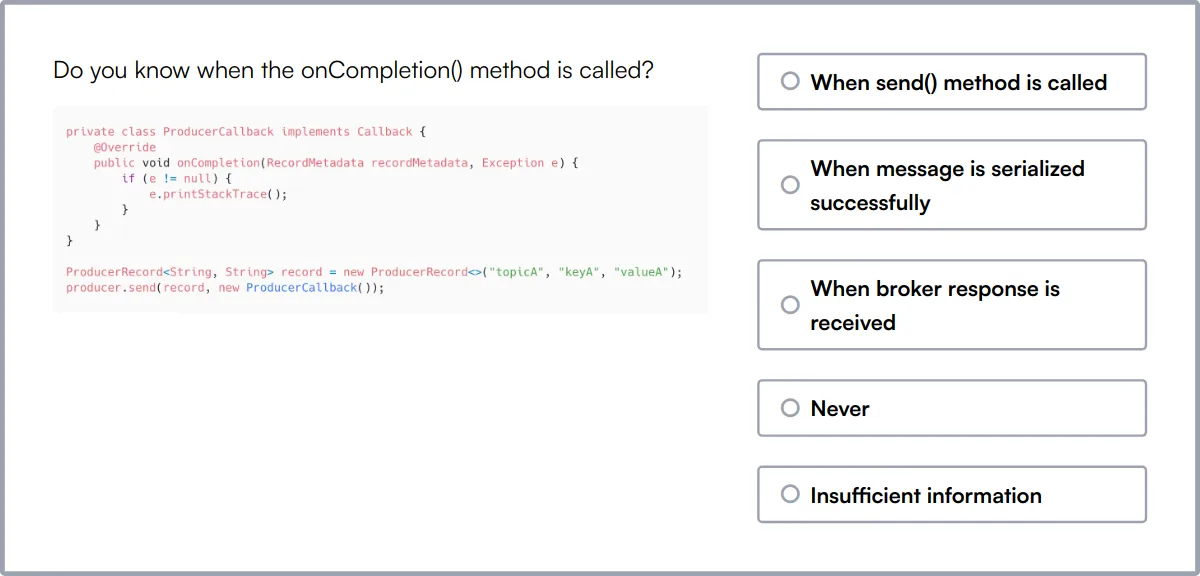

Kafka Online Test

Our Kafka Online Test evaluates a candidate's knowledge of Apache Kafka, a distributed event streaming platform. This test is designed to assess skills in working with message queues, stream processing, and distributed systems.

The test covers Kafka offsets, in-sync replicas, Kafka clusters, distributed systems, event streaming, message queues, data pipelines, stream processing, fault tolerance, scalability, and data replication.

Candidates who perform well demonstrate proficiency in designing and developing scalable and fault-tolerant messaging systems. They also show strong skills in Kafka producer and consumer workflows, partitioning, and replication.

Summary: The 8 key Spark Developer skills and how to test for them

| Spark Developer skill | How to assess them |
|---|---|
| 1. Apache Spark | Evaluate proficiency in processing large datasets using Spark's core functionalities. |
| 2. Scala Programming | Assess ability to write and optimize code in Scala for Spark applications. |
| 3. Data Modeling | Check skills in designing and implementing data models for analytics. |
| 4. Hadoop Ecosystem | Gauge familiarity with Hadoop tools and their integration with Spark. |
| 5. SQL and DataFrames | Measure expertise in querying and manipulating data using SQL and DataFrames. |
| 6. Performance Tuning | Evaluate capability to optimize Spark jobs for better performance. |
| 7. Streaming Data | Assess experience in handling real-time data streams with Spark Streaming. |
| 8. Machine Learning | Check knowledge of implementing machine learning algorithms using Spark MLlib. |


Spark Developer skills FAQs

What skills are necessary for a Spark Developer?

A Spark Developer should be proficient in Apache Spark, Scala or Python programming, and familiar with the Hadoop ecosystem. Skills in SQL, DataFrames, and performance tuning are also important. Knowledge of Java, machine learning, and data security adds value.

How can recruiters assess a candidate's proficiency in Apache Spark?

Recruiters can assess proficiency in Apache Spark by asking candidates to solve real-world problems using Spark, reviewing past projects, or conducting technical interviews that include coding challenges and scenario-based questions.

What is the role of Scala in Spark development?

Scala is often used in Spark development due to its functional programming features and seamless integration with Spark, allowing for more concise and readable code, which is crucial for processing large datasets efficiently.

Why is knowledge of the Hadoop ecosystem important for Spark developers?

Understanding the Hadoop ecosystem is important for Spark developers because Spark is often deployed alongside Hadoop components like HDFS for storage and YARN for resource management, enabling scalable and distributed data processing.

How important are SQL skills for a Spark Developer?

SQL skills are important for a Spark Developer as they allow the manipulation of structured data through Spark SQL and DataFrames, which simplifies data querying and can improve the performance of data processing applications.

What should recruiters look for when assessing knowledge in data security for Spark roles?

Recruiters should look for familiarity with secure data processing practices, knowledge of encryption and decryption techniques, and experience with security protocols and tools that protect data both in transit and at rest.

How can a candidate demonstrate expertise in performance tuning in Spark?

Candidates can demonstrate expertise in performance tuning by discussing specific techniques they've used to optimize Spark applications, such as adjusting Spark configurations, tuning resource allocation, and optimizing data serialization and storage formats.

What role does Python play in Spark development?

Python is used in Spark development for its simplicity and readability, making it a popular choice for data analysis and machine learning tasks within Spark ecosystems. PySpark, the Python API for Spark, enables developers to leverage Spark's capabilities using Python.
