Kafka Engineers play a crucial role in data management and streaming processes within a company. They ensure real-time data processing and integration across various systems, facilitating immediate data availability and decision-making capabilities.

Skills required for a Kafka Engineer include a deep understanding of Apache Kafka, proficiency in Java or Scala, and strong knowledge of system design and architecture. They also need analytical thinking and effective communication skills.

Candidates can list these abilities on their resumes, but you can’t verify them without on-the-job Kafka Engineer skill tests.

In this post, we will explore 9 essential Kafka Engineer skills, 10 secondary skills and how to assess them so you can make informed hiring decisions.

9 fundamental Kafka Engineer skills and traits

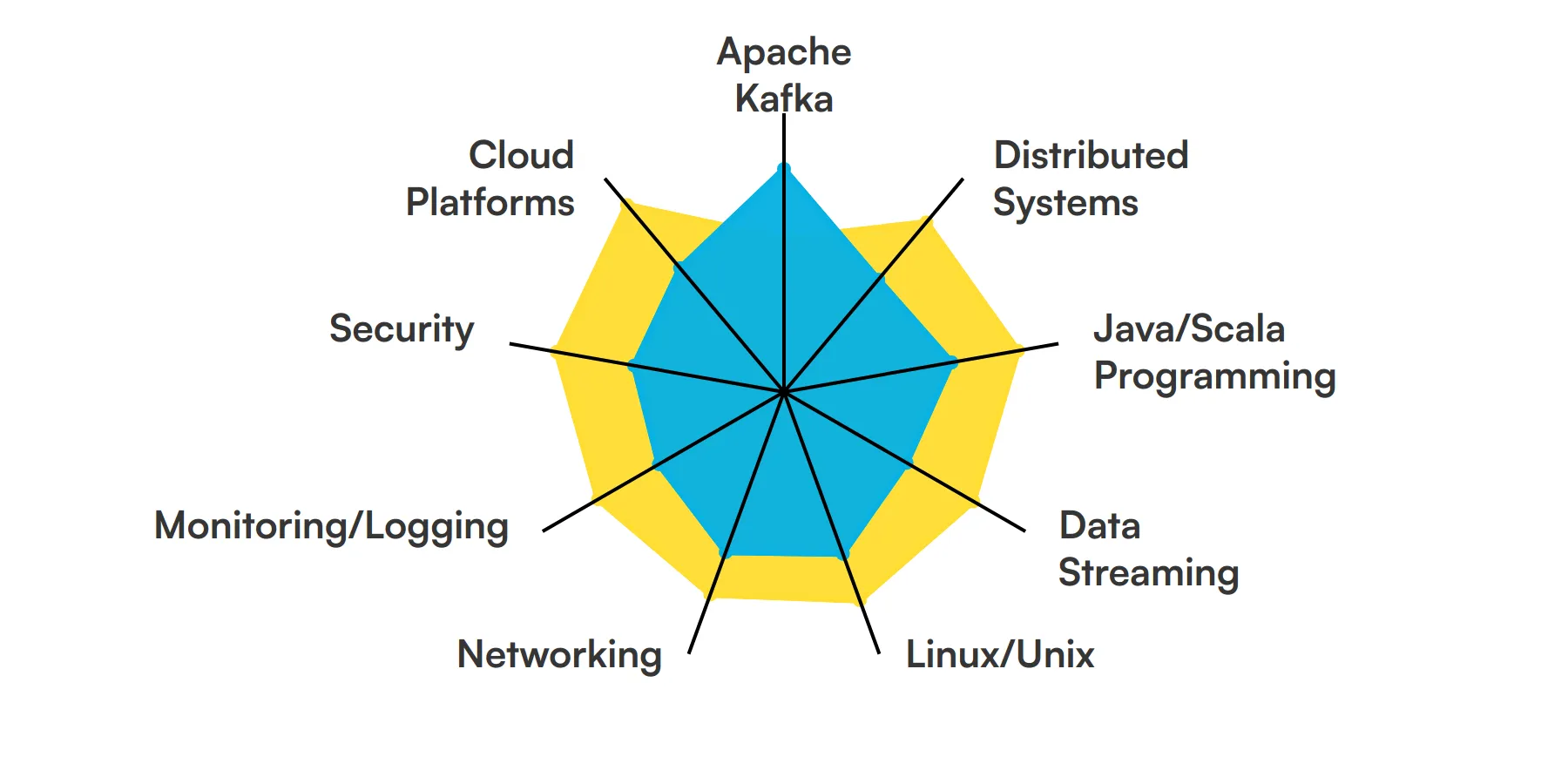

The best skills for Kafka Engineers include Apache Kafka, Distributed Systems, Java/Scala Programming, Data Streaming, Linux/Unix, Networking, Monitoring/Logging, Security and Cloud Platforms.

Let’s dive into the details by examining the 9 essential skills of a Kafka Engineer.

Apache Kafka

A Kafka Engineer must have a deep understanding of Apache Kafka, including its architecture, components, and how it handles data streams. This knowledge is crucial for setting up, managing, and troubleshooting Kafka clusters.

For more insights, check out our guide to writing a Kafka Engineer Job Description.

Distributed Systems

Knowledge of distributed systems is key for a Kafka Engineer. Kafka operates in a distributed environment, so understanding concepts like data replication, partitioning, and fault tolerance is essential for ensuring high availability and reliability.
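To make partitioning and replication concrete, here is a minimal Python sketch of the two ideas. It is an illustration only, not Kafka's implementation: the function names are hypothetical, CRC32 stands in for the hash Kafka's own partitioner uses, and the round-robin replica placement is a simplifying assumption.

```python
import zlib


def choose_partition(key: bytes, num_partitions: int) -> int:
    """Map a record key to a partition with a stable hash.

    Assumption: CRC32 is a stand-in here; it is not the hash Kafka uses.
    """
    return zlib.crc32(key) % num_partitions


def assign_replicas(num_partitions: int, brokers: list, replication_factor: int) -> dict:
    """Place each partition's replicas on distinct brokers, round-robin."""
    if replication_factor > len(brokers):
        raise ValueError("replication factor cannot exceed broker count")
    assignment = {}
    for partition in range(num_partitions):
        assignment[partition] = [
            brokers[(partition + offset) % len(brokers)]
            for offset in range(replication_factor)
        ]
    return assignment


# The same key always lands on the same partition, which preserves per-key ordering.
print(choose_partition(b"user-42", 6) == choose_partition(b"user-42", 6))
# Each partition gets two replicas on different brokers.
print(assign_replicas(3, [1, 2, 3], 2))
```

A candidate who understands this can explain why records with the same key stay in order and why losing one broker does not lose a partition when the replication factor is at least 2.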

Java/Scala Programming

Proficiency in Java or Scala is important as Kafka is primarily written in these languages. A Kafka Engineer will often need to write custom producers, consumers, and connectors, making strong programming skills a must.

Check out our guide for a comprehensive list of interview questions.

Data Streaming

Understanding data streaming concepts helps a Kafka Engineer design and implement real-time data pipelines. This includes knowledge of stream processing frameworks like Kafka Streams or Apache Flink.
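One streaming concept worth probing is windowed aggregation. The sketch below counts events per fixed, non-overlapping (tumbling) window in plain Python. It does not use the Kafka Streams or Flink APIs, the function name is hypothetical, and it assumes events arrive with a millisecond timestamp and ignores late or out-of-order data.

```python
from collections import defaultdict


def tumbling_window_counts(events, window_ms):
    """Count events per (window_start, key) for fixed, non-overlapping windows."""
    counts = defaultdict(int)
    for timestamp_ms, key in events:
        # Round the timestamp down to the start of its window.
        window_start = (timestamp_ms // window_ms) * window_ms
        counts[(window_start, key)] += 1
    return dict(counts)


events = [
    (1_000, "click"),
    (4_500, "click"),
    (5_200, "view"),
    (9_999, "click"),
    (10_000, "click"),
]
print(tumbling_window_counts(events, window_ms=5_000))
```

Real stream processors add state stores, watermarks, and fault tolerance on top of this idea, which is where a candidate's framework experience matters.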

Linux/Unix

Kafka typically runs on Linux/Unix systems, so familiarity with these operating systems is necessary. A Kafka Engineer should be comfortable with command-line tools, shell scripting, and system administration tasks.

For more insights, check out our guide to writing a Linux Administrator Job Description.

Networking

Strong networking skills are important for configuring and optimizing Kafka clusters. This includes understanding network protocols, security configurations, and performance tuning to ensure efficient data flow.

Monitoring/Logging

A Kafka Engineer must be adept at monitoring and logging to maintain the health of Kafka clusters. Tools like Prometheus, Grafana, and ELK stack are commonly used to track performance metrics and troubleshoot issues.
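Consumer lag is one of the most common metrics to track. As a rough sketch, lag per partition is the log end offset minus the consumer group's committed offset. The Python below assumes the offsets have already been collected into dictionaries; the function names and the alert threshold are hypothetical.

```python
def consumer_lag(log_end_offsets, committed_offsets):
    """Per-partition lag: log end offset minus the group's committed offset."""
    lag = {}
    for partition, end_offset in log_end_offsets.items():
        # Assumption: a partition with no committed offset is treated as unread.
        committed = committed_offsets.get(partition, 0)
        lag[partition] = max(end_offset - committed, 0)
    return lag


def partitions_over_threshold(lag, threshold):
    """Return the partitions whose lag exceeds the alert threshold."""
    return sorted(p for p, value in lag.items() if value > threshold)


lag = consumer_lag({0: 100, 1: 250, 2: 80}, {0: 100, 1: 200})
print(lag)
print(partitions_over_threshold(lag, threshold=40))
```

In practice these numbers would be exported to a dashboard or alerting system rather than printed.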

Check out our guide for a comprehensive list of interview questions.

Security

Implementing security measures such as SSL/TLS encryption, authentication, and authorization is crucial for protecting data in Kafka. A Kafka Engineer should be well-versed in these security practices.

Cloud Platforms

Experience with cloud platforms like AWS, Azure, or Google Cloud is beneficial as many organizations deploy Kafka in the cloud. Understanding cloud services and infrastructure can help in managing and scaling Kafka clusters.

For more insights, check out our guide to writing a Cloud Architect Job Description.

10 secondary Kafka Engineer skills and traits

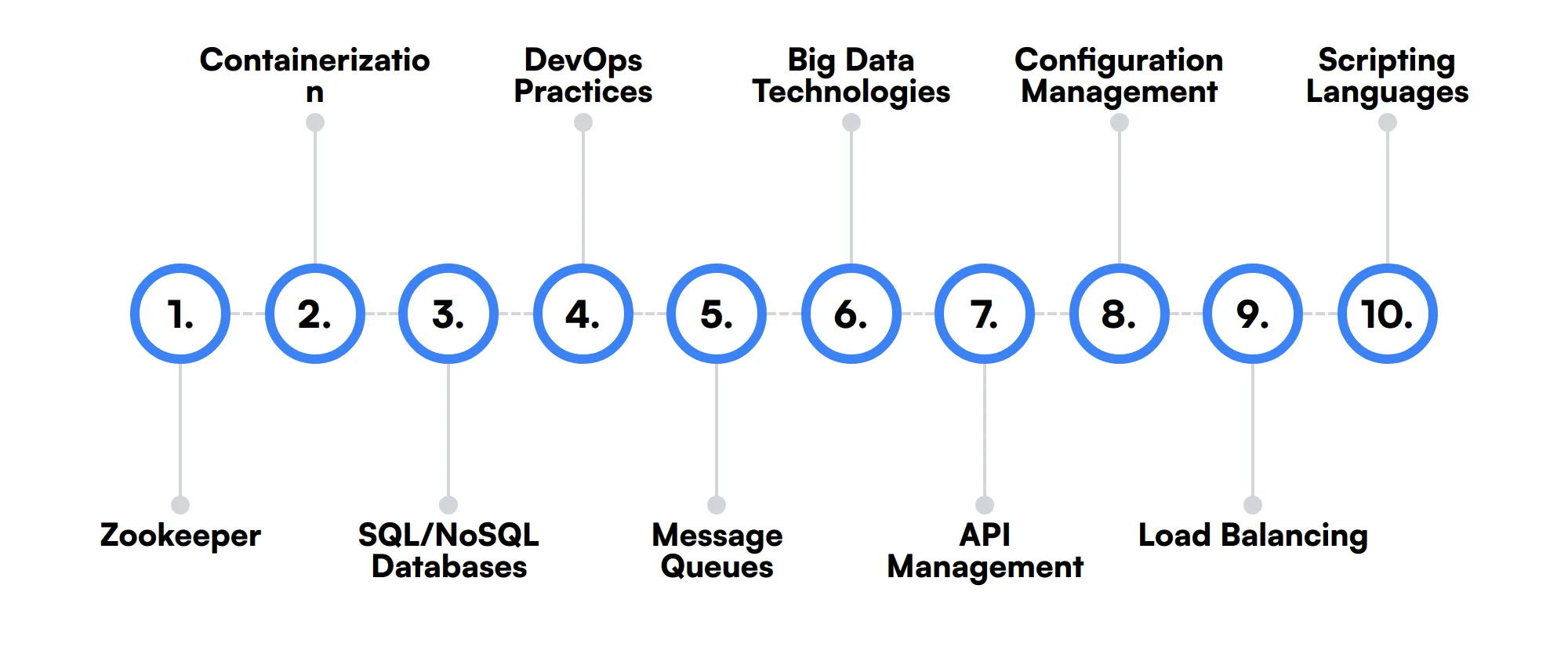

The best secondary skills for Kafka Engineers include ZooKeeper, Containerization, SQL/NoSQL Databases, DevOps Practices, Message Queues, Big Data Technologies, API Management, Configuration Management, Load Balancing and Scripting Languages.

Let’s dive into the details by examining the 10 secondary skills of a Kafka Engineer.

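ZooKeeper

Knowledge of ZooKeeper is useful because Kafka has traditionally relied on it to store cluster metadata and coordinate controller election. Newer Kafka releases replace it with the built-in KRaft consensus mode, but many existing clusters still run ZooKeeper, so understanding how it works helps in maintaining Kafka's configuration and state.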

Containerization

Familiarity with containerization tools like Docker and orchestration platforms like Kubernetes can be advantageous. These tools help in deploying and managing Kafka clusters in a more scalable and efficient manner.

SQL/NoSQL Databases

Understanding both SQL and NoSQL databases can be beneficial for a Kafka Engineer. This knowledge helps in integrating Kafka with various data storage systems for seamless data flow and processing.

DevOps Practices

Knowledge of DevOps practices and tools like Jenkins, Ansible, or Terraform can aid in automating the deployment and management of Kafka clusters, ensuring smoother operations and faster deployments.

Message Queues

Experience with other message queue systems like RabbitMQ or ActiveMQ can provide a broader perspective on messaging patterns and help in designing more robust Kafka solutions.

Big Data Technologies

Familiarity with big data technologies like Hadoop, Spark, or Cassandra can be useful. These technologies often work in conjunction with Kafka for large-scale data processing and analytics.

API Management

Understanding API management and development is beneficial for integrating Kafka with various applications. This includes knowledge of RESTful APIs and how to expose Kafka data streams to other services.

Configuration Management

Skills in configuration management tools like Puppet or Chef can help in maintaining consistent Kafka configurations across different environments, ensuring stability and reliability.

Load Balancing

Knowledge of load balancing techniques can help in distributing the load across Kafka brokers effectively. This ensures optimal performance and prevents any single broker from becoming a bottleneck.
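Because clients read from and write to partition leaders, one simple way to reason about broker load is to count how many partitions each broker leads. The following Python sketch assumes the partition-to-leader mapping is already known; the function names and the tolerance value are hypothetical.

```python
from collections import Counter


def leaders_per_broker(partition_leaders, brokers):
    """Count how many partitions each broker currently leads."""
    counts = Counter({broker: 0 for broker in brokers})
    counts.update(partition_leaders.values())
    return dict(counts)


def is_balanced(counts, tolerance=1):
    """Treat the cluster as balanced if leader counts differ by at most `tolerance`."""
    return max(counts.values()) - min(counts.values()) <= tolerance


# Hypothetical cluster: broker 1 leads three of four partitions, broker 3 leads none.
counts = leaders_per_broker({0: 1, 1: 1, 2: 1, 3: 2}, brokers=[1, 2, 3])
print(counts)
print(is_balanced(counts))
```

Leader count is only a proxy; partitions with very different traffic can still leave one broker overloaded.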

Scripting Languages

Proficiency in scripting languages like Python or Bash can be useful for automating routine tasks, writing custom scripts for monitoring, and managing Kafka operations more efficiently.

How to assess Kafka Engineer skills and traits

Assessing the skills and traits of a Kafka Engineer involves a nuanced understanding of both technical expertise and the ability to apply that knowledge in real-world scenarios. Kafka Engineers play a critical role in managing data streaming architectures, requiring a solid grasp of Apache Kafka, distributed systems, and various programming languages like Java or Scala. Understanding these skills in potential candidates is key to ensuring successful project outcomes.

Traditional methods like reviewing resumes and conducting interviews often fall short in accurately gauging a candidate's practical abilities. This is where skills-based assessments come into play. By implementing targeted assessments, you can measure a candidate's proficiency in critical areas such as Linux/Unix environments, networking, monitoring/logging, security practices, and their familiarity with cloud platforms.

To streamline this process and enhance the accuracy of your hiring decisions, consider utilizing Adaface assessments. These tests are designed to reflect the real-world challenges a Kafka Engineer might face, helping you identify top talent efficiently and effectively. With Adaface, companies have seen an 85% reduction in screening time, enabling a more focused approach to interviewing and selecting the right candidate.

Let’s look at how to assess Kafka Engineer skills with these 6 talent assessments.

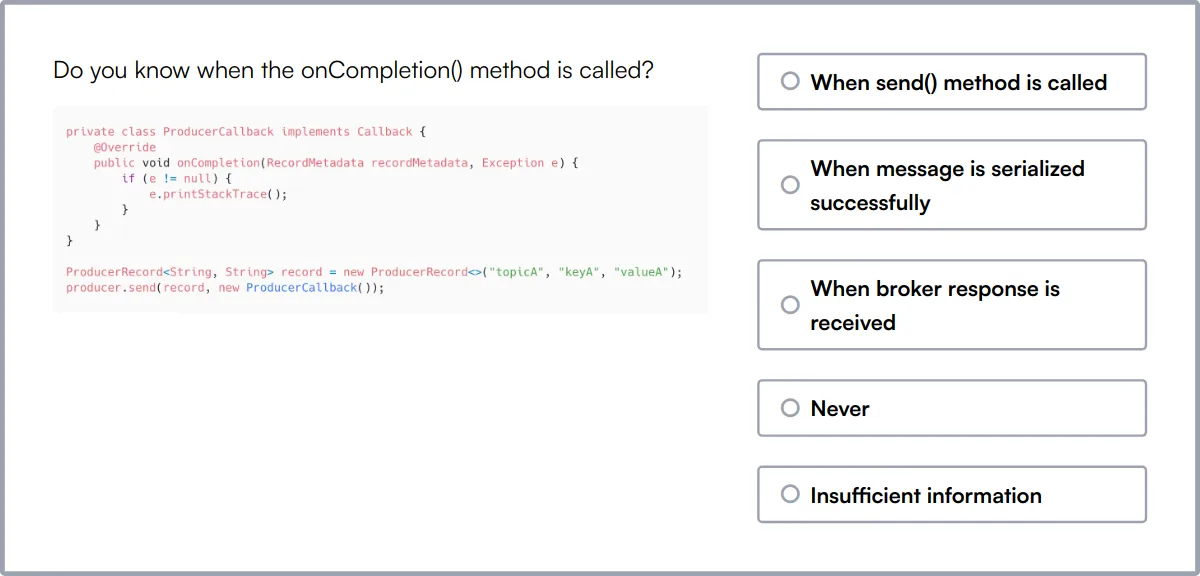

Kafka Online Test

Our Kafka Online Test evaluates candidates on their knowledge of Apache Kafka, focusing on real-time data processing and messaging systems.

The test assesses understanding of Kafka clusters, event streaming, message queues, and stream processing. It also covers fault tolerance, scalability, and data replication.

Successful candidates demonstrate the ability to design and develop scalable and fault-tolerant messaging systems using Kafka.

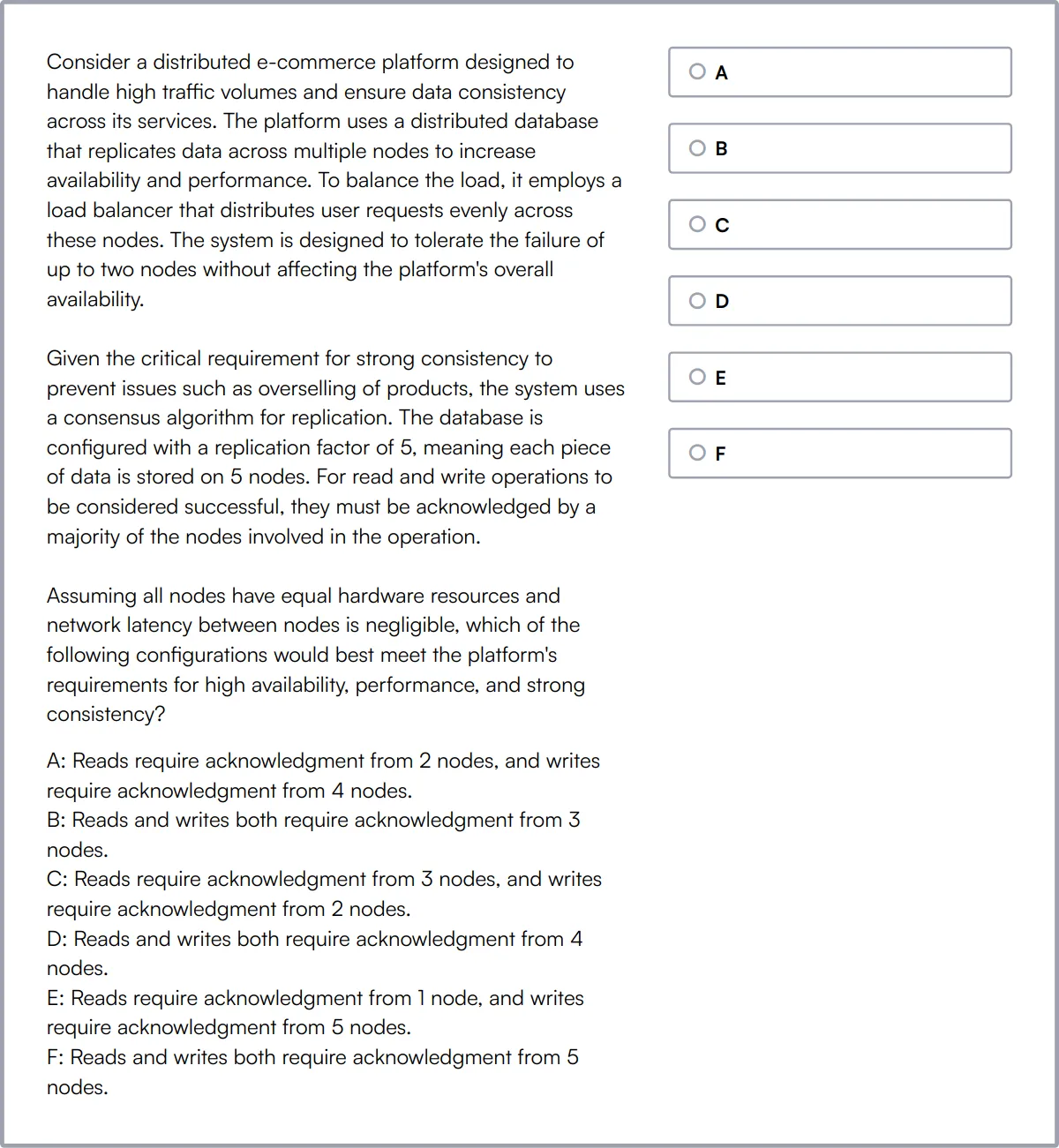

System Design Online Test

Our System Design Online Test measures proficiency in designing software systems that meet both functional and non-functional requirements.

This test evaluates candidates on system design, network protocols, database design, and cloud computing. It also touches on performance optimization and microservices architecture.

Candidates who perform well can effectively identify system requirements, choose appropriate architectures, and create high-level design specifications.

Java Online Test

The Java Online Test assesses knowledge of core Java concepts, including object-oriented programming and working with databases.

The test covers Java syntax and semantics, exception handling, multi-threading, and the Collections framework. It also includes functional programming with lambdas and the Streams API.

High-scoring candidates are proficient in coding with Java, demonstrating strong capabilities in solving complex programming challenges.

Spark Online Test

Our Spark Online Test evaluates a candidate's ability to handle big data processing using Apache Spark.

The test covers Spark Core fundamentals, data processing with Spark SQL, and Spark Streaming for real-time data. It also assesses knowledge in running Spark on a cluster and graph analysis with the GraphX library.

Candidates excelling in this test can optimize Spark jobs and effectively transform structured data with the RDD API and Spark SQL.

Linux Online Test

The Linux Online Test is designed to evaluate candidates on their proficiency with the Linux operating system.

This test covers Linux command line, file system management, networking, security, and shell scripting.

Successful candidates will demonstrate the ability to efficiently design and maintain Linux-based systems and troubleshoot common issues.

Network Engineer Online Test

Our Network Engineer Online Test assesses technical knowledge and practical skills in computer networking.

The test evaluates understanding of network protocols, network security, routing and switching, and network troubleshooting. It also covers wireless networking and network performance optimization.

Candidates who score well are proficient in designing, implementing, and maintaining complex network infrastructures and resolving network issues effectively.

Summary: The 9 key Kafka Engineer skills and how to test for them

| Kafka Engineer skill | How to assess them |
|---|---|
| 1. Apache Kafka | Evaluate proficiency in setting up and managing Kafka clusters. |
| 2. Distributed Systems | Assess understanding of system design and fault tolerance. |
| 3. Java/Scala Programming | Check ability to write and optimize code in Java or Scala. |
| 4. Data Streaming | Gauge experience in real-time data processing and stream management. |
| 5. Linux/Unix | Determine skills in navigating and managing Linux/Unix environments. |
| 6. Networking | Evaluate knowledge of network protocols and troubleshooting. |
| 7. Monitoring/Logging | Assess capability to implement and interpret monitoring tools. |
| 8. Security | Check understanding of securing data and systems. |
| 9. Cloud Platforms | Gauge experience with deploying and managing services on cloud platforms. |

Kafka Engineer skills FAQs

What are the key skills required for a Kafka Engineer?

A Kafka Engineer should have expertise in Apache Kafka, distributed systems, Java/Scala programming, data streaming, Linux/Unix, and networking.

How can I assess a candidate's knowledge of Apache Kafka?

Ask about their experience with Kafka clusters, message brokers, and their ability to handle real-time data streams. Practical tests on Kafka setup and troubleshooting can be useful.

Why is knowledge of distributed systems important for a Kafka Engineer?

Kafka operates in a distributed environment. Understanding distributed systems helps in managing data replication, fault tolerance, and scalability.

What programming languages should a Kafka Engineer be proficient in?

Proficiency in Java and Scala is important as Kafka is primarily written in these languages. Knowledge of scripting languages like Python or Bash is also beneficial.

How important is experience with cloud platforms for a Kafka Engineer?

Experience with cloud platforms like AWS, GCP, or Azure is important as many Kafka deployments are cloud-based. It helps in managing Kafka services and scaling infrastructure.

What role does Zookeeper play in Kafka, and how can I assess a candidate's knowledge of it?

ZooKeeper stores cluster metadata and coordinates brokers in Kafka deployments that have not moved to KRaft mode. Assess candidates on their understanding of ZooKeeper's role in leader election, configuration management, and synchronization.

How can I evaluate a candidate's skills in monitoring and logging for Kafka?

Check their experience with tools like Prometheus, Grafana, and ELK stack. Ask about their approach to monitoring Kafka clusters and handling logs.

What security skills should a Kafka Engineer possess?

They should understand encryption, authentication, and authorization in Kafka. Knowledge of SSL/TLS, Kerberos, and ACLs is important for securing Kafka clusters.

Assess and hire the best Kafka Engineers with Adaface

Assessing and finding the best Kafka Engineer is quick and easy when you use talent assessments. You can check out our product tour or sign up for our free plan to see talent assessments in action.

40 min skill tests.

No trick questions.

Accurate shortlisting.

We make it easy for you to find the best candidates in your pipeline with a 40 min skills test.
