Hiring the right PowerCenter developer can significantly impact your data integration projects and overall business efficiency. Knowing which questions to ask during interviews helps you identify top talent and ensures you're bringing on board developers who can drive your data initiatives forward.

This blog post provides a comprehensive list of PowerCenter interview questions, categorized by experience level and specific areas of expertise. From general concepts to advanced workflows, we cover a wide range of topics to help you thoroughly assess candidates' PowerCenter skills.

By using these questions, you'll be better equipped to evaluate candidates' knowledge and problem-solving abilities in real-world scenarios. Consider complementing your interview process with a PowerCenter skills assessment to get a more complete picture of candidates' capabilities.

8 general PowerCenter interview questions and answers

To streamline your hiring process and ensure you find the best candidates for your PowerCenter roles, use these eight general interview questions. They are designed to gauge a candidate's understanding of key concepts and practical experience, making your interviews more effective.

1. Can you explain the primary function of PowerCenter in data integration?

PowerCenter is Informatica's enterprise data integration platform. It helps organizations connect, manage, and integrate data from many sources. Its primary use is ETL: extracting data from source systems, transforming it according to business requirements, and loading it into target systems.

Look for candidates who can clearly articulate these key functions and provide examples from their previous roles. This demonstrates their practical understanding and hands-on experience with PowerCenter.

2. What are the key components of PowerCenter? Describe their roles.

The key components of PowerCenter include the Repository Service, the Integration Service, and the client tools. The Repository Service manages the metadata stored in the repository, the Integration Service runs the sessions and workflows that move and transform data, and the client tools (Designer, Workflow Manager, Workflow Monitor, and Repository Manager) are used to build mappings, define workflows, and monitor runs.

Ideal candidates should be able to detail each component and its role within the PowerCenter architecture. This will highlight their familiarity with the platform's structure and functions.

3. How would you handle a scenario where a data mapping process fails in PowerCenter?

When a data mapping process fails, the first step is to check the session logs for errors. Identifying the specific error helps in understanding the cause of the failure. Common issues might include data type mismatches or connectivity issues. Once the issue is identified, the next step is to fix the root cause and rerun the process.

Candidates should emphasize their problem-solving skills and ability to troubleshoot effectively. Look for responses that demonstrate a systematic approach to diagnosing and resolving issues.

4. How do you ensure data quality and accuracy in PowerCenter?

Ensuring data quality in PowerCenter involves implementing validation rules, data profiling, and using transformation logic to cleanse and format data correctly. Regular audits and monitoring processes are also crucial to maintain data accuracy.

Strong candidates will discuss specific techniques and tools they use to maintain high data quality standards. They should also mention any experience with data governance practices.

5. Describe a time when you optimized a PowerCenter workflow for better performance.

Optimizing a PowerCenter workflow might involve steps like tuning the mapping logic, optimizing SQL queries, or adjusting session configurations to make better use of system resources. For example, one could use partitioning to parallelize data processing or reduce the number of active transformations.

Look for examples where candidates have successfully improved performance metrics, such as reduced processing time or increased throughput. This shows their ability to enhance system efficiency.

6. What is the use of session and workflow logs in PowerCenter?

Session and workflow logs in PowerCenter are critical for tracking the execution of data processes. They provide detailed information on the start and end time of sessions, errors encountered, and overall performance metrics. Reviewing these logs helps in troubleshooting and optimizing data workflows.

Candidates should show an understanding of how to use these logs effectively for maintaining and improving PowerCenter operations. Practical examples from past experiences will be beneficial.

7. How important is it to have knowledge of SQL when working with PowerCenter?

SQL knowledge is crucial when working with PowerCenter, as it often involves writing queries to extract, transform, and load data. SQL is used in various transformations and for interacting with databases directly. A solid grasp of SQL allows for more efficient data manipulation and troubleshooting.

Look for candidates who can discuss their experience with SQL in the context of PowerCenter projects. Their ability to integrate SQL skills with PowerCenter tasks indicates a higher level of proficiency.

8. Can you explain how version control is managed in PowerCenter?

Version control in PowerCenter is handled by the repository. In a versioned repository, developers check objects out and in, and each check-in stores a new version of the mapping, workflow, or other object. With versioning, teams can track changes, return to earlier versions, and manage multiple development streams efficiently.

Ideal candidates should describe their experience with version control and how it has helped them manage large-scale PowerCenter projects. This shows their ability to maintain organized and efficient development practices.

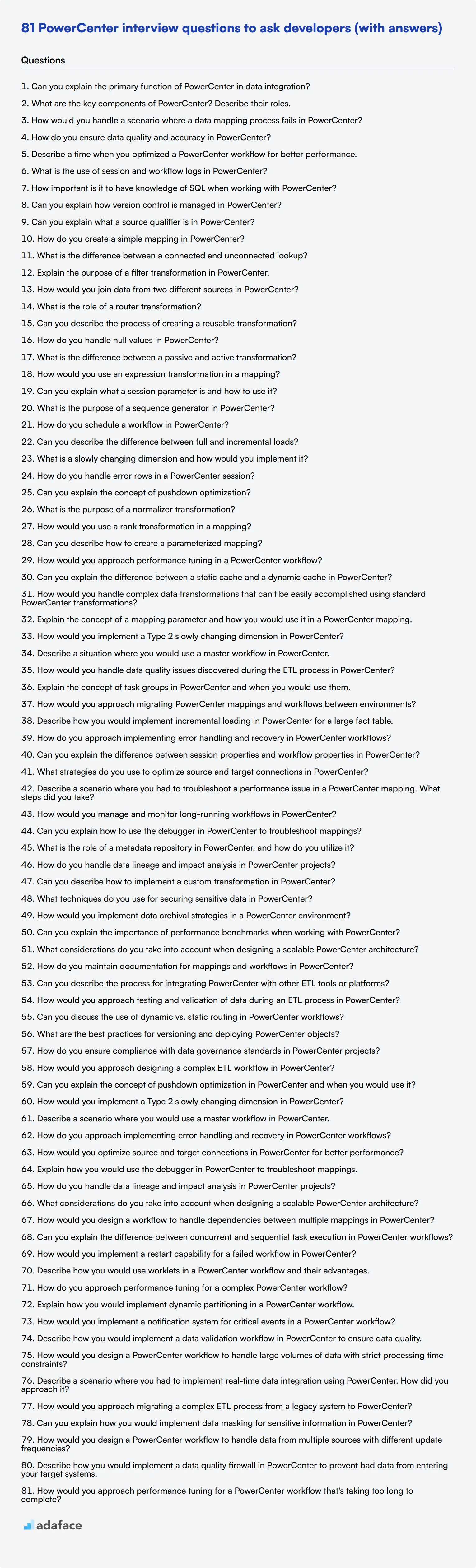

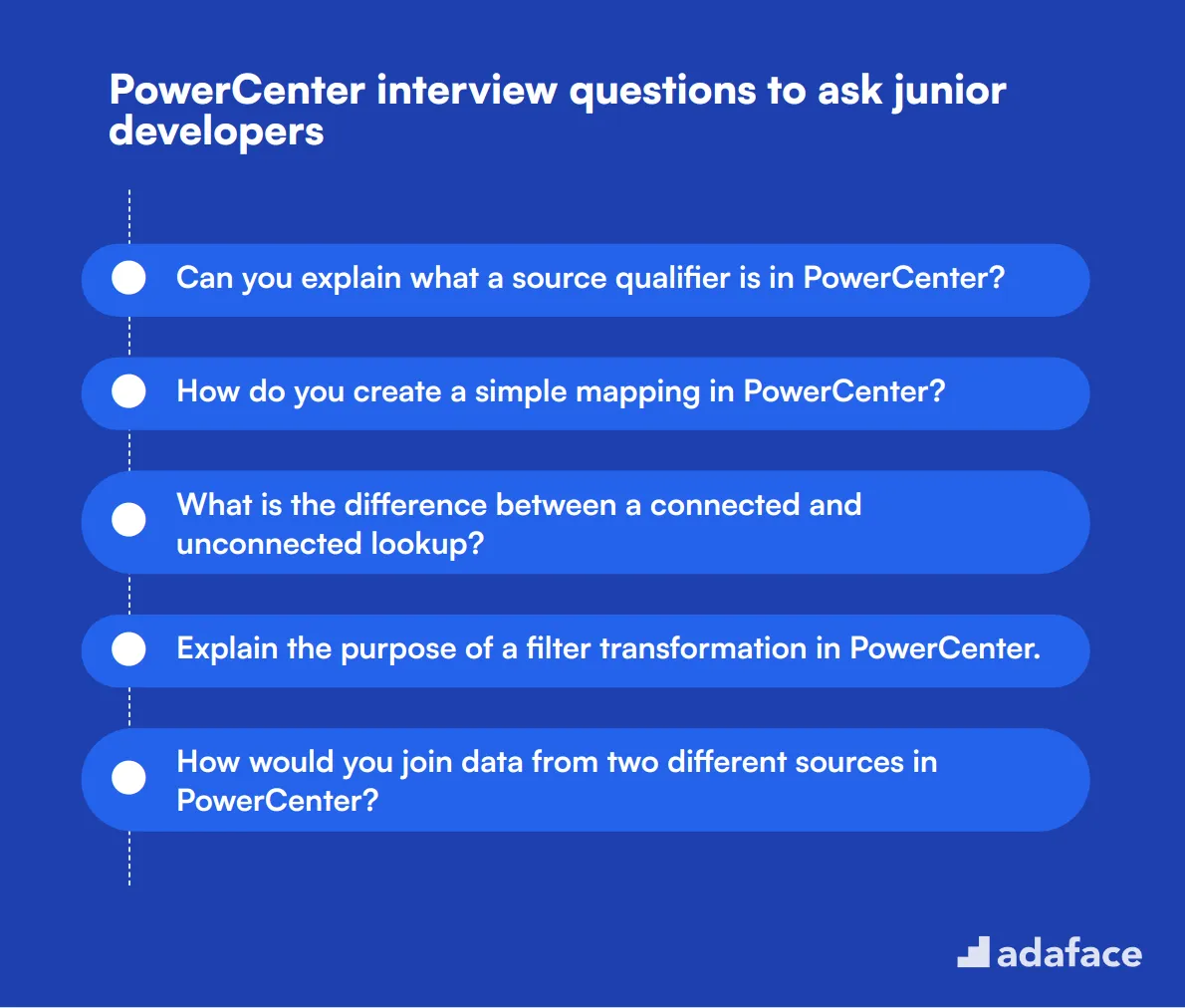

20 PowerCenter interview questions to ask junior developers

To assess junior data engineer candidates' understanding of PowerCenter basics, use these 20 interview questions. They cover essential concepts and practical scenarios, helping you identify candidates with a solid foundation in PowerCenter for entry-level positions.

- Can you explain what a source qualifier is in PowerCenter?

- How do you create a simple mapping in PowerCenter?

- What is the difference between a connected and unconnected lookup?

- Explain the purpose of a filter transformation in PowerCenter.

- How would you join data from two different sources in PowerCenter?

- What is the role of a router transformation?

- Can you describe the process of creating a reusable transformation?

- How do you handle null values in PowerCenter?

- What is the difference between a passive and active transformation?

- How would you use an expression transformation in a mapping?

- Can you explain what a session parameter is and how to use it?

- What is the purpose of a sequence generator in PowerCenter?

- How do you schedule a workflow in PowerCenter?

- Can you describe the difference between full and incremental loads?

- What is a slowly changing dimension and how would you implement it?

- How do you handle error rows in a PowerCenter session?

- Can you explain the concept of pushdown optimization?

- What is the purpose of a normalizer transformation?

- How would you use a rank transformation in a mapping?

- Can you describe how to create a parameterized mapping?

10 intermediate PowerCenter interview questions and answers to ask mid-tier developers

Ready to level up your PowerCenter interview game? These 10 intermediate questions are perfect for assessing mid-tier developers. They'll help you gauge a candidate's practical knowledge and problem-solving skills without diving too deep into the technical weeds. Use these to spark engaging discussions and uncover real-world experience in your next interview.

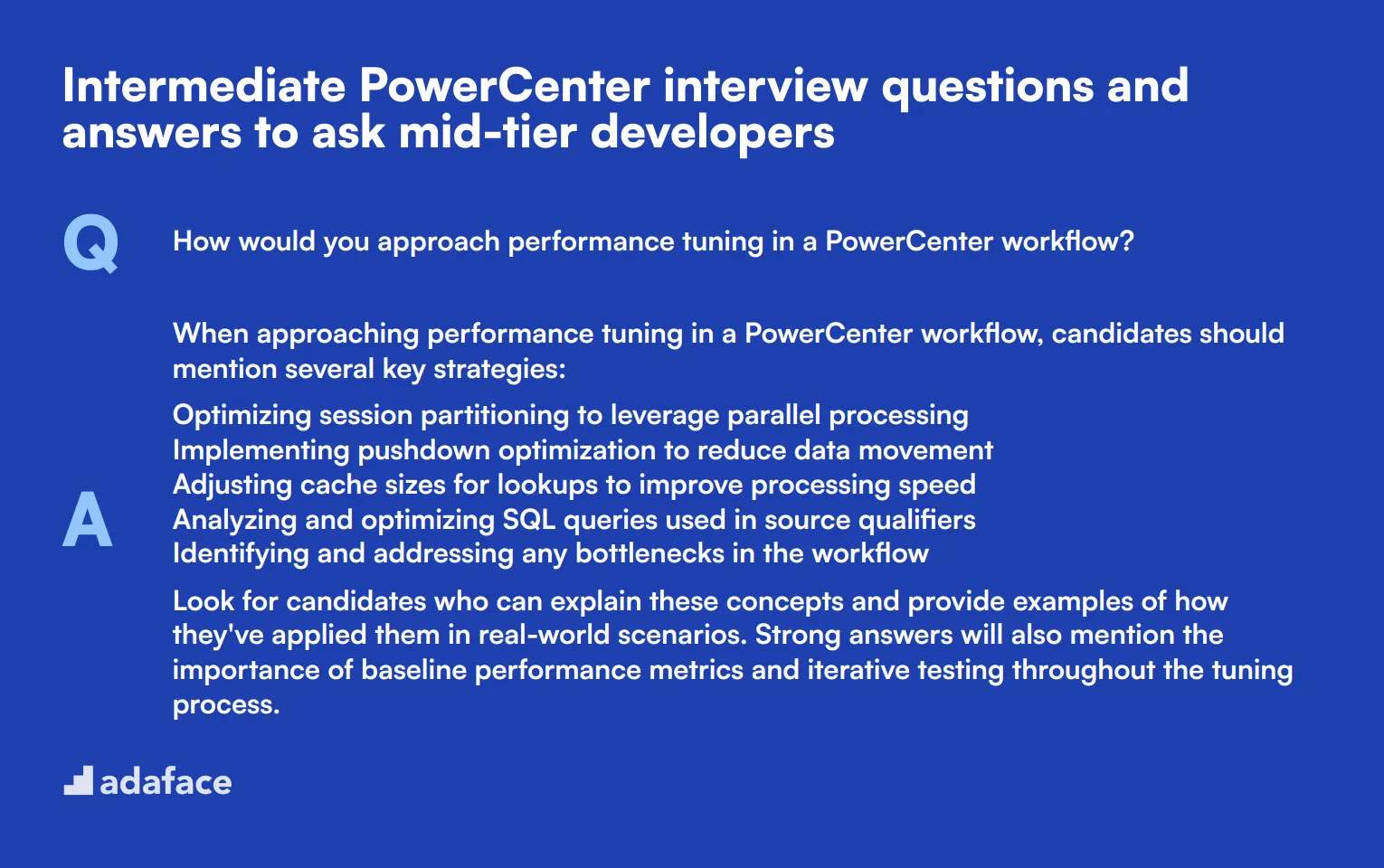

1. How would you approach performance tuning in a PowerCenter workflow?

When approaching performance tuning in a PowerCenter workflow, candidates should mention several key strategies:

- Optimizing session partitioning to leverage parallel processing

- Implementing pushdown optimization to reduce data movement

- Adjusting cache sizes for lookups to improve processing speed

- Analyzing and optimizing SQL queries used in source qualifiers

- Identifying and addressing any bottlenecks in the workflow

Look for candidates who can explain these concepts and provide examples of how they've applied them in real-world scenarios. Strong answers will also mention the importance of baseline performance metrics and iterative testing throughout the tuning process.

2. Can you explain the difference between a static cache and a dynamic cache in PowerCenter?

A strong answer should clearly differentiate between static and dynamic caches:

- Static cache: The Integration Service builds the cache from the lookup source and does not change it while the session runs. It's ideal for reference data that the session itself does not modify.

- Dynamic cache: The Integration Service inserts or updates rows in the cache as it processes them, so the cache stays in step with the target. It's suitable when the lookup source is also the target, for example when the source can contain duplicates or when loading slowly changing dimensions.

Candidates should be able to discuss the pros and cons of each approach. For example, a static cache is simpler and adds less overhead but cannot see rows inserted earlier in the same run, while a dynamic cache avoids duplicate inserts at the cost of extra processing and configuration. Look for answers that demonstrate understanding of when to use each type based on data volume, update frequency, and available system resources.
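
If you want a concrete reference point while listening to answers, the difference can be modeled in a few lines of Python. This is a conceptual sketch only, not PowerCenter code: a static lookup cache stays unchanged for the whole run, while a dynamic cache is updated as rows pass through. The function names and row layout are hypothetical; only the flag values (0 = no change, 1 = insert, 2 = update) follow the NewLookupRow convention PowerCenter uses for dynamic caches.

```python
from typing import Dict, List

Row = Dict[str, str]


def build_cache(lookup_table: List[Row], key: str) -> Dict[str, Row]:
    """Read the lookup source once and index it by key."""
    return {row[key]: dict(row) for row in lookup_table}


def static_lookup(cache: Dict[str, Row], incoming: List[Row], key: str) -> List[bool]:
    """Static cache: read-only, so a key first seen in this run is never found."""
    return [row[key] in cache for row in incoming]


def dynamic_lookup(cache: Dict[str, Row], incoming: List[Row], key: str) -> List[int]:
    """Dynamic cache: updated while rows flow through.

    Returns one flag per row: 0 = no change, 1 = inserted, 2 = updated.
    """
    flags = []
    for row in incoming:
        cached = cache.get(row[key])
        if cached is None:
            cache[row[key]] = dict(row)
            flags.append(1)
        elif cached != row:
            cache[row[key]] = dict(row)
            flags.append(2)
        else:
            flags.append(0)
    return flags


target = [{"id": "1", "city": "Oslo"}]
batch = [
    {"id": "2", "city": "Lima"},  # new key
    {"id": "2", "city": "Lima"},  # duplicate of the row above
    {"id": "1", "city": "Rome"},  # changed value for an existing key
]

static_hits = static_lookup(build_cache(target, "id"), batch, "id")
dynamic_flags = dynamic_lookup(build_cache(target, "id"), batch, "id")
```

With the static cache, both rows for key 2 look new, so a naive load would insert the duplicate twice. The dynamic cache flags only the first one as an insert because the cache was updated in between.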

3. How would you handle complex data transformations that can't be easily accomplished using standard PowerCenter transformations?

Strong candidates should mention several approaches to handling complex transformations:

- Using expression transformations with advanced functions and conditional logic

- Leveraging Java transformations for highly complex operations

- Combining multiple transformations in a specific order to achieve the desired result

- Considering external scripts or stored procedures for extremely complex logic

Look for answers that demonstrate problem-solving skills and a deep understanding of PowerCenter's capabilities. Ideal candidates will also mention the importance of performance considerations when dealing with complex transformations and the need to document these solutions thoroughly for maintainability.

4. Explain the concept of a mapping parameter and how you would use it in a PowerCenter mapping.

A mapping parameter is a variable that allows you to pass values into a mapping at runtime, making the mapping more flexible and reusable. Candidates should explain that mapping parameters can be used to:

- Dynamically set filter conditions

- Provide values for lookup conditions

- Configure source or target information

- Control transformation logic

Look for answers that include practical examples, such as using a parameter to filter data based on a date range or to switch between different source tables. Strong candidates will also mention how mapping parameters can be linked to workflow variables or session parameters for even greater flexibility in ETL processes.
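
The idea of supplying values at run time can be illustrated with a small Python model. PowerCenter resolves mapping parameters itself, so this is not how the product is implemented; it only shows why one parameterized filter can serve several runs. The `$$` prefix matches PowerCenter's naming for mapping parameters, while `resolve_parameters`, `$$StartDate`, and `$$Region` are hypothetical names chosen for the example.

```python
import re
from typing import Dict


def resolve_parameters(expression: str, params: Dict[str, str]) -> str:
    """Replace each $$Name token with its value; fail loudly on unknown names."""

    def substitute(match: "re.Match[str]") -> str:
        name = match.group(0)
        if name not in params:
            raise KeyError(f"no value supplied for {name}")
        return params[name]

    return re.sub(r"\$\$\w+", substitute, expression)


# One filter definition, reused with different run-time values.
filter_template = "ORDER_DATE >= '$$StartDate' AND REGION = '$$Region'"
nightly_run = {"$$StartDate": "2024-01-01", "$$Region": "EMEA"}

resolved = resolve_parameters(filter_template, nightly_run)
```

A candidate who understands parameters well will usually point out the same benefit the sketch shows: the mapping logic stays fixed while the values change per run or per environment.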

5. How would you implement a Type 2 slowly changing dimension in PowerCenter?

Implementing a Type 2 slowly changing dimension (SCD) in PowerCenter involves tracking historical changes by creating new records. A good answer should outline the following steps:

- Use a Lookup transformation to check if the incoming record exists in the dimension table

- Compare the incoming and existing attribute values, for example in an Expression transformation, to classify each record as new, changed, or unchanged

- Use a Router transformation to send new and changed records down separate paths

- For changed records, use Update Strategy transformations to update the existing record by setting an end date, and to insert a new record with the updated information and a new start date

- For new records, simply insert them with a start date

Look for candidates who mention the importance of maintaining surrogate keys, handling effective dates correctly, and potentially using flags to identify the current active record. Strong answers might also discuss performance considerations for large dimensions and strategies for handling multiple simultaneous changes.
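
The row-level logic behind these steps can be sketched outside PowerCenter. The Python below is a minimal in-memory model, assuming a hypothetical customer dimension with a single tracked attribute (`city`), a surrogate key `sk`, and a current-record flag. In a real mapping the same decisions would be made by the Lookup, Router, and Update Strategy transformations.

```python
from datetime import date
from typing import List


def apply_scd2(dimension: List[dict], incoming: List[dict], load_date: date) -> None:
    """Apply Type 2 changes to `dimension` in place."""
    # Lookup step: index the current version of each business key.
    current = {row["customer_id"]: row for row in dimension if row["is_current"]}
    next_key = max((row["sk"] for row in dimension), default=0) + 1

    for new in incoming:
        old = current.get(new["customer_id"])
        if old is not None and old["city"] == new["city"]:
            continue  # unchanged: nothing to write
        if old is not None:
            # Changed: close the existing version instead of overwriting it.
            old["end_date"] = load_date
            old["is_current"] = False
        # New or changed: insert a fresh version with a new surrogate key.
        version = {
            "sk": next_key,
            "customer_id": new["customer_id"],
            "city": new["city"],
            "start_date": load_date,
            "end_date": None,
            "is_current": True,
        }
        dimension.append(version)
        current[new["customer_id"]] = version
        next_key += 1


dim = [{"sk": 1, "customer_id": "C1", "city": "Oslo",
        "start_date": date(2023, 1, 1), "end_date": None, "is_current": True}]
apply_scd2(
    dim,
    [{"customer_id": "C1", "city": "Rome"}, {"customer_id": "C2", "city": "Lima"}],
    date(2024, 6, 1),
)
```

After the call, the original C1 row is closed with an end date, a new current C1 row exists for Rome, and C2 is inserted as a first version.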

6. Describe a situation where you would use a master workflow in PowerCenter.

A master workflow in PowerCenter is used to orchestrate and control the execution of multiple sub-workflows. Candidates should describe scenarios such as:

- Implementing complex ETL processes with dependencies between different data flows

- Managing end-of-day or end-of-month processes that involve multiple steps

- Coordinating data loads across different subject areas or data marts

- Implementing error handling and recovery mechanisms for a series of related workflows

Look for answers that demonstrate understanding of workflow dependencies, parallel execution, and error handling at a higher level. Strong candidates might also mention how master workflows can improve manageability of complex ETL processes and facilitate easier scheduling and monitoring.

7. How would you handle data quality issues discovered during the ETL process in PowerCenter?

Handling data quality issues in PowerCenter requires a systematic approach. Candidates should mention strategies such as:

- Implementing data validation rules using Expression transformations

- Flagging invalid records with the ERROR function in an Expression transformation, or using a Router transformation to redirect them to error tables

- Leveraging PowerCenter's built-in data profiling capabilities to identify patterns and anomalies

- Creating detailed error logs and reports for analysis and remediation

- Implementing threshold-based alerts for critical data quality issues

Look for answers that emphasize the importance of proactive data quality management and the ability to balance data quality requirements with performance considerations. Strong candidates might also discuss strategies for working with source system owners to address root causes of data quality problems.
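
As an illustration of validation rules, reject routing, and threshold-based alerts working together, here is a small Python sketch. It models the logic only; the rule names, the `route` function, and the 50% threshold are assumptions made for the example and do not correspond to PowerCenter settings.

```python
from typing import Callable, List, Tuple

# Each rule is (description of the failure, check that returns True when the row passes).
Rule = Tuple[str, Callable[[dict], bool]]

RULES: List[Rule] = [
    ("missing customer_id", lambda r: bool(r.get("customer_id"))),
    ("negative amount",
     lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0),
]


def route(rows: List[dict], rules: List[Rule], max_reject_ratio: float):
    """Split rows into valid and rejected, and report whether to raise an alert."""
    valid, rejects = [], []
    for row in rows:
        failed = [name for name, check in rules if not check(row)]
        if failed:
            # Keep the original row and record why it was rejected.
            rejects.append({**row, "errors": failed})
        else:
            valid.append(row)
    ratio = len(rejects) / len(rows) if rows else 0.0
    return valid, rejects, ratio > max_reject_ratio


rows = [
    {"customer_id": "C1", "amount": 10},
    {"customer_id": "", "amount": 5},
    {"customer_id": "C3", "amount": -1},
]
valid, rejects, alert = route(rows, RULES, max_reject_ratio=0.5)
```

Storing the failure reasons alongside each rejected row is what makes the later analysis and remediation practical, which is a detail strong candidates tend to mention.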

8. Explain the concept of task groups in PowerCenter and when you would use them.

PowerCenter has no object formally named a "task group"; the term is best read as a set of related tasks within a workflow that are managed and executed as a unit, most commonly packaged as a worklet. Candidates should explain that grouping tasks this way is useful for:

- Organizing complex workflows into logical units

- Implementing parallel execution of independent tasks

- Managing dependencies between groups of tasks

- Simplifying error handling and restartability for related tasks

Look for answers that demonstrate understanding of workflow design principles and the ability to optimize process flow. Strong candidates might provide examples of how they've used task groups to improve workflow efficiency or manageability in real-world scenarios.

9. How would you approach migrating PowerCenter mappings and workflows between environments?

Migrating PowerCenter objects between environments requires careful planning and execution. Candidates should outline a process that includes:

- Using PowerCenter's native export/import functionality or third-party tools

- Ensuring all dependencies (connections, variables, etc.) are properly mapped in the target environment

- Updating connection information and other environment-specific configurations

- Implementing version control practices to track changes across environments

- Testing thoroughly in each environment before promoting to the next

Look for answers that demonstrate awareness of challenges such as maintaining consistency across environments and managing configuration differences. Strong candidates might also discuss strategies for automating the migration process and ensuring proper documentation throughout.

10. Describe how you would implement incremental loading in PowerCenter for a large fact table.

Implementing incremental loading for a large fact table in PowerCenter involves several key considerations:

- Identifying a reliable change detection mechanism (e.g., timestamp columns, change data capture)

- Using a Lookup transformation to compare incoming data with existing records

- Implementing an Update Strategy transformation to determine insert, update, or delete actions

- Considering performance optimizations such as partitioning and bulk loading

- Implementing error handling and recovery mechanisms for failed loads

Look for answers that demonstrate understanding of both the technical implementation and the business considerations of incremental loading. Strong candidates might discuss strategies for handling late-arriving facts, ensuring data consistency, and optimizing the process for very large datasets.
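
Timestamp-based change detection, the first item above, comes down to a stored high-water mark. The Python sketch below shows that logic in isolation, assuming a hypothetical `updated_at` column on the source rows. In PowerCenter the watermark would typically live in a mapping variable or a control table rather than a Python variable.

```python
from datetime import datetime
from typing import List, Tuple


def extract_increment(
    source_rows: List[dict], last_watermark: datetime
) -> Tuple[List[dict], datetime]:
    """Return rows changed since the last run and the watermark for the next run."""
    changed = [row for row in source_rows if row["updated_at"] > last_watermark]
    # If nothing changed, keep the old watermark so no window is skipped.
    new_watermark = max((row["updated_at"] for row in changed), default=last_watermark)
    return changed, new_watermark


source = [
    {"order_id": 1, "updated_at": datetime(2024, 1, 1, 8, 0)},
    {"order_id": 2, "updated_at": datetime(2024, 1, 2, 9, 30)},
    {"order_id": 3, "updated_at": datetime(2024, 1, 3, 7, 15)},
]

first_batch, watermark = extract_increment(source, datetime(2024, 1, 1, 12, 0))
second_batch, watermark_after = extract_increment(source, watermark)
```

The second call returns no rows because nothing changed after the first run. Note that a strict "greater than" comparison can miss rows committed late with an older timestamp, which is one reason late-arriving facts deserve a follow-up question.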

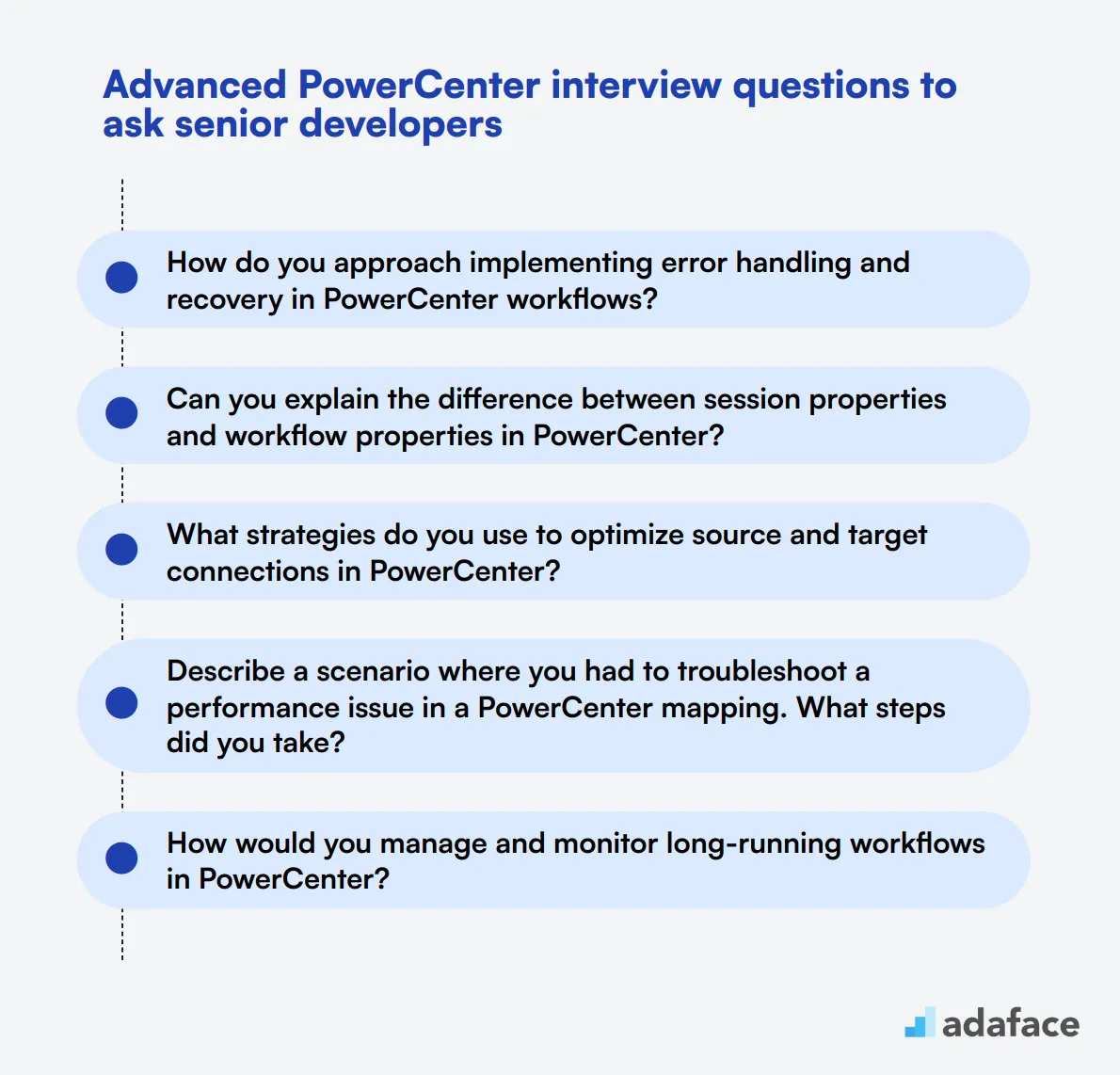

19 advanced PowerCenter interview questions to ask senior developers

To assess whether senior developers possess the advanced skills needed for effective data integration, consider using these 19 PowerCenter interview questions. They can help you evaluate candidates' technical expertise and problem-solving abilities, making your selection process more efficient. For additional guidance on roles like ETL developers, check out our job descriptions.

- How do you approach implementing error handling and recovery in PowerCenter workflows?

- Can you explain the difference between session properties and workflow properties in PowerCenter?

- What strategies do you use to optimize source and target connections in PowerCenter?

- Describe a scenario where you had to troubleshoot a performance issue in a PowerCenter mapping. What steps did you take?

- How would you manage and monitor long-running workflows in PowerCenter?

- Can you explain how to use the debugger in PowerCenter to troubleshoot mappings?

- What is the role of a metadata repository in PowerCenter, and how do you utilize it?

- How do you handle data lineage and impact analysis in PowerCenter projects?

- Can you describe how to implement a custom transformation in PowerCenter?

- What techniques do you use for securing sensitive data in PowerCenter?

- How would you implement data archival strategies in a PowerCenter environment?

- Can you explain the importance of performance benchmarks when working with PowerCenter?

- What considerations do you take into account when designing a scalable PowerCenter architecture?

- How do you maintain documentation for mappings and workflows in PowerCenter?

- Can you describe the process for integrating PowerCenter with other ETL tools or platforms?

- How would you approach testing and validation of data during an ETL process in PowerCenter?

- Can you discuss the use of dynamic vs. static routing in PowerCenter workflows?

- What are the best practices for versioning and deploying PowerCenter objects?

- How do you ensure compliance with data governance standards in PowerCenter projects?

9 PowerCenter interview questions and answers related to ETL processes

Ready to dive into the world of PowerCenter ETL processes? These 9 interview questions will help you gauge a candidate's understanding of data integration workflows. Use them to uncover how well applicants can handle real-world ETL challenges and optimize data transformation processes.

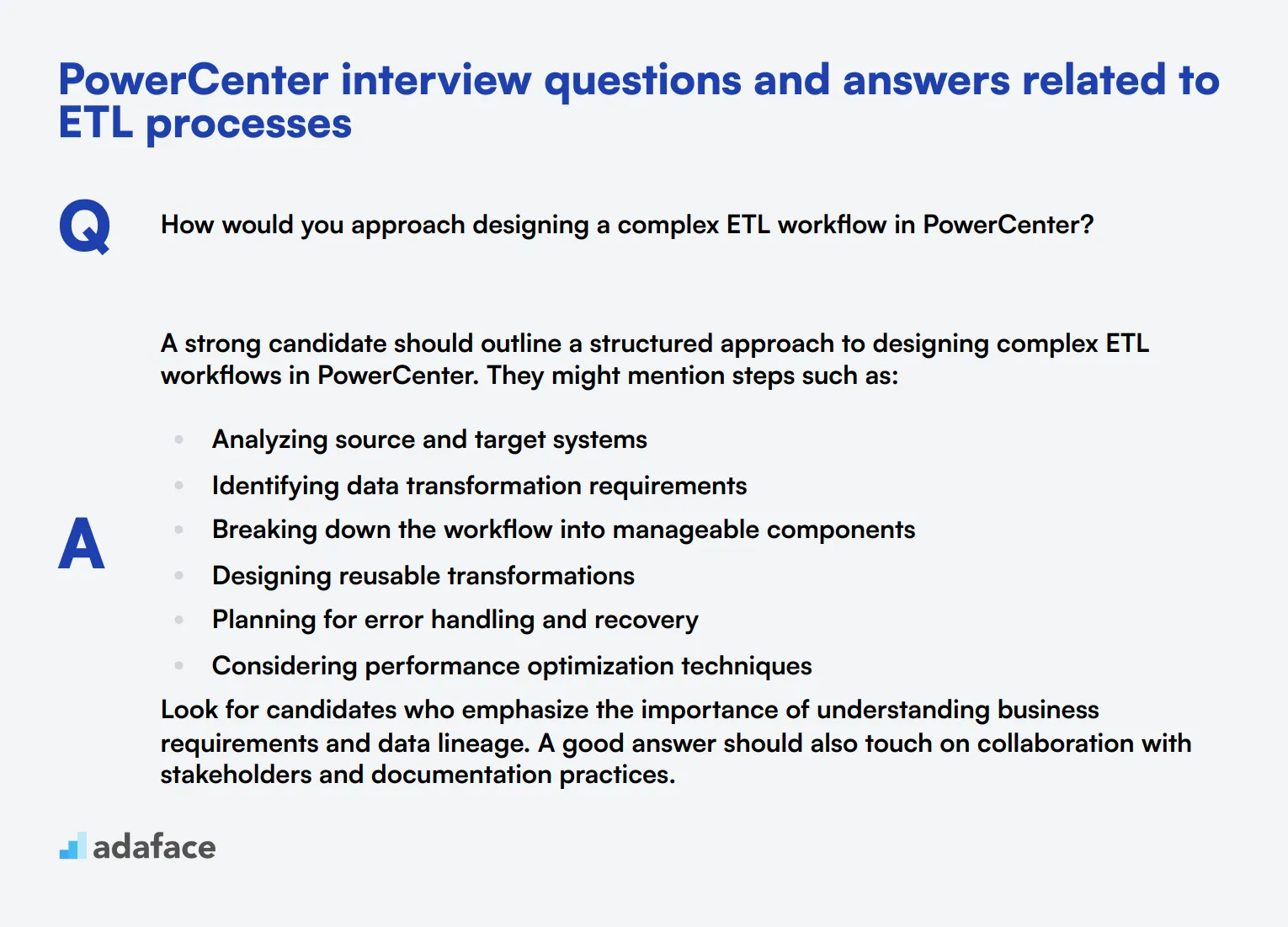

1. How would you approach designing a complex ETL workflow in PowerCenter?

A strong candidate should outline a structured approach to designing complex ETL workflows in PowerCenter. They might mention steps such as:

- Analyzing source and target systems

- Identifying data transformation requirements

- Breaking down the workflow into manageable components

- Designing reusable transformations

- Planning for error handling and recovery

- Considering performance optimization techniques

Look for candidates who emphasize the importance of understanding business requirements and data lineage. A good answer should also touch on collaboration with stakeholders and documentation practices.

2. Can you explain the concept of pushdown optimization in PowerCenter and when you would use it?

Pushdown optimization in PowerCenter refers to the practice of moving data transformation logic to the source or target database, rather than processing it within PowerCenter itself. This can significantly improve performance by reducing data movement and leveraging the processing power of the database.

Candidates should mention that pushdown optimization is particularly useful for:

- Large datasets where moving data is costly

- Complex transformations that can be efficiently handled by the database

- Scenarios where reducing network traffic is crucial

- When working with databases that have powerful query optimization capabilities

Look for answers that demonstrate an understanding of the trade-offs involved and the ability to assess when pushdown optimization is appropriate.
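
The trade-off is easy to see in miniature. The Python sketch below uses the standard-library `sqlite3` module as a stand-in database and a hypothetical `sales` table. It is not PowerCenter's pushdown mechanism, only the same idea: either pull every row and transform it in the integration layer, or send the filter and aggregation to the database and fetch just the result.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("EMEA", 100.0), ("EMEA", 50.0), ("APAC", 70.0), ("APAC", -5.0)],
)


def totals_without_pushdown(connection: sqlite3.Connection) -> dict:
    """Fetch every row, then filter and aggregate outside the database."""
    totals: dict = {}
    for region, amount in connection.execute("SELECT region, amount FROM sales"):
        if amount > 0:
            totals[region] = totals.get(region, 0.0) + amount
    return totals


def totals_with_pushdown(connection: sqlite3.Connection) -> dict:
    """Let the database filter and aggregate; only the summary rows move."""
    sql = "SELECT region, SUM(amount) FROM sales WHERE amount > 0 GROUP BY region"
    return dict(connection.execute(sql))


local_result = totals_without_pushdown(conn)
pushed_result = totals_with_pushdown(conn)
```

Both functions return the same totals, but the second transfers one row per region instead of one row per sale. The catch, as good candidates note, is that the logic must be expressible in the database's SQL dialect.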

3. How would you implement a Type 2 slowly changing dimension in PowerCenter?

Implementing a Type 2 slowly changing dimension (SCD) in PowerCenter involves tracking historical changes by creating new records for each change. A strong answer should outline the following steps:

- Use a Lookup transformation to check if the incoming record exists in the dimension table

- Employ a Router transformation to separate new, changed, and unchanged records

- For changed records, update the end date of the existing record and set its current flag to false

- Insert a new record with the updated information, setting the start date to the current date and the current flag to true

- For new records, simply insert them with appropriate start dates and current flags

Pay attention to candidates who mention the importance of handling surrogate keys and discuss strategies for maintaining data integrity throughout the process.

4. Describe a scenario where you would use a master workflow in PowerCenter.

A master workflow in PowerCenter is used to orchestrate and control the execution of multiple sub-workflows. Candidates should describe scenarios such as:

- Complex ETL processes that involve multiple data sources and targets

- Workflows that need to be executed in a specific order

- Processes that require conditional execution based on the success or failure of previous steps

- Scenarios where parallel processing of independent workflows can improve overall performance

Look for answers that demonstrate an understanding of workflow dependencies and the ability to design efficient, scalable ETL solutions. Candidates should also mention how master workflows can simplify monitoring and error handling in complex ETL environments.

5. How do you approach implementing error handling and recovery in PowerCenter workflows?

Effective error handling and recovery in PowerCenter workflows is crucial for maintaining data integrity and ensuring smooth operations. Strong candidates should discuss strategies such as:

- Using session-level error thresholds to control when a session should fail

- Using the ERROR and ABORT functions in Expression transformations to skip bad rows or stop the session

- Designing workflows with restart capabilities for failed sessions

- Utilizing PowerCenter's built-in logging and notification features

- Creating custom error tables or files to capture detailed error information

Pay attention to candidates who emphasize the importance of proactive error prevention through data validation and thorough testing. They should also mention the value of clear error messages and documentation for troubleshooting purposes.

6. How would you optimize source and target connections in PowerCenter for better performance?

Optimizing source and target connections in PowerCenter is crucial for improving overall ETL performance. Candidates should discuss strategies such as:

- Using native connectivity options when available for better performance

- Implementing connection pooling to reduce connection overhead

- Configuring appropriate buffer sizes for read and write operations

- Utilizing bulk loading techniques for large data volumes

- Implementing partitioned reads and writes for parallel processing

- Considering pushdown optimization to leverage database capabilities

Look for answers that demonstrate an understanding of database-specific optimizations and the ability to balance performance with resource utilization. Candidates should also mention the importance of monitoring and tuning connection settings based on workload characteristics.

7. Explain how you would use the debugger in PowerCenter to troubleshoot mappings.

The debugger in PowerCenter is a powerful tool for troubleshooting mapping issues. Candidates should outline the following steps:

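- Open the mapping in the Designer and start the Debugger, choosing an existing session or a debug session to run it against

- Set breakpoints on specific transformations, or globally, using error or data conditions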

- Execute the mapping in debug mode

- Step through the data flow, examining row counts and data values at each stage

- Use the data viewer to inspect individual rows and identify discrepancies

- Analyze transformation logic and adjust as necessary

Look for candidates who emphasize the importance of systematic debugging and mention how they would use the debugger in conjunction with session logs and other troubleshooting techniques. They should also discuss how to effectively communicate findings to developers or stakeholders.

8. How do you handle data lineage and impact analysis in PowerCenter projects?

Data lineage and impact analysis are crucial aspects of data governance in PowerCenter projects. Strong candidates should discuss approaches such as:

- Utilizing PowerCenter's built-in lineage and impact analysis tools

- Maintaining comprehensive documentation of mappings and workflows

- Implementing naming conventions and metadata tags for easy traceability

- Using repository reporting tools to generate lineage reports

- Regularly reviewing and updating lineage information as part of change management

Look for answers that demonstrate an understanding of the importance of data lineage in regulatory compliance and decision-making processes. Candidates should also mention strategies for visualizing and communicating lineage information to stakeholders.

9. What considerations do you take into account when designing a scalable PowerCenter architecture?

Designing a scalable PowerCenter architecture requires careful consideration of various factors. Strong candidates should discuss points such as:

- Implementing a distributed architecture with separate nodes for different PowerCenter components

- Utilizing load balancing for Integration Services to distribute workload

- Planning for horizontal and vertical scaling of hardware resources

- Implementing efficient partitioning strategies for large datasets

- Designing workflows for parallel processing and optimal resource utilization

- Considering cloud-based deployments for flexibility and scalability

Look for answers that demonstrate an understanding of performance bottlenecks and the ability to design solutions that can grow with business needs. Candidates should also mention the importance of monitoring and capacity planning in maintaining a scalable architecture.

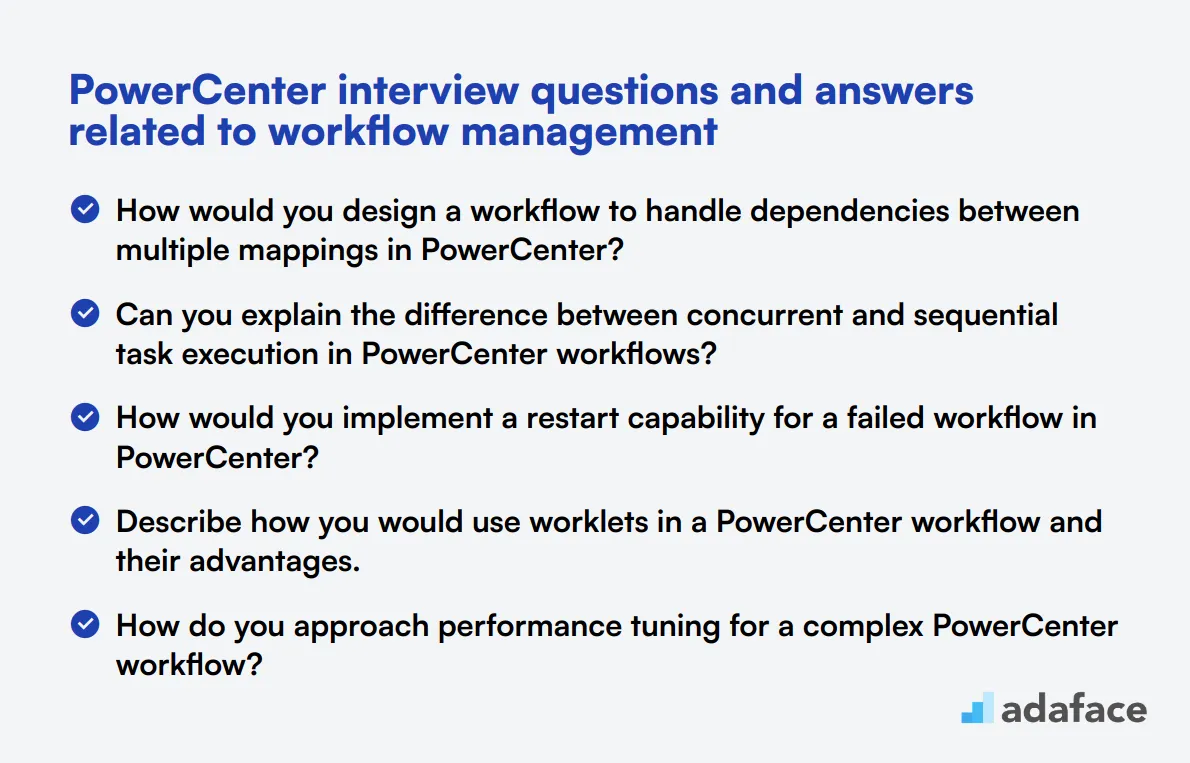

8 PowerCenter interview questions and answers related to workflow management

Ready to dive into the world of PowerCenter workflow management? These 8 interview questions will help you gauge a candidate's expertise in handling complex data integration processes. Use them to uncover how well applicants understand workflow optimization, error handling, and performance tuning in PowerCenter.

1. How would you design a workflow to handle dependencies between multiple mappings in PowerCenter?

A strong candidate should explain that designing a workflow with dependencies involves creating a sequence of tasks that respect the logical order of data processing. They might mention using workflow variables to pass information between mappings and utilizing control tasks to manage the flow.

Key points to look for in their answer include:

- Creating a main workflow with sub-workflows for complex processes

- Using workflow variables to pass data between mappings

- Using Decision, Control, Event-Wait, and Event-Raise tasks to branch, stop, or synchronize the flow

- Configuring task links and link conditions to define the execution order

- Setting up error handling and recovery scenarios

Listen for candidates who demonstrate an understanding of workflow management best practices and can explain how they've implemented complex workflows in real-world scenarios.
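
Dependency-driven ordering is a topological sort, and a short Python sketch can make that concrete. The session names below are hypothetical, and the code uses the standard-library `graphlib` module (Python 3.9+) rather than anything from PowerCenter. Each "wave" is a set of tasks whose prerequisites are all complete, which is the same information that links between tasks encode in a workflow.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Each task maps to the set of tasks that must finish before it starts.
dependencies: Dict[str, Set[str]] = {
    "s_load_customers": set(),
    "s_load_products": set(),
    "s_load_sales_fact": {"s_load_customers", "s_load_products"},
    "s_build_aggregates": {"s_load_sales_fact"},
}


def execution_waves(deps: Dict[str, Set[str]]) -> List[List[str]]:
    """Group tasks into waves; tasks in the same wave have no mutual dependencies."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError if the dependencies are circular
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


waves = execution_waves(dependencies)
```

Here the two dimension loads land in the first wave and could run side by side, while the fact load and the aggregates must wait their turn.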

2. Can you explain the difference between concurrent and sequential task execution in PowerCenter workflows?

An ideal response should clearly differentiate between concurrent and sequential task execution:

- Sequential execution: Tasks run one after another in a predefined order. Each task must complete before the next one starts. This is useful when tasks have dependencies or when resources need to be managed carefully.

- Concurrent execution: Multiple tasks run simultaneously, which can improve overall workflow performance. This is beneficial when tasks are independent of each other and system resources allow for parallel processing.

Look for candidates who can explain scenarios where each type of execution is preferable and how to configure workflows accordingly. They should also mention potential challenges with concurrent execution, such as resource contention and data consistency issues.
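
The two execution styles can be compared with a small Python sketch. It uses `time.sleep` as a stand-in for independent session runs and a thread pool for the concurrent case; the task names and the `run_task` helper are hypothetical, and nothing here calls PowerCenter.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import List


def run_task(name: str, seconds: float = 0.05) -> str:
    """Stand-in for a session run: wait, then report completion."""
    time.sleep(seconds)
    return f"{name}: done"


def run_sequential(names: List[str]) -> List[str]:
    """Each task starts only after the previous one has finished."""
    return [run_task(name) for name in names]


def run_concurrent(names: List[str]) -> List[str]:
    """Independent tasks start together; results come back in input order."""
    with ThreadPoolExecutor(max_workers=len(names)) as pool:
        return list(pool.map(run_task, names))


tasks = ["s_load_customers", "s_load_products", "s_load_suppliers"]
sequential_results = run_sequential(tasks)
concurrent_results = run_concurrent(tasks)
```

Both calls produce the same results; the concurrent version simply overlaps the waiting time. That only holds because the tasks share no state, which is exactly the condition candidates should raise when they talk about resource contention and data consistency.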

3. How would you implement a restart capability for a failed workflow in PowerCenter?

A comprehensive answer should outline the following steps:

- Use session properties to enable session recovery

- Implement checkpoints in the workflow to mark progress

- Utilize workflow variables to store and retrieve state information

- Create a recovery strategy using Decision tasks and conditional branches

- Implement error handling to capture and log failure points

Candidates should emphasize the importance of designing workflows with restart capability in mind from the beginning. Look for those who mention testing restart scenarios and maintaining clear documentation of the restart process for operations teams.
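
The core of any restart design is recording which steps have completed so a rerun can skip them. The Python sketch below shows that idea with a JSON state file; the file, the task tuples, and `run_with_restart` are all hypothetical and stand in for whatever state store a real workflow would use. It does not reproduce PowerCenter's own recovery feature.

```python
import json
import os
from typing import Callable, List, Tuple


def run_with_restart(tasks: List[Tuple[str, Callable[[], None]]], state_path: str) -> List[str]:
    """Run tasks in order, skipping any recorded as complete in `state_path`.

    Returns the names of the tasks executed during this call.
    """
    completed: List[str] = []
    if os.path.exists(state_path):
        with open(state_path) as handle:
            completed = json.load(handle)

    executed = []
    for name, action in tasks:
        if name in completed:
            continue  # finished in an earlier attempt
        action()  # if this raises, the state file still reflects the last good task
        completed.append(name)
        with open(state_path, "w") as handle:
            json.dump(completed, handle)
        executed.append(name)
    return executed
```

If the second of three tasks fails, the state file lists only the first, so the next call resumes at the second task instead of repeating work. This assumes each task is safe to rerun from its start, a point worth probing in the interview.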

4. Describe how you would use worklets in a PowerCenter workflow and their advantages.

A strong answer should explain that worklets are reusable workflow objects that can be embedded within other workflows. Candidates should highlight the following advantages:

- Modularity: Worklets allow for breaking down complex workflows into manageable, reusable components

- Maintainability: Changes to a worklet affect all workflows using it, simplifying updates

- Standardization: Worklets enforce consistent processes across different workflows

- Flexibility: They can be parameterized to handle various scenarios

Look for candidates who can provide examples of when they've used worklets, such as for error handling, logging, or common data processing tasks. They should also mention any challenges they've faced with worklets and how they overcame them.

5. How do you approach performance tuning for a complex PowerCenter workflow?

An ideal response should outline a systematic approach to performance tuning:

- Analyze workflow logs and session logs to identify bottlenecks

- Review mapping designs for optimization opportunities (e.g., pushdown optimization, caching strategies)

- Examine source and target configurations for potential improvements

- Consider partitioning and bulk loading techniques where appropriate

- Optimize session properties such as buffer sizes and commit intervals

- Evaluate and adjust concurrent vs. sequential task execution

- Implement performance monitoring and establish benchmarks

Look for candidates who emphasize the importance of iterative testing and measurement throughout the tuning process. They should also mention collaboration with database administrators and infrastructure teams to ensure a holistic approach to performance optimization.

6. Explain how you would implement dynamic partitioning in a PowerCenter workflow.

A comprehensive answer should cover the following points:

- Use of partition points in mappings to define where data will be split

- Configuration of dynamic partitioning in session properties

- Explanation of partition types (hash, round-robin, key range, etc.)

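- Choice of a dynamic partitioning method, for example based on source partitioning, the number of CPUs or grid nodes, or a partition count supplied through the $DynamicPartitionCount session parameter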

- Consideration of target table partitioning for improved load performance

Candidates should discuss the benefits of dynamic partitioning, such as improved parallelism and scalability. Look for those who can explain how they've used dynamic partitioning to solve real-world performance challenges in data integration projects.
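To make the partition types concrete in an interview discussion, here is a minimal, tool-agnostic sketch in Python. It is not PowerCenter code and does not reflect how the Integration Service is implemented; the function names and sample rows are hypothetical and only illustrate how round-robin, hash, and key-range strategies distribute rows differently.

```python
import bisect
import zlib
from itertools import cycle


def round_robin_partition(rows, n):
    """Deal rows out evenly, one partition at a time."""
    partitions = [[] for _ in range(n)]
    for target, row in zip(cycle(range(n)), rows):
        partitions[target].append(row)
    return partitions


def hash_partition(rows, n, key):
    """Send rows that share a key value to the same partition."""
    partitions = [[] for _ in range(n)]
    for row in rows:
        # crc32 is stable across runs, unlike Python's built-in hash() for strings
        bucket = zlib.crc32(str(row[key]).encode("utf-8")) % n
        partitions[bucket].append(row)
    return partitions


def key_range_partition(rows, boundaries, key):
    """Assign rows by value range; boundaries are ascending, exclusive upper bounds."""
    partitions = [[] for _ in range(len(boundaries) + 1)]
    for row in rows:
        index = bisect.bisect_right(boundaries, row[key])
        partitions[index].append(row)
    return partitions


# Hypothetical sample data
rows = [
    {"id": i, "region": region}
    for i, region in enumerate(["east", "west", "east", "north", "west", "east"])
]
even = round_robin_partition(rows, 3)
by_region = hash_partition(rows, 3, "region")
by_id_range = key_range_partition(rows, [2, 4], "id")
```

Round-robin balances row counts but scatters related rows, while hash and key-range keep related rows together at the risk of skew. A good candidate can explain which trade-off matters for a given transformation, such as an Aggregator that needs all rows of a group in one partition.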

7. How would you implement a notification system for critical events in a PowerCenter workflow?

A strong response should outline a multi-faceted approach to notifications:

- Utilize PowerCenter's built-in notification features in workflow and session properties

- Implement custom scripts or commands to send notifications via email, SMS, or other channels

- Use event-based tasks in the workflow to trigger notifications based on specific conditions

- Integrate with external monitoring systems or enterprise notification platforms

- Set up different notification levels based on event severity (info, warning, error)

Look for candidates who emphasize the importance of clear, actionable notifications and discuss how they've implemented notification systems that balance informativeness with avoiding alert fatigue. They should also mention considerations for escalation procedures and on-call rotations for critical issues.
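The idea of severity levels combined with protection against alert fatigue can be sketched in a few lines of Python. This is an illustration of the routing logic a custom notification script might apply, not a PowerCenter feature; the channel names, the `Notifier` class, and the cooldown value are all assumptions.

```python
from dataclasses import dataclass, field

# Hypothetical severity-to-channel routing table; channel names are placeholders.
ROUTES = {
    "info": ["log"],
    "warning": ["log", "email"],
    "error": ["log", "email", "pager"],
}


@dataclass
class Notifier:
    cooldown_seconds: int = 900
    _last_sent: dict = field(default_factory=dict)

    def channels_for(self, workflow, severity, now):
        """Return the channels to notify, or [] if the same event fired recently."""
        if severity not in ROUTES:
            raise ValueError(f"unknown severity: {severity}")
        event_key = (workflow, severity)
        last = self._last_sent.get(event_key)
        if last is not None and now - last < self.cooldown_seconds:
            return []  # suppress repeats inside the cooldown window
        self._last_sent[event_key] = now
        return ROUTES[severity]
```

Here `now` is a timestamp in seconds supplied by the caller, which keeps the logic easy to test. Keying the cooldown on both workflow and severity means a repeated warning is suppressed, but a later escalation to error still goes out.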

8. Describe how you would implement a data validation workflow in PowerCenter to ensure data quality.

An ideal answer should include the following components:

- Use of Source Qualifier transformations to perform initial data checks

- Implementation of Filter and Router transformations to identify and route invalid data

- Utilization of Lookup transformations for reference data validation

- Application of Expression transformations for complex validation rules

- Creation of separate error tables or files to capture rejected records

- Implementation of aggregate checks to validate overall data quality metrics

- Use of post-session success/failure emails to report validation results

Look for candidates who emphasize the importance of designing workflows with clear error handling and reporting mechanisms. They should also discuss strategies for handling different types of data quality issues and the importance of working closely with business stakeholders to define and refine validation rules.
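The components above amount to a "validate, then route" pattern. The Python sketch below shows that pattern in a tool-agnostic way: a reference set stands in for a Lookup, rule checks stand in for Expression logic, and the split into valid and rejected rows mirrors what a Router would do. The column names and rules are hypothetical.

```python
VALID_COUNTRIES = {"US", "DE", "IN"}  # stand-in for a reference (lookup) table


def validate(row):
    """Return a list of rule violations for one row (empty list means valid)."""
    errors = []
    if not row.get("customer_id"):
        errors.append("customer_id is missing")
    if row.get("country") not in VALID_COUNTRIES:
        errors.append("country not in reference data")
    amount = row.get("amount")
    if not isinstance(amount, (int, float)) or amount < 0:
        errors.append("amount must be a non-negative number")
    return errors


def route(rows):
    """Split rows into valid records and rejects annotated with their reasons."""
    valid, rejected = [], []
    for row in rows:
        errors = validate(row)
        if errors:
            rejected.append({**row, "reject_reasons": errors})
        else:
            valid.append(row)
    return valid, rejected
```

Keeping the reject reasons alongside each rejected record is the part candidates often skip, and it is what makes an error table useful to business stakeholders.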

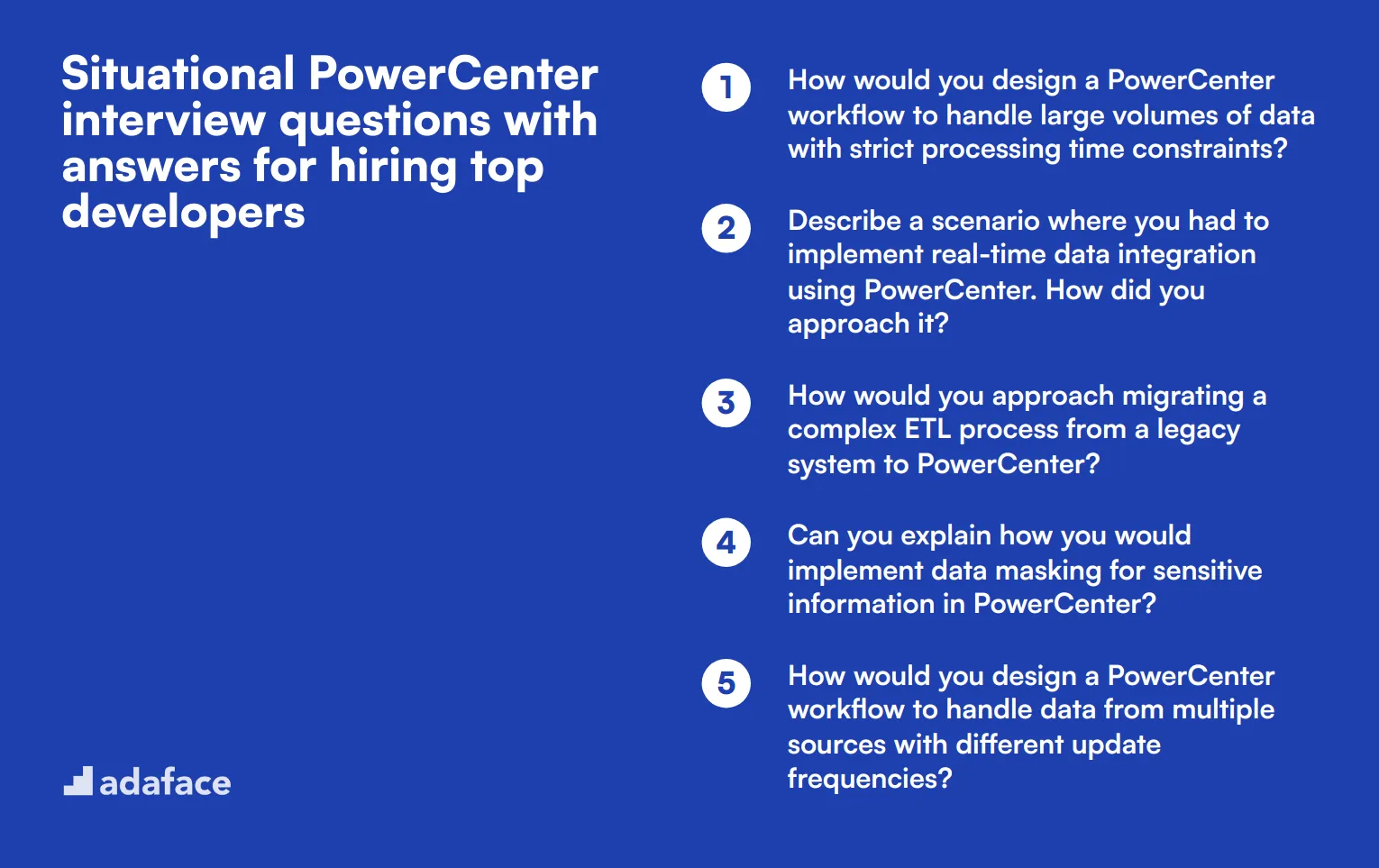

7 situational PowerCenter interview questions with answers for hiring top developers

When interviewing PowerCenter developers, situational questions can provide valuable insights into a candidate's problem-solving skills and real-world experience. These seven questions will help you assess how potential hires approach complex scenarios and leverage PowerCenter's capabilities to overcome challenges. Use them to evaluate candidates effectively and identify top talent for your data integration projects.

1. How would you design a PowerCenter workflow to handle large volumes of data with strict processing time constraints?

A strong candidate should outline a strategy that includes:

- Partitioning the data to enable parallel processing

- Utilizing pushdown optimization to reduce data movement

- Implementing aggressive caching strategies

- Considering the use of high-performance readers and writers

- Optimizing session configurations for memory and CPU usage

Look for candidates who demonstrate an understanding of PowerCenter's scalability features and can explain how they would balance performance with resource utilization. Follow up by asking about specific experiences where they've implemented such optimizations.

2. Describe a scenario where you had to implement real-time data integration using PowerCenter. How did you approach it?

An experienced candidate might describe:

- Using PowerCenter's real-time capabilities, such as Web Services Hub or message-queue sources like JMS

- Implementing Change Data Capture (CDC) techniques

- Setting up event-based workflows

- Utilizing PowerExchange for real-time connectivity

- Configuring appropriate error handling and recovery mechanisms

Pay attention to how the candidate balances real-time requirements with system performance and data integrity. Ask follow-up questions about specific challenges they faced and how they overcame them in their real-time integration projects.

3. How would you approach migrating a complex ETL process from a legacy system to PowerCenter?

A thoughtful response should include:

- Analyzing the existing ETL process and documenting current workflows

- Identifying PowerCenter equivalents for legacy transformations and functions

- Creating a mapping between old and new data structures

- Developing a phased migration plan to minimize disruption

- Implementing thorough testing and validation procedures

- Planning for performance optimization post-migration

Look for candidates who emphasize the importance of understanding both the legacy system and PowerCenter's capabilities. Their approach should demonstrate project management skills and attention to detail in ensuring a smooth transition.
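One way to probe the testing and validation step is to ask how the candidate would prove that the migrated process produces the same output as the legacy one. The sketch below is one possible reconciliation approach in Python, assuming both outputs can be read as lists of dictionaries; the function names and the choice of SHA-256 row fingerprints are illustrative assumptions.

```python
import hashlib


def row_fingerprint(row, columns):
    """Hash the compared columns so rows can be checked without field-by-field diffs."""
    joined = "|".join(str(row[column]) for column in columns)
    return hashlib.sha256(joined.encode("utf-8")).hexdigest()


def reconcile(legacy_rows, new_rows, key, columns):
    """Compare two result sets by business key and report the differences."""
    legacy = {row[key]: row_fingerprint(row, columns) for row in legacy_rows}
    new = {row[key]: row_fingerprint(row, columns) for row in new_rows}
    shared = legacy.keys() & new.keys()
    return {
        "missing_in_new": sorted(legacy.keys() - new.keys()),
        "unexpected_in_new": sorted(new.keys() - legacy.keys()),
        "mismatched": sorted(k for k in shared if legacy[k] != new[k]),
    }
```

Running the legacy and migrated jobs in parallel for a few cycles and reconciling their outputs this way gives concrete evidence before the legacy process is switched off.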

4. Can you explain how you would implement data masking for sensitive information in PowerCenter?

A knowledgeable candidate should describe:

- Using Expression transformations to apply masking algorithms

- Implementing lookup transformations to replace sensitive data with masked values

- Utilizing the Data Masking Option if available in the PowerCenter version

- Ensuring consistent masking across different mappings and workflows

- Implementing role-based access control for masked data

Evaluate the candidate's understanding of data privacy concerns and their ability to balance security requirements with data usability. Ask about their experience with specific masking techniques and how they ensure the masked data remains useful for its intended purpose.
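Consistent masking means the same input always yields the same masked value, so joins across mappings still work. The Python sketch below shows one common way to achieve that with a keyed hash (HMAC-SHA256). It is an illustration of the concept rather than how PowerCenter's masking features work internally; the function names and the truncation length are assumptions.

```python
import hashlib
import hmac


def mask_value(value, secret_key, length=12):
    """Deterministically pseudonymize a string with HMAC-SHA256."""
    digest = hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    # Truncating keeps values short but raises the (small) chance of collisions.
    return digest[:length]


def mask_email(email, secret_key):
    """Mask the local part but keep the domain so the value stays useful for analysis."""
    local, _, domain = email.partition("@")
    return f"{mask_value(local, secret_key)}@{domain}"
```

Because the output depends on the secret key, the key must be stored securely and shared by every job that needs matching masked values. Follow up by asking how the candidate would rotate such a key.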

5. How would you design a PowerCenter workflow to handle data from multiple sources with different update frequencies?

An effective answer might include:

- Creating separate workflows for each data source

- Implementing a master workflow to orchestrate the individual source workflows

- Using parameters to control update frequencies

- Designing flexible mappings that can handle full and incremental loads

- Implementing proper error handling and notification mechanisms

Look for candidates who demonstrate an understanding of PowerCenter's workflow management capabilities and can explain how they would ensure data consistency across sources with different update cycles. Ask about their experience with complex data integration scenarios involving multiple sources.
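The combination of per-source frequencies and full versus incremental loads usually comes down to two pieces of state per source: when it last ran and the latest change already loaded (a high-water mark). In PowerCenter that state is commonly kept in mapping variables or a parameter file; the Python sketch below only illustrates the logic, and the `updated_at` column and function names are hypothetical.

```python
from datetime import datetime, timedelta


def is_due(last_run, frequency, now):
    """A source is due when it has never run or its interval has elapsed."""
    return last_run is None or now - last_run >= frequency


def select_rows(rows, watermark):
    """Full load when there is no watermark, otherwise only rows changed since it."""
    if watermark is None:
        return list(rows)
    return [row for row in rows if row["updated_at"] > watermark]


def next_watermark(loaded_rows, watermark):
    """Advance the watermark to the latest change loaded; keep it if nothing was loaded."""
    return max((row["updated_at"] for row in loaded_rows), default=watermark)


# Hypothetical usage: an hourly source that last ran 90 minutes ago
now = datetime(2024, 1, 1, 12, 0)
due = is_due(now - timedelta(minutes=90), timedelta(hours=1), now)
```

Advancing the watermark only after a successful load is the detail to listen for, since updating it too early silently drops rows when a session fails.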

6. Describe how you would implement a data quality firewall in PowerCenter to prevent bad data from entering your target systems.

A comprehensive answer should cover:

- Using Source Qualifier transformations to apply initial data quality checks

- Implementing Filter and Router transformations to segregate bad data

- Utilizing Lookup transformations for data validation against reference tables

- Applying business rules through Expression transformations

- Creating error tables or files to capture rejected records

- Implementing notifications for data quality issues

Assess the candidate's understanding of data quality principles and their ability to leverage PowerCenter's features to enforce data quality standards. Inquire about their experience in handling data quality issues and how they've measured the effectiveness of their data quality firewalls.
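What turns row-level checks into a firewall is a gate that blocks the load when too much of a batch is bad. The sketch below shows that decision in Python; the 2% default threshold and the function names are assumptions for illustration, and a real threshold should come from business stakeholders.

```python
def reject_rate(total_rows, rejected_rows):
    """Fraction of the batch that failed validation."""
    if total_rows <= 0:
        raise ValueError("total_rows must be positive")
    if not 0 <= rejected_rows <= total_rows:
        raise ValueError("rejected_rows must be between 0 and total_rows")
    return rejected_rows / total_rows


def firewall_decision(total_rows, rejected_rows, max_reject_rate=0.02):
    """Return 'load' when the batch is clean enough, otherwise 'block'."""
    rate = reject_rate(total_rows, rejected_rows)
    return "load" if rate <= max_reject_rate else "block"
```

Tracking the reject rate per run over time also gives the candidate a simple answer to how they would measure whether the firewall is effective.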

7. How would you approach performance tuning for a PowerCenter workflow that's taking too long to complete?

A strong candidate should outline a systematic approach:

- Analyzing session logs to identify bottlenecks

- Reviewing mapping designs for optimization opportunities

- Evaluating partitioning strategies

- Optimizing source and target configurations

- Adjusting cache settings and memory allocation

- Considering hardware upgrades or distributed processing if necessary

Look for candidates who demonstrate a methodical approach to performance tuning and can explain how they would measure the impact of their optimizations. Ask about specific tools or techniques they've used for performance analysis in PowerCenter environments.
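Measuring the impact of a tuning change can be as simple as comparing throughput across several runs before and after. The Python sketch below assumes run statistics are available as (rows processed, elapsed seconds) pairs, for example copied from session run details; the function names and figures are hypothetical.

```python
from statistics import median


def median_throughput(runs):
    """Median rows per second over several runs, which dampens one-off outliers."""
    return median(rows / seconds for rows, seconds in runs)


def percent_change(baseline_runs, tuned_runs):
    """Relative throughput change of the tuned runs against the baseline, in percent."""
    before = median_throughput(baseline_runs)
    after = median_throughput(tuned_runs)
    return (after - before) / before * 100


# Hypothetical measurements: (rows processed, elapsed seconds)
baseline = [(1_000_000, 500), (1_000_000, 520), (1_000_000, 480)]
tuned = [(1_000_000, 400), (1_000_000, 410), (1_000_000, 390)]
improvement = percent_change(baseline, tuned)  # 25.0
```

Using the median of several runs rather than a single run guards against crediting a tuning change for what was really a quiet period on the database or network.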

Which PowerCenter skills should you evaluate during the interview phase?

While a single interview may not uncover every facet of a candidate's capabilities, focusing on specific skills can provide a deeper understanding of their potential in PowerCenter roles. Here, we outline the key skills to assess to ensure candidates are well-equipped to handle the challenges of PowerCenter environments.

Data Integration Expertise

Data integration is the backbone of PowerCenter, enabling the merging of data from different sources. A strong grasp of data integration principles is necessary to leverage PowerCenter effectively for ETL (Extract, Transform, Load) processes.

Consider utilizing an assessment test focused on Data Integration to evaluate candidates' understanding and proficiency. While Adaface does not currently offer a specific test for this skill, a general ETL online test could serve as a good preliminary filter.

During interviews, it’s also beneficial to include specific questions that relate to data integration challenges they might face using PowerCenter.

Can you describe a challenging data integration problem you solved using PowerCenter?

Look for detailed responses that highlight the candidate’s problem-solving approach, their use of PowerCenter features, and their ability to optimize data flows.

Workflow Management

Efficient workflow management is critical, as PowerCenter is extensively used for automating and managing workflows in data integration jobs. Candidates should demonstrate proficiency in manipulating workflows to optimize ETL processes.

To screen for Workflow Management skills, an MCQ test could be utilized to pre-assess candidates. Adaface offers this skill assessment in its library with the Informatica online test which covers PowerCenter-related skills.

Include questions in the interview to assess hands-on experience and understanding of workflow complexities in PowerCenter.

How would you optimize a workflow in PowerCenter to handle large data sets more efficiently?

Effective answers should include strategies for workflow optimization, such as partitioning data or using concurrent workflows, reflecting the candidate’s depth of knowledge.

Error Handling and Debugging

In PowerCenter, the ability to efficiently identify, debug, and handle errors during the ETL processes is indispensable. This ensures data integrity and reliability in data management tasks.

For a thorough evaluation, consider administering a test that includes scenarios requiring error diagnosis and debugging. Adaface’s Informatica online test could be effectively used here.

Probe into their practical skills with targeted interview questions about error handling in PowerCenter.

Describe a scenario where you identified and resolved a data discrepancy during an ETL process using PowerCenter.

Key aspects to look for in answers include the candidate’s methodical approach to troubleshooting, use of PowerCenter tools for debugging, and preventive strategies for future issues.

Tips for Effective PowerCenter Interview Processes

Before you start putting your PowerCenter interview knowledge into action, here are some tips to enhance your hiring process. These suggestions will help you make the most of your interviews and find the best candidates.

1. Incorporate Skills Tests in Your Screening Process

Using skills tests early in your hiring process can save time and improve the quality of your candidate pool. These assessments help you objectively evaluate a candidate's technical abilities before investing time in interviews.

For PowerCenter roles, consider using an Informatica online test to assess core skills. Additionally, an ETL online test can evaluate broader data integration knowledge.

Implementing these tests allows you to focus your interviews on candidates who have demonstrated the necessary technical skills. This approach streamlines your hiring process and increases the likelihood of finding the right fit for your team.

2. Prepare a Balanced Set of Interview Questions

With limited interview time, it's crucial to ask the right questions. Aim for a mix of technical PowerCenter questions and queries that assess problem-solving and communication skills.

Consider including questions about ETL processes and data modeling to get a comprehensive view of the candidate's expertise. This balanced approach helps you evaluate both technical proficiency and soft skills essential for the role.

3. Master the Art of Follow-Up Questions

Asking follow-up questions is key to understanding a candidate's true depth of knowledge. This technique helps you differentiate between memorized answers and genuine understanding.

For example, after asking about PowerCenter mappings, follow up with a question about optimizing mapping performance. This approach allows you to assess the candidate's practical experience and problem-solving skills in real-world scenarios.

Use PowerCenter interview questions and skills tests to hire talented developers

If you are looking to hire someone with PowerCenter skills, it is important to verify those skills accurately. The best way to do this is by using skill tests such as our Informatica Online Test or ETL Online Test.

Once you use these tests, you can shortlist the best applicants and call them for interviews. To get started, you can sign up here or explore our full test library for more options.

PowerCenter Interview Questions FAQs

You should ask a mix of general, junior, intermediate, and advanced questions covering topics like ETL processes and workflow management.

Use a combination of technical questions, situational scenarios, and practical skills tests to evaluate a candidate's PowerCenter expertise.

Yes, the post provides specific question sets for junior, mid-tier, and senior developers to match their expected skill levels.

This post includes a total of 80 PowerCenter interview questions across various categories and difficulty levels.
