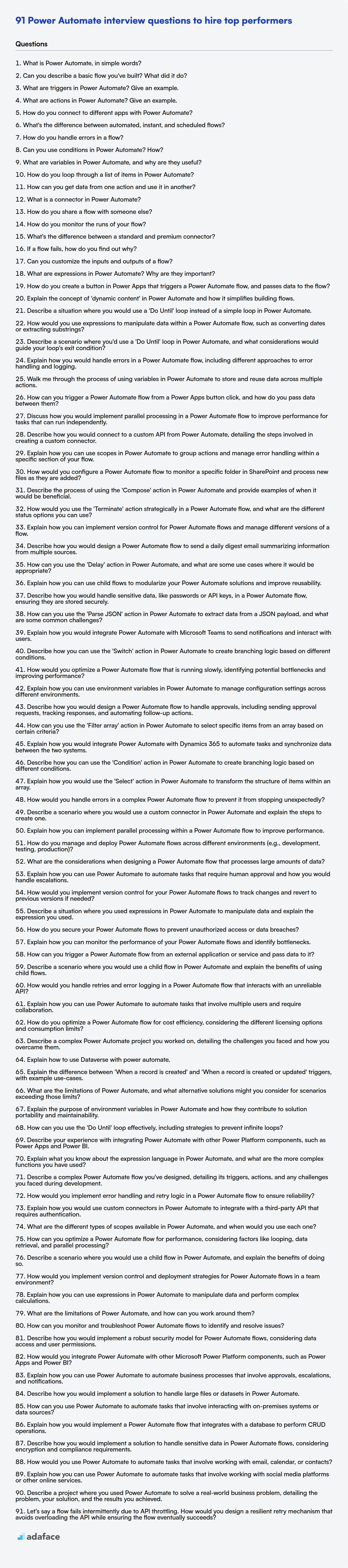

When evaluating candidates for Power Automate roles, it's important to ask the right questions to assess their abilities. Preparing a question bank ahead of the interviews can keep you on track.

This blog post provides a set of Power Automate interview questions categorized by skill level, including basic, intermediate, advanced, and expert, along with multiple-choice questions. These questions are tailored to help you gauge a candidate's expertise and suitability for the role, from understanding basic flow creation to more complex automation scenarios.

By using this curated list, you can streamline your interview process and make hiring decisions with more confidence, ensuring you bring the best Power Automate talent to your team. Before the interviews, you can also evaluate candidates using our Microsoft Power Platform Developer test.


Basic Power Automate interview questions

1. What is Power Automate, in simple words?

Power Automate is a cloud-based service that lets you automate repetitive tasks and workflows without writing code. Think of it as a digital assistant that can connect different apps and services to streamline your processes.

It uses a visual designer with pre-built connectors and triggers to create automated flows. For example, you can automatically save email attachments to OneDrive, get a notification when a new item is added to a SharePoint list, or post a message to Teams when a task is completed in Planner.

2. Can you describe a basic flow you've built? What did it do?

I built a flow that files invoice attachments automatically. It started with the Office 365 Outlook trigger 'When a new email arrives (V3)', set to fire only for messages with attachments. It saved each attachment to a SharePoint document library and posted a message to the finance team's Teams channel so they knew a new invoice had arrived.

Key steps included: an 'Apply to each' loop over the email's attachments, a Condition that skipped anything that wasn't a PDF, a SharePoint 'Create file' action that used the attachment's name and content from dynamic content, and a Teams 'Post message in a chat or channel' action. I also added an error path with 'Configure run after' that emailed me if any step failed.

3. What are triggers in Power Automate? Give an example.

Triggers in Power Automate are events that start a flow. They essentially listen for specific occurrences and, when those occur, initiate the workflow. Triggers can be based on a schedule (e.g., daily, weekly), an event in a connected service (e.g., a new email arrives in Outlook), or a manual trigger (e.g., a button click).

For example, a trigger could be 'When a new file is created in a SharePoint library'. When a user uploads a new document to that library, the flow is automatically started. The flow might then send an email notification about the new file or move the file to another folder.

4. What are actions in Power Automate? Give an example.

Actions in Power Automate are the building blocks of a flow. They represent the tasks you want the flow to perform. Each action performs a specific operation, such as sending an email, creating a file, or updating a database record. Essentially, they define what the flow should do.

For example, the "Send an email (V2)" action is used to send an email. You configure the action with details like the recipient's address, subject, and body of the email. Other examples include: Create file, Update row, Post message in Teams etc.

5. How do you connect to different apps with Power Automate?

Power Automate connects to different apps primarily through connectors. Connectors act as a bridge, allowing Power Automate to interact with various services and applications. These connectors provide pre-built triggers and actions that simplify the process of automating tasks across different apps.

To connect, you typically search for the desired connector (e.g., 'SharePoint', 'Twitter', 'Salesforce'), authenticate using your account credentials for that service, and then use the available triggers and actions to build your flow. Some connectors are 'standard' and included with Power Automate licenses, while others are 'premium' and may require separate licensing. Custom connectors can also be created to connect to APIs that don't have pre-built connectors.

6. What's the difference between automated, instant, and scheduled flows?

Automated, instant, and scheduled flows are Power Automate flow types that differ in how they are triggered. An automated flow is triggered by an event happening in a connected service, such as a file being added to OneDrive or a new email arriving. An instant flow (also known as a button flow) is triggered manually, either from a button in the Power Automate mobile app or from within a connected application. Scheduled flows run on a predefined schedule (e.g., daily, weekly, monthly), regardless of any external event.

7. How do you handle errors in a flow?

Error handling in Power Automate is built around the 'Configure run after' setting. By default, an action runs only if the previous action succeeded; you can change this so that an action also runs when the previous one has failed, has timed out, or was skipped. Grouping actions into a 'Try' Scope and adding a 'Catch' Scope that runs only after the Try scope fails gives you a try-catch pattern. Inside the catch path, you can log the error, send a notification, or turn the error details into a friendlier message.

Common strategies include this scope-based try-catch pattern, retry policies with exponential backoff, and centralized error logging and monitoring. Many actions have a Retry Policy in their settings, where you can choose Default, None, Exponential interval, or Fixed interval and set the retry count and interval. To raise a custom error, you can use the 'Terminate' action with its status set to Failed and supply your own error code and message; a catch path or the calling process can then react to it.
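Retry with exponential backoff means waiting longer after each failed attempt. The Python sketch below models that idea outside Power Automate, purely for illustration: the function name and the default retry count, base delay, and cap are assumptions, and the platform's documented exponential interval policy also randomizes each wait within a growing range, which this simplified version omits.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    operation: Callable[[], T],
    max_retries: int = 4,
    base_delay: float = 1.0,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `operation`, retrying on any exception with exponentially growing waits.

    Hypothetical helper for illustration; not part of any Power Automate API.
    """
    attempt = 0
    while True:
        try:
            return operation()
        except Exception:
            if attempt >= max_retries:
                raise  # retries exhausted: surface the last error
            # Wait base_delay, 2 * base_delay, 4 * base_delay, ... capped at max_delay.
            sleep(min(base_delay * (2 ** attempt), max_delay))
            attempt += 1
```

Injecting `sleep` keeps the sketch testable. With the defaults, an operation that fails three times and then succeeds waits 1, 2, and 4 seconds before the successful call.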

8. Can you use conditions in Power Automate? How?

Yes, you can use conditions in Power Automate. The primary way to implement conditions is by using the Condition action. This action allows you to create branching logic based on whether a specific expression evaluates to true or false.

Inside the Condition action, you define one or more comparisons to evaluate. You can use various operators such as equals, not equals, greater than, less than, contains, etc., to compare values. Based on the result, the flow executes either the true branch ('If yes' in the classic designer) or the false branch ('If no'). You can nest conditions, or add more rows and groups (combined with And or Or) to a single condition to build complex logic.

9. What are variables in Power Automate, and why are they useful?

Variables in Power Automate are containers that store data. This data can be of various types, such as strings, numbers, dates, or booleans. They are useful because they allow you to store and manipulate data within your flows, making your flows more dynamic and flexible.

They are useful for:

- Storing values: Store data to be used later in the flow.

- Tracking progress: Keep track of loop iterations or process states.

- Performing calculations: Store the results of calculations.

- Conditional logic: Store values to be evaluated in if conditions.

- Improving readability: Using descriptive variable names improves flow understanding.

10. How do you loop through a list of items in Power Automate?

In Power Automate, you can loop through a list of items using the Apply to each control. This control iterates over each item in an array (which is your list). You configure it by providing the array as input. Within the Apply to each control, you can then add actions to perform on each item.

Here's a basic example:

- Obtain your list (e.g., from a SharePoint list, a variable, or a previous action's output).

- Add an Apply to each control to your flow.

- In the Select an output from previous steps field of the Apply to each control, select the array (list) you want to iterate over.

- Inside the Apply to each control, add the actions you want to perform on each item in the list. You can access the current item using the item() expression. For example, to access a property named 'Title' of each item, you might use item()?['Title'].
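The behavior of Apply to each combined with item()?['Title'] can be modelled in Python as a loop over a list of dictionaries. This is an illustrative analogy, not Power Automate code: the sample rows are hypothetical, and `dict.get` stands in for the ?[...] operator, which returns null instead of failing when a property is missing.

```python
from typing import Any, Dict, List, Optional


def collect_titles(items: List[Dict[str, Any]]) -> List[Optional[str]]:
    """Model of Apply to each over `items`, reading item()?['Title'] for each one."""
    titles = []
    for item in items:  # Apply to each: one iteration per array element
        # item()?['Title'] yields null (None here) instead of an error when 'Title' is absent.
        titles.append(item.get("Title"))
    return titles


rows = [{"Title": "Invoice 1"}, {"Title": "Invoice 2"}, {"Id": 3}]
print(collect_titles(rows))  # ['Invoice 1', 'Invoice 2', None]
```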

11. How can you get data from one action and use it in another?

The most common way is dynamic content: when you configure an action, the dynamic content panel lists the outputs of the trigger and every earlier action, and selecting one inserts a token that is filled in at run time. For more control, you can reference outputs in expressions with functions such as outputs() and body(), using the action's name with spaces replaced by underscores (for example, body('Get_items')), or triggerBody() for the trigger's data.

Another way is to store the data in a variable or a Compose action. The first action's output is saved with 'Set variable' (or used as the input of a Compose), and later actions read it with variables('VariableName') or outputs('ComposeName'). This is useful when a value is reused many times or needs to be built up across a loop.

12. What is a connector in Power Automate?

In Power Automate, a connector is a proxy or a wrapper around an API that allows Power Automate to communicate with other applications, services, or data sources. Connectors provide a way to trigger flows and perform actions using those external systems without needing to write code to handle the API interactions directly.

Essentially, they handle the authentication, data formatting, and communication protocols required to interact with a specific service like SharePoint, Twitter, or SQL Server. Connectors are classified as standard (included with Power Automate licenses) or premium (requiring additional licensing), and you can build custom connectors for APIs that have no pre-built connector. A connector can provide triggers (which start flows), actions (which perform operations), or both.

13. How do you share a flow with someone else?

In Power Automate, open the flow and select Share to add other users or groups as co-owners. Co-owners can edit the flow, view its run history, and manage its connections. For flows with a manual trigger, you can instead add run-only users, who can run the flow but cannot edit it.

If someone needs their own independent copy, you can use Send a copy, or export the flow as a package (.zip) that they import into their environment. For team or production scenarios, flows are usually placed in a solution, which can be exported and imported between environments.

14. How do you monitor the runs of your flow?

I monitor flow runs primarily through the flow's run history in Power Automate. The flow details page lists each run with its start time, duration, and status, and opening a run shows every action with its inputs, outputs, and any error. For failures, I add a catch path (a Scope with 'Configure run after' set to 'has failed') that sends an email or Teams message, so I'm alerted without having to check manually.

For broader monitoring, I use the flow's analytics and the Power Platform admin center to track run counts and failure trends across flows. I also log key steps, such as the run ID and the values being processed, to a SharePoint list or Dataverse table. This makes it easier to trace the root cause of an issue and to build dashboards, for example in Power BI.

15. What's the difference between a standard and premium connector?

In Power Automate and Power Apps, connectors allow you to connect to various data sources and services. Standard connectors are typically included with your Microsoft Power Platform license and allow access to commonly used services like SharePoint, Outlook, Twitter, and Microsoft Forms. Premium connectors, on the other hand, require a premium license to use and provide access to more specialized or enterprise-level services like Salesforce, SQL Server, Azure services, and custom APIs.

The key difference lies in the licensing requirement and the types of services they connect to. Standard connectors cater to basic integration needs, while premium connectors unlock more advanced and powerful integration capabilities for business-critical systems, but come at an additional cost.

16. If a flow fails, how do you find out why?

When a flow fails, I start by opening the failed run from the flow's run history. The action that failed is flagged, and selecting it shows the error code and message along with the action's inputs and outputs. Common causes include expired connections, missing permissions, throttling, and invalid or missing data (for example, a null value passed to an expression). Analyzing the error message is usually the fastest way to pinpoint the root cause.

Next, I would investigate any recent changes to the flow or its dependencies. Edits to the flow, changed connections or credentials, and changes to connected services (such as a renamed SharePoint column) can often introduce failures. I'd also look at the flow's input data to ensure it's valid and within expected parameters. Once the cause is fixed, I can use Resubmit to re-run the failed run with the same trigger data.

17. Can you customize the inputs and outputs of a flow?

Yes, you can customize the inputs and outputs of a flow. Inputs are defined on the trigger: the 'Manually trigger a flow' trigger can prompt for text, yes/no, file, email, number, or date inputs; the 'When Power Apps calls a flow (V2)' trigger defines typed parameters that the app supplies; and the 'When a HTTP request is received' trigger accepts a JSON body described by a request schema. Outputs are defined with response actions such as 'Respond to a PowerApp or flow', which returns named values to the caller, or 'Response', which returns a status code, headers, and body to an HTTP caller.

For example, a flow might accept a JSON object with a defined schema through an HTTP request trigger, use Parse JSON and expressions to validate and transform the data, write the results to a SharePoint list, and return a summary to the caller. Each step can be customized by choosing which fields are used and how they are formatted.

18. What are expressions in Power Automate? Why are they important?

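Expressions in Power Automate are formulas that perform calculations, manipulate data, and control the flow of your automation. They are written in the Workflow Definition Language that Power Automate shares with Azure Logic Apps, whose functions (such as concat(), formatDateTime(), and if()) look somewhat like Excel formulas. In the designer, you enter them through the Expression tab of the dynamic content panel; in the underlying flow definition, an expression starts with @ (for example, @variables('myNumber')), and @{...} embeds an expression inside a string.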

Expressions are crucial because they enable dynamic behavior. Instead of using static values, you can use expressions to work with variables, date/time values, and perform logical operations. This allows you to build more flexible and adaptable flows that can handle a variety of scenarios. For instance, you can use an expression to concatenate strings, calculate dates, or make decisions based on the value of a variable such as if(greater(variables('myNumber'), 10), 'Large', 'Small').

19. How do you create a button in Power Apps that triggers a Power Automate flow, and passes data to the flow?

To create a button in Power Apps that triggers a Power Automate flow and passes data, first create a flow that starts with the 'When Power Apps calls a flow (V2)' trigger. The trigger defines the input parameters the flow will receive. In Power Apps, add the flow to your app, then add a button control. In the button's OnSelect property, call the flow by name with its Run() function (FlowName.Run()), passing the desired data as arguments. These arguments correspond to the input parameters defined in your flow's trigger. For example, MyFlow.Run(TextInput1.Text, Dropdown1.Selected.Value).

Make sure your Power Apps environment and Power Automate environment are the same, and the Power Apps user has permissions to run the flow. The passed data will be available in the flow as trigger inputs, which you can then use in subsequent actions within the flow.

20. Explain the concept of 'dynamic content' in Power Automate and how it simplifies building flows.

Dynamic content in Power Automate refers to the data available from previous steps in a flow. Instead of hardcoding values, you can use tokens representing these values. These tokens act as placeholders that are automatically populated with the actual data when the flow runs. For example, if your flow starts with a 'When a new email arrives' trigger, dynamic content includes information like the sender's address, subject, and body of the email.

Using dynamic content simplifies flow creation by allowing you to easily pass data between actions. You don't need to write expressions to extract common values such as an email's subject or a list item's ID; Power Automate exposes them as tokens. When you're configuring an action, a dynamic content panel appears, displaying available values from previous steps. You can simply select the desired value, and Power Automate inserts the corresponding token into the action's input field.

21. Describe a situation where you would use a 'Do Until' loop instead of a simple loop in Power Automate.

I would use a 'Do Until' loop in Power Automate when I need to execute a set of actions at least once, and then continue looping based on a specific condition. Unlike a simple loop, 'Do Until' guarantees that the actions within the loop will run one time before the condition is evaluated. A suitable example is retrying an API call that might initially fail due to a temporary network issue. The flow will attempt the API call, and then check if the call was successful. If not, it retries until a maximum number of attempts or a success is achieved.

Specifically, if I want to retry an action a certain number of times or until a specific condition is met, but always try at least one time, 'Do Until' is the better option. A common pattern pairs the loop with variables that track the number of attempts and whether the action succeeded. For instance, consider this scenario (the limit of 3 retries is only an example):

```
Initialize variable retryCount = 0
Initialize variable maxRetries = 3
Initialize variable successCondition = false

Do Until retryCount >= maxRetries OR successCondition == true
    Increment variable retryCount
    // Attempt the action
    If (Action Succeeded)
        Set variable successCondition = true
    Else
        // Wait before retrying, for example with a Delay action
    End If
End Do
```
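To see why Do Until always runs its body at least once, here is a Python model of the pseudocode above. It is an analogy rather than Power Automate code: `attempt` stands in for the action being retried, and the function name and the injected `sleep` are assumptions made so the sketch is testable. Unlike the pseudocode, it skips the wait after the final attempt, since no retry follows it.

```python
import time
from typing import Callable, Tuple


def do_until_retry(
    attempt: Callable[[], bool],
    max_retries: int = 3,
    wait_seconds: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Tuple[bool, int]:
    """Model of a Do Until retry loop. Returns (succeeded, attempts_made)."""
    retry_count = 0
    success = False
    while True:
        # Loop body: increment the counter, then attempt the action.
        retry_count += 1
        if attempt():
            success = True
        elif retry_count < max_retries:
            sleep(wait_seconds)  # wait before retrying
        # Do Until checks its exit condition after the body, so the body always runs once.
        if retry_count >= max_retries or success:
            return success, retry_count
```

Even with `max_retries=0`, the model makes one attempt, which mirrors Do Until evaluating its condition only after the first pass.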

Intermediate Power Automate interview questions

1. How would you use expressions to manipulate data within a Power Automate flow, such as converting dates or extracting substrings?

In Power Automate, expressions are used to manipulate data within flows. For example, to convert a date, I'd use the formatDateTime() function. formatDateTime(triggerBody()?['Date'], 'yyyy-MM-dd') would format a date from a trigger. Extracting substrings can be done with functions like substring(). substring(triggerBody()?['Text'], 0, 5) extracts the first 5 characters.

Other useful functions include: concat() for joining strings, int() for converting to integers, add() for adding numbers, length() for getting string length, and logical functions like if(), and(), or(). These functions are often combined for more complex data transformations. Also, remember to use the compose action to test out complex expressions before using them in other actions.
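The two example expressions map closely onto Python, which can help when explaining their semantics. This is a hedged model, not Power Automate code: the helper names are hypothetical, and only the 'yyyy-MM-dd' format is translated. The key detail it shows is that substring() takes a start index and a length (not an end index), and Power Automate reports an out-of-range error when the requested range runs past the end of the string.

```python
from datetime import datetime


def pa_substring(text: str, start_index: int, length: int) -> str:
    """Model of substring(text, startIndex, length)."""
    if start_index < 0 or length < 0 or start_index + length > len(text):
        raise ValueError("substring parameters are out of range")
    return text[start_index:start_index + length]


def pa_format_date(iso_timestamp: str) -> str:
    """Model of formatDateTime(value, 'yyyy-MM-dd') for an ISO 8601 input."""
    # Replace a trailing 'Z' so datetime.fromisoformat also works on Python < 3.11.
    value = datetime.fromisoformat(iso_timestamp.replace("Z", "+00:00"))
    return value.strftime("%Y-%m-%d")


print(pa_substring("PowerAutomate", 0, 5))     # Power
print(pa_format_date("2024-03-15T10:30:00Z"))  # 2024-03-15
```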

2. Describe a scenario where you'd use a 'Do Until' loop in Power Automate, and what considerations would guide your loop's exit condition?

A 'Do Until' loop in Power Automate is useful when you need to repeat an action or a set of actions until a specific condition is met. For example, imagine an approval process where you need to repeatedly send reminders to an approver until they either approve or reject the request. The actions inside the loop would involve sending the reminder email, and the loop would continue 'until' the approval status becomes 'Approved' or 'Rejected'.

The exit condition for the loop is crucial. Considerations include:

- Clear Exit Condition: Ensure the exit condition is well-defined and achievable to prevent infinite loops. The condition should be based on a variable that is modified within the loop.

- Timeout: Set a maximum loop count or a timeout as a safeguard against scenarios where the exit condition might never be met. Do Until has built-in Count and Timeout limits (by default 60 iterations and one hour, PT1H) that you can change in the loop's limit settings. You can also increment a counter within the loop and exit when it exceeds a threshold, or compare the current time with a recorded start time to enforce a time-based limit.

- Error Handling: Account for potential errors that might prevent the exit condition from being met (e.g., the API call to check the approval status fails). Implement error handling within the loop to gracefully handle such situations and prevent the flow from getting stuck.

- Rate limiting: When working with APIs, make sure you stay within the API's rate limits; consider adding a Delay action inside the loop.
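The exit-condition checklist above can be sketched as a Python model of the reminder loop with three exits: a decision, a count limit, and a time limit. The status check, reminder, wait, and clock are injected callables standing in for connector actions and the Delay action; all names, defaults, and status strings are hypothetical.

```python
from typing import Callable


def remind_until_decided(
    get_status: Callable[[], str],
    send_reminder: Callable[[], None],
    wait: Callable[[], None],
    now: Callable[[], float],
    max_count: int = 10,
    timeout_seconds: float = 3 * 24 * 3600,
) -> str:
    """Send reminders until the request is decided or a safeguard limit is reached."""
    start = now()
    count = 0
    while True:
        send_reminder()
        count += 1
        status = get_status()
        if status in ("Approved", "Rejected"):
            return status              # clear exit condition met
        if count >= max_count:
            return "CountLimit"        # safeguard: maximum iterations
        if now() - start >= timeout_seconds:
            return "Timeout"           # safeguard: elapsed time
        wait()                         # e.g. a Delay between reminders
```

A real flow would also need a failure path for the status check itself, which this sketch leaves out.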

3. Explain how you would handle errors in a Power Automate flow, including different approaches to error handling and logging.

In Power Automate, I handle errors primarily using the 'Configure Run After' setting on actions. By default, an action runs if the preceding action 'is successful'. I modify this to also run if the preceding action 'has failed', 'has timed out' or 'is skipped'. This allows me to create parallel error handling paths. For example, if an action fails, I can send a notification email, log the error to a SharePoint list, or retry the action. I often use a 'Scope' action to group related actions and their associated error handling logic, simplifying flow management and providing a single point for error evaluation using the 'Configure Run After' of the 'Scope' action itself.

For logging, I use actions like 'Create item' in SharePoint or 'Append to array variable' to store error details. I include information like the flow run ID (available from workflow()?['run']?['name']), timestamp, failed action name, and the error message itself. To retrieve the error details, I use result('ScopeName'), which returns an array describing each action inside a scope, including its status and, for failed actions, error information; filtering that array for items whose status is 'Failed' gives the failed actions and their messages. By centralizing error logging, it's easier to monitor flow health and troubleshoot issues. Another approach to error handling and logging is to return errors to the calling application with the 'Respond to a PowerApp or flow' action.
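When a catch path logs errors from a scope, a common approach is to filter the array that result() returns for that scope. The Python sketch below models that Filter array step. The record shape (name, status, and an error object with code and message) is an assumption based on common usage, so inspect a real run's output before relying on exact property names.

```python
from typing import Any, Dict, List


def failed_action_errors(scope_results: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Keep only failed actions and pull out the fields worth logging."""
    log_rows = []
    for action in scope_results:
        if action.get("status") != "Failed":
            continue  # Succeeded and Skipped actions are not logged
        error = action.get("error") or {}  # mirrors ?['error'] returning null safely
        log_rows.append({
            "action": action.get("name"),
            "code": error.get("code"),
            "message": error.get("message"),
        })
    return log_rows
```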

4. Walk me through the process of using variables in Power Automate to store and reuse data across multiple actions.

To use variables in Power Automate, you first need to initialize them with an "Initialize variable" action, which defines the variable's name, data type (e.g., String, Integer, Float, Boolean, Array, Object), and initial value. Initialize variable actions must be placed at the top level of the flow, not inside a loop, condition, or scope. Once initialized, you can change the value with "Set variable", or with "Increment variable" and "Append to array variable" for counters and arrays. To read the value in later actions, select the variable from dynamic content or use the expression variables('VariableName'); in the flow definition this appears as @variables('VariableName'), or as @{variables('VariableName')} when embedded in a string. For example, you might use the variable in a "Compose" action, in a condition that checks its value, or as a field value in a SharePoint "Create item" action.

5. How can you trigger a Power Automate flow from a Power Apps button click, and how do you pass data between them?

To trigger a Power Automate flow from a Power Apps button click, first add the flow to the app (for example, from the Power Automate pane in Power Apps Studio), then call it by name with its Run() function in the button's OnSelect property. To pass data from Power Apps to the flow, define input parameters on the flow's 'When Power Apps calls a flow (V2)' trigger; these become the arguments of Run(). For example, if your flow expects a single text input, you'd call it like this: MyFlow.Run(TextInputControl.Text), where MyFlow is the name of your flow. The flow can then use that value in its actions, and it can send data back to the app with the 'Respond to a PowerApp or flow' action.

6. Discuss how you would implement parallel processing in a Power Automate flow to improve performance for tasks that can run independently.

To implement parallel processing in a Power Automate flow, I would use the 'Apply to each' action with concurrency control. By default, 'Apply to each' processes items one at a time. In the action's settings, I would turn on 'Concurrency Control' and set 'Degree of Parallelism' to a value greater than 1 (e.g., 5 or 10; the maximum is 50), depending on the nature of the tasks and any rate limits of the connectors being used. This instructs Power Automate to run multiple iterations of the loop concurrently. Test the value you choose, because too many concurrent iterations can lead to connector throttling. For a small number of different, independent tasks, you can also use 'Add a parallel branch' so that separate sequences of actions run side by side.

You can also use a 'Scope' action inside the 'Apply to each' action to group related actions and configure error handling at the scope level, which gives you better control over how errors are handled within each concurrent iteration. Ensure that the operations within the loop are truly independent, as data dependencies can lead to unpredictable results and errors; in particular, avoid setting variables inside a concurrent loop, because variables are shared by all iterations. For example, if each loop iteration updates a different row in a database based on a unique key, it is safe to run in parallel. The 'Apply to each' action waits for all iterations to complete before proceeding to the next step in the flow.
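Concurrency control behaves much like a worker pool with a fixed size. The Python sketch below is an analogy, not Power Automate code: `ThreadPoolExecutor`'s `max_workers` plays the role of Degree of Parallelism, and the function name is hypothetical. Like Apply to each, it returns only after every iteration has finished.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, List, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def apply_to_each_concurrently(
    items: Iterable[T], action: Callable[[T], R], degree_of_parallelism: int = 5
) -> List[R]:
    """Run `action` on every item with at most `degree_of_parallelism` running at once."""
    with ThreadPoolExecutor(max_workers=degree_of_parallelism) as pool:
        # pool.map keeps results in input order, even though iterations overlap.
        return list(pool.map(action, items))
```

Because `action` runs on several threads at once, it should not mutate shared state, which is the same reason to avoid setting variables inside a concurrent loop.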

7. Describe how you would connect to a custom API from Power Automate, detailing the steps involved in creating a custom connector.

To connect to a custom API from Power Automate, you'd create a custom connector. First, you need your API's OpenAPI definition (Swagger file) or a Postman Collection. In Power Automate, navigate to Data > Custom Connectors and click 'New custom connector'. You can then import your API definition file (OpenAPI/Postman) or build from scratch. After importing/defining, configure security (e.g., API Key, OAuth 2.0), test the connection, and define any actions/triggers. Once created, the custom connector is available within Power Automate flows to interact with your custom API.

The steps generally involve:

- Preparing your API documentation (OpenAPI or Postman Collection).

- Creating a new custom connector.

- Importing the API definition.

- Configuring Security.

- Defining actions and triggers.

- Testing the connector.

- Using the connector in a Power Automate flow.

Here is an example action definition in OpenAPI 2.0 (Swagger) format, which is what custom connectors import; the /users path is illustrative:

```json
{
  "paths": {
    "/users": {
      "get": {
        "summary": "Get User",
        "description": "Retrieves user details.",
        "operationId": "getUser",
        "parameters": [
          {
            "name": "userId",
            "in": "query",
            "required": true,
            "type": "string"
          }
        ],
        "responses": {
          "200": {
            "description": "Successful operation",
            "schema": {
              "type": "object"
            }
          }
        }
      }
    }
  }
}
```

8. Explain how you can use scopes in Power Automate to group actions and manage error handling within a specific section of your flow.

Scopes in Power Automate allow you to group actions together, treating them as a single block. This is useful for organizing your flow and, more importantly, for handling errors. You can configure a 'Run after' setting on actions after the scope, specifically to handle failures within the scope. For example, you can have one action run only if the scope succeeds, and another action run only if the scope fails. This enables you to implement try-catch-finally patterns or other forms of localized error handling within your flow.

By using scopes, you isolate the error handling to a specific section of your flow. If an action within the scope fails, the scope itself is marked as failed. You can then use this status to trigger specific error handling steps, such as sending a notification, logging the error, or attempting a retry. This prevents errors from cascading through your entire flow and provides a more structured approach to dealing with issues.
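The try-catch-finally pattern comes down to how each scope's run-after settings are evaluated. The Python sketch below models that evaluation for three scopes named Try, Catch, and Finally (hypothetical names). It assumes a scope that runs completes successfully, and it marks any scope whose run-after conditions are not met as Skipped, as Power Automate does.

```python
from typing import Dict, Set

# Run-after settings, mirroring the "Configure run after" checkboxes for each scope.
RUN_AFTER: Dict[str, Dict[str, Set[str]]] = {
    "Catch": {"Try": {"Failed", "TimedOut"}},  # runs only when Try did not succeed
    "Finally": {"Catch": {"Succeeded", "Failed", "Skipped", "TimedOut"}},  # always runs
}


def evaluate_scopes(try_status: str) -> Dict[str, str]:
    """Return the final status of each scope for a given outcome of the Try scope."""
    statuses = {"Try": try_status}
    for scope in ("Catch", "Finally"):
        conditions = RUN_AFTER[scope]
        # A scope runs only if every predecessor ended in one of its allowed statuses.
        runs = all(statuses[pred] in allowed for pred, allowed in conditions.items())
        # Assumption: a scope that runs completes successfully.
        statuses[scope] = "Succeeded" if runs else "Skipped"
    return statuses
```

Selecting all four statuses for Finally is what makes it run whether or not Catch ran.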

9. How would you configure a Power Automate flow to monitor a specific folder in SharePoint and process new files as they are added?

To monitor a SharePoint folder and process new files using Power Automate, you would start with the SharePoint trigger 'When a file is created (properties only)'. Set 'Site Address' and 'Library Name' to the SharePoint site and document library, and use the optional 'Folder' setting to limit the trigger to the specific folder you want to monitor.

After the trigger, add actions to process the new files. For example, you might use 'Get file content' to retrieve the file's content, then use subsequent actions to parse the content, store it in a database, send an email notification, or move the file to a different location. You can use conditional statements and loops as required to process multiple or different file types. Make sure to handle any potential errors gracefully by using the 'Configure run after' setting to configure error paths.

10. Describe the process of using the 'Compose' action in Power Automate and provide examples of when it would be beneficial.

The 'Compose' action in Power Automate is a data operation that takes an input, which can be a static value, an expression, or the output from a previous action, and makes it available as its output. You can think of it as assigning a value to a named constant: unlike a variable, a Compose output can't be changed after the action runs. Subsequent actions reference it through dynamic content or with an expression such as outputs('Compose') for an action named Compose.

It's beneficial in various scenarios. For example, you can use 'Compose' to:

- Create a formatted string by combining multiple data points (e.g., concatenating first and last names).

- Perform calculations using expressions and store the result.

- Transform data from one format to another.

- Create a consistent output for a variable that might change in format from different input sources. For instance, converting date formats to a standard ISO format.

- Simplify complex expressions by breaking them down into smaller, more manageable steps.

- Supply a default value when an input is null, for example with coalesce(triggerBody()?['Title'], 'Untitled').
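Two of the uses listed above, combining values into one string and providing a fallback for a null value, can be modelled in Python. The helpers below are hypothetical stand-ins for the concat() and coalesce() expression functions that a Compose action might evaluate; coalesce() returns the first of its arguments that is not null.

```python
from typing import Any, Optional


def concat(*parts: str) -> str:
    """Model of concat(): join all string arguments in order."""
    return "".join(parts)


def coalesce(*values: Any) -> Optional[Any]:
    """Model of coalesce(): return the first value that is not null (None), else None."""
    return next((value for value in values if value is not None), None)


first_name, last_name, title = "Ada", "Lovelace", None
print(concat(first_name, " ", last_name))  # Ada Lovelace
print(coalesce(title, "Untitled"))         # Untitled
```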

11. How would you use the 'Terminate' action strategically in a Power Automate flow, and what are the different status options you can use?

The 'Terminate' action in Power Automate is strategically used to halt a flow's execution prematurely based on specific conditions. This is useful for error handling, preventing unnecessary resource consumption when a flow has failed or reached a point where further execution is pointless. It's also useful for controlling flow execution based on complex logic.

The 'Terminate' action offers three status options: 'Succeeded', 'Failed', and 'Cancelled'. 'Succeeded' indicates the flow completed the work it needed to before terminating. 'Failed' indicates a problem occurred and the flow is intentionally stopped; with this status you can also supply a custom error code and message. 'Cancelled' signifies that the flow was stopped for a non-error-related reason, such as user intervention or a change in requirements. You can choose the status based on the evaluation of a condition, the value of a variable, or errors captured from a particular stage using a try-catch pattern.

12. Explain how you can implement version control for Power Automate flows and manage different versions of a flow.

Power Automate offers far less version control than traditional software development tools. However, you can manage versions through these methods:

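- Solutions: Package flows within Solutions. Exporting and importing Solutions creates snapshots of flow versions. Each export is a point-in-time backup that can be restored, and storing the exported files in source control (for example, after unpacking them with the Power Platform CLI command pac solution unpack) adds history and diffs.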

- Save As: Use the 'Save As' feature to create a copy of a flow before making significant changes. This creates a new flow, preserving the original. Document the changes made in the new flow's description. Develop a naming convention (e.g., FlowName_v1, FlowName_v2) to track versions. While this approach doesn't provide diffing capabilities, it allows you to revert to previous iterations.

13. Describe how you would design a Power Automate flow to send a daily digest email summarizing information from multiple sources.

To design a Power Automate flow for a daily digest email, I would start with a Recurrence (schedule) trigger set to run daily at a specific time. Then, I'd add actions to collect data from multiple sources. For example, if the data is in SharePoint lists, I'd use the 'Get items' action for each list. If the data is in Dataverse, I'd use the 'List rows' action. If it comes from an API, I'd use the HTTP action.

Next, I'd transform and consolidate the data into a single, readable format using actions like 'Compose' and 'Parse JSON' (when APIs return JSON). Finally, I would use the Office 365 Outlook 'Send an email (V2)' action to send the digest, populating the body with the aggregated and formatted data; HTML formatting in the body improves readability. For error handling, I'd wrap each source's actions in its own Scope and configure the following steps to run after that Scope has failed as well as succeeded, so failures are logged and the digest still goes out even if one data source is temporarily unavailable.
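The consolidation step can be sketched in Python, outside Power Automate, to show the shape of the logic. This is a minimal sketch assuming each source is a hypothetical function returning a list of items with a 'title' field (standing in for 'Get items', 'List rows', or an HTTP call); a source that raises an error is noted in the digest instead of aborting it, much like a per-source Scope with 'Configure run after'.

```python
import html
from typing import Callable, Dict, List

Item = Dict[str, str]


def build_digest(sources: Dict[str, Callable[[], List[Item]]]) -> str:
    """Collect items from each source and render one HTML digest body.

    A source that raises is reported in the digest instead of aborting it,
    mirroring a per-source Scope whose failure is handled via 'run after'.
    """
    sections = []
    for name, fetch in sources.items():
        title = f"<h3>{html.escape(name)}</h3>"
        try:
            items = fetch()
        except Exception as exc:  # one unavailable source must not block the digest
            sections.append(f"{title}<p>Unavailable: {html.escape(str(exc))}</p>")
            continue
        rows = "".join(f"<li>{html.escape(i['title'])}</li>" for i in items)
        sections.append(f"{title}<ul>{rows}</ul>")
    return "<html><body>" + "".join(sections) + "</body></html>"
```

The resulting string would be passed as the email body; escaping each value keeps data from one source from breaking the HTML layout.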

14. How can you use the 'Delay' action in Power Automate, and what are some use cases where it would be appropriate?

The 'Delay' action in Power Automate pauses a flow for a specified duration. You can configure the delay in terms of days, hours, minutes, or seconds. The flow execution will halt for the set time before proceeding with the subsequent actions.

Some use cases include:

- Sending Reminders: Delaying notifications until closer to a deadline.

- Polling for Status Changes: Inserting a delay before checking the status of a process to avoid excessive API calls.

- Batch Processing: Spreading out processing loads to prevent system overload, or aligning processing with business hours (the related 'Delay until' action waits until a specific timestamp).

- Waiting for External Systems: Pausing the flow while waiting for an external system to complete a task or update data.
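Polling with a delay amounts to a loop that checks, waits, and checks again up to a limit (in Power Automate, a 'Do until' containing the status check and a 'Delay'). A Python sketch, assuming a hypothetical check function that returns None while the external process is still running and a status string once it finishes; the sleep function is injectable so the logic can be tested without waiting.

```python
import time
from typing import Callable, Optional


def poll_until(check: Callable[[], Optional[str]], delay_seconds: float,
               max_attempts: int,
               sleep: Callable[[float], None] = time.sleep) -> Optional[str]:
    """Call check() until it returns a status or max_attempts is reached.

    Returns the status, or None if the process never finished in time.
    """
    for attempt in range(max_attempts):
        status = check()
        if status is not None:
            return status
        if attempt < max_attempts - 1:
            sleep(delay_seconds)  # plays the role of the 'Delay' action
    return None
```

The attempt limit matters as much as the delay: it is the exit condition that keeps the loop from polling forever.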

15. Explain how you can use child flows to modularize your Power Automate solutions and improve reusability.

Child flows in Power Automate allow you to break down complex automation into smaller, reusable components. Instead of building the same logic repeatedly within multiple flows, you can create a child flow containing that logic and then call it from other flows. This modular approach improves maintainability because you only need to update the logic in one place (the child flow) and all parent flows that call it will automatically inherit the changes.

To use child flows, create the child flow inside a solution and give it the 'Manually trigger a flow' trigger, defining its input parameters there. Return output parameters with the 'Respond to a Power App or flow' action at the end of the child flow. Then, in a parent flow that is also in a solution, use the 'Run a Child Flow' action to call the child flow, passing in the required inputs; the parent can use the returned outputs in later steps. This approach reduces complexity and promotes reuse across your Power Automate solutions.

16. Describe how you would handle sensitive data, like passwords or API keys, in a Power Automate flow, ensuring they are stored securely.

To handle sensitive data like passwords or API keys in Power Automate, I'd primarily leverage Azure Key Vault. Store the sensitive information as secrets in Key Vault. Then, in the Power Automate flow, use the Azure Key Vault connector to retrieve these secrets at runtime. This avoids hardcoding or directly storing sensitive data within the flow definition.

Alternatively, for less critical values or when Key Vault isn't feasible, use environment variables to keep configuration values outside the flow definition, which separates configuration from flow logic and improves manageability. (The 'Secret' environment variable type goes further by referencing a secret stored in Azure Key Vault.) In either case, turn on the 'Secure inputs' and 'Secure outputs' settings of actions that handle sensitive values so those values are hidden in the run history. Never include sensitive data directly in flow logic or comments.

17. How can you use the 'Parse JSON' action in Power Automate to extract data from a JSON payload, and what are some common challenges?

The 'Parse JSON' action in Power Automate allows you to define the structure of a JSON payload using a schema. You provide the JSON content and a schema (either manually entered or generated from a sample), and the action outputs the JSON data as individual properties that can be used in subsequent actions. This is essential for working with APIs or other data sources that return data in JSON format.

Common challenges include: incorrect schema definitions leading to parsing errors; nested JSON structures, which require correctly nested schemas; optional or missing fields, which fail validation unless they are removed from the schema's "required" list or allowed to be null (for example, "type": ["string", "null"]) and then checked before use; and data type mismatches, since an API may send a number as a string or vice versa.
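The same challenges appear in any JSON parsing code. A Python sketch for a hypothetical order payload: required fields are checked explicitly, optional and nested fields fall back to None when absent or null, and a total that arrives as a string is converted to a number.

```python
import json
from typing import Any, Dict


def parse_order(payload: str) -> Dict[str, Any]:
    """Parse a hypothetical order payload defensively."""
    data = json.loads(payload)
    for field in ("id", "total"):  # like the schema's "required" list
        if field not in data:
            raise ValueError(f"missing required field: {field}")
    customer = data.get("customer") or {}  # nested object may be missing or null
    return {
        "id": str(data["id"]),
        "total": float(data["total"]),  # tolerate "12.50" as well as 12.5
        "notes": data.get("notes"),     # optional: None when absent or null
        "city": customer.get("city"),
    }
```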

18. Explain how you would integrate Power Automate with Microsoft Teams to send notifications and interact with users.

To integrate Power Automate with Microsoft Teams for notifications and user interaction, I'd primarily use the 'Microsoft Teams' connector within Power Automate. For sending notifications, I'd leverage the 'Post message in a chat or channel' action. This action allows me to send messages to specific users, channels, or group chats. The flow can be triggered by various events, like data changes in a SharePoint list, a new entry in a database, or even a scheduled recurrence.

For user interaction, I would use the 'Post adaptive card and wait for a response' action, which posts an Adaptive Card to a user or channel and pauses the flow until someone responds. Adaptive Cards can present choices, input fields, and buttons within Teams, and the submitted values are returned to the flow, which can then update data, trigger other processes, or send further notifications. Combining notification and response actions provides robust integration.
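A minimal sketch of an Adaptive Card payload with Approve and Reject buttons, generated in Python. The JSON string is the kind of content that would go into the card message field of 'Post adaptive card and wait for a response'; the title, details, and the 'choice' data key are hypothetical, and version 1.2 is chosen conservatively for Teams compatibility.

```python
import json
from typing import Dict, List


def approval_card(title: str, details: str, choices: List[str]) -> Dict:
    """Build an Adaptive Card with a summary and one submit button per choice."""
    return {
        "$schema": "http://adaptivecards.io/schemas/adaptive-card.json",
        "type": "AdaptiveCard",
        "version": "1.2",
        "body": [
            {"type": "TextBlock", "text": title, "weight": "Bolder", "size": "Medium"},
            {"type": "TextBlock", "text": details, "wrap": True},
        ],
        # Each button submits its own data object, so the flow can branch on "choice".
        "actions": [
            {"type": "Action.Submit", "title": c, "data": {"choice": c}}
            for c in choices
        ],
    }


card_json = json.dumps(
    approval_card("Expense #42", "Travel, 180 EUR", ["Approve", "Reject"]), indent=2
)
```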

19. Describe how you can use the 'Switch' action in Power Automate to create branching logic based on different conditions.

The 'Switch' action in Power Automate allows you to create branching logic by evaluating a single expression against multiple cases. The flow then follows the path associated with the matching case. Think of it like a more structured 'if...elseif...else' statement.

To use it, you configure the 'On' field with the expression you want to evaluate. Then, you define multiple 'Case' branches, each with a specific value to compare against the 'On' expression. If a case matches, the actions within that case are executed. You can also define a 'Default' case that executes if none of the other cases match. This provides a clean way to handle different conditions based on a single variable or expression without deeply nested 'Condition' actions.
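In ordinary code, the same shape is a lookup of one value against several cases with a fallback. A Python sketch, with hypothetical priority values and branch outcomes standing in for the actions each case would run:

```python
# Hypothetical case values mapped to the work each branch would do.
SWITCH_CASES = {
    "High": "page the on-call engineer",
    "Medium": "create a task in Planner",
    "Low": "add to the weekly backlog",
}
DEFAULT_CASE = "send to the triage queue"  # the 'Default' branch


def route_ticket(priority: str) -> str:
    """Evaluate one value against the cases; fall back to the default."""
    return SWITCH_CASES.get(priority, DEFAULT_CASE)
```

As with 'Switch', adding a case means adding one entry rather than nesting another condition.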

20. How would you optimize a Power Automate flow that is running slowly, identifying potential bottlenecks and improving performance?

To optimize a slow Power Automate flow, first identify bottlenecks. Common causes include inefficient connectors, large data processing, or excessive iterations. Open slow runs in the run history, which shows how long each action took, and use the Power Automate analytics to spot trends. Then, optimize these areas:

- Reduce iterations: Use 'Do until' loops sparingly; prefer data operations such as 'Filter array' or 'Select' over looping, and enable concurrency on 'Apply to each' where iterations are independent. Give every loop a clear exit condition and a sensible iteration limit.

- Optimize actions and connectors: Choose the most efficient action for the task; for example, use 'Filter array' instead of looping through a large array to find matching items. Batch operations where possible and avoid unnecessary data retrieval. Where a premium connector offers a more efficient operation than a standard alternative, weigh that against its licensing cost.

- Minimize data transfer: Only retrieve necessary data. Use OData filters in 'Get items' actions to limit the data returned. Remove unnecessary fields from the retrieved data before subsequent actions.

- Parallel processing: Enable concurrency in 'Apply to each' actions where possible to process items in parallel.

- Use variables efficiently: Minimize the use of variables, especially within loops, as they can add overhead.

- Simplify expressions: Review complex expressions and break them into simpler ones to reduce processing time.

- Check condition logic: Verify that conditional branches are correct and avoid evaluating conditions unnecessarily; use 'Switch' actions where a single value selects among several paths.

- Error Handling: Implement effective error handling to prevent flows from getting stuck in infinite loops or failing repeatedly, which can consume resources and slow down the overall performance.

After implementing optimizations, re-run the flow and use the run history and analytics to verify the improvements. Consider breaking large flows into smaller, more manageable child flows.

21. Explain how you can use environment variables in Power Automate to manage configuration settings across different environments.

Environment variables in Power Automate let you store and manage configuration settings that change between environments (e.g., development, testing, production) without modifying the flow itself. You create environment variables as solution components, specifying a data type (such as Text, Decimal number, Yes/No, JSON, or Secret) and a default value. When you move a solution to another environment, you set a current value there, which overrides the default to match that environment's settings.

To use them, create environment variables in a solution. Within your flow, reference the environment variable through dynamic content instead of hardcoding values. When deploying the solution to a new environment, set the environment variable values in that target environment, so the flow uses the correct configuration without being edited manually. Pair environment variables with connection references, which let each environment map the flow's connectors to its own connections. This improves security and streamlines deployment.
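The lookup rule (an environment's current value wins, otherwise the default applies) can be sketched in Python. The variable name, URLs, and environment names are hypothetical; the sketch only models how a value is resolved, not how Dataverse stores it.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class EnvironmentVariable:
    schema_name: str
    default_value: str
    # Environment name -> current value set in that environment.
    current_values: Dict[str, str] = field(default_factory=dict)

    def resolve(self, environment: str) -> str:
        """Return the environment's current value if set, else the default."""
        return self.current_values.get(environment, self.default_value)


api_url = EnvironmentVariable(
    "contoso_ApiBaseUrl",
    default_value="https://dev.example.com",
    current_values={"Prod": "https://api.example.com"},
)
```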

22. Describe how you would design a Power Automate flow to handle approvals, including sending approval requests, tracking responses, and automating follow-up actions.

I would design a Power Automate flow triggered by an event, such as a new item in a SharePoint list. The flow would use the 'Start and wait for an approval' action to send an approval request to the designated approver(s). The approval type would depend on the scenario (e.g., 'Approve/Reject - First to respond'). The details of the item requiring approval, such as name and description, would be included in the approval request using dynamic content.

Following the approval action, a condition would check the approval's outcome ('Approve' or 'Reject'). If approved, the flow would update the SharePoint item's status to 'Approved' and potentially trigger other actions like sending a confirmation email or creating a task. If rejected, the flow would update the item's status to 'Rejected', notify the requestor with the reason for rejection, and potentially create a task for review or modification. A separate scheduled cloud flow with a Recurrence trigger could also monitor pending approvals: if an approval has been pending longer than a specified duration, the flow sends a reminder email to the approver.
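The selection step of that reminder flow can be sketched in Python. This assumes requests are tracked in a list with hypothetical 'approver', 'status', and 'requested' fields (for example, columns on the SharePoint item); the flow would then email each returned approver.

```python
from datetime import datetime, timedelta
from typing import Dict, List


def approvals_needing_reminder(pending: List[Dict], now: datetime,
                               max_age: timedelta) -> List[str]:
    """Return approvers whose requests have been pending longer than max_age."""
    return [
        p["approver"]
        for p in pending
        if p["status"] == "Pending" and now - p["requested"] > max_age
    ]
```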

23. How can you use the 'Filter array' action in Power Automate to select specific items from an array based on certain criteria?

The 'Filter array' action in Power Automate allows you to select items from an array based on specific criteria using conditions. You provide the array you want to filter and then define one or more conditions that each item in the array must satisfy to be included in the output. These conditions typically involve comparing a field within the array items to a specific value or another field.

For example, if you have an array of objects with 'name' and 'age' fields, you could use the 'Filter array' action to select only those objects whose 'age' is greater than 25. Configure the action with the array as the 'From' input and the condition item()?['age'] is greater than 25 (in advanced mode: @greater(item()?['age'], 25)).
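The equivalent filter in Python is a list comprehension over the same hypothetical people array. Skipping items that have no 'age' is a choice made in this sketch for safety, not a claim about how 'Filter array' treats missing fields.

```python
from typing import Dict, List


def filter_by_age(items: List[Dict], min_age_exclusive: int) -> List[Dict]:
    """Keep items whose 'age' is greater than the threshold."""
    return [
        i for i in items
        if i.get("age") is not None and i["age"] > min_age_exclusive
    ]
```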

24. Explain how you would integrate Power Automate with Dynamics 365 to automate tasks and synchronize data between the two systems.

To integrate Power Automate with Dynamics 365 for automation and data synchronization, I'd primarily use the Microsoft Dataverse connector, since Dynamics 365 apps store their data in Dataverse (this connector replaced the older 'Common Data Service (current environment)' connector). For example, the 'When a row is added, modified or deleted' trigger can start a flow when a record such as an account is created. Actions are then defined based on the trigger, such as updating a related row, sending an email, or creating a task, working directly with Dynamics 365 tables and columns. For data synchronization, Power Automate can copy data from Dynamics 365 to other systems or vice versa, using the appropriate connectors for the external systems.

For instance, consider a flow that creates a contact in Dynamics 365 for each new row in an Excel table stored in OneDrive. Because the Excel Online (Business) connector has no "row added" trigger, a common approach is a scheduled flow that reads the table with 'List rows present in a table', tracks which rows it has already processed, and uses the Dataverse 'Add a new row' action to create each contact from the Excel row's values.

25. Describe how you can use the 'Condition' action in Power Automate to create branching logic based on different conditions.

The 'Condition' action in Power Automate allows you to create branching logic by evaluating a condition and executing different branches (True or False) based on the outcome. You define a condition using operators like equals, greater than, less than, contains, etc., to compare values from variables, expressions, or data sources. For example, you can check if a variable 'OrderTotal' is greater than 100, and if True, send a notification to the sales team, else do nothing.

Within the 'Condition' action, you specify what actions to perform under the 'If yes' (True) branch and the 'If no' (False) branch. These branches can contain any number of actions, including other 'Condition' actions to create nested branching. This allows you to create complex workflows that adapt to different scenarios based on data and conditions.

26. Explain how you would use the 'Select' action in Power Automate to transform the structure of items within an array.

The 'Select' action in Power Automate is used to transform the structure of items within an array by mapping properties from the input array to a new set of properties in the output array. It essentially allows you to rename, create new, or derive properties based on the original array's items. You provide the input array and then define key-value pairs representing the desired structure of the output array.

For example, if you have an array of customer objects with firstName, lastName, and email, but you only want an array of objects with fullName and email, you would use 'Select'. The 'From' field would contain the customer array and the 'Map' section would have:

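- fullName: concat(item()?['firstName'], ' ', item()?['lastName'])

- email: item()?['email']

Power Automate expressions have no + operator for joining strings, so concat() builds the full name.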
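A Python equivalent of this 'Select' mapping shows the transformation: each input object becomes a new object containing only the mapped keys, and any other fields are dropped. The field names follow the customer example; the sample data is hypothetical.

```python
from typing import Dict, List


def select_full_names(customers: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Map each customer to {fullName, email}, dropping all other fields."""
    return [
        {"fullName": f"{c['firstName']} {c['lastName']}", "email": c["email"]}
        for c in customers
    ]
```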

Advanced Power Automate interview questions

1. How would you handle errors in a complex Power Automate flow to prevent it from stopping unexpectedly?

To handle errors in a complex Power Automate flow and prevent unexpected stops, I would primarily use the 'Configure run after' setting. This lets me define alternative execution paths for when an action fails, times out, or is skipped. Specifically, I'd configure the error-handling actions that follow a risky step to run only when that step 'has failed' or 'has timed out', so they can send a notification, log the error, or attempt a retry.

I'd also implement a Try/Catch pattern with Scope actions. Put the actions that might fail inside a 'Try' scope, and configure a 'Catch' scope to run only if the Try scope has failed or timed out. Inside the Catch scope, log the error details (using variables to store the error message and flow run details), send a notification, and either end the run gracefully or retry a limited number of times using a 'Do until' loop with a 'Delay'. Give every retry loop a fixed limit to avoid infinite loops. Finally, use the result('Try') expression, which returns the results of the actions inside the named scope, to find which action failed and inspect its error code or message.
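The Try/Catch pattern maps onto ordinary exception handling. In this Python sketch, each hypothetical step stands in for an action inside the 'Try' scope; the first failure skips the remaining steps (as a failed action does inside a scope), and the 'Catch' logic records which step failed, much like filtering the output of result('Try') for a failed status.

```python
from typing import Callable, Dict, List


def run_with_try_catch(try_steps: List[Callable[[], None]], log: List[Dict]) -> str:
    """Run steps in order; on the first failure, log it and report 'Failed'."""
    for index, step in enumerate(try_steps):
        try:
            step()
        except Exception as exc:  # the 'Catch' scope: runs only when 'Try' fails
            log.append({"step": index, "action": step.__name__, "error": str(exc)})
            return "Failed"  # e.g. a 'Terminate' action with status Failed
    return "Succeeded"
```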

2. Describe a scenario where you would use a custom connector in Power Automate and explain the steps to create one.

A custom connector in Power Automate is useful when you need to connect to a service or API that isn't supported by the pre-built connectors. For example, imagine you have an internal HR system with a custom API for accessing employee data. You could create a custom connector to retrieve employee information based on their ID and use that data in your Power Automate flows.

To create one, follow these steps:

- Define the API: Understand the API endpoints (e.g., GET employee by ID) and authentication methods.

- Create the Connector Definition: In Power Automate, go to Data > Custom Connectors and create a new connector.

- Provide General Information: Give the connector a name, description, and upload an icon.

- Configure Security: Specify the authentication type (e.g., API Key, OAuth 2.0). Configure the necessary parameters, like API key name or OAuth endpoints.

- Define the operations (Definition tab): Here you define the actions and triggers the connector supports. For each action (e.g., 'Get Employee Details'), you specify:

  - Request: The HTTP method (GET, POST, etc.), the URL, and any required headers or body parameters.

  - Response: The schema of the data the API returns. You can either enter the schema manually or provide a sample response to generate it automatically.

- Test the Connector: Use the test operation feature within the custom connector creation to ensure it connects and retrieves data correctly before use in any flows.

- Use in Flows: Once the connector is configured and tested, you can use it like any other standard connector in your Power Automate flows.
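Custom connectors are described by an OpenAPI 2.0 (Swagger) definition, and the custom connector wizard can import one from a file instead of filling in each tab by hand. As a sketch, the hypothetical HR endpoint (GET /api/employees/{id} on an assumed host, authenticated with an assumed x-api-key header) could be described like this, generated from Python and saved as JSON:

```python
import json


def employee_connector_definition(host: str) -> dict:
    """Minimal OpenAPI 2.0 document: one GET operation and an API key header."""
    employee_schema = {
        "type": "object",
        "properties": {
            "id": {"type": "string"},
            "displayName": {"type": "string"},
            "department": {"type": "string"},
        },
    }
    return {
        "swagger": "2.0",
        "info": {"title": "HR Employees", "version": "1.0"},
        "host": host,
        "basePath": "/api",
        "schemes": ["https"],
        "securityDefinitions": {
            "apiKey": {"type": "apiKey", "name": "x-api-key", "in": "header"}
        },
        "security": [{"apiKey": []}],
        "paths": {
            "/employees/{id}": {
                "get": {
                    "operationId": "GetEmployeeDetails",  # shown as the action
                    "summary": "Get Employee Details",
                    "parameters": [
                        {"name": "id", "in": "path", "required": True, "type": "string"}
                    ],
                    "responses": {
                        "200": {"description": "Employee record", "schema": employee_schema}
                    },
                }
            }
        },
    }


definition_json = json.dumps(employee_connector_definition("hr.example.com"), indent=2)
```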

3. Explain how you can implement parallel processing within a Power Automate flow to improve performance.

To implement parallel processing in Power Automate and improve flow performance, you can enable concurrency on the 'Apply to each' action. By default, 'Apply to each' processes items sequentially. In the action's settings, turn on 'Concurrency control' and set the 'Degree of parallelism' (up to 50) so the flow processes several items of the loop at the same time. This can drastically reduce overall execution time when dealing with a large number of items.

Alternatively, you can add parallel branches ('Add a parallel branch') so that independent sets of actions run simultaneously. This is especially useful when you have distinct tasks that don't depend on each other's output. Keep in mind that while parallel processing can significantly improve performance, it also increases the risk of concurrency issues, such as exceeding API throttling limits or race conditions when parallel iterations update the same variable. Error handling and sensible concurrency settings are essential when implementing parallel processing.
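The effect of a degree of parallelism can be sketched in Python with a bounded thread pool. This is an analogy rather than how Power Automate runs loops internally: at most degree_of_parallelism items are processed at once, and the action is assumed to be independent per item (no shared variables), which is the condition under which concurrency in 'Apply to each' is safe.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def apply_to_each(items: List[T], action: Callable[[T], R],
                  degree_of_parallelism: int) -> List[R]:
    """Run action on up to degree_of_parallelism items at a time.

    pool.map returns results in the same order as the input items.
    """
    with ThreadPoolExecutor(max_workers=degree_of_parallelism) as pool:
        return list(pool.map(action, items))
```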

4. How do you manage and deploy Power Automate flows across different environments (e.g., development, testing, production)?

I typically use solutions to manage and deploy Power Automate flows across different environments. First, I create a solution in the development environment and add the relevant flows along with their dependencies, such as connection references and environment variables. Then, I export the solution, as a managed package for testing and production (unmanaged solutions stay in development). For deployment, I import the solution package into the target environment. During import, connection references are mapped to connections in that environment and environment variable values are set to match its configuration.

For automated deployments, I use Azure DevOps pipelines with the Power Platform Build Tools extension. This allows me to automate the export and import of solutions, update environment variables, and manage connection references in a controlled and repeatable manner. This ensures consistency and reduces the risk of manual errors during deployments.

5. What are the considerations when designing a Power Automate flow that processes large amounts of data?

When designing a Power Automate flow to handle large datasets, several considerations are critical. First, throttling limits are a major concern: Power Automate limits the number of API requests within a given timeframe. To mitigate this, use pagination to retrieve and process data in batches rather than all at once. Second, optimize performance by using efficient connectors and minimizing complex calculations or loops within the flow. If heavy transformation is required, consider delegating it to a more performant system like Azure Data Factory, or use Dataverse calculated or formula columns where possible.

Furthermore, error handling and logging are essential. Implement robust error handling to gracefully manage failures and prevent data loss. Leverage built-in logging mechanisms or external services like Azure Monitor to track flow executions and identify potential bottlenecks. Consider using parallel processing via child flows to speed up the overall execution where appropriate. Be mindful of connector licensing implications when choosing parallel execution strategies.
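Batch-wise processing with pagination follows a simple loop: request a page, process it, and follow the continuation token (such as the @odata.nextLink returned by OData APIs) until none is left. A Python sketch, assuming a hypothetical fetch_page function that returns a page of items plus the next token, or None after the last page:

```python
from typing import Callable, Dict, Iterator, List, Optional, Tuple

Page = Tuple[List[Dict], Optional[str]]


def iterate_pages(fetch_page: Callable[[Optional[str]], Page]) -> Iterator[List[Dict]]:
    """Yield one page at a time, following continuation tokens to the end.

    Each batch can be processed before the next request is made, instead of
    loading the whole dataset into memory at once.
    """
    token: Optional[str] = None
    while True:
        items, token = fetch_page(token)
        yield items
        if token is None:
            break
```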

6. Explain how you can use Power Automate to automate tasks that require human approval and how you would handle escalations.

Power Automate can automate tasks requiring human approval using the 'Start and wait for an approval' action. This action sends an approval request to specified users (approvers) and pauses the flow until a response is received. The flow can then branch based on the approval outcome (approved/rejected) to continue with subsequent tasks.

To handle escalations, I can implement a few strategies. First, I can set a timeout on the 'Start and wait for an approval' action (in its settings, as an ISO 8601 duration such as P3D for three days) and add steps configured to run after the action 'has timed out'; those steps can reassign the request or start a new approval with a different approver. Second, I can create a separate flow that monitors pending approvals and sends reminder emails or messages to approvers and their managers after a certain duration. Finally, if an approval is still unresolved after multiple reminders, the flow can escalate the task to a higher-level authority using conditions and additional 'Start and wait for an approval' actions.

7. How would you implement version control for your Power Automate flows to track changes and revert to previous versions if needed?

Power Automate doesn't have built-in version control like Git. However, you can implement a versioning strategy by exporting flows as .zip files before making changes. Store these exported versions in a dedicated repository (e.g., SharePoint library, Azure DevOps, or even a simple folder structure). Before modifying a flow, export it and save with a descriptive filename including a version number or date. To revert, simply import the desired .zip file.

To enhance tracking, consider using naming conventions for your exported flows (e.g., FlowName_v1.zip, FlowName_20240101.zip) and maintain a separate log (e.g., Excel sheet, text file) documenting the changes made in each version. You can also incorporate comments within the flow descriptions to note major changes made to the flow. Although not perfect, this manual approach provides basic version control and rollback capabilities.

8. Describe a situation where you used expressions in Power Automate to manipulate data and explain the expression you used.

In a recent Power Automate flow, I needed to extract the file extension from a file name stored in a SharePoint library. The file name was stored in a variable called 'FileName'. I used the split() and last() functions to achieve this.

Specifically, the expression I used was:

last(split(variables('FileName'), '.'))

This expression first splits the 'FileName' variable into an array using the '.' character as a delimiter. Then, the last function retrieves the last element of the resulting array, which corresponds to the file extension (e.g., 'pdf', 'docx', 'xlsx'). This extracted file extension was then used to determine the appropriate action to take within the flow, such as routing the file to a specific folder or applying a specific metadata tag.
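The same logic in Python makes an edge case visible: if the name contains no '.', split() returns a one-element array, so last(split(...)) returns the whole name rather than an empty string. A minimal sketch with a guarded variant; the lowercasing is an added assumption, not part of the original flow.

```python
def file_extension(file_name: str) -> str:
    """Direct equivalent of last(split(variables('FileName'), '.'))."""
    return file_name.split(".")[-1]


def safe_extension(file_name: str) -> str:
    """Return '' when there is no dot; otherwise the lowercased extension."""
    if "." not in file_name:
        return ""
    return file_name.rsplit(".", 1)[1].lower()
```

In the flow itself, a comparable guard would be if(contains(variables('FileName'), '.'), last(split(variables('FileName'), '.')), '').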

9. How do you secure your Power Automate flows to prevent unauthorized access or data breaches?

To secure Power Automate flows, I implement several strategies. Primarily, I use role-based access control (security roles managed through the Power Platform admin center) to grant users only the permissions they need to access and modify flows. Sensitive data, such as passwords or API keys, is stored in Azure Key Vault and retrieved through its connector rather than hardcoded within the flow. Inputs and outputs are validated and sanitized to prevent injection attacks, and sensitive data is encrypted when stored. Furthermore, I regularly review flow activity and audit logs for suspicious behavior and potential security breaches.

Additionally, connection references are secured by using service principals rather than individual user accounts where possible. Data Loss Prevention (DLP) policies are configured to prevent sensitive data from leaving the organization's approved boundaries. Finally, I follow Microsoft's best practices for Power Automate security, staying updated on the latest security features and recommendations. Regularly reviewing and updating security measures as needed is also crucial.

10. Explain how you can monitor the performance of your Power Automate flows and identify bottlenecks.

You can monitor Power Automate flow performance using the Power Automate portal's analytics. This provides insights into flow runs, errors, and duration. Specifically, you can:

- Monitor run history: Track the success/failure rate and duration of each run.

- Analyze error messages: Identify common error patterns to pinpoint bottlenecks.

- Use the Power Automate analytics dashboard: Visualize key metrics like run frequency, success rates, and execution times. Also, use the 'Monitor' tab to drill down into specific flows and their runs.

- Implement error handling: Use Scope-based try/catch patterns within your flows to handle failures gracefully and log relevant information to services like Azure Monitor or Application Insights for detailed debugging and monitoring.

By regularly reviewing these metrics and logs, you can identify slow actions, inefficient loops, or external service dependencies that might be causing performance bottlenecks in your Power Automate flows.

11. How can you trigger a Power Automate flow from an external application or service and pass data to it?

You can trigger a Power Automate flow from an external application or service with an HTTP request. The 'When a HTTP request is received' trigger (a premium trigger) generates a unique URL once the flow is saved, and the external application sends a POST request to that URL to start the flow. Data is passed in the request body as a JSON payload. If you define a request body JSON schema in the trigger, the incoming fields appear as dynamic content in later actions; otherwise, parse the body with a 'Parse JSON' action. Treat the URL as a secret, because it includes a signature that authorizes callers.
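From the caller's side, triggering the flow is a plain JSON POST. A minimal Python sketch using only the standard library; the URL is a placeholder for the one copied from the saved trigger, and the payload fields are hypothetical. Building the request separately from sending it keeps the request easy to inspect and test.

```python
import json
import urllib.request


def build_flow_request(flow_url: str, payload: dict) -> urllib.request.Request:
    """Build a JSON POST for a 'When a HTTP request is received' trigger."""
    return urllib.request.Request(
        flow_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def trigger_flow(flow_url: str, payload: dict, timeout: float = 30) -> int:
    """Send the request and return the HTTP status code."""
    request = build_flow_request(flow_url, payload)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status
```

Without a 'Response' action in the flow, the caller typically receives 202 Accepted as soon as the run starts; adding a 'Response' action lets the flow return its own status code and body.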

Another option is to use a custom connector. A custom connector can define a webhook or polling trigger for your own API, so flows start when the external service raises an event, and the external application interacts with your API rather than calling a flow URL directly. This offers a layer of abstraction and potentially more control over authentication and authorization.

12. Describe a scenario where you would use a child flow in Power Automate and explain the benefits of using child flows.

A good scenario for using a child flow in Power Automate is when the same set of actions is needed in several flows, or in several places within a larger, more complex flow. For example, let's say you have a flow that processes new customer orders. Part of that process involves sending a welcome email, creating a record in a CRM system, and logging the order details to a database. Instead of copying those three actions into every flow that needs them, you can create a child flow that handles these tasks and then call it from the main order processing flow.

The benefits of using child flows in this scenario include:

- Reusability: The child flow can be used in multiple parent flows, reducing redundancy and making your flows more maintainable.

- Modularity: Child flows break down complex flows into smaller, more manageable pieces, making them easier to understand and debug.

- Maintainability: If you need to change how the welcome email is sent, for example, you only need to update the child flow, not every flow that sends welcome emails.

- Readability: Parent flows become cleaner and easier to read because the repetitive actions are encapsulated in a separate child flow.

13. How would you handle retries and error logging in a Power Automate flow that interacts with an unreliable API?

To handle retries and error logging with an unreliable API in Power Automate, I would combine the action's retry policy, 'Configure run after' settings, and Scope actions. First, set the retry policy in the HTTP action's settings (Default, Exponential interval, Fixed interval, or None) so transient failures are retried automatically. For custom retry logic, wrap the API call in a 'Do until' loop with a counter variable and a 'Delay' action, exiting when the call succeeds or the counter reaches a limit.

For error logging, group the API actions in a Scope and add a logging step after it, configured to run only if the Scope 'has failed' or 'has timed out'. That step can use 'Compose' with result() to capture error details (e.g., status code and error message) and write them to a logging system such as Azure Log Analytics or a SharePoint list, or send an email notification.
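The custom 'Do until' retry can be sketched in Python. This version swaps in exponential backoff (doubling the wait after each failure) rather than a fixed delay; the call being retried and the limits are hypothetical, and the sleep function is injectable so the sketch can be tested without waiting. Every failure is logged, and the last error is re-raised so the surrounding catch logic still runs.

```python
import time
from typing import Callable, List, TypeVar

T = TypeVar("T")


def call_with_retries(call: Callable[[], T], max_retries: int, base_delay: float,
                      errors: List[str],
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry call() up to max_retries times with exponential backoff."""
    if max_retries < 0:
        raise ValueError("max_retries must be >= 0")
    for attempt in range(max_retries + 1):
        try:
            return call()
        except Exception as exc:
            errors.append(f"attempt {attempt + 1}: {exc}")  # error log entry
            if attempt == max_retries:
                raise  # retries exhausted: let the 'Catch' logic handle it
            sleep(base_delay * (2 ** attempt))  # 1x, 2x, 4x ... base_delay
    raise AssertionError("unreachable")
```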

14. Explain how you can use Power Automate to automate tasks that involve multiple users and require collaboration.

Power Automate facilitates multi-user task automation through several mechanisms. Workflows can be designed to assign tasks to specific users based on conditions or roles. Approvals are a key feature, enabling sequential or parallel approvals from multiple individuals before proceeding to the next step in the workflow. Shared mailboxes and SharePoint lists/libraries are also useful for collaboration. Tasks can be assigned based on items added to these shared resources.

For example, imagine an expense approval process. A flow is triggered when an employee submits an expense report. The flow assigns the report to the employee's manager for approval. If the expense exceeds a certain amount, a second approval task is created for the finance department. Once both approvals are received, the flow automatically updates a SharePoint list with the approval status and triggers a notification to the employee.
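The routing rule in this example can be sketched in Python: the manager always approves, finance is added only above a threshold, and the report counts as approved only if everyone in the chain approved. The addresses and the threshold are hypothetical.

```python
from typing import List


def approval_chain(amount: float, manager: str, finance: str,
                   finance_threshold: float) -> List[str]:
    """Approvers in order: the manager always, finance only above the threshold."""
    chain = [manager]
    if amount > finance_threshold:
        chain.append(finance)
    return chain


def final_status(responses: List[str]) -> str:
    """'Approved' only if every approver in the chain responded 'Approve'."""
    if responses and all(r == "Approve" for r in responses):
        return "Approved"
    return "Rejected"
```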

15. How do you optimize a Power Automate flow for cost efficiency, considering the different licensing options and consumption limits?

To optimize a Power Automate flow for cost efficiency, first, understand your licensing options (e.g., per-user, per-flow, pay-as-you-go) and choose the one that best aligns with your usage patterns. Analyze flow runs to identify inefficiencies like unnecessary actions, loops, or data retrieval. Implement techniques like reducing the frequency of triggers, optimizing data operations (filtering, projections), and minimizing API calls by batching requests where possible. Also, consider using child flows to reuse logic and reduce the complexity of the main flow, which helps in troubleshooting and maintenance. Finally, monitor flow consumption regularly to ensure you stay within your license limits and proactively adjust the flow design or licensing as needed.

Furthermore, check whether a standard connector can do the job before using a premium one. Note that the HTTP action is itself a premium connector, so calling an API directly over HTTP does not avoid premium licensing. Implement error handling and retry policies so that failed runs and manual reruns don't consume capacity unnecessarily. Evaluate data storage options as well: depending on data volume and access patterns, storing intermediate results in Dataverse can cost more than alternatives like SharePoint.

16. Describe a complex Power Automate project you worked on, detailing the challenges you faced and how you overcame them.

I developed a Power Automate flow to automate invoice processing for the accounting department. The flow ingested invoices from a SharePoint library, extracted relevant data using AI Builder's form processing, validated the data against a SQL database containing vendor information, and created entries in an accounting system via its API. A significant challenge was the variability in invoice formats, which initially resulted in inconsistent data extraction. To address this, I iteratively refined the AI Builder model by training it on a more diverse dataset of invoices and implemented conditional logic within the flow to handle different invoice layouts. Another hurdle was the API integration, which required complex authentication and data transformation. This was resolved by creating a custom connector that abstracted away the authentication process and used expressions within Power Automate to correctly map the data fields.

17. Explain how to use Dataverse with Power Automate.

Power Automate integrates with Dataverse to automate business processes based on data changes in Dataverse tables. The Dataverse trigger 'When a row is added, modified or deleted' can start a flow when a record is created, updated, or deleted. For instance, a flow could run when a new account is created, automatically sending a welcome email and assigning a sales representative.

Dataverse connector actions let you create, read, update, and delete records (for example, 'Add a new row', 'List rows', 'Update a row', and 'Delete a row'), and the 'Perform a bound action' and 'Perform an unbound action' actions run Dataverse custom actions. Connections are established using your Dataverse credentials. The dynamic content feature makes it easy to use Dataverse data in later steps of a flow. A common scenario is using Power Automate to synchronize data between Dataverse and other systems, such as sending order information to an external accounting application after an order status is updated in Dataverse.
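The decision step of that synchronization scenario (skip the row unless its status is the one that should be synced, otherwise map it to the external system's shape) can be sketched as a Python function. The column names, the "Fulfilled" status, and the payload fields are all hypothetical; a real table and accounting API will have their own schemas.

```python
from typing import Optional

# Hypothetical status value and column names; a real table's schema will differ.
SYNC_STATUS = "Fulfilled"


def to_accounting_payload(row: dict) -> Optional[dict]:
    """Decide whether an updated order row goes to the accounting system.

    Returns the request body to send, or None when the flow should stop
    (the equivalent of a Condition right after the Dataverse trigger).
    """
    if row.get("status") != SYNC_STATUS:
        return None
    return {
        "externalReference": row["order_id"],
        "customer": row["customer"],
        "amount": round(float(row["total"]), 2),
    }
```

Keeping the mapping in one place mirrors how a flow would build the request body in a single Compose or HTTP action rather than scattering expressions across steps.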

18. Explain the difference between 'When a record is created' and 'When a record is created or updated' triggers, with example use-cases.

The 'When a record is created' trigger fires only when a new record is first saved, and it runs once per record, shortly after creation. Common use cases include setting initial values, sending welcome emails, or creating related records.

In contrast, the 'When a record is created or updated' trigger fires both when a new record is created and whenever an existing record is modified, so it runs every time the record is saved. Typical use cases include recalculating values based on changes, logging updates, or starting a workflow when a particular field changes. For example, this trigger suits a flow that maintains a custom 'Last Modified Date' field, but be careful: the flow's own update saves the record again and re-fires the trigger, so add a trigger condition (or, for Dataverse, limit the trigger to specific columns) to ignore the flow's own changes and avoid an endless loop.
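The self-retriggering problem is easy to see in a small simulation. The sketch below assumes, hypothetically, that the flow writes back with a dedicated service account, so a trigger-condition-style check can ignore saves made by that account; the account name and event shape are illustrative.

```python
# Hypothetical account the flow uses when it writes back to the record.
FLOW_ACCOUNT = "svc-flow@contoso.com"


def should_run(event: dict) -> bool:
    """A trigger-condition-style check: ignore saves made by the flow itself."""
    return event["modified_by"] != FLOW_ACCOUNT


def simulate(events: list[dict], guard: bool = True, max_runs: int = 10) -> int:
    """Count flow runs when each run writes back to the record that triggered it."""
    runs = 0
    queue = list(events)
    while queue and runs < max_runs:
        event = queue.pop(0)
        if guard and not should_run(event):
            continue  # trigger condition is false: no run
        runs += 1
        # The run updates a 'Last Modified Date' field, which saves the
        # record again and produces another 'created or updated' event.
        queue.append({"modified_by": FLOW_ACCOUNT})
    return runs
```

One user edit produces exactly one run with the guard in place; without it, the simulation only stops because of its artificial max_runs cap.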

19. What are the limitations of Power Automate, and what alternative solutions might you consider for scenarios exceeding those limits?

Power Automate, while powerful, has limitations. It can struggle with complex data transformations, large datasets (due to throttling and API limits), and intricate error handling. Managing complex flows can also become cumbersome. Its reliance on connectors can be a bottleneck if a specific system lacks a robust connector or if connector actions are limited.

Alternatives include Azure Logic Apps (for more complex and enterprise-grade integrations), Azure Functions (for custom code and serverless execution of intricate logic), or even custom code solutions using languages like Python or C# that integrate directly with APIs and databases, especially when high performance or specialized requirements are needed. For large-scale data movement and transformation, Azure Data Factory or even SSIS would be more appropriate.

20. Explain the purpose of environment variables in Power Automate and how they contribute to solution portability and maintainability.

Environment variables in Power Automate serve as named placeholders for configuration values that can vary between different environments (e.g., development, testing, production). Instead of hardcoding values like connection strings, API endpoints, or user credentials directly into your flows and apps, you reference them through environment variables.

This approach significantly improves solution portability and maintainability. When moving a solution from one environment to another, you only update the environment variable values for the target environment rather than modifying the flows or apps themselves, which simplifies deployment and reduces the risk of errors. For sensitive values such as credentials, use the Secret type, which references a secret in Azure Key Vault instead of storing the value in plain text. Furthermore, a configuration change is made in one place (the environment variable) and picked up by every flow and app that uses it, simplifying maintenance; note that a cloud flow may keep using the previous value until it is saved or turned off and on again.
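The portability argument boils down to "same logic, different looked-up values." The Python sketch below models that with a dictionary per environment; the variable name contoso_ApiBaseUrl and the URLs are hypothetical, and in a real solution the values would live in environment variable definitions rather than in code.

```python
# Hypothetical environment variable names and per-environment values.
ENVIRONMENTS = {
    "dev": {"contoso_ApiBaseUrl": "https://dev.api.contoso.com"},
    "prod": {"contoso_ApiBaseUrl": "https://api.contoso.com"},
}


def get_env(environment: str, name: str) -> str:
    """Look up an environment variable's current value for one environment."""
    try:
        return ENVIRONMENTS[environment][name]
    except KeyError:
        raise KeyError(f"{name} has no value in {environment}") from None


def orders_endpoint(environment: str) -> str:
    """The 'flow' logic is identical everywhere; only the looked-up value differs."""
    return get_env(environment, "contoso_ApiBaseUrl") + "/orders"
```

Promoting from dev to prod changes nothing in orders_endpoint; a missing value fails loudly instead of silently pointing at the wrong system.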

21. How can you use the 'Do Until' loop effectively, including strategies to prevent infinite loops?

The 'Do Until' loop runs a set of actions repeatedly until a specified condition becomes true. Because the condition is checked after each pass, the actions always run at least once. Effective use means making sure the condition will eventually be met, which requires changing the values it depends on inside the loop body.

Strategies include initializing variables before the loop, updating them as the loop progresses, and setting the loop's limits (a maximum count, 60 by default, and a timeout, one hour by default) as a safety net. For example, a common pattern is incrementing a counter: initialize x to 0, then Do Until x > 10, incrementing x by 1 in each pass. Without the increment, x stays at 0 and the loop only stops when it hits its count or timeout limit.
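The loop semantics described above (body first, then the condition, with count and timeout limits as a backstop) can be modeled in Python. This is an emulation for reasoning about termination, not Power Automate's implementation; the function name and return shape are illustrative.

```python
import time
from typing import Callable


def do_until(body: Callable[[dict], None], condition: Callable[[dict], bool],
             state: dict, max_count: int = 60, timeout_s: float = 3600.0) -> dict:
    """Run body, then test condition, mimicking a Do Until loop.

    max_count and timeout_s mirror the loop's limit settings
    (60 iterations and one hour by default).
    """
    start = time.monotonic()
    iterations = 0
    while True:
        body(state)  # actions run before the condition is checked
        iterations += 1
        if condition(state):
            return {"iterations": iterations, "stopped_by": "condition"}
        if iterations >= max_count:
            return {"iterations": iterations, "stopped_by": "count limit"}
        if time.monotonic() - start >= timeout_s:
            return {"iterations": iterations, "stopped_by": "timeout"}


def increment_x(state: dict) -> None:
    state["x"] += 1
```

Running the counter example, do_until(increment_x, lambda s: s["x"] > 10, {"x": 0}) stops after 11 passes with x equal to 11; a body that never changes x would run until the count limit instead.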

22. Describe your experience with integrating Power Automate with other Power Platform components, such as Power Apps and Power BI.

I have experience integrating Power Automate with other Power Platform components like Power Apps and Power BI. For Power Apps, I've used Power Automate flows to handle backend processes triggered by user actions within the app, such as creating new records in Dataverse, sending email notifications, or initiating approvals. I've also passed data from Power Apps to a flow by giving the flow a Power Apps (V2) trigger with input parameters. In Power Apps, the flow is then called through its Run method, for example MyFlow.Run(TextInput1.Text), where MyFlow is the name of the flow added to the app.

Regarding Power BI, I've used Power Automate to automate dataset refreshes so that Power BI dashboards stay current. I've used the Power BI connector's 'Refresh a dataset' action to start a refresh on specific events, such as a file being uploaded to SharePoint or a change in Dataverse, which keeps the dashboards close to real time within the dataset's refresh limits. Furthermore, I've embedded Power BI reports within Power Apps for rich visualizations and used Power Automate to pass data between them, enabling seamless data-driven workflows.

23. Explain what you know about the expression language in Power Automate. Which of the more complex functions have you used?

Power Automate uses an expression language, based on the Azure Logic Apps expression language, to perform calculations, manipulate data, and control workflow behavior. These expressions are used within actions and conditions to create dynamic workflows. They are especially helpful for tasks like string manipulation, date/time calculations, mathematical operations, and data type conversions.

Some of the more complex functions I've used include:

- json(): Parses a JSON string into a JSON object.
- xpath(): Navigates an XML document and extracts values.
- utcNow(): Gets the current UTC time, which I often format using formatDateTime().
- addDays(), addHours(): Used for date/time arithmetic.
- first(), last(): Extract the first or last item of an array.
- if(): Conditional logic within an expression.
- contains(): Checks whether a string contains another string or an array contains a specific element.
- uriComponent() and uriComponentToString(): Encode and decode URL components.
- createArray(): Builds an array; for objects I use json() or addProperty() and setProperty().

I have also used nested expressions to achieve more complex logic, such as if(greater(length(variables('MyArray')), 0), first(variables('MyArray')), 'No items').
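For readers more at home in general-purpose code, the nested expression and a typical date calculation translate roughly into the Python below. These are analogues written for illustration, not a reimplementation of the expression engine; the function names are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def first_or_default(my_array: list, default: str = "No items"):
    """Same logic as if(greater(length(variables('MyArray')), 0), first(variables('MyArray')), 'No items')."""
    return my_array[0] if len(my_array) > 0 else default


def due_date_label(now_utc: datetime, days: int) -> str:
    """Roughly formatDateTime(addDays(utcNow(), days), 'yyyy-MM-dd')."""
    return (now_utc + timedelta(days=days)).strftime("%Y-%m-%d")
```

For example, first_or_default([]) returns 'No items', and adding 3 days to 30 January 2024 (UTC) yields '2024-02-02'.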

Expert Power Automate interview questions

1. Describe a complex Power Automate flow you've designed, detailing its triggers, actions, and any challenges you faced during development.

I designed a Power Automate flow to automate employee onboarding. The trigger is a new entry in a SharePoint list when HR adds a new hire. Actions include: creating a user account in Azure AD, adding the user to relevant security groups, provisioning a mailbox in Exchange Online, creating a welcome package document in OneDrive, and sending a welcome email. One challenge was handling errors when creating user accounts (e.g., the username already exists). To address this, I implemented a try-catch pattern using Scope actions: the 'Try' scope attempts to create the user, and if it fails, the 'Catch' scope logs the error to a separate SharePoint list and notifies the IT support team. Also, because several new hires could be processed at the same time, I kept each run's state in variables that were updated at each stage; variables belong to a single run, so concurrent runs for different hires don't interfere with each other.

2. How would you implement error handling and retry logic in a Power Automate flow to ensure reliability?

To implement error handling and retry logic in a Power Automate flow, I'd primarily use the 'Try-Catch' pattern with the 'Scope' action. Place the actions that might fail within a 'Scope' configured as the 'Try' block. Configure the 'Run after' settings on a subsequent 'Scope' action to act as the 'Catch' block; set it to run only if the 'Try' block has failed. Within the 'Catch' block, implement actions for logging the error and initiating retry logic.

For retries, employ a 'Do Until' loop. Inside the loop, re-execute the potentially failing actions, and include a condition based on a counter or other criteria to limit the number of retries. Use the 'Delay' action to pause between attempts so you don't overwhelm the target system. Also, consider using the 'Terminate' action to end the flow with a 'Failed' status after the retries are exhausted. To check whether an attempt succeeded, use outputs('Your_Action_Name')?['statusCode'] (for HTTP actions) in the loop condition; result('Your_Scope_Name') returns an array describing every action inside a scope, which is useful for logging error details in the 'Catch' block. For simple transient failures, an action's built-in retry policy may be enough on its own.
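The Try/Catch mechanics can be modeled in Python to show what the Catch block actually works with. In this sketch a "scope" runs actions in order, marks later actions as Skipped after a failure, and returns records shaped loosely like the output of result() on a scope; the record fields and action names here are simplified assumptions.

```python
from typing import Callable


def run_scope(actions: list[tuple[str, Callable[[], object]]]) -> list[dict]:
    """Run actions in order like a Scope; later actions are skipped after a failure."""
    results = []
    failed = False
    for name, action in actions:
        if failed:
            results.append({"name": name, "status": "Skipped", "error": None})
            continue
        try:
            action()
            results.append({"name": name, "status": "Succeeded", "error": None})
        except Exception as exc:  # any failure marks the scope as Failed
            failed = True
            results.append({"name": name, "status": "Failed", "error": str(exc)})
    return results


def scope_status(results: list[dict]) -> str:
    return "Failed" if any(r["status"] == "Failed" for r in results) else "Succeeded"


def catch_block(results: list[dict]) -> list[dict]:
    """Runs only when the Try scope failed; keeps just the failed actions for logging."""
    return [{"action": r["name"], "message": r["error"]}
            for r in results if r["status"] == "Failed"]
```

Filtering the results down to failed entries is the same idea as applying a Filter array action to result() in the Catch scope before writing to the log.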

3. Explain how you would use custom connectors in Power Automate to integrate with a third-party API that requires authentication.

To integrate with a third-party API requiring authentication in Power Automate using a custom connector, I would first define the connector by providing details about the API. This includes the API's base URL, the authentication type (e.g., OAuth 2.0, API key), and the operations I want to expose, each mapped to an HTTP method and path (for example, a GET that lists records). The definition can be built from scratch or imported from an OpenAPI file or a Postman collection. For authentication, I'd configure the connector's security settings, such as the OAuth 2.0 client details or the name and location of the API key parameter.

Once the custom connector is set up, I can use it in my flows: I add an action that invokes one of the connector's operations and supply any required input parameters. The connector handles authentication behind the scenes based on its security settings, so the flow interacts with the third-party API without managing authentication in its own logic. For example, if the API requires an API key, the user enters the key once when creating a connection, and the connector adds it to every request automatically.
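To make "the connector adds it to every request" concrete, the Python sketch below models a connector definition with one operation and shows the key being injected from the connection, never from the flow's inputs. The base URL, the X-API-Key header name, and the ListInvoices operation are hypothetical, and no request is actually sent.

```python
from dataclasses import dataclass, field
from urllib.parse import urlencode


@dataclass
class Connection:
    """What the user supplies once when creating a connection (hypothetical)."""
    api_key: str


@dataclass
class PreparedRequest:
    method: str
    url: str
    headers: dict = field(default_factory=dict)


# Hypothetical connector definition: base URL, auth header name, one operation.
BASE_URL = "https://api.example.com/v1"
API_KEY_HEADER = "X-API-Key"
OPERATIONS = {"ListInvoices": ("GET", "/invoices")}


def build_request(operation_id: str, params: dict, connection: Connection) -> PreparedRequest:
    """Turn a flow action's inputs into an HTTP request for the third-party API."""
    method, path = OPERATIONS[operation_id]
    query = f"?{urlencode(params)}" if params else ""
    # The flow never handles the key; the connector injects it on every call.
    return PreparedRequest(method, BASE_URL + path + query,
                           {API_KEY_HEADER: connection.api_key})
```

The flow only ever names the operation and its parameters, which is why rotating the key means updating the connection rather than editing flows.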

4. What are the different types of scopes available in Power Automate, and when would you use each one?

In Power Automate, "scope" can mean two things. The Scope action is a container that groups actions so they can be collapsed, handled together through 'Run after' settings (the try-catch pattern), and inspected with result(). Variable scope is simpler: variables can only be initialized at the top level of a flow, not inside 'Apply to each', 'Condition', or 'Scope' actions, so every variable is global to the run and can be read or changed anywhere in it. There is no local variable scope.

Use variables for values that need to be shared and updated across the flow, such as configuration settings, counters, or overall status indicators. For values that only matter inside one section, such as a temporary calculation in each pass of an 'Apply to each' loop, use a 'Compose' action inside that section instead of a variable. This matters especially when the loop runs iterations in parallel: concurrent iterations that write to the same variable can overwrite each other's changes.
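When loop iterations run concurrently, a safer pattern than updating one shared value from every iteration is to compute each iteration's result independently and combine the results once afterward. The Python sketch below illustrates that idea with a thread pool; the item fields and the worker count of 20 are arbitrary illustrative choices (Apply to each allows a degree of parallelism up to 50).

```python
from concurrent.futures import ThreadPoolExecutor


def line_total(item: dict) -> float:
    """Per-iteration work; like a Compose inside the loop, it touches no shared state."""
    return item["qty"] * item["price"]


def order_total(items: list[dict], degree_of_parallelism: int = 20) -> float:
    # map() returns results in input order even when iterations run concurrently.
    with ThreadPoolExecutor(max_workers=degree_of_parallelism) as pool:
        totals = list(pool.map(line_total, items))
    return sum(totals)  # combine once, after the loop
```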

5. How can you optimize a Power Automate flow for performance, considering factors like looping, data retrieval, and parallel processing?

To optimize a Power Automate flow, minimize loops: where you only need to reshape an array, use the 'Select' action instead of 'Apply to each', and keep only the data fields later steps need. Limit data retrieval by using filter queries (OData) and top counts in 'Get items' actions so less data is processed. Instead of multiple 'Get items' actions for related data, try a single 'Get items' with an OData filter, or use Dataverse relationships (for example, an expand query on 'List rows') when possible. Run independent actions in parallel branches, and turn on concurrency control for 'Apply to each' (up to 50 iterations at once) when iterations don't depend on one another. For example, if you need to update many items, process them concurrently rather than one after another, either with a concurrent 'Apply to each' or by calling child flows in parallel.
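Two of these techniques, pushing filters to the server and projecting only the needed fields, can be sketched in Python: one helper builds an OData filter string of the kind typed into a 'Get items' filter query, and another mimics the Select action. The column names are hypothetical, and which operators a given connector and column type support varies.

```python
def odata_literal(value) -> str:
    """Format a value for an OData filter; single quotes in strings are doubled."""
    if isinstance(value, bool):  # check bool before int (bool is an int subclass)
        return "true" if value else "false"
    if isinstance(value, (int, float)):
        return str(value)
    return "'" + str(value).replace("'", "''") + "'"


def build_filter(conditions: list[tuple[str, str, object]]) -> str:
    """Join (column, operator, value) triples with 'and', e.g. Status eq 'Open'."""
    return " and ".join(f"{col} {op} {odata_literal(v)}" for col, op, v in conditions)


def select(items: list[dict], fields: list[str]) -> list[dict]:
    """Like the Select action: keep only the fields later steps need."""
    return [{f: item.get(f) for f in fields} for item in items]
```

For example, build_filter([("Status", "eq", "Open"), ("Amount", "gt", 1000)]) produces Status eq 'Open' and Amount gt 1000, so the connector returns only matching items instead of the flow filtering them in a loop.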