Pandas is a Python library for data analysis and manipulation, and it's used across many job roles. Identifying candidates with the right Pandas skills is therefore paramount for recruiters and hiring managers aiming to build competent data teams.

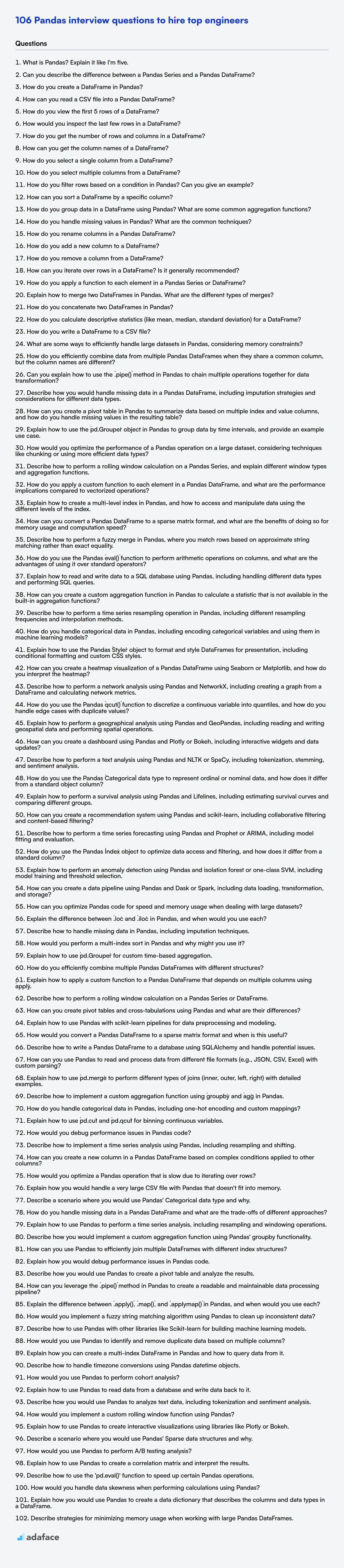

This blog post provides a collection of Pandas interview questions categorized by difficulty level, ranging from basic to expert, including a section on MCQs. The questions are designed to help you evaluate a candidate's depth of knowledge and practical skills in Pandas.

By using these questions, you can ensure that your next hire has the skills to excel; for objective skill validation, consider using a Python Pandas online test to screen candidates before the interview.


Basic Pandas interview questions

1. What is Pandas? Explain it like I'm five.

Imagine you have a toy box full of different things, like cars, dolls, and blocks. Pandas is like a special box that helps you organize those toys. It's like a spreadsheet, but much more powerful.

Pandas helps you put labels on your toys (columns), like "Color" or "Size", and keep track of them in rows. It's great for counting how many red cars you have or finding the biggest block. If your toys are data, pandas is the magic box that lets you play with them in useful ways! For example, let's say you have a list of ages:

import pandas as pd

ages = [5, 6, 5, 7, 6]

age_series = pd.Series(ages) #Pandas to the rescue

print(age_series.mean())

Here, pd.Series converts a plain Python list of numbers into a pandas Series, and .mean() calculates the average age (5.8 for this list).

2. Can you describe the difference between a Pandas Series and a Pandas DataFrame?

A Pandas Series is a one-dimensional labeled array capable of holding any data type (integers, strings, floats, Python objects, etc.). Think of it like a single column in a spreadsheet. It has an index, which can be explicitly defined or defaults to a numerical index. A DataFrame, on the other hand, is a two-dimensional labeled data structure with columns of potentially different types. You can think of it like a spreadsheet or SQL table, or a dict of Series objects. It has both a row and column index.

In essence, a DataFrame is a container for Series objects. Each column in a DataFrame is a Series. So, while a Series represents a single column of data with an index, a DataFrame represents a table of data composed of multiple Series sharing the same index.
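To see this relationship in practice, the sketch below builds a small DataFrame and pulls out one column. The column names, values, and index labels are made up for illustration.

```python
import pandas as pd

# Hypothetical data: two columns that share one row index
df = pd.DataFrame({"name": ["Ann", "Bob", "Cid"], "age": [31, 25, 40]},
                  index=["a", "b", "c"])

ages = df["age"]                       # selecting one column returns a Series
print(type(ages).__name__)             # Series
print(ages.index.equals(df.index))     # True: the column shares the DataFrame's index
print(df.ndim, ages.ndim)              # 2 1
```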

3. How do you create a DataFrame in Pandas?

You can create a Pandas DataFrame in several ways:

- From a dictionary: keys become column names, and values (lists, NumPy arrays, Series) become column data.

import pandas as pd
data = {'col1': [1, 2], 'col2': [3, 4]}
df = pd.DataFrame(data)

- From a list of lists or tuples: specify the column names explicitly.

data = [[1, 3], [2, 4]]
df = pd.DataFrame(data, columns=['col1', 'col2'])

- From a NumPy array: as with a list of lists, specify the column names.
- From a CSV file: using pd.read_csv().
- From other sources: such as SQL databases, JSON files, etc.
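The NumPy case is listed without code, so here is a minimal sketch of it; the array contents and column names are illustrative only.

```python
import numpy as np
import pandas as pd

# A 2x2 NumPy array; as with a list of lists, column names are supplied explicitly
arr = np.array([[1, 3], [2, 4]])
df = pd.DataFrame(arr, columns=["col1", "col2"])
print(df)
```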

4. How can you read a CSV file into a Pandas DataFrame?

You can read a CSV file into a Pandas DataFrame using the pd.read_csv() function. This function is part of the Pandas library and is specifically designed for parsing CSV files.

To use it, you simply pass the file path (or a file-like object) to the function like this:

import pandas as pd

df = pd.read_csv('your_file.csv')

print(df)

You can specify various optional parameters within pd.read_csv() to handle different delimiters, headers, encodings, and other file characteristics, such as:

- sep (or its alias delimiter): the character used to separate fields (e.g., ',', '\t', ';').
- header: the row number to use as column names (e.g., 0 for the first row, None if there is no header row).
- encoding: the file encoding (e.g., 'utf-8', 'latin-1').
- index_col: which column to use as the index.
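A small sketch combining several of these parameters. An in-memory io.StringIO object stands in for a real file (so encoding is not needed here; it matters when reading actual files), and the names parameter, which supplies column names when header=None, is an addition not mentioned above.

```python
import io
import pandas as pd

# Stand-in for a semicolon-delimited file that has no header row
raw = "1;Alice;30\n2;Bob;25\n"

df = pd.read_csv(
    io.StringIO(raw),
    sep=";",                      # fields are separated by semicolons
    header=None,                  # the file has no header row...
    names=["id", "name", "age"],  # ...so we name the columns ourselves
    index_col="id",               # use the 'id' column as the index
)
print(df)
```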

5. How do you view the first 5 rows of a DataFrame?

To view the first 5 rows of a Pandas DataFrame, you can use the .head() method. This method, by default, returns the first 5 rows.

df.head()

If you want to view a different number of rows, you can pass an integer argument to the .head() method, like this:

df.head(10) # View the first 10 rows

6. How would you inspect the last few rows in a DataFrame?

To inspect the last few rows of a Pandas DataFrame, you can use the .tail() method. By default, .tail() returns the last 5 rows. You can specify the number of rows you want to view by passing an integer argument to the method.

For example:

import pandas as pd

# Assuming 'df' is your DataFrame

last_10_rows = df.tail(10)

print(last_10_rows)

7. How do you get the number of rows and columns in a DataFrame?

To get the number of rows and columns in a Pandas DataFrame, you can use the .shape attribute. It returns a tuple where the first element is the number of rows and the second element is the number of columns.

For example:

import pandas as pd

data = {'col1': [1, 2], 'col2': [3, 4]}

df = pd.DataFrame(data)

rows, cols = df.shape

print(f"Rows: {rows}, Columns: {cols}")

8. How can you get the column names of a DataFrame?

You can retrieve the column names of a DataFrame using the .columns attribute. This attribute returns an Index object containing the column names. For example, in pandas, you would use df.columns to access the column names of the DataFrame df.

To convert the column names into a list, you can use df.columns.tolist(). This is useful if you need to iterate over the column names or perform other operations that require a list data structure. The syntax looks like this:

column_names = df.columns.tolist()

print(column_names)

9. How do you select a single column from a DataFrame?

To select a single column from a DataFrame, you can use square bracket notation with the column name as a string, like this: df['column_name']. This returns a pandas Series containing the data from that column. Alternatively, you can use dot notation, like this: df.column_name, but only if the column name is a valid Python identifier (no spaces or special characters) and does not clash with an existing DataFrame method or attribute. Bracket notation is generally preferred because it works in all cases and is less error-prone when column names are complex.
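The sketch below illustrates where dot notation stops working. The column names are hypothetical, chosen so that one contains a space and one shares its name with the DataFrame.count() method.

```python
import pandas as pd

# Hypothetical columns: a valid identifier, a name with a space, and a method-name clash
df = pd.DataFrame({"price": [10, 20], "unit cost": [4, 9], "count": [3, 5]})

print(df["price"].equals(df.price))  # True: both forms return the same Series
print(df["unit cost"].tolist())      # brackets handle the space; df.unit cost is a syntax error
print(callable(df.count))            # True: df.count is the DataFrame.count method, not the column
print(df["count"].tolist())          # brackets still reach the column
```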

10. How do you select multiple columns from a DataFrame?

To select multiple columns from a DataFrame, you can use several methods. The most common approach is to pass a list of column names within square brackets [] to the DataFrame object. This will return a new DataFrame containing only the specified columns. For example, if you have a DataFrame named df and want to select columns 'col1', 'col2', and 'col3', you would use df[['col1', 'col2', 'col3']].

Alternatively, you can use the .loc accessor with a list of column names. For instance: df.loc[:, ['col1', 'col2', 'col3']]. The : indicates that you want to select all rows. This method is particularly useful when you need to combine row and column selection based on labels. Using .iloc is also an option if you prefer to use the index position of the columns: df.iloc[:, [0, 1, 2]].

11. How do you filter rows based on a condition in Pandas? Can you give an example?

To filter rows based on a condition in Pandas, you can use boolean indexing. This involves creating a boolean Series that is the same length as the DataFrame, where each element indicates whether the corresponding row satisfies the condition. You then use this boolean Series to select the rows where the value is True.

For example, if you have a DataFrame df and you want to select rows where the 'column_name' is greater than 10, you would do:

filtered_df = df[df['column_name'] > 10]

This creates a new DataFrame, filtered_df, containing only the rows that meet the specified condition. You can use various comparison operators (>, <, ==, !=, >=, <=) and logical operators (&, |, ~) to create more complex conditions.
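When combining conditions, each comparison needs its own parentheses, because & and | bind more tightly than comparison operators. Python's and/or keywords cannot be used here (they raise a ValueError about the truth value of a Series being ambiguous). The data below is illustrative.

```python
import pandas as pd

df = pd.DataFrame({"city": ["Oslo", "Rome", "Oslo", "Lima"],
                   "temp": [12, 25, 18, 20]})

# Parentheses around each comparison are required with & and |
warm_oslo = df[(df["city"] == "Oslo") & (df["temp"] > 15)]
# ~ negates a boolean mask
not_oslo = df[~(df["city"] == "Oslo")]
# isin() is a concise alternative to chaining several == checks with |
rome_or_lima = df[df["city"].isin(["Rome", "Lima"])]
print(warm_oslo)
```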

12. How can you sort a DataFrame by a specific column?

You can sort a DataFrame by a specific column using the sort_values() method in pandas. Simply pass the column name (or a list of column names for multi-level sorting) to the by argument.

For example:

df.sort_values(by='column_name')

This sorts the DataFrame in ascending order based on the values in 'column_name'. To sort in descending order, set ascending=False:

df.sort_values(by='column_name', ascending=False)
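For multi-level sorting, ascending also accepts a list with one flag per column. A short sketch with made-up data:

```python
import pandas as pd

df = pd.DataFrame({"team": ["B", "A", "B", "A"], "score": [7, 9, 9, 5]})

# Sort by team (A to Z), then by score from highest to lowest within each team
ranked = df.sort_values(by=["team", "score"], ascending=[True, False])
print(ranked)
```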

13. How do you group data in a DataFrame using Pandas? What are some common aggregation functions?

In Pandas, you can group data in a DataFrame using the groupby() method. This method splits the DataFrame into groups based on one or more columns. After grouping, you can apply aggregation functions to each group.

Some common aggregation functions include:

- sum(): calculates the sum of values in each group.
- mean(): calculates the mean of values in each group.
- median(): calculates the median of values in each group.
- count(): counts the number of non-missing values in each group.
- min(): finds the minimum value in each group.
- max(): finds the maximum value in each group.
- std(): calculates the standard deviation of values in each group.
- agg(): applies several aggregation functions at once, using code such as: df.groupby('column_name').agg(['sum', 'mean', 'count'])
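A runnable sketch of both agg() styles on a small, made-up sales table. The second form, named aggregation, lets you choose the output column names.

```python
import pandas as pd

sales = pd.DataFrame({"region": ["N", "S", "N", "S", "N"],
                      "amount": [100, 80, 50, 120, 30]})

# Several aggregations applied to one column
summary = sales.groupby("region")["amount"].agg(["sum", "mean", "count"])

# Named aggregation: output_name=(column, function)
named = sales.groupby("region").agg(total=("amount", "sum"),
                                    largest=("amount", "max"))
print(summary)
print(named)
```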

14. How do you handle missing values in Pandas? What are the common techniques?

Pandas offers several ways to handle missing values, typically represented as NaN (Not a Number). Common techniques include:

- Identifying missing values:
  - isna() or isnull(): detect missing values and return a boolean mask.
  - notna() or notnull(): the inverse of isna() and isnull().
- Handling missing values:
  - dropna(): removes rows or columns containing missing values. You can control the behavior with parameters like axis (rows or columns) and how ('any' or 'all').
  - fillna(): fills missing values with a specified value (e.g., the mean, median, or a constant). To propagate neighboring values instead, use ffill() (forward fill) or bfill() (backward fill); the older fillna(method='ffill') form is deprecated in recent pandas versions.
- Imputation: more sophisticated methods can impute missing values, often using statistical techniques or machine learning models. Libraries like scikit-learn provide tools for this purpose. For example, SimpleImputer can replace missing values with the mean, median, or most frequent value of the column.

For example:

df = df.dropna(axis=0) # Remove rows with any NaN values (dropna returns a new DataFrame)

df['column_name'] = df['column_name'].fillna(df['column_name'].mean()) # Fill NaN values in 'column_name' with the column mean
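The sketch below runs these techniques on a tiny frame with hand-placed NaN values. It assigns results back rather than calling fillna(inplace=True) on a single column, since that chained form may not update the DataFrame under the Copy-on-Write behavior of newer pandas versions.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({"a": [1.0, np.nan, 3.0], "b": [np.nan, np.nan, 6.0]})

counts = df.isna().sum()                  # missing values per column: a -> 1, b -> 2
complete_rows = df.dropna()               # only row 2 has no NaN
kept_cols = df.dropna(axis=1, how="all")  # no column is entirely NaN, so both stay
forward = df.ffill()                      # copies the last valid value downward

# Mean imputation, assigned back to the column
df["a"] = df["a"].fillna(df["a"].mean())
print(counts)
print(df)
```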

15. How do you rename columns in a Pandas DataFrame?

You can rename columns in a Pandas DataFrame using several methods:

- df.rename(): the most versatile method. Pass a dictionary mapping old column names to new ones to the columns argument: df = df.rename(columns={'old_name': 'new_name', 'another_old_name': 'another_new_name'}). Columns not listed keep their names. To modify the DataFrame in place, use inplace=True.
- df.columns: assign a new list of column names directly: df.columns = ['new_col1', 'new_col2', 'new_col3']. The list must have the same length as the number of columns.
- df.set_axis(): similar to assigning df.columns, but it returns a new DataFrame and can relabel either axis through the axis parameter. To rename columns: df = df.set_axis(['new_col1', 'new_col2'], axis='columns'). Since pandas 2.0, set_axis() no longer accepts inplace, so assign the result.
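A short sketch comparing the three approaches on a throwaway frame. It also shows that rename() accepts a function (here str.upper) that is applied to every column label.

```python
import pandas as pd

df = pd.DataFrame({"A": [1], "B": [2], "C": [3]})

renamed = df.rename(columns={"A": "alpha"})   # only 'A' changes
df2 = df.copy()
df2.columns = ["x", "y", "z"]                 # one name per column is required
relabeled = df.set_axis(["p", "q", "r"], axis="columns")
upper = renamed.rename(columns=str.upper)     # a function is applied to every label

print(list(renamed.columns), list(df2.columns), list(relabeled.columns), list(upper.columns))
```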

16. How do you add a new column to a DataFrame?

You can add a new column to a DataFrame in several ways. The most common is bracket notation, similar to adding a key to a dictionary: you assign a list, a Series, or a single value to a new column name. Another way is the assign() method, which returns a new DataFrame with the added or modified column(s), leaving the original DataFrame untouched.

For example, in pandas:

import pandas as pd

# Example DataFrame

data = {'col1': [1, 2], 'col2': [3, 4]}

df = pd.DataFrame(data)

# Adding a new column using bracket notation

df['new_col'] = [5, 6]

# Adding a new column using assign

df = df.assign(another_col = [7,8])

17. How do you remove a column from a DataFrame?

You can remove a column from a Pandas DataFrame in several ways. The primary methods are using the del keyword, the drop() method, or by reassigning the DataFrame without the column.

Here's a brief overview:

- del keyword: del df['column_name'] modifies the DataFrame in place.
- drop() method: df.drop('column_name', axis=1) or df.drop(columns=['column_name']) returns a new DataFrame with the column removed. Use inplace=True to modify the original DataFrame instead.
- Reassignment: df = df.drop('column_name', axis=1) assigns the result of df.drop() back to the original variable. The effect is the same as above, but it makes the overwrite explicit.

Example using drop():

import pandas as pd

data = {'col1': [1, 2], 'col2': [3, 4]}

df = pd.DataFrame(data)

df = df.drop('col1', axis=1) # or df.drop(columns=['col1'])

print(df)

18. How can you iterate over rows in a DataFrame? Is it generally recommended?

You can iterate over rows in a Pandas DataFrame using methods like iterrows(), itertuples(), or by directly accessing the underlying NumPy array with .values. However, iterating through rows is generally not recommended for performance reasons, especially for large DataFrames.

These methods are slow compared to vectorized operations using Pandas built-in functions or NumPy. For most tasks, aim for vectorized operations that work on entire columns or DataFrames at once; apply() is a convenient fallback, although with axis=1 it still calls a Python function for every row. For example:

- Vectorized operations: df['new_column'] = df['column_a'] + df['column_b']
- apply() function: df['new_column'] = df.apply(lambda row: some_function(row['column_a'], row['column_b']), axis=1), where some_function stands for any custom Python function.
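If you do need a row loop, itertuples() is usually much faster than iterrows(). The sketch below, using illustrative column names, computes the same sum both ways and checks that they agree.

```python
import pandas as pd

df = pd.DataFrame({"column_a": [1, 2, 3], "column_b": [10, 20, 30]})

# Row-by-row: still runs Python code for every row
looped = [row.column_a + row.column_b for row in df.itertuples(index=False)]

# Vectorized: a single operation over whole columns
df["new_column"] = df["column_a"] + df["column_b"]
print(looped == df["new_column"].tolist())  # True
```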

19. How do you apply a function to each element in a Pandas Series or DataFrame?

To apply a function to each element in a Pandas Series or DataFrame, you can use the apply() or map() methods. For a Series, map() is specifically designed for element-wise transformations using a function or a dict-like object. apply() can also be used on Series and DataFrames for more complex operations.

For DataFrames, apply() applies a function along an axis (rows or columns). For element-wise application on a DataFrame, use DataFrame.map() in pandas 2.1 and later; it replaces applymap(), which still works but is deprecated. Here are examples:

- Series:

import pandas as pd
s = pd.Series([1, 2, 3])
s_transformed = s.map(lambda x: x * 2)
print(s_transformed)

- DataFrame:

import pandas as pd
df = pd.DataFrame({'A': [1, 2], 'B': [3, 4]})
df_transformed = df.map(lambda x: x + 1) # use df.applymap(...) on pandas < 2.1
print(df_transformed)

20. Explain how to merge two DataFrames in Pandas. What are the different types of merges?

In Pandas, you can merge two DataFrames using the pd.merge() function. It combines DataFrames based on a common column or index, similar to SQL joins. The basic syntax is pd.merge(left, right, how='inner', on='key'), where left and right are the DataFrames, how specifies the type of merge, and on specifies the column to merge on. If column names are different in the two dataframes, left_on and right_on can be specified.

Different types of merges include:

- Inner (default): returns only the rows with matching keys in both DataFrames.
- Outer: returns all rows from both DataFrames, filling missing values with NaN.
- Left: returns all rows from the left DataFrame and the matching rows from the right DataFrame. Missing values from the right DataFrame are filled with NaN.
- Right: returns all rows from the right DataFrame and the matching rows from the left DataFrame. Missing values from the left DataFrame are filled with NaN.
- Cross: performs a Cartesian product of the rows from both DataFrames, returning all combinations (no key column is used). Example:

pd.merge(df1, df2, how='cross')
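A sketch contrasting inner, outer, and left merges on two small, made-up frames. It also uses indicator=True, which adds a _merge column recording whether each row came from the left frame, the right frame, or both.

```python
import pandas as pd

left = pd.DataFrame({"key": [1, 2, 3], "l_val": ["a", "b", "c"]})
right = pd.DataFrame({"key": [2, 3, 4], "r_val": ["x", "y", "z"]})

inner = pd.merge(left, right, how="inner", on="key")       # keys 2 and 3
outer = pd.merge(left, right, how="outer", on="key",
                 indicator=True)                           # keys 1-4, with a _merge column
left_join = pd.merge(left, right, how="left", on="key")   # keys 1-3; r_val is NaN for key 1
print(outer)
```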

21. How do you concatenate two DataFrames in Pandas?

You can concatenate two DataFrames in Pandas using the pd.concat() function. This function allows you to combine DataFrames along rows (vertically) or columns (horizontally). The basic syntax is pd.concat([df1, df2]), which concatenates df1 and df2 vertically.

Key parameters include:

- objs: a sequence or mapping of pandas objects such as DataFrames or Series.
- axis: the axis to concatenate along (0 for rows, 1 for columns).
- join: how to handle labels on the other axis ('outer', the default, keeps all of them; 'inner' keeps only those shared by every object).
- ignore_index: if True, the index values along the concatenation axis are discarded and the result is labeled 0, ..., n - 1.

For example:

import pandas as pd

df1 = pd.DataFrame({'A': [1, 2], 'B': [3, 4]})

df2 = pd.DataFrame({'A': [5, 6], 'B': [7, 8]})

df_concatenated = pd.concat([df1, df2], ignore_index=True)

print(df_concatenated)

22. How do you calculate descriptive statistics (like mean, median, standard deviation) for a DataFrame?

To calculate descriptive statistics for a DataFrame, you can use several built-in methods. The most common is .describe(), which summarizes the numerical columns with the count, mean, standard deviation, minimum, maximum, and quartiles. For individual statistics:

- Mean: use .mean() on a DataFrame or a Series (column), e.g. df['column_name'].mean()
- Median: use .median(), e.g. df['column_name'].median()
- Standard deviation: use .std(), e.g. df['column_name'].std()
- Other methods such as .min(), .max(), .count(), and .quantile() are also available for specific statistics.
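A small worked example with made-up heights. Note that .std() computes the sample standard deviation (ddof=1) by default, and .quantile() interpolates linearly between observations.

```python
import pandas as pd

df = pd.DataFrame({"height": [150, 160, 170, 180], "team": ["a", "b", "a", "b"]})

stats = df.describe()                  # numeric columns only by default
print(stats)
print(df["height"].mean(), df["height"].median())  # 165.0 165.0
print(df["height"].std())              # sample std: sqrt(500 / 3)
print(df["height"].quantile(0.25))     # 157.5
print(df.describe(include="all"))      # also summarizes the text column 'team'
```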

23. How do you write a DataFrame to a CSV file?

To write a DataFrame to a CSV file in Pandas, use the DataFrame's to_csv() method:

import pandas as pd

data = {'col1': [1, 2], 'col2': [3, 4]}

df = pd.DataFrame(data)

df.to_csv('output.csv', index=False)

Key points:

- to_csv() is the method used to write the DataFrame to a CSV file.
- The first argument (e.g., 'output.csv') specifies the file name.
- index=False prevents writing the DataFrame index to the CSV.

24. What are some ways to efficiently handle large datasets in Pandas, considering memory constraints?

To efficiently handle large datasets in Pandas under memory constraints, consider these strategies:

- Use chunksize when reading data: read data in smaller, manageable chunks using the chunksize parameter of functions like pd.read_csv. Process each chunk iteratively, performing aggregations or transformations as needed, so that only one chunk is held in memory at a time. For example (process_chunk stands for your own processing function):

for chunk in pd.read_csv('large_file.csv', chunksize=10000):
    process_chunk(chunk)  # process each chunk, then let it be released

- Specify dtype: explicitly define data types for columns using the dtype parameter of pd.read_csv. Using smaller data types (e.g., int16 instead of int64) can significantly reduce memory usage.
- Select only necessary columns: read only the columns required for your analysis using the usecols parameter of pd.read_csv.
- Optimize data types after reading: convert columns to more memory-efficient data types with functions like pd.to_numeric(df['col'], downcast='integer') or pd.to_datetime. Also convert object columns to the category dtype where a column has relatively few distinct values.
- Delete unnecessary data: remove intermediate DataFrames or columns that are no longer needed using del or DataFrame.drop() to free up memory.
- Be careful with inplace=True: it is often assumed to avoid copies, but many pandas operations (e.g., fillna, drop) still create a copy internally, so reassigning the result (df = df.drop(...)) is usually no less memory-efficient and is clearer.
- Consider alternative libraries: for datasets that exceed available memory, explore out-of-core computing libraries like Dask or Vaex, which allow you to work with data that doesn't fit entirely in memory.
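To measure the effect of dtype optimization, you can compare memory_usage(deep=True) before and after conversion. The sketch below uses randomly generated data (an assumption for illustration): a text column with only three distinct values and an integer column whose values fit in int8.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)
n = 100_000
df = pd.DataFrame({
    "store": rng.choice(["north", "south", "east"], size=n),  # few distinct strings
    "units": rng.integers(0, 100, size=n),                    # small integers stored as int64
})
before = df.memory_usage(deep=True).sum()  # deep=True counts the string contents too

df["store"] = df["store"].astype("category")                  # stores small integer codes
df["units"] = pd.to_numeric(df["units"], downcast="integer")  # 0-99 fits in int8
after = df.memory_usage(deep=True).sum()

print(df.dtypes)
print(f"{before:,} bytes -> {after:,} bytes")
```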

Intermediate Pandas interview questions

1. How do you efficiently combine data from multiple Pandas DataFrames when they share a common column, but the column names are different?

To combine DataFrames that share a key column under different names, you can either rename the key columns to a consistent name with the .rename() method, or keep the original names and pass left_on and right_on to pd.merge(). Then choose the combining function: pd.concat() appends DataFrames vertically or horizontally, pd.merge() performs database-style joins on the key columns, and .join() is a convenient shorthand for joining on the index (or on a column of the calling DataFrame against the other DataFrame's index).

For example, if df1 has a column 'ID' and df2 has a column 'CustomerID' holding the same values, run df2 = df2.rename(columns={'CustomerID': 'ID'}) and then merged_df = pd.merge(df1, df2, on='ID', how='inner') to perform an inner join. Choosing the appropriate how argument ensures that only the required records are included in the result.
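Both approaches in one sketch, with made-up customer data. Note that left_on/right_on keeps both key columns in the result, so the duplicate is dropped before comparing.

```python
import pandas as pd

df1 = pd.DataFrame({"ID": [1, 2, 3], "name": ["Ann", "Bob", "Cid"]})
df2 = pd.DataFrame({"CustomerID": [2, 3, 4], "total": [50, 75, 20]})

# Option 1: rename the key, then merge on the shared name
merged = pd.merge(df1, df2.rename(columns={"CustomerID": "ID"}), on="ID", how="inner")

# Option 2: keep the original names and map them with left_on/right_on
merged_lr = pd.merge(df1, df2, left_on="ID", right_on="CustomerID", how="inner")
merged_lr = merged_lr.drop(columns="CustomerID")  # both key columns are kept, so drop one

print(merged)
print(merged.equals(merged_lr))  # True
```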

2. Can you explain how to use the `.pipe()` method in Pandas to chain multiple operations together for data transformation?

The .pipe() method in Pandas allows you to chain multiple data transformations together in a more readable and organized way. Instead of nesting multiple function calls or assigning intermediate results to variables, you can pass functions (or callables) to .pipe() sequentially. Each function receives the DataFrame as input and returns a transformed DataFrame, which is then passed to the next function in the chain.

For example, if you have functions clean_data, feature_engineer, and analyze_data, you can chain them using .pipe() like this: df.pipe(clean_data).pipe(feature_engineer).pipe(analyze_data). This enhances code readability and maintainability. Each step only needs to be a function that takes a DataFrame as its first argument and returns a DataFrame, for example def clean_data(df): return df[...], which can then be applied with df.pipe(clean_data). Any extra positional or keyword arguments are forwarded to the function, as in df.pipe(func, arg, key=value).
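A runnable sketch of such a pipeline. The step functions (clean_data, add_total, summarize), the tax_rate argument, and the data are hypothetical stand-ins for real transformations.

```python
import pandas as pd

# Hypothetical pipeline steps: each takes a DataFrame and returns a new one
def clean_data(df):
    return df.dropna()

def add_total(df, tax_rate):
    return df.assign(total=df["price"] * (1 + tax_rate))

def summarize(df):
    return df.groupby("category", as_index=False)["total"].sum()

raw = pd.DataFrame({"category": ["a", "b", "a", None],
                    "price": [10.0, 20.0, 30.0, 5.0]})

result = (
    raw.pipe(clean_data)
       .pipe(add_total, tax_rate=0.5)  # keyword arguments are forwarded to add_total
       .pipe(summarize)
)
print(result)
```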

3. Describe how you would handle missing data in a Pandas DataFrame, including imputation strategies and considerations for different data types.

Handling missing data in Pandas involves identifying and addressing NaN values. First, I'd check for missing data using df.isnull().sum() to understand the extent of the problem. I would consider removing rows or columns with excessive missing values using df.dropna(), being mindful of potential data loss. If only a modest share of values is missing, I would look into imputation strategies, which depend on the data type and context.

For numerical data, I'd consider df.fillna(df.mean(numeric_only=True)) or df.fillna(df.median(numeric_only=True)) for mean or median imputation, respectively; numeric_only=True skips non-numeric columns, which would otherwise raise an error in pandas 2.0 and later. For categorical data, df['column'] = df['column'].fillna(df['column'].mode()[0]) fills a column with its most frequent value. More advanced imputation techniques, such as sklearn.impute.SimpleImputer or predictive models based on other columns, might be suitable in more complex scenarios. For ordered data such as time series, forward fill or backward fill (df.ffill() or df.bfill()) is another option; the older df.fillna(method='ffill') form is deprecated. It is essential to evaluate the impact of imputation on the data's distribution and any subsequent analysis.
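A minimal sketch of type-dependent imputation on a hypothetical frame with two numeric columns and one text column: median for the numeric columns, most frequent value for the categorical one.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({
    "age": [25.0, np.nan, 35.0, 40.0],
    "income": [50.0, 60.0, np.nan, 80.0],
    "city": ["Oslo", None, "Oslo", "Rome"],
})
print(df.isnull().sum())  # one missing value per column

# Numeric columns: fill each with its own median; numeric_only skips 'city'
df = df.fillna(df.median(numeric_only=True))

# Categorical column: fill with the most frequent value
df["city"] = df["city"].fillna(df["city"].mode()[0])
print(df)
```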

4. How can you create a pivot table in Pandas to summarize data based on multiple index and value columns, and how do you handle missing values in the resulting table?

You can create a pivot table in Pandas using the pivot_table() function. To summarize data based on multiple index columns, pass a list of column names to the index parameter. Similarly, for multiple value columns, pass a list to the values parameter. Here's an example:

import pandas as pd

pivot_table = pd.pivot_table(data, values=['value1', 'value2'], index=['index1', 'index2'], aggfunc='sum')

To handle missing values in the resulting table, you can use the fill_value parameter in pivot_table(). This replaces NaN values with a specified value (e.g., 0). You can also use .fillna() on the resulting pivot table after creation to impute missing values using various strategies (e.g., mean, median).

pivot_table = pd.pivot_table(data, values=['value1', 'value2'], index=['index1', 'index2'], aggfunc='sum', fill_value=0)
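The example above assumes an existing DataFrame named data. Here is a self-contained variant with invented sales data. Adding columns='year' spreads the values across years, so one region/product/year combination has no rows and is filled with 0.

```python
import pandas as pd

data = pd.DataFrame({
    "region": ["N", "N", "S", "S", "N"],
    "product": ["x", "y", "x", "x", "x"],
    "year": [2023, 2023, 2023, 2024, 2024],
    "units": [5, 3, 7, 2, 4],
    "revenue": [50, 45, 70, 20, 40],
})

table = pd.pivot_table(
    data,
    values=["units", "revenue"],
    index=["region", "product"],  # multi-level row index
    columns="year",
    aggfunc="sum",
    fill_value=0,                 # (N, y) has no 2024 rows: 0 instead of NaN
)
print(table)
```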

5. Explain how to use the `pd.Grouper` object in Pandas to group data by time intervals, and provide an example use case.

The pd.Grouper object in Pandas provides a flexible way to specify the grouping operation, especially when dealing with time-series data. It's particularly useful when you need to group data by time intervals (e.g., every 5 minutes, daily, weekly). You typically use it within the groupby() method of a Pandas DataFrame or Series. You specify the key (the column to group by; omit it to group on the index) and the freq (the frequency of the grouping).

For example, consider a DataFrame df with a DatetimeIndex. df.groupby(pd.Grouper(freq='D')).sum() would group the data by day and calculate the sum for each group. freq can be any valid pandas frequency string (e.g., 'D' for day, 'h' for hour, '5min' for 5 minutes, 'ME' for month end; pandas versions before 2.2 use 'H' and 'M' instead of 'h' and 'ME'). Alternatively, df.groupby(pd.Grouper(key='date_column', freq='W')).mean() groups weekly by a specific date column called date_column.
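A sketch of a typical use case: daily totals of sensor readings, first over all sensors and then per sensor by combining a Grouper with a regular column key. The timestamps, sensor names, and values are invented.

```python
import pandas as pd

df = pd.DataFrame({
    "timestamp": pd.to_datetime([
        "2024-01-01 08:00", "2024-01-01 17:00",
        "2024-01-02 09:00",
        "2024-01-03 10:00", "2024-01-03 20:00",
    ]),
    "sensor": ["a", "b", "a", "a", "b"],
    "value": [1, 2, 3, 4, 5],
})

# Group on a column: one bin per calendar day
daily = df.groupby(pd.Grouper(key="timestamp", freq="D"))["value"].sum()

# Group on the index (no key), combined with an ordinary column
per_sensor = (df.set_index("timestamp")
                .groupby([pd.Grouper(freq="D"), "sensor"])["value"].sum())
print(daily)
print(per_sensor)
```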

6. How would you optimize the performance of a Pandas operation on a large dataset, considering techniques like chunking or using more efficient data types?

To optimize Pandas performance on large datasets, consider chunking. Read the data in smaller, manageable pieces using pd.read_csv(filename, chunksize=...). Process each chunk independently and then combine the results. Also, using the correct data types is crucial. For example, convert strings to categorical data using astype('category') if appropriate, and use smaller integer types like int16 or int8 instead of int64 when the range of values allows. This reduces memory usage and speeds up operations. Vectorization is key - avoid loops and apply operations on entire columns/Series at once using Pandas' built-in functions.
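A minimal sketch of the "process each chunk, then combine" pattern. A small in-memory CSV stands in for a large file, and the chunk size of 4 is deliberately tiny. Each chunk is reduced to partial sums, and the partial sums are combined at the end.

```python
import io
import pandas as pd

# Stand-in for a large CSV: categories alternate a, b, a, b, ...
csv_text = "category,amount\n" + "\n".join(
    f"{'ab'[i % 2]},{i}" for i in range(10)
)

partials = []
for chunk in pd.read_csv(io.StringIO(csv_text), chunksize=4,
                         dtype={"amount": "int32"}):  # smaller integer type
    # Keep only a small per-chunk summary, not the raw rows
    partials.append(chunk.groupby("category")["amount"].sum())

# Combine the partial sums into the final totals
totals = pd.concat(partials).groupby(level=0).sum()
print(totals)
```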

7. Describe how to perform a rolling window calculation on a Pandas Series, and explain different window types and aggregation functions.

To perform a rolling window calculation on a Pandas Series, you can use the rolling() method. This method creates a Rolling object that allows you to specify the window size. After creating the Rolling object, you can apply an aggregation function like mean(), sum(), min(), max(), or std() to calculate the rolling statistic.

Different window types include:

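- Fixed window: a window containing a constant number of observations that slides over the data, e.g. s.rolling(window=3).mean()
- Variable (time-based) window: on a Series with a DatetimeIndex, pass an offset string such as s.rolling('2D').mean(). Each window then covers a fixed time span and can contain a varying number of observations.
- Weighted window: the win_type parameter (e.g., win_type='triang') weights the observations within a fixed-size window; these window types require SciPy.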

Different aggregation functions include:

- mean(): calculates the mean of the values within the window.
- sum(): calculates the sum of the values within the window.
- min(): finds the minimum value within the window.
- max(): finds the maximum value within the window.
- std(): calculates the standard deviation of the values within the window.
- apply(func): applies a custom function to the values within the window.

For example:

import pandas as pd

s = pd.Series([1, 2, 3, 4, 5, 6, 7, 8, 9, 10])

rolling_mean = s.rolling(window=3).mean()

print(rolling_mean)
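The difference between count-based and time-based windows shows up when observations are unevenly spaced. In the sketch below (dates chosen to leave a gap), a time-based window starts as soon as one observation exists, since its min_periods defaults to 1, while the fixed window needs two observations.

```python
import pandas as pd

s = pd.Series([1.0, 2.0, 3.0, 4.0],
              index=pd.to_datetime(["2024-01-01", "2024-01-02",
                                    "2024-01-05", "2024-01-06"]))

fixed = s.rolling(window=2).mean()  # always the last 2 observations
timed = s.rolling("2D").mean()      # observations in the 2 days ending at each timestamp
print(pd.DataFrame({"fixed": fixed, "time_based": timed}))
# fixed:      NaN, 1.5, 2.5, 3.5
# time_based: 1.0, 1.5, 3.0, 3.5  (Jan 5 has no neighbor within 2 days)
```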

8. How do you apply a custom function to each element in a Pandas DataFrame, and what are the performance implications compared to vectorized operations?

You can apply a custom function to each element in a Pandas DataFrame using DataFrame.map() in pandas 2.1 and later, or the older .applymap(), which still works but is deprecated. For example:

def custom_function(x):
    return x * 2

df.map(custom_function) # df.applymap(custom_function) on pandas < 2.1

While element-wise mapping is flexible, it's generally slower than vectorized operations (e.g., using operators like *, +, -, / directly on DataFrame columns or rows). Vectorized operations are optimized to work on entire arrays at once, leveraging NumPy's efficient computations, whereas .map() and .applymap() call the Python function once per element, which incurs overhead. For performance-critical tasks, favor vectorized operations when possible. If your custom function cannot be vectorized (e.g., it involves complex logic or string processing that NumPy cannot handle directly), element-wise mapping is a viable option, but be mindful of the performance trade-off.
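Simple conditional logic can often be vectorized after all. The sketch below writes a made-up labeling rule once as a per-element Python function and once with np.select, which evaluates each condition on the whole array at once, and checks that both give the same labels.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({"a": [-2, 0, 3], "b": [5, -1, 4]})

def label(x):
    # Per-element Python function with conditional logic
    return "neg" if x < 0 else ("zero" if x == 0 else "pos")

# Element-wise: DataFrame.map() on pandas >= 2.1, applymap() on older versions
mapped = df.map(label) if hasattr(df, "map") else df.applymap(label)

# Vectorized: the first matching condition wins; "pos" is the default
vectorized = pd.DataFrame(
    np.select([(df < 0).to_numpy(), (df == 0).to_numpy()], ["neg", "zero"], default="pos"),
    index=df.index, columns=df.columns,
)
print((mapped.to_numpy() == vectorized.to_numpy()).all())  # True
```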

9. Explain how to create a multi-level index in Pandas, and how to access and manipulate data using the different levels of the index.

To create a multi-level index (also known as a hierarchical index) in Pandas, you can use the pd.MultiIndex.from_tuples() or pd.MultiIndex.from_arrays() methods. You can also set multiple columns as the index using df.set_index(['col1', 'col2']). Once created, accessing data involves using .loc[] with tuples representing the levels. For example, df.loc[('level1_value', 'level2_value')] will access data at specific index values. Slicing can also be performed within levels using slice(None) to select all within a level e.g., df.loc[(slice(None), 'level2_value'), :].

Manipulating data at specific levels often involves using the swaplevel() method to change the order of the levels, and then sorting with sort_index() if needed. You can then use the .loc[] accessor as before to assign new values to slices, or perform computations using aggregate functions like groupby(level='level_name').sum(). For instance:

import pandas as pd

import numpy as np

index = pd.MultiIndex.from_tuples([('A', 1), ('A', 2), ('B', 1), ('B', 2)], names=['Category', 'Value'])

df = pd.DataFrame({'Data': np.random.randn(4)}, index=index)

# Accessing data

print(df.loc[('A', 1)])

# Manipulating data

df = df.swaplevel(0, 1)

df = df.sort_index()

print(df.loc[(1, 'A')])
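Two other common tools for working with specific levels are df.xs() for cross-sections and pd.IndexSlice for readable multi-level slicing (which requires a sorted index). The sketch reuses the index from the example above but substitutes fixed values for the random data so the results are predictable.

```python
import pandas as pd

index = pd.MultiIndex.from_tuples([("A", 1), ("A", 2), ("B", 1), ("B", 2)],
                                  names=["Category", "Value"])
df = pd.DataFrame({"Data": [10, 20, 30, 40]}, index=index)

# Cross-section: every row whose 'Value' level equals 1
print(df.xs(1, level="Value"))

# IndexSlice: all categories, Value == 2
idx = pd.IndexSlice
print(df.loc[idx[:, 2], :])

# Aggregate over one level
print(df.groupby(level="Category").sum())
```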

10. How can you convert a Pandas DataFrame to a sparse matrix format, and what are the benefits of doing so for memory usage and computation speed?

You can convert a Pandas DataFrame to a SciPy sparse matrix by passing its values to a scipy.sparse constructor, for example scipy.sparse.csc_matrix(df.to_numpy()) to create a Compressed Sparse Column (CSC) matrix; note that this first materializes the full dense array. Alternatively, store the data with pandas' own sparse dtype, df.astype(pd.SparseDtype('float64', 0.0)), and call df.sparse.to_coo(), which requires every column to be sparse. Different sparse formats like CSR (Compressed Sparse Row), CSC, and COO (Coordinate list) can be chosen based on the structure of the data and the operations that will be performed.

The benefits of sparse storage include reduced memory usage when a DataFrame consists mostly of zeros (or, with pandas' sparse dtype, mostly of one repeated fill value such as NaN). Storing only the non-zero elements and their indices can significantly decrease the memory footprint. Furthermore, certain computations, especially in linear algebra, can be faster on sparse matrices because the algorithms can skip the zero elements.
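A sketch of both conversion routes on a mostly-zero frame generated for illustration (it assumes SciPy is installed). Both routes store only the 10 non-zero entries, and the sparse-dtype DataFrame uses a small fraction of the dense frame's memory.

```python
import numpy as np
import pandas as pd
from scipy import sparse

# 1000 x 50 frame of zeros with one non-zero value every 100 rows in column 0
df = pd.DataFrame(np.zeros((1000, 50)))
df.iloc[::100, 0] = 1.0

# Route 1: dense array -> SciPy CSR matrix
csr = sparse.csr_matrix(df.to_numpy())

# Route 2: pandas sparse dtype (fill value 0.0) -> SciPy COO matrix
sdf = df.astype(pd.SparseDtype("float64", 0.0))
coo = sdf.sparse.to_coo()

print(csr.nnz, coo.nnz)  # stored non-zero entries
print(df.memory_usage().sum(), sdf.memory_usage().sum())
```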

11. Describe how to perform a fuzzy merge in Pandas, where you match rows based on approximate string matching rather than exact equality.

Fuzzy merging in Pandas involves matching rows from two DataFrames based on approximate string matches in one or more columns, rather than exact equality. This is often necessary when dealing with real-world data where string fields may contain slight variations, typos, or inconsistencies.

The fuzzywuzzy library (since renamed thefuzz), along with Pandas, is commonly used to achieve this. First, calculate a similarity score between the strings in the merge columns using fuzzywuzzy.fuzz.ratio or related scorers such as partial_ratio or token_sort_ratio. Then filter the potential matches against a threshold score. Finally, merge the DataFrames on these fuzzy-matched indices, or build a new DataFrame containing the best match for each row. For example:

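```python
import pandas as pd
from fuzzywuzzy import fuzz  # maintained fork: from thefuzz import fuzz

def fuzzy_merge(df_left, df_right, left_on, right_on, threshold=90):
    """For each left row, attach the best-scoring right row with score >= threshold."""
    merged_rows = []
    for _, row_left in df_left.iterrows():
        best_match_index = None
        best_score = 0
        # Compare against every right row: O(n * m), fine for small tables
        for j, row_right in df_right.iterrows():
            score = fuzz.ratio(str(row_left[left_on]), str(row_right[right_on]))
            if score >= threshold and score > best_score:
                best_score = score
                best_match_index = j
        if best_match_index is not None:
            # Suffix the right-hand labels so overlapping column names don't collide
            right_row = df_right.loc[best_match_index].add_suffix('_right')
            merged_row = pd.concat([row_left, right_row])
            merged_row['match_score'] = best_score
            merged_rows.append(merged_row)
    return pd.DataFrame(merged_rows).reset_index(drop=True)
```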
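If adding a dependency is not an option, Python's standard-library difflib offers a comparable (though not identical) similarity measure. The sketch below swaps fuzzywuzzy for difflib.get_close_matches: it maps each left-hand name to its closest right-hand name above a cutoff, then performs an ordinary merge on that match. The company data, the helper name closest, and the 0.75 cutoff are illustrative assumptions.

```python
import difflib

import pandas as pd

left = pd.DataFrame({"name": ["Apple Inc.", "Microsoft Corp", "Alphabet"],
                     "ticker": ["AAPL", "MSFT", "GOOGL"]})
right = pd.DataFrame({"company": ["Apple Inc", "Microsoft Corporation", "Amazon"],
                      "hq": ["Cupertino", "Redmond", "Seattle"]})

def closest(name, candidates, cutoff=0.75):
    # Best candidate whose SequenceMatcher ratio is >= cutoff, or None
    matches = difflib.get_close_matches(name, candidates, n=1, cutoff=cutoff)
    return matches[0] if matches else None

# Extra keyword arguments to Series.apply are forwarded to the function
left["match"] = left["name"].apply(closest, candidates=right["company"].tolist())

# An ordinary left merge on the fuzzy key; unmatched rows get NaN
result = left.merge(right, left_on="match", right_on="company", how="left")
print(result[["name", "company", "hq"]])
```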

12. How do you use the Pandas `eval()` function to perform arithmetic operations on columns, and what are the advantages of using it over standard operators?

The pandas.eval() function (and the DataFrame.eval() method, which evaluates an expression against one DataFrame's columns) evaluates a string describing operations on Pandas DataFrames and Series. It's primarily used for arithmetic operations involving columns. For instance, df.eval('A + B * C') calculates 'A + B * C' for each row, where A, B, and C are column names in the DataFrame df. You can also assign the result to a new or existing column: df.eval('D = A + B * C', inplace=True).

Advantages over standard operators include:

- Performance: eval() can be faster on large DataFrames because it avoids creating temporary intermediate arrays, using NumExpr under the hood (if installed) to evaluate the whole expression in a single vectorized pass. On small DataFrames the parsing overhead usually makes it slower than plain operators; the pandas documentation suggests it pays off mainly above roughly 10,000 rows.
- Readability: Complex expressions written as a single string within eval() often mirror the mathematical expression more closely than chained Pandas operations.
- Memory usage: With standard operators, each step creates a temporary array (for example B * C, then A plus that result), increasing memory overhead; eval() avoids most of these intermediates.

Consider this example:

```python
import pandas as pd
import numpy as np

# Sample DataFrame
df = pd.DataFrame({'A': np.random.rand(1000), 'B': np.random.rand(1000), 'C': np.random.rand(1000)})

# Using eval()
df['D'] = df.eval('A + B * C')

# Using standard operators
df['E'] = df['A'] + df['B'] * df['C']

# D and E hold the same values. At only 1,000 rows eval() is unlikely to be faster;
# its speed and memory advantages show up on much larger DataFrames.
```
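A short sketch of the assignment form and of referencing a local Python variable with the @ prefix inside the expression; the column values and the scale variable are made up for illustration. Without inplace=True, an assignment inside eval() returns a new DataFrame and leaves df unchanged.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({"A": [1.0, 2.0, 3.0], "B": [4.0, 5.0, 6.0], "C": [0.5, 0.5, 2.0]})
scale = 10  # a local variable, referenced as @scale in the expression

# Assignment inside eval(): returns a copy of df with the new column D
out = df.eval("D = (A + B * C) * @scale")
print(out)

# Same computation with standard operators, for comparison
expected = (df["A"] + df["B"] * df["C"]) * scale
print(np.allclose(out["D"], expected))
```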

13. Explain how to read and write data to a SQL database using Pandas, including handling different data types and performing SQL queries.

Pandas can interact with SQL databases through SQLAlchemy (for database connections) together with the pandas.read_sql function and the DataFrame.to_sql method. To read data, first create an engine with sqlalchemy.create_engine, then pass a SQL query and the engine to pandas.read_sql to load the results into a Pandas DataFrame. Column dtypes are inferred from the returned data; use parse_dates to convert date columns explicitly, since some databases (SQLite, for example) return them as strings. Example:

```python
import pandas as pd
from sqlalchemy import create_engine

engine = create_engine('sqlite:///:memory:')  # Connect to a database (e.g., SQLite in memory)

df = pd.DataFrame({'col1': [1, 2], 'col2': ['a', 'b']})
df.to_sql('test_table', engine, if_exists='replace', index=False)  # Write DataFrame to SQL

df_read = pd.read_sql('SELECT * FROM test_table', engine)  # Read data from SQL
print(df_read)
```

To write data, call the DataFrame's to_sql method. Specify the table name, the engine, and how to handle an existing table: if_exists='replace' to overwrite, 'append' to add rows, or 'fail' (the default) to raise an error. Pandas maps its dtypes to SQL types automatically; for more control, pass the dtype argument with a dictionary mapping column names to SQLAlchemy types. For statements that don't return a DataFrame, open a connection and execute them with sqlalchemy.text(); engine.execute() was removed in SQLAlchemy 2.0.
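A sketch combining explicit SQL types, a parameterized query, date parsing, and a direct statement in SQLAlchemy 2.x style. The items table and its columns are hypothetical, and the example assumes pandas 2.x with SQLAlchemy installed.

```python
import pandas as pd
from sqlalchemy import create_engine, text
from sqlalchemy.types import DateTime, Integer, String

engine = create_engine("sqlite:///:memory:")
df = pd.DataFrame({
    "id": [1, 2, 3],
    "name": ["a", "b", "c"],
    "created": pd.to_datetime(["2024-01-01", "2024-01-02", "2024-01-03"]),
})

# Map columns explicitly to SQLAlchemy types instead of relying on inference
df.to_sql("items", engine, if_exists="replace", index=False,
          dtype={"id": Integer(), "name": String(10), "created": DateTime()})

# Bound parameter instead of string formatting; parse_dates restores datetime64
query = text("SELECT * FROM items WHERE id >= :min_id")
result = pd.read_sql(query, engine, params={"min_id": 2}, parse_dates=["created"])
print(result.dtypes)

# Execute a statement directly; engine.begin() commits on success
with engine.begin() as conn:
    conn.execute(text("DELETE FROM items WHERE id = 1"))

remaining = pd.read_sql("SELECT COUNT(*) AS n FROM items", engine)
```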

14. How can you create a custom aggregation function in Pandas to calculate a statistic that is not available in the built-in aggregation functions?

You can create a custom aggregation function in Pandas using the agg() method along with a user-defined function. This function should take a Series as input and return a single, aggregated value. For example, to calculate the range (max - min) of a column, you can define a function range_func(x): return x.max() - x.min() and then apply it using df['column_name'].agg(range_func).

Alternatively, you can use a lambda function for a more concise approach if the logic is simple. For instance: df['column_name'].agg(lambda x: x.max() - x.min()). The key is that the passed function reduces the Series to a single scalar value, representing the aggregated statistic. This enables flexible calculations beyond Pandas' built-in functions.
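The same idea extends to grouped data. A minimal sketch with made-up team/score columns: the custom reducer is applied to the whole DataFrame column and then per group, mixed with a built-in aggregation through named aggregation (the output column names score_range and score_mean are arbitrary).

```python
import pandas as pd

df = pd.DataFrame({"team": ["a", "a", "b", "b", "b"], "score": [3, 9, 4, 6, 5]})

def range_func(x):
    """Reduce a Series to one scalar: its spread (max - min)."""
    return x.max() - x.min()

# DataFrame.agg applies the function column by column
overall = df[["score"]].agg(range_func)

# Per group, combining the custom function with a built-in via named aggregation
summary = df.groupby("team").agg(
    score_range=("score", range_func),
    score_mean=("score", "mean"),
)
print(summary)
```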

15. Describe how to perform a time series resampling operation in Pandas, including different resampling frequencies and interpolation methods.

In Pandas, time series resampling is done using the resample() method on a DataFrame or Series with a DatetimeIndex. The core functionality involves specifying a new frequency (e.g., 'D' for daily, 'W' for weekly, 'M' for month-end, written 'ME' from pandas 2.2 on). For example, df.resample('W').mean() resamples the DataFrame df to weekly frequency and calculates the mean for each week. Other aggregation functions such as sum(), min(), max(), and count() can be applied after resample().

Missing values introduced during upsampling (increasing the frequency) can be filled or interpolated. Common methods include:

- ffill(): forward fill (propagates the last valid observation forward).
- bfill(): backward fill (uses the next valid observation to fill the gap).
- interpolate(): uses interpolation techniques such as linear (method='linear') or polynomial (method='polynomial', order=2). For instance, df.resample('h').interpolate() resamples to hourly frequency ('H' before pandas 2.2) and fills the new rows using linear interpolation.

A fill-forward example: df.resample('D').asfreq().ffill(). The older form .fillna(method='ffill') is deprecated in pandas 2.x.
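A small sketch of upsampling and downsampling on a made-up series observed every other day: asfreq() exposes the new empty slots, ffill() and interpolate() fill them, and a coarser resample aggregates them back.

```python
import pandas as pd

idx = pd.date_range("2024-01-01", periods=3, freq="2D")  # Jan 1, 3, 5
s = pd.Series([10.0, 20.0, 40.0], index=idx)

# Upsample to daily: asfreq() inserts NaN for the new dates
daily = s.resample("D").asfreq()
filled = daily.ffill()                   # carry the last value forward
interp = s.resample("D").interpolate()   # linear between known points

# Downsample: sum the daily values into 2-day bins
two_day_sum = interp.resample("2D").sum()
print(filled, interp, two_day_sum, sep="\n\n")
```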

16. How do you handle categorical data in Pandas, including encoding categorical variables and using them in machine learning models?

Pandas offers several ways to handle categorical data. To begin, you can convert a column to a categorical type using astype('category'). This is memory-efficient, especially for columns with many repeated values. For encoding, you can use techniques like:

- One-Hot Encoding: pd.get_dummies(df, columns=['column_name']) creates a new column for each category. This is suitable for nominal data.
- Label Encoding: df['column_name'].astype('category').cat.codes assigns a unique integer to each category. Scikit-learn's LabelEncoder does the same, though it is intended for target labels (OrdinalEncoder is its counterpart for features). Be cautious when applying label encoding to nominal data, as it might imply an ordinal relationship where none exists.
- Ordinal Encoding: If the categorical variable has a meaningful order, assign integers based on that order, for example with a dictionary mapping and the map() function.

For using categorical data in machine learning models, many algorithms require numerical input. Therefore, encoding is crucial. One-hot encoding is commonly used with linear models, while tree-based models can sometimes handle label-encoded features directly or benefit from ordinal encoding if the categories have a logical order. Consider the algorithm's requirements and the nature of your data when choosing an encoding method.
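The three encodings side by side on a made-up DataFrame; the size order S < M < L is an assumption about the data, which is exactly the information ordinal encoding needs you to supply.

```python
import pandas as pd

df = pd.DataFrame({"color": ["red", "blue", "red", "green"], "size": ["S", "L", "M", "S"]})

# One-hot encoding for a nominal column (integer 0/1 columns)
onehot = pd.get_dummies(df, columns=["color"], dtype=int)

# Label encoding via category codes; categories sort alphabetically: blue=0, green=1, red=2
df["color_code"] = df["color"].astype("category").cat.codes

# Ordinal encoding with an explicit, meaningful order
size_order = {"S": 0, "M": 1, "L": 2}
df["size_code"] = df["size"].map(size_order)
print(onehot, df, sep="\n\n")
```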

17. Explain how to use the Pandas `Styler` object to format and style DataFrames for presentation, including conditional formatting and custom CSS styles.

The Pandas Styler object allows you to visually customize DataFrames. You can access it through df.style. It supports conditional formatting with map (element-wise; called applymap before pandas 2.1) and apply (column-, row-, or table-wise). These methods take functions that return CSS styles based on data values.

To apply custom CSS, you can use set_properties or set_table_styles. set_properties applies the same CSS properties to every cell (or to a subset of cells), while set_table_styles lets you target parts of the table with CSS selectors. Example:

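```python
import pandas as pd

df = pd.DataFrame({'A': [1.5, -2.0], 'B': [-0.5, 3.0]})

def color_negative_red(val):
    # Return a CSS declaration for a single cell
    color = 'red' if val < 0 else 'black'
    return f'color: {color}'

# Styler.map() is element-wise (use .applymap() on pandas < 2.1)
s = df.style.map(color_negative_red).set_properties(**{'font-weight': 'bold'})
s  # in a notebook, the last expression renders as an HTML table
```

This code snippet colors negative values red and makes every cell bold. s is a Styler object: notebooks render it as HTML, and s.to_html() or s.to_latex() export it to other formats.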

18. How can you create a heatmap visualization of a Pandas DataFrame using Seaborn or Matplotlib, and how do you interpret the heatmap?

To create a heatmap visualization of a Pandas DataFrame, you can use Seaborn's heatmap() function or Matplotlib's imshow() function along with appropriate color mapping. With Seaborn, it's straightforward: sns.heatmap(dataframe.corr(), annot=True, cmap='viridis') where dataframe is your Pandas DataFrame, annot=True displays the correlation values in each cell, and cmap sets the color scheme. Matplotlib requires a bit more setup. You can use plt.imshow(dataframe.corr(), cmap='viridis') followed by setting ticks and labels for the axes.

Interpreting the heatmap involves reading the color of each cell against the color bar. Each cell represents the correlation (or any other metric) between two variables. With a sequential colormap such as viridis, bright cells mean high values and dark cells low values, so a bright cell suggests a strong positive correlation and a dark cell a strong negative one. A diverging colormap such as coolwarm with vmin=-1 and vmax=1 makes the sign easier to read, because zero sits at the neutral midpoint. annot=True prints the values so you don't have to rely on color alone. Values near zero indicate little to no linear correlation.
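The Matplotlib route needs the tick labels and annotations set by hand. A minimal sketch on synthetic data, where column w is deliberately built to correlate with x; the fixed [-1, 1] color range and the coolwarm map are presentation choices, not requirements.

```python
import matplotlib

matplotlib.use("Agg")  # off-screen backend so the sketch runs without a display
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)
df = pd.DataFrame(rng.normal(size=(200, 3)), columns=["x", "y", "z"])
df["w"] = 2 * df["x"] + rng.normal(scale=0.1, size=200)  # strongly tied to x

corr = df.corr()

fig, ax = plt.subplots()
# Fix the color range to [-1, 1] so zero maps to the colormap's neutral middle
im = ax.imshow(corr, cmap="coolwarm", vmin=-1, vmax=1)
ax.set_xticks(range(len(corr.columns)), labels=corr.columns)
ax.set_yticks(range(len(corr.index)), labels=corr.index)
# Write each coefficient into its cell (what annot=True does in Seaborn)
for i in range(len(corr.index)):
    for j in range(len(corr.columns)):
        ax.text(j, i, f"{corr.iat[i, j]:.2f}", ha="center", va="center")
fig.colorbar(im, ax=ax)
# fig.savefig("corr_heatmap.png") writes the figure to disk
```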

19. Describe how to perform a network analysis using Pandas and NetworkX, including creating a graph from a DataFrame and calculating network metrics.

To perform network analysis using Pandas and NetworkX, you typically start with data representing connections between nodes, often stored in a Pandas DataFrame. You can create a graph using networkx.from_pandas_edgelist(df, source='column_containing_source_node', target='column_containing_target_node', edge_attr='column_containing_edge_attributes' ). After the graph is created, you can calculate various network metrics like degree centrality (using networkx.degree_centrality(graph)), betweenness centrality (using networkx.betweenness_centrality(graph)), and shortest paths (using networkx.shortest_path(graph, source='node1', target='node2')).

For example:

```python
import pandas as pd
import networkx as nx

# Example DataFrame
data = {'source': ['A', 'A', 'B', 'C'], 'target': ['B', 'C', 'C', 'D'], 'weight': [1, 2, 3, 4]}
df = pd.DataFrame(data)

# Create graph from DataFrame
G = nx.from_pandas_edgelist(df, source='source', target='target', edge_attr='weight')

# Calculate degree centrality
degree_centrality = nx.degree_centrality(G)
print(f"Degree Centrality: {degree_centrality}")
```
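Continuing with the same edge list, a sketch of the other metrics mentioned above, collected back into a DataFrame. Here the edge weights are treated as costs for the shortest path (weight="weight"), while betweenness is computed unweighted; both are choices you would adapt to your data.

```python
import networkx as nx
import pandas as pd

df = pd.DataFrame({"source": ["A", "A", "B", "C"], "target": ["B", "C", "C", "D"],
                   "weight": [1, 2, 3, 4]})
G = nx.from_pandas_edgelist(df, source="source", target="target", edge_attr="weight")

betweenness = nx.betweenness_centrality(G)  # normalized by default
path = nx.shortest_path(G, source="A", target="D", weight="weight")
path_len = nx.shortest_path_length(G, source="A", target="D", weight="weight")

# One row per node, one column per metric
metrics = pd.DataFrame({"degree": nx.degree_centrality(G), "betweenness": betweenness})
print(path, path_len)
print(metrics)
```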

20. How do you use the Pandas `qcut()` function to discretize a continuous variable into quantiles, and how do you handle edge cases with duplicate values?

The Pandas qcut() function discretizes a continuous variable into buckets holding roughly equal numbers of observations, based on sample quantiles. It takes the Series or array-like data and the number of quantiles (q), or a list of quantile edges, as input. It returns a Categorical (or a Series of category dtype) whose values are the intervals, or integer bin codes when labels=False.

Handling duplicate values is important. When many values are tied, several quantile edges can coincide, and by default qcut() then raises a ValueError ("Bin edges must be unique"). The duplicates parameter controls this: 'raise' (the default) raises the error; 'drop' removes the repeated edges, which leaves fewer, unequally sized bins. For example:

```python
import pandas as pd

data = [1, 1, 1, 1, 2, 3]  # heavily tied values
q = 4  # desired number of quantiles

# With duplicates='raise' (the default), the repeated edges cause a ValueError
try:
    pd.qcut(data, q)
except ValueError as err:
    print(err)

# With duplicates='drop', repeated edges are removed, leaving fewer bins
print(pd.qcut(data, q, duplicates='drop'))
```
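Two follow-ups on heavily tied data: retbins=True shows which edges survived duplicates='drop', and ranking first (a common workaround, not a qcut option) breaks ties so every requested bin is populated. The data is illustrative; note that rank(method="first") assigns tied values to bins by their order of appearance, which may or may not be acceptable for your analysis.

```python
import pandas as pd

s = pd.Series([1, 1, 1, 1, 2, 3])

# duplicates='drop' keeps only the unique edges, so only 2 of the 4 bins survive
dropped, edges = pd.qcut(s, 4, duplicates="drop", retbins=True)
print(edges)
print(dropped.value_counts().sort_index())

# Workaround: rank first so tied values get distinct ranks, then cut into 4 bins
ranked = pd.qcut(s.rank(method="first"), 4, labels=False)
print(ranked.tolist())
```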

21. Explain how to perform a geographical analysis using Pandas and GeoPandas, including reading and writing geospatial data and performing spatial operations.

Pandas handles tabular data, while GeoPandas extends it to handle geospatial data. First, install them: pip install pandas geopandas. To read geospatial data (e.g., Shapefile, GeoJSON), use geopandas.read_file('path/to/file.shp'). This creates a GeoDataFrame, similar to a Pandas DataFrame but with a 'geometry' column containing spatial data (points, lines, polygons). Writing is similar: gdf.to_file('path/to/output.geojson', driver='GeoJSON').

Spatial operations include:

- Spatial joins: geopandas.sjoin(gdf1, gdf2, how='inner', predicate='intersects') combines data based on spatial relationships (GeoPandas versions before 0.10 named this argument op).
- Proximity analysis: Use gdf.distance(other_geometry) to find distances between geometries. Distances are returned in the units of the layer's coordinate reference system (CRS), so data in a geographic CRS such as EPSG:4326 (degrees) should first be reprojected to a suitable projected CRS with gdf.to_crs(...). Both layers must also share the same CRS for joins and distances to be meaningful.
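A sketch built from in-memory geometries rather than a file, so it runs without external data. The points, the square zone, and the use of EPSG:3857 (Web Mercator) as the metric CRS are assumptions for illustration; Web Mercator distorts distances away from the equator, so a local UTM zone or other equidistant projection is usually a better choice in practice.

```python
import geopandas as gpd
from shapely.geometry import Point, Polygon

# Two points and one square zone, all in lon/lat (EPSG:4326)
points = gpd.GeoDataFrame({"name": ["p1", "p2"]},
                          geometry=[Point(0.5, 0.5), Point(2, 2)], crs="EPSG:4326")
zones = gpd.GeoDataFrame({"zone": ["Z1"]},
                         geometry=[Polygon([(0, 0), (1, 0), (1, 1), (0, 1)])], crs="EPSG:4326")

# Spatial join: keep points that fall within a zone, attaching the zone's attributes
joined = gpd.sjoin(points, zones, how="inner", predicate="within")

# Reproject to a metric CRS before measuring distances (result in metres)
pts_m = points.to_crs("EPSG:3857")
dist_m = pts_m.geometry.iloc[0].distance(pts_m.geometry.iloc[1])
print(joined[["name", "zone"]])
print(round(dist_m))
```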

22. How can you create a dashboard using Pandas and Plotly or Bokeh, including interactive widgets and data updates?

To create a dashboard with Pandas, Plotly/Bokeh, and interactive widgets, you'd typically use a framework like Dash (for Plotly) or Bokeh server. You'd start by loading and processing your data with Pandas. Then, use Plotly or Bokeh to create initial plots. Next, integrate interactive widgets (dropdowns, sliders, etc.) using the chosen framework's components. These widgets would trigger callbacks that update the plots based on user selections.

For example, using Dash:

- Create a Pandas DataFrame.
- Create a Plotly figure from the DataFrame.
- Define the Dash layout with dcc.Graph (for the plot) and dcc.Dropdown (for the widget).
- Write a callback function that updates the figure property of the dcc.Graph based on the value property of the dcc.Dropdown. This involves filtering/transforming the Pandas DataFrame based on the dropdown selection and recreating the Plotly figure.

23. Describe how to perform a text analysis using Pandas and NLTK or SpaCy, including tokenization, stemming, and sentiment analysis.

Text analysis with Pandas and NLTK or spaCy involves several steps. First, load the text data into a Pandas DataFrame. Then, clean the text by removing punctuation and converting it to lowercase. Next, tokenize it with NLTK's word_tokenize or spaCy's tokenizer. For stemming, use NLTK's PorterStemmer or LancasterStemmer to reduce words to their root form (spaCy does not ship a stemmer; it provides lemmatization instead). For sentiment analysis, use NLTK's VADER (Valence Aware Dictionary and sEntiment Reasoner), or add TextBlob-based scoring to a spaCy pipeline through an extension such as spacytextblob. The sentiment scores can then be added as new columns in the Pandas DataFrame.

Example:

```python
import pandas as pd
import nltk
from nltk.sentiment.vader import SentimentIntensityAnalyzer

nltk.download('vader_lexicon')  # one-time download of the VADER lexicon

data = {'text': ['This is a great movie!', 'I hate this product.']}
df = pd.DataFrame(data)

sid = SentimentIntensityAnalyzer()
df['sentiment_scores'] = df['text'].apply(sid.polarity_scores)  # dict with neg/neu/pos/compound
df['compound'] = df['sentiment_scores'].apply(lambda s: s['compound'])  # overall score in [-1, 1]

print(df)
```
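The cleaning, tokenization, and stemming steps as DataFrame columns. This sketch swaps word_tokenize for NLTK's regex-based wordpunct_tokenize because the latter needs no downloaded tokenizer data; the sentences are made up.

```python
import pandas as pd
from nltk.stem import PorterStemmer
from nltk.tokenize import wordpunct_tokenize

df = pd.DataFrame({"text": ["The runners were running quickly!", "Cats chased the mice."]})
stemmer = PorterStemmer()

# Clean: lowercase and strip punctuation
df["clean"] = df["text"].str.lower().str.replace(r"[^\w\s]", "", regex=True)
# Tokenize each row into a list of words
df["tokens"] = df["clean"].apply(wordpunct_tokenize)
# Stem every token (Porter stems are not always dictionary words, e.g. "quickli")
df["stems"] = df["tokens"].apply(lambda toks: [stemmer.stem(t) for t in toks])
print(df[["tokens", "stems"]])
```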

24. How do you use the Pandas `Categorical` data type to represent ordinal or nominal data, and how does it differ from a standard object column?

Pandas Categorical data type is used to represent data that has a fixed number of possible values (categories). It efficiently stores strings (or other objects) by mapping them to integer codes, especially useful for columns with many repeated values.

For ordinal data, pass ordered=True (for example through pd.CategoricalDtype(categories, ordered=True)), telling Pandas the categories have a meaningful order. This enables comparisons like data['column'] > 'category_a', as well as min(), max(), and sorting by category order. For nominal data, ordered=False (the default) implies no inherent order. Categorical columns differ from standard object columns primarily in memory usage and supported operations. A categorical stores each distinct value once plus a small integer code per row, while an object column holds a separate Python object reference for every row. Categoricals therefore use much less memory when values repeat, and operations such as groupby and value_counts can be faster. When most values are unique, the savings disappear.
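A sketch contrasting an ordered categorical with the plain object column it came from; the size labels and their order are illustrative assumptions.

```python
import pandas as pd

sizes = pd.Series(["small", "large", "medium", "small"] * 1000)
size_type = pd.CategoricalDtype(["small", "medium", "large"], ordered=True)
as_cat = sizes.astype(size_type)

# Ordered categories compare and sort by category order, not alphabetically
bigger = as_cat[as_cat > "small"]
print(as_cat.sort_values().iloc[0], as_cat.max())

# Memory: small integer codes plus one copy of each label, vs. one object per row
obj_bytes = sizes.memory_usage(deep=True)
cat_bytes = as_cat.memory_usage(deep=True)
print(obj_bytes, cat_bytes)
```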

25. Explain how to perform a survival analysis using Pandas and Lifelines, including estimating survival curves and comparing different groups.

Survival analysis examines the time until an event occurs. Using Pandas and Lifelines in Python, you can perform this analysis effectively. First, load your data into a Pandas DataFrame, ensuring you have columns for 'time' (time to event or censoring) and 'event' (1 if the event occurred, 0 if censored). Use the KaplanMeierFitter from Lifelines to estimate the survival function. Fit the model with kmf.fit(durations = df['time'], event_observed = df['event']). To plot the survival curve, use kmf.plot_survival_function().

To compare different groups, such as treatment vs. control, create a separate Kaplan-Meier fit for each group: filter the DataFrame for each group, fit a KaplanMeierFitter to each, and plot their survival functions on the same axes to compare them visually. Statistically, you can compare the groups with the log-rank test from lifelines.statistics: logrank_test(durations_A, durations_B, event_observed_A, event_observed_B). This test assesses whether there is a statistically significant difference between the survival curves of the two groups. A p-value below the chosen significance level (commonly 0.05) suggests that the curves differ.

26. How can you create a recommendation system using Pandas and scikit-learn, including collaborative filtering and content-based filtering?

You can build a basic recommendation system with Pandas and scikit-learn by combining collaborative and content-based filtering. For collaborative filtering, use Pandas to create a user-item interaction matrix (e.g., user ratings for items). Scikit-learn's cosine_similarity (or pairwise_distances, which returns distances rather than similarities) can then compute user or item similarities from this matrix. Recommendations are generated by finding similar users or items. Here's an example:

```python
import pandas as pd
from sklearn.metrics.pairwise import cosine_similarity

# user-item matrix (users x items)
ratings = pd.DataFrame({'user_id': [1, 1, 2, 2, 3, 3], 'item_id': [1, 2, 1, 3, 2, 3], 'rating': [5, 3, 4, 2, 1, 5]})
user_item_matrix = ratings.pivot_table(index='user_id', columns='item_id', values='rating').fillna(0)

user_similarity = cosine_similarity(user_item_matrix)
user_similarity_df = pd.DataFrame(user_similarity, index=user_item_matrix.index, columns=user_item_matrix.index)

# To get item similarity
item_similarity = cosine_similarity(user_item_matrix.T)
item_similarity_df = pd.DataFrame(item_similarity, index=user_item_matrix.columns, columns=user_item_matrix.columns)
```

For content-based filtering, Pandas helps in managing item features (e.g., genre, description). Scikit-learn's TfidfVectorizer converts text features into numerical representations. cosine_similarity then determines item similarities based on feature vectors. Recommendations are items similar to those the user has liked. A hybrid system combines both approaches. You can also use scikit-learn's regression models to predict ratings for items based on user and item features, offering another approach for recommendations.
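A minimal content-based sketch: TF-IDF vectors of made-up item descriptions, a cosine-similarity matrix held in a DataFrame, and a hypothetical helper similar_items that ranks the other items by similarity.

```python
import pandas as pd
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.metrics.pairwise import cosine_similarity

items = pd.DataFrame({
    "item_id": [1, 2, 3, 4],
    "description": ["space adventure sci-fi", "romantic comedy",
                    "sci-fi space thriller", "comedy drama"],
}).set_index("item_id")

# Text features -> TF-IDF matrix -> item-item cosine similarity
features = TfidfVectorizer().fit_transform(items["description"])
item_sim = pd.DataFrame(cosine_similarity(features), index=items.index, columns=items.index)

def similar_items(item_id, n=2):
    # Rank the other items by similarity to item_id, excluding the item itself
    return item_sim[item_id].drop(item_id).sort_values(ascending=False).head(n)

print(similar_items(1))
```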

27. Describe how to perform a time series forecasting using Pandas and Prophet or ARIMA, including model fitting and evaluation.

Time series forecasting with Pandas and either Prophet or ARIMA involves data preparation, model fitting, and evaluation. First, load your time series data into a Pandas DataFrame with a datetime column. For ARIMA, set that column as a DatetimeIndex (ideally with a regular frequency). For Prophet, keep it as a regular column: Prophet expects a DataFrame with two columns named 'ds' (datetime) and 'y' (the time series value). ARIMA also assumes a stationary series, which you can achieve through differencing (the d order) or transformations such as logs. Then, instantiate and fit the model. For Prophet: model = Prophet(); model.fit(df). For ARIMA (from statsmodels.tsa.arima.model import ARIMA, after determining the p, d, q orders): model = ARIMA(df['value'], order=(p, d, q)); model_fit = model.fit().

Next, generate forecasts. With Prophet, create a future DataFrame using model.make_future_dataframe(periods=n) and predict using forecast = model.predict(future). For ARIMA, use model_fit.forecast(steps=n). Finally, evaluate the model. For both, calculate error metrics like Mean Absolute Error (MAE), Mean Squared Error (MSE), or Root Mean Squared Error (RMSE) by comparing the predicted values with the actual values from a held-out validation set. You can also visualize the forecast against the actual data to assess performance. For example, from sklearn.metrics import mean_squared_error; mse = mean_squared_error(actual, predicted).

28. How do you use the Pandas `Index` object to optimize data access and filtering, and how does it differ from a standard column?

The Pandas Index object is crucial for optimizing data access because it allows fast lookups by label. Unlike a standard column, the Index is designed for efficient searching and retrieval; Pandas uses it to align data during operations and to select subsets quickly. Filtering through the Index is typically much faster than filtering on a regular column, especially for large datasets, because label lookups go through a hash-table-based engine (and a sorted, monotonic Index also supports binary search) instead of comparing every row.

Key differences include:

- Uniqueness & Immutability: Index values are often (but not always) unique, and an Index is immutable, which keeps lookups reliable.
- Data Alignment: The Index is used for automatic data alignment during operations (e.g., arithmetic between Series, or DataFrame joins).
- Fast Lookups: Retrieval by label is typically much faster than scanning a column. For example, df.loc[index_label] is usually much faster than df[df['column'] == value] when the label is in the Index, because the boolean mask has to compare every row.
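A small sketch of the same lookup done both ways, plus label-based alignment; the user_id data is made up.

```python
import pandas as pd

df = pd.DataFrame({"user_id": [103, 101, 102], "score": [7, 9, 8]})

# Boolean-mask filtering compares every value in the column
by_mask = df[df["user_id"] == 102]

# Promote the column to the index, sort it, then look up by label
indexed = df.set_index("user_id").sort_index()
print(indexed.index.is_unique, indexed.index.is_monotonic_increasing)
by_label = indexed.loc[102]

# Alignment: rows are matched by label, regardless of their order
bonus = pd.Series({103: 30, 101: 10, 102: 20})
indexed["total"] = indexed["score"] + bonus
print(indexed)
```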

29. Explain how to perform an anomaly detection using Pandas and isolation forest or one-class SVM, including model training and threshold selection.

Anomaly detection using Pandas and Isolation Forest (or One-Class SVM) involves several steps. First, load your data into a Pandas DataFrame. Then, preprocess it by scaling numerical features with StandardScaler or MinMaxScaler from sklearn.preprocessing. Next, train your anomaly detection model, e.g., IsolationForest from sklearn.ensemble or OneClassSVM from sklearn.svm, on the preprocessed data. For Isolation Forest: model = IsolationForest(contamination=0.05); model.fit(data). For One-Class SVM: model = OneClassSVM(nu=0.05); model.fit(data). The contamination parameter (Isolation Forest) sets the expected proportion of outliers in the dataset; nu (One-Class SVM) plays a similar role, acting as an upper bound on the fraction of training points treated as outliers.

To set the anomaly threshold yourself, compute anomaly scores for your training data with decision_function(), which both models provide; lower values indicate more anomalous points. Then decide on a threshold that separates normal and anomalous data points. A common approach is to use a percentile of the scores, for example the 5th percentile, classifying any data point with a score below it as an anomaly: scores = model.decision_function(data); threshold = np.percentile(scores, 5). Note that predict() already applies a cut-off derived from contamination (or nu), returning -1 for anomalies and 1 for normal points; a custom percentile is useful when you want to tune that cut-off. Finally, use the trained model and the selected threshold to classify new data points as normal or anomalous.
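A sketch of both thresholding options with Isolation Forest on synthetic data: 200 normal points plus three far-away outliers planted by hand. The 5% contamination and 5th-percentile cut-off mirror the values above and are not tuned.

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import IsolationForest
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)
normal = rng.normal(0, 1, size=(200, 2))
outliers = np.array([[8.0, 8.0], [-9.0, 7.5], [10.0, -8.0]])
df = pd.DataFrame(np.vstack([normal, outliers]), columns=["x1", "x2"])

X = StandardScaler().fit_transform(df)
model = IsolationForest(contamination=0.05, random_state=0).fit(X)

# Option 1: the cut-off implied by contamination (-1 = anomaly, 1 = normal)
df["pred"] = model.predict(X)

# Option 2: choose the cut-off yourself from the score distribution
scores = model.decision_function(X)  # lower = more anomalous
threshold = np.percentile(scores, 5)
df["is_anomaly"] = scores < threshold
print(df.tail())
```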

30. How can you create a data pipeline using Pandas and Dask or Spark, including data loading, transformation, and storage?

A data pipeline using Pandas with Dask or Spark involves several stages: loading, transforming, and storing data. Pandas is suitable for smaller datasets and exploratory analysis. For larger datasets, Dask or Spark can be used for parallel processing. Here's a conceptual outline:

- Data Loading: Load data from various sources (CSV, databases, etc.) using Pandas (e.g., pd.read_csv()). For larger datasets, use Dask's dd.read_csv() or Spark's spark.read.csv(); both can read data in parallel.
- Data Transformation:
  - Pandas: Use Pandas DataFrames for cleaning, filtering, and feature engineering (e.g., df.dropna(), df['new_column'] = ...).
  - Dask: Use Dask DataFrames for similar operations on larger-than-memory datasets; Dask parallelizes them and evaluates lazily. Create them with dd.read_csv() or dd.read_parquet(), or with dd.from_pandas() when the data already fits in memory.
  - Spark: Use Spark DataFrames and SQL-like transformations (e.g., df.select(), df.filter(), df.withColumn()). Spark uses lazy evaluation and optimized query execution.
- Data Storage: Store the transformed data in a target location (e.g., CSV, Parquet, database). Use df.to_csv() or df.to_parquet() for Pandas, df.to_parquet() (or dd.to_parquet()) for Dask, and df.write.parquet() for Spark. Parquet is often a good choice for large datasets due to its columnar storage format.

For example, using Dask:

```python
import dask.dataframe as dd

# Read the CSV lazily, in partitions, rather than loading it whole with pandas.
# (If the data is already a pandas DataFrame, use dd.from_pandas(df_pandas, npartitions=4).)
df_dask = dd.read_csv('large_data.csv')

# Transform the data (example: filtering); this only builds a task graph
df_transformed = df_dask[df_dask['column_name'] > 10]

# Save the transformed data; writing triggers the computation and
# produces a directory with one Parquet file per partition
df_transformed.to_parquet('transformed_data.parquet')
```

Remember to choose Dask or Spark depending on your scale requirements and infrastructure. Dask is often easier to integrate with existing Pandas workflows, while Spark is designed for large-scale distributed computing.

Advanced Pandas interview questions

1. How can you optimize Pandas code for speed and memory usage when dealing with large datasets?

When optimizing Pandas code for large datasets, consider these strategies:

- Use appropriate dtypes: Employ smaller numeric types (e.g., int16 instead of int64) and the category dtype for columns with few unique values: df['column'] = df['column'].astype('category').
- Chunking: Read data in smaller chunks using pd.read_csv(..., chunksize=...) to process it iteratively.
- Vectorization: Use Pandas' built-in vectorized operations instead of looping through rows. df['new_column'] = df['column1'] + df['column2'] is much faster than iterating.
- Avoid unnecessary copies and chained indexing: Chained indexing such as df[df['a'] > 0]['b'] = value may operate on a temporary copy, so the assignment can be silently lost; use .loc for direct assignment instead: df.loc[row_indexer, col_indexer] = value. Note that inplace=True often does not save memory, because many operations still create a copy internally.
- Sparse data structures: When data contains mostly zeros, consider sparse data structures to reduce memory consumption.
- Optimized file formats: Use file formats that are more efficient than CSV, such as Parquet or Feather.
- Numba/Cython: For computationally intensive operations that can't be vectorized, use Numba or Cython to compile Python code to machine code for significant speed improvements.
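A sketch of the first two strategies on generated data: measuring memory before and after downcasting and categorizing, and summing a CSV chunk by chunk. The column names and sizes are arbitrary, and an in-memory StringIO stands in for a large file.

```python
import io

import numpy as np
import pandas as pd

n = 100_000
df = pd.DataFrame({
    "id": np.arange(n, dtype="int64"),
    "city": np.random.default_rng(0).choice(["Paris", "Lyon", "Nice"], size=n),
})
before = df.memory_usage(deep=True).sum()

# Downcast integers to the smallest type that fits, and categorize repeated strings
df["id"] = pd.to_numeric(df["id"], downcast="integer")  # max 99,999 fits in int32
df["city"] = df["city"].astype("category")
after = df.memory_usage(deep=True).sum()
print(before, after)

# Chunked reading: aggregate without holding the whole file in memory at once
csv = io.StringIO("value\n" + "\n".join(str(i) for i in range(1000)))
total = sum(chunk["value"].sum() for chunk in pd.read_csv(csv, chunksize=250))
```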

2. Explain the difference between `.loc` and `.iloc` in Pandas, and when would you use each?

.loc and .iloc are both used for selecting data in Pandas DataFrames, but they differ in how they reference the data.

.loc uses label-based indexing, meaning you select data based on the row and column labels (names). .iloc uses integer-based indexing, meaning you select data based on the integer positions of the rows and columns. For example, df.loc['row_label', 'column_label'] selects the element at the specified label, while df.iloc[row_position, column_position] selects the element at the specified integer position. Use .loc when you know the labels and want to select based on them. Use .iloc when you want to select by numerical index regardless of labels, which is useful for iterating through data without regard to row/column names.
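A quick side-by-side on a small made-up DataFrame. Note one practical difference: a .loc label slice includes its end label, while an .iloc position slice excludes its end position, as with Python lists.

```python
import pandas as pd

df = pd.DataFrame({"price": [10, 20, 30, 40]}, index=["a", "b", "c", "d"])

by_label = df.loc["b", "price"]       # row label 'b', column label 'price'
by_pos = df.iloc[1, 0]                # second row, first column
label_slice = df.loc["a":"c"]         # end label included -> a, b, c
pos_slice = df.iloc[0:2]              # end position excluded -> a, b
filtered = df.loc[df["price"] > 15]   # boolean masks work with .loc
```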

3. Describe how to handle missing data in Pandas, including imputation techniques.

Handling missing data in Pandas usually means identifying missing values (NaN, or None/NaT) and then either removing or imputing them. df.isnull() and df.notnull() (aliases of isna() and notna()) help identify missing values. df.dropna() removes rows or columns with missing data: the axis parameter controls whether rows (axis=0) or columns (axis=1) are dropped; how='any' (the default) drops a row/column if any value is missing, while how='all' drops it only if all values are missing; and thresh sets the minimum number of non-missing values required to keep a row/column. For imputation, df.fillna() replaces missing values. Common imputation techniques include filling with a constant value, or with the mean, median, or mode of the column. Example: df['column_name'] = df['column_name'].fillna(df['column_name'].mean()) replaces NaN values in column_name with the column's mean. Assigning the result back is preferred over calling fillna(..., inplace=True) on a selected column, which is chained assignment and may not modify df in recent pandas versions.
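A sketch of detection, dropping with thresh, and per-column imputation on a made-up DataFrame. The choice of median for age, mode for city, and interpolation for score is illustrative; the right strategy depends on the column.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({
    "age": [25, np.nan, 35, 40],
    "city": ["Paris", None, "Paris", "Lyon"],
    "score": [np.nan, np.nan, 3.0, 4.0],
})

print(df.isna().sum())       # missing values per column
kept = df.dropna(thresh=2)   # keep rows with at least 2 non-missing values

filled = df.copy()
filled["age"] = filled["age"].fillna(filled["age"].median())
filled["city"] = filled["city"].fillna(filled["city"].mode()[0])
# Linear interpolation cannot fill leading gaps, so back-fill those afterwards
filled["score"] = filled["score"].interpolate().bfill()
print(filled)
```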

4. How would you perform a multi-index sort in Pandas and why might you use it?

To perform a multi-index sort in Pandas, you would use the sort_index() method on a DataFrame or Series that has a MultiIndex. You can specify the level(s) you want to sort by using the level parameter, which accepts a level name or number (or a list of them). The ascending parameter controls the sort order for each level; you can provide a boolean or a list of booleans corresponding to each level you are sorting.

You might use a multi-index sort when your data is hierarchically structured. For example, you might have sales data indexed by region and then by product category. Sorting by region and then by product category allows you to easily analyze sales performance within each region and compare product sales across different regions. It facilitates tasks like grouping, aggregation, and creating meaningful visualizations.
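A sketch with the region/product-category example, using made-up revenue figures. A sorted MultiIndex also makes label selection and slicing on the outer level straightforward (pandas generally expects a lexsorted index for MultiIndex slicing).

```python
import pandas as pd

sales = pd.DataFrame({
    "region": ["West", "East", "West", "East"],
    "category": ["Toys", "Books", "Books", "Toys"],
    "revenue": [50, 30, 70, 20],
}).set_index(["region", "category"])

# Sort by region, then category, both ascending
by_both = sales.sort_index()

# Region ascending, category descending within each region
mixed = sales.sort_index(level=["region", "category"], ascending=[True, False])

print(by_both.loc["East"])  # select everything under one outer label
```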

5. Explain how to use `pd.Grouper` for custom time-based aggregation.

pd.Grouper enables flexible time-based grouping in pandas, particularly useful when resampling isn't sufficient. Instead of relying on a fixed frequency like 'D' (daily), pd.Grouper allows you to define custom grouping intervals or base the groups on specific columns. You typically use it within groupby() to define how rows should be aggregated.

For example, you can group data into 5-day bins using pd.Grouper(key='date_column', freq='5D'), or into weekly bins anchored on Wednesday (each bin ends on a Wednesday) using pd.Grouper(key='date_column', freq='W-WED'). The key argument names the column to group by (omit it to use the index, or use level for a MultiIndex level), and freq sets the grouping frequency. Without freq, pd.Grouper(key='category_column') groups by the unique values of category_column, though in practice you would usually just pass the column name and combine it with a time-based Grouper in the same groupby() call. You would then follow this with an aggregation function like sum(), mean(), etc.
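A sketch of both uses on ten days of made-up sales data starting Monday, 2024-01-01: a time Grouper combined with a plain column key, and weekly bins that end on Wednesday (labelled by that Wednesday).

```python
import pandas as pd

df = pd.DataFrame({
    "date": pd.date_range("2024-01-01", periods=10, freq="D"),  # Mon Jan 1 .. Wed Jan 10
    "store": ["A", "B"] * 5,
    "sales": range(1, 11),
})

# 5-day bins per store: a plain column key plus a time-based Grouper
per_store = df.groupby(["store", pd.Grouper(key="date", freq="5D")])["sales"].sum()

# Weekly bins anchored on Wednesday: (Dec 27, Jan 3] and (Jan 3, Jan 10]
weekly = df.groupby(pd.Grouper(key="date", freq="W-WED"))["sales"].sum()
print(per_store, weekly, sep="\n\n")
```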

6. How do you efficiently combine multiple Pandas DataFrames with different structures?

Efficiently combining Pandas DataFrames with different structures often involves understanding the specific requirements and choosing the appropriate method.

For simple concatenation, pd.concat() is a good starting point. It can handle DataFrames with different columns and index structures using the join and axis parameters. To handle joins based on common columns, the pd.merge() (or df.merge()) function is helpful. Key parameters are how (specifying the type of join - 'inner', 'outer', 'left', 'right'), on (specifying the column(s) to join on), left_on, and right_on (when the join columns have different names). When the columns do not match exactly, consider renaming using df.rename() before merging. Combining pd.concat() and pd.merge() can solve complex scenarios.
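A sketch of both tools on small made-up frames: concat with join='outer' versus join='inner' when columns differ, then merge with left_on/right_on and indicator=True to show where each row came from.

```python
import pandas as pd

q1 = pd.DataFrame({"id": [1, 2], "sales": [100, 200]})
q2 = pd.DataFrame({"id": [3], "sales": [300], "region": ["EU"]})
customers = pd.DataFrame({"customer_id": [1, 3, 4], "name": ["Ann", "Cy", "Di"]})

# Stack rows: 'outer' keeps all columns (NaN where absent), 'inner' only shared ones
stacked = pd.concat([q1, q2], join="outer", ignore_index=True)
shared = pd.concat([q1, q2], join="inner", ignore_index=True)

# Join on differently named key columns; _merge records each row's origin
merged = stacked.merge(customers, left_on="id", right_on="customer_id",
                       how="left", indicator=True)
print(merged)
```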

7. Explain how to apply a custom function to a Pandas DataFrame that depends on multiple columns using `apply`.

To apply a custom function to a Pandas DataFrame that depends on multiple columns using apply, pass axis=1 to the apply method. This applies the function row-wise, so each call receives one row as a Series and can read several columns from it. Inside the custom function, access the values of different columns by label, e.g. row['column_name'].

For example:

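```python
import pandas as pd

df = pd.DataFrame({'column_name_1': [1, 2, 3], 'column_name_2': [10, 20, 30]})

def my_custom_function(row):
    column1 = row['column_name_1']
    column2 = row['column_name_2']
    # Your logic here using column1 and column2
    return column1 + column2

df['new_column'] = df.apply(my_custom_function, axis=1)
```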

In this example, my_custom_function takes a row as input, accesses 'column_name_1' and 'column_name_2', performs some operation, and returns a value which is then stored in the 'new_column'.
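Row-wise apply calls a Python function once per row, so on large frames a vectorized expression is usually much faster when one exists. A sketch with made-up qty/price columns comparing a row-wise label with an np.where equivalent:

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({"qty": [2, 5, 1], "price": [10.0, 3.0, 50.0]})

def order_label(row):
    # Uses two columns of the same row
    return "large" if row["qty"] * row["price"] >= 20 else "small"

df["label_apply"] = df.apply(order_label, axis=1)

# Vectorized equivalent: one array operation instead of a Python call per row
df["label_vec"] = np.where(df["qty"] * df["price"] >= 20, "large", "small")
print(df)
```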

8. Describe how to perform a rolling window calculation on a Pandas Series or DataFrame.

To perform a rolling window calculation on a Pandas Series or DataFrame, you use the .rolling() method. This method creates a Rolling object, which you can then use to apply various aggregation functions.

For example:

```python
import pandas as pd

# Example Series
s = pd.Series([1, 2, 3, 4, 5])

# Rolling window of size 3, calculating the mean
rolling_mean = s.rolling(window=3).mean()
print(rolling_mean)
```

Key parameters include window (the size of the rolling window), min_periods (the minimum number of observations required to have a value), and center (whether the window is centered). Common aggregation functions used after .rolling() are .mean(), .sum(), .std(), and .apply() for custom functions.
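To show how min_periods, center, and a custom function change the result, here is a short sketch on the same Series. The max-minus-min "spread" function is just an illustrative choice.

```python
import pandas as pd

s = pd.Series([1, 2, 3, 4, 5])

# min_periods=1: emit a value as soon as one observation is in the window
partial = s.rolling(window=3, min_periods=1).mean()

# center=True: each result is labeled at the middle of its window
centered = s.rolling(window=3, center=True).sum()

# Custom function via apply; raw=True passes a NumPy array (faster than a Series)
spread = s.rolling(window=3).apply(lambda w: w.max() - w.min(), raw=True)

print(partial, centered, spread, sep='\n\n')
```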

9. How can you create pivot tables and cross-tabulations using Pandas and what are their differences?

Pandas offers pivot_table() and crosstab() for creating pivot tables and cross-tabulations. pivot_table() is a general-purpose function that aggregates data by a specified index, columns, and values. It uses an aggregation function (aggfunc), which defaults to 'mean'. It works with numerical and categorical data, and missing values can be managed with parameters such as fill_value and dropna.

crosstab() is specifically designed for computing a frequency table (cross-tabulation) between two or more categorical variables. It shows how often each combination of categories occurs. It takes array-like inputs (such as Series) rather than a DataFrame plus column names, and it counts frequencies by default. It aggregates something else only when you pass both values and aggfunc. pivot_table(), by contrast, always aggregates a values column and uses the mean unless you specify aggfunc. For example:

```python
import pandas as pd

data = {'A': ['foo', 'foo', 'bar', 'bar', 'foo', 'bar'],
        'B': ['one', 'one', 'two', 'one', 'two', 'two'],
        'C': [1, 2, 3, 4, 5, 6]}

df = pd.DataFrame(data)

pivot_table = pd.pivot_table(df, values='C', index='A', columns='B', aggfunc='sum')

crosstab = pd.crosstab(df['A'], df['B'])

print(pivot_table)  # sums of C: bar -> one=4, two=9; foo -> one=3, two=5
print(crosstab)     # counts:    bar -> one=1, two=2; foo -> one=2, two=1
```
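The two functions overlap more than the defaults suggest. The sketch below uses the same data to show three things:

- crosstab can aggregate a values array when given an aggfunc.
- normalize turns counts into proportions.
- margins adds totals to a pivot table.

It is a sketch of these parameters, not an exhaustive comparison.

```python
import pandas as pd

data = {'A': ['foo', 'foo', 'bar', 'bar', 'foo', 'bar'],
        'B': ['one', 'one', 'two', 'one', 'two', 'two'],
        'C': [1, 2, 3, 4, 5, 6]}
df = pd.DataFrame(data)

# crosstab aggregates a values array only when both values and aggfunc are given
ct_sum = pd.crosstab(df['A'], df['B'], values=df['C'], aggfunc='sum')

# normalize='index' converts each row's counts into proportions
ct_share = pd.crosstab(df['A'], df['B'], normalize='index')

# pivot_table without aggfunc uses the mean; margins=True adds an 'All' row and column
pt_mean = pd.pivot_table(df, values='C', index='A', columns='B', margins=True)

print(ct_sum, ct_share, pt_mean, sep='\n\n')
```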

10. Explain how to use Pandas with scikit-learn pipelines for data preprocessing and modeling.

Pandas DataFrames can be passed directly to scikit-learn pipelines. scikit-learn's transformers and estimators work on NumPy arrays internally, but they accept DataFrames as input, and ColumnTransformer lets you select columns by name. This lets you keep Pandas for loading and cleaning the data, then apply different preprocessing steps to different columns of your DataFrame and pass the result to a scikit-learn model.

Here's a basic example:

```python
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.preprocessing import StandardScaler, OneHotEncoder
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import Pipeline

# Example DataFrame
data = {'numerical': [1, 2, 3, 4, 5], 'categorical': ['A', 'B', 'A', 'C', 'B'], 'target': [0, 1, 0, 1, 0]}
df = pd.DataFrame(data)

# Define preprocessing steps for numerical and categorical features
numerical_features = ['numerical']
numerical_transformer = Pipeline(steps=[('scaler', StandardScaler())])

categorical_features = ['categorical']
categorical_transformer = Pipeline(steps=[('onehot', OneHotEncoder(handle_unknown='ignore'))])

# Create a ColumnTransformer that selects columns by name
preprocessor = ColumnTransformer(transformers=[
    ('num', numerical_transformer, numerical_features),
    ('cat', categorical_transformer, categorical_features),
])

# Create the pipeline
model = Pipeline(steps=[('preprocessor', preprocessor), ('classifier', LogisticRegression())])

# Fit the model
X = df.drop('target', axis=1)
y = df['target']
model.fit(X, y)

# Make predictions
predictions = model.predict(X)
print(predictions)
```

11. How would you convert a Pandas DataFrame to a sparse matrix format and when is this useful?

You can convert a Pandas DataFrame to a sparse matrix format using the scipy.sparse library in conjunction with DataFrame methods. The common approach involves first converting the DataFrame to a NumPy array and then using a sparse matrix constructor like csc_matrix (Compressed Sparse Column) or csr_matrix (Compressed Sparse Row). For example:

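```python
import pandas as pd
import scipy.sparse as sparse

data = {'col1': [1, 0, 0, 2, 0], 'col2': [0, 0, 3, 0, 0], 'col3': [0, 4, 0, 0, 5]}
df = pd.DataFrame(data)

# Dense NumPy array -> Compressed Sparse Column matrix (only non-zeros are stored)
sparse_matrix = sparse.csc_matrix(df.to_numpy())
```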

This conversion is particularly useful when a DataFrame consists mostly of zeros. Sparse matrices store only the non-zero elements, which can significantly reduce memory usage and computational cost for operations like matrix multiplication or solving linear systems. Note that SciPy stores NaN as an explicit (non-zero) entry. If missing values are meant to count as empty, fill them first (for example with fillna(0)). Sparse data is common in scenarios like recommendation systems (user-item interactions), text analysis (term-document matrices), and network analysis, where most entries are zero.
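pandas also has its own sparse dtype, which can replace the manual NumPy step. The sketch below uses the DataFrame.sparse accessor available in recent pandas versions. Note that to_coo requires every column to be sparse, which the astype call takes care of.

```python
import pandas as pd

df = pd.DataFrame({'col1': [1, 0, 0, 2, 0], 'col2': [0, 0, 3, 0, 0], 'col3': [0, 4, 0, 0, 5]})

# Store each column sparsely, treating 0 as the "empty" fill value
sparse_df = df.astype(pd.SparseDtype('int64', 0))
print(sparse_df.sparse.density)  # share of values that are actually stored

# Convert to a SciPy COO matrix, then to CSR for fast row slicing and products
csr = sparse_df.sparse.to_coo().tocsr()

# Round trip: wrap a SciPy sparse matrix back into a sparse DataFrame
restored = pd.DataFrame.sparse.from_spmatrix(csr, columns=df.columns)
print(restored.sparse.to_dense())
```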

12. Describe how to write a Pandas DataFrame to a database using SQLAlchemy and handle potential issues.

To write a Pandas DataFrame to a database using SQLAlchemy, you can use the to_sql() method provided by Pandas. This method requires a SQLAlchemy engine object to establish the connection. For example:

```python
import pandas as pd
from sqlalchemy import create_engine

# Create a SQLAlchemy engine (replace the placeholder URL with your own)
engine = create_engine('dialect+driver://user:password@host:port/database')

# Example DataFrame
data = {'col1': [1, 2], 'col2': [3, 4]}
df = pd.DataFrame(data)

# Write the DataFrame to the database
df.to_sql('table_name', engine, if_exists='replace', index=False)
```

Potential issues and solutions include:

- Table Already Exists: Use the if_exists parameter ('fail', 'replace', or 'append') to control what happens when the table already exists. 'fail' is the default and raises a ValueError.
- Data Type Mismatches: Ensure that the DataFrame's dtypes align with the database table schema. Cast columns with .astype() before writing, or pass the dtype argument of to_sql() to set SQL column types explicitly.
- SQL Injection: to_sql() sends row values as bound parameters through SQLAlchemy, so values are never spliced into the SQL text. Still, avoid building table or column names from untrusted input.
- Large DataFrames: For very large DataFrames, write in batches with the chunksize parameter of to_sql() to avoid memory issues. Using method='multi' (several rows per INSERT statement) can improve performance on some databases, but check the database's limit on parameters per statement.
- Connection Errors: Handle potential database connection errors with try...except blocks, for example by catching sqlalchemy.exc.SQLAlchemyError.
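The sketch below combines several of these safeguards. It assumes SQLAlchemy is installed and uses an in-memory SQLite database so it runs without a server. The users table and its columns are made up for the example.

```python
import pandas as pd
from sqlalchemy import create_engine
from sqlalchemy.exc import SQLAlchemyError
from sqlalchemy.types import Integer, String

# In-memory SQLite keeps the sketch self-contained; swap in your own URL
engine = create_engine('sqlite://')

df = pd.DataFrame({'id': range(1, 1001),
                   'name': [f'user_{i}' for i in range(1, 1001)]})

try:
    # Explicit SQL types, batches of 200 rows, several rows per INSERT
    df.to_sql(
        'users', engine, if_exists='replace', index=False,
        chunksize=200, method='multi',
        dtype={'id': Integer(), 'name': String(50)},
    )
except SQLAlchemyError as exc:
    print(f'Database error: {exc}')
    raise

# if_exists='fail' (the default) refuses to touch an existing table
try:
    df.to_sql('users', engine, if_exists='fail', index=False)
except ValueError as exc:
    print(f'Skipped write: {exc}')

row_count = pd.read_sql('SELECT COUNT(*) AS n FROM users', engine)['n'].iloc[0]
print(row_count)
```

With chunksize=200 and two columns, each multi-row INSERT binds 400 parameters. This stays under SQLite's historical limit of 999.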

13. How can you use Pandas to read and process data from different file formats (e.g., JSON, CSV, Excel) with custom parsing?

Pandas offers several functions to read data from various file formats, including read_csv, read_excel, and read_json. For custom parsing, you can utilize parameters within these functions or perform post-processing.

- CSV: Use read_csv with arguments like sep (or its alias delimiter), header, names, dtype, parse_dates, and date_format. date_format was added in pandas 2.0; older versions used a custom date_parser, which is now deprecated. For example: `df = pd.read_csv('data.csv', delimiter=';', header=0, dtype={'col1': str, 'col2': int})`
- Excel: Use read_excel and specify sheet_name, header, names, and dtype. You can also use converters to apply custom parsing functions to specific columns.
- JSON: read_json offers options like orient (specifying the JSON structure), dtype, and convert_dates. If the JSON is deeply nested or has a complex structure, you may need to pre-process it before loading, for example by flattening nested records with pd.json_normalize.

After reading the data, you can apply further custom processing using Pandas functions like apply, map, or create custom functions to clean or transform the data as needed.
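As a sketch of custom parsing, the example below reads a hypothetical semicolon-separated export that uses European number formatting, with a converter function for one column. It then flattens nested JSON records. In-memory strings stand in for real files. For this particular number format, read_csv's built-in decimal=',' and thousands='.' parameters would also work; the converter is shown because it generalizes to arbitrary parsing logic.

```python
import io
import pandas as pd

# Hypothetical export: ';' separator, '.' thousands separator, ',' decimal mark
csv_text = """id;amount;signup
1;1.234,50;2024-01-05
2;99,00;2024-02-10
"""

def parse_eu_number(text):
    # '1.234,50' -> 1234.5: drop the thousands dots, turn the decimal comma into a dot
    return float(text.replace('.', '').replace(',', '.'))

df = pd.read_csv(
    io.StringIO(csv_text),
    sep=';',
    converters={'amount': parse_eu_number},  # custom parser for one column
    parse_dates=['signup'],                  # ISO dates -> datetime64
)

# Nested JSON records flattened into dotted column names ('user.name', ...)
records = [
    {'id': 1, 'user': {'name': 'Ana', 'city': 'Lisbon'}},
    {'id': 2, 'user': {'name': 'Ben', 'city': 'Oslo'}},
]
users = pd.json_normalize(records)

print(df.dtypes)
print(users)
```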

14. Explain how to use `pd.merge` to perform different types of joins (inner, outer, left, right) with detailed examples.

pd.merge in pandas is a powerful function for combining DataFrames based on common columns. The how argument controls the type of join:

The examples below use these two DataFrames:

```python
import pandas as pd

df1 = pd.DataFrame({'key': ['A', 'B', 'C', 'D'], 'value1': [1, 2, 3, 4]})
df2 = pd.DataFrame({'key': ['B', 'D', 'E', 'F'], 'value2': [5, 6, 7, 8]})
```

- Inner Join (how='inner'): Returns only rows where the join key exists in both DataFrames. This is the default behavior. `pd.merge(df1, df2, on='key', how='inner')` contains the rows with keys B and D.
- Outer Join (how='outer'): Returns all rows from both DataFrames. Where a key doesn't exist in one of the DataFrames, the missing values are filled with NaN. `pd.merge(df1, df2, on='key', how='outer')` contains the rows with keys A to F.
- Left Join (how='left'): Returns all rows from the left DataFrame (df1 in this case) and the matching rows from the right DataFrame (df2). If there's no match in the right DataFrame, missing values are filled with NaN. `pd.merge(df1, df2, on='key', how='left')` includes all rows from df1.
- Right Join (how='right'): Returns all rows from the right DataFrame (df2) and the matching rows from the left DataFrame (df1). If there's no match in the left DataFrame, missing values are filled with NaN. `pd.merge(df1, df2, on='key', how='right')` includes all rows from df2.

The on argument specifies the column to join on. You can also use left_on and right_on if the join columns have different names in the two DataFrames.
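The sketch below shows left_on and right_on together with two other merge parameters that help when checking join results:

- indicator=True records where each row came from.
- validate raises an error if the key relationship is not what you expect.

The orders and customers tables are made up for illustration.

```python
import pandas as pd

orders = pd.DataFrame({'customer_id': [1, 2, 2, 4], 'amount': [10, 20, 30, 40]})
customers = pd.DataFrame({'id': [1, 2, 3], 'name': ['Ana', 'Ben', 'Cy']})

merged = pd.merge(
    orders,
    customers,
    left_on='customer_id',   # key column in the left DataFrame
    right_on='id',           # differently named key in the right DataFrame
    how='outer',
    indicator=True,          # adds '_merge': 'left_only', 'right_only' or 'both'
    validate='many_to_one',  # raises MergeError if 'id' is not unique on the right
)
print(merged)
print(merged['_merge'].value_counts())
```

Here customer 4 appears only in orders (left_only) and customer 3 only in customers (right_only). This makes unmatched keys easy to filter for.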

15. Describe how to implement a custom aggregation function using `groupby` and `agg` in Pandas.

To implement a custom aggregation function with groupby and agg in Pandas, follow these steps:

1. Define your custom function. It should take a Pandas Series as input (one group's values) and return a single aggregated value.
2. Use the groupby() method to group your DataFrame by the desired column(s).
3. Call agg() with your custom function.

Pandas applies the function to each group and returns the aggregated results. The result is a Series when you aggregate a single selected column (as in the example below), or a DataFrame when you aggregate several columns or pass several functions.

For example:

```python
import pandas as pd
import numpy as np

def custom_mean(series):
    # Receives one group's values of column 'B' as a Series
    return np.mean(series) * 2

df = pd.DataFrame({'A': ['foo', 'bar', 'foo', 'bar', 'foo', 'bar'],
                   'B': [1, 2, 3, 4, 5, 6]})

grouped = df.groupby('A')['B'].agg(custom_mean)

print(grouped)  # bar -> 8.0, foo -> 6.0
```

In the example, custom_mean returns twice the mean of column 'B' for each group in column 'A' (8.0 for 'bar' and 6.0 for 'foo').
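When you need several aggregations at once, including custom ones, named aggregation lets you choose the output column names. In this sketch, the value_range helper is a hypothetical custom function written for the example.

```python
import pandas as pd

df = pd.DataFrame({'A': ['foo', 'bar', 'foo', 'bar', 'foo', 'bar'],
                   'B': [1, 2, 3, 4, 5, 6]})

def value_range(series):
    # Custom aggregation: spread between the largest and smallest value
    return series.max() - series.min()

# Named aggregation: output_column=(input_column, function)
summary = df.groupby('A').agg(
    b_mean=('B', 'mean'),
    b_range=('B', value_range),
    b_last=('B', lambda s: s.iloc[-1]),
)
print(summary)
```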

16. How do you handle categorical data in Pandas, including one-hot encoding and custom mappings?

Pandas provides several ways to handle categorical data. You can convert a column to the category dtype using astype('category'). This is memory-efficient and enables categorical operations. For one-hot encoding, pd.get_dummies() is commonly used. It turns each category into its own indicator column. The values are boolean by default in pandas 2.x; pass dtype=int to get 0/1 values. Example: pd.get_dummies(df, columns=['category_column']).

For custom mappings, you can use the .map() or .replace() methods. .map() applies a dictionary or function to each value; values missing from the dictionary become NaN. .replace() substitutes only the values you list and leaves the rest unchanged. For instance: df['category_column'].map({'category1': 1, 'category2': 2}) or df['category_column'].replace({'category1': 'new_value'}). These methods let you encode categorical variables based on domain knowledge or specific requirements.
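The sketch below puts these tools side by side on a small made-up DataFrame:

- An ordered category dtype, so sizes can be compared.
- A .map() with an incomplete dictionary.
- get_dummies with integer output.

```python
import pandas as pd

df = pd.DataFrame({'size': ['small', 'large', 'medium', 'small'],
                   'color': ['red', 'blue', 'red', 'green']})

# Ordered categories allow comparisons like size > 'small'
size_type = pd.CategoricalDtype(categories=['small', 'medium', 'large'], ordered=True)
df['size'] = df['size'].astype(size_type)
df['size_code'] = df['size'].cat.codes   # integer codes in category order
is_bigger = df['size'] > 'small'

# Custom mapping; 'green' is not in the dictionary, so it becomes NaN
df['temperature'] = df['color'].map({'red': 'warm', 'blue': 'cool'})

# One-hot encode 'color' as 0/1 integers instead of booleans
encoded = pd.get_dummies(df, columns=['color'], dtype=int)
print(encoded)
```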

17. Explain how to use `pd.cut` and `pd.qcut` for binning continuous variables.

pd.cut and pd.qcut are both used for binning continuous variables in pandas, but they differ in how they define the bins.

pd.cut defines bins based on the values themselves. When you pass an integer, as in pd.cut(data, bins=4), it splits the range of the data into that many equal-width intervals; you can also pass explicit bin edges instead.

pd.qcut, on the other hand, defines bins based on quantiles, so each bin contains roughly the same number of data points. You specify the number of quantiles (bins), and pd.qcut chooses the bin edges so that each bin has approximately equal frequency. For example, pd.qcut(data, q=4) divides the data into quartiles, each containing roughly 25% of the data.

Use pd.cut when you want equal-width (or hand-picked) bins, and pd.qcut when you want each bin to contain a similar number of observations even if the bin widths vary.
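To make the difference concrete, this sketch bins the same made-up ages three ways: equal-width bins, hand-picked edges with labels, and quartiles.

```python
import pandas as pd

ages = pd.Series([1, 5, 12, 18, 25, 33, 47, 52, 68, 90])

# Three equal-width intervals spanning 1..90
equal_width = pd.cut(ages, bins=3)

# Explicit edges; intervals are right-inclusive, e.g. (12, 19]
life_stage = pd.cut(ages, bins=[0, 12, 19, 64, 120],
                    labels=['child', 'teen', 'adult', 'senior'])

# Quartiles: edges placed so each bin holds about a quarter of the values
quartiles = pd.qcut(ages, q=4, labels=['Q1', 'Q2', 'Q3', 'Q4'])

print(equal_width.value_counts(sort=False))
print(quartiles.value_counts(sort=False))
```

The equal-width bins come out uneven (5, 3 and 2 values), while the quartiles stay close to balanced (3, 2, 2, 3). With 10 values, four bins cannot be exactly equal.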

18. How would you debug performance issues in Pandas code?

To debug performance issues in Pandas code, I'd start by profiling. I'd use tools like cProfile or %prun in Jupyter notebooks to identify the slowest parts of the code. Then, I'd focus on optimizing those specific sections.

Here are some common optimization techniques:

- Vectorization: Use Pandas' built-in functions and avoid explicit loops (for, while) whenever possible. Pandas functions are optimized for vectorized operations.
- Data Types: Ensure you're using the most efficient data types (e.g., int32 instead of int64 if the range allows, and the category type for repetitive string columns).
- Avoid apply: apply can be slow because it calls a Python function for each row or element. Prefer vectorized operations. Note that np.vectorize is a convenience wrapper around a Python loop and does not give true vectorized speed.
- Indexing: Use appropriate indexing for efficient data selection and filtering. Consider setting an index if you perform frequent lookups on a specific column.
- Chunking: For large datasets, process data in smaller chunks using the chunksize parameter in read_csv.
- Query/Eval: Use query() and eval() for concise boolean filtering and column arithmetic. They can be faster on large DataFrames, particularly when the numexpr library is installed.
- Inspect DataFrames: Check memory usage with df.info(memory_usage='deep') and review the dtypes.

I'd also use timeit to measure the execution time of different approaches to see which one performs best.

```python
import pandas as pd
import timeit

def slow_method(df):
    # Calls a Python lambda once per element
    return df['A'].apply(lambda x: x * 2)

def fast_method(df):
    # One vectorized multiplication over the whole column
    return df['A'] * 2

df = pd.DataFrame({'A': range(100000)})

print("Slow method:", timeit.timeit(lambda: slow_method(df), number=10))
print("Fast method:", timeit.timeit(lambda: fast_method(df), number=10))
```