Screening candidates for OpenCV skills can be challenging, especially if you're not sure which questions will accurately gauge their proficiency. Knowing the right questions to ask helps you hire developers with the right expertise and avoid costly hiring mistakes.

In this blog post, we provide an extensive list of OpenCV interview questions tailored to different levels of expertise, ranging from basic to advanced. These questions are organized into categories such as basic, junior developers, image processing, computer vision algorithms, and situational questions.

Using these questions will help you identify top talent and make informed hiring decisions. Additionally, you can complement these interviews with our OpenCV online test for a comprehensive assessment of the candidates' skills.


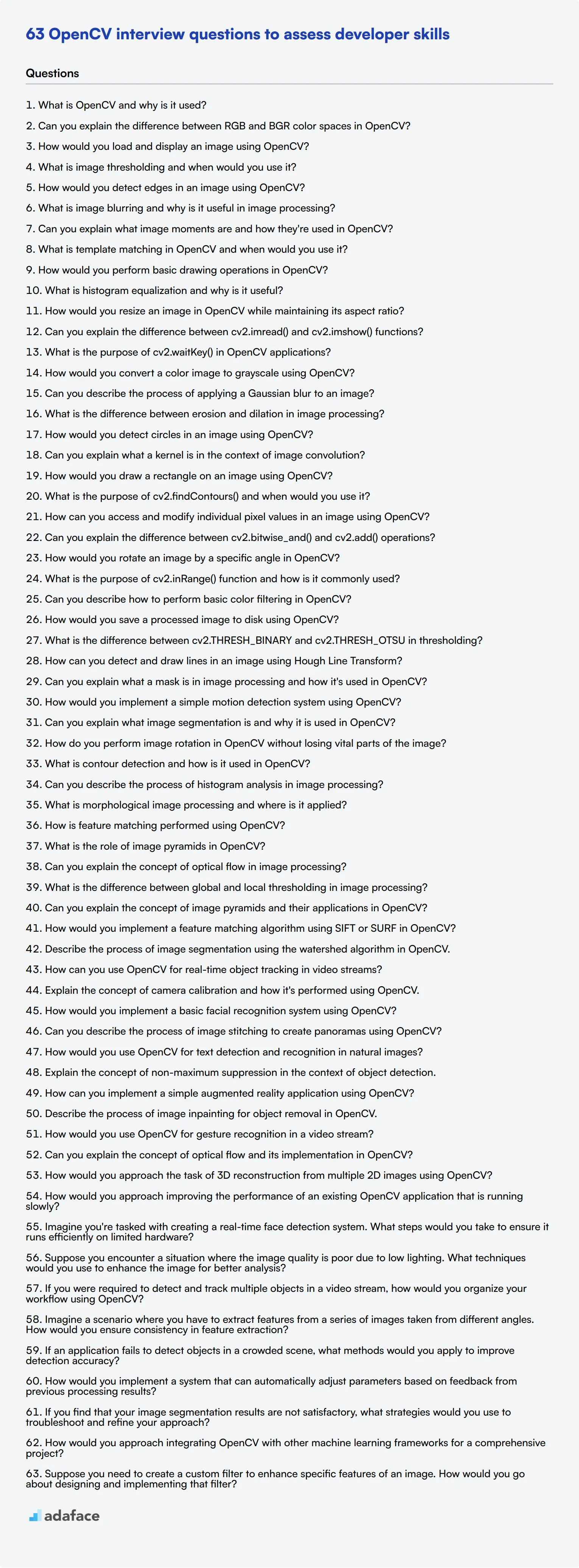

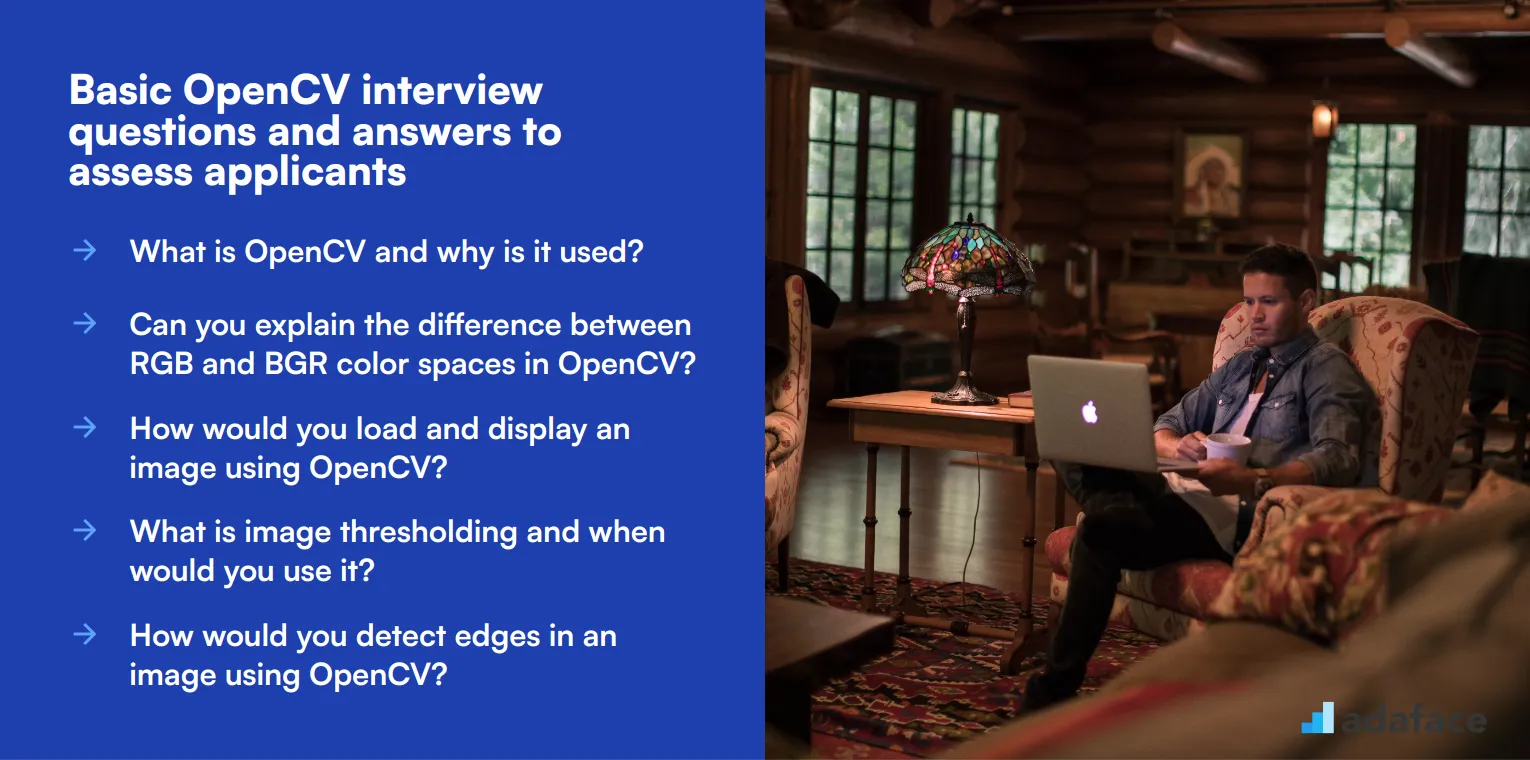

10 basic OpenCV interview questions and answers to assess applicants

Ready to assess your OpenCV candidates? These 10 basic questions will help you gauge applicants' understanding of computer vision fundamentals. Use them to kickstart your interviews and get a sense of candidates' practical knowledge. Remember, the goal is to spark conversation, not to stump them!

1. What is OpenCV and why is it used?

OpenCV (Open Source Computer Vision Library) is an open-source library of programming functions mainly aimed at real-time computer vision. It's used for image processing, video capture and analysis, including features like face detection and object recognition.

A strong candidate should mention that OpenCV is cross-platform and can be used with multiple programming languages. They might also touch on its efficiency for real-time applications and its extensive function library.

Look for answers that demonstrate an understanding of OpenCV's practical applications in fields like robotics, security, or augmented reality. This shows they grasp the library's real-world relevance.

2. Can you explain the difference between RGB and BGR color spaces in OpenCV?

In OpenCV, images are typically stored in BGR (Blue-Green-Red) format, while most other libraries and display methods use RGB (Red-Green-Blue). This is a historical quirk in OpenCV's development.

Candidates should explain that when reading or displaying an image with OpenCV, the color channels are in BGR order. This means if you want to use the image with other libraries or display it correctly, you often need to convert between BGR and RGB.

Look for answers that demonstrate awareness of this OpenCV peculiarity and its implications for image processing. A strong candidate might mention methods to convert between color spaces or discuss potential pitfalls of forgetting this difference.

3. How would you load and display an image using OpenCV?

To load and display an image using OpenCV, you typically use the imread() function to read the image file, and the imshow() function to display it. You also need to use waitKey() to keep the window open and destroyAllWindows() to close it properly.

A good answer should mention error handling, such as checking if the image was successfully loaded. They might also discuss different flags for imread() that affect how the image is interpreted (e.g., color, grayscale).

Look for candidates who can explain this process clearly and demonstrate understanding of basic OpenCV functions. Bonus points if they mention best practices or potential issues, like filepath handling or window naming conventions.

4. What is image thresholding and when would you use it?

Image thresholding is a simple method of image segmentation. It's used to create binary images from grayscale images. The process replaces each pixel in an image with a black pixel if the image intensity is less than a fixed constant (threshold value), or a white pixel if the intensity is greater than that constant.

Thresholding is commonly used for tasks like separating foreground from background, or isolating objects of interest in an image. It's particularly useful in scenarios with high contrast between the object and the background.

Look for answers that demonstrate understanding of different thresholding techniques (e.g., simple thresholding, adaptive thresholding) and when each might be appropriate. A strong candidate might discuss the challenges of choosing an appropriate threshold value and methods to address this.

5. How would you detect edges in an image using OpenCV?

Edge detection in OpenCV is typically done using gradient-based methods like the Sobel, Laplacian, or Canny edge detectors. The Canny edge detector is often preferred due to its ability to detect strong and weak edges, and its lower susceptibility to noise.

A good answer should outline the basic steps: converting the image to grayscale, reducing noise (e.g., with Gaussian blur), applying the edge detection algorithm, and potentially applying thresholding to obtain binary edges.

Look for candidates who can explain the concept of edge detection and its applications. They should be able to discuss the trade-offs between different methods and parameters that affect the result, such as thresholds in the Canny detector.

6. What is image blurring and why is it useful in image processing?

Image blurring, also known as image smoothing, is a technique used to reduce noise and detail in an image. It's achieved by convolving the image with a low-pass filter kernel. Common blurring techniques in OpenCV include Gaussian blur, median blur, and bilateral filtering.

Blurring is useful as a preprocessing step in many computer vision tasks. It can help reduce noise, smooth out minor variations, and reduce the impact of small details that might interfere with larger-scale analysis. It's often used before edge detection, thresholding, or feature detection to improve results.

Look for answers that demonstrate understanding of different blurring methods and their effects. A strong candidate might discuss how the choice of kernel size affects the result, or mention the trade-off between noise reduction and loss of detail.

7. Can you explain what image moments are and how they're used in OpenCV?

Image moments are scalar quantities used to characterize an image's content or a shape within an image. They capture basic properties like the area, centroid, and orientation of a shape or region of interest.

In OpenCV, moments can be calculated using the moments() function. They're often used for tasks like finding the center of an object, determining its orientation, or as features for shape matching and recognition.

Look for answers that demonstrate understanding of different types of moments (spatial moments, central moments, Hu moments) and their applications. A strong candidate might discuss how moments can be used for simple object tracking or shape analysis in computer vision applications.

8. What is template matching in OpenCV and when would you use it?

Template matching is a technique for finding areas of an image that match (or are similar to) a template image. In OpenCV, this is typically done using the matchTemplate() function, which slides the template image over the input image and compares the template and patch of input image under the template image.

This technique is useful for tasks like object detection, especially when you're looking for a specific object with a known appearance. It's particularly effective for rigid objects that don't change shape or orientation.

Look for answers that discuss different matching methods (e.g., correlation, squared difference) and their trade-offs. A strong candidate might mention limitations of template matching, such as sensitivity to scale and rotation, and suggest potential workarounds or alternative approaches for more complex scenarios.

9. How would you perform basic drawing operations in OpenCV?

OpenCV provides several functions for drawing shapes and text on images. Common drawing operations include drawing lines (line()), rectangles (rectangle()), circles (circle()), and text (putText()). These functions typically take parameters specifying the image to draw on, the coordinates, color, thickness, and any shape-specific parameters.

A good answer should demonstrate familiarity with these basic drawing functions and their parameters. Candidates might mention that drawing operations modify the image in-place, and discuss strategies for working with copies of images to preserve the original.

Look for answers that show understanding of the coordinate system in OpenCV images (origin at top-left) and color representation (BGR in OpenCV). Bonus points for mentioning more advanced drawing functions or discussing how drawing can be used in practical applications like annotating detected objects.

10. What is histogram equalization and why is it useful?

Histogram equalization is a method to adjust image contrast by effectively spreading out the most frequent intensity values. It works by computing the histogram of pixel intensities in the image, then transforming the image so that the histogram of the output image is roughly uniform.

This technique is useful for enhancing the contrast of images, especially when the usable data of the image is represented by close contrast values. It can help to better distribute the intensities across the histogram, potentially making features more distinguishable.

Look for answers that demonstrate understanding of image histograms and how equalization affects them. A strong candidate might discuss limitations of histogram equalization (like potential over-enhancement of noise) and mention variations like adaptive histogram equalization. They might also touch on when this technique is particularly useful in image processing pipelines.

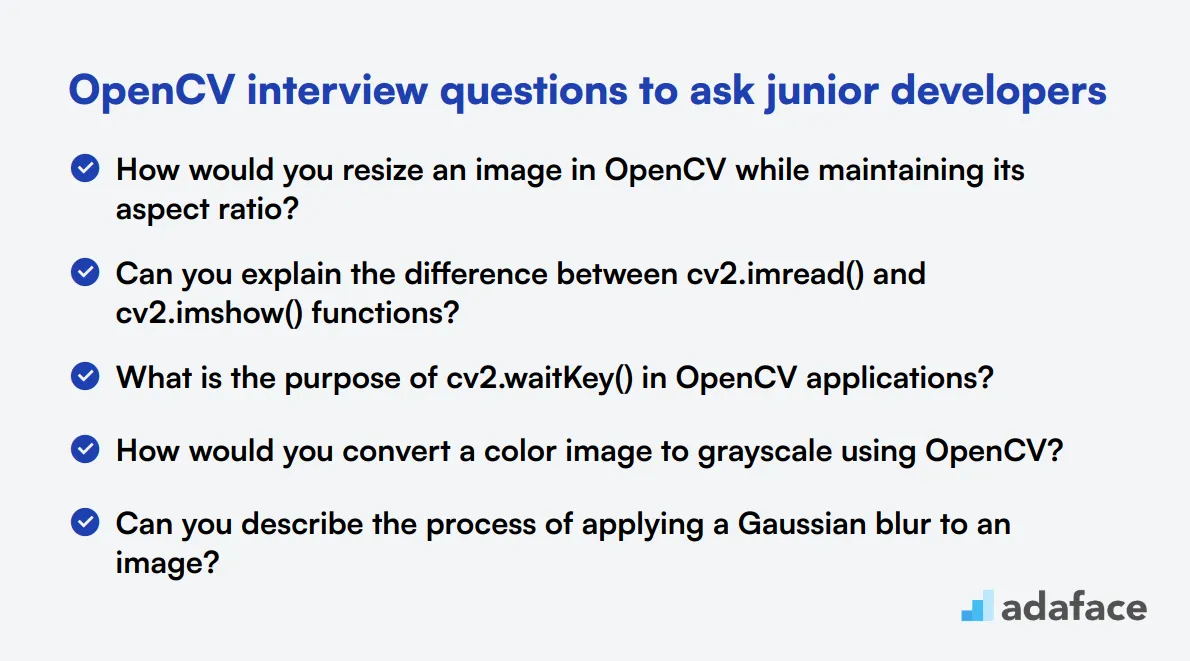

20 OpenCV interview questions to ask junior developers

To assess a junior developer's practical understanding of OpenCV, consider using these 20 interview questions. They are designed to evaluate basic computer vision skills and OpenCV proficiency, helping you identify candidates who can hit the ground running in your projects.

- How would you resize an image in OpenCV while maintaining its aspect ratio?

- Can you explain the difference between cv2.imread() and cv2.imshow() functions?

- What is the purpose of cv2.waitKey() in OpenCV applications?

- How would you convert a color image to grayscale using OpenCV?

- Can you describe the process of applying a Gaussian blur to an image?

- What is the difference between erosion and dilation in image processing?

- How would you detect circles in an image using OpenCV?

- Can you explain what a kernel is in the context of image convolution?

- How would you draw a rectangle on an image using OpenCV?

- What is the purpose of cv2.findContours() and when would you use it?

- How can you access and modify individual pixel values in an image using OpenCV?

- Can you explain the difference between cv2.bitwise_and() and cv2.add() operations?

- How would you rotate an image by a specific angle in OpenCV?

- What is the purpose of cv2.inRange() function and how is it commonly used?

- Can you describe how to perform basic color filtering in OpenCV?

- How would you save a processed image to disk using OpenCV?

- What is the difference between cv2.THRESH_BINARY and cv2.THRESH_OTSU in thresholding?

- How can you detect and draw lines in an image using Hough Line Transform?

- Can you explain what a mask is in image processing and how it's used in OpenCV?

- How would you implement a simple motion detection system using OpenCV?

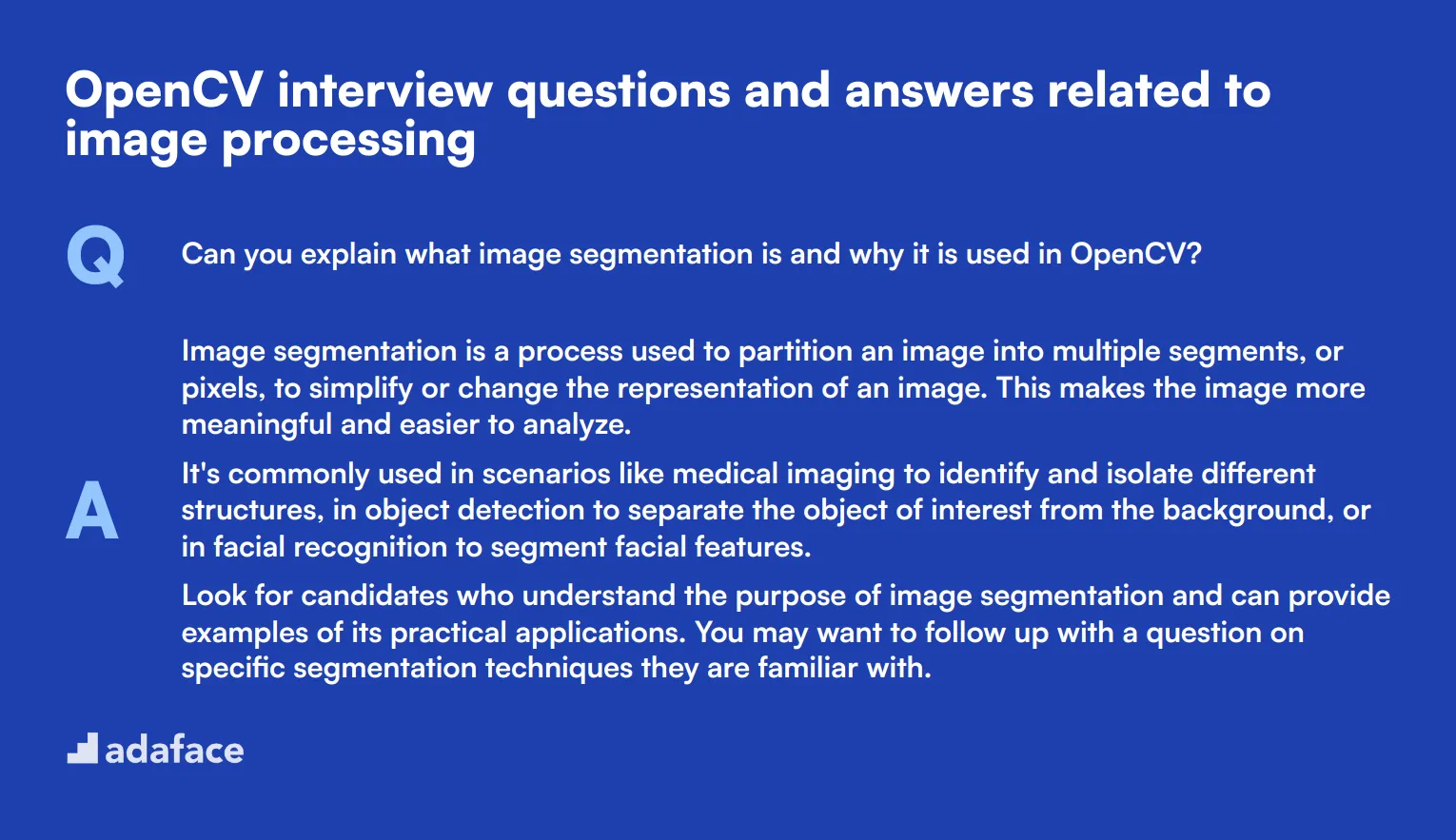

9 OpenCV interview questions and answers related to image processing

To ensure your candidates have a solid grasp on image processing with OpenCV, dive into these practical interview questions. They are crafted to give you insight into the candidate's understanding of essential concepts and their ability to apply them effectively.

1. Can you explain what image segmentation is and why it is used in OpenCV?

Image segmentation is the process of partitioning an image into multiple segments (sets of pixels) to simplify or change the representation of the image. This makes the image more meaningful and easier to analyze.

It's commonly used in scenarios like medical imaging to identify and isolate different structures, in object detection to separate the object of interest from the background, or in facial recognition to segment facial features.

Look for candidates who understand the purpose of image segmentation and can provide examples of its practical applications. You may want to follow up with a question on specific segmentation techniques they are familiar with.

2. How do you perform image rotation in OpenCV without losing vital parts of the image?

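Rotating an image about its center while keeping the original output size clips the corners. To avoid losing parts of the image, build the rotation matrix about the image center, compute the bounding box of the rotated image from the rotation angle, enlarge the output canvas to that size, and add a translation to the matrix so the rotated content is centered on the new canvas. The combined rotation and translation is a single affine transformation.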

Candidates should mention the importance of calculating the center of the image and using it as the anchor point for the rotation to ensure the image's integrity is maintained.

A suitable response will highlight their understanding of affine transformations and their practical application in image processing tasks. You can also ask about their experience with solving similar challenges in past projects.

3. What is contour detection and how is it used in OpenCV?

Contour detection is the technique of finding the contours or outlines of objects within an image. Contours are useful for shape analysis, object detection, and recognition tasks.

In OpenCV, contour detection is typically performed after a binary image is created, such as through thresholding or edge detection. The contours can be used to compute properties like area, perimeter, and moments.

Candidates who can explain how contour detection works and its applications in real-world scenarios, such as in object tracking or shape analysis, demonstrate a solid understanding of its utility in image processing.

4. Can you describe the process of histogram analysis in image processing?

Histogram analysis involves examining the distribution of pixel intensities within an image. A histogram plots the number of pixels for each intensity value, providing insight into the image's contrast, brightness, and intensity distribution.

In OpenCV, histograms are used for tasks like image enhancement, thresholding, and equalization. They can help in identifying the range of pixel values that are most frequent in an image.

Candidates should be able to discuss the significance of histogram analysis and provide examples of how they have used histograms to solve image processing problems. You might explore their familiarity with different types of histograms, such as color histograms.

5. What is morphological image processing and where is it applied?

Morphological image processing deals with the structure or morphology of features in an image. It involves operations like dilation, erosion, opening, and closing to remove noise, separate touching objects, and find specific shapes.

These operations are particularly useful in preprocessing steps for object detection and image segmentation, where the goal is to improve the structure of the image for better analysis.

A strong candidate will be able to explain the different morphological operations and provide practical examples of their use. They should also mention the significance of selecting appropriate structuring elements for different tasks.

6. How is feature matching performed using OpenCV?

Feature matching in OpenCV involves finding corresponding features between two images. Common techniques include the use of descriptors like SIFT, SURF, or ORB, followed by matching algorithms such as BFMatcher or FLANN based matcher.

This process is widely used in applications like object recognition, image stitching, and 3D reconstruction, where identifying matching points between images is crucial.

Candidates should demonstrate knowledge of different feature detection and matching techniques and discuss their experience with these methods in real projects. Ideal responses will include insights into challenges faced and how they were addressed.

7. What is the role of image pyramids in OpenCV?

An image pyramid is a series of images with progressively reduced resolutions, typically built by repeatedly smoothing and downsampling the original. Pyramids are useful for multi-scale image processing tasks, such as image blending, compression, and object detection.

In OpenCV, image pyramids help in analyzing images at different scales, which is particularly beneficial in detecting objects of varying sizes and in coarse-to-fine processing approaches.

Look for candidates who understand the practical applications of image pyramids and can explain how they have utilized this technique in their projects. Follow-up questions could explore specific use cases and challenges encountered.

8. Can you explain the concept of optical flow in image processing?

Optical flow refers to the apparent motion of objects, surfaces, and edges in a visual scene caused by the relative motion between the observer and the scene. It is used to track the movement of objects or camera motion across a sequence of images.

In OpenCV, techniques like the Lucas-Kanade method or the Farneback algorithm are used for calculating optical flow, which is essential in applications like video stabilization, motion-based object tracking, and activity recognition.

Candidates should illustrate their understanding of optical flow concepts and discuss how they have applied these techniques in practical scenarios. You might follow up with questions on specific algorithms and their implementation challenges.

9. What is the difference between global and local thresholding in image processing?

Global thresholding applies a single threshold value to the entire image, converting it to a binary image. This method works well when the lighting conditions are uniform throughout the image.

Local thresholding, on the other hand, calculates different threshold values for different regions of the image based on local characteristics. This approach is useful in situations where the lighting varies across the image.

An ideal candidate should be able to explain the scenarios where each method is appropriate and share examples from their experience where they chose one method over the other. Consider asking about specific challenges they faced and how they overcame them.

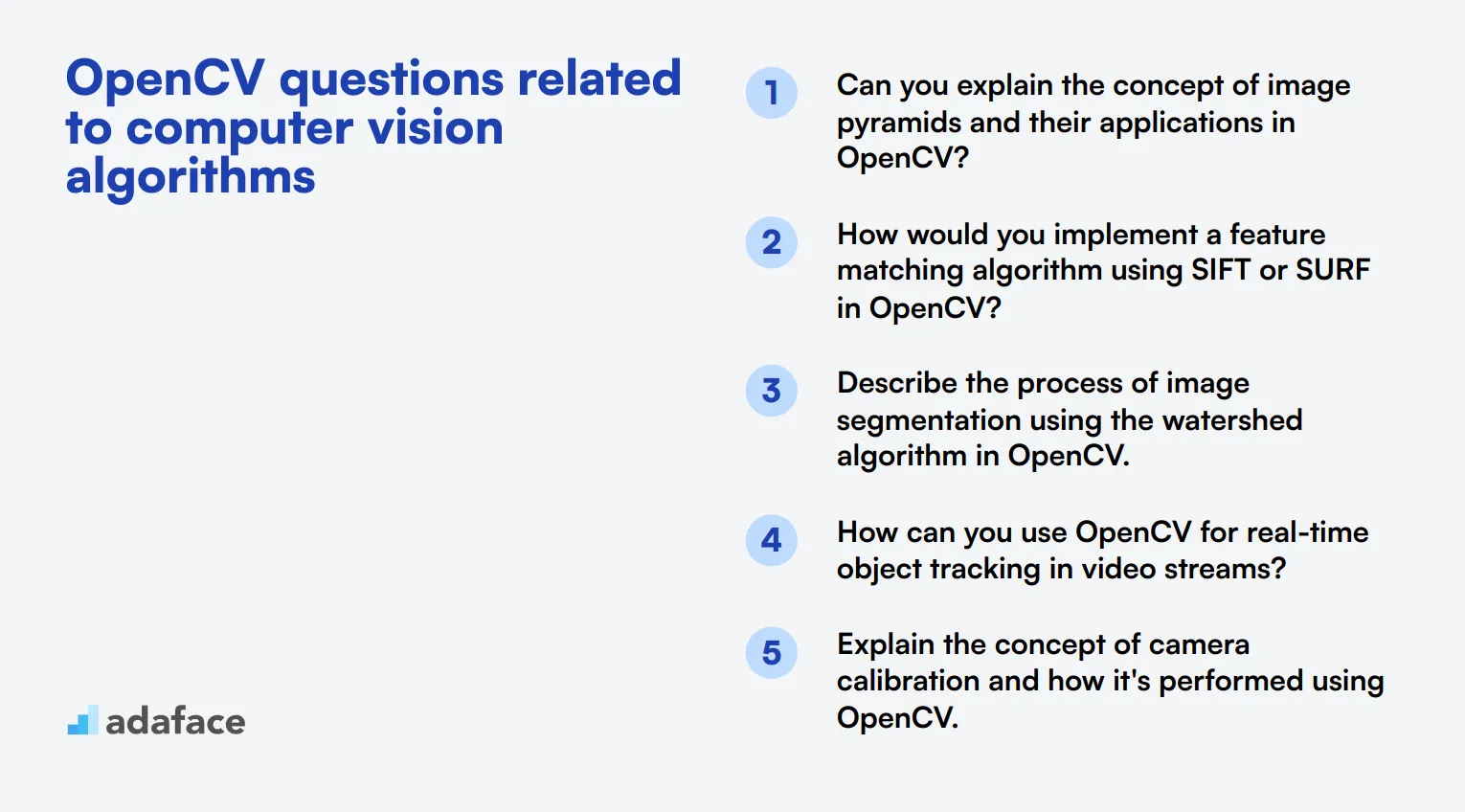

14 OpenCV questions related to computer vision algorithms

To assess a candidate's proficiency in advanced computer vision algorithms using OpenCV, consider asking some of these 14 in-depth questions. These inquiries are designed to evaluate a software engineer's understanding of complex image processing techniques and their practical applications in real-world scenarios.

- Can you explain the concept of image pyramids and their applications in OpenCV?

- How would you implement a feature matching algorithm using SIFT or SURF in OpenCV?

- Describe the process of image segmentation using the watershed algorithm in OpenCV.

- How can you use OpenCV for real-time object tracking in video streams?

- Explain the concept of camera calibration and how it's performed using OpenCV.

- How would you implement a basic facial recognition system using OpenCV?

- Can you describe the process of image stitching to create panoramas using OpenCV?

- How would you use OpenCV for text detection and recognition in natural images?

- Explain the concept of non-maximum suppression in the context of object detection.

- How can you implement a simple augmented reality application using OpenCV?

- Describe the process of image inpainting for object removal in OpenCV.

- How would you use OpenCV for gesture recognition in a video stream?

- Can you explain the concept of optical flow and its implementation in OpenCV?

- How would you approach the task of 3D reconstruction from multiple 2D images using OpenCV?

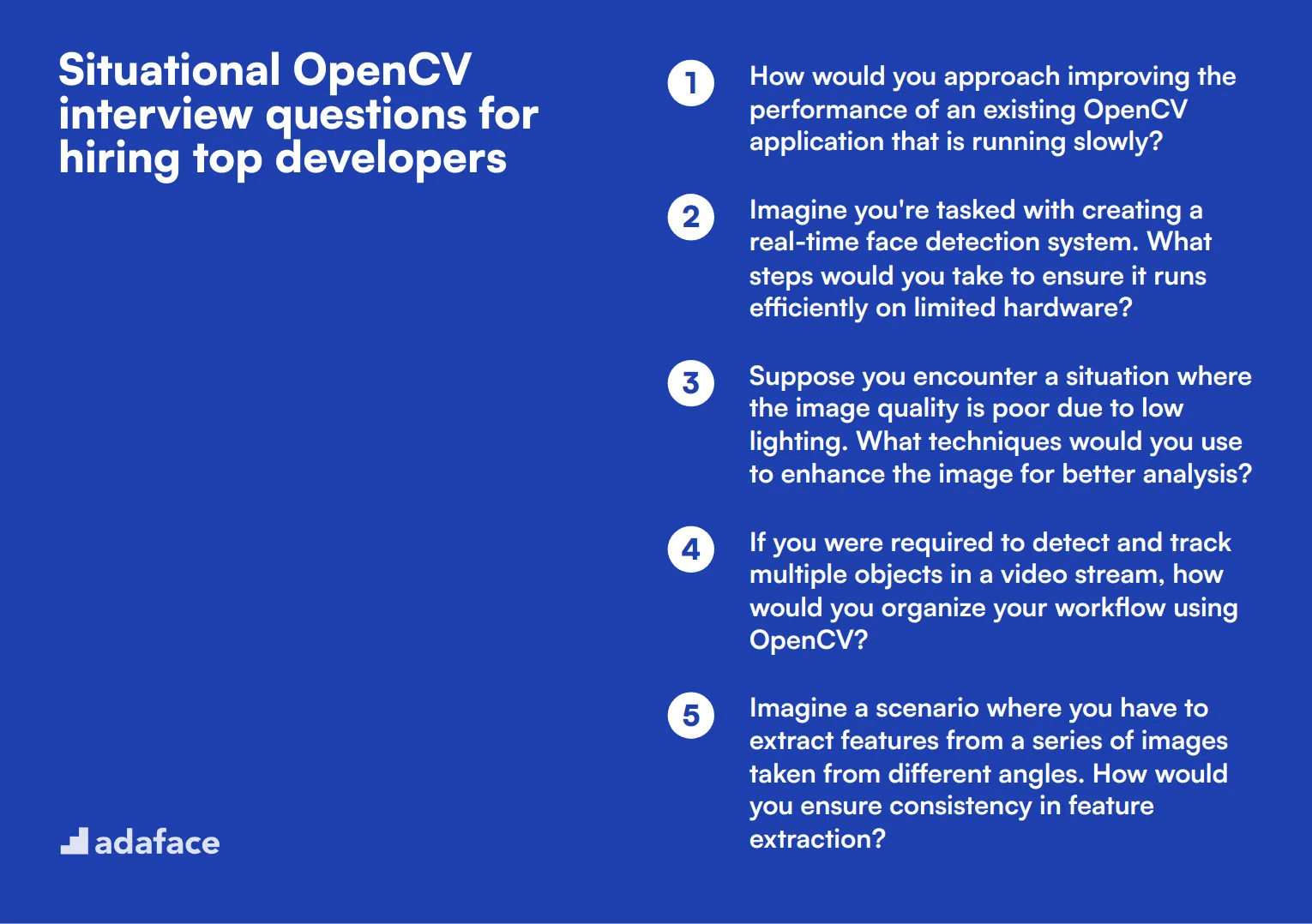

10 situational OpenCV interview questions for hiring top developers

To identify candidates who possess advanced skills in OpenCV and can handle real-world challenges, consider asking these situational questions during interviews. These questions will help you gauge their problem-solving abilities and practical knowledge in computer vision tasks, which are essential for roles like a software engineer.

- How would you approach improving the performance of an existing OpenCV application that is running slowly?

- Imagine you're tasked with creating a real-time face detection system. What steps would you take to ensure it runs efficiently on limited hardware?

- Suppose you encounter a situation where the image quality is poor due to low lighting. What techniques would you use to enhance the image for better analysis?

- If you were required to detect and track multiple objects in a video stream, how would you organize your workflow using OpenCV?

- Imagine a scenario where you have to extract features from a series of images taken from different angles. How would you ensure consistency in feature extraction?

- If an application fails to detect objects in a crowded scene, what methods would you apply to improve detection accuracy?

- How would you implement a system that can automatically adjust parameters based on feedback from previous processing results?

- If you find that your image segmentation results are not satisfactory, what strategies would you use to troubleshoot and refine your approach?

- How would you approach integrating OpenCV with other machine learning frameworks for a comprehensive project?

- Suppose you need to create a custom filter to enhance specific features of an image. How would you go about designing and implementing that filter?

Which OpenCV skills should you evaluate during the interview phase?

While it's impossible to assess every aspect of a candidate's OpenCV expertise in a single interview, focusing on core skills is crucial. By evaluating these key areas, you can gain valuable insights into a candidate's proficiency and potential fit for your team.

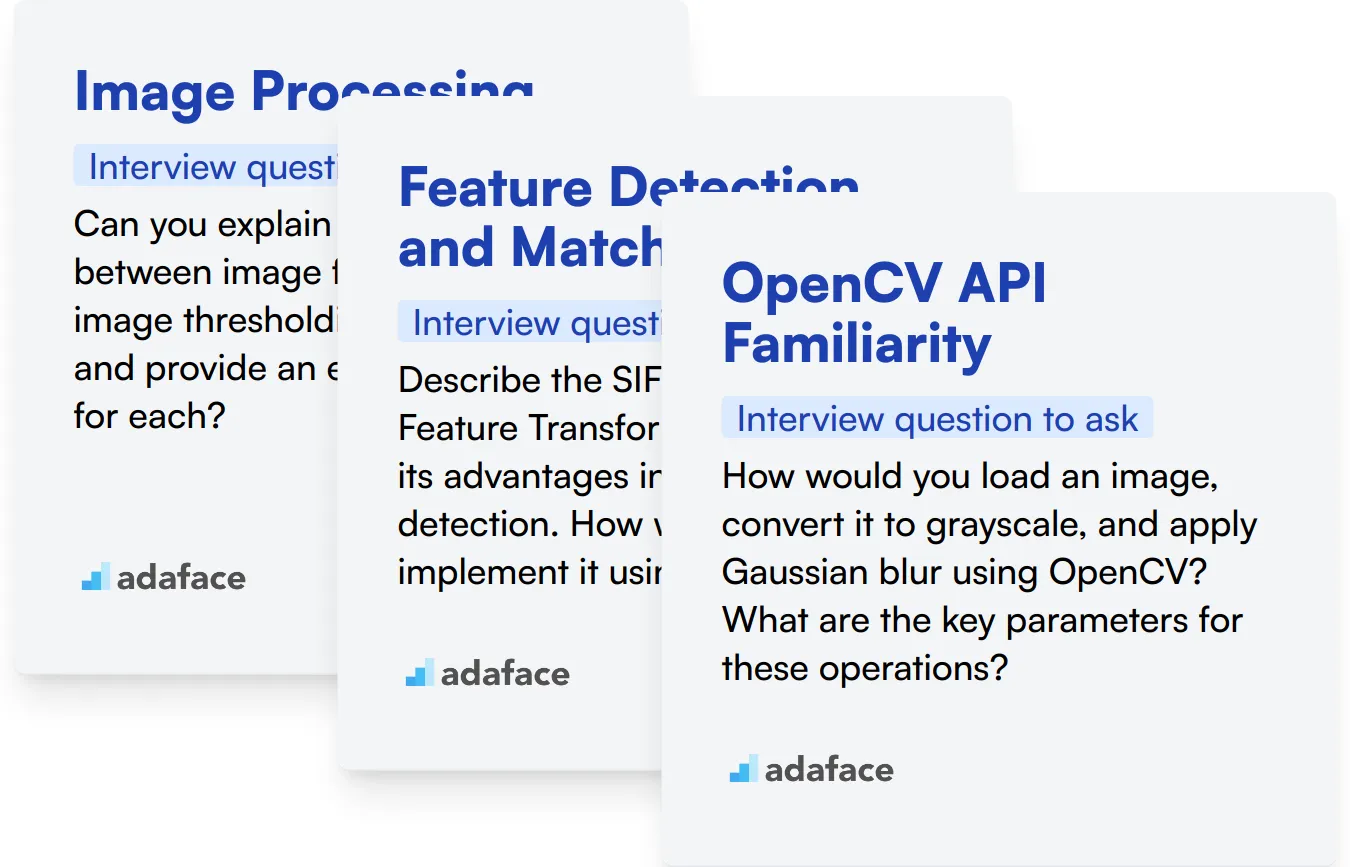

Image Processing

Image processing is a fundamental aspect of OpenCV. It involves manipulating and analyzing digital images, which is at the core of many computer vision applications.

To evaluate this skill, consider using an assessment test with relevant MCQs. This can help filter candidates based on their understanding of image processing concepts.

During the interview, you can ask targeted questions to gauge the candidate's practical knowledge of image processing techniques. Here's an example question:

Can you explain the difference between image filtering and image thresholding in OpenCV, and provide an example use case for each?

Look for answers that demonstrate understanding of both techniques. The candidate should explain that filtering modifies pixel values based on neighboring pixels, while thresholding separates an image into foreground and background based on pixel intensity.

Feature Detection and Matching

Feature detection and matching are essential for tasks like object recognition and tracking. These skills are valuable for developers working on advanced computer vision projects.

Consider using an assessment test with questions focusing on various feature detection algorithms and matching techniques to evaluate candidates' theoretical knowledge.

To assess practical understanding, you can ask a question like:

Describe the SIFT (Scale-Invariant Feature Transform) algorithm and its advantages in feature detection. How would you implement it using OpenCV?

Look for answers that explain SIFT's scale and rotation invariance, its use of keypoints and descriptors, and mention OpenCV's SIFT implementation or alternative methods like ORB for faster processing.

OpenCV API Familiarity

Proficiency with OpenCV's API is crucial for efficient development. Candidates should be familiar with core functions and data structures used in OpenCV.

An OpenCV-specific test can help assess candidates' knowledge of the library's functions and usage.

To evaluate hands-on experience, consider asking:

How would you load an image, convert it to grayscale, and apply Gaussian blur using OpenCV? What are the key parameters for these operations?

Look for answers that demonstrate familiarity with functions like cv2.imread(), cv2.cvtColor(), and cv2.GaussianBlur(). The candidate should mention key parameters like color conversion codes and kernel size for blurring.

3 Best Practices for Conducting OpenCV Interviews

Before you start putting your newfound knowledge to use, here are some tips to enhance your OpenCV interview process. These practices will help you effectively evaluate candidates and make informed hiring decisions.

1. Incorporate Skills Tests in Your Screening Process

Skills tests provide an objective measure of a candidate's OpenCV proficiency before the interview stage. They help you focus on candidates who have the technical skills required for the role.

For OpenCV roles, consider using a Computer Vision test to evaluate core concepts. Additionally, a Python Pandas test can assess data manipulation skills often used in OpenCV projects.

Implement these tests after initial resume screening but before interviews. This approach saves time by ensuring you only interview candidates with the necessary technical skills.

2. Prepare a Balanced Set of Interview Questions

Time is limited during interviews, so it's crucial to ask the right questions. Balance your OpenCV questions with other relevant topics to get a comprehensive view of the candidate.

Include questions about machine learning and data science, as these often intersect with OpenCV work. Also, consider adding questions about Python to assess their programming skills.

Don't forget to evaluate soft skills. Include questions about problem-solving and communication to ensure the candidate can work effectively in your team.

3. Ask Insightful Follow-up Questions

Prepared questions are a good start, but follow-up questions reveal a candidate's true depth of knowledge. They help you distinguish between candidates who have memorized answers and those with genuine understanding and experience.

For example, if you ask about image filtering in OpenCV, a follow-up could be, "Can you explain a situation where you'd choose Gaussian blur over median blur?" This probes their practical knowledge and decision-making skills in real-world scenarios.

Use OpenCV interview questions and skills tests to hire talented developers

If you're looking to hire someone with OpenCV skills, you need to verify those skills accurately. The best way to do this is to use skills tests. Check out our OpenCV Online Test or Computer Vision Test to evaluate candidates.

Once you use these tests, you can shortlist the best applicants and call them for interviews. Head over to our signup page to get started or visit our online assessment platform for more information.


OpenCV Interview Questions FAQs

In an OpenCV interview, assess skills in image processing, computer vision algorithms, practical problem-solving, and familiarity with OpenCV library functions.

Use situational questions and coding challenges to evaluate a candidate's ability to apply OpenCV concepts to real-world problems.

Key concepts include image filtering, feature detection, object recognition, and understanding of computer vision algorithms like SIFT and SURF.

Adjust the complexity of questions based on the role. Use basic concepts for juniors and more advanced topics for experienced developers.

Yes, including coding exercises can help assess a candidate's ability to implement OpenCV functions and solve practical problems.
