Interviewing candidates for data-related roles requires a deep understanding of their technical skills, especially in NumPy, a fundamental library for numerical computing in Python. Asking the right NumPy interview questions can help you assess candidates' proficiency and make informed hiring decisions.

This blog post provides a comprehensive list of NumPy interview questions tailored for different skill levels and job roles. From basic concepts to advanced data manipulation techniques, we cover a wide range of topics to help you evaluate applicants effectively.

By using these questions, you can gain valuable insights into candidates' NumPy expertise and their ability to apply it in real-world scenarios. Consider pairing these interview questions with a NumPy skills test to get a more complete picture of candidates' abilities before the interview stage.


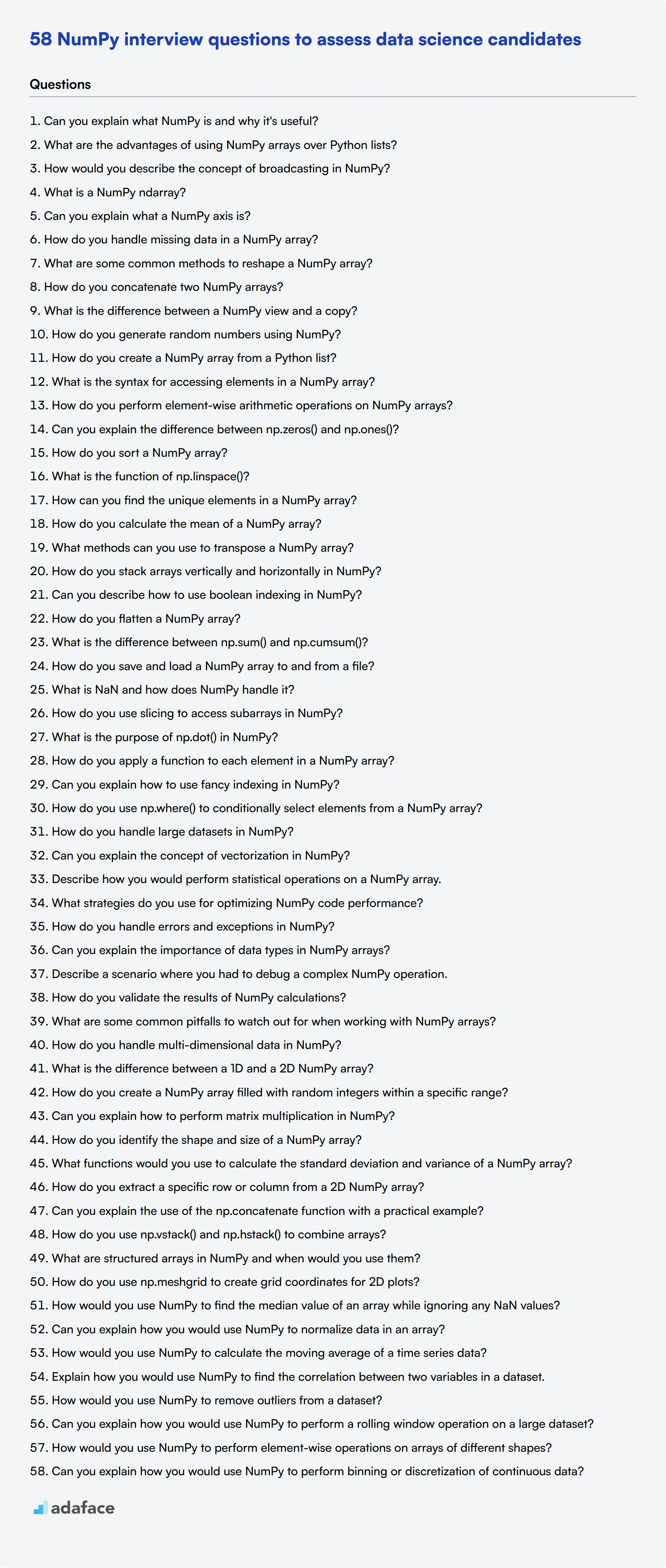

10 basic NumPy interview questions and answers to assess applicants

Looking to assess your candidates' basic understanding of NumPy? This list of questions is designed to help interviewers gauge if applicants have the foundational knowledge necessary to effectively use this crucial library. Use these questions during your interviews to ensure you're picking the right candidates for your team.

1. Can you explain what NumPy is and why it's useful?

NumPy is a powerful Python library used for numerical computing. It equips users with tools to handle arrays and matrices, along with a collection of mathematical functions to operate on these data structures.

An ideal candidate should highlight how NumPy's efficiency and ease of use make it indispensable for scientific computing, data analysis, and machine learning projects. Look for mentions of its support for large multi-dimensional arrays and various mathematical operations.

2. What are the advantages of using NumPy arrays over Python lists?

NumPy arrays are more efficient than Python lists both in terms of speed and memory usage. They support vectorized operations, which means you can apply operations to entire arrays without the need for explicit loops.

Candidates should mention that NumPy arrays are more efficient in both computation and storage, making them ideal for handling large datasets. Additionally, they should point out the convenience of using built-in functions and methods for mathematical operations.

3. How would you describe the concept of broadcasting in NumPy?

Broadcasting allows NumPy to perform arithmetic operations on arrays of different shapes. It 'stretches' smaller arrays so they have compatible shapes for element-wise operations with larger arrays.

Strong answers should include examples, such as adding a 1-D array to a 2-D array, and discuss how broadcasting simplifies code and improves efficiency. Look for candidates who understand the rules and limitations of broadcasting.
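The kind of example a candidate might give can be sketched as follows. The array values are arbitrary and chosen only for illustration.

```python
import numpy as np

# A 2-D array of shape (2, 3) and a 1-D array of shape (3,)
matrix = np.array([[1, 2, 3],
                   [4, 5, 6]])
row = np.array([10, 20, 30])

# The 1-D array is treated as shape (1, 3) and stretched across both rows,
# so no explicit loop or manual tiling is needed.
result = matrix + row
print(result.shape)  # (2, 3)
```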

4. What is a NumPy ndarray?

A NumPy ndarray is the core data structure of the NumPy library. It stands for 'n-dimensional array' and represents a grid of values, all of the same type, indexed by a tuple of non-negative integers.

The candidate should mention that ndarrays are highly efficient for numerical calculations and can be multi-dimensional. Look for an explanation of how ndarrays differ from regular Python lists, especially in terms of performance and functionality.

5. Can you explain what a NumPy axis is?

In NumPy, axes refer to the dimensions of an array. For example, a 2D array has two axes: rows (axis 0) and columns (axis 1). Operations in NumPy often specify an axis along which to operate.

Candidates should be able to discuss how understanding axes is crucial for correctly applying functions like sum, mean, and others along specific dimensions of an array. This demonstrates their grasp of multidimensional array manipulation.
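A minimal sketch of how the axis argument changes the result, using a small made-up array: reducing along axis 0 collapses the rows and leaves one value per column, while reducing along axis 1 collapses the columns and leaves one value per row.

```python
import numpy as np

data = np.array([[1, 2, 3],
                 [4, 5, 6]])

col_sums = data.sum(axis=0)    # collapse axis 0: one sum per column
row_means = data.mean(axis=1)  # collapse axis 1: one mean per row
total = data.sum()             # no axis: reduce over every element
```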

6. How do you handle missing data in a NumPy array?

Missing data in NumPy arrays is usually represented as NaN (Not a Number). NaN is a floating-point value, so it can only be stored in float (or complex) arrays, not in integer arrays. numpy.isnan() identifies NaNs, and numpy.nan_to_num() replaces them with a specified value (0.0 by default).

Look for candidates who can discuss various strategies for dealing with missing data, such as interpolation, filling with mean or median values, or simply removing the affected elements. This indicates a practical understanding of data cleaning.
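Three of these strategies (dropping, filling with a constant, filling with the mean) might look like this on a small illustrative array. The sample values are assumptions made for the example.

```python
import numpy as np

values = np.array([1.0, np.nan, 3.0, np.nan, 5.0])

mask = np.isnan(values)                       # True where a value is missing
dropped = values[~mask]                       # remove the missing entries
zero_filled = np.nan_to_num(values, nan=0.0)  # replace NaN with 0.0
# Replace NaN with the mean of the non-missing values (3.0 here).
mean_filled = np.where(mask, np.nanmean(values), values)
```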

7. What are some common methods to reshape a NumPy array?

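Common methods to reshape a NumPy array include reshape(), ravel(), and flatten(). reshape() changes the shape of an array without changing its data, while ravel() and flatten() both produce a 1D array: flatten() always returns a copy, whereas ravel() returns a view of the original data whenever possible.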

A good response should mention how these methods are used to prepare data for different types of analysis or machine learning models. Understanding these concepts is crucial for data manipulation and preprocessing.
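A short sketch of the three methods, including the difference in copy behavior between flatten() and ravel(). The values written into the arrays are arbitrary markers.

```python
import numpy as np

a = np.arange(6)            # [0 1 2 3 4 5]
grid = a.reshape(2, 3)      # 2 rows, 3 columns; same data, new shape

flat_copy = grid.flatten()  # always returns a copy
flat_view = grid.ravel()    # returns a view here because grid is contiguous

flat_copy[0] = 99           # does not touch grid
flat_view[1] = 77           # writes through to grid (and to a)
```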

8. How do you concatenate two NumPy arrays?

NumPy provides functions like numpy.concatenate(), numpy.vstack(), and numpy.hstack() to concatenate arrays along different axes. concatenate() is more general, while vstack() and hstack() are used for vertical and horizontal stacking, respectively.

Candidates should be able to explain the use of these functions and discuss scenarios where each might be appropriate. This demonstrates their ability to manipulate data structures effectively.
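As an illustration with two small made-up arrays, concatenate() with an explicit axis covers both stacking directions, and vstack() and hstack() are shorthand for the two common cases.

```python
import numpy as np

a = np.array([[1, 2], [3, 4]])
b = np.array([[5, 6], [7, 8]])

stacked_rows = np.concatenate([a, b], axis=0)  # shape (4, 2)
stacked_cols = np.concatenate([a, b], axis=1)  # shape (2, 4)

# For 2-D inputs these give the same results as the calls above.
v = np.vstack([a, b])
h = np.hstack([a, b])
```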

9. What is the difference between a NumPy view and a copy?

A view is a new array object that shares the same underlying data as the original array, while a copy is a new array object with its own separate copy of the data. Changes to a view will affect the original array, but changes to a copy will not. Basic slicing returns a view, whereas fancy (integer or boolean array) indexing returns a copy.

Candidates should emphasize the implications of using views versus copies in terms of memory usage and performance. This shows their understanding of efficient data handling in NumPy.
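The difference can be demonstrated in a few lines. This is a minimal sketch with arbitrary values; the .base attribute is used here only to show which array owns the data.

```python
import numpy as np

original = np.arange(5)      # [0 1 2 3 4]

view = original[1:4]         # basic slicing returns a view
copy = original[1:4].copy()  # explicit, independent copy

view[0] = 100                # also changes original[1]
copy[1] = -1                 # original is unaffected

print(view.base is original)  # True: the view borrows original's data
print(copy.base is None)      # True: the copy owns its data
```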

10. How do you generate random numbers using NumPy?

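NumPy provides a module called numpy.random that includes functions for generating random numbers, such as rand(), randn(), and randint(). These functions create random arrays drawn from different distributions and ranges. They belong to the legacy interface; current NumPy documentation recommends the Generator interface, created with numpy.random.default_rng(), for new code.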

Look for candidates who can explain the use of these functions in practical scenarios, such as initializing weights in machine learning models or creating random samples for simulations. This indicates their ability to apply NumPy’s capabilities to real-world problems.
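A sketch using np.random.default_rng(), the Generator interface that current NumPy documentation recommends for new code, with comments noting the rough legacy equivalent of each call. The seed value 42 and the array sizes are arbitrary choices for the example.

```python
import numpy as np

rng = np.random.default_rng(seed=42)    # seeding makes the run reproducible

uniform = rng.random((2, 3))            # floats in [0, 1), similar to rand(2, 3)
normal = rng.standard_normal(1000)      # standard normal draws, similar to randn(1000)
integers = rng.integers(0, 10, size=5)  # integers in [0, 10), similar to randint(0, 10, 5)
```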

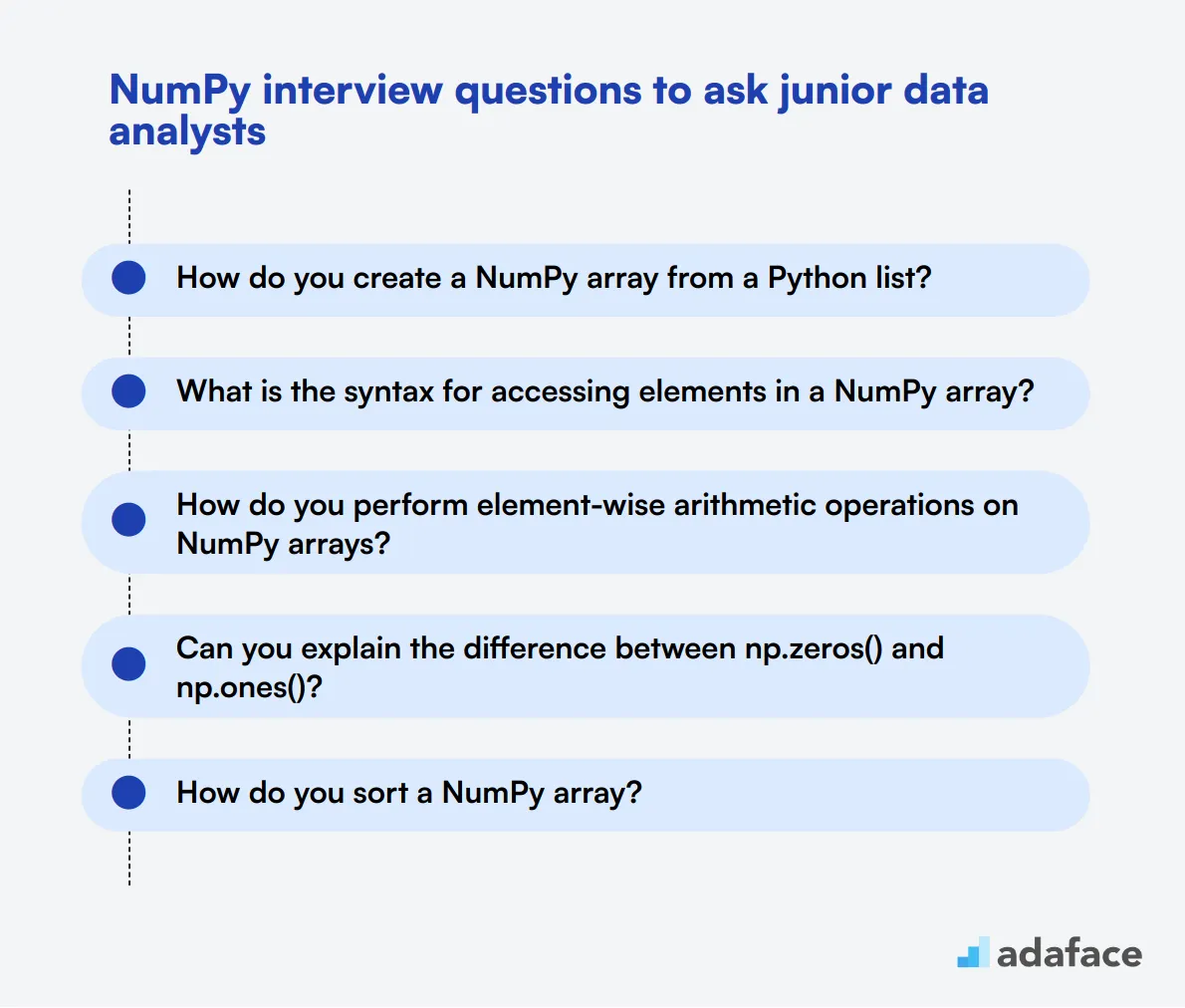

20 NumPy interview questions to ask junior data analysts

To gauge the fundamental understanding and practical skills of junior data analysts, consider asking these 20 targeted NumPy interview questions. These questions will help you identify candidates with the right technical know-how, making the hiring process more efficient.

- How do you create a NumPy array from a Python list?

- What is the syntax for accessing elements in a NumPy array?

- How do you perform element-wise arithmetic operations on NumPy arrays?

- Can you explain the difference between np.zeros() and np.ones()?

- How do you sort a NumPy array?

- What is the function of np.linspace()?

- How can you find the unique elements in a NumPy array?

- How do you calculate the mean of a NumPy array?

- What methods can you use to transpose a NumPy array?

- How do you stack arrays vertically and horizontally in NumPy?

- Can you describe how to use boolean indexing in NumPy?

- How do you flatten a NumPy array?

- What is the difference between np.sum() and np.cumsum()?

- How do you save and load a NumPy array to and from a file?

- What is NaN and how does NumPy handle it?

- How do you use slicing to access subarrays in NumPy?

- What is the purpose of np.dot() in NumPy?

- How do you apply a function to each element in a NumPy array?

- Can you explain how to use fancy indexing in NumPy?

- How do you use np.where() to conditionally select elements from a NumPy array?

10 intermediate NumPy interview questions and answers to ask mid-tier data scientists

These intermediate NumPy interview questions are perfect for evaluating the skills of mid-tier data scientists. They go beyond the basics and help you identify candidates who are ready to tackle more complex data manipulation tasks. Whether you're looking to assess problem-solving skills or practical knowledge, this list will help you find the right fit for your team.

1. How do you handle large datasets in NumPy?

Handling large datasets in NumPy often involves breaking the data into smaller chunks and processing them sequentially to avoid memory overload. Utilizing memory-mapped files can also help manage large datasets efficiently without loading the entire dataset into memory at once.

An ideal candidate could elaborate on techniques such as memory mapping and chunk processing. They might also mention the importance of optimizing array operations to minimize memory usage.
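A minimal sketch of combining memory mapping with chunked processing, using np.memmap. The file name, array length, and chunk size are hypothetical values picked for illustration, and the file is created in a temporary directory.

```python
import os
import tempfile
import numpy as np

# Hypothetical file location and sizes, for illustration only.
path = os.path.join(tempfile.mkdtemp(), "big_array.dat")
n = 1_000_000

# Create a disk-backed array and fill it.
data = np.memmap(path, dtype=np.float32, mode="w+", shape=(n,))
data[:] = 1.0
data.flush()

# Re-open read-only and accumulate the sum one chunk at a time,
# so only one chunk needs to be resident in memory at once.
data = np.memmap(path, dtype=np.float32, mode="r", shape=(n,))
chunk_size = 100_000
total = 0.0
for start in range(0, n, chunk_size):
    total += float(data[start:start + chunk_size].sum())
```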

2. Can you explain the concept of vectorization in NumPy?

Vectorization in NumPy refers to the practice of replacing explicit loops with array expressions. This allows for more efficient computation by taking advantage of low-level optimizations in the NumPy library.

Candidates should demonstrate an understanding of how vectorization can significantly speed up array operations. Look for mentions of improved performance and reduced code complexity as key benefits.
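To make the idea concrete, here is the same computation written as an explicit Python loop and as a single array expression. The formula x ** 2 + 1 is an arbitrary example.

```python
import numpy as np

x = np.arange(1_000, dtype=np.float64)

# Explicit Python loop: one interpreter-level iteration per element.
looped = np.empty_like(x)
for i in range(x.size):
    looped[i] = x[i] ** 2 + 1

# Vectorized equivalent: the loop runs inside NumPy's compiled code.
vectorized = x ** 2 + 1
```

Timing both versions (for example with the timeit module) is a reasonable follow-up exercise for a candidate.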

3. Describe how you would perform statistical operations on a NumPy array.

Statistical operations in NumPy can be easily performed using built-in functions like mean, median, standard deviation, variance, and many others. These functions provide a quick and efficient way to compute statistical metrics directly on NumPy arrays.

A strong response should include specific examples of statistical functions and discuss the importance of these operations in data analysis. Look for candidates who can explain why these metrics are useful in the context of their work.

4. What strategies do you use for optimizing NumPy code performance?

Optimizing NumPy code performance involves several strategies such as using vectorized operations, avoiding Python loops, leveraging in-place operations, and minimizing data type conversions. Profiling tools can also help identify bottlenecks in the code.

Candidates should highlight the importance of profiling and optimization techniques. Look for an understanding of how to balance readability and performance in their code.

5. How do you handle errors and exceptions in NumPy?

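Errors and exceptions in NumPy can be handled using standard Python try-except blocks. Shape mismatches and invalid arguments raise ordinary exceptions such as ValueError or TypeError. Floating-point problems such as division by zero or overflow only emit a RuntimeWarning by default and produce inf or NaN; numpy.errstate() or numpy.seterr() can be used to turn them into exceptions or to silence them.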

A good candidate response should include examples of common NumPy errors and how to handle them. They should also emphasize the importance of thorough testing to catch and manage exceptions effectively.
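Two common cases, sketched with small made-up arrays: a floating-point division by zero escalated to an exception with np.errstate(), and a shape mismatch that raises ValueError.

```python
import numpy as np

a = np.array([1.0, 2.0, 3.0])
b = np.array([1.0, 0.0, 2.0])

# By default, float division by zero emits a RuntimeWarning and yields inf.
# np.errstate can turn it into a FloatingPointError inside the block.
try:
    with np.errstate(divide="raise"):
        a / b
    caught = False
except FloatingPointError:
    caught = True

# Operands whose shapes cannot be broadcast together raise ValueError.
try:
    np.array([1, 2, 3]) + np.array([1, 2])
    shape_error = None
except ValueError as exc:
    shape_error = str(exc)
```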

6. Can you explain the importance of data types in NumPy arrays?

Data types in NumPy arrays are crucial because they determine how the data is stored and how much memory it consumes. Choosing the appropriate data type can significantly impact both performance and memory efficiency.

Candidates should demonstrate knowledge of different NumPy data types and their implications. Look for an understanding of the trade-offs between precision and memory usage.
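A short illustration of the memory and range trade-off. The array sizes and values are arbitrary.

```python
import numpy as np

a64 = np.zeros(1_000, dtype=np.float64)
a32 = a64.astype(np.float32)
print(a64.nbytes, a32.nbytes)  # float32 uses half the memory of float64

# Small integer types save memory but overflow silently in array arithmetic:
# 100 + 100 does not fit in int8 (range -128..127), so the result wraps around.
x = np.array([100], dtype=np.int8)
wrapped = x + x
```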

7. Describe a scenario where you had to debug a complex NumPy operation.

Debugging complex NumPy operations often involves isolating the problem by breaking down the operation into smaller, manageable parts. Using print statements or debugging tools can help identify where the issue lies.

An ideal answer would include a specific example, detailing the steps taken to identify and resolve the issue. Look for problem-solving skills and the ability to methodically troubleshoot complex operations.

8. How do you validate the results of NumPy calculations?

Validating the results of NumPy calculations involves comparing the outcomes with known benchmarks or using alternative methods to cross-check the results. Unit tests and assertions can also be used to ensure accuracy.

Candidates should emphasize the importance of validation in ensuring the reliability of their calculations. Look for an understanding of different validation techniques and their application in real-world scenarios.

9. What are some common pitfalls to watch out for when working with NumPy arrays?

Common pitfalls when working with NumPy arrays include issues with data type conversions, unintentional broadcasting, and memory inefficiencies. It's also important to be cautious with array shapes and dimensions to avoid unexpected behaviors.

A strong response should include specific examples of these pitfalls and strategies to mitigate them. Look for an awareness of potential issues and a proactive approach to avoiding them.

10. How do you handle multi-dimensional data in NumPy?

Handling multi-dimensional data in NumPy involves understanding the array's shape and using appropriate indexing and slicing techniques. Functions like reshape, transpose, and flatten can be used to manipulate multi-dimensional arrays effectively.

Candidates should demonstrate familiarity with multi-dimensional data operations and provide examples of how they've managed such data in past projects. Look for practical experience and a clear understanding of the challenges involved.

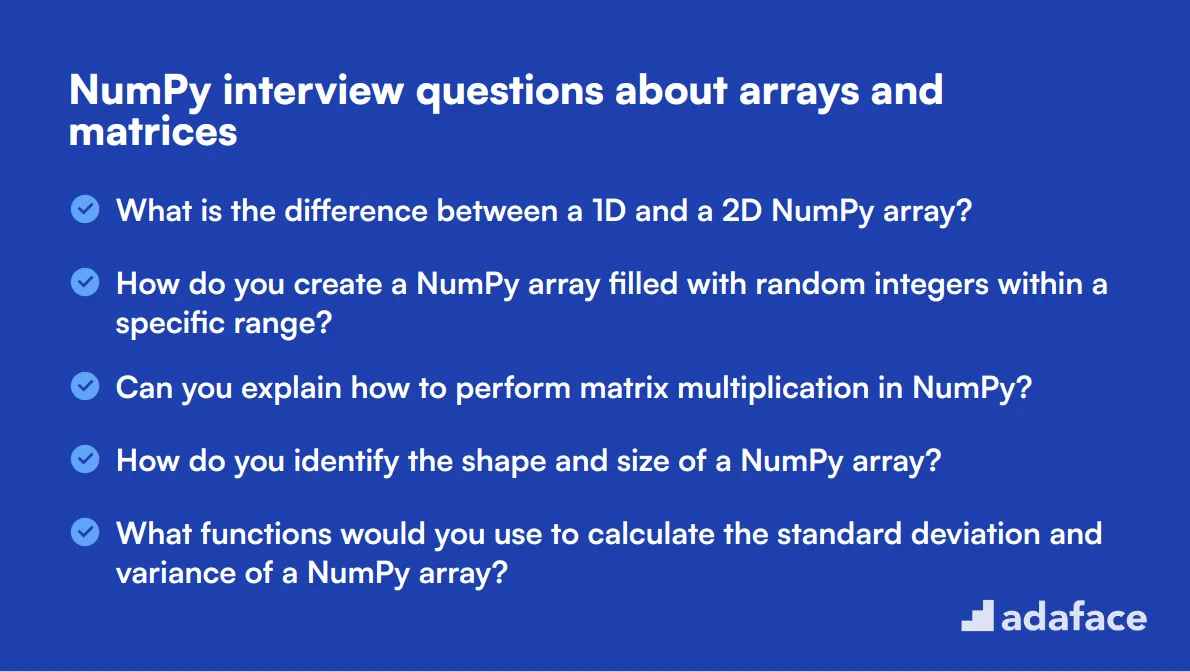

10 NumPy interview questions about arrays and matrices

To effectively assess your candidates' understanding of NumPy's array and matrix capabilities, consider using these interview questions. This list can help you gauge their technical proficiency and problem-solving skills, especially when hiring for roles like a data scientist or a data engineer.

- What is the difference between a 1D and a 2D NumPy array?

- How do you create a NumPy array filled with random integers within a specific range?

- Can you explain how to perform matrix multiplication in NumPy?

- How do you identify the shape and size of a NumPy array?

- What functions would you use to calculate the standard deviation and variance of a NumPy array?

- How do you extract a specific row or column from a 2D NumPy array?

- Can you explain the use of the np.concatenate function with a practical example?

- How do you use np.vstack() and np.hstack() to combine arrays?

- What are structured arrays in NumPy and when would you use them?

- How do you use np.meshgrid to create grid coordinates for 2D plots?

8 NumPy interview questions and answers related to data manipulation

Ready to dive into the world of NumPy data manipulation? These 8 interview questions will help you assess candidates' ability to wrangle arrays like a pro. Whether you're hiring a data scientist or a Python developer, these questions will give you insight into their NumPy skills. Use them to spark discussions and uncover how candidates think about data manipulation challenges.

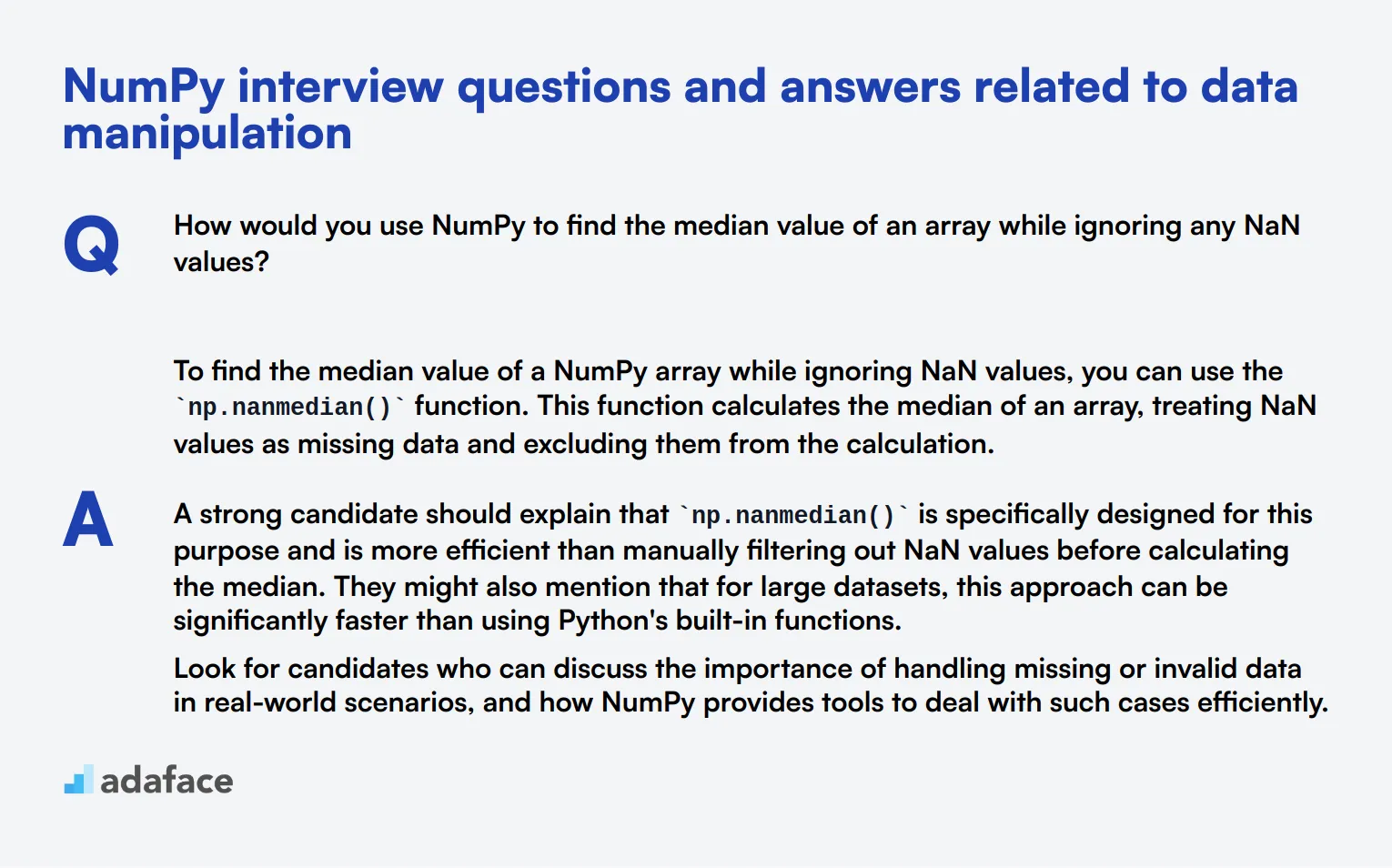

1. How would you use NumPy to find the median value of an array while ignoring any NaN values?

To find the median value of a NumPy array while ignoring NaN values, you can use the np.nanmedian() function. This function calculates the median of an array, treating NaN values as missing data and excluding them from the calculation.

A strong candidate should explain that np.nanmedian() is specifically designed for this purpose and is more convenient than manually filtering out NaN values before calculating the median, particularly when the median is taken along an axis of a multi-dimensional array. They might also mention that plain np.median() returns NaN if any element is NaN, and that NumPy's compiled routines are typically much faster on large arrays than pure-Python alternatives.

Look for candidates who can discuss the importance of handling missing or invalid data in real-world scenarios, and how NumPy provides tools to deal with such cases efficiently.
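The contrast between np.median() and np.nanmedian() can be shown with a small example. The readings are made-up values.

```python
import numpy as np

readings = np.array([3.0, np.nan, 1.0, 7.0, np.nan, 5.0])

median_all = np.median(readings)       # NaN propagates into the result
median_valid = np.nanmedian(readings)  # median of the four valid values

# The manual equivalent: filter first, then take the median.
median_manual = np.median(readings[~np.isnan(readings)])
```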

2. Can you explain how you would use NumPy to normalize data in an array?

Normalizing data using NumPy typically involves scaling the values to a specific range, often between 0 and 1. This can be done using the formula: (x - min(x)) / (max(x) - min(x)). In NumPy, you can implement this efficiently using array operations:

- Calculate the minimum and maximum values of the array using np.min() and np.max().

- Subtract the minimum value from each element in the array.

- Divide the result by the range (max - min).

An ideal candidate should explain why normalization is important in data preprocessing, especially for machine learning algorithms. They should also mention that NumPy's vectorized operations make this process very efficient for large datasets. Look for candidates who can discuss potential issues, such as handling arrays with constant values or dealing with outliers in the normalization process.
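The steps above can be sketched as a small helper. The function name min_max_normalize is hypothetical, and returning zeros for a constant array is one possible convention for the zero-range case, not the only one.

```python
import numpy as np

def min_max_normalize(x):
    """Scale values to [0, 1] using (x - min) / (max - min)."""
    x = np.asarray(x, dtype=float)
    lo, hi = x.min(), x.max()
    span = hi - lo
    if span == 0:
        # Constant array: the formula would divide by zero.
        # Returning zeros is an assumption made for this sketch.
        return np.zeros_like(x)
    return (x - lo) / span

scaled = min_max_normalize([2.0, 4.0, 6.0, 10.0])
constant = min_max_normalize([5.0, 5.0, 5.0])
```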

3. How would you use NumPy to calculate the moving average of time series data?

Calculating a moving average in NumPy can be done efficiently using the np.convolve() function or a combination of np.cumsum() and array slicing. The process involves:

- Defining the window size for the moving average.

- Using np.cumsum() to calculate the cumulative sum of the array.

- Subtracting the cumulative sum at the start of each window from the end to get the sum for that window.

- Dividing by the window size to get the average.

A strong candidate should be able to explain the concept of a moving average and its applications in time series analysis. They should also discuss the trade-offs between different methods, such as using np.convolve() vs. the cumulative sum approach. Look for candidates who can talk about handling edge cases, such as the beginning and end of the time series where a full window isn't available.
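Both approaches might be written as below. The function names are hypothetical, and both versions return only the positions where a full window is available (no padding at the edges).

```python
import numpy as np

def moving_average_cumsum(x, window):
    """Moving average via differences of a cumulative sum."""
    x = np.asarray(x, dtype=float)
    # Prepend 0 so that csum[i] is the sum of the first i elements.
    csum = np.cumsum(np.insert(x, 0, 0.0))
    return (csum[window:] - csum[:-window]) / window

def moving_average_convolve(x, window):
    """Moving average via convolution with a uniform kernel."""
    kernel = np.ones(window) / window
    return np.convolve(x, kernel, mode="valid")

series = [1, 2, 3, 4, 5, 6]
ma_cumsum = moving_average_cumsum(series, 3)
ma_convolve = moving_average_convolve(series, 3)
```

The cumulative-sum version does a fixed amount of work per element regardless of window size, but it can accumulate floating-point error on very long series; the convolution version is simpler to read.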

4. Explain how you would use NumPy to find the correlation between two variables in a dataset.

To find the correlation between two variables using NumPy, you can use the np.corrcoef() function. This function calculates the Pearson correlation coefficient, which measures the linear relationship between two variables.

The steps involved are:

- Ensure the variables are in NumPy arrays.

- Use np.corrcoef(x, y), where x and y are the two variables.

- The result is a 2x2 correlation matrix, where the off-diagonal elements represent the correlation coefficient between x and y.

An ideal candidate should be able to interpret the correlation coefficient, explaining that it ranges from -1 to 1, where -1 indicates a perfect negative linear correlation, 0 indicates no linear correlation, and 1 indicates a perfect positive linear correlation. Look for candidates who can discuss the limitations of correlation, such as its inability to capture non-linear relationships, and the importance of visualizing data alongside correlation analysis.
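A small sketch of np.corrcoef() on made-up data. The second case shows the non-linearity limitation: y is fully determined by x, yet the Pearson coefficient is zero.

```python
import numpy as np

x = np.array([-2.0, -1.0, 0.0, 1.0, 2.0])

# Perfect linear relationship: coefficient of 1.
y_linear = 2.0 * x + 1.0
r_linear = np.corrcoef(x, y_linear)[0, 1]   # off-diagonal element

# Perfect but non-linear (quadratic) relationship: coefficient of 0.
y_quadratic = x ** 2
r_quadratic = np.corrcoef(x, y_quadratic)[0, 1]
```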

5. How would you use NumPy to remove outliers from a dataset?

Removing outliers using NumPy typically involves identifying data points that fall outside a certain range and then filtering them out. A common method is the Interquartile Range (IQR) technique:

- Calculate Q1 (25th percentile) and Q3 (75th percentile) using np.percentile().

- Compute the IQR: IQR = Q3 - Q1.

- Define the lower and upper bounds: lower = Q1 - 1.5 * IQR, upper = Q3 + 1.5 * IQR.

- Use boolean indexing to keep only the values within these bounds.

A strong candidate should explain why removing outliers can be important for certain analyses, but also caution against blindly removing data without understanding its significance. They should discuss alternative methods like z-score for normally distributed data. Look for candidates who emphasize the importance of visualizing the data and understanding the domain context before deciding on an outlier removal strategy.
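The IQR steps above can be sketched as follows. The data values are invented for the example, with one obvious outlier (95).

```python
import numpy as np

data = np.array([10, 12, 11, 13, 12, 11, 95, 12, 10, 13], dtype=float)

q1, q3 = np.percentile(data, [25, 75])
iqr = q3 - q1
lower = q1 - 1.5 * iqr
upper = q3 + 1.5 * iqr

# Boolean indexing keeps only the values inside [lower, upper].
inliers = (data >= lower) & (data <= upper)
filtered = data[inliers]
```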

6. Can you explain how you would use NumPy to perform a rolling window operation on a large dataset?

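Performing a rolling window operation on a large dataset using NumPy can be achieved efficiently using the as_strided() function from numpy.lib.stride_tricks. This function creates a view of the original array with a sliding window, allowing for efficient computation without copying data. Because as_strided() performs no bounds checking, an incorrect shape or strides argument can read or write memory outside the array; since NumPy 1.20, sliding_window_view() in the same module offers a safer way to build the same kind of view.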

The process involves:

- Defining the window size and the operation to be performed (e.g., mean, sum).

- Using as_strided() to create a view of the data with the appropriate shape and strides.

- Applying the desired operation along the appropriate axis of the resulting view.

An ideal candidate should be able to explain the concept of strides in NumPy and how as_strided() works. They should also discuss the potential memory issues with large datasets and how this method helps mitigate them. Look for candidates who can talk about the trade-offs between different methods (e.g., as_strided() vs. np.convolve()) and when to use each approach based on the size of the dataset and the specific operation required.
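A minimal sketch of a rolling window computed on a strided view. Note that it uses sliding_window_view() (available in numpy.lib.stride_tricks since NumPy 1.20) rather than raw as_strided(), because it validates the window shape and returns a read-only view; the input values and window size are arbitrary.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

x = np.arange(1.0, 7.0)   # [1. 2. 3. 4. 5. 6.]

# Each row is one window; no data is copied to build this view.
windows = sliding_window_view(x, window_shape=3)   # shape (4, 3)

# Apply the operation along the last axis (the window axis).
rolling_mean = windows.mean(axis=-1)
rolling_max = windows.max(axis=-1)
```

The view itself costs almost no extra memory, but a reduction such as mean() still touches every window element, so for very large windows a cumulative-sum approach may be cheaper.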

7. How would you use NumPy to perform element-wise operations on arrays of different shapes?

NumPy's broadcasting feature allows for element-wise operations on arrays of different shapes. The key is understanding the broadcasting rules:

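- Shapes are compared dimension by dimension, starting from the last (trailing) dimension; a missing leading dimension is treated as size 1.

- Two dimensions are compatible when they are equal or when one of them is 1. A dimension of size 1 is stretched or 'broadcast' to match the other array.

- If two dimensions differ and neither is 1, a ValueError is raised.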

A strong candidate should be able to provide examples of broadcasting, such as adding a scalar to an array or multiplying a 1D array with a 2D array. They should also discuss the benefits of broadcasting in terms of memory efficiency and code readability. Look for candidates who can explain potential pitfalls of broadcasting, such as shapes that are compatible but not in the way intended (which silently produces a result of an unexpected shape rather than an error), and how to avoid them.
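The rules can be traced through a few small cases. This sketch uses arbitrary arrays to show a successful broadcast, an incompatible pair that raises an error, and a compatible pair that produces a shape the author may not have intended.

```python
import numpy as np

col = np.array([[0], [10], [20]])   # shape (3, 1)
row = np.array([1, 2, 3, 4])        # shape (4,)

# Trailing dimensions 1 and 4: the 1 is stretched to 4.
# Next dimensions 3 and (missing, treated as 1): stretched to 3.
table = col + row                   # shape (3, 4)

# Trailing dimensions 4 and 3 differ and neither is 1: ValueError.
try:
    np.ones((3, 4)) + np.ones(3)
    incompatible = False
except ValueError:
    incompatible = True

# Adding an axis makes the intent explicit: (3, 4) with (3, 1).
fixed = np.ones((3, 4)) + np.ones(3)[:, np.newaxis]

# Pitfall: shapes (3,) and (3, 1) are compatible, so this silently
# produces a (3, 3) array instead of an element-wise difference.
a = np.array([1.0, 2.0, 3.0])
b = np.array([[1.0], [2.0], [3.0]])
diff = a - b
```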

8. Can you explain how you would use NumPy to perform binning or discretization of continuous data?

Binning or discretization of continuous data in NumPy can be accomplished using the np.digitize() function or np.histogram(). The process typically involves:

- Defining the bin edges using np.linspace() or manually specifying them.

- Using np.digitize(data, bins) to assign each data point to a bin.

- Optionally, creating labels for the bins.

An ideal candidate should explain why binning is useful in data analysis, such as for creating histograms or reducing the effect of minor observation errors. They should discuss different binning strategies (equal-width vs. equal-frequency) and their implications. Look for candidates who can talk about the impact of bin size on the resulting analysis and how to choose an appropriate number of bins based on the data distribution and the goals of the analysis.
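The steps above can be sketched with manually specified edges. The ages, bin edges, and label names are all hypothetical values chosen for the example.

```python
import numpy as np

ages = np.array([3, 17, 25, 42, 68, 90])
edges = np.array([0, 18, 40, 65, 120])   # hypothetical bin edges
labels = np.array(["child", "young adult", "middle-aged", "senior"])

# With the default right=False, digitize returns i such that
# edges[i-1] <= x < edges[i], so valid values map to 1..len(edges)-1.
bin_idx = np.digitize(ages, edges)
named = labels[bin_idx - 1]              # shift to 0-based label index

# np.histogram counts how many values fall into each bin.
counts, _ = np.histogram(ages, bins=edges)
```

For equal-width bins, the edges could instead be generated with np.linspace(ages.min(), ages.max(), num_bins + 1), where num_bins is whatever bin count the analysis calls for.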

Which NumPy skills should you evaluate during the interview phase?

While a single interview cannot encapsulate all the nuances of a candidate's capabilities, evaluating specific skills related to NumPy can provide significant insights into their potential fit. These core skills are essential for understanding the applicant's proficiency in handling numerical data and performing calculations, which are central to data analysis tasks.

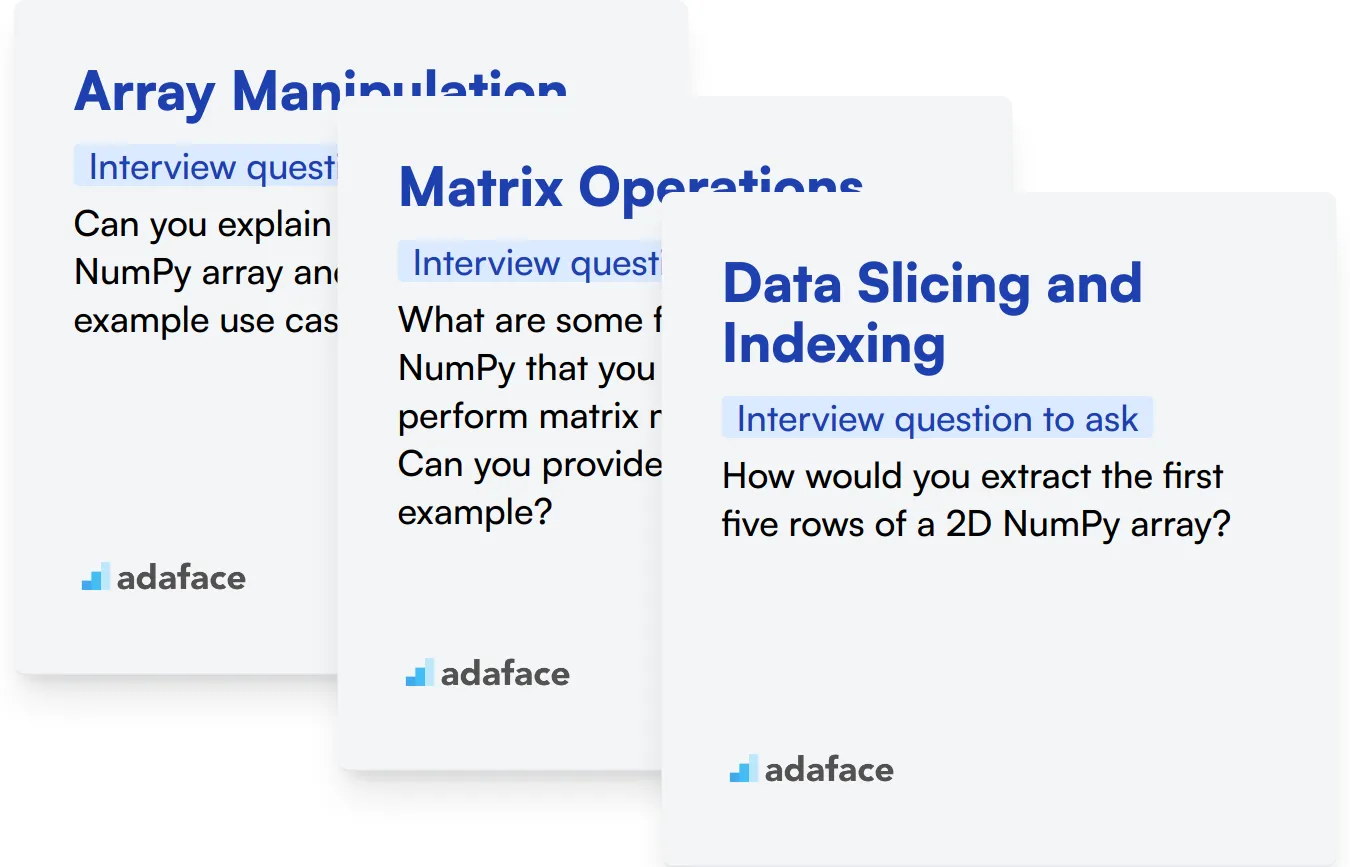

Array Manipulation

An assessment test that includes relevant multiple-choice questions can help filter candidates based on their array manipulation skills. You may find an ideal test in our library at NumPy Test.

Additionally, consider asking targeted interview questions to evaluate this skill effectively. One such question is:

Can you explain how to reshape a NumPy array and provide an example use case?

When asking this question, look for clarity in their explanation and whether they can articulate the benefits of reshaping arrays, such as preparing data for machine learning models or altering array dimensions for specific computations.

Matrix Operations

To assess this skill, consider using a focused assessment that includes relevant MCQs. Our library offers a selection of tests, including a NumPy Test that can be utilized for this purpose.

You might also want to ask specific questions about matrix operations in the interview. For example:

What are some functions in NumPy that you can use to perform matrix multiplication? Can you provide a simple example?

When evaluating their response, pay attention to their knowledge of functions like dot() and matmul(), and whether they can demonstrate these concepts with practical examples.
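A simple example of the kind a candidate might give, using two arbitrary 2x2 matrices. For 2D arrays, np.dot(), np.matmul(), and the @ operator give the same result, while * is element-wise multiplication.

```python
import numpy as np

A = np.array([[1, 2],
              [3, 4]])
B = np.array([[5, 6],
              [7, 8]])

product = A @ B            # matrix product
same_dot = np.dot(A, B)    # equivalent for 2-D arrays
same_matmul = np.matmul(A, B)

elementwise = A * B        # not a matrix product
```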

Data Slicing and Indexing

To gauge this skill, an assessment featuring multiple-choice questions can be beneficial. Refer to our NumPy Test for relevant questions that focus on data slicing and indexing.

Consider asking the candidate a targeted question, such as:

How would you extract the first five rows of a 2D NumPy array?

When candidates respond, look for their understanding of array slicing syntax and whether they can articulate how slicing works in different dimensions of the array.
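One possible answer, sketched on an arbitrary 8x5 array, along with slices along the other dimension for comparison.

```python
import numpy as np

arr = np.arange(40).reshape(8, 5)   # 8 rows, 5 columns

first_five_rows = arr[:5]           # same as arr[:5, :] or arr[0:5]
first_column = arr[:, 0]            # every row, column 0
block = arr[:5, 1:3]                # first five rows, columns 1 and 2
```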

Hire Top NumPy Talent with Skills Tests and Targeted Interview Questions

When hiring someone with NumPy skills, it's important to accurately assess their abilities. This ensures you find candidates who can truly contribute to your data analysis and scientific computing projects.

A quick and effective way to evaluate NumPy proficiency is through skills tests. Adaface's NumPy test can help you objectively measure candidates' knowledge and practical skills.

After using the test to identify top performers, you can invite them for interviews. Use the NumPy interview questions from this post to dive deeper into their experience and problem-solving abilities.

Ready to streamline your hiring process for NumPy experts? Sign up for Adaface to access our NumPy test and other data science assessments. Find the right talent for your team today.


NumPy Interview Questions FAQs

The questions cover basic, junior, intermediate, and mid-tier skill levels for data analysts and scientists.

The questions are grouped by difficulty level and topic, including arrays, matrices, and data manipulation.

Yes, the questions are suitable for various data science positions, from junior analysts to experienced scientists.

Yes, answers are included for many of the questions to help assess candidate responses.

Use them to structure your technical interviews and evaluate candidates' NumPy knowledge and problem-solving skills.
