Assessing a candidate's Natural Language Processing (NLP) skills during an interview can be daunting. Yet, asking the right questions is key to uncovering their true capabilities.

This blog post provides a comprehensive set of NLP interview questions catered to different skill levels, from basic to intermediate, and covers technical definitions as well as machine learning techniques. It's designed to help you evaluate applicants' knowledge efficiently and make informed hiring decisions.

By using these questions, you can better gauge the expertise of potential hires and ensure they fit your team's needs. Additionally, consider leveraging Adaface's NLP online test to streamline your recruitment process.

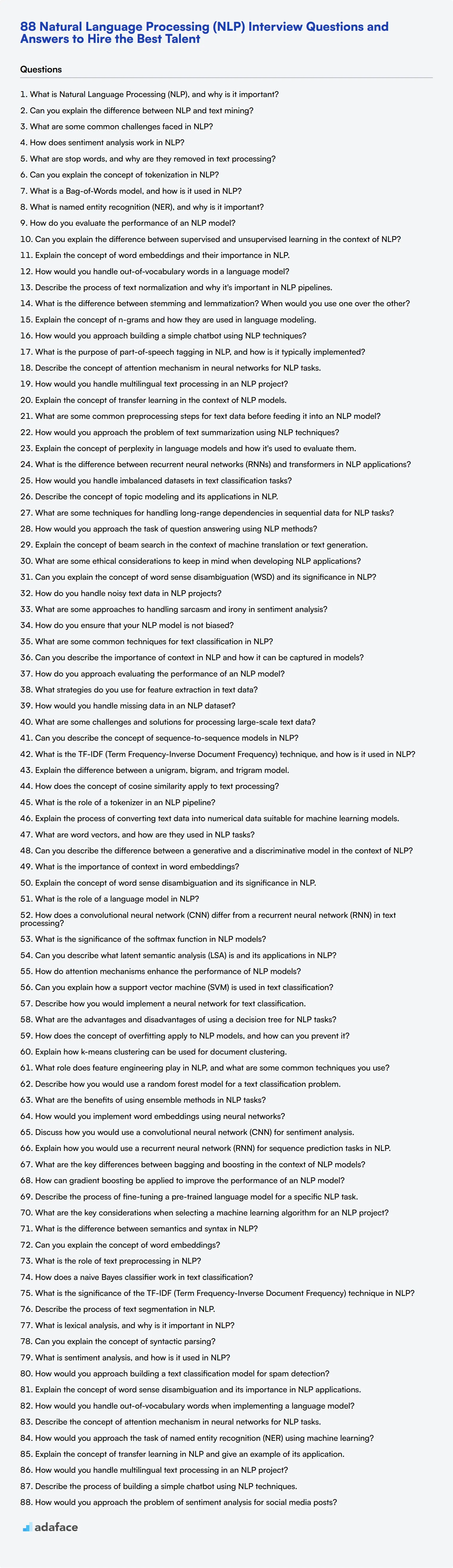

10 basic Natural Language Processing (NLP) interview questions and answers to assess candidates

To evaluate whether your candidates have a solid understanding of Natural Language Processing (NLP) fundamentals, refer to this list of essential interview questions. These questions are designed to gauge basic knowledge and practical understanding, making it easier for you to identify the right talent for your team.

1. What is Natural Language Processing (NLP), and why is it important?

Natural Language Processing (NLP) is a field of artificial intelligence that focuses on the interaction between computers and humans through natural language. It involves teaching machines to understand, interpret, and generate human language well enough to be useful for real tasks.

NLP is important because it enables applications like translation services, sentiment analysis, chatbots, and more, which can process large amounts of data quickly and efficiently. It helps in automating routine tasks, improving customer service, and providing insights from unstructured data.

When evaluating the candidate’s response, look for a clear and concise explanation, an understanding of practical applications, and the ability to relate NLP to real-world scenarios and benefits.

2. Can you explain the difference between NLP and text mining?

NLP and text mining are closely related but serve different purposes. NLP focuses on understanding and generating human language using computational techniques. It's about enabling machines to comprehend and respond in human language.

Text mining, on the other hand, involves extracting useful information from text data. It's more about analyzing large volumes of text to find patterns, trends, or insights.

An ideal candidate should be able to distinguish between the objectives and processes of NLP and text mining. Look for explanations that include examples of applications of both fields.

3. What are some common challenges faced in NLP?

Some common challenges in NLP include handling ambiguity in language, dealing with different languages and dialects, understanding context and sentiment, and managing unstructured data.

Other challenges include the need for large annotated datasets for training models and ensuring privacy and ethical considerations in data usage.

Candidates should mention real-world challenges they have encountered and how they addressed them. This shows practical experience and problem-solving skills.

4. How does sentiment analysis work in NLP?

Sentiment analysis in NLP involves identifying and categorizing opinions expressed in a piece of text to determine whether the sentiment is positive, negative, or neutral.

It typically involves techniques such as tokenization, stopword removal, and the use of algorithms like machine learning classifiers or lexicon-based approaches to analyze the sentiment.

Look for candidates who can explain the steps and methods used in sentiment analysis clearly, and provide examples of tools or libraries they have used.
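
As a rough illustration of the lexicon-based route, the sketch below tokenizes text, removes stop words, and counts hits against two tiny word lists. The lexicon, the stop-word list, and the function name `classify_sentiment` are all made up for this example; real lexicons are far larger and usually carry per-word scores.

```python
import re

# Tiny illustrative lexicons (assumptions for this sketch, not a real resource).
POSITIVE = {"good", "great", "love", "excellent", "happy"}
NEGATIVE = {"bad", "terrible", "hate", "poor", "awful"}
STOP_WORDS = {"the", "is", "a", "an", "and", "this", "i", "it", "was"}


def classify_sentiment(text):
    """Label text as positive, negative, or neutral by counting lexicon hits."""
    tokens = re.findall(r"[a-z']+", text.lower())        # tokenization
    tokens = [t for t in tokens if t not in STOP_WORDS]  # stop-word removal
    score = sum(t in POSITIVE for t in tokens) - sum(t in NEGATIVE for t in tokens)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(classify_sentiment("I love this phone, the camera is great"))  # positive
print(classify_sentiment("The battery is terrible"))                 # negative
print(classify_sentiment("It arrived on Tuesday"))                   # neutral
```

A counting approach like this ignores negation and sarcasm, which is one reason candidates often move on to trained classifiers.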

5. What are stop words, and why are they removed in text processing?

Stop words are common words that occur frequently in a language but carry little meaning by themselves, such as 'and', 'the', and 'is'. They are often removed in text processing to reduce the dimensionality of the feature space, which for many tasks also improves the speed and performance of NLP models.

Removing stop words helps in focusing on the more meaningful words that contribute to the context and content of the text, making the analysis more efficient.

Candidates should demonstrate an understanding of the importance of stop words and provide examples of situations where removing them improved their results.
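
A minimal sketch of stop-word filtering, assuming a small hand-written stop list (the `STOP_WORDS` set and the `remove_stop_words` helper are illustrative names, not taken from any particular library):

```python
# Hand-picked stop list for illustration; library lists are much longer.
STOP_WORDS = {"a", "an", "and", "the", "is", "of", "to", "in", "on"}


def remove_stop_words(tokens):
    """Return the tokens with stop words dropped (case-insensitive match)."""
    return [t for t in tokens if t.lower() not in STOP_WORDS]


tokens = "The cat is on the mat".split()
print(remove_stop_words(tokens))  # ['cat', 'mat']
```

A good follow-up question is when removal hurts: some stop lists include negations such as 'not', and dropping those can flip the meaning of a sentence in sentiment tasks.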

6. Can you explain the concept of tokenization in NLP?

Tokenization is the process of breaking down a text into smaller units called tokens, which can be words, phrases, or even sentences. It is a crucial step in preprocessing text data for NLP tasks.

Tokenization helps in converting unstructured text into a structured format that can be easily analyzed by machines. It involves splitting text based on delimiters like spaces, punctuation marks, or using more advanced techniques for languages that do not use spaces.

Candidates should explain the importance of tokenization and mention any tools or libraries they have used for this purpose. Look for an understanding of different types of tokenization and their applications.
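
To make the delimiter-based idea concrete, here is a small sketch of word-level and sentence-level tokenization using regular expressions. The function names are hypothetical, and the sentence splitter is deliberately naive: it assumes every '.', '!' or '?' followed by whitespace ends a sentence, so abbreviations will break it.

```python
import re


def word_tokenize(text):
    """Split text into word tokens and single punctuation tokens."""
    return re.findall(r"\w+|[^\w\s]", text)


def sentence_tokenize(text):
    """Split on whitespace that follows '.', '!' or '?' (naive heuristic)."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


text = "NLP is fun. Tokenizers split text!"
print(word_tokenize(text))
# ['NLP', 'is', 'fun', '.', 'Tokenizers', 'split', 'text', '!']
print(sentence_tokenize(text))
# ['NLP is fun.', 'Tokenizers split text!']
```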

7. What is a Bag-of-Words model, and how is it used in NLP?

The Bag-of-Words (BoW) model is a simple and widely used method in NLP for representing text data. It involves converting text into a vector of word frequencies, ignoring grammar and word order.

Each unique word in the text corpus becomes a feature, and the vector represents the count of each word in a document. This model is used for tasks like text classification and clustering.

Candidates should explain the BoW model clearly and mention its limitations, such as the loss of context and word order. Look for examples of how they have used BoW in their projects.
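
The word-order limitation is easy to demonstrate. In the sketch below (helper names are illustrative), two sentences with opposite meanings produce identical count vectors:

```python
from collections import Counter


def build_vocabulary(documents):
    """Map each unique lowercase word in the corpus to a column index."""
    words = sorted({w for doc in documents for w in doc.lower().split()})
    return {w: i for i, w in enumerate(words)}


def bag_of_words(document, vocabulary):
    """Return a count vector for one document; out-of-vocabulary words are ignored."""
    counts = Counter(document.lower().split())
    return [counts.get(word, 0) for word in vocabulary]


docs = ["the dog bit the man", "the man bit the dog"]
vocab = build_vocabulary(docs)
vectors = [bag_of_words(d, vocab) for d in docs]

print(vocab)    # {'bit': 0, 'dog': 1, 'man': 2, 'the': 3}
print(vectors)  # [[1, 1, 1, 2], [1, 1, 1, 2]]
```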

8. What is named entity recognition (NER), and why is it important?

Named Entity Recognition (NER) is an NLP technique used to identify and classify named entities in text into predefined categories like names of persons, organizations, locations, dates, etc.

NER is important because it helps in extracting structured information from unstructured text, making it easier to analyze and understand. It is used in applications like information retrieval, question answering, and more.

Candidates should provide a clear explanation of NER and mention any tools or libraries they have used for NER tasks. Look for examples of practical applications and challenges faced.

9. How do you evaluate the performance of an NLP model?

The performance of an NLP model is typically evaluated using metrics such as accuracy, precision, recall, F1-score, and sometimes specific metrics like BLEU score for machine translation.

It is also important to evaluate the model on a diverse set of data to ensure it generalizes well and to perform error analysis to understand where the model fails.

Candidates should mention the importance of using multiple metrics and provide examples of how they have evaluated their models. Look for an understanding of the trade-offs between different metrics.
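
For interviewers who want a concrete reference point, this sketch computes accuracy, precision, recall, and F1 for a binary task from scratch. The labels are invented toy data, and `precision_recall_f1` is a hypothetical helper name.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Compute precision, recall, and F1 for the chosen positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Toy labels: 1 = positive class, 0 = negative class.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 0, 0, 1, 1, 0]

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
precision, recall, f1 = precision_recall_f1(y_true, y_pred)
print(f"accuracy={accuracy:.3f} precision={precision:.3f} recall={recall:.3f} f1={f1:.3f}")
```

Here accuracy (5 of 8 correct) looks better than recall (2 of 4 positives found), which is the kind of trade-off a candidate should be able to talk through.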

10. Can you explain the difference between supervised and unsupervised learning in the context of NLP?

In the context of NLP, supervised learning involves training a model on a labeled dataset, where the input and the corresponding output are known. It is commonly used for tasks like text classification, sentiment analysis, and named entity recognition.

Unsupervised learning, on the other hand, involves training a model on an unlabeled dataset, so the model must discover structure and patterns in the data without being told the correct output. It is used for tasks like topic modeling and clustering.

Candidates should provide clear definitions and examples of both types of learning. Look for an understanding of when to use each approach and the challenges associated with them.

20 Natural Language Processing (NLP) interview questions to ask junior engineers

When interviewing junior engineers for NLP positions, it's crucial to assess their foundational knowledge and practical skills. Use these questions to gauge candidates' understanding of core NLP concepts and their ability to apply them in real-world scenarios.

- Explain the concept of word embeddings and their importance in NLP.

- How would you handle out-of-vocabulary words in a language model?

- Describe the process of text normalization and why it's important in NLP pipelines.

- What is the difference between stemming and lemmatization? When would you use one over the other?

- Explain the concept of n-grams and how they are used in language modeling.

- How would you approach building a simple chatbot using NLP techniques?

- What is the purpose of part-of-speech tagging in NLP, and how is it typically implemented?

- Describe the concept of attention mechanism in neural networks for NLP tasks.

- How would you handle multilingual text processing in an NLP project?

- Explain the concept of transfer learning in the context of NLP models.

- What are some common preprocessing steps for text data before feeding it into an NLP model?

- How would you approach the problem of text summarization using NLP techniques?

- Explain the concept of perplexity in language models and how it's used to evaluate them.

- What is the difference between recurrent neural networks (RNNs) and transformers in NLP applications?

- How would you handle imbalanced datasets in text classification tasks?

- Describe the concept of topic modeling and its applications in NLP.

- What are some techniques for handling long-range dependencies in sequential data for NLP tasks?

- How would you approach the task of question answering using NLP methods?

- Explain the concept of beam search in the context of machine translation or text generation.

- What are some ethical considerations to keep in mind when developing NLP applications?

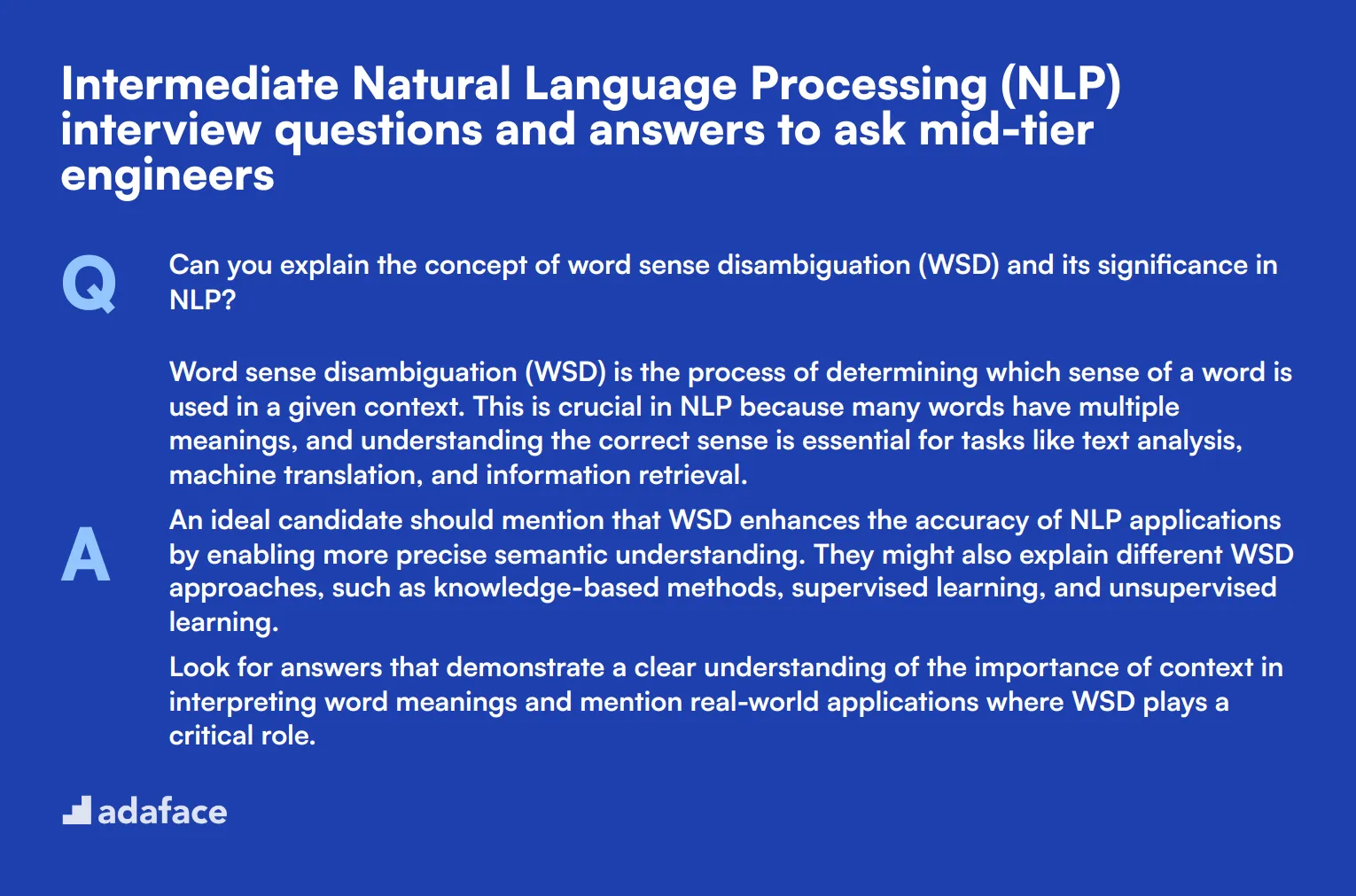

10 intermediate Natural Language Processing (NLP) interview questions and answers to ask mid-tier engineers

To gauge whether your mid-tier engineering candidates have the right proficiency in Natural Language Processing, these 10 intermediate NLP interview questions will be your go-to resource. This list will help you assess their practical knowledge and problem-solving abilities, ensuring they can handle the complexities of real-world NLP tasks.

1. Can you explain the concept of word sense disambiguation (WSD) and its significance in NLP?

Word sense disambiguation (WSD) is the process of determining which sense of a word is used in a given context. This is crucial in NLP because many words have multiple meanings, and understanding the correct sense is essential for tasks like text analysis, machine translation, and information retrieval.

An ideal candidate should mention that WSD enhances the accuracy of NLP applications by enabling more precise semantic understanding. They might also explain different WSD approaches, such as knowledge-based methods, supervised learning, and unsupervised learning.

Look for answers that demonstrate a clear understanding of the importance of context in interpreting word meanings and mention real-world applications where WSD plays a critical role.

2. How do you handle noisy text data in NLP projects?

Handling noisy text data involves several preprocessing steps to clean and prepare the data for analysis. Common techniques include removing or correcting misspellings, filtering out non-textual elements (like HTML tags), and normalizing text by converting it to lowercase.

Candidates might also mention using regular expressions for pattern matching and removing unnecessary characters. In addition, they could discuss the application of more advanced techniques, such as using machine learning models to detect and correct noise.

An ideal response should highlight the importance of preprocessing in improving model accuracy and efficiency. Look for examples of how candidates have successfully managed noisy data in past projects and any specific tools or libraries they used.
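
A cleaning pass along these lines might look like the following sketch. The specific rules and their order are assumptions for illustration; a real project would tune them to its data, and spelling correction is left out entirely.

```python
import html
import re


def clean_text(raw):
    """Apply a few common noise-removal steps to a raw string."""
    text = html.unescape(raw)                   # decode entities such as &amp;
    text = re.sub(r"<[^>]+>", " ", text)        # strip HTML tags
    text = re.sub(r"https?://\S+", " ", text)   # strip URLs
    text = text.lower()                         # normalize case
    text = re.sub(r"(.)\1{2,}", r"\1\1", text)  # squeeze runs of 3+ repeated characters to 2
    text = re.sub(r"[^a-z0-9\s']", " ", text)   # drop remaining symbols
    return re.sub(r"\s+", " ", text).strip()    # collapse whitespace


print(clean_text("<p>Sooooo GOOD!!! Visit https://example.com &amp; enjoy</p>"))
# soo good visit enjoy
```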

3. What are some approaches to handling sarcasm and irony in sentiment analysis?

Handling sarcasm and irony in sentiment analysis is challenging because these forms of expression often convey sentiments opposite to the literal meaning of the words used. One approach is to use contextual information and advanced models like deep learning that can capture subtle nuances in the text.

Candidates might also discuss the use of additional data sources, such as user profiles or historical data, to better understand the context. Another approach is to incorporate features like punctuation marks, emojis, and hashtags, which can provide additional clues about the intended sentiment.

Look for candidates who can articulate the difficulties involved and propose practical solutions. They should demonstrate an understanding of the limitations of current techniques and discuss ongoing research or emerging methods in this area.

4. How do you ensure that your NLP model is not biased?

Ensuring an NLP model is not biased involves several steps, starting with carefully curating a diverse and representative training dataset. It's also essential to monitor for bias during the data preprocessing and model training phases.

Candidates might mention techniques like re-sampling the data, using fairness-aware algorithms, or applying debiasing methods to mitigate bias. They could also discuss the importance of continuous evaluation and auditing of the model's performance on different demographic groups.

An ideal answer should reflect an awareness of the ethical implications of biased models and propose concrete steps to identify and reduce bias. Look for candidates who can provide examples of how they've addressed bias in their previous work.

5. What are some common techniques for text classification in NLP?

Common techniques for text classification in NLP include traditional machine learning methods like Naive Bayes, Support Vector Machines (SVM), and Decision Trees. These methods often require feature extraction techniques such as Bag-of-Words, TF-IDF, or word embeddings.

In recent years, deep learning approaches have become popular for text classification because of their ability to capture complex patterns in the data. These include Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), particularly Long Short-Term Memory (LSTM) networks, and transformers.

Look for candidates who can compare and contrast these approaches, discussing their advantages and limitations. They should also mention the importance of feature engineering and preprocessing in achieving good classification performance.

6. Can you describe the importance of context in NLP and how it can be captured in models?

Context is crucial in NLP because the meaning of words and phrases often depends on their surrounding text. Capturing context can significantly improve the performance of NLP models, particularly for tasks like machine translation, question answering, and text summarization.

Techniques for capturing context include word embeddings like Word2Vec or GloVe, which are learned from the contexts words appear in and represent each word as a single vector in a continuous space that captures semantic relationships. Because that vector is the same wherever the word appears, more advanced contextual embeddings (e.g., BERT or GPT) use transformers to compute a word's representation dynamically from the entire sentence or paragraph.

An ideal candidate should highlight the role of context in understanding nuances and ambiguities in language. They should provide examples of how context-aware models have improved performance in specific NLP tasks and discuss any trade-offs involved in using these models.

7. How do you approach evaluating the performance of an NLP model?

Evaluating the performance of an NLP model typically involves using metrics like accuracy, precision, recall, and F1-score. The choice of metric depends on the specific task and the importance of false positives versus false negatives.

Candidates might also mention the use of cross-validation to ensure the model's robustness and the creation of a validation set that is representative of the test data. For more complex tasks, human evaluation or domain-specific metrics might be necessary.

Look for answers that demonstrate a thorough understanding of evaluation metrics and their appropriate use. Candidates should also discuss the importance of continuous monitoring and the potential need for model retraining as new data becomes available.

8. What strategies do you use for feature extraction in text data?

Feature extraction in text data involves transforming raw text into numerical representations that can be used by machine learning models. Common techniques include Bag-of-Words, TF-IDF, and word embeddings like Word2Vec, GloVe, or FastText.

Candidates might also discuss more advanced methods like contextual embeddings (e.g., BERT or GPT) and the use of domain-specific lexicons or ontologies. They could mention the importance of capturing both syntactic and semantic information in the features.

An ideal response should highlight the importance of selecting the right feature extraction technique based on the specific task and dataset. Look for examples of how candidates have used different methods in their previous projects and the impact on model performance.

9. How would you handle missing data in an NLP dataset?

Handling missing data in an NLP dataset can be approached in several ways, depending on the extent and nature of the missing data. Simple strategies include removing records with missing values or imputing missing data with a placeholder (e.g., 'UNK' for unknown).

More sophisticated methods involve using machine learning models to predict the missing values or leveraging context from surrounding text to fill in gaps. Candidates might also mention the use of data augmentation techniques to generate synthetic data.

Look for responses that demonstrate an understanding of the trade-offs involved in different approaches. Candidates should discuss the impact of missing data on model performance and provide examples of how they've handled similar issues in the past.

10. What are some challenges and solutions for processing large-scale text data?

Processing large-scale text data presents several challenges, including computational limitations, memory constraints, and the need for efficient data storage and retrieval. Common solutions involve using distributed computing frameworks like Hadoop or Spark to parallelize processing tasks.

Candidates might also discuss the use of cloud-based services and infrastructure to scale processing capabilities, as well as techniques like data sampling or dimensionality reduction to manage data size. They could mention the importance of optimizing algorithms and code for efficiency.

An ideal answer should highlight practical experience with handling large-scale text data, including specific tools and techniques used. Look for examples of how candidates have overcome scalability challenges in their previous work and any lessons learned.

15 Natural Language Processing (NLP) questions related to technical definitions

To determine whether your applicants have the right technical understanding of Natural Language Processing (NLP), ask them some of these NLP interview questions about technical definitions. These questions focus on key concepts and techniques in NLP and are designed to help you identify candidates with strong foundational knowledge. For more information on what skills to look for, check out our NLP engineer job description.

- Can you describe the concept of sequence-to-sequence models in NLP?

- What is the TF-IDF (Term Frequency-Inverse Document Frequency) technique, and how is it used in NLP?

- Explain the difference between a unigram, bigram, and trigram model.

- How does the concept of cosine similarity apply to text processing?

- What is the role of a tokenizer in an NLP pipeline?

- Explain the process of converting text data into numerical data suitable for machine learning models.

- What are word vectors, and how are they used in NLP tasks?

- Can you describe the difference between a generative and a discriminative model in the context of NLP?

- What is the importance of context in word embeddings?

- Explain the concept of word sense disambiguation and its significance in NLP.

- What is the role of a language model in NLP?

- How does a convolutional neural network (CNN) differ from a recurrent neural network (RNN) in text processing?

- What is the significance of the softmax function in NLP models?

- Can you describe what latent semantic analysis (LSA) is and its applications in NLP?

- How do attention mechanisms enhance the performance of NLP models?

15 Natural Language Processing (NLP) questions related to machine learning techniques

To determine whether your applicants have the right skills to navigate machine learning techniques in NLP, ask them some of these questions. This list will help you gauge their practical knowledge and problem-solving abilities, ensuring they are well-equipped for the role. For a detailed understanding of the skills required, refer to the NLP engineer job description.

- Can you explain how a support vector machine (SVM) is used in text classification?

- Describe how you would implement a neural network for text classification.

- What are the advantages and disadvantages of using a decision tree for NLP tasks?

- How does the concept of overfitting apply to NLP models, and how can you prevent it?

- Explain how k-means clustering can be used for document clustering.

- What role does feature engineering play in NLP, and what are some common techniques you use?

- Describe how you would use a random forest model for a text classification problem.

- What are the benefits of using ensemble methods in NLP tasks?

- How would you implement word embeddings using neural networks?

- Discuss how you would use a convolutional neural network (CNN) for sentiment analysis.

- Explain how you would use a recurrent neural network (RNN) for sequence prediction tasks in NLP.

- What are the key differences between bagging and boosting in the context of NLP models?

- How can gradient boosting be applied to improve the performance of an NLP model?

- Describe the process of fine-tuning a pre-trained language model for a specific NLP task.

- What are the key considerations when selecting a machine learning algorithm for an NLP project?

9 Natural Language Processing (NLP) interview questions and answers related to technical definitions

To determine whether your applicants have the right technical understanding of Natural Language Processing (NLP), ask them some of these interview questions about technical definitions. This list will help you gauge their fundamental knowledge and see if they can translate complex concepts into simple explanations.

1. What is the difference between semantics and syntax in NLP?

Semantics refers to the meaning of words and sentences, while syntax is about the arrangement of words to form a sentence. In other words, semantics is concerned with what the text actually means, whereas syntax focuses on the structure.

An ideal candidate should be able to distinguish between the two and provide examples of how each is used in NLP. Look for clarity in their explanations and an understanding of how these concepts apply in practical NLP tasks.

2. Can you explain the concept of word embeddings?

Word embeddings are a type of word representation that allows words to be represented as vectors in a continuous space. Because the vectors are learned from the contexts in which words appear, words with similar meanings or grammatical roles end up close together, so the representation captures semantic and syntactic similarity and relationships between words.

A strong candidate will discuss how word embeddings like Word2Vec or GloVe are used to improve the performance of NLP models by providing a richer representation of text data. They may also mention how these embeddings can reduce the dimensionality of the text data while preserving its meaning.
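
The idea that similar words sit close together is usually measured with cosine similarity. The sketch below uses hand-picked three-dimensional vectors purely for illustration; they are not taken from Word2Vec, GloVe, or any trained model, where vectors typically have hundreds of dimensions.

```python
import math

# Hypothetical toy vectors, chosen by hand for this example only.
embeddings = {
    "king": [0.8, 0.6, 0.1],
    "queen": [0.7, 0.7, 0.1],
    "apple": [0.1, 0.2, 0.9],
}


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


sim_royal = cosine_similarity(embeddings["king"], embeddings["queen"])
sim_fruit = cosine_similarity(embeddings["king"], embeddings["apple"])
print(f"king vs queen: {sim_royal:.3f}")
print(f"king vs apple: {sim_fruit:.3f}")
```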

3. What is the role of text preprocessing in NLP?

Text preprocessing involves cleaning and preparing text data for analysis. It includes steps like removing punctuation, converting text to lowercase, tokenization, removing stop words, and text normalization.

Candidates should highlight the importance of preprocessing in improving the accuracy and efficiency of NLP models. They should be able to explain how preprocessing helps in reducing noise and making the data more manageable and meaningful for model training.

Look for candidates who can articulate the various preprocessing techniques and their significance in creating robust NLP pipelines.

4. How does a naive Bayes classifier work in text classification?

A naive Bayes classifier is based on applying Bayes' theorem with strong (naive) independence assumptions between the features. In the context of text classification, it calculates the probability of each class given a set of words (features) and assigns the class with the highest probability to the text.

Ideal answers should demonstrate an understanding of the probabilistic nature of the model and how it uses word frequencies to make predictions. They may mention its applications in spam detection and sentiment analysis.

Candidates should also be aware of the strengths and limitations of the naive Bayes classifier, such as its simplicity and efficiency, but also its assumption of feature independence.
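
For reference, a multinomial naive Bayes classifier fits in a few dozen lines. This is a sketch under simplifying assumptions: whitespace tokenization, add-one (Laplace) smoothing, words unseen in training are skipped, and the four training messages are invented.

```python
import math
from collections import Counter, defaultdict


def train_naive_bayes(examples):
    """examples: list of (text, label). Returns (class_counts, word_counts, vocabulary)."""
    class_counts = Counter()
    word_counts = defaultdict(Counter)
    vocabulary = set()
    for text, label in examples:
        class_counts[label] += 1
        for word in text.lower().split():
            word_counts[label][word] += 1
            vocabulary.add(word)
    return class_counts, word_counts, vocabulary


def predict(text, class_counts, word_counts, vocabulary):
    """Return the label with the highest log posterior for the text."""
    total_docs = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for label in class_counts:
        score = math.log(class_counts[label] / total_docs)  # log prior
        total_words = sum(word_counts[label].values())
        for word in text.lower().split():
            if word not in vocabulary:
                continue  # skip words never seen in training
            # Add-one smoothing keeps unseen (word, class) pairs from zeroing the product.
            likelihood = (word_counts[label][word] + 1) / (total_words + len(vocabulary))
            score += math.log(likelihood)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


training = [
    ("win cash prize now", "spam"),
    ("claim your free prize", "spam"),
    ("meeting agenda for monday", "ham"),
    ("lunch on monday", "ham"),
]
model = train_naive_bayes(training)
print(predict("free cash prize", *model))  # spam
print(predict("monday meeting", *model))   # ham
```

Summing log probabilities instead of multiplying raw probabilities avoids numerical underflow on long documents, a detail strong candidates tend to mention.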

5. What is the significance of the TF-IDF (Term Frequency-Inverse Document Frequency) technique in NLP?

TF-IDF is a statistical measure used to evaluate the importance of a word in a document relative to a collection of documents (corpus). It combines term frequency (how often a word appears in a document) and inverse document frequency (how common or rare the word is across all documents).

Candidates should explain how TF-IDF helps in identifying important words that are not too common across documents. They might discuss its role in information retrieval and text mining applications.

Look for candidates who can clearly explain the formula and its components and how TF-IDF improves the relevance of text features for tasks like document classification and clustering.
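
One common textbook form of the score is tf(t, d) × log(N / df(t)), where N is the number of documents and df(t) is the number of documents containing term t. The sketch below implements that unsmoothed form; libraries often use smoothed or normalized variants, so exact numbers will differ from theirs.

```python
import math
from collections import Counter


def tf_idf(documents):
    """Return one {term: weight} dict per document using tf * log(N / df)."""
    tokenized = [doc.lower().split() for doc in documents]
    n_docs = len(tokenized)
    # Document frequency: number of documents each term appears in.
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))
    weights = []
    for tokens in tokenized:
        counts = Counter(tokens)
        weights.append({
            term: (count / len(tokens)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return weights


docs = ["the cat sat", "the dog barked", "the cat purred"]
scores = tf_idf(docs)
for term, weight in sorted(scores[0].items(), key=lambda item: -item[1]):
    print(f"{term}: {weight:.3f}")
```

In the first document, 'the' appears in every document and scores zero, 'cat' appears in two of three and scores low, and 'sat' is unique to the document and scores highest.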

6. Describe the process of text segmentation in NLP.

Text segmentation involves dividing text into meaningful units such as words, sentences, or topics. This process is crucial for various NLP tasks, including tokenization, sentence boundary detection, and topic modeling.

Strong candidates should discuss different segmentation techniques and tools, such as rule-based methods, machine learning models, and deep learning approaches.

Look for an understanding of how segmentation impacts downstream NLP applications and the challenges involved, such as handling ambiguities and different languages.

7. What is lexical analysis, and why is it important in NLP?

Lexical analysis is the process of converting a sequence of characters into a sequence of tokens. It involves identifying and categorizing individual words or symbols in the text.

Candidates should explain how lexical analysis serves as the foundation for more complex NLP tasks by providing a structured representation of the text. They might mention its importance in preprocessing and parsing stages.

Ideal answers will include examples of tools or libraries used for lexical analysis and discuss common challenges, such as dealing with special characters and different language scripts.

8. Can you explain the concept of syntactic parsing?

Syntactic parsing, also known as parsing or syntax analysis, is the process of analyzing the grammatical structure of a sentence. It involves identifying the syntactic relationships between words and generating a parse tree or dependency graph.

Candidates should discuss how syntactic parsing helps in understanding the structure and meaning of sentences, enabling downstream tasks like machine translation and information extraction.

Look for an understanding of different parsing techniques (e.g., constituency parsing, dependency parsing) and their applications in various NLP tasks.

9. What is sentiment analysis, and how is it used in NLP?

Sentiment analysis is the process of determining the sentiment or emotional tone expressed in a piece of text. It involves classifying text as positive, negative, or neutral based on the words and phrases used.

Strong candidates should explain how sentiment analysis is used to gauge public opinion, monitor brand reputation, and analyze customer feedback. They might discuss techniques like rule-based methods, machine learning classifiers, and deep learning models.

Look for an understanding of the challenges in sentiment analysis, such as handling sarcasm, context, and varying expressions of sentiment. Candidates should also be aware of the importance of training data and model evaluation metrics.

9 Natural Language Processing (NLP) interview questions and answers related to machine learning techniques

Ready to dive into the world of Natural Language Processing and machine learning? These nine questions will help you assess candidates' understanding of key NLP concepts and techniques. Use them to gauge how well applicants can apply machine learning approaches to language-related challenges. Remember, the best responses will blend technical knowledge with practical application!

1. How would you approach building a text classification model for spam detection?

A strong candidate should outline a step-by-step approach for building a text classification model for spam detection. They might describe the following process:

- Data collection and preprocessing: Gather a large dataset of both spam and non-spam emails. Clean the data by removing irrelevant information, handling missing values, and normalizing text.

- Feature extraction: Use techniques like TF-IDF or word embeddings to convert text into numerical features.

- Model selection: Choose appropriate algorithms such as Naive Bayes, SVM, or neural networks for classification.

- Training and validation: Split the data into training and validation sets, train the model, and evaluate its performance using metrics like accuracy, precision, and recall.

- Hyperparameter tuning: Optimize the model's parameters to improve performance.

- Testing and deployment: Evaluate the model on a separate test set and prepare it for production use.

Look for candidates who emphasize the importance of data quality, feature engineering, and model evaluation. They should also mention potential challenges like handling imbalanced datasets or dealing with evolving spam tactics.

2. Explain the concept of word sense disambiguation and its importance in NLP applications.

Word sense disambiguation (WSD) is the task of determining which sense or meaning of a word is being used in a particular context. This is crucial in NLP because many words have multiple meanings, and understanding the correct sense is essential for accurate language processing.

For example, the word 'bank' could refer to a financial institution or the edge of a river. WSD helps NLP systems choose the correct meaning based on the surrounding context.

Look for candidates who can explain the importance of WSD in various NLP applications, such as machine translation, information retrieval, and text summarization. They should also be able to discuss different approaches to WSD, including knowledge-based methods, supervised learning techniques, and more recent deep learning models.
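
The 'bank' example can be turned into a small knowledge-based sketch in the spirit of the simplified Lesk algorithm: pick the sense whose dictionary gloss shares the most words with the sentence. The two-sense inventory, the glosses, and the stop list below are invented for illustration; a real system would draw them from a lexical resource.

```python
# Hypothetical mini sense inventory with hand-written glosses.
SENSES = {
    "bank": {
        "financial_institution": "an institution that accepts deposits and lends money",
        "river_edge": "the sloping land beside a river or lake",
    }
}
STOP_WORDS = {"a", "an", "the", "and", "or", "that", "of", "to", "at", "by", "i", "we"}


def content_words(text):
    """Lowercase, whitespace-split, and drop stop words."""
    return {w for w in text.lower().split() if w not in STOP_WORDS}


def disambiguate(word, sentence):
    """Pick the sense whose gloss overlaps most with the sentence's content words."""
    context = content_words(sentence)
    overlaps = {
        sense: len(context & content_words(gloss))
        for sense, gloss in SENSES[word].items()
    }
    return max(overlaps, key=overlaps.get)


print(disambiguate("bank", "I went to the bank to deposit money"))  # financial_institution
print(disambiguate("bank", "We sat by the bank of the river"))      # river_edge
```

Exact word overlap is brittle ('deposit' does not match 'deposits' here), which motivates the supervised and deep learning approaches mentioned above.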

3. How would you handle out-of-vocabulary words when implementing a language model?

Handling out-of-vocabulary (OOV) words is a common challenge in NLP. A good candidate should be able to discuss several strategies:

- Use a special token: Replace all OOV words with a single reserved unknown-word token.

- Subword tokenization: Break words into smaller units (e.g., WordPiece, BPE) to handle unseen words.

- Character-level models: Process text at the character level to handle any word.

- Open vocabulary approaches: Use techniques like fastText that can generate embeddings for unseen words.

- Hybrid approaches: Combine word and character-level representations.

Look for candidates who can explain the trade-offs between these approaches and discuss how the choice might depend on the specific application and available data. They should also mention the importance of handling OOV words during both training and inference stages.
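Two of these strategies are easy to sketch on a whiteboard: replacing rare words with a placeholder token, and greedy longest-match subword splitting in the style of WordPiece. The code below is a simplified illustration; the `<unk>` spelling, the `min_count` threshold, and the hand-picked subword vocabulary are all assumptions (real subword vocabularies are learned from data).

```python
from collections import Counter

UNK = "<unk>"  # placeholder token; the exact spelling is a convention


def build_vocab(corpus, min_count=2):
    """Keep only words that occur at least min_count times in the corpus."""
    counts = Counter(tok for sentence in corpus for tok in sentence.lower().split())
    return {tok for tok, n in counts.items() if n >= min_count}


def replace_oov(sentence, vocab):
    """Map every word outside the vocabulary to the placeholder token."""
    return [tok if tok in vocab else UNK for tok in sentence.lower().split()]


def greedy_subwords(word, subword_vocab):
    """Split a word by greedy longest-match-first lookup.

    Non-initial pieces carry a '##' prefix. If no piece matches at some
    position, the whole word falls back to the placeholder token.
    """
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        piece = None
        while end > start:
            candidate = word[start:end] if start == 0 else "##" + word[start:end]
            if candidate in subword_vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            return [UNK]
        pieces.append(piece)
        start = end
    return pieces


corpus = ["the cat sat", "the cat ran", "the dog sat"]
vocab = build_vocab(corpus)
masked = replace_oov("the dog sat", vocab)

# Hand-picked subword vocabulary, for illustration only.
subword_vocab = {"un", "##believ", "##able", "play", "##ing"}
split_1 = greedy_subwords("unbelievable", subword_vocab)
split_2 = greedy_subwords("playing", subword_vocab)
split_3 = greedy_subwords("xyz", subword_vocab)
```

Applying the same `min_count` masking to the training data is what lets the model learn a useful representation for the placeholder before it meets unseen words at inference time.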

4. Describe the concept of attention mechanism in neural networks for NLP tasks.

The attention mechanism is a technique that allows neural networks to focus on specific parts of the input when producing an output. In NLP, it's particularly useful for tasks involving long sequences, where earlier encoder-decoder models that compress the whole input into a single fixed-length vector tend to lose important information.

Key points a candidate might mention:

- Attention allows the model to assign different weights to different parts of the input.

- It's especially useful in sequence-to-sequence models, like machine translation.

- Self-attention, used in transformers, allows a sequence to attend to itself.

- Attention can provide interpretability by showing which input elements the model focused on.

Look for candidates who can explain how attention addresses the limitations of fixed-length context vectors in sequence-to-sequence models. They should also be able to discuss different types of attention (e.g., soft vs. hard attention) and mention real-world applications where attention has made a significant impact, such as in machine translation or text summarization.
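The weighting idea can be shown with scaled dot-product attention for a single query, the form used in transformers. The sketch below uses plain Python lists instead of a tensor library, and the key, value, and query vectors are made-up numbers chosen only to make the weights easy to read.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(query, keys, values):
    """Return the attention-weighted sum of values and the weights themselves."""
    d_k = len(query)
    # Similarity of the query to each key, scaled by sqrt(d_k).
    scores = [
        sum(q * k for q, k in zip(query, key)) / math.sqrt(d_k)
        for key in keys
    ]
    weights = softmax(scores)
    dim = len(values[0])
    context = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return context, weights


# Three input positions with 2-dimensional keys and values (illustrative numbers).
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [1.0, 0.0]

context, weights = scaled_dot_product_attention(query, keys, values)
```

Here the query is most similar to the first and third keys, so those positions receive equal, larger weights than the second. Inspecting `weights` is the kind of interpretability the bullet list refers to.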

5. How would you approach the task of named entity recognition (NER) using machine learning?

Named Entity Recognition (NER) is the task of identifying and classifying named entities (like persons, organizations, locations) in text. A strong candidate should outline a machine learning approach to NER:

- Data preparation: Collect and annotate a dataset with named entity labels.

- Feature engineering: Extract relevant features (e.g., word embeddings, capitalization, POS tags).

- Model selection: Choose an appropriate model (e.g., CRF, BiLSTM-CRF, or transformer-based models).

- Training: Train the model on the annotated dataset.

- Evaluation: Use metrics like F1-score to assess performance.

- Fine-tuning: Iterate on the model, potentially using techniques like transfer learning.

Look for candidates who understand the sequence labeling nature of NER and can discuss the challenges, such as handling ambiguous entities or dealing with domain-specific named entities. They should also be aware of recent advancements, like the use of pre-trained language models for NER tasks.
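Because NER is usually framed as sequence labeling, a candidate should be comfortable turning per-token tags back into entity spans. The sketch below assumes the common BIO tagging scheme and an invented example sentence; the lenient handling of a stray `I-` tag is one possible design choice, not a standard.

```python
def bio_to_spans(tokens, tags):
    """Convert BIO tags into (entity_type, start, end_exclusive, text) spans.

    An I- tag that does not continue the current entity starts a new span
    (lenient decoding; some evaluators treat this as an error instead).
    """
    spans = []
    start, current = None, None
    for i, tag in enumerate(list(tags) + ["O"]):  # sentinel flushes the last span
        continues = tag.startswith("I-") and current == tag[2:]
        if current is not None and not continues:
            spans.append((current, start, i, " ".join(tokens[start:i])))
            start, current = None, None
        if tag.startswith("B-") or (tag.startswith("I-") and current is None):
            start, current = i, tag[2:]
    return spans


tokens = ["Ada", "Lovelace", "joined", "Acme", "in", "New", "York"]
tags = ["B-PER", "I-PER", "O", "B-ORG", "O", "B-LOC", "I-LOC"]
spans = bio_to_spans(tokens, tags)
```

Span-level output like this is also what entity-level precision, recall, and F1 are computed on.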

6. Explain the concept of transfer learning in NLP and give an example of its application.

Transfer learning in NLP involves using knowledge gained from one task to improve performance on a different, but related, task. This approach is particularly powerful when dealing with limited labeled data for the target task.

A common example is using pre-trained language models like BERT or GPT. These models are trained on large amounts of general text data and can then be fine-tuned for specific tasks like sentiment analysis, question answering, or text classification with relatively small amounts of task-specific data.

Look for candidates who can explain the benefits of transfer learning, such as reduced training time and improved performance on tasks with limited data. They should also be aware of potential challenges, like catastrophic forgetting or domain mismatch between the source and target tasks. Candidates might also discuss more advanced concepts like few-shot or zero-shot learning in the context of transfer learning.

7. How would you handle multilingual text processing in an NLP project?

Handling multilingual text processing requires careful consideration of various factors. A strong candidate should discuss several approaches:

- Language detection: Implement a language detection step to identify the language of each text.

- Tokenization: Use language-specific tokenizers or a universal tokenizer that works across multiple languages.

- Translation: Consider translating all text to a common language or using multilingual models.

- Character encoding: Ensure proper handling of different character encodings (e.g., UTF-8).

- Multilingual word embeddings: Use embeddings that represent words from multiple languages in a shared vector space.

- Language-agnostic features: Utilize features that work across languages, like character n-grams.

Look for candidates who understand the challenges of multilingual processing, such as handling languages with different scripts, word order, or grammatical structures. They should also be aware of recent advancements in multilingual models like mBERT or XLM-R, which can process multiple languages without explicit translation.
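A few of these steps can be illustrated with the standard library alone. The sketch below shows Unicode normalization, a rough script guess based on Unicode character names, and character n-grams as a language-agnostic feature. Note that guessing the script is only a heuristic stand-in here: it is not full language detection (English and French share the Latin script, for example), and the function names are illustrative.

```python
import unicodedata
from collections import Counter


def normalize(text):
    """Apply NFC normalization so composed and decomposed forms compare equal."""
    return unicodedata.normalize("NFC", text)


def dominant_script(text):
    """Guess the script from the first word of each letter's Unicode name.

    For example, 'LATIN SMALL LETTER A' counts toward 'LATIN'. This is a
    heuristic, not a language detector.
    """
    scripts = Counter()
    for ch in text:
        if ch.isalpha():
            name = unicodedata.name(ch, "")
            if name:
                scripts[name.split()[0]] += 1
    return scripts.most_common(1)[0][0] if scripts else "UNKNOWN"


def char_ngrams(text, n=3):
    """Character n-grams with boundary padding; works for any script."""
    padded = f" {text.lower()} "
    return [padded[i:i + n] for i in range(len(padded) - n + 1)]


latin = dominant_script("hello world")
cyrillic = dominant_script("\u043f\u0440\u0438\u0432\u0435\u0442 \u043c\u0438\u0440")  # "privet mir" in Cyrillic
composed = normalize("e\u0301")  # 'e' + combining acute accent
trigrams = char_ngrams("cat")
```

Normalizing before tokenization matters because visually identical strings can otherwise produce different tokens and miss vocabulary lookups.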

8. Describe the process of building a simple chatbot using NLP techniques.

Building a simple chatbot using NLP techniques typically involves the following steps:

- Intent recognition: Identify the user's intention from their input (e.g., greeting, asking for information).

- Entity extraction: Identify important entities in the user's input (e.g., dates, names).

- Dialog management: Manage the flow of the conversation based on the recognized intent and entities.

- Response generation: Generate appropriate responses based on the current state of the conversation.

- Natural language generation: Convert the response into natural language.

Look for candidates who can explain different approaches to each step, such as rule-based vs. machine learning-based intent recognition, or template-based vs. generative response generation. They should also discuss considerations like handling context, managing conversation state, and dealing with ambiguity or errors in user input. Bonus points if they mention techniques for improving the chatbot over time, such as incorporating user feedback or using reinforcement learning.
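The steps above can be sketched as a small rule-based bot. Everything in the example is an illustrative assumption: the three intents, the regular expressions, the table-booking scenario, and the template responses. A candidate might then explain which parts they would replace with learned models.

```python
import re

# Intent recognition: keyword patterns (a rule-based stand-in for a classifier).
INTENT_PATTERNS = {
    "greeting": re.compile(r"\b(hi|hello|hey)\b", re.IGNORECASE),
    "book_table": re.compile(r"\b(book|reserve)\b", re.IGNORECASE),
    "goodbye": re.compile(r"\b(bye|goodbye)\b", re.IGNORECASE),
}

# Entity extraction: a time such as "7 pm" or "7:30pm".
TIME_PATTERN = re.compile(r"\b(\d{1,2}(?::\d{2})?\s?(?:am|pm))\b", re.IGNORECASE)


def recognize_intent(text):
    for intent, pattern in INTENT_PATTERNS.items():
        if pattern.search(text):
            return intent
    return "fallback"


def extract_entities(text):
    match = TIME_PATTERN.search(text)
    return {"time": match.group(1)} if match else {}


def respond(text, state):
    """Dialog management plus template-based response generation.

    `state` is a dict that carries slots and the pending intent across turns.
    """
    intent = recognize_intent(text)
    state.update(extract_entities(text))
    resuming = intent == "fallback" and state.get("pending") == "book_table"
    if intent == "book_table" or resuming:
        if "time" not in state:
            state["pending"] = "book_table"  # remember what we are waiting for
            return "What time would you like to book?"
        state.pop("pending", None)
        return f"Your table is booked for {state['time']}."
    if intent == "greeting":
        return "Hello! How can I help?"
    if intent == "goodbye":
        return "Goodbye!"
    return "Sorry, I did not understand that."


state = {}
reply_1 = respond("Hi there", state)
reply_2 = respond("I want to book a table", state)
reply_3 = respond("7 pm please", state)
```

The third turn only works because the conversation state remembers the pending booking, which is a simple way to demonstrate context handling.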

9. How would you approach the problem of sentiment analysis for social media posts?

Approaching sentiment analysis for social media posts requires considering several unique challenges. A strong candidate might outline the following approach:

- Data collection: Gather a diverse dataset of social media posts with sentiment labels.

- Preprocessing: Handle social media-specific elements like hashtags, @mentions, emojis, and slang.

- Feature extraction: Use techniques like word embeddings or TF-IDF, potentially incorporating social media-specific features.

- Model selection: Choose an appropriate model (e.g., Naive Bayes, SVM, or deep learning models like LSTM or BERT).

- Training and evaluation: Train the model and evaluate using metrics like accuracy, F1-score, and confusion matrix.

- Handling challenges: Address issues like sarcasm detection, mixed sentiments, and context-dependent sentiments.

Look for candidates who understand the nuances of social media text, such as informal language, abbreviations, and the use of emojis to convey sentiment. They should also discuss strategies for dealing with imbalanced datasets (as positive and negative sentiments might not be equally represented) and methods for handling multi-class sentiment analysis (e.g., very negative, negative, neutral, positive, very positive).
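The preprocessing step is a good place to ask for specifics. The sketch below shows one possible normalization pass for social media text. The placeholder tokens (`<url>`, `<user>`), the tiny emoji-to-token mapping, and the choice to keep hashtag words are assumptions made for illustration; teams often make different choices here.

```python
import re

# Tiny illustrative emoji mapping (an assumption; real lexicons are much larger).
EMOJI_TOKENS = {
    "\U0001F600": " emoji_positive ",  # grinning face
    "\U0001F60D": " emoji_positive ",  # smiling face with heart-eyes
    "\U0001F621": " emoji_negative ",  # pouting face
    "\U0001F622": " emoji_negative ",  # crying face
}


def preprocess_post(text):
    """Normalize a social media post into a list of lowercase tokens."""
    text = re.sub(r"https?://\S+", " <url> ", text)  # replace links
    text = re.sub(r"@\w+", " <user> ", text)         # anonymize mentions
    text = re.sub(r"#(\w+)", r" \1 ", text)          # keep the hashtag word, drop '#'
    for emoji, token in EMOJI_TOKENS.items():
        text = text.replace(emoji, token)            # keep the sentiment signal
    text = re.sub(r"(.)\1{2,}", r"\1\1", text)       # "soooo" -> "soo"
    return text.lower().split()


post = "@anna This phone is soooo good \U0001F60D #happy https://example.com/x"
tokens = preprocess_post(post)
```

Mapping emojis to tokens rather than deleting them preserves a strong sentiment cue, and shortening elongated words keeps 'soooo' and 'sooooooo' from becoming separate vocabulary entries.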

Which Natural Language Processing (NLP) skills should you evaluate during the interview phase?

Assessing a candidate's full range of skills and capabilities in a single interview is challenging. However, focusing on certain key skills during the interview phase is important for gauging their potential in Natural Language Processing (NLP). Here are the core skills to evaluate to ensure you find the right fit for your team.

Text Preprocessing

You can use an assessment test with relevant multiple-choice questions to screen for this skill. Consider using our Natural Language Processing Test for this purpose.

During the interview, you can ask targeted questions to assess the candidate's understanding and experience with text preprocessing techniques.

Can you explain the process you would use to clean and preprocess a large corpus of text data?

Look for candidates who mention steps like tokenization, removing stop words, stemming, lemmatization, and handling different types of noise in the data. Their ability to detail these steps demonstrates their understanding of text preprocessing.
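A strong answer often comes with a concrete pipeline. The sketch below chains noise removal, Unicode normalization, tokenization, stop word removal, and a deliberately naive suffix stripper. The stop word list and the suffix rules are toy assumptions; in practice a candidate would likely reach for an established stemmer or lemmatizer.

```python
import re
import unicodedata

# Toy stop word list and suffix rules, for illustration only.
STOP_WORDS = {"a", "an", "the", "is", "are", "was", "were", "and", "or",
              "of", "to", "in", "on", "it", "this"}
SUFFIXES = ("ing", "ed", "es", "s")


def strip_html(text):
    """Remove simple HTML tags, one common kind of noise in scraped text."""
    return re.sub(r"<[^>]+>", " ", text)


def tokenize(text):
    """Lowercase and split into alphanumeric tokens, keeping simple contractions."""
    return re.findall(r"[a-z0-9]+(?:'[a-z]+)?", text.lower())


def toy_stem(token):
    """Strip the first matching suffix if at least three characters remain."""
    for suffix in SUFFIXES:
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[:-len(suffix)]
    return token


def preprocess(text):
    text = unicodedata.normalize("NFKC", strip_html(text))
    return [toy_stem(tok) for tok in tokenize(text) if tok not in STOP_WORDS]


cleaned = preprocess("<p>The cats were chasing the mice in the gardens!</p>")
```

The output contains 'chas' and leaves 'mice' untouched, which illustrates why candidates should be able to contrast stemming with lemmatization.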

Feature Engineering

You can screen for feature engineering skills indirectly with our Machine Learning Test.

Asking targeted questions about feature engineering can help you understand a candidate's creativity and problem-solving skills in complex NLP tasks.

How would you create features for a sentiment analysis model from a set of product reviews?

Candidates should mention techniques like TF-IDF, word embeddings, n-grams, and possibly domain-specific features. Their ability to explain why and how they would use these features is key.
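To check that a candidate understands what TF-IDF actually computes, it helps to walk through a small example. The sketch below builds unigram and bigram features and weights them with term frequency times `log(N / df)`. This is the plain textbook formula; libraries typically use smoothed variants, and the three reviews are invented.

```python
import math
from collections import Counter


def ngrams(tokens, n_max=2):
    """Return all n-grams from length 1 up to n_max as space-joined strings."""
    grams = []
    for n in range(1, n_max + 1):
        grams += [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
    return grams


def tfidf(reviews, n_max=2):
    """Compute one {term: weight} dict per review using tf * log(N / df)."""
    docs = [Counter(ngrams(review.lower().split(), n_max)) for review in reviews]
    n_docs = len(docs)
    # Document frequency: in how many reviews each term appears.
    df = Counter(term for doc in docs for term in doc)
    vectors = []
    for doc in docs:
        total = sum(doc.values())
        vectors.append({
            term: (count / total) * math.log(n_docs / df[term])
            for term, count in doc.items()
        })
    return vectors


reviews = [
    "great battery life",
    "battery died fast",
    "great screen great price",
]
vectors = tfidf(reviews)
```

In the first review, 'life' gets a higher weight than 'great' because it appears in only one review, which is the intuition candidates should be able to articulate.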

Model Evaluation

Use our Machine Learning Test, which includes questions on model evaluation techniques, to gauge this skill.

In the interview, you can ask questions focused on evaluating NLP models to see if the candidate understands different metrics and validation techniques.

What metrics would you use to evaluate a named entity recognition model, and why?

Look for answers mentioning precision, recall, F1-score, and the importance of a balanced dataset for validation. Candidates should also discuss the trade-offs between these metrics.
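As a reference point for this question, the sketch below computes entity-level precision, recall, and F1 under exact-match scoring, where a predicted entity counts only if its type and boundaries both match the gold annotation. The `(type, start, end)` span format and the example spans are assumptions for illustration.

```python
def entity_prf(gold_spans, pred_spans):
    """Exact-match entity-level precision, recall, and F1.

    Each span is a (entity_type, start, end_exclusive) tuple.
    """
    gold, pred = set(gold_spans), set(pred_spans)
    true_positives = len(gold & pred)
    precision = true_positives / len(pred) if pred else 0.0
    recall = true_positives / len(gold) if gold else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


gold = [("PER", 0, 2), ("ORG", 3, 4), ("LOC", 5, 7)]
pred = [("PER", 0, 2), ("ORG", 3, 5), ("LOC", 5, 7), ("LOC", 9, 10)]
precision, recall, f1 = entity_prf(gold, pred)
```

The ORG prediction has the right type but the wrong boundary, so it counts as both a false positive and a false negative. This is why token-level accuracy, which is dominated by non-entity tokens, tends to overstate NER quality.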

3 Tips for Using Natural Language Processing (NLP) Interview Questions

Before you start putting what you've learned into practice, here are our tips for using NLP interview questions effectively.

1. Leverage Skill Tests Before Interviews

Using skill tests before interviews can help you filter out unsuitable candidates early, saving time and resources.

Consider using specialized tests such as the Adaface NLP Online Test or the Machine Learning Online Test.

The benefits of this approach include a more focused interview process, as you'll be speaking only to those who have demonstrated the required skills. This ensures your interviews are both efficient and effective.

2. Compile and Outline Your Interview Questions

Time during an interview is limited, so it's important to carefully select the most relevant questions to assess the candidate’s abilities.

Include questions that cover a range of skills and topics. For instance, you could incorporate questions related to both technical definitions and machine learning techniques.

Additionally, consider including some soft skills interview questions to ensure a holistic evaluation of the candidate.

3. Ask Follow-Up Questions

Simply asking the prepared questions may not be enough. Follow-up questions can help you gauge the depth of a candidate's knowledge and their problem-solving capabilities.

For example, if you ask a candidate to explain the concept of word embeddings, a good follow-up question could be, 'Can you describe a scenario where using word embeddings would be advantageous?' This helps you assess not just their theoretical knowledge but also their practical understanding.

Use NLP interview questions and skills tests to hire talented engineers

If you are looking to hire someone with NLP skills, you need to verify those skills accurately. The best way to do this is by using skill tests such as our NLP online test.

Once you use this test, you can shortlist the best applicants and call them for interviews. To get started, sign up on our dashboard or check out our online assessment platform.

Natural Language Processing (NLP) Interview Questions FAQs

Junior engineers are often asked about basic NLP concepts, simple algorithms, and foundational machine learning techniques.

Ask questions related to technical definitions, machine learning techniques, and practical applications of NLP.

Intermediate questions should cover more advanced NLP algorithms, data preprocessing techniques, and real-world applications.

Understanding machine learning techniques is essential for NLP as they are crucial for tasks like text classification, sentiment analysis, and language modeling.

Use a mix of basic, intermediate, and advanced questions along with real-world problem-solving scenarios to gauge candidates' expertise and practical skills.

Prepare a balanced set of questions, focus on both theoretical knowledge and practical skills, and tailor questions to the experience level of the candidate.
