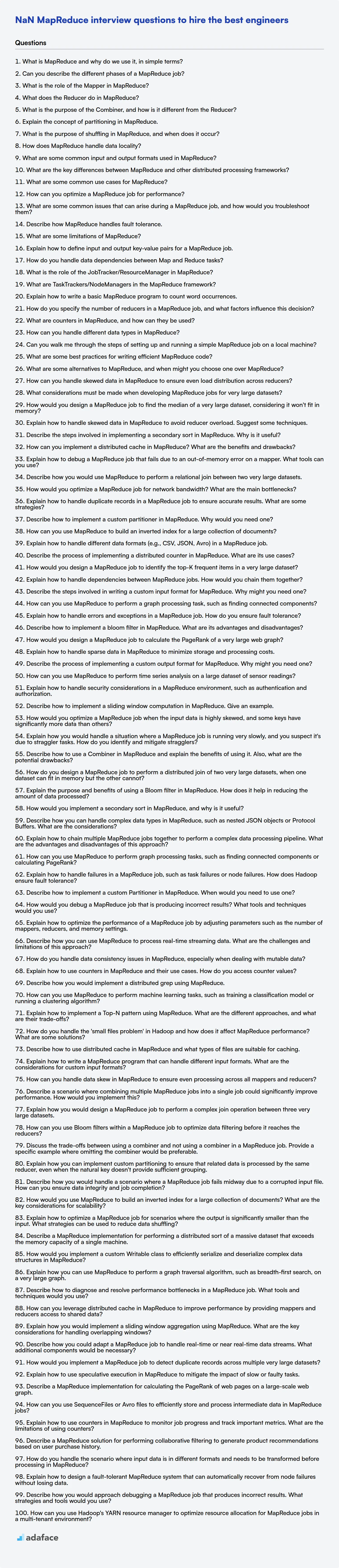

Recruiting candidates with strong MapReduce skills can be tough, because the role calls for a blend of theoretical knowledge and practical experience. If you're a hiring manager or recruiter, a well-prepared list of questions is a must for evaluating candidates.

This blog post provides a curated list of MapReduce interview questions, categorized by difficulty level, including basic, intermediate, advanced, and expert. We have also included some multiple-choice questions (MCQs).

These questions help you thoroughly assess a candidate's MapReduce knowledge and practical skills. To screen candidates before the interview and save time, consider using our Map Reduce Online Test to streamline your evaluation process.


Basic MapReduce interview questions

1. What is MapReduce and why do we use it, in simple terms?

MapReduce is a programming model and an associated implementation for processing and generating large datasets. In simple terms, it's a way to break down a big problem into smaller, independent parts that can be processed in parallel across many machines. Then, it combines the results from each machine to produce the final output.

We use MapReduce primarily to handle datasets too large for a single machine and to speed up processing by distributing the work across many machines in parallel. This makes it practical to analyze massive amounts of data (web logs, social media data, and so on) far faster than a single computer could. It is also fault-tolerant: if a machine fails, the framework reruns its tasks on other machines.

2. Can you describe the different phases of a MapReduce job?

A MapReduce job typically consists of several phases. These phases are broadly categorized as:

- Input Phase: The input data is split into smaller chunks, and each chunk is assigned to a Map task. This also involves input format parsing.

- Mapping Phase: Each Map task processes its assigned data chunk and produces intermediate key-value pairs.

- Shuffle & Sort Phase: The intermediate key-value pairs from the Map tasks are shuffled across the network to the Reduce tasks based on the key. Within each Reduce task, the keys are sorted. This phase is crucial for grouping related data together.

- Reducing Phase: Each Reduce task processes the sorted intermediate key-value pairs for the keys in its partition, calling the reduce function once per key with all of that key's values. The Reduce task performs aggregation or other computations to produce the final output.

- Output Phase: The output from the Reduce tasks is written to the final output location. This also involves output format writing.
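To make the phases concrete, here is a minimal single-process sketch in plain Java, with no Hadoop dependency. The class and method names are illustrative, not a Hadoop API: a real job runs many map and reduce tasks on different machines, and the framework, not user code, performs the shuffle & sort.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

class LocalWordCount {
    // Mapping phase: one input record (a line) becomes zero or more (word, 1) pairs.
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> out = new ArrayList<>();
        for (String token : line.split("\\s+")) {
            if (!token.isEmpty()) {
                out.add(Map.entry(token, 1));
            }
        }
        return out;
    }

    // Reducing phase: one key plus all of its values becomes one output value.
    static int reduce(String word, List<Integer> counts) {
        int sum = 0;
        for (int c : counts) {
            sum += c;
        }
        return sum;
    }

    // Input -> map -> shuffle & sort -> reduce -> output, all in one process.
    static Map<String, Integer> run(List<String> lines) {
        // Shuffle & sort: group values by key; TreeMap keeps the keys in sorted order.
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (String line : lines) {
            for (Map.Entry<String, Integer> kv : map(line)) {
                grouped.computeIfAbsent(kv.getKey(), k -> new ArrayList<>()).add(kv.getValue());
            }
        }
        Map<String, Integer> output = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> group : grouped.entrySet()) {
            output.put(group.getKey(), reduce(group.getKey(), group.getValue()));
        }
        return output;
    }

    public static void main(String[] args) {
        System.out.println(run(List.of("the cat sat", "the cat ran")));
        // prints {cat=2, ran=1, sat=1, the=2}
    }
}
```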

3. What is the role of the Mapper in MapReduce?

The Mapper in MapReduce is responsible for processing individual input records and transforming them into key-value pairs. Its primary role is to take raw data, apply a transformation logic (defined by the user), and output intermediate key-value pairs that are suitable for the subsequent Reduce phase.

Essentially, the Mapper performs the initial filtering, transformation, and data preparation, setting the stage for the Reducer to aggregate and process the data further. (Sorting the map output by key is done by the framework, not by user code.) Each map task processes one input split, and many map tasks run in parallel, which allows distributed processing of large datasets.

4. What does the Reducer do in MapReduce?

The Reducer in MapReduce processes the intermediate data generated by the Mappers. It receives that data sorted and grouped by key, and the reduce function is called once per key with all of the values for that key.

The primary function of the Reducer is to aggregate, summarize, or otherwise transform this intermediate data to produce the final output. This typically involves operations like summing values, filtering data, or joining datasets based on the keys received. The Reducer's output is the final result of the MapReduce job.

5. What is the purpose of the Combiner, and how is it different from the Reducer?

The Combiner's purpose is to reduce the amount of data transferred across the network from the Mappers to the Reducers. It acts as a "mini-reducer" that runs on the map side, aggregating a map task's intermediate key-value pairs locally before they are shuffled to the Reducers, which improves performance.

The Reducer, on the other hand, processes all the intermediate key-value pairs for a particular key, gathered from every map task across the cluster. Its main goal is to aggregate, filter, or transform the data according to the job's business logic to produce the final output. The Combiner is an optional optimization: the framework may run it zero, one, or several times, so it must not change the final result. In practice, the combine operation should be associative and commutative (like sum, count, or max), and its input and output types must match the map output types. The Reducer holds the job's actual aggregation logic (although a job can also be map-only, with zero reducers).
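Because the framework may apply a combiner any number of times, including zero, the combine step must give the same final result however the data is pre-aggregated. The following plain-Java sketch (no Hadoop classes; names are illustrative) shows the difference: pre-summing per map task gives the same total with fewer records to shuffle, whereas averaging partial averages gives the wrong answer unless the combiner emits (sum, count) pairs instead.

```java
import java.util.List;

class CombinerDemo {
    // Summing is associative and commutative, so the same function can act as combiner and reducer.
    static long sum(List<Long> values) {
        long total = 0;
        for (long v : values) {
            total += v;
        }
        return total;
    }

    // Averaging is NOT safe as a combiner: a mean of partial means ignores how many values each covered.
    static double mean(List<Double> values) {
        double total = 0;
        for (double v : values) {
            total += v;
        }
        return total / values.size();
    }

    // Safe variant: combiners emit (sum, count) pairs and only the reducer divides.
    static double meanFromPartials(long[][] sumCountPairs) {
        long sum = 0;
        long count = 0;
        for (long[] pair : sumCountPairs) {
            sum += pair[0];
            count += pair[1];
        }
        return (double) sum / count;
    }

    public static void main(String[] args) {
        // Values for one key from two hypothetical map tasks: A emits 2, 4, 6 and B emits 10.
        long withoutCombiner = sum(List.of(2L, 4L, 6L, 10L));            // 4 records shuffled
        long withCombiner = sum(List.of(sum(List.of(2L, 4L, 6L)), 10L)); // 2 records shuffled
        System.out.println(withoutCombiner + " " + withCombiner);        // 22 22

        double trueMean = mean(List.of(2.0, 4.0, 6.0, 10.0));            // 5.5
        double meanOfMeans = mean(List.of(4.0, 10.0));                    // 7.0 (wrong)
        double fixed = meanFromPartials(new long[][] {{12, 3}, {10, 1}}); // 5.5
        System.out.println(trueMean + " " + meanOfMeans + " " + fixed);
    }
}
```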

6. Explain the concept of partitioning in MapReduce.

Partitioning in MapReduce controls how the output of the mapper tasks is divided among the reducer tasks. The partitioner is responsible for determining which reducer will receive each intermediate key-value pair generated by the mappers.

By default, MapReduce uses a hash-based partitioner (e.g., HashPartitioner in Hadoop), which calculates the hash code of the key and then performs a modulo operation with the number of reducers to determine the partition. Custom partitioners can be implemented to provide more control over data distribution. This is important for ensuring even load balancing across reducers and can be critical for optimizing performance when dealing with skewed data. Improper partitioning can lead to some reducers being overloaded while others are idle.
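A minimal sketch of that formula in plain Java, matching the expression Hadoop's HashPartitioner uses: (hash & Integer.MAX_VALUE) % numReduceTasks. Masking the sign bit matters because Java's % can return a negative result. String.hashCode() stands in for the key's hash here; Hadoop's Text computes its hash differently, so real partition numbers will not match these.

```java
import java.util.Map;
import java.util.TreeMap;

class HashPartitionDemo {
    // Same formula as Hadoop's default HashPartitioner: clear the sign bit,
    // then take the remainder modulo the number of reducers.
    static int partition(int keyHash, int numReduceTasks) {
        return (keyHash & Integer.MAX_VALUE) % numReduceTasks;
    }

    // Counts keys per partition; String.hashCode() stands in for the key's hash.
    static Map<Integer, Integer> distribution(String[] keys, int numReduceTasks) {
        Map<Integer, Integer> counts = new TreeMap<>();
        for (String key : keys) {
            counts.merge(partition(key.hashCode(), numReduceTasks), 1, Integer::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        // A plain % on a negative hash gives a negative, invalid partition number.
        System.out.println(-7 % 3);           // -1
        System.out.println(partition(-7, 3)); // 1
        // Identical keys always land in the same partition.
        System.out.println(distribution(new String[] {"apple", "banana", "cherry", "apple"}, 3));
    }
}
```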

7. What is the purpose of shuffling in MapReduce, and when does it occur?

The purpose of shuffling in MapReduce is to redistribute the output of the map tasks to the reduce tasks. It ensures that all key-value pairs with the same key are sent to the same reducer. This is essential for tasks like aggregation, where you need to process all values associated with a particular key together.

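Shuffling happens between the map and reduce phases. Each map task partitions its output by destination reducer and sorts it by key within each partition, writing the result to local disk. Reduce tasks start copying these partitions as individual map tasks finish, so the copy can overlap with the remaining map work, but the reduce function itself does not run until all map tasks have completed and the fetched data has been merged into a single sorted stream.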

8. How does MapReduce handle data locality?

MapReduce optimizes data processing by leveraging data locality. The MapReduce framework attempts to schedule map tasks on nodes where the input data resides. This minimizes network traffic, as data doesn't need to be transferred across the network for processing. This optimization is crucial for performance, especially when dealing with large datasets.

Specifically:

- The InputFormat provides data splits and their locations (hostnames) within the cluster.

- The JobTracker (in Hadoop 1.x) uses this location information to schedule map tasks close to the data; in Hadoop 2.x (YARN), the MapReduce ApplicationMaster passes the split locations as preferences when it requests containers from the ResourceManager. If a node holding the data isn't available, the scheduler tries a node in the same rack or, failing that, a node elsewhere in the cluster.
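The node-local, then rack-local, then anywhere preference can be sketched as a toy scheduler in plain Java. The node names, rack map, and choose() method are hypothetical; real schedulers weigh many more factors, such as queue capacity and how long to wait for a local slot.

```java
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.Set;

class LocalityScheduler {
    enum Locality { NODE_LOCAL, RACK_LOCAL, OFF_RACK }

    // Chooses a free node for one input split, preferring (1) a node holding a replica,
    // (2) a node on the same rack as a replica, (3) any free node.
    static Optional<Map.Entry<String, Locality>> choose(
            Set<String> replicaHosts, List<String> freeNodes, Map<String, String> rackOf) {
        for (String node : freeNodes) {
            if (replicaHosts.contains(node)) {
                return Optional.of(Map.entry(node, Locality.NODE_LOCAL));
            }
        }
        for (String node : freeNodes) {
            String rack = rackOf.get(node);
            for (String host : replicaHosts) {
                if (rack != null && rack.equals(rackOf.get(host))) {
                    return Optional.of(Map.entry(node, Locality.RACK_LOCAL));
                }
            }
        }
        if (freeNodes.isEmpty()) {
            return Optional.empty();
        }
        return Optional.of(Map.entry(freeNodes.get(0), Locality.OFF_RACK));
    }

    public static void main(String[] args) {
        Map<String, String> rackOf = Map.of("n1", "r1", "n2", "r1", "n3", "r2");
        // The split's only replica lives on n1; only n3 and n2 have free capacity.
        System.out.println(choose(Set.of("n1"), List.of("n3", "n2"), rackOf).get());
        // prints n2=RACK_LOCAL
    }
}
```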

9. What are some common input and output formats used in MapReduce?

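Common input formats in MapReduce include TextInputFormat (the default), SequenceFileInputFormat, and Avro-based formats. TextInputFormat reads plain text line by line, using each line's byte offset as the key and the line itself as the value. SequenceFile is a binary format that stores key-value pairs and is optimized for MapReduce. Avro is a data serialization system that provides rich data structures.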

Output formats often mirror input formats, such as TextOutputFormat (writing plain text) and SequenceFileOutputFormat. Avro is also used for output. Custom output formats can be defined, and formats like JSON or CSV can be supported with suitable libraries or custom implementations. MultipleOutputs is also valuable when the reduce function needs to write different kinds of output to different files.
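To illustrate what "line by line" means for TextInputFormat, where each record's key is the byte offset of the line in the file, here is a simplified plain-Java reader. It is a sketch, not Hadoop's line reader: it assumes UTF-8 input, splits only on \n, and ignores split boundaries and \r\n line endings, which the real reader handles.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

class TextRecords {
    // Turns UTF-8 bytes into (byte offset of line start, line text) records,
    // in the spirit of TextInputFormat. Simplified: '\n' only, one split.
    static List<Map.Entry<Long, String>> read(byte[] data) {
        List<Map.Entry<Long, String>> records = new ArrayList<>();
        int start = 0;
        for (int i = 0; i < data.length; i++) {
            if (data[i] == '\n') {
                records.add(Map.entry((long) start, new String(data, start, i - start, StandardCharsets.UTF_8)));
                start = i + 1;
            }
        }
        if (start < data.length) { // last line without a trailing newline
            records.add(Map.entry((long) start, new String(data, start, data.length - start, StandardCharsets.UTF_8)));
        }
        return records;
    }

    public static void main(String[] args) {
        byte[] file = "hello\nw\u00f6rld\nend\n".getBytes(StandardCharsets.UTF_8);
        // The key of "end" is 13, not 12, because the umlaut takes two bytes in UTF-8.
        System.out.println(read(file));
    }
}
```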

10. What are the key differences between MapReduce and other distributed processing frameworks?

MapReduce differs from other distributed processing frameworks primarily in its programming model and execution paradigm. MapReduce relies heavily on a batch-oriented, disk-based processing model, which can introduce significant latency for iterative or real-time applications. Other frameworks, like Apache Spark or Apache Flink, offer in-memory data processing capabilities, making them significantly faster for such workloads.

Key differences include:

- Data Storage: MapReduce writes intermediate map output to local disk and each job's results to HDFS, so multi-stage pipelines pay for repeated disk I/O. Modern frameworks can keep intermediate data in memory and use more efficient data structures.

- Programming Model: MapReduce uses a rigid two-stage (Map and Reduce) programming model, limiting flexibility. Other frameworks offer richer APIs (e.g., Spark's RDDs, DataFrames) and support for stream processing.

- Fault Tolerance: While MapReduce handles fault tolerance through task retries, frameworks like Spark offer more advanced mechanisms, such as lineage-based recovery, enabling faster recovery from failures.

- Real-time processing: Frameworks like Flink are better suited for low-latency and streaming use cases, which MapReduce was not designed for.

11. What are some common use cases for MapReduce?

MapReduce is commonly used for processing and generating large datasets. Some use cases include:

- Log analysis: Processing web server logs to identify popular pages, user behavior, or error patterns.

- Data warehousing: ETL processes, transforming and loading data into a data warehouse.

- Indexing: Building search indexes for large document collections.

- Machine learning: Training machine learning models and computing features on massive datasets, such as calculating word co-occurrence matrices, performing large-scale matrix computations, or calculating term frequency-inverse document frequency (TF-IDF) scores.

- Data mining: Discovering patterns and insights from large datasets.

12. How can you optimize a MapReduce job for performance?

Several strategies can optimize MapReduce job performance. First, preserve data locality so that map tasks run on the nodes that store their input. Second, minimize data transfer across the network, for example by using combiners to shrink the intermediate data before it is sent to the reducers. Choose compact, efficient data formats (such as SequenceFile, Avro, or Parquet) to lower serialization and deserialization costs. Adjust block or split sizes, and compress map output (mapreduce.map.output.compress) and job output to reduce disk and network I/O. Finally, tune Hadoop parameters for the specific job: the number of reduce tasks (the number of map tasks follows from the input splits), memory allocation (mapreduce.map.memory.mb, mapreduce.reduce.memory.mb), and JVM heap and garbage collection settings.

13. What are some common issues that can arise during a MapReduce job, and how would you troubleshoot them?

Common issues in MapReduce jobs include data skew, insufficient hardware resources, and incorrect configurations. Data skew means data is unevenly distributed across mappers or reducers, so some tasks take significantly longer than others. You can troubleshoot this by identifying the skewed keys (per-task durations and record counters in the job's web UI are a good starting point) and applying techniques like salting or custom partitioners. Insufficient resources, like memory or disk space, cause task or job failures. Monitoring resource usage with tools like the YARN ResourceManager web UI and the MapReduce JobHistory Server, and increasing allocations where needed, usually helps.

Incorrect configurations, such as wrong file paths or parameter values, also lead to failures. Check the job configuration files (e.g., mapred-site.xml, yarn-site.xml) and the task logs for errors; on YARN, the yarn logs -applicationId command, followed by the application ID, retrieves aggregated logs when log aggregation is enabled. Search the logs (for example, with grep) for error messages, stack traces, and signs of performance bottlenecks. Also verify that the input data is in the format the mapper expects.

14. Describe how MapReduce handles fault tolerance.

MapReduce achieves fault tolerance primarily through replication and re-execution. The system assumes that failures are common and designs around them. When a map or reduce task fails (e.g., due to a machine crash), the master node detects the failure. It then re-schedules the failed task on another available worker node. Input data is split into chunks and replicated across multiple machines in the Hadoop Distributed File System (HDFS). This ensures that if a node containing a data chunk fails, the data is still accessible from another replica.

The master node monitors workers through periodic heartbeats (pings). If a worker doesn't respond within a timeout, the master marks it as failed and reschedules its work. Map and reduce tasks that were in progress on that worker are re-executed elsewhere. Map tasks that the failed worker had already completed are also re-executed if any reducer still needs their output, because map output is stored on the worker's local disk, which is now inaccessible; the reducers are then notified of the new location and fetch the data from there. Completed reduce tasks do not need to be re-executed, because their output is already stored in the distributed file system.

15. What are some limitations of MapReduce?

MapReduce, while powerful, has limitations. One major drawback is that it is a poor fit for iterative algorithms: each iteration typically runs as a separate job that reads its input from disk and writes its output back to disk, so iterative processes can be very slow. Real-time processing is also a challenge, because MapReduce is designed for batch processing, not low-latency queries.

Another limitation is the complexity in expressing some types of algorithms. Problems that don't naturally decompose into map and reduce stages can be cumbersome to implement. Additionally, MapReduce isn't optimized for handling graph-structured data or data with complex dependencies. Other frameworks like Spark or graph databases often provide more efficient solutions for these cases.

16. Explain how to define input and output key-value pairs for a MapReduce job.

In MapReduce, defining input and output key-value pairs involves specifying the data types for both keys and values at each stage (map and reduce). This is crucial for data serialization, deserialization, and overall job execution.

Specifically:

- Input Key/Value (Mapper): These are the key-value pairs that the Mapper receives as input. Their types are determined by the InputFormat class. Common input formats include TextInputFormat (key = byte offset, value = line of text) and SequenceFileInputFormat (key and value are Hadoop Writable objects).

- Output Key/Value (Mapper): These are the key-value pairs that the Mapper emits. You define their types in the Mapper class definition itself, as generic type parameters. For example, in Mapper<KEYIN, VALUEIN, KEYOUT, VALUEOUT>, KEYOUT and VALUEOUT are the output key and value types of the map function.

- Input Key/Value (Reducer): These are the key-value pairs that the Reducer receives as input. The KEYIN and VALUEIN types of the Reducer must match the KEYOUT and VALUEOUT types of the Mapper. The framework groups the mapper outputs by key, and the reduce function then operates on a key and an Iterable of the values associated with that key.

- Output Key/Value (Reducer): These are the final key-value pairs that the Reducer emits as output. As with the mapper output, you specify their types as generic type parameters when you define your reducer class: in Reducer<KEYIN, VALUEIN, KEYOUT, VALUEOUT>, KEYOUT and VALUEOUT are the output key and value types of the reduce function.

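Your value types must implement the Writable interface, and your key types must implement WritableComparable, because keys are sorted during the shuffle. Common implementations include Text, IntWritable, LongWritable, FloatWritable, and DoubleWritable, and you can also create custom Writable classes. If the map output types differ from the final output types, declare them in the driver with job.setMapOutputKeyClass() and job.setMapOutputValueClass(); the final types are set with job.setOutputKeyClass() and job.setOutputValueClass(). The output format is typically determined by the OutputFormat class, such as TextOutputFormat or SequenceFileOutputFormat.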

17. How do you handle data dependencies between Map and Reduce tasks?

Data dependencies between Map and Reduce tasks in Hadoop (or similar MapReduce frameworks) are implicitly handled by the framework's shuffle and sort phase. Map tasks output key-value pairs. The framework groups all values associated with the same key together and sends them to a single Reduce task. This ensures that all data needed to process a specific key is available to the corresponding Reduce task.

If more complex dependencies are needed (e.g., a Reduce task needs data generated by another Reduce task), you might need to chain multiple MapReduce jobs together. The output of the first job becomes the input of the second job. Also, techniques like using a distributed cache (e.g., Hadoop's DistributedCache) can provide additional data to Map or Reduce tasks; however, this is generally for smaller read-only datasets and not for large-scale dependencies between tasks.

18. What is the role of the JobTracker/ResourceManager in MapReduce?

In MapReduce (specifically Hadoop 1.x), the JobTracker is the central coordinator responsible for resource management and job scheduling. It receives job submissions from clients, divides the job into tasks (map and reduce), and assigns these tasks to TaskTrackers running on different nodes in the cluster.

In Hadoop 2.x (YARN), these responsibilities are split. The ResourceManager handles cluster-wide resource management: it tracks the resources that NodeManagers (the successors to TaskTrackers) report and allocates containers to applications. Each application gets its own ApplicationMaster (for MapReduce jobs, the MRAppMaster), which requests containers from the ResourceManager, asks NodeManagers to launch its map and reduce tasks in them, and monitors those tasks.

19. What are TaskTrackers/NodeManagers in the MapReduce framework?

In the MapReduce framework (like Hadoop), TaskTrackers (in older Hadoop versions) or NodeManagers (in newer versions like Hadoop 2.x - YARN) are worker nodes responsible for executing tasks assigned by the JobTracker (older) or ResourceManager (newer). They run on individual machines within the cluster.

Key responsibilities include:

- Launching and monitoring Map and Reduce tasks.

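- Reporting status: TaskTrackers send heartbeats with task progress, completion, and failures to the JobTracker. NodeManagers report node health and container status to the ResourceManager, while in YARN the progress of individual MapReduce tasks is reported to the job's ApplicationMaster.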

- Managing resources (CPU, memory, disk) on their respective nodes to ensure tasks have the necessary resources to run. They act as the slave daemons for the master JobTracker or ResourceManager in the cluster.

20. Explain how to write a basic MapReduce program to count word occurrences.

MapReduce for word count involves three main stages: Map, Shuffle & Sort, and Reduce.

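- Map: The mapper takes input splits (e.g., lines of text) and emits key-value pairs. For word count, the mapper tokenizes the input and emits <word, 1> for each word.

```java
// Example Mapper
import java.io.IOException;
import org.apache.hadoop.io.*;
import org.apache.hadoop.mapreduce.Mapper;

public class WordCountMapper extends Mapper<LongWritable, Text, Text, IntWritable> {
    private static final IntWritable ONE = new IntWritable(1);
    private final Text word = new Text(); // reused to avoid allocating per token

    @Override
    public void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
        for (String token : value.toString().split("\\s+")) {
            if (token.isEmpty()) continue; // leading whitespace yields an empty token
            word.set(token);
            context.write(word, ONE);
        }
    }
}
```

- Shuffle & Sort: The framework automatically shuffles and sorts the mapper outputs by key (the word, in this case).

- Reduce: The reducer receives the sorted key-value pairs and aggregates the values for each key. For word count, the reducer sums the counts for each word.

```java
// Example Reducer
import java.io.IOException;
import org.apache.hadoop.io.*;
import org.apache.hadoop.mapreduce.Reducer;

public class WordCountReducer extends Reducer<Text, IntWritable, Text, IntWritable> {
    @Override
    public void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {
        int sum = 0;
        for (IntWritable value : values) {
            sum += value.get(); // add up the 1s (or partial sums) for this word
        }
        context.write(key, new IntWritable(sum));
    }
}
```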

21. How do you specify the number of reducers in a MapReduce job, and what factors influence this decision?

The number of reducers in a MapReduce job is specified through configuration. In Hadoop MapReduce, this is the mapreduce.job.reduces property, which you can set programmatically in the driver (for example, with job.setNumReduceTasks(n)) or on the command line when submitting the job (for example, -D mapreduce.job.reduces=n, if the driver parses generic options through ToolRunner).

Several factors influence how many reducers to use. Data volume is a key factor: larger datasets generally benefit from more reducers working in parallel. The cost of the reduce function also matters, since more expensive operations may warrant more reducers. Too few reducers create bottlenecks, while too many add task-startup overhead and produce many small output files. The cluster's available resources (number of nodes and containers) also determine how many reducers can run at once. Data skew is another important factor: if keys are unevenly distributed, some reducers take longer than others, and adding reducers does not help when a single key dominates, because all of that key's values still go to one reducer; that case calls for salting or a custom partitioner. Experimentation is often necessary to fine-tune the reducer count for optimal performance.
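As a concrete starting point, the Apache Hadoop MapReduce tutorial suggests 0.95 or 1.75 multiplied by (number of nodes × maximum containers per node): with 0.95, all reducers can launch at once and start fetching map output as the maps finish; with 1.75, faster nodes run a second wave of reducers, which improves load balancing. The helper below only does that arithmetic; the cluster size in main() is hypothetical, and the result is a baseline for experimentation, not a rule.

```java
class ReducerCountHeuristic {
    // 0.95 x (nodes x max containers per node): every reducer can start in the first wave.
    // Integer arithmetic (x 95 / 100) avoids floating-point rounding; result is at least 1.
    static int oneWave(int nodes, int maxContainersPerNode) {
        return Math.max(1, nodes * maxContainersPerNode * 95 / 100);
    }

    // 1.75 x (nodes x max containers per node): faster nodes run a second wave of reducers.
    static int twoWaves(int nodes, int maxContainersPerNode) {
        return Math.max(1, nodes * maxContainersPerNode * 175 / 100);
    }

    public static void main(String[] args) {
        // Hypothetical cluster: 10 nodes, at most 8 containers each.
        System.out.println(oneWave(10, 8) + " " + twoWaves(10, 8)); // 76 140
    }
}
```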

22. What are counters in MapReduce, and how can they be used?

Counters in MapReduce are global counters that track the frequency of events during a MapReduce job. They are useful for gathering statistics about the job's execution, diagnosing problems, and monitoring performance. They can be built-in (provided by the MapReduce framework) or user-defined (created by the programmer).

Counters can be used for various purposes, such as: tracking the number of processed records, identifying malformed input records, monitoring the frequency of specific events, and measuring the efficiency of certain operations. To use a counter, you increment it in your mapper or reducer code:

```java
context.getCounter("MyGroup", "MyCounter").increment(1);
```

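This code snippet increments a counter named MyCounter within a group called MyGroup. Counters can also be defined as a Java enum and incremented with context.getCounter(SomeEnum.SOME_VALUE).increment(1) (SomeEnum being a placeholder for your own enum), in which case the enum's class name serves as the group name. The results are available in the MapReduce web UI, in the job's console output, or programmatically after the job completes through job.getCounters().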

23. How can you handle different data types in MapReduce?

MapReduce handles different data types primarily through serialization and deserialization: in Hadoop, keys and values move between phases as serialized objects, so every type must define how it is written and read. Input often arrives as text, which the map function parses into integers, floats, or custom objects; the reduce function then consumes whatever types the mapper emits.

Custom data types can be handled by implementing Hadoop's Writable interface (or WritableComparable for types used as keys). Writable provides methods for serialization (writing the object's fields to a DataOutput stream) and deserialization (reading them back from a DataInput stream). You can also use libraries like Avro or Protocol Buffers for more structured serialization and schema evolution. These libraries define schemas for the data and provide efficient serialization and deserialization, simplifying data handling across the MapReduce pipeline. Example:

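```java
import java.io.DataInput;
import java.io.DataOutput;
import java.io.IOException;
import org.apache.hadoop.io.Writable;

public class CustomDataType implements Writable {
    private int value;
    private String name;

    // Hadoop creates Writables via reflection, so keep a public no-arg constructor.
    public CustomDataType() {}

    // Other constructors, getters, and setters

    @Override
    public void write(DataOutput out) throws IOException {
        out.writeInt(value);
        out.writeUTF(name); // writeUTF throws NullPointerException if name is null
    }

    @Override
    public void readFields(DataInput in) throws IOException {
        // Read fields in exactly the order write() wrote them.
        value = in.readInt();
        name = in.readUTF();
    }
}
```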
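Because readFields() must read fields in exactly the order write() wrote them, a quick round-trip check is useful. This sketch uses only java.io: RecordRoundTrip is a hypothetical stand-in that copies the write()/readFields() signatures without implementing the Hadoop interface, so it runs without Hadoop on the classpath.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInput;
import java.io.DataInputStream;
import java.io.DataOutput;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;

class RecordRoundTrip {
    int value;
    String name;

    RecordRoundTrip() {}

    RecordRoundTrip(int value, String name) {
        this.value = value;
        this.name = name;
    }

    // Same signatures as Writable.write/readFields, without the Hadoop dependency.
    void write(DataOutput out) throws IOException {
        out.writeInt(value);
        out.writeUTF(name);
    }

    void readFields(DataInput in) throws IOException {
        value = in.readInt(); // must mirror the order used in write()
        name = in.readUTF();
    }

    // Serialize to bytes, then deserialize into a fresh no-arg instance.
    static RecordRoundTrip roundTrip(RecordRoundTrip original) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            try (DataOutputStream out = new DataOutputStream(bytes)) {
                original.write(out);
            }
            RecordRoundTrip copy = new RecordRoundTrip();
            try (DataInputStream in = new DataInputStream(new ByteArrayInputStream(bytes.toByteArray()))) {
                copy.readFields(in);
            }
            return copy;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        RecordRoundTrip copy = roundTrip(new RecordRoundTrip(42, "alpha"));
        System.out.println(copy.value + " " + copy.name); // 42 alpha
    }
}
```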

24. Can you walk me through the steps of setting up and running a simple MapReduce job on a local machine?

To set up and run a simple MapReduce job on a local machine (assuming Hadoop is installed; local, or standalone, mode is enough for testing):

- Prepare your data: create an input directory and place your input files inside it.

- Write your MapReduce code: a Mapper class (extending Mapper) that transforms input records into key-value pairs, a Reducer class (extending Reducer) that aggregates the values by key, and a driver class that configures the job (input and output paths, mapper and reducer classes, output types) and submits it.

- Package the code into a JAR file.

- Run the job from the command line, specifying the JAR file, the driver class, and the input and output directories. For Hadoop this would be something like: hadoop jar <jar_file> <main_class> <input_directory> <output_directory>. The output directory must not already exist, or the job fails.

- Once the job is complete, inspect the results in the output directory. They are split into one file per reducer (named part-r-00000, part-r-00001, and so on).

25. What are some best practices for writing efficient MapReduce code?

To write efficient MapReduce code, consider these best practices:

- Data Locality: Maximize data locality by ensuring the MapReduce job runs on nodes where the input data is stored. This minimizes data transfer over the network.

- Combiners: Use combiners to reduce the amount of data transferred from mappers to reducers. Combiners perform local aggregation on the mapper output before sending it to the reducers. This helps minimize network traffic.

- Data Filtering/Selection: Filter data as early as possible in the mapper phase to reduce the amount of data processed by the reducers. Avoid unnecessary processing of irrelevant data. For example, use if statements or other filtering logic within the mapper function.

- Compression: Compress intermediate data written to disk by the mappers and read by the reducers. This reduces disk I/O and network bandwidth usage.

- Appropriate Data Types: Choose efficient data types to minimize storage space and processing overhead. Consider using integers or longs instead of strings when possible.

- Reduce Shuffle Size: Minimize the amount of data shuffled from mappers to reducers by optimizing the mapper output and using combiners effectively.

- Partitioning: Use custom partitioners to evenly distribute the workload among reducers and avoid data skew. Skewed data can cause some reducers to take much longer than others, leading to performance bottlenecks.

- Avoid Creating Excessive Objects: Minimize object creation within the map and reduce functions, as it can lead to garbage collection overhead and performance degradation.

- Optimize Reducer Logic: The reducer is often a bottleneck because it must aggregate large amounts of data, so keep its processing logic as efficient as possible. In-memory aggregation can help, as long as the per-key state fits comfortably in the task's heap.

26. What are some alternatives to MapReduce, and when might you choose one over MapReduce?

Alternatives to MapReduce include Spark, Flink, and Dask. Spark excels when iterative processing is needed, as it leverages in-memory computation, making it significantly faster than MapReduce for algorithms involving multiple passes over the data. Flink is a strong choice for stream processing applications due to its low-latency and fault-tolerance capabilities. Dask is suitable for scaling Python workflows, particularly those involving NumPy, Pandas, and scikit-learn, and can be deployed on single machines or distributed clusters.

MapReduce might be preferred when dealing with very large datasets where fault tolerance and scalability are paramount, and the processing logic can be expressed as simple map and reduce operations. It is also a good option if the infrastructure is already set up for MapReduce and the task doesn't require iterative processing or real-time analysis. However, for most modern data processing needs, the alternatives often offer better performance and flexibility.

27. How can you handle skewed data in MapReduce to ensure even load distribution across reducers?

Skewed data in MapReduce can lead to uneven load distribution, causing some reducers to take significantly longer than others. To mitigate this, several techniques can be employed.

- Custom Partitioning: Instead of relying on the default hash-based partitioning, implement a custom partitioning function that takes data distribution into account. This function should intelligently distribute keys across reducers to balance the workload.

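- Salting: Add a salt (for example, a random number from a small range) to each key before partitioning. This splits one hot key into several distinct keys that hash to different reducers. Because each reducer then produces only a partial result for the original key, a second aggregation step (often a second MapReduce job) strips the salt and combines the partial results. This works for aggregations whose partial results can be merged, such as sums and counts.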

- Combiners: Use combiners to perform local aggregation of data on the mappers before sending it to the reducers. This reduces the amount of data transferred across the network and the load on the reducers.

- Pre-processing: Sample the data to identify skewed keys and use this information to optimize partitioning strategies.

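For example, a custom partitioner in Java that gives one known hot key its own reducer and hashes all other keys across the remaining reducers (the key name is a hypothetical placeholder for a key found by sampling):

```java
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Partitioner;

public class CustomPartitioner extends Partitioner<Text, IntWritable> {
    // Hypothetical hot key, e.g. identified by sampling the input beforehand.
    private static final Text HOT_KEY = new Text("popular-key");

    @Override
    public int getPartition(Text key, IntWritable value, int numReduceTasks) {
        if (numReduceTasks <= 1) {
            return 0;
        }
        if (key.equals(HOT_KEY)) {
            return numReduceTasks - 1; // the last reducer handles only the hot key
        }
        // Mask the sign bit so the result is never negative, then spread the other keys.
        return (key.hashCode() & Integer.MAX_VALUE) % (numReduceTasks - 1);
    }
}
```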
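Salting is easiest to see end to end in a small plain-Java simulation. SaltedCount and its two stages are hypothetical names; in a real pipeline each stage would usually be its own MapReduce job, and the salt would typically be random rather than derived from the record index, which is used here only to keep the output deterministic.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class SaltedCount {
    // Stage 1: append a salt in [0, numSalts) so one hot key becomes several reducer keys.
    // The salt here is the record index mod numSalts, to keep the sketch deterministic.
    static Map<String, Long> stageOne(List<String> keys, int numSalts) {
        Map<String, Long> partialCounts = new HashMap<>();
        for (int i = 0; i < keys.size(); i++) {
            String salted = keys.get(i) + "#" + (i % numSalts);
            partialCounts.merge(salted, 1L, Long::sum);
        }
        return partialCounts;
    }

    // Stage 2: strip the salt and merge the partial counts for each original key.
    static Map<String, Long> stageTwo(Map<String, Long> partialCounts) {
        Map<String, Long> totals = new HashMap<>();
        for (Map.Entry<String, Long> e : partialCounts.entrySet()) {
            String salted = e.getKey();
            String original = salted.substring(0, salted.lastIndexOf('#'));
            totals.merge(original, e.getValue(), Long::sum);
        }
        return totals;
    }

    public static void main(String[] args) {
        List<String> keys = List.of("hot", "hot", "hot", "hot", "hot", "hot", "cold");
        Map<String, Long> partial = stageOne(keys, 3);
        // "hot" is now spread over hot#0, hot#1, and hot#2, with 2 records each.
        System.out.println(partial.size() + " salted keys"); // 4 salted keys
        System.out.println(stageTwo(partial));
    }
}
```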

28. What considerations must be made when developing MapReduce jobs for very large datasets?

When developing MapReduce jobs for very large datasets, several considerations are critical for performance and efficiency. Data partitioning is paramount: make sure your input is split into chunks that can be processed in parallel. Optimize the mapper and reducer functions to minimize data shuffling across the network, for example by using combiners and compact data structures to reduce intermediate data volume. Also consider data locality: Hadoop attempts to run map tasks on nodes where the input data resides to reduce network traffic. Compressing input, intermediate, and output data can significantly reduce storage costs and I/O overhead; for large inputs, prefer splittable formats, because a single gzip-compressed text file cannot be split and is processed by one mapper.

Furthermore, memory management is essential: avoid creating large objects in memory that could lead to out-of-memory errors. Monitor job execution closely using the Hadoop web UI and logs to identify bottlenecks and resource constraints. Configuration tuning, such as adjusting the number of mappers and reducers, the buffer sizes, and the heap size, can substantially affect job performance. Handle failures gracefully by relying on the fault tolerance mechanisms Hadoop provides. Finally, consider columnar formats like Parquet or ORC, which can improve performance through their columnar storage and compression capabilities.

Intermediate MapReduce interview questions

1. How would you design a MapReduce job to find the median of a very large dataset, considering it won't fit in memory?

To find the median of a very large dataset using MapReduce, a common approach involves these steps:

- Sampling and Initial Partitioning: Take a small, random sample of the dataset that fits in memory and compute its median. Use this approximate median to choose a narrow band around it, which divides the data into three ranges: less_than_median, around_median, and greater_than_median. A first MapReduce job reads the full dataset, tags each record with the range it falls into, and counts the records in each range.

- Locating the Median: With n records in total, the (lower) median has rank k = ceil(n/2); for an even n, you can average the elements at ranks n/2 and n/2 + 1. If count(less_than_median) < k <= count(less_than_median) + count(around_median), the median lies inside around_median. Otherwise, the sample was unrepresentative, so shift or widen the band and rerun the counting pass.

- Refinement (Second MapReduce Job): If the around_median partition is still too large to fit in memory, repeat the sampling and partitioning process within just that partition. Otherwise, send all around_median records to a single reducer, sort them, and take the element at position k - count(less_than_median) in the sorted list; that element is the median of the full dataset.
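Here is a single-process sketch of the counting and selection logic in plain Java, assuming the around_median range has already been chosen from a sample and is written as a numeric band [low, high]. BandMedian is a hypothetical name; the loop plays the role of the first job's map pass, and sorting the in-band records plays the role of the single reducer.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

class BandMedian {
    // Returns the element with 1-based rank k; [low, high] is the band chosen from a sample.
    static long selectRank(List<Long> data, long k, long low, long high) {
        long below = 0;
        List<Long> band = new ArrayList<>(); // what the single reducer would receive
        // Counting pass ("map"): count records below the band, keep only in-band records.
        for (long x : data) {
            if (x < low) {
                below++;
            } else if (x <= high) {
                band.add(x);
            }
        }
        if (k <= below || k > below + band.size()) {
            throw new IllegalStateException("rank " + k + " is outside [low, high]; shift the band and retry");
        }
        // Selection ("reduce"): sort the small band and index into it.
        Collections.sort(band);
        return band.get((int) (k - below - 1));
    }

    // Lower median, rank ceil(n / 2).
    static long lowerMedian(List<Long> data, long low, long high) {
        return selectRank(data, (data.size() + 1) / 2, low, high);
    }

    public static void main(String[] args) {
        List<Long> data = List.of(9L, 1L, 8L, 2L, 7L, 3L, 6L, 4L, 5L);
        System.out.println(lowerMedian(data, 4, 6)); // 5
    }
}
```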

2. Explain how to handle skewed data in MapReduce to avoid reducer overload. Suggest some techniques.

Skewed data in MapReduce can cause some reducers to process significantly more data than others, leading to reducer overload and performance bottlenecks. To mitigate this, several techniques can be employed. One common approach is to use a combiner function to perform local aggregation on the mappers before sending data to the reducers. This reduces the amount of data being transferred across the network and the load on the reducers.

Another effective technique is salting. Salting involves adding a random prefix or suffix to the key before it is hashed for reducer assignment, which spreads a skewed key across several reducers; a follow-up aggregation step then merges the partial results for each original key. A more sophisticated approach is custom partitioning, which lets you define your own partitioning function that takes the data distribution into account and assigns keys to reducers in a more balanced way. For instance, if you know that certain keys are very frequent, your custom partitioner can route each of them to a different reducer with a specific rule.

3. Describe the steps involved in implementing a secondary sort in MapReduce. Why is it useful?

Secondary sort in MapReduce lets the values for each key reach the reducer already sorted by a second field, instead of in arbitrary order. This is achieved by utilizing the MapReduce framework's partitioning, sorting, and grouping capabilities.

Here are the steps:

- Composite Key Creation: Create a composite key in the mapper consisting of the natural key (the key you want to group by) and a secondary key (the key you want to sort by within each group).

- Custom Partitioner: Implement a custom partitioner to ensure that all records with the same natural key are sent to the same reducer.

- Custom Comparator (Sorting): Implement a custom sort comparator (registered with `job.setSortComparatorClass()`) that orders composite keys first by the natural key and then by the secondary key, so that within each natural key the records arrive in secondary-key order.

- Custom Grouping Comparator: Implement a custom grouping comparator that only compares the natural key part of the composite key. This ensures that all records with the same natural key are grouped together in the reducer, even if they have different secondary keys.

Secondary sort is useful when you need to process data in a specific order within each group defined by the primary key. For example, you might want to analyze website activity logs in chronological order for each user, or process financial transactions in the order they occurred for each account.
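The four steps can be simulated in a few lines of Python to show how they fit together. This sketch assumes records are `(user, timestamp, event)` tuples; the partition, sort, and grouping steps stand in for Hadoop's partitioner, sort comparator, and grouping comparator, and `secondary_sort` is a hypothetical name.

```python
import zlib
from itertools import groupby

def secondary_sort(records, num_reducers=2):
    """Return {user: [events in timestamp order]} by simulating a secondary sort."""
    # Mapper: emit a composite key (natural key, secondary key)
    mapped = [((user, ts), event) for user, ts, event in records]

    # Partitioner: use only the natural key, so one user's records share a reducer
    partitions = [[] for _ in range(num_reducers)]
    for composite, event in mapped:
        p = zlib.crc32(composite[0].encode("utf-8")) % num_reducers
        partitions[p].append((composite, event))

    result = {}
    for partition in partitions:
        # Sort comparator: order by natural key, then by secondary key
        partition.sort(key=lambda kv: kv[0])
        # Grouping comparator: group on the natural key only
        for user, group in groupby(partition, key=lambda kv: kv[0][0]):
            # Reducer: values arrive already ordered by timestamp
            result[user] = [event for _, event in group]
    return result
```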

4. How can you implement a distributed cache in MapReduce? What are the benefits and drawbacks?

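In MapReduce, a distributed cache can be implemented using Hadoop's DistributedCache class, which is deprecated in Hadoop 2 in favor of `Job.addCacheFile()` / `Job.addCacheArchive()` in the driver and `context.getCacheFiles()` in tasks. You add files or archives to the cache either programmatically or with the `-files` or `-archives` generic options when submitting the job (these options are parsed when the driver runs through `ToolRunner`). The files are copied to the local disks of the task nodes before the tasks start, and the mapper or reducer then reads them from the local file system, typically once in `setup()`.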

Benefits include reduced network I/O (data is localized), improved performance (data is readily available), and simplified code (no need to fetch data from a remote source repeatedly). Drawbacks involve the cache size limitations (must fit on local disks), potential for data staleness (cache is not automatically updated), and increased job setup time (due to file distribution). Also, managing the distributed cache introduces complexity. For example, ensuring that files are correctly distributed, handling updates, and monitoring file sizes are important tasks when using the distributed cache.

5. Explain how to debug a MapReduce job that fails due to an out-of-memory error on a mapper. What tools can you use?

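When a MapReduce job fails due to an out-of-memory (OOM) error on a mapper, the mapper's JVM heap could not hold what the task was keeping in memory: oversized records, large in-memory collections, or simply a heap that is too small for the workload. (YARN can also kill a container for exceeding its physical memory limit, which appears as a different message in the logs.) Debugging involves identifying the cause of excessive memory usage and implementing strategies to reduce it. Tools like Hadoop's web UIs (ResourceManager, NodeManager, and JobHistory Server), YARN logs, and a Java profiler can be used.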

Common debugging steps include:

- Analyzing logs: Examine the YARN logs for the specific mapper task that failed (for example with `yarn logs -applicationId <application_id>`) to identify the exact point of failure and any related error messages. The `OutOfMemoryError` message and its stack trace will provide details.

- Reviewing code: Check the mapper code for inefficient data structures, large intermediate results being stored in memory, or memory leaks. Look for places where you are storing large objects in memory without releasing them.

- Sampling Input: Try running the mapper on a small sample of input data to see if the issue can be replicated and easily debugged locally.

- Increasing memory allocation: As a temporary workaround, increase the memory allocated to the mapper using `mapreduce.map.memory.mb` (the YARN container size) and `mapreduce.map.java.opts` (the JVM options, including `-Xmx`). Keep `-Xmx` below the container size, commonly around 80% of it, so the JVM has headroom for non-heap memory. However, this only masks the underlying problem and is not a long-term solution; address the problem by optimizing code or filtering data.

- Profiling: If necessary, use a Java profiler (e.g., VisualVM, JProfiler) to analyze the mapper's memory usage during execution and pinpoint memory-intensive operations. This requires configuring the MapReduce job to enable profiling, for example by adding profiler or JMX agent options to `mapreduce.map.java.opts`.

- Reducing data: Filter or pre-process the input data to reduce the amount of data that the mapper needs to process. This can involve techniques like data sampling or using a more selective input format.

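- Optimizing data structures: Ensure efficient use of data structures. Avoid storing unnecessary copies of data, use appropriate (ideally primitive) data types, reuse Writable objects across calls to `map()` instead of allocating new ones for every record, and consider compact or compressed representations for data that must stay in memory.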
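For the common case of a mapper that accumulates large intermediate results in a dictionary (in-mapper combining), one remedy is to cap the buffer and flush partial results when it fills. The following Python sketch assumes a word-count-style job and a hypothetical `max_entries` threshold. Because the reducer sums partial counts anyway, flushing early changes only how many pairs are emitted, not the final totals.

```python
def word_count_mapper(lines, max_entries=10_000):
    """In-mapper combining with a bounded buffer: emit partial counts whenever the buffer fills up."""
    buffer = {}
    for line in lines:
        for word in line.split():
            buffer[word] = buffer.get(word, 0) + 1
            if len(buffer) >= max_entries:
                # Flush instead of letting the dict grow without limit
                yield from buffer.items()
                buffer.clear()
    yield from buffer.items()  # final flush, like cleanup() in a Hadoop Mapper
```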

6. Describe how you would use MapReduce to perform a relational join between two very large datasets.

To perform a relational join between two very large datasets using MapReduce, I would follow these steps:

- Map Phase: Each mapper reads a chunk of either dataset A or dataset B. The mapper emits key-value pairs where the key is the join key (the column used for joining the two datasets) and the value is a tuple containing the table identifier (A or B) and the entire row from that table.

Example: `(join_key, (A, row_from_A))` or `(join_key, (B, row_from_B))`

- Reduce Phase: The reducer receives all the key-value pairs for a particular join key. It separates the values into two groups: rows from dataset A and rows from dataset B. For each row from A and each row from B sharing the same join key, the reducer emits the joined row.

Example: `Joined_Row = row_from_A + row_from_B`.
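The reduce-side join can be simulated in plain Python, with a dictionary standing in for the shuffle. This sketch assumes rows are tuples and an inner join is wanted; the function names are hypothetical. The reducer buffers the rows from both sides of a key in memory, which is why very common join keys can cause memory pressure in a real job.

```python
from collections import defaultdict

def map_tagged(table_tag, rows, key_index):
    """Mapper: emit (join_key, (table_tag, row)) for every row."""
    for row in rows:
        yield row[key_index], (table_tag, row)

def reduce_join(key, tagged_rows):
    """Reducer: split values by source table, then emit every A x B combination (inner join)."""
    rows_a, rows_b = [], []
    for tag, row in tagged_rows:
        (rows_a if tag == "A" else rows_b).append(row)
    for a in rows_a:
        for b in rows_b:
            yield key, a + b

def reduce_side_join(table_a, table_b, key_a=0, key_b=0):
    shuffled = defaultdict(list)  # simulated shuffle: group map output by join key
    for k, v in map_tagged("A", table_a, key_a):
        shuffled[k].append(v)
    for k, v in map_tagged("B", table_b, key_b):
        shuffled[k].append(v)
    return [joined for k in sorted(shuffled) for _, joined in reduce_join(k, shuffled[k])]
```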

7. How would you optimize a MapReduce job for network bandwidth? What are the main bottlenecks?

To optimize a MapReduce job for network bandwidth, focus on reducing the amount of data shuffled between the map and reduce phases. The primary bottleneck is typically the shuffling of intermediate data across the network.

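Several strategies can be applied. Compressing the map output (the intermediate data that is shuffled) significantly reduces the amount of data transmitted; enable it with `mapreduce.map.output.compress` and choose a codec with `mapreduce.map.output.compress.codec`. Compressing the final job output also reduces the traffic generated by HDFS replication. Combiners perform local aggregation of map output before shuffling, reducing the volume of data sent to the reducers. Data locality is critical: ensure map tasks are scheduled on nodes where the input data resides to minimize network traffic for reading input. Finally, consider partitioning strategies that distribute data evenly across reducers and avoid skew, which can lead to uneven network load. For map output, fast codecs such as Snappy or LZ4 are common choices, since splittability does not matter for intermediate data. Splittability matters for job input: gzip files are not splittable, so a large gzip file is read by a single mapper, whereas bzip2 is splittable.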

8. Explain how to handle duplicate records in a MapReduce job to ensure accurate results. What are some strategies?

Handling duplicate records in MapReduce is crucial for accurate results. The most common strategy is to make the record itself (or the fields that identify it) the map output key. The shuffle then brings every copy of a record to the same reducer, which emits it only once. Another strategy involves a dedicated deduplication MapReduce job that runs before the main processing job.

Specific techniques include:

- Using a `Set` in the mapper: Store seen records in a `Set` and only emit a record if it is not already in the `Set`. This only removes duplicates within a single mapper's input split, and it works only if that split's distinct records fit in memory, so it is best treated as an optimization in front of reducer-side deduplication.

- Using a composite key: If duplicates are defined by certain fields, create a composite key consisting of these fields. This lets the reducer identify and process only unique combinations.

- Deduplicating in the reducer: The reducer receives all values for a given key. It can then iterate through the values and remove duplicates before performing further calculations.
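The key-based strategy can be sketched in Python as follows, assuming records are dictionaries and that duplicates are defined by a hypothetical list of identifying fields; a dictionary of lists simulates the shuffle.

```python
from collections import defaultdict

def dedup_mapper(record, key_fields):
    """Emit the identifying fields as the key so all copies meet at one reducer."""
    yield tuple(record[f] for f in key_fields), record

def dedup_reducer(key, records):
    """Emit one representative record per key; the remaining copies are duplicates."""
    yield next(iter(records))

def deduplicate(records, key_fields):
    groups = defaultdict(list)  # simulated shuffle
    for rec in records:
        for k, v in dedup_mapper(rec, key_fields):
            groups[k].append(v)
    return [out for k, vs in groups.items() for out in dedup_reducer(k, vs)]
```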

9. Describe how to implement a custom partitioner in MapReduce. Why would you need one?

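A custom partitioner in MapReduce controls which reducer each map output record is sent to. You implement it by creating a class that extends the `Partitioner` class, overriding the `getPartition()` method, and registering the class with `job.setPartitionerClass()`. This method takes the key, value, and number of partitions (reducers) as input and must return an integer from 0 to the number of partitions minus 1, identifying the target reducer. For comparison, the default `HashPartitioner` computes `(key.hashCode() & Integer.MAX_VALUE) % numReduceTasks`.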

You might need a custom partitioner for several reasons: to improve load balancing by distributing data more evenly across reducers, to ensure that all data for a specific key or a set of related keys goes to the same reducer (for example, to perform computations involving all data for a particular user in one place), or to optimize performance by routing data to specific reducers based on data characteristics.
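As one example of routing by data characteristics, the following Python sketch builds a range partitioner from sorted split points, in the spirit of Hadoop's `TotalOrderPartitioner` (which reads its split points from a partition file, often produced with `InputSampler`). The function names are hypothetical and the signature only mimics `getPartition(key, value, numPartitions)`.

```python
import bisect

def make_range_partitioner(split_points):
    """Build a getPartition-style function from split points.

    With split points [s0, s1, ...], keys < s0 go to partition 0,
    keys in [s0, s1) go to partition 1, and so on.
    """
    points = sorted(split_points)

    def get_partition(key, value, num_partitions):
        if num_partitions != len(points) + 1:
            raise ValueError("need exactly len(split_points) + 1 partitions")
        return bisect.bisect_right(points, key)

    return get_partition
```

Because each reducer receives a contiguous key range, concatenating the reducer outputs in partition order yields globally sorted output.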

10. How can you use MapReduce to build an inverted index for a large collection of documents?

MapReduce can build an inverted index by processing documents in parallel. The mapper emits key-value pairs where the key is a word found in a document, and the value is the document ID. The reducer receives all pairs with the same word as the key. It aggregates the document IDs for each word into a list, creating the inverted index entry: word -> [document1, document2, ...]. The final output is the inverted index.
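A minimal Python simulation of this job, assuming documents are plain strings keyed by a document ID and using a simplistic lowercase alphanumeric tokenizer chosen only for illustration (function names are hypothetical):

```python
import re
from collections import defaultdict

def index_mapper(doc_id, text):
    """Emit (word, doc_id) once per distinct word in the document."""
    for word in set(re.findall(r"[a-z0-9]+", text.lower())):
        yield word, doc_id

def index_reducer(word, doc_ids):
    """Collect a sorted, de-duplicated posting list for one word."""
    return word, sorted(set(doc_ids))

def build_inverted_index(docs):
    shuffled = defaultdict(list)  # simulated shuffle
    for doc_id, text in docs.items():
        for word, d in index_mapper(doc_id, text):
            shuffled[word].append(d)
    return dict(index_reducer(w, ids) for w, ids in shuffled.items())
```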

11. Explain how to handle different data formats (e.g., CSV, JSON, Avro) in a MapReduce job.

To handle different data formats in a MapReduce job, you need to use appropriate input and output formats. For CSV, you can use `TextInputFormat` and parse each line with a CSV library; quoted fields that contain embedded newlines need a custom record reader, because a plain line reader would split them. For JSON, assuming newline-delimited JSON (one object per line), use `TextInputFormat` and a JSON parsing library (like Jackson or Gson) within the mapper to convert each line into a JSON object. Avro uses `AvroKeyInputFormat` and `AvroKeyOutputFormat` from the `avro-mapred` library (not Hadoop itself), with the schemas registered through `AvroJob`, for example `AvroJob.setInputKeySchema()`.

The key is defining the input format correctly and parsing the data within the mapper. For output, choose a suitable output format (e.g., `TextOutputFormat` for plain text, `SequenceFileOutputFormat` for binary key-value data) and serialize your data accordingly in the reducer. With the right input/output formats and correct parsing, MapReduce can process diverse data structures.
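Parsing inside the mapper might look like the following Python sketch. It assumes hypothetical records carrying a user and an amount, in either CSV or newline-delimited JSON. The standard `csv` module handles quoted fields that contain commas, but because it is fed one line at a time it cannot rejoin a quoted field that spans lines, which is exactly the case where a custom record reader is needed.

```python
import csv
import json

def parse_csv_line(line):
    """Parse one CSV record, honoring quoted fields that contain commas."""
    return next(csv.reader([line]))

def parse_json_line(line):
    """Parse one newline-delimited JSON record."""
    return json.loads(line)

def mapper(line, fmt):
    """Normalize either format to a (user, amount) pair."""
    if fmt == "csv":
        user, amount = parse_csv_line(line)[:2]
    else:
        record = parse_json_line(line)
        user, amount = record["user"], record["amount"]
    yield user, float(amount)
```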

12. Describe the process of implementing a distributed counter in MapReduce. What are its use cases?

Implementing a distributed counter in MapReduce involves leveraging the framework's built-in counter mechanism. Counters are global aggregate values that can be incremented within mappers and reducers. To implement a counter, define a counter group and counter name. Then, within the mapper or reducer, use the context.getCounter(groupName, counterName).increment(value) method to increment the counter. MapReduce aggregates these increments across all tasks and provides a final, global count at the end of the job.

Use cases for distributed counters include:

- Counting occurrences of specific events: Track the number of times a particular error occurs or a specific data pattern is observed.

- Monitoring data quality: Count the number of invalid or missing records to assess data cleanliness.

- Tracking progress: Monitor the number of records processed or tasks completed to gauge job progress.

- Debugging: Counters help identify the source and frequency of issues.

- Performance analysis: Counters help quantify the work a job does, such as the number of operations performed or the total time spent in a given step.

13. How would you design a MapReduce job to identify the top-K frequent items in a very large dataset?

To identify the top-K frequent items using MapReduce, I'd use a two-stage approach. The first MapReduce job would count the occurrences of each item. The mapper would emit (item, 1) for each item encountered. The reducer would then sum the counts for each item, outputting (item, count). The second MapReduce job would then identify the top-K items. The mapper would read the output of the first job. To ensure that all counts are accessible to a single reducer for top-K selection, the mapper emits (1, (item, count)). The reducer would maintain a priority queue (min-heap) of size K, adding items to the queue and evicting the item with the smallest count when the queue size exceeds K. Finally, the reducer would output the items in the queue as the top-K frequent items.

This approach handles very large datasets by distributing the initial counting across many mappers and reducers. The final reducer receives only one (item, count) pair per unique item, so the top-K selection stays manageable even when the initial dataset is very large. The 1 in (1, (item, count)) is a dummy key that forces all pairs to go to a single reducer. To shrink that reducer's input further, each mapper of the second job can keep its own local top-K heap and emit only those K pairs when it finishes.
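The two jobs can be simulated in Python with `collections.Counter` and `heapq`. The input splits and function names below are hypothetical, and the single dummy key mirrors the `(1, (item, count))` trick.

```python
import heapq
from collections import Counter, defaultdict

def count_job(splits):
    """Job 1: word count, simulated as per-split map output summed by key."""
    totals = Counter()
    for split in splits:
        totals.update(split)  # each item counts once, like the mapper's (item, 1)
    return totals

def top_k_job(counts, k):
    """Job 2: every pair goes under the dummy key 1, so one reducer sees all counts."""
    shuffled = defaultdict(list)
    for item, count in counts.items():
        shuffled[1].append((item, count))
    heap = []  # min-heap of (count, item), never larger than k
    for item, count in shuffled[1]:
        heapq.heappush(heap, (count, item))
        if len(heap) > k:
            heapq.heappop(heap)  # evict the smallest count
    return [(item, count) for count, item in sorted(heap, reverse=True)]
```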

14. Explain how to handle dependencies between MapReduce jobs. How would you chain them together?

Dependencies between MapReduce jobs can be handled using a workflow management system like Apache Oozie, Apache Airflow, or even simple shell scripts. These systems allow you to define a directed acyclic graph (DAG) of jobs where each node represents a MapReduce job and the edges represent dependencies. When a job completes successfully, the workflow system triggers the execution of its dependent jobs.

Chaining MapReduce jobs typically involves the following steps:

- Output of Job 1 as Input to Job 2: Ensure that the output directory of the first job is configured as the input directory for the second job. This allows data to flow seamlessly between jobs.

- Workflow Definition: Define the workflow using a system like Oozie. This involves specifying the jobs to be executed, their dependencies, and any necessary configuration parameters.

- Monitoring and Error Handling: Implement monitoring to track the progress of each job and handle any errors that may occur. For example, if a job fails, the workflow system can be configured to retry the job or send an alert.

- Example (conceptual): submitting an Oozie workflow whose parameters are described in `job.properties`:

```shell
oozie job -oozie http://localhost:11000/oozie -config job.properties -run
```

15. Describe the steps involved in writing a custom input format for MapReduce. Why might you need one?

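To write a custom input format for MapReduce, you extend the abstract `InputFormat` class, which has two responsibilities: `getSplits()`, which divides the input into `InputSplit`s (each split is the chunk of data processed by one map task), and `createRecordReader()`, which returns a `RecordReader` that turns a split into key-value records. For file-based data, extending `FileInputFormat` is usually simpler, because it already implements `getSplits()` and you only supply the `RecordReader`.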

You might need a custom input format when your data is in a non-standard format that Hadoop doesn't natively support (e.g., a custom binary format, data stored in a database needing specialized access, or handling compressed data differently). It gives you fine-grained control over how data is split and read, optimizing it for your specific data structure or storage mechanism. Example:

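```java
import java.io.IOException;

import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.InputSplit;
import org.apache.hadoop.mapreduce.RecordReader;
import org.apache.hadoop.mapreduce.TaskAttemptContext;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.input.LineRecordReader;

// FileInputFormat (a subclass of InputFormat) already implements getSplits(),
// so only createRecordReader() needs to be written.
public class CustomInputFormat extends FileInputFormat<LongWritable, Text> {
    @Override
    public RecordReader<LongWritable, Text> createRecordReader(InputSplit split, TaskAttemptContext context)
            throws IOException, InterruptedException {
        // Placeholder: return a RecordReader that understands your format
        return new LineRecordReader();
    }
}
```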
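Whatever the format, a custom `RecordReader` has to decide which records belong to a split when a record straddles a split boundary. The following Python sketch shows one common convention for newline-terminated records, which we believe matches the behavior of Hadoop's line-oriented reader: every split except the first discards its first (possibly partial) line, and every split reads past its end to finish the last line it started. The function name and byte-string input are assumptions for illustration.

```python
def read_split(data, start, length):
    """Return the newline-terminated records owned by the byte range [start, start + length)."""
    end = start + length
    pos = start
    if start != 0:
        # Not the first split: the previous split already read this line
        nl = data.find(b"\n", start)
        pos = len(data) if nl == -1 else nl + 1
    records = []
    # Read every line that *starts* at or before the split end, even if it runs past it
    while pos <= end and pos < len(data):
        nl = data.find(b"\n", pos)
        stop = len(data) if nl == -1 else nl
        records.append(data[pos:stop].decode("utf-8"))
        pos = stop + 1
    return records
```

Whatever split size is chosen, each line is returned by exactly one split.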

16. How can you use MapReduce to perform a graph processing task, such as finding connected components?

MapReduce can find connected components by iteratively propagating component IDs (treating edges as undirected). Initially, each node is assigned its own unique ID as its component ID. In each iteration, the Map function emits the node's current component ID to each of its neighbors, and also emits the node's own state (its component ID and adjacency list) so the graph structure carries over to the next iteration. The Reduce function receives, for each node, its own component ID plus the IDs sent by its neighbors, and updates the node's component ID to the minimum of these. This process repeats, one MapReduce job per iteration, until no node changes its component ID, indicating convergence. At the end, all nodes with the same ID belong to the same connected component.

- Map: for each neighbor, emit `<neighbor_id, current_component_id>`; also emit the node's own state

- Reduce: `new_component_id = min(own component ID, received component IDs)`

- Iterate until convergence
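The iteration can be sketched in plain Python, with each pass of the loop playing the role of one MapReduce job. This assumes an undirected graph given as an adjacency dictionary in which every edge is listed in both directions and every node appears as a key; `connected_components` is a hypothetical name.

```python
def connected_components(adjacency):
    """Iterative min-label propagation; each loop iteration stands in for one MapReduce job."""
    labels = {node: node for node in adjacency}  # start: every node is its own component
    while True:
        # Map: each node sends its current label to itself and to every neighbor
        messages = {node: [] for node in adjacency}
        for node, neighbors in adjacency.items():
            messages[node].append(labels[node])
            for nb in neighbors:
                messages[nb].append(labels[node])
        # Reduce: each node adopts the smallest label it received
        new_labels = {node: min(received) for node, received in messages.items()}
        if new_labels == labels:  # convergence: no label changed
            return labels
        labels = new_labels
```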

17. Explain how to handle errors and exceptions in a MapReduce job. How do you ensure fault tolerance?

In MapReduce, error handling and fault tolerance are crucial. Errors during the map or reduce phases can cause job failures. To handle these, you can use try-catch blocks within the mapper and reducer functions to catch exceptions, for example to log and skip a malformed record (incrementing a counter so skipped records stay visible) or to retry a flaky external call a limited number of times. If an exception escapes the task, the task attempt is marked as failed and reported to the JobTracker (Hadoop 1) or the MapReduce ApplicationMaster (YARN). Hadoop then automatically retries the failed task, preferably on a different node, up to a configurable limit (`mapreduce.map.maxattempts` and `mapreduce.reduce.maxattempts`, 4 by default).

For fault tolerance, HDFS replicates the input data across multiple DataNodes. If a node fails, the data is still available from other replicas. The JobTracker (or, in YARN, the ApplicationMaster) monitors the progress of map and reduce tasks. If a task fails or a node goes down, it reschedules the task on another available node that can read the replicated data. This ensures the job completes even if some tasks or nodes fail. Additionally, speculative execution can be employed: when a task runs noticeably slower than its peers, a backup attempt of the same task is launched on another node, the first attempt to finish is used, and the other is killed, mitigating the impact of slow or problematic tasks.

18. Describe how to implement a bloom filter in MapReduce. What are its advantages and disadvantages?

A Bloom filter can be implemented in MapReduce to efficiently filter data. First, in a MapReduce job (Job 1), each mapper reads a portion of the dataset used to construct the Bloom filter. Each mapper calculates the k hash functions for each element in its input and sets the corresponding bits in a local Bloom filter. These local Bloom filters are then combined (e.g., via a reducer that performs a bitwise OR) to create a single, global Bloom filter. This global Bloom filter is then distributed to all nodes involved in the next MapReduce job (Job 2), often using the distributed cache. In Job 2, mappers read the data that needs to be filtered. For each record, they check if it's possibly present in the set represented by the Bloom filter. If the Bloom filter says the element is 'not present', the mapper can safely discard the record. If the Bloom filter indicates 'possibly present', the record is passed to the next stage (e.g., reducers) for further processing or written to the output.

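Advantages of using a Bloom filter in MapReduce include reduced network traffic and improved processing speed, because irrelevant data is filtered out early. A key disadvantage is the possibility of false positives: a Bloom filter can report that an element is present when it is not, leading to unnecessary processing of some records (false negatives cannot occur). The false-positive rate grows as more elements are added relative to the filter's size, so the bit-array size and number of hash functions must be chosen for the expected number of elements. Also, a standard Bloom filter does not support removing elements once they are added, so it has to be rebuilt when the underlying set changes.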
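A small Python sketch of the filter itself, including the bitwise-OR merge used to combine per-mapper filters into the global one. The sizes (1024 bits, 3 hash functions) and the trick of deriving k hash functions by salting SHA-256 with an index are assumptions chosen for illustration, not values tuned for any real workload; a Python integer serves as the bit array.

```python
import hashlib

class BloomFilter:
    def __init__(self, num_bits=1024, num_hashes=3):
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = 0  # a Python int used as a bit array

    def _positions(self, item):
        # Derive k bit positions by salting one hash function with the index i
        for i in range(self.num_hashes):
            digest = hashlib.sha256(f"{i}:{item}".encode("utf-8")).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def add(self, item):
        for p in self._positions(item):
            self.bits |= 1 << p

    def might_contain(self, item):
        # False means definitely absent; True means possibly present
        return all((self.bits >> p) & 1 for p in self._positions(item))

    def union(self, other):
        """Job 1 reducer step: merge two per-mapper filters with a bitwise OR."""
        merged = BloomFilter(self.num_bits, self.num_hashes)
        merged.bits = self.bits | other.bits
        return merged
```

In Job 2, a mapper would keep a record only if `might_contain()` returns True for its key.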

19. How would you design a MapReduce job to calculate the PageRank of a very large web graph?

To calculate PageRank using MapReduce, each input record holds a webpage, its current PageRank score, and its list of outgoing links. The map phase passes the page's adjacency list through unchanged (so the graph structure survives to the next iteration) and, for each outgoing link, emits `<target_page, contribution>`, where the contribution is the page's current score divided by its number of outgoing links.

The reduce phase then sums the contributions arriving for each target_page, applies the damping factor, and outputs the target_page along with its new PageRank and its adjacency list for the next iteration. This process is iterated, one MapReduce job per iteration, until convergence is achieved or a maximum number of iterations is reached.
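One common form of the update each reducer applies is PR(p) = (1 - d) / N + d × Σ PR(q) / L(q), summed over the pages q that link to p, where d is the damping factor, N the number of pages, and L(q) the number of outgoing links of q. The Python sketch below simulates one map/reduce round per loop iteration. It assumes every page has at least one outgoing link (dangling pages need extra handling that is not shown), and the function names are hypothetical.

```python
from collections import defaultdict

def pagerank_iteration(graph, ranks, d=0.85):
    """One MapReduce round. graph: {page: [outgoing links]}; every page has at least one link."""
    n = len(graph)
    contributions = defaultdict(float)
    # Map: each page splits its rank evenly over its outgoing links
    # (a real job would also re-emit (page, links) so the graph survives the round)
    for page, links in graph.items():
        for target in links:
            contributions[target] += ranks[page] / len(links)
    # Reduce: sum the contributions per page and apply the damping factor
    return {page: (1 - d) / n + d * contributions[page] for page in graph}

def pagerank(graph, d=0.85, max_iters=100, tol=1e-10):
    ranks = {page: 1.0 / len(graph) for page in graph}
    for _ in range(max_iters):
        new_ranks = pagerank_iteration(graph, ranks, d)
        if max(abs(new_ranks[p] - ranks[p]) for p in graph) < tol:
            return new_ranks  # converged
        ranks = new_ranks
    return ranks
```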

20. Explain how to handle sparse data in MapReduce to minimize storage and processing costs.

Sparse data in MapReduce can be handled efficiently using several techniques to minimize storage and processing costs. First, use a sparse representation: instead of storing default or zero values, store only the non-zero elements, for example as (index, value) pairs or in compressed sparse row (CSR) or compressed sparse column (CSC) form where appropriate. Second, employ data structures that store sparse data efficiently, such as dictionaries or hash tables mapping indices to values, which avoids allocating large arrays of mostly zero entries. During MapReduce jobs, the mapper can filter out zero values and only emit key-value pairs for non-zero entries. This reduces the amount of data transferred and processed. General-purpose compression of the stored files then shrinks the data further.

Further optimization can be achieved by partitioning and locality. Ensure that related data is grouped together to minimize data shuffling. Also, consider using combiner functions within the map phase to aggregate sparse data before sending it to the reducers. This reduces network traffic. Finally, choose data formats wisely; formats like Avro or Parquet support schema evolution and efficient encoding of sparse data by only persisting present data.

21. Describe the process of implementing a custom output format for MapReduce. Why might you need one?

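To implement a custom output format for MapReduce, you create a class that extends `org.apache.hadoop.mapreduce.OutputFormat`. Its main method is `getRecordWriter()`, which returns the `RecordWriter` that actually writes each key-value pair in the desired format. `OutputFormat` also requires `checkOutputSpecs()`, which validates the output specification before the job runs (for example, failing if the output directory already exists), and `getOutputCommitter()`, which handles committing task output. For file-based output, extending `FileOutputFormat` provides the latter two, so usually only `getRecordWriter()` needs to be written. Any settings the writer needs are typically read from the job `Configuration` available through the task context.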

You might need a custom output format if you need to output data in a format not natively supported by Hadoop, such as a specific file format, a database, or a messaging queue. This is particularly useful when integrating MapReduce with other systems or when dealing with specialized data storage requirements.

22. How can you use MapReduce to perform time series analysis on a large dataset of sensor readings?

MapReduce can process time series data in parallel for analysis. The map phase would read sensor readings, potentially extracting relevant features like timestamps and sensor IDs. It would then emit key-value pairs, where the key might be a time window (e.g., hourly, daily) or a sensor ID, and the value would be the sensor reading within that window.

The reduce phase aggregates data for each key. For example, if the key is a time window, the reducer could calculate statistics like average, min, max, or standard deviation of sensor readings within that window. This allows for identifying trends, anomalies, or other time-dependent patterns across the large dataset. For example:

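```python
# Example mapper: key each reading by (sensor_id, hour bucket);
# assumes timestamps are Unix epoch seconds
def mapper(sensor_id, timestamp, reading):
    hour_start = timestamp - timestamp % 3600
    yield (sensor_id, hour_start), reading

# Example reducer: average of the readings in one time window
def reducer(key, values):
    total = 0.0
    count = 0
    for value in values:  # values may be a one-pass iterator, so avoid len()
        total += value
        count += 1
    if count:
        yield key, total / count
```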

23. Explain how to handle security considerations in a MapReduce environment, such as authentication and authorization.

Securing a MapReduce environment involves authentication and authorization to control access to data and resources. Authentication verifies the identity of users or applications, often using Kerberos. Authorization then determines what authenticated users are allowed to do, typically through Access Control Lists (ACLs) on HDFS directories and MapReduce jobs. These ACLs specify which users or groups have read, write, or execute permissions.

Other important security considerations include data encryption both in transit (TLS/SSL for web endpoints, plus encrypted RPC and data transfer between nodes) and at rest (HDFS transparent encryption zones or the encryption provided by other storage systems). Also, regular security audits and vulnerability assessments are crucial to identify and address potential weaknesses in the MapReduce infrastructure. Finally, network firewalls should be configured to restrict access to the cluster's service ports to the hosts that need them.

24. Describe how to implement a sliding window computation in MapReduce. Give an example.

Implementing a sliding window computation in MapReduce involves partitioning the data so that overlapping data segments are processed by the same reducer. The key idea is to create keys that allow overlapping data to be grouped together. For a window of size k, assign each data point i to bucket floor(i / k) and emit it twice, once under key floor(i / k) and once under floor(i / k) + 1, keeping the position i in the value. The reducer for bucket b then receives every point from buckets b - 1 and b, which covers every window of size k that ends inside bucket b.

Consider calculating a moving average with a window size of 3. For an input record (record_id, value), the map function emits (floor(record_id / 3), (record_id, value)) and (floor(record_id / 3) + 1, (record_id, value)). The reducer for key b orders the points it receives by record_id and, for each position j in bucket b, averages the values at positions j - 2 through j, effectively simulating the sliding window. Positions whose window would reach before the start of the data are skipped.
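A Python simulation of this bucketing scheme, assuming records are indexed 0, 1, 2, ... and using hypothetical function names; a dictionary of lists stands in for the shuffle.

```python
from collections import defaultdict

def window_mapper(i, value, k):
    """Send each point to its own bucket and to the next one, so buckets overlap."""
    bucket = i // k
    yield bucket, (i, value)
    yield bucket + 1, (i, value)

def window_reducer(bucket, points, k):
    """Compute the size-k moving average for every position inside this bucket."""
    values = dict(points)  # index -> value, covering buckets (bucket - 1) and bucket
    for j in range(bucket * k, (bucket + 1) * k):
        window = range(j - k + 1, j + 1)
        if all(w in values for w in window):  # skips windows that run off the data
            yield j, sum(values[w] for w in window) / k

def moving_average(series, k):
    shuffled = defaultdict(list)  # simulated shuffle
    for i, v in enumerate(series):
        for key, point in window_mapper(i, v, k):
            shuffled[key].append(point)
    results = {}
    for bucket, points in shuffled.items():
        results.update(window_reducer(bucket, points, k))
    return [results[j] for j in sorted(results)]
```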

Advanced MapReduce interview questions

1. How would you optimize a MapReduce job when the input data is highly skewed, and some keys have significantly more data than others?

When dealing with skewed data in MapReduce, several techniques can be applied. One effective approach is to use a combiner. A combiner performs local aggregation on the mapper's output before sending it to the reducers, thus reducing the amount of data shuffled across the network. Another strategy is custom partitioning. Instead of using the default hash-based partitioner, implement a custom partitioner that distributes keys more evenly across reducers, for example range partitioning based on sampled key frequencies. Keep in mind that a partitioner assigns whole keys, so a single hot key still lands on one reducer. Finally, salting can be used to break up hot keys. By appending a random suffix to a key (salting), you create multiple, less frequent keys. The reducer then needs to perform a second phase of aggregation to combine the results for the original key.

Specifically, consider a scenario where key 'A' has significantly more data than other keys. To address this:

- Combiner: Use a combiner to aggregate values associated with 'A' at the mapper level.

- Custom Partitioner: Design a partitioner that sends different 'A' keys (e.g., 'A_1', 'A_2', after salting) to different reducers.

- Salting: Prepend or append a random number or string to the skewed key 'A' during the map phase. This distributes the load across multiple reducers. In the reduce phase, remove the salt and aggregate the results. Example:

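```java
// Mapper code (fragment): salt only the known hot key "A".
// Assumes a field created once per task, e.g. private final Random rand = new Random(); (java.util.Random),
// and a NUM_REDUCERS constant defined elsewhere.
String key = ... // your key
if (key.equals("A")) {
    int salt = rand.nextInt(NUM_REDUCERS);
    key = key + "_" + salt; // Salting: "A" becomes "A_0", "A_1", ...
}
context.write(new Text(key), value);

// Reducer code (fragment): recover the original key. The per-salt partial results
// for "A" must still be merged in a second aggregation step.
// Assumes no unsalted key starts with "A_".
String saltedKey = key.toString();
String originalKey = saltedKey.startsWith("A_") ? "A" : saltedKey; // Remove salt
```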

2. Explain how you would handle a situation where a MapReduce job is running very slowly, and you suspect it's due to straggler tasks. How do you identify and mitigate stragglers?

When a MapReduce job is running slowly, and I suspect stragglers, I would first use the Hadoop UI or tools like YARN Resource Manager to identify the slow-running tasks. I'd look for tasks that are taking significantly longer than the average task completion time for that stage (map or reduce). Metrics like CPU utilization, I/O wait, and memory usage can also help diagnose if a task is genuinely slow or just waiting for resources.

To mitigate stragglers, I'd consider several approaches:

- Speculative execution: Hadoop can launch a duplicate attempt of a slow task on another node. The first attempt to complete 'wins', and the other is killed. It is enabled by default (`mapreduce.map.speculative` and `mapreduce.reduce.speculative`), but I'd confirm it hasn't been turned off.

- Increase parallelism: Subdividing the input data into smaller splits may help distribute the workload more evenly. However, this needs careful consideration, as excessive parallelism introduces its own per-task overhead.

- Code optimization: Analyze the map and reduce functions for inefficiencies. Using combiners to reduce the data shuffled out of the map stage, improving data structures, or optimizing algorithms can help.

- Data skew: If the input data has a skewed distribution (some keys are much more frequent than others), it can lead to stragglers in the reduce phase. Custom partitioners or salting can distribute the skewed keys more evenly across reducers.

- Resource allocation: Ensure that the Hadoop cluster has sufficient resources (CPU, memory, disk I/O) and that tasks are not being starved of resources. A node that is consistently slow may also point to failing hardware.

3. Describe how to use a Combiner in MapReduce and explain the benefits of using it. Also, what are the potential drawbacks?

A Combiner in MapReduce is a mini-reducer that operates on the output of each mapper before it is sent to the reducers. It aggregates data locally on the mapper node, reducing the amount of data that needs to be transferred across the network. To use it, you implement a Combiner (a `Reducer` subclass) whose logic mirrors the Reducer's, and register it with `job.setCombinerClass()`; for sums and counts, the Reducer class itself can often be reused. The main benefit is reduced network I/O and improved job performance.

Potential drawbacks include that the Combiner's operation must be commutative and associative, and its input and output types must match the map output types, because the framework may run it zero, one, or multiple times. Operations like averaging therefore need to be reformulated (for example, combining (sum, count) pairs instead of partial averages). If the Combiner's logic differs from the Reducer's in a way that breaks these properties, it will lead to incorrect results. Debugging issues related to Combiners can also be tricky because their execution is not guaranteed.
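The averaging case shows why the commutative and associative requirement matters. In this Python sketch (hypothetical function names), map output is `(value, 1)` pairs and the combiner merges `(sum, count)` pairs, so it can safely run any number of times; `naive_average` shows the incorrect alternative of averaging averages.

```python
def combine_sum_count(pairs):
    """Combiner (and reducer core) for an average: merge (sum, count) pairs."""
    total, count = 0, 0
    for s, c in pairs:
        total += s
        count += c
    return total, count

def reduce_average(pairs):
    """Reducer: merge whatever (sum, count) pairs arrive, then divide once."""
    total, count = combine_sum_count(pairs)
    return total / count

def naive_average(values):
    """What a combiner must NOT do: average values that may themselves be averages."""
    return sum(values) / len(values)
```

With values 10 and 20 on one node and 30 on another, the reducer's answer stays 20.0 whether the combiner runs zero, one, or two times on the first node's output, while averaging the partial averages yields 22.5.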

4. How do you design a MapReduce job to perform a distributed join of two very large datasets, when one dataset can fit in memory but the other cannot?

For a distributed join where one dataset (small dataset) fits in memory and the other (large dataset) does not, a common MapReduce strategy is a broadcast join (also called a replicated or map-side join). The small dataset is shipped to every mapper, typically through the distributed cache, and loaded into memory in each mapper's setup step. Each mapper then processes its chunk of the large dataset, and for each record it looks up matching records in the in-memory small dataset using the join key.

The reduce phase is optional and depends on the specific requirements. It can be used for aggregation or further processing of the joined data; if no further processing is needed, the job can run with zero reducers and the map output is written directly as the final output. Because the small dataset is broadcast to all mappers, the large dataset never has to be shuffled across the network, which is what makes this approach efficient. To optimize, load the small dataset into a hash map for fast lookups during the join. Also decide how to handle large-dataset records whose join key is not found in the small dataset: drop them for an inner join, or emit them with empty fields for a left outer join.
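A map-only broadcast join can be sketched in Python as follows. It assumes tuples for rows, a small table whose first column is the join key, and hypothetical function names; in a Hadoop job, `load_small_table` would run once in the mapper's setup step after reading the cached file.

```python
def load_small_table(rows):
    """Mapper setup: index the small dataset by its first column (the join key)."""
    table = {}
    for row in rows:
        table.setdefault(row[0], []).append(row)
    return table

def broadcast_join_mapper(large_rows, small_table, key_index, small_width, left_outer=False):
    """Map-only join of each large-dataset row against the in-memory hash table."""
    for row in large_rows:
        matches = small_table.get(row[key_index], [])
        for small_row in matches:
            yield row + small_row[1:]  # drop the small row's copy of the join key
        if not matches and left_outer:
            yield row + (None,) * (small_width - 1)  # pad the missing columns
```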

5. Explain the purpose and benefits of using a Bloom filter in MapReduce. How does it help in reducing the amount of data processed?

A Bloom filter in MapReduce is a probabilistic data structure used to test whether an element is a member of a set. Its primary purpose is to reduce unnecessary I/O operations and network traffic by filtering out records that are definitely not in the set of interest (a Bloom filter never produces false negatives). This is particularly useful in scenarios like join operations, where you want to avoid sending records to a reducer that has nothing to join them with.

The main benefit is a significant reduction in the data processed, especially in joins. Because the filter pre-filters a dataset, letting through only records whose key might be in the join key set, far fewer records reach the shuffle and the reducers. This comes at the cost of a small rate of false positives, so in effect it performs an approximate, one-sided filtering: some irrelevant records slip through, but no relevant record is ever dropped.

6. How would you implement a secondary sort in MapReduce, and why is it useful?

Secondary sort in MapReduce allows you to sort the values associated with a key before they reach the reduce function. This is achieved by including the sorting criterion as part of the composite key used by the MapReduce framework. The mapper emits (composite_key, value) pairs, where the composite key consists of the natural key and the secondary sort key. The partitioner sends all records with the same natural key to the same reducer, the sort comparator orders them by natural key and then by secondary key, and the grouping comparator makes them all arrive in a single `reduce()` call.

It's useful because MapReduce, by default, only sorts keys. Secondary sort enables ordering of values within each key's value set, providing more control and efficiency for tasks like time series analysis, log processing, or generating sorted lists of related items without needing to load all values into memory and sort in the reducer, improving performance and scalability.

7. Describe how you can handle complex data types in MapReduce, such as nested JSON objects or Protocol Buffers. What are the considerations?

To handle complex data types like nested JSON objects or Protocol Buffers in MapReduce, you need to define custom input and output formats. For nested JSON, you might use a library like Jackson or Gson to parse the JSON objects within your mapper and reducer. You would implement a custom InputFormat to read the JSON data and a custom RecordReader to parse each JSON record. Similarly, for Protocol Buffers, you'd use the Protocol Buffer library to serialize and deserialize the data. A custom InputFormat and RecordReader would handle reading the binary Protobuf data.

Key considerations include serialization/deserialization overhead, the size of the data (which affects network transfer and storage), and schema evolution. Efficient serialization libraries are crucial. Make sure that individual records, once parsed, fit comfortably within the task's memory. With Protocol Buffers, evolving the schema requires careful planning to maintain compatibility between different versions of the data. When using JSON, it is also important to define the schema clearly and handle potential schema evolution scenarios. Consider using Avro if schema evolution is a major concern.

8. Explain how to chain multiple MapReduce jobs together to perform a complex data processing pipeline. What are the advantages and disadvantages of this approach?

Chaining MapReduce jobs involves using the output of one MapReduce job as the input for the next. This is typically achieved by having the first job write its output to persistent storage such as HDFS; the next job is configured to read its input from that location, and the driver starts it only after the first job has completed successfully. Higher-level frameworks such as Apache Pig and Apache Hive automate this: a Pig script or Hive query is compiled into a sequence of MapReduce jobs, and the intermediate outputs are wired together implicitly based on how data flows through the script or query.

Advantages include modularity, allowing complex tasks to be broken down into smaller, manageable units. This also promotes code reusability. Disadvantages include increased I/O overhead, as data is written to and read from disk between jobs, and increased latency due to the sequential execution of jobs. Furthermore, error handling and debugging can become more complex due to the distributed nature of the jobs and interdependencies between steps.
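A two-job pipeline can be sketched in plain Java, with an in-memory map standing in for the HDFS directory between the jobs. This is a simulation for illustration, not Hadoop driver code; the word-count and group-by-count jobs are hypothetical examples chosen because the second depends entirely on the first job's output.

```java
import java.util.*;

// In-memory stand-in for a two-job pipeline. The intermediate Map plays the role of the
// HDFS directory that job 1 writes and job 2 reads.
class JobChainSketch {
    // Job 1 (word count): map emits (word, 1); reduce sums the 1s for each word.
    static Map<String, Integer> wordCountJob(List<String> lines) {
        Map<String, List<Integer>> shuffled = new TreeMap<>();
        for (String line : lines) { // map phase
            for (String word : line.toLowerCase().split("\\W+")) {
                if (!word.isEmpty()) shuffled.computeIfAbsent(word, k -> new ArrayList<>()).add(1);
            }
        }
        Map<String, Integer> output = new TreeMap<>();
        shuffled.forEach((word, ones) -> // reduce phase
                output.put(word, ones.stream().mapToInt(Integer::intValue).sum()));
        return output;
    }

    // Job 2: reads job 1's output; map inverts to (count, word); reduce lists words per count.
    static TreeMap<Integer, List<String>> groupByCountJob(Map<String, Integer> wordCounts) {
        TreeMap<Integer, List<String>> shuffled = new TreeMap<>(Comparator.reverseOrder());
        wordCounts.forEach((word, count) -> shuffled.computeIfAbsent(count, k -> new ArrayList<>()).add(word));
        return shuffled;
    }

    // Driver: job 2 starts only after job 1 has finished and "persisted" its output.
    static TreeMap<Integer, List<String>> pipeline(List<String> lines) {
        Map<String, Integer> intermediate = wordCountJob(lines);
        return groupByCountJob(intermediate);
    }
}
```

In a real cluster, the `intermediate` hand-off is exactly where the extra disk I/O and latency mentioned above come from.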

9. How can you use MapReduce to perform graph processing tasks, such as finding connected components or calculating PageRank?

MapReduce can be adapted for graph processing by representing the graph's adjacency list as input data. Each mapper processes a node and its outgoing edges, emitting key-value pairs where the key is a destination node and the value is information relevant to the specific graph algorithm. For connected components, the mapper might emit the source node's component ID to its neighbors. A reducer then aggregates these values, updating component IDs if necessary. This process repeats iteratively until convergence.
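The connected-components loop can be modeled as min-label propagation, with each pass of a `while` loop standing in for one MapReduce round. This is an in-memory sketch that assumes an undirected graph where every node appears as a key. In Hadoop, one common approach is for the driver to rerun the job until a custom counter of changed labels reaches zero.

```java
import java.util.*;

// Iterative min-label propagation for connected components. Each pass of the while loop
// stands in for one MapReduce round; the loop itself plays the driver's role.
class ConnectedComponentsSketch {
    // adjacency: undirected graph, every node present as a key, each edge listed both ways.
    static Map<Integer, Integer> components(Map<Integer, List<Integer>> adjacency) {
        Map<Integer, Integer> label = new HashMap<>();
        for (Integer node : adjacency.keySet()) label.put(node, node); // start: own ID
        boolean changed = true;
        while (changed) {
            // Map phase: send the current label to the node itself and to every neighbor.
            Map<Integer, List<Integer>> shuffled = new HashMap<>();
            for (Map.Entry<Integer, List<Integer>> e : adjacency.entrySet()) {
                int current = label.get(e.getKey());
                shuffled.computeIfAbsent(e.getKey(), k -> new ArrayList<>()).add(current);
                for (int neighbor : e.getValue()) {
                    shuffled.computeIfAbsent(neighbor, k -> new ArrayList<>()).add(current);
                }
            }
            // Reduce phase: keep the smallest label received; note whether anything changed.
            changed = false;
            for (Map.Entry<Integer, List<Integer>> e : shuffled.entrySet()) {
                int smallest = Collections.min(e.getValue());
                if (smallest < label.get(e.getKey())) {
                    label.put(e.getKey(), smallest);
                    changed = true;
                }
            }
        }
        return label;
    }
}
```

Each round can only spread a label one hop, so the number of rounds grows with the graph's diameter.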

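For PageRank, the mapper distributes a node's PageRank score evenly across its outgoing links, emitting (destination node, partial rank) pairs; it also re-emits the node's adjacency list so that the graph structure is still available in the next iteration. The reducer sums the partial ranks for each node and usually applies a damping factor d (commonly 0.85), giving PR(v) = (1 - d)/N + d * (sum of PR(u)/outdeg(u) over all nodes u that link to v), where N is the number of nodes. Like connected components, PageRank is iterative, requiring multiple MapReduce rounds until the PageRank values stabilize, with each iteration refining every node's score based on contributions from the nodes that link to it.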
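A minimal in-memory sketch of the map and reduce steps for PageRank follows. It assumes no dangling nodes (every node has at least one out-link), a damping factor of 0.85, and a fixed iteration count instead of a convergence check. The link map stays in the "driver" here for brevity; a Hadoop mapper would re-emit each adjacency list instead.

```java
import java.util.*;

// Simplified PageRank: PR(v) = (1 - d)/N + d * sum(PR(u)/outdeg(u)) over in-links u -> v.
// Assumes every node has at least one outgoing link (no dangling-node handling).
class PageRankSketch {
    // One MapReduce round.
    static Map<String, Double> iterate(Map<String, List<String>> links, Map<String, Double> rank, double d) {
        int n = links.size();
        // Map phase: spread each node's rank evenly over its out-links.
        Map<String, Double> contributions = new HashMap<>();
        for (Map.Entry<String, List<String>> e : links.entrySet()) {
            double share = rank.get(e.getKey()) / e.getValue().size();
            for (String dst : e.getValue()) contributions.merge(dst, share, Double::sum);
        }
        // Reduce phase: sum contributions per node and apply the damping factor.
        Map<String, Double> next = new HashMap<>();
        for (String node : links.keySet()) {
            next.put(node, (1 - d) / n + d * contributions.getOrDefault(node, 0.0));
        }
        return next;
    }

    static Map<String, Double> run(Map<String, List<String>> links, int iterations, double d) {
        Map<String, Double> rank = new HashMap<>();
        for (String node : links.keySet()) rank.put(node, 1.0 / links.size()); // uniform start
        for (int i = 0; i < iterations; i++) rank = iterate(links, rank, d);
        return rank;
    }
}
```

For the three-node graph A -> B, B -> A, C -> B, node C receives no links and settles at (1 - 0.85)/3 = 0.05, while B, which collects links from both A and C, ends up with the highest rank.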

10. Explain how to handle failures in a MapReduce job, such as task failures or node failures. How does Hadoop ensure fault tolerance?

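Hadoop handles failures in MapReduce jobs through several mechanisms. If a task fails (e.g., due to a bug in the code or a crashed JVM), the failure is reported to the JobTracker (in classic MapReduce v1) or to the job's MapReduce ApplicationMaster (in YARN-based Hadoop 2 and later), which reschedules the task, preferably on a different node. A task is retried up to a configurable limit (mapreduce.map.maxattempts and mapreduce.reduce.maxattempts, 4 by default); if it keeps failing, the job fails. Node failures are detected through heartbeats: if a TaskTracker (or, under YARN, a NodeManager) fails to send a heartbeat within a specified timeout, the node is assumed to have failed and its running tasks are rescheduled on other available nodes. Map tasks that had already completed on that node are re-run as well if the job is still in progress, because their intermediate output lived on the failed node's local disk.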

For data, Hadoop achieves fault tolerance through replication. The Hadoop Distributed File System (HDFS) stores data in blocks, and each block is replicated across multiple nodes (3 copies by default, controlled by dfs.replication). If a node holding a block fails, a task can read one of the other replicas instead, and HDFS re-replicates the block to restore the target count. With the default factor, a block survives the loss of any two of the nodes holding it. Separately, Hadoop uses speculative execution to deal with stragglers: when a task is running noticeably slower than its peers, a duplicate attempt is launched on another node, the first attempt to finish successfully is used, and the other is killed. This mitigates slow tasks; outright task failures are handled by re-execution.
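The re-execution policy can be illustrated with a toy retry loop. This is not Hadoop's scheduler; the `runAttempt` hook and the round-robin node choice are hypothetical simplifications. Only the default of four attempts mirrors Hadoop's `mapreduce.map.maxattempts`.

```java
import java.util.List;
import java.util.function.BiPredicate;

// Toy model of task re-execution, not Hadoop's scheduler: a failed attempt is retried
// until it succeeds or maxAttempts is used up, at which point the whole job fails.
class TaskRetrySketch {
    static final int DEFAULT_MAX_ATTEMPTS = 4; // Hadoop's default for mapreduce.map.maxattempts

    // runAttempt is a hypothetical hook: (attemptNumber, node) -> true if the attempt succeeded.
    static String runWithRetries(List<String> nodes, BiPredicate<Integer, String> runAttempt, int maxAttempts) {
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            // Rotate through the nodes so that, when more than one node is available,
            // a retry lands somewhere other than the node that just failed.
            String node = nodes.get((attempt - 1) % nodes.size());
            if (runAttempt.test(attempt, node)) {
                return node; // the successful attempt's output is the one that is kept
            }
        }
        throw new IllegalStateException("task failed " + maxAttempts + " times; failing the job");
    }
}
```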

11. Describe how to implement a custom Partitioner in MapReduce. When would you need to use one?

To implement a custom Partitioner in MapReduce, create a class that extends the abstract class org.apache.hadoop.mapreduce.Partitioner and implement its getPartition() method, which determines the partition number for a given key-value pair based on your custom logic. The method takes the key, the value, and the number of partitions (equal to the number of reducers) as input, and must return an integer between 0 and numPartitions - 1. Then configure the MapReduce job to use your class via job.setPartitionerClass(YourCustomPartitioner.class);.

You would need a custom partitioner when the default partitioner (which usually uses a hash of the key) doesn't distribute the data evenly across reducers, leading to skewed data processing. This can happen when your keys have a non-uniform distribution or when you want to route related keys to the same reducer for specific processing requirements, such as performing calculations on all data for a specific customer or geographical region.
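Here is a plain-Java sketch with the same shape as `getPartition(key, value, numPartitions)`, routing every key for a region to the same reducer. To keep it runnable on its own, it uses `String`/`int` instead of Hadoop's `Text`/`IntWritable` and does not extend the real `Partitioner` class; the `"REGION:customerId"` key format is a hypothetical example.

```java
// Plain-Java sketch with the same shape as Hadoop's Partitioner#getPartition(key, value, numPartitions).
// In a real job this would extend org.apache.hadoop.mapreduce.Partitioner<Text, IntWritable>.
// Hypothetical key format: "REGION:customerId". All keys for one region go to the same reducer.
class RegionPartitionerSketch {
    int getPartition(String key, int value, int numPartitions) {
        int sep = key.indexOf(':');
        String region = sep >= 0 ? key.substring(0, sep) : key;
        // Same non-negative hashing that HashPartitioner applies to the whole key.
        return (region.hashCode() & Integer.MAX_VALUE) % numPartitions;
    }
}
```

Concentrating related keys can itself create skew when one group is much larger than the rest, so it is worth checking per-reducer record counts after switching partitioners.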

12. How would you debug a MapReduce job that is producing incorrect results? What tools and techniques would you use?

Debugging a MapReduce job that produces incorrect results calls for a systematic approach. First, I'd examine the input data for inconsistencies or errors. Then, I'd thoroughly review the mapper and reducer code, paying close attention to data transformations and aggregations. Key tools and techniques include:

- Logging within the mapper and reducer (e.g., using log4j or similar) to track data flow and intermediate values.

- Running the job in local mode on a smaller sample of the data, so that a regular debugger can be attached.

- Carefully examining the job counters, including custom ones such as a count of malformed records, to spot unexpected volumes.

- Using Hadoop's web UI to monitor job progress and identify bottlenecks or failed tasks.

- Analyzing the output of individual tasks to pinpoint where the incorrect results originate.

- Writing unit tests for the mapper and reducer logic to verify its correctness in isolation, outside of the MapReduce environment.
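As a minimal illustration of the unit-testing point, the parsing logic a mapper would use can be pulled into a pure function and tested without a cluster. The comma-separated log format and the `LogLineParser` name are hypothetical.

```java
import java.util.Map;
import java.util.Optional;

// Hypothetical log format: "timestamp,status,bytes". The mapper would call parse() on each
// line and emit (status, bytes); keeping the logic here makes it testable without Hadoop.
class LogLineParser {
    // Returns empty for malformed lines; in the mapper, that branch is a good place to
    // increment a "malformed records" counter instead of silently dropping the line.
    static Optional<Map.Entry<String, Long>> parse(String line) {
        String[] parts = line.split(",");
        if (parts.length != 3) return Optional.empty();
        try {
            return Optional.of(Map.entry(parts[1].trim(), Long.parseLong(parts[2].trim())));
        } catch (NumberFormatException e) {
            return Optional.empty();
        }
    }
}
```

Tests against a function like this run in milliseconds and catch most transformation bugs before the job ever touches real data.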

13. Explain how to optimize the performance of a MapReduce job by adjusting parameters such as the number of mappers, reducers, and memory settings.

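Optimizing MapReduce job performance involves tuning various parameters. The number of mappers is driven by the number of input splits, so it is tuned indirectly through the split size (for example, mapreduce.input.fileinputformat.split.minsize and mapreduce.input.fileinputformat.split.maxsize). Aim for splits large enough to avoid the overhead of many tiny tasks, but numerous enough to keep the cluster busy and balanced. The number of reducers is set explicitly (job.setNumReduceTasks() or mapreduce.job.reduces) and depends on the desired parallelism and output size. Too few reducers create bottlenecks, while too many add scheduling overhead and produce many small output files.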
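To see how split settings translate into a mapper count, the sketch below reproduces the split-size arithmetic as we understand it from Hadoop's `FileInputFormat`: `splitSize = max(minSize, min(maxSize, blockSize))`, and the last split may be up to 10% larger (a slop factor of 1.1) rather than leaving a tiny trailing split. Treat it as an approximation for a single splittable, uncompressed file; non-splittable formats and multiple files change the count.

```java
// Approximate split arithmetic for one splittable file (based on our reading of
// FileInputFormat; not a drop-in replacement for it).
class SplitMath {
    static final double SPLIT_SLOP = 1.1; // last split may be up to 10% larger

    static long splitSize(long blockSize, long minSize, long maxSize) {
        return Math.max(minSize, Math.min(maxSize, blockSize));
    }

    // Number of input splits (and therefore map tasks) for a file of fileLength bytes.
    static int numSplits(long fileLength, long splitSize) {
        int splits = 0;
        long remaining = fileLength;
        while ((double) remaining / splitSize > SPLIT_SLOP) {
            splits++;
            remaining -= splitSize;
        }
        if (remaining != 0) splits++;
        return splits;
    }
}
```

For example, with a 128 MB block size a 1 GB file yields 8 map tasks, while raising the minimum split size to 256 MB halves that to 4.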

Memory settings are crucial. mapreduce.map.memory.mb and mapreduce.reduce.memory.mb set the container size for each task; increasing them lets mappers and reducers hold more data in memory and reduces spilling to disk. The JVM heap is set separately through mapreduce.map.java.opts and mapreduce.reduce.java.opts (e.g., -Xmx), and it must stay below the container size to leave room for non-heap memory. mapreduce.task.io.sort.mb sizes the map-side buffer used to sort output before it is spilled to disk; a larger buffer means fewer spills. Compression (e.g., Snappy) of map output can significantly reduce disk and network I/O during the shuffle; enable it by setting mapreduce.map.output.compress to true and mapreduce.map.output.compress.codec to the desired codec.

14. Describe how you can use MapReduce to process real-time streaming data. What are the challenges and limitations of this approach?

While MapReduce is traditionally used for batch processing of large datasets, it's not ideally suited for real-time streaming data due to its inherent latency. A naive approach might involve micro-batching, where you collect small chunks of streaming data over short time intervals (e.g., every few seconds) and then run a MapReduce job on each batch. This introduces significant overhead and delay, making it unsuitable for truly real-time analysis.

Challenges include high latency, the need for efficient scheduling of many small MapReduce jobs, and the overhead of job setup and teardown for each micro-batch. Limitations stem from MapReduce's design for large, static datasets rather than continuous, evolving streams. Frameworks like Apache Storm, Apache Flink, and Apache Spark Streaming are more appropriate for processing real-time streaming data due to their low-latency stream processing capabilities.

15. How do you handle data consistency issues in MapReduce, especially when dealing with mutable data?

Data consistency in MapReduce with mutable data is challenging because MapReduce is inherently designed for immutable data and batch processing. Several strategies can be employed to mitigate consistency issues:

- Idempotent Operations: Design your map and reduce functions to be idempotent. This means that running the same operation multiple times has the same effect as running it once. This is crucial for handling failures and retries.

- Data Versioning: Introduce versioning to the data. Each update creates a new version. MapReduce jobs can then operate on specific versions, ensuring consistency for that job.

- External Consistency Mechanisms: Use external coordination, such as ZooKeeper or another distributed lock service, to coordinate updates and ensure consistency across multiple MapReduce jobs modifying the same data. This adds complexity but is necessary for strong consistency.

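- Combiner Awareness: A combiner runs on each mapper's local output and may be applied zero, one, or several times, so its logic must be associative and commutative. SUM qualifies; a plain average does not, because averaging per-node averages gives the wrong answer when nodes hold different numbers of records. Carry (sum, count) pairs instead and divide only in the reducer. Keep this in mind whenever a combiner could otherwise silently change your results.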
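The average example can be made concrete with a small in-memory sketch. It compares the incorrect "average of averages" with the (sum, count) approach; `SumCount` is a hypothetical helper type, not a Hadoop class.

```java
import java.util.List;

// Why a combiner must be associative: averaging per-node averages is wrong when nodes hold
// different numbers of records. Carrying (sum, count) partial aggregates fixes this.
class AverageCombinerSketch {
    // Partial aggregate emitted by the combiner instead of a plain average.
    static final class SumCount {
        final double sum;
        final long count;

        SumCount(double sum, long count) {
            this.sum = sum;
            this.count = count;
        }

        SumCount plus(SumCount other) {
            return new SumCount(sum + other.sum, count + other.count);
        }
    }

    // Combiner: runs on one mapper's local output.
    static SumCount combine(List<Double> localValues) {
        double s = 0;
        for (double v : localValues) s += v;
        return new SumCount(s, localValues.size());
    }

    // Reducer: merges partial aggregates, then divides exactly once.
    static double reduce(List<SumCount> partials) {
        SumCount total = new SumCount(0, 0);
        for (SumCount p : partials) total = total.plus(p);
        return total.sum / total.count;
    }

    // The incorrect approach, for comparison: the average of local averages.
    static double averageOfAverages(List<List<Double>> perNode) {
        double s = 0;
        for (List<Double> values : perNode) s += combine(values).sum / values.size();
        return s / perNode.size();
    }
}
```

With one node holding 1, 2, 3 and another holding 10, the true average is 16 / 4 = 4.0, while the average of averages is (2 + 10) / 2 = 6.0. In Hadoop, the combiner's input and output types must both match the map output type, so the mapper itself would emit a (value, 1) pair for each record.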

16. Explain how to use counters in MapReduce and their use cases. How do you access counter values?

Counters in MapReduce are named values that tasks increment and that the framework aggregates across the entire job. They're useful for monitoring, debugging, and gathering statistics, since they let you track how many times a particular event occurred during the MapReduce job. Common use cases include counting the number of malformed input records, the number of successful operations, or the frequency of certain conditions. Custom counters are usually declared as a Java enum (or identified by group and counter name strings), incremented in mappers and reducers through the task context, and read in the driver program.

You can access counter values after the MapReduce job completes. The values are available through the Job object's getCounters() method. You can then iterate through the counter groups and counters to retrieve the specific values. For example, in Java:

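```java
import org.apache.hadoop.mapreduce.Counter;
import org.apache.hadoop.mapreduce.Counters;

// In the driver, after job.waitForCompletion(true) has returned:
Counters counters = job.getCounters();
Counter myCounter = counters.findCounter("MyGroup", "MyCounter");
long value = myCounter.getValue();
```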
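To show where those job-level values come from, here is a toy model of counter aggregation: each task keeps its own counts, and the framework sums them into the totals that `job.getCounters()` reports. The `RecordCounters` enum and the "contains a comma" validity rule are hypothetical; the `merge` call is only analogous to `context.getCounter(...).increment(1)` inside a real task.

```java
import java.util.EnumMap;
import java.util.List;

// Hypothetical counter enum; Hadoop allows any enum to be used as a counter.
enum RecordCounters { MALFORMED, PROCESSED }

// Toy model: per-task counts summed into job-level totals by the "framework".
class CounterSketch {
    static EnumMap<RecordCounters, Long> runTask(List<String> records) {
        EnumMap<RecordCounters, Long> local = new EnumMap<>(RecordCounters.class);
        for (String r : records) {
            RecordCounters c = r.contains(",") ? RecordCounters.PROCESSED : RecordCounters.MALFORMED;
            local.merge(c, 1L, Long::sum); // analogous to context.getCounter(c).increment(1)
        }
        return local;
    }

    static EnumMap<RecordCounters, Long> aggregate(List<EnumMap<RecordCounters, Long>> perTask) {
        EnumMap<RecordCounters, Long> total = new EnumMap<>(RecordCounters.class);
        for (EnumMap<RecordCounters, Long> m : perTask) {
            m.forEach((k, v) -> total.merge(k, v, Long::sum));
        }
        return total;
    }
}
```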

17. Describe how you would implement a distributed grep using MapReduce.

To implement a distributed grep using MapReduce, the process involves two main stages: Map and Reduce.

In the Map stage, each mapper processes one input split, and its map() function is called once per line. The mapper checks whether the line matches the given search pattern (the grep expression). If a match is found, the mapper emits a key-value pair whose key identifies where the match came from (for example, the file name plus the byte offset that TextInputFormat supplies as the input key) and whose value is the matching line. The search pattern is passed to all mappers as a job parameter, typically stored in the job Configuration and read in each mapper's setup() method.

In the Reduce stage, the reducers collect the key-value pairs produced by the mappers and simply write them to the output. Since grep is about filtering and finding matches rather than aggregation, all of the grep functionality lives in the Map phase, and the reducer acts as an identity function that merely collects results. For the same reason, the job can also run map-only (job.setNumReduceTasks(0)), writing mapper output directly and skipping the shuffle.
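The flow can be sketched in plain Java without Hadoop. The per-line `map` function mirrors a mapper, the offsets imitate the byte-offset keys TextInputFormat would supply (assuming single-byte characters and `\n` line endings), and the file names are made up for illustration.

```java
import java.util.*;
import java.util.regex.Pattern;

// In-memory sketch of distributed grep: all the work happens in map(); the collected
// output corresponds to a map-only job (or one with identity reducers).
class DistributedGrepSketch {
    // Map: called once per line; emits (file:offset, line) only when the pattern matches.
    static void map(String file, long offset, String line, Pattern pattern, List<Map.Entry<String, String>> out) {
        if (pattern.matcher(line).find()) out.add(Map.entry(file + ":" + offset, line));
    }

    // Drives the "mappers" over each file and collects their output.
    static List<Map.Entry<String, String>> grep(Map<String, List<String>> files, String regex) {
        Pattern pattern = Pattern.compile(regex); // the same pattern is shared with every mapper
        List<Map.Entry<String, String>> out = new ArrayList<>();
        for (Map.Entry<String, List<String>> f : new TreeMap<>(files).entrySet()) {
            long offset = 0;
            for (String line : f.getValue()) {
                map(f.getKey(), offset, line, pattern, out);
                offset += line.length() + 1; // +1 for '\n'; assumes single-byte characters
            }
        }
        return out;
    }
}
```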

18. How can you use MapReduce to perform machine learning tasks, such as training a classification model or running a clustering algorithm?

MapReduce can be adapted for machine learning by breaking down iterative algorithms into map and reduce phases. For example, in training a classification model like logistic regression, the map phase could process subsets of the training data, calculating gradients for the model parameters. The reduce phase would then aggregate these gradients to compute the overall gradient and update the model parameters. This process is repeated iteratively until the model converges.
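The gradient-aggregation idea for logistic regression can be sketched as follows: each "mapper" sums the log-loss gradient over its split, and the "reducer" adds the partial sums and takes one batch-gradient-descent step. This is an in-memory illustration; the leading bias feature, the learning rate, and the split layout are assumptions.

```java
import java.util.List;

// One batch-gradient-descent step for logistic regression, split into a map step (gradient
// summed over one input split) and a reduce step (add the partial sums, then update weights).
// Feature vectors include a leading 1.0 for the bias term.
class LogisticGradientSketch {
    static double sigmoid(double z) {
        return 1.0 / (1.0 + Math.exp(-z));
    }

    // Map: sum over the split of (sigmoid(w . x) - y) * x, the log-loss gradient.
    static double[] partialGradient(List<double[]> xs, List<Integer> ys, double[] w) {
        double[] g = new double[w.length];
        for (int i = 0; i < xs.size(); i++) {
            double[] x = xs.get(i);
            double z = 0;
            for (int j = 0; j < w.length; j++) z += w[j] * x[j];
            double error = sigmoid(z) - ys.get(i);
            for (int j = 0; j < w.length; j++) g[j] += error * x[j];
        }
        return g;
    }

    // Reduce: add the partial gradients, average over all examples, take one step.
    static double[] update(List<double[]> partials, int totalExamples, double[] w, double learningRate) {
        double[] next = w.clone();
        for (int j = 0; j < w.length; j++) {
            double sum = 0;
            for (double[] g : partials) sum += g[j];
            next[j] -= learningRate * sum / totalExamples;
        }
        return next;
    }
}
```

Because the gradient is a sum over examples, the partial sums from each split add up to exactly the full-data gradient; this is what makes the computation fit the map/reduce pattern. A driver would repeat the step until the weights stop changing.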

For clustering algorithms like k-means, the map phase could assign data points to the nearest cluster centroid. The reduce phase would then recalculate the cluster centroids based on the assigned data points. This iterative process of assignment and centroid update continues until the cluster assignments stabilize. Frameworks like Hadoop provide the distributed infrastructure needed to implement these MapReduce-based machine learning algorithms on large datasets, and libraries such as Apache Mahout have historically provided pre-built MapReduce implementations of common algorithms.
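One k-means iteration maps cleanly onto the two phases: map assigns each point to its nearest centroid, and reduce averages the points in each cluster. The sketch below is an in-memory illustration using 2-D points. In a real job, the current centroids would have to reach every mapper, for example through the distributed cache or a side file read in `setup()`. The loop-until-unchanged driver is an assumption.

```java
import java.util.*;

// One k-means iteration as map (assign to nearest centroid) and reduce (average the
// assigned points), plus a simple driver loop. Points are 2-D double[] arrays.
class KMeansSketch {
    static int nearest(double[] p, List<double[]> centroids) {
        int best = 0;
        double bestDist = Double.MAX_VALUE;
        for (int i = 0; i < centroids.size(); i++) {
            double dx = p[0] - centroids.get(i)[0];
            double dy = p[1] - centroids.get(i)[1];
            double dist = dx * dx + dy * dy; // squared Euclidean distance
            if (dist < bestDist) {
                bestDist = dist;
                best = i;
            }
        }
        return best;
    }

    static List<double[]> iterate(List<double[]> points, List<double[]> centroids) {
        // Map: emit (clusterId, point).
        Map<Integer, List<double[]>> assigned = new TreeMap<>();
        for (double[] p : points) assigned.computeIfAbsent(nearest(p, centroids), k -> new ArrayList<>()).add(p);
        // Reduce: new centroid = mean of the assigned points; empty clusters keep their old centroid.
        List<double[]> next = new ArrayList<>(centroids);
        for (Map.Entry<Integer, List<double[]>> e : assigned.entrySet()) {
            double sx = 0, sy = 0;
            for (double[] p : e.getValue()) {
                sx += p[0];
                sy += p[1];
            }
            next.set(e.getKey(), new double[] {sx / e.getValue().size(), sy / e.getValue().size()});
        }
        return next;
    }

    // Driver: rerun the "job" until the centroids stop changing or maxIterations is reached.
    static List<double[]> run(List<double[]> points, List<double[]> initial, int maxIterations) {
        List<double[]> c = initial;
        for (int i = 0; i < maxIterations; i++) {
            List<double[]> next = iterate(points, c);
            boolean unchanged = true;
            for (int j = 0; j < c.size(); j++) {
                if (!Arrays.equals(c.get(j), next.get(j))) unchanged = false;
            }
            c = next;
            if (unchanged) break;
        }
        return c;
    }
}
```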

19. Explain how to implement a Top-N pattern using MapReduce. What are the different approaches, and what are their trade-offs?

Implementing a Top-N pattern in MapReduce involves identifying the N largest (or smallest) values from a dataset. There are a couple of common approaches:

- Single MapReduce Job: The map phase emits all records, and a single reducer receives them all and sorts them to identify the top N. This is simple to implement, but it scales poorly on large datasets because the single reducer becomes a bottleneck.