Hiring the right manual tester is essential for ensuring software quality and delivering seamless user experiences. Asking candidates targeted manual testing questions is a practical way to understand their expertise in the field.

This blog post covers a comprehensive set of questions tailored for different levels of experience, from junior to mid-tier testers. It includes targeted queries on areas such as test case design and execution to gauge a candidate's practical knowledge.

Using these questions effectively can streamline your hiring process and improve the accuracy of your evaluations. Pairing interviews with our Manual Testing online test lets you assess candidates' abilities before they reach the interview stage.


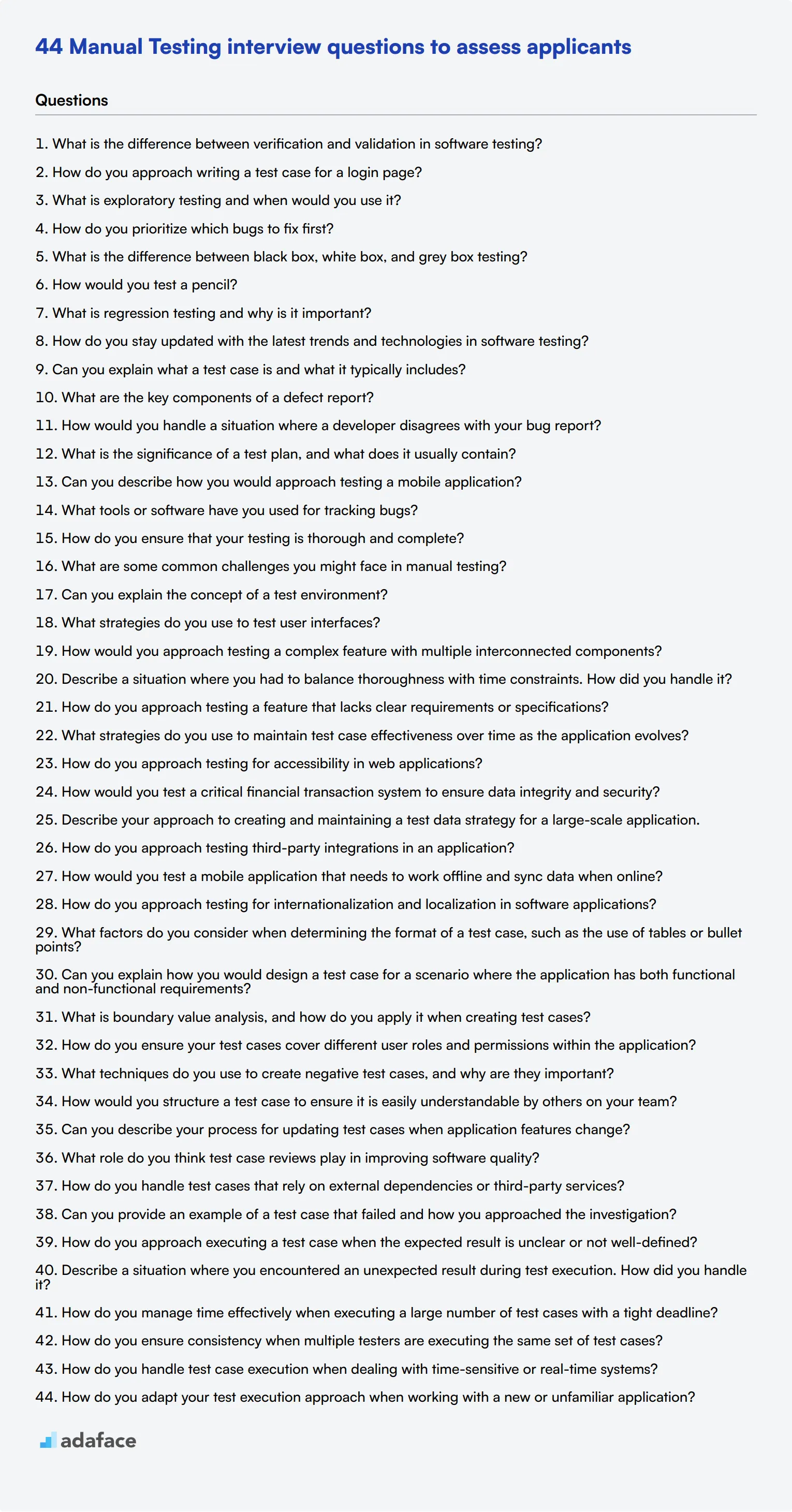

8 general Manual Testing interview questions and answers to assess applicants

Ready to uncover hidden gems in your manual testing candidates? These 8 general questions will help you assess applicants' understanding of core concepts and practical skills. Use them to spark insightful discussions and gauge how well candidates can apply their knowledge in real-world scenarios. Remember, the best answers often reveal a candidate's problem-solving approach and attention to detail.

1. What is the difference between verification and validation in software testing?

Verification and validation are two crucial aspects of the software testing process, but they serve different purposes:

- Verification: This process checks whether the software is being developed according to the specified requirements. It's about building the product right. Verification typically involves reviews, walkthroughs, and inspections of documents, designs, code, and other artifacts.

- Validation: This process determines if the developed software meets the actual needs of the end-users and stakeholders. It's about building the right product. Validation usually involves testing the actual product through various testing methods like functional, integration, and user acceptance testing.

Look for candidates who can clearly differentiate between these concepts and provide examples of how they've applied both in their testing experience. Strong answers will also touch on how verification and validation complement each other in ensuring overall software quality.

2. How do you approach writing a test case for a login page?

When writing test cases for a login page, a thorough approach should cover a range of scenarios. A strong answer might include the following steps:

- Identify the components: Username field, password field, login button, and any additional elements like 'Remember me' checkbox or 'Forgot password' link.

- Define positive scenarios: Successful login with valid credentials.

- Define negative scenarios: Invalid username, invalid password, empty fields, case sensitivity checks.

- Consider boundary cases: Maximum/minimum length of username and password.

- Test functionality: 'Remember me' feature, password masking, error messages.

- Check UI/UX aspects: Field labels, button text, overall layout.

- Verify security measures: Account lockout after multiple failed attempts, secure connection (HTTPS).

- Test across different browsers and devices for compatibility.

Look for candidates who demonstrate a systematic approach and consider various aspects beyond just the basic functionality. The best responses will also mention the importance of clear, replicable steps and expected results in their test cases.
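The boundary-case bullet is the easiest part of this answer to make concrete. Below is a minimal Python sketch of boundary value analysis for field lengths; the 8 to 64 character password limits are hypothetical, so substitute the limits from your own requirements.

```python
def boundary_values(minimum: int, maximum: int) -> list[int]:
    """Return lengths at, just below, and just above each limit (negative lengths dropped)."""
    candidates = {minimum - 1, minimum, minimum + 1, maximum - 1, maximum, maximum + 1}
    return sorted(v for v in candidates if v >= 0)


def is_valid_length(length: int, minimum: int, maximum: int) -> bool:
    """Expected result of a length check: valid only inside the inclusive range."""
    return minimum <= length <= maximum


if __name__ == "__main__":
    # Hypothetical requirement: passwords must be 8 to 64 characters long.
    for length in boundary_values(8, 64):
        expected = "accepted" if is_valid_length(length, 8, 64) else "rejected"
        print(f"password of {length} chars -> expected: {expected}")
```

Each printed row maps to one manual test case: enter a password of that length and compare the application's response with the expected outcome.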

3. What is exploratory testing and when would you use it?

Exploratory testing is a hands-on approach where testers simultaneously learn, design, and execute tests. Unlike scripted testing, which follows predefined test cases, exploratory testing allows testers to investigate the software dynamically, using their creativity and experience to uncover defects.

Situations where exploratory testing is particularly useful include:

- Early stages of product development when requirements are still evolving

- Testing new features or changes where formal test cases haven't been developed yet

- Complementing scripted tests to find edge cases or unexpected scenarios

- Time-constrained projects where quick feedback is needed

- Investigating a specific defect or area of concern

Strong candidates should emphasize the importance of documenting findings during exploratory testing and explain how they balance this approach with more structured testing methods. Look for answers that highlight the tester's ability to think critically and adapt their strategy based on what they discover during the testing process.

4. How do you prioritize which bugs to fix first?

Prioritizing bug fixes is a critical skill for any quality assurance engineer. A comprehensive answer should include the following factors:

- Severity: How much does the bug impact core functionality or user experience?

- Frequency: How often does the bug occur?

- Business impact: Does the bug affect revenue-generating features or critical business processes?

- User impact: How many users are affected by the bug?

- Ease of reproduction: Can the bug be consistently reproduced?

- Workarounds: Are there any temporary solutions available?

- Fix complexity: How much time and resources are required to fix the bug?

- Release schedule: Is there an upcoming release that the fix needs to be included in?

Look for candidates who can explain their reasoning process and demonstrate an understanding of balancing technical considerations with business priorities. The best answers will also mention the importance of collaborating with stakeholders (developers, product managers, etc.) in the prioritization process.
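Some candidates describe combining these factors into a rough score. The Python sketch below shows one hypothetical way to do that; the 1-to-4 and 1-to-3 scales, the multiplication, and the 50% workaround discount are illustrative assumptions rather than a standard. Fix complexity is used only as a tie-breaker, so a hard-to-fix blocker is never pushed down the list.

```python
from dataclasses import dataclass


@dataclass
class Bug:
    # All scales below are hypothetical; teams define their own.
    bug_id: str
    severity: int        # 1 = cosmetic ... 4 = blocker
    frequency: int       # 1 = rare ... 3 = every time
    users_affected: int  # 1 = a few ... 3 = most users
    has_workaround: bool
    fix_effort: int      # 1 = small ... 3 = large


def impact_score(bug: Bug) -> float:
    """Combine severity, frequency, and reach; discount bugs that have a workaround."""
    score = float(bug.severity * bug.frequency * bug.users_affected)
    if bug.has_workaround:
        score *= 0.5  # illustrative discount, not an industry standard
    return score


def triage_order(bugs: list[Bug]) -> list[str]:
    """Highest impact first; among equal impact, cheaper fixes first."""
    ranked = sorted(bugs, key=lambda b: (-impact_score(b), b.fix_effort))
    return [b.bug_id for b in ranked]
```

Business impact and release timing are deliberately left out of the score; they are better settled in the stakeholder discussion the answer above recommends.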

5. What is the difference between black box, white box, and grey box testing?

Black box, white box, and grey box testing are different approaches to software testing, each with its own focus and methodology:

- Black box testing: Testers have no knowledge of the internal workings of the system. They focus on inputs and outputs, testing functionality from an end-user perspective.

- White box testing: Testers have full knowledge of the internal structure and code. They design tests based on the program's logic and implementation details.

- Grey box testing: A combination of black and white box testing. Testers have partial knowledge of the internal workings and use this to design more effective tests while still focusing on functionality.

Strong candidates should be able to explain the advantages and limitations of each approach. For example, black box testing is good for finding user-oriented issues but may miss logic errors, while white box testing can uncover code-specific problems but might overlook some user scenarios. Look for answers that demonstrate understanding of when to apply each method in the testing process.

6. How would you test a pencil?

This question assesses a candidate's ability to think creatively and apply testing principles to everyday objects. A comprehensive answer might include:

- Functionality tests:
  - Writing test: Does it write smoothly on different paper types?
  - Sharpening test: Can it be sharpened easily without breaking?
  - Eraser test (if applicable): Does the eraser remove pencil marks effectively?

- Durability tests:
  - Drop test: Does it break or crack when dropped from various heights?
  - Pressure test: Does the lead break under normal writing pressure?

- Quality tests:
  - Lead consistency: Is the darkness and thickness of the line consistent?
  - Wood quality: Is the wood free from splinters and defects?

- Usability tests:
  - Grip comfort: Is it comfortable to hold for extended periods?
  - Balance: Does it feel well-balanced while writing?

- Safety tests:
  - Toxicity: Are the materials used non-toxic?
  - Sharp edges: Are there any unsafe sharp edges?

Look for candidates who approach the task systematically, considering various aspects of the pencil's use and potential failure points. The best answers will demonstrate an ability to break down a simple object into testable components and create a comprehensive test plan.

7. What is regression testing and why is it important?

Regression testing is the process of retesting previously tested parts of a software application to ensure that recent changes or updates haven't introduced new defects or caused existing functionality to fail. It's a critical part of the software development lifecycle, especially in agile environments where frequent changes are common.

The importance of regression testing lies in its ability to:

- Maintain software quality over time

- Detect unintended side effects of code changes

- Ensure that fixed bugs stay fixed

- Validate that new features don't break existing functionality

- Build confidence in the software's stability for stakeholders

Strong candidates should be able to explain different approaches to regression testing, such as full regression, partial regression, and risk-based regression. Look for answers that demonstrate understanding of when and how to apply regression testing effectively, and awareness of tools or techniques for managing large regression test suites.
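Partial and risk-based regression often start by mapping test cases to the product areas they cover. Here is a minimal Python sketch, assuming each test case is tagged with hypothetical area labels and that critical-path tests are flagged to run on every change:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RegressionCase:
    name: str
    areas: frozenset[str]   # product areas this test exercises (hypothetical tags)
    critical: bool = False  # critical-path tests run on every change


def select_regression(cases: list[RegressionCase], changed_areas: set[str]) -> list[str]:
    """Keep critical tests plus any test that touches an area affected by the change."""
    return [c.name for c in cases if c.critical or c.areas & changed_areas]


suite = [
    RegressionCase("login", frozenset({"auth"}), critical=True),
    RegressionCase("checkout", frozenset({"cart", "payment"})),
    RegressionCase("edit profile", frozenset({"account"})),
    RegressionCase("search", frozenset({"catalog"})),
]
print(select_regression(suite, {"payment"}))  # ['login', 'checkout']
```

A full regression run is simply the case where every area is treated as changed.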

8. How do you stay updated with the latest trends and technologies in software testing?

Staying updated in the rapidly evolving field of software testing is crucial for any test engineer. A comprehensive answer might include:

- Following industry blogs and websites

- Participating in online forums and communities (e.g., Stack Overflow, Reddit)

- Attending webinars, conferences, and workshops

- Taking online courses or certifications

- Reading books and research papers on testing methodologies

- Experimenting with new testing tools and frameworks

- Networking with other professionals in the field

- Contributing to open-source testing projects

Look for candidates who demonstrate a genuine passion for learning and a proactive approach to professional development. The best answers will include specific examples of resources they use or recent trends they've incorporated into their testing practices. This question also provides insight into the candidate's commitment to continuous improvement and adaptability.

10 Manual Testing interview questions to ask junior testers

To effectively gauge the fundamental skills and knowledge of junior testers, use this list of targeted questions during interviews. These inquiries will help you explore their understanding of essential testing concepts and practices, ensuring you find the right fit for your team's quality assurance needs. For more insights, check out the quality assurance engineer job description.

- Can you explain what a test case is and what it typically includes?

- What are the key components of a defect report?

- How would you handle a situation where a developer disagrees with your bug report?

- What is the significance of a test plan, and what does it usually contain?

- Can you describe how you would approach testing a mobile application?

- What tools or software have you used for tracking bugs?

- How do you ensure that your testing is thorough and complete?

- What are some common challenges you might face in manual testing?

- Can you explain the concept of a test environment?

- What strategies do you use to test user interfaces?

10 intermediate Manual Testing interview questions and answers to ask mid-tier testers

Ready to level up your manual testing interviews? These 10 intermediate questions are perfect for assessing mid-tier testers. They'll help you gauge a candidate's practical knowledge and problem-solving skills without diving too deep into technical jargon. Let's get cracking!

1. How would you approach testing a complex feature with multiple interconnected components?

A strong candidate should outline a systematic approach to testing complex features:

- Understand the feature's architecture and dependencies

- Break down the feature into smaller, testable units

- Create a test plan that covers individual components and their interactions

- Prioritize testing critical paths and high-risk areas

- Use a combination of unit, integration, and end-to-end testing

- Collaborate with developers to understand potential weak points

Look for answers that demonstrate analytical thinking and the ability to manage complexity. A good follow-up question might be to ask for a specific example from their past experience.

2. Describe a situation where you had to balance thoroughness with time constraints. How did you handle it?

An ideal response should showcase the candidate's ability to prioritize and make informed decisions under pressure. They might describe:

- Analyzing the project's critical features and risk areas

- Creating a streamlined test plan focusing on high-impact areas

- Employing efficient testing techniques like boundary value analysis

- Communicating clearly with stakeholders about trade-offs

- Leveraging automation for repetitive tasks to save time

Pay attention to how the candidate balances quality assurance with practical constraints. Their answer should reflect good judgment and adaptability in real-world scenarios.

3. How do you approach testing a feature that lacks clear requirements or specifications?

A competent tester should demonstrate proactivity and problem-solving skills in this scenario. A good answer might include:

- Engaging with stakeholders to clarify expectations

- Using exploratory testing techniques to understand the feature

- Documenting assumptions and creating informal specifications

- Collaborating with developers to understand intended functionality

- Creating test cases based on user stories or typical use cases

- Iteratively refining tests as more information becomes available

Look for candidates who show initiative in gathering information and can work effectively with ambiguity. This skill is crucial for a quality assurance engineer in dynamic development environments.

4. What strategies do you use to maintain test case effectiveness over time as the application evolves?

An experienced tester should have strategies to keep test cases relevant and effective:

- Regular review and update of test cases alongside product changes

- Keeping test cases under version control

- Collaborating with developers to understand upcoming changes

- Using data-driven testing to easily update test data

- Employing modular test design for easier maintenance

- Periodically running full regression suites to catch unexpected issues

Evaluate the candidate's understanding of test maintenance as an ongoing process. Their answer should reflect an awareness of the challenges in keeping tests current in a rapidly changing software environment.

5. How do you approach testing for accessibility in web applications?

A comprehensive answer should cover various aspects of accessibility testing:

- Familiarity with the Web Content Accessibility Guidelines (WCAG) and related standards

- Using screen readers and other assistive technologies

- Checking for proper semantic HTML structure

- Testing keyboard navigation and focus management

- Verifying color contrast and text resizing

- Evaluating form inputs and error messages for clarity

- Collaborating with UX designers to address accessibility early in development

Look for candidates who understand the importance of inclusive design and have practical experience in accessibility testing. This knowledge is increasingly crucial in modern web development.
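Color contrast is one accessibility check with a precise definition. The Python sketch below implements the WCAG 2.x relative-luminance and contrast-ratio formulas and compares the result against the AA minimum of 4.5:1 for normal-size text (large text needs 3:1). Accepting colors only as `#rrggbb` hex strings is an assumption of this helper.

```python
def _linear_channel(value_8bit: int) -> float:
    """Convert an sRGB channel (0-255) to linear light, per the WCAG 2.x definition."""
    c = value_8bit / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a '#rrggbb' color, from 0 (black) to 1 (white)."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return (0.2126 * _linear_channel(r)
            + 0.7152 * _linear_channel(g)
            + 0.0722 * _linear_channel(b))


def contrast_ratio(foreground: str, background: str) -> float:
    """(L1 + 0.05) / (L2 + 0.05), where L1 is the lighter color's luminance."""
    l1, l2 = sorted((relative_luminance(foreground), relative_luminance(background)), reverse=True)
    return (l1 + 0.05) / (l2 + 0.05)


def meets_aa(foreground: str, background: str, large_text: bool = False) -> bool:
    """WCAG AA: at least 4.5:1 for normal text, 3:1 for large text."""
    return contrast_ratio(foreground, background) >= (3.0 if large_text else 4.5)


print(round(contrast_ratio("#777777", "#ffffff"), 2))  # 4.48
print(meets_aa("#767676", "#ffffff"))                  # True
```

Mid-gray text on white is a classic near-miss: `#777777` fails AA for normal text, while the slightly darker `#767676` passes.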

6. How would you test a critical financial transaction system to ensure data integrity and security?

A strong answer should address both functional and non-functional aspects of testing a financial system:

- Thorough testing of calculation accuracy and data consistency

- Verifying proper handling of edge cases and error conditions

- Testing transaction rollback and recovery mechanisms

- Conducting security testing, including penetration testing and encryption validation

- Performance testing under various load conditions

- Compliance testing with relevant financial regulations

- Data integrity checks, including database consistency and audit trails

Evaluate the candidate's understanding of the critical nature of financial systems and their ability to think comprehensively about potential risks and failure points.
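Rollback behavior can be checked against an in-memory database. The Python sketch below uses the standard-library `sqlite3` module and a hypothetical two-account schema: a transfer that violates a balance constraint partway through must leave both balances untouched, and the total must be preserved. Amounts are stored as integer cents to avoid floating-point rounding.

```python
import sqlite3


def create_accounts(conn: sqlite3.Connection) -> None:
    # Hypothetical schema: balances in integer cents, never allowed to go negative.
    conn.execute(
        "CREATE TABLE accounts (id TEXT PRIMARY KEY, "
        "balance INTEGER NOT NULL CHECK (balance >= 0))"
    )
    conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("alice", 10_000), ("bob", 5_000)])
    conn.commit()


def transfer(conn: sqlite3.Connection, src: str, dst: str, amount: int) -> None:
    # The connection context manager commits on success and rolls back on an exception.
    with conn:
        # Credit first, so a failing debit exercises the rollback of an already-applied step.
        conn.execute("UPDATE accounts SET balance = balance + ? WHERE id = ?", (amount, dst))
        conn.execute("UPDATE accounts SET balance = balance - ? WHERE id = ?", (amount, src))


def balances(conn: sqlite3.Connection) -> dict[str, int]:
    return dict(conn.execute("SELECT id, balance FROM accounts ORDER BY id"))


conn = sqlite3.connect(":memory:")
create_accounts(conn)
transfer(conn, "alice", "bob", 3_000)
try:
    transfer(conn, "alice", "bob", 50_000)  # would overdraw alice, violating the CHECK
except sqlite3.IntegrityError:
    pass
print(balances(conn))  # {'alice': 7000, 'bob': 8000}
```

The same pattern (apply a partial change, force a failure, assert the before and after states match) carries over to manual checks against a staging environment.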

7. Describe your approach to creating and maintaining a test data strategy for a large-scale application.

An effective test data strategy is crucial for thorough testing. A good answer might include:

- Analyzing data requirements for various test scenarios

- Creating a mix of realistic and edge-case test data

- Implementing data generation tools or scripts for scalability

- Ensuring data privacy and compliance with regulations like GDPR

- Version controlling test data alongside application code

- Regularly refreshing and sanitizing test data

- Collaborating with development teams to understand data models and relationships

Look for candidates who understand the challenges of managing test data at scale and have strategies to ensure data quality, relevance, and security.
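Two of these points, scripted generation and sanitizing, can be illustrated in a few lines. The Python sketch below assumes a hypothetical user record with `id`, `name`, `email`, and `age`: a fixed random seed makes the generated data set reproducible, edge-case names are appended to the realistic ones, and real email addresses are replaced with stable hash-based pseudonyms. Hashed values are pseudonymized rather than anonymized, so regulations like GDPR may still treat them as personal data.

```python
import hashlib
import random

# Edge-case values to mix in with realistic data (illustrative, not exhaustive).
EDGE_CASE_NAMES = ["", "A", "O'Brien", "Zoë Ñúñez", "李小龍", "x" * 255]
FIRST_NAMES = ["Ana", "Ben", "Chloe", "Dev", "Emeka", "Fatima"]


def generate_users(count: int, seed: int = 42) -> list[dict]:
    """Deterministic synthetic users: the same seed always yields the same data set."""
    rng = random.Random(seed)
    users = [
        {
            "id": i,
            "name": rng.choice(FIRST_NAMES),
            "email": f"user{i}@example.test",
            "age": rng.randint(18, 90),
        }
        for i in range(count)
    ]
    # Append edge cases after the realistic records, continuing the id sequence.
    for user_id, name in enumerate(EDGE_CASE_NAMES, start=count):
        users.append({"id": user_id, "name": name, "email": f"edge{user_id}@example.test", "age": 18})
    return users


def mask_email(real_email: str) -> str:
    """Replace a real address with a stable pseudonym so copied records stay joinable."""
    digest = hashlib.sha256(real_email.lower().encode("utf-8")).hexdigest()[:12]
    return f"{digest}@example.test"
```

Because the seed and hash are deterministic, a failing test can be rerun against exactly the same data.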

8. How do you approach testing third-party integrations in an application?

Testing third-party integrations requires a specific approach. A strong answer might include:

- Understanding the integration points and expected behaviors

- Creating mock services to simulate third-party responses

- Testing both happy paths and error scenarios

- Verifying data mapping and transformation accuracy

- Checking for proper error handling and logging

- Performance testing to ensure the integration doesn't impact system responsiveness

- Security testing, especially for data transfer between systems

Assess the candidate's ability to think beyond the boundaries of the main application and consider the complexities introduced by external dependencies.
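Mocking the third party lets you force responses, such as timeouts, that are hard to trigger against the real service. Below is a minimal Python sketch using the standard library's `unittest.mock`; `CheckoutService` and its `gateway.charge(...)` call are hypothetical stand-ins for your application code and the provider's client.

```python
from unittest.mock import Mock


class CheckoutService:
    """Hypothetical application code that depends on an external payment gateway."""

    def __init__(self, gateway):
        self.gateway = gateway

    def pay(self, order_id: str, amount_cents: int) -> str:
        try:
            response = self.gateway.charge(order_id=order_id, amount=amount_cents)
        except TimeoutError:
            return "retry_later"  # error path we can exercise without a real outage
        return "paid" if response.get("status") == "approved" else "declined"


gateway = Mock()

# Happy path: the mock returns an approval and records how it was called.
gateway.charge.return_value = {"status": "approved"}
assert CheckoutService(gateway).pay("order-1", 1999) == "paid"
gateway.charge.assert_called_once_with(order_id="order-1", amount=1999)

# Error path: simulate the provider timing out.
gateway.charge.side_effect = TimeoutError()
assert CheckoutService(gateway).pay("order-2", 500) == "retry_later"
```

The recorded call also verifies data mapping: `assert_called_once_with` fails if the application sends the wrong field names or amounts.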

9. How would you test a mobile application that needs to work offline and sync data when online?

Testing offline functionality and data synchronization requires a comprehensive approach:

- Verifying offline data storage and retrieval

- Testing the transition between online and offline states

- Checking data conflict resolution during synchronization

- Verifying data integrity after sync operations

- Testing various network conditions (poor connectivity, intermittent connection)

- Ensuring proper error handling and user notifications

- Performance testing of sync operations with large datasets

Look for candidates who understand the unique challenges of mobile and offline-capable applications. Their answers should demonstrate awareness of potential sync issues and user experience considerations.
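Conflict-resolution tests need an oracle for what the merged data should look like. If the app under test uses last-write-wins (an assumption here; many apps use other policies), a tester could model the expected outcome with a small Python sketch like this, where `updated_at` is a hypothetical per-record timestamp and ties go to the server:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    key: str
    value: str
    updated_at: int  # hypothetical timestamp, e.g. epoch milliseconds


def merge_last_write_wins(local: dict[str, Record], server: dict[str, Record]) -> dict[str, Record]:
    """Expected state after sync: the newest edit wins per key; the server wins ties."""
    merged = dict(server)
    for key, record in local.items():
        current = merged.get(key)
        if current is None or record.updated_at > current.updated_at:
            merged[key] = record
    return merged
```

Last-write-wins assumes device and server clocks are comparable and silently discards the older edit, so those discarded-edit cases are good candidates for checking user notifications. Deletions are not modeled in this sketch.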

10. How do you approach testing for internationalization and localization in software applications?

A comprehensive approach to internationalization and localization testing should cover:

- Verifying proper handling of different character sets and encodings

- Testing date, time, and number formats across locales

- Checking for text expansion issues in translated content

- Verifying cultural appropriateness of images and colors

- Testing right-to-left languages and their impact on UI

- Ensuring proper sorting and searching in different languages

- Collaborating with native speakers for linguistic accuracy

Evaluate the candidate's understanding of the complexities involved in creating globally accessible software. Look for awareness of both technical and cultural aspects of internationalization.
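Pseudo-localization is a common way to catch text-expansion and encoding problems before real translations arrive: strings are replaced with accented, padded versions so truncation and hard-coded text stand out in the UI. Below is a minimal Python sketch; the 30% expansion factor and the `{placeholder}` syntax it preserves are assumptions to adjust to your own string format.

```python
import re

# Swap vowels for accented look-alikes so unlocalized or mis-encoded text is easy to spot.
_ACCENTS = str.maketrans("aeiouAEIOU", "àéîõüÀÉÎÕÜ")
# Placeholders such as {name} must survive untouched (assumed placeholder syntax).
_PLACEHOLDER = re.compile(r"(\{[^{}]*\})")


def pseudo_localize(text: str, expansion: float = 0.3) -> str:
    """Accent the text, pad it to simulate longer translations, and bracket it."""
    parts = _PLACEHOLDER.split(text)
    accented = "".join(
        part if _PLACEHOLDER.fullmatch(part) else part.translate(_ACCENTS)
        for part in parts
    )
    padding = "~" * max(1, round(len(text) * expansion))
    # Brackets reveal truncation: a missing "]" means the UI cut the string off.
    return f"[{accented}{padding}]"


print(pseudo_localize("Hello {name}"))  # [Héllõ {name}~~~~]
```

Running the UI with every string passed through a function like this surfaces clipped labels and untranslatable hard-coded text early.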

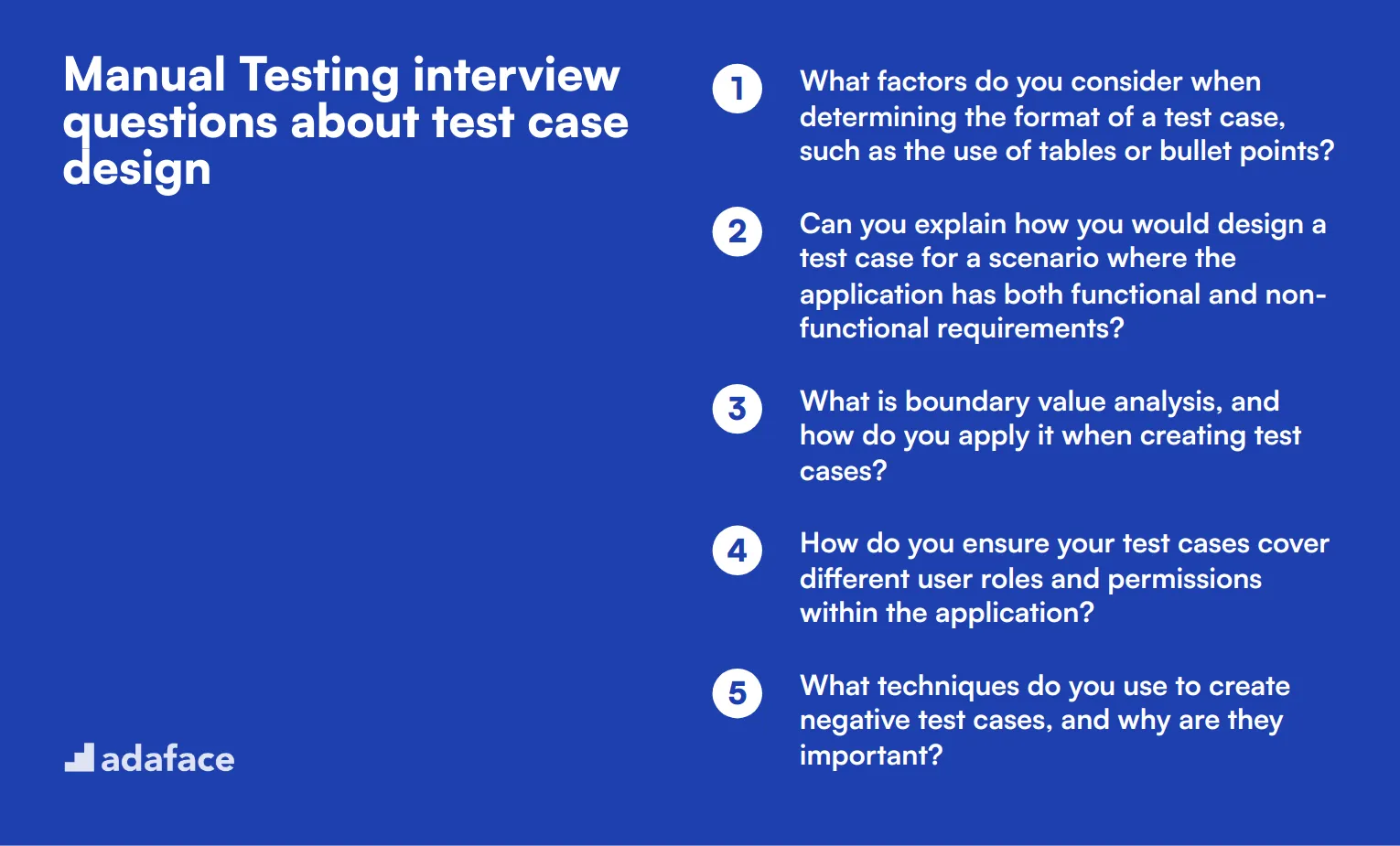

10 Manual Testing interview questions about test case design

To assess candidates' ability to design effective test cases, use these targeted questions during interviews. They help determine whether applicants have the essential skills needed for roles such as software tester or quality assurance engineer.

- What factors do you consider when determining the format of a test case, such as the use of tables or bullet points?

- Can you explain how you would design a test case for a scenario where the application has both functional and non-functional requirements?

- What is boundary value analysis, and how do you apply it when creating test cases?

- How do you ensure your test cases cover different user roles and permissions within the application?

- What techniques do you use to create negative test cases, and why are they important?

- How would you structure a test case to ensure it is easily understandable by others on your team?

- Can you describe your process for updating test cases when application features change?

- What role do you think test case reviews play in improving software quality?

- How do you handle test cases that rely on external dependencies or third-party services?

- Can you provide an example of a test case that failed and how you approached the investigation?

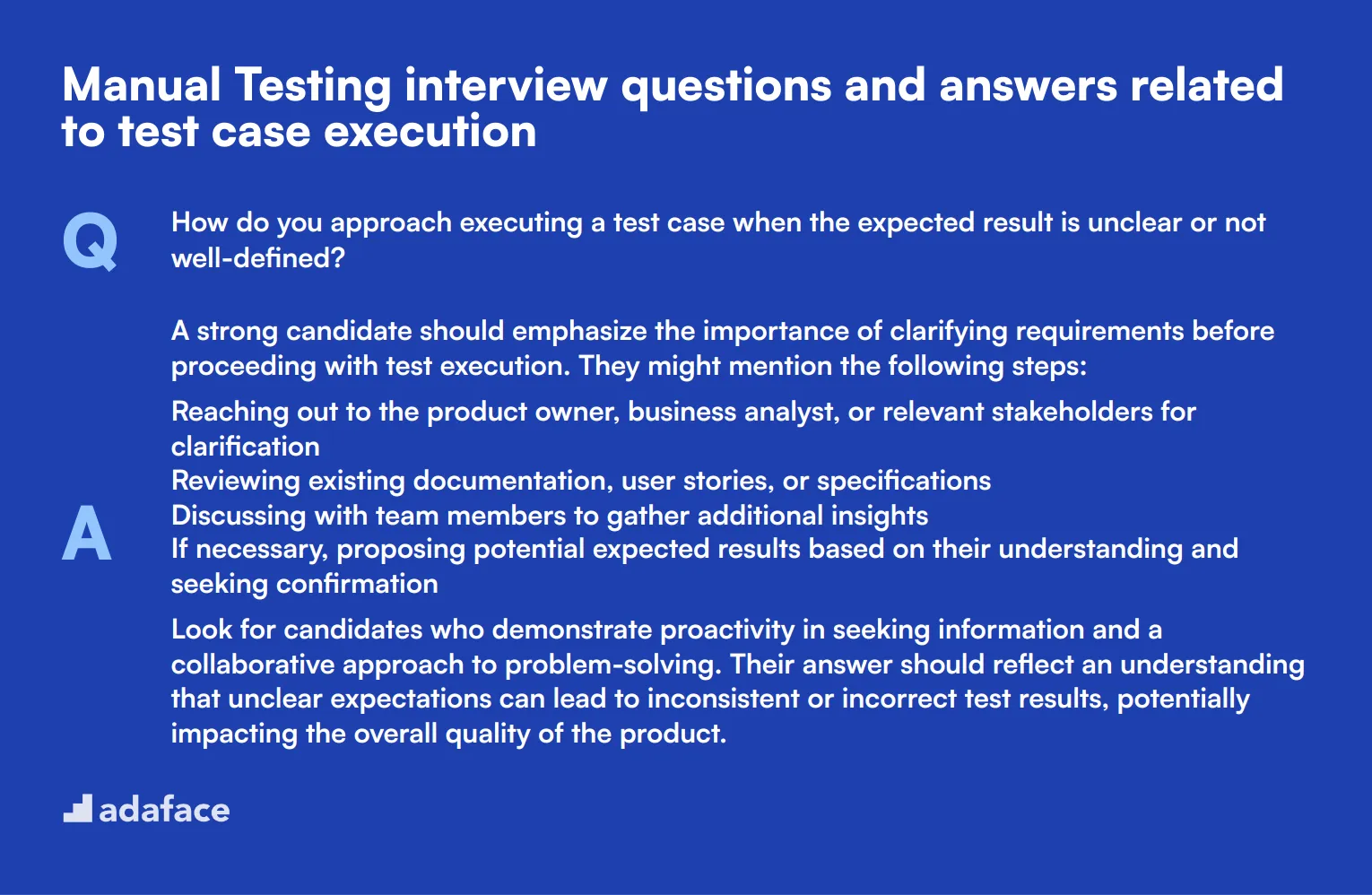

6 Manual Testing interview questions and answers related to test case execution

When it comes to test case execution, knowing the right questions to ask can make all the difference in finding the perfect candidate. This list of questions will help you dive deep into a candidate's practical experience and problem-solving skills. Use these to uncover how they approach real-world testing scenarios and handle the unexpected.

1. How do you approach executing a test case when the expected result is unclear or not well-defined?

A strong candidate should emphasize the importance of clarifying requirements before proceeding with test execution. They might mention the following steps:

- Reaching out to the product owner, business analyst, or relevant stakeholders for clarification

- Reviewing existing documentation, user stories, or specifications

- Discussing with team members to gather additional insights

- If necessary, proposing potential expected results based on their understanding and seeking confirmation

Look for candidates who demonstrate proactivity in seeking information and a collaborative approach to problem-solving. Their answer should reflect an understanding that unclear expectations can lead to inconsistent or incorrect test results, potentially impacting the overall quality of the product.

2. Describe a situation where you encountered an unexpected result during test execution. How did you handle it?

An ideal response should outline a systematic approach to handling unexpected results:

- Verify the test steps were followed correctly

- Double-check the test environment and data

- Reproduce the issue to ensure it's consistent

- Document the unexpected behavior in detail

- Consult with developers or other testers to determine if it's a potential bug or a misunderstanding of requirements

- If confirmed as a bug, create a detailed bug report

- Update the test case if necessary to reflect the correct behavior

Pay attention to candidates who emphasize the importance of thorough investigation before jumping to conclusions. Their answer should demonstrate critical thinking, attention to detail, and effective communication skills in reporting issues.

3. How do you manage time effectively when executing a large number of test cases with a tight deadline?

A strong candidate should discuss strategies for prioritizing and optimizing test execution:

- Prioritizing test cases based on criticality and risk

- Identifying and executing smoke tests or critical path tests first

- Grouping similar test cases to minimize setup time

- Utilizing test automation where applicable for repetitive or time-consuming tests

- Collaborating with team members to distribute the workload

- Regularly communicating progress and potential delays to stakeholders

Look for candidates who demonstrate the ability to balance thoroughness with efficiency. They should also show awareness of the importance of communication and teamwork in meeting tight deadlines without compromising quality.

4. How do you ensure consistency when multiple testers are executing the same set of test cases?

A good answer should address the following points:

- Developing and maintaining detailed, step-by-step test cases with clear expected results

- Establishing and documenting standard testing procedures and guidelines

- Conducting regular team meetings to discuss test cases and align on interpretation

- Using a centralized test management tool to track execution and results

- Implementing peer reviews or cross-checking of test results

- Providing training or mentoring to less experienced team members

Evaluate candidates based on their understanding of the importance of standardization and collaboration in maintaining consistency across the testing team. Look for those who emphasize clear communication and knowledge sharing as key factors in ensuring consistent test execution.

5. How do you handle test case execution when dealing with time-sensitive or real-time systems?

An effective answer should cover the following aspects:

- Understanding the timing constraints and requirements of the system

- Designing test cases that specifically target time-sensitive functionality

- Utilizing specialized tools or frameworks for performance and timing measurements

- Simulating various timing scenarios, including edge cases

- Ensuring the test environment closely mimics the production environment

- Coordinating with developers to implement appropriate logging or debugging mechanisms

- Considering the impact of external factors (e.g., network latency) on test results

Look for candidates who demonstrate awareness of the unique challenges posed by real-time systems. Their approach should show a balance between thoroughness and practicality, acknowledging that testing time-sensitive functionality often requires specialized techniques and tools.

6. How do you adapt your test execution approach when working with a new or unfamiliar application?

A strong candidate should outline a structured approach to familiarizing themselves with a new application:

- Review available documentation, including user manuals and specifications

- Explore the application through exploratory testing to understand its functionality

- Consult with developers or product owners to clarify any uncertainties

- Start with basic smoke tests to ensure core functionality

- Gradually increase test coverage as familiarity with the application grows

- Collaborate with team members who may have more experience with the application

- Document findings and create new test cases as needed

Evaluate candidates based on their ability to balance learning with effective testing. Look for those who emphasize the importance of continuous learning, asking questions, and adapting their approach as they gain more knowledge about the application.

Which Manual Testing skills should you evaluate during the interview phase?

Evaluating a candidate's manual testing skills during the interview phase is essential, but you can't assess everything in a single interview. Focusing on a few core skills can still provide valuable insights into their capabilities. Here are some key manual testing skills to evaluate:

Attention to Detail

You can use an assessment test that includes relevant MCQs to screen for this skill. Check out our Attention to Detail Test.

You can also ask targeted interview questions to judge this skill specifically.

Can you describe a time when you found a defect that others missed? How did you identify it?

Look for answers that show the candidate’s thoroughness and ability to spot anomalies that were overlooked by others.

Test Case Design

Consider using an assessment test with relevant MCQs to evaluate this skill. Check out our Manual Testing Test.

You can ask specific questions to judge their test case design skills.

How do you approach writing test cases for a new feature?

Look for a structured and methodical approach, clear understanding of requirements, and the ability to think from the user's perspective.

Communication Skills

You can use an assessment test focused on communication skills. Check out our Communication Test.

Ask targeted interview questions to evaluate their communication skills.

Can you describe a situation where you had to explain a complex issue to a non-technical stakeholder?

Look for answers that show the candidate’s ability to simplify complex issues, use clear language, and effectively communicate with non-technical stakeholders.

Streamline Your Manual Testing Hiring Process with Skills Tests and Targeted Interview Questions

Hiring someone with strong manual testing skills requires a thorough evaluation process. It's important to accurately assess candidates' abilities to ensure they can meet your team's needs.

One of the most effective ways to evaluate manual testing skills is through targeted skills tests. The Manual Testing Online Test or QA Engineer Test can help you quickly identify top performers.

After using these tests to shortlist the best applicants, you can invite them for interviews. This two-step process allows you to focus your time on the most promising candidates and dig deeper into their experience and problem-solving abilities.

Ready to improve your manual testing hiring process? Sign up to access our skills tests and start identifying top talent more efficiently. You can also explore our QA assessment options for a wider range of testing-related evaluations.


Manual Testing Interview Questions FAQs

Look for attention to detail, analytical skills, proficiency in test case design and execution, and familiarity with various testing tools.

Ask specific questions about their understanding of testing processes, basic test case design, and any relevant experience they have.

Scenario-based questions help assess a candidate's problem-solving skills and how they handle real-world testing challenges.

Yes, combining both types helps evaluate a candidate’s technical skills and their fit within your team and company culture.

Ask them to describe specific instances where they executed test cases, the tools they used, and how they recorded and resolved defects.

Avoid overly complex questions that confuse candidates, and ensure you cover a range of topics to get a holistic view of their skills.
