In the competitive field of Machine Learning, finding the right talent can be a daunting task for recruiters and hiring managers. A well-structured interview process with carefully crafted questions is key to identifying candidates who possess both theoretical knowledge and practical skills.

This blog post offers a comprehensive list of Machine Learning interview questions, categorized to help you assess candidates at various levels and stages of the hiring process. From initial screening to evaluating junior engineers and assessing technical concepts, data pre-processing skills, and situational problem-solving abilities, we've got you covered.

By using these questions, you'll be better equipped to identify top Machine Learning talent for your organization. Consider pairing these interview questions with a pre-screening Machine Learning assessment to streamline your hiring process and ensure you're interviewing the most qualified candidates.

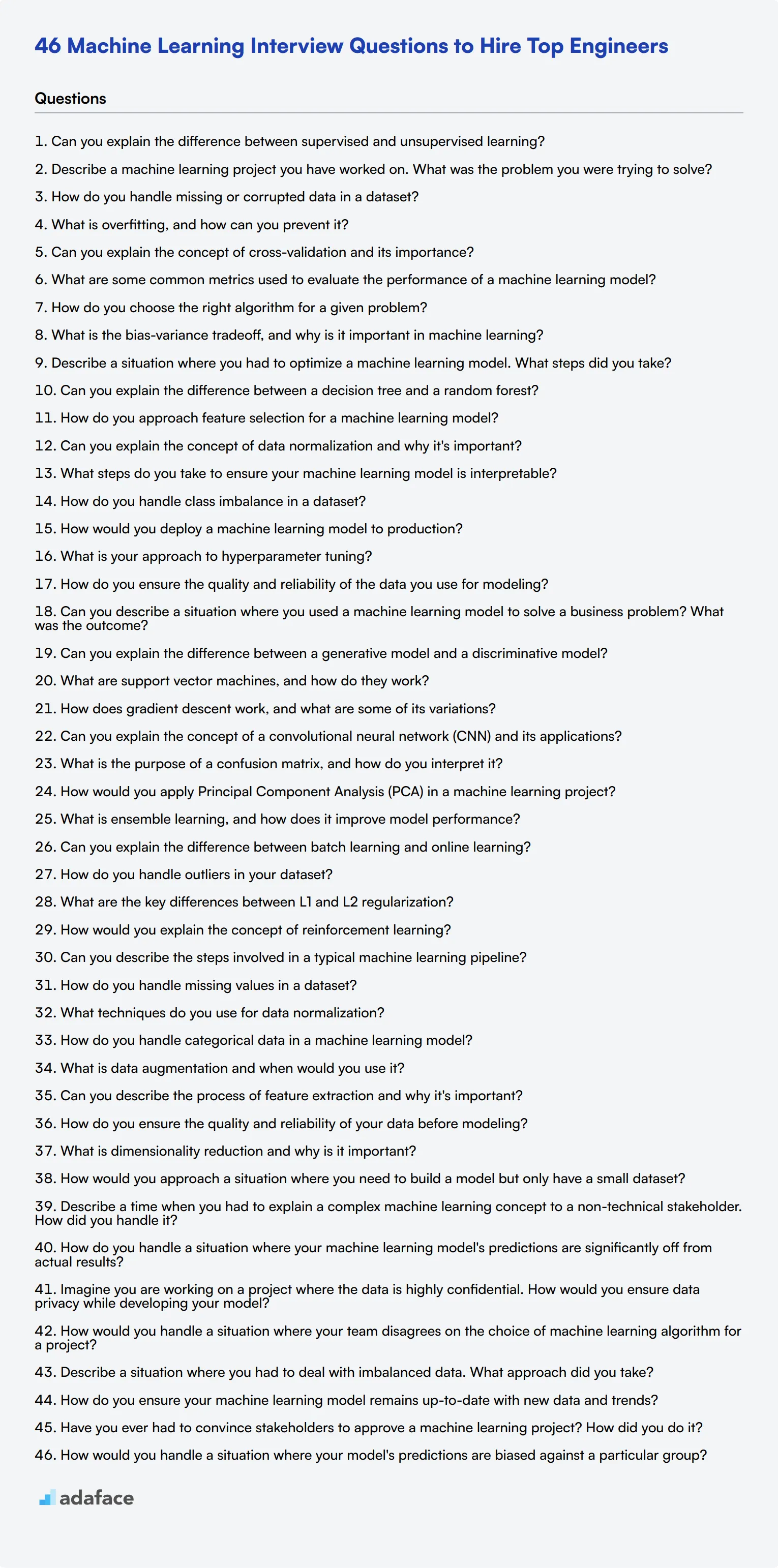

10 Machine Learning interview questions to initiate the interview

To assess whether your candidates possess the foundational skills for a role in machine learning, consider asking them some of these targeted questions. This list will help you gauge their technical expertise and problem-solving abilities effectively.

- Can you explain the difference between supervised and unsupervised learning?

- Describe a machine learning project you have worked on. What was the problem you were trying to solve?

- How do you handle missing or corrupted data in a dataset?

- What is overfitting, and how can you prevent it?

- Can you explain the concept of cross-validation and its importance?

- What are some common metrics used to evaluate the performance of a machine learning model?

- How do you choose the right algorithm for a given problem?

- What is the bias-variance tradeoff, and why is it important in machine learning?

- Describe a situation where you had to optimize a machine learning model. What steps did you take?

- Can you explain the difference between a decision tree and a random forest?

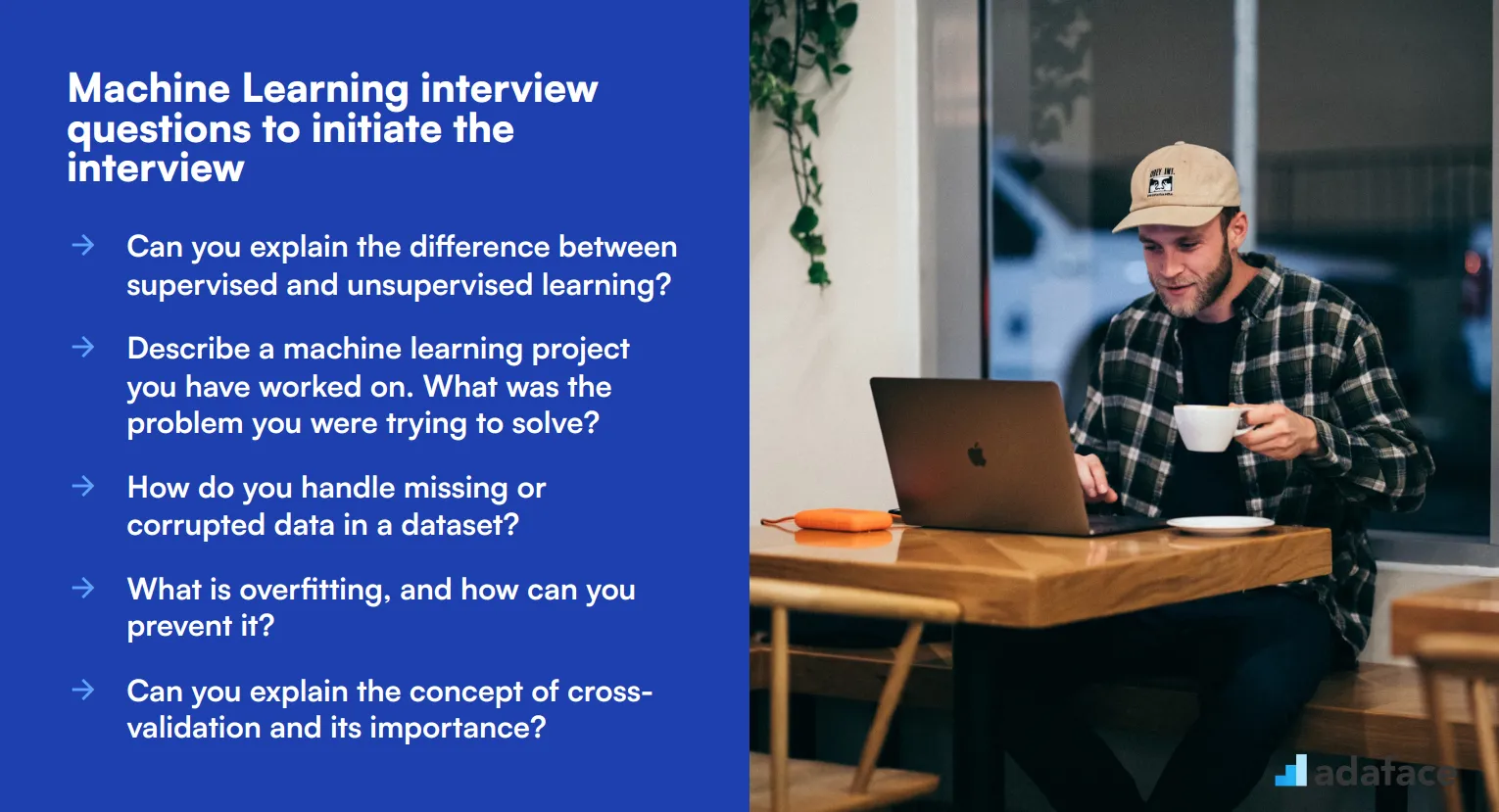

8 Machine Learning interview questions and answers to evaluate junior engineers

To gauge whether your junior engineering candidates have the necessary machine learning knowledge, utilize these practical interview questions. They are designed to prompt thoughtful responses and help you determine their readiness for real-world challenges.

1. How do you approach feature selection for a machine learning model?

Feature selection involves identifying the most important variables that contribute to the outcome you want to predict. This process can improve model performance and reduce complexity.

Candidates might mention methods such as removing irrelevant features, using statistical tests, and using model-based importance scores, such as those produced by decision tree classifiers.

Look for candidates who can explain the rationale behind their choice of features and how they evaluate the impact of each feature on the model's performance.
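To make the "statistical tests" idea concrete, here is a minimal sketch of one simple filter method: ranking features by the absolute Pearson correlation with the target. The feature names and numbers are hypothetical toy data, and a real project would normally use a library and combine this with other checks, since correlation only captures linear relationships.

```python
import math

def pearson(xs, ys):
    """Pearson correlation between two equal-length numeric sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant column carries no linear signal
    return cov / math.sqrt(var_x * var_y)

def rank_features(features, target):
    """Return feature names sorted by |correlation| with the target, strongest first."""
    scores = {name: abs(pearson(col, target)) for name, col in features.items()}
    return sorted(scores, key=scores.get, reverse=True)

# Hypothetical toy data: 'sqft' tracks price closely, the other columns do not.
features = {
    "sqft": [50, 70, 90, 110, 130],
    "door_color_id": [3, 1, 3, 1, 3],
    "constant": [1, 1, 1, 1, 1],
}
price = [100, 140, 185, 220, 262]

ranking = rank_features(features, price)
print(ranking)
```

A good follow-up is to ask what this kind of filter misses, for example non-linear effects or features that only matter in combination.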

2. Can you explain the concept of data normalization and why it's important?

Data normalization is the process of scaling data to a standard range, typically 0 to 1, to ensure that no single feature dominates the model training.

Normalization helps in making different features comparable and improves the convergence speed of gradient-based algorithms.

Ideal candidates should be able to discuss different normalization techniques and how they apply them depending on the specific machine learning task.

3. What steps do you take to ensure your machine learning model is interpretable?

Interpretable models allow stakeholders to understand how predictions are made, which is crucial for trust and transparency.

Candidates may talk about using simpler models like linear regression or decision trees, employing model-agnostic techniques like LIME or SHAP, and visualizing feature importances.

Look for candidates who understand the balance between model complexity and interpretability, and can provide examples of how they've ensured interpretability in past projects.

4. How do you handle class imbalance in a dataset?

Class imbalance occurs when some classes are underrepresented, which can bias the model towards the majority class.

Methods to address class imbalance include resampling techniques like oversampling the minority class or undersampling the majority class, using different performance metrics, and employing algorithms designed for imbalanced data.

Candidates should demonstrate an understanding of the different techniques and discuss how they decide which method to use based on the specific problem.
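As an illustration of the resampling idea, the following sketch randomly duplicates minority-class rows until every class is as large as the biggest one. The function name and the toy "ok"/"fraud" data are assumptions made for this example, not part of any particular library.

```python
import random
from collections import Counter

def random_oversample(rows, labels, seed=0):
    """Duplicate minority-class rows at random until all classes match the largest one."""
    rng = random.Random(seed)
    by_class = {}
    for row, label in zip(rows, labels):
        by_class.setdefault(label, []).append(row)
    target = max(len(group) for group in by_class.values())

    out_rows, out_labels = [], []
    for label, group in by_class.items():
        # Sample with replacement to fill the gap up to the target size.
        extra = [rng.choice(group) for _ in range(target - len(group))]
        for row in group + extra:
            out_rows.append(row)
            out_labels.append(label)
    return out_rows, out_labels

# Hypothetical toy data: six "ok" rows and two "fraud" rows.
rows = [[0.1], [0.2], [0.3], [0.4], [0.5], [0.6], [5.0], [5.2]]
labels = ["ok", "ok", "ok", "ok", "ok", "ok", "fraud", "fraud"]

balanced_rows, balanced_labels = random_oversample(rows, labels)
counts = Counter(balanced_labels)
print(counts)
```

Strong candidates usually point out that resampling should be applied to the training split only, so that duplicated rows do not leak into validation or test data.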

5. How would you deploy a machine learning model to production?

Deploying a machine learning model involves moving it from the development environment to a production environment where it can serve real-time predictions.

Candidates might talk about using APIs, containerizing the model using Docker, and monitoring the model for performance and drift.

Look for candidates who can describe the end-to-end process, including testing and validation steps, and who understand the importance of maintaining the model post-deployment.

6. What is your approach to hyperparameter tuning?

Hyperparameter tuning involves selecting the best set of parameters for a machine learning model to optimize its performance.

Common methods include grid search, random search, and more advanced techniques like Bayesian optimization.

Candidates should explain their preferred methods and discuss the trade-offs between different approaches, particularly in terms of computational cost and time.
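The trade-off between grid search and random search can be sketched in a few lines. In this example `validation_score` is a hypothetical stand-in for "train a model and return its validation score", and the parameter names `learning_rate` and `depth` are illustrative assumptions.

```python
import itertools
import random

def validation_score(learning_rate, depth):
    """Hypothetical stand-in for training a model and scoring it on validation data."""
    return -((learning_rate - 0.1) ** 2) * 100 - ((depth - 4) ** 2) * 0.05

def grid_search(grid):
    """Evaluate every combination in the grid; return (best_score, best_params)."""
    names = list(grid)
    best = None
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = validation_score(**params)
        if best is None or score > best[0]:
            best = (score, params)
    return best

def random_search(space, n_trials, seed=0):
    """Sample n_trials configurations from the given ranges; return (best_score, best_params)."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        params = {
            "learning_rate": rng.uniform(*space["learning_rate"]),
            "depth": rng.randint(*space["depth"]),
        }
        score = validation_score(**params)
        if best is None or score > best[0]:
            best = (score, params)
    return best

# Grid search costs len(learning_rate) * len(depth) = 9 evaluations here.
grid_best = grid_search({"learning_rate": [0.01, 0.1, 1.0], "depth": [2, 4, 8]})
# Random search costs exactly n_trials evaluations, whatever the number of parameters.
random_best = random_search({"learning_rate": (0.01, 1.0), "depth": (2, 8)}, n_trials=20)
print(grid_best, random_best)
```

The cost of grid search grows multiplicatively with each added parameter, while the budget for random search is fixed up front, which is the main trade-off candidates should be able to articulate.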

7. How do you ensure the quality and reliability of the data you use for modeling?

Ensuring data quality involves cleaning, preprocessing, and validating the data to make sure it is accurate and reliable.

Candidates may mention techniques such as dealing with missing values, removing duplicates, and normalizing data.

Look for candidates who emphasize the importance of understanding the data source and context, and who can discuss specific strategies they use to validate data quality.

8. Can you describe a situation where you used a machine learning model to solve a business problem? What was the outcome?

Candidates should provide a detailed example of a project where they used machine learning to address a specific business issue. This should include the problem statement, the approach they took, the model they used, and the results they achieved.

They should discuss the impact of their solution on the business, including any metrics or KPIs that improved as a result.

An ideal response will show the candidate's ability to apply machine learning techniques to real-world problems and demonstrate the value of their work to the organization.

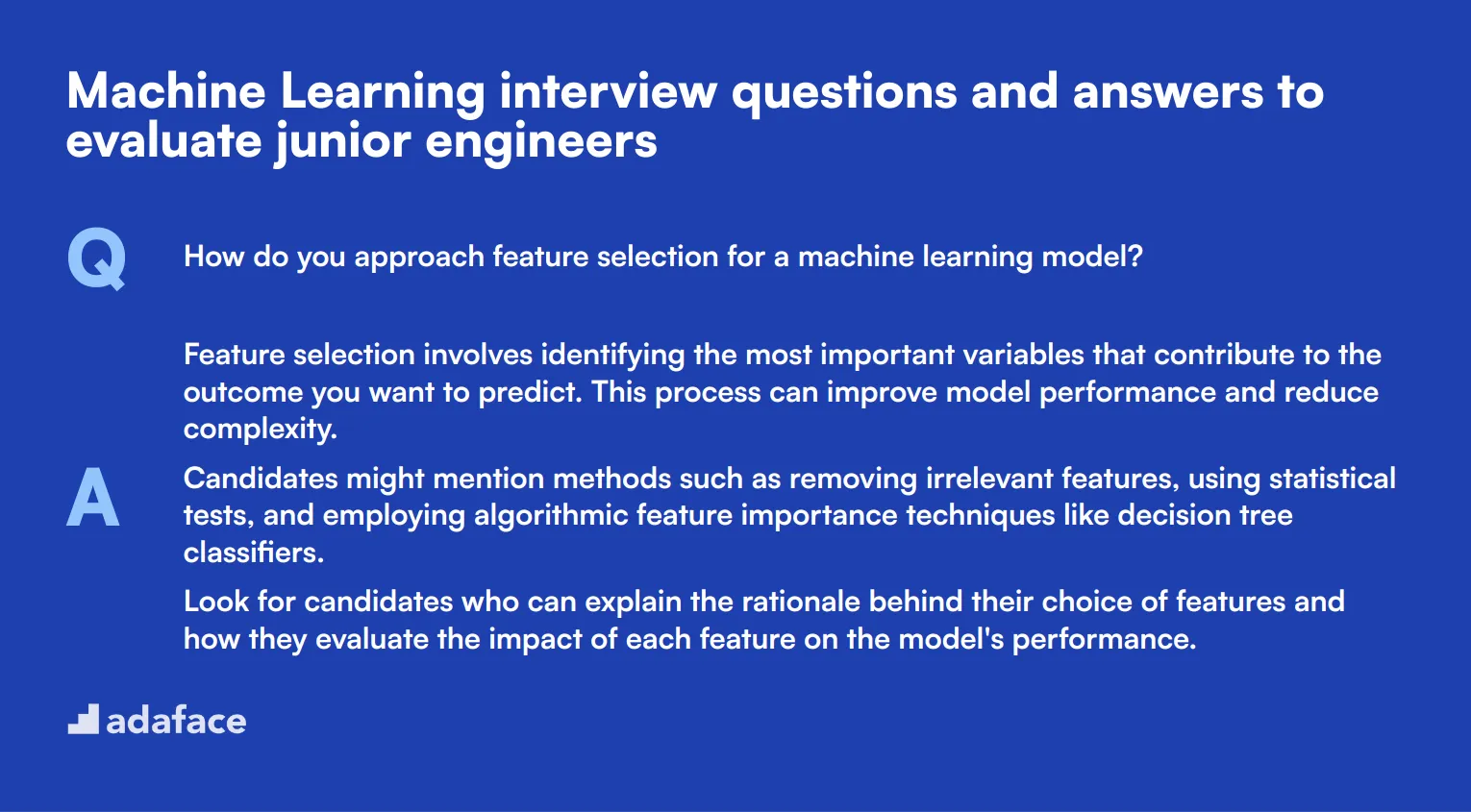

12 Machine Learning questions related to technical concepts

To evaluate whether candidates possess the necessary technical expertise in machine learning, consider using these 12 comprehensive questions during your interviews. Perfect for gauging their understanding of key concepts and practical skills, these questions will help you identify top talent for roles like data scientist.

- Can you explain the difference between a generative model and a discriminative model?

- What are support vector machines, and how do they work?

- How does gradient descent work, and what are some of its variations?

- Can you explain the concept of a convolutional neural network (CNN) and its applications?

- What is the purpose of a confusion matrix, and how do you interpret it?

- How would you apply Principal Component Analysis (PCA) in a machine learning project?

- What is ensemble learning, and how does it improve model performance?

- Can you explain the difference between batch learning and online learning?

- How do you handle outliers in your dataset?

- What are the key differences between L1 and L2 regularization?

- How would you explain the concept of reinforcement learning?

- Can you describe the steps involved in a typical machine learning pipeline?

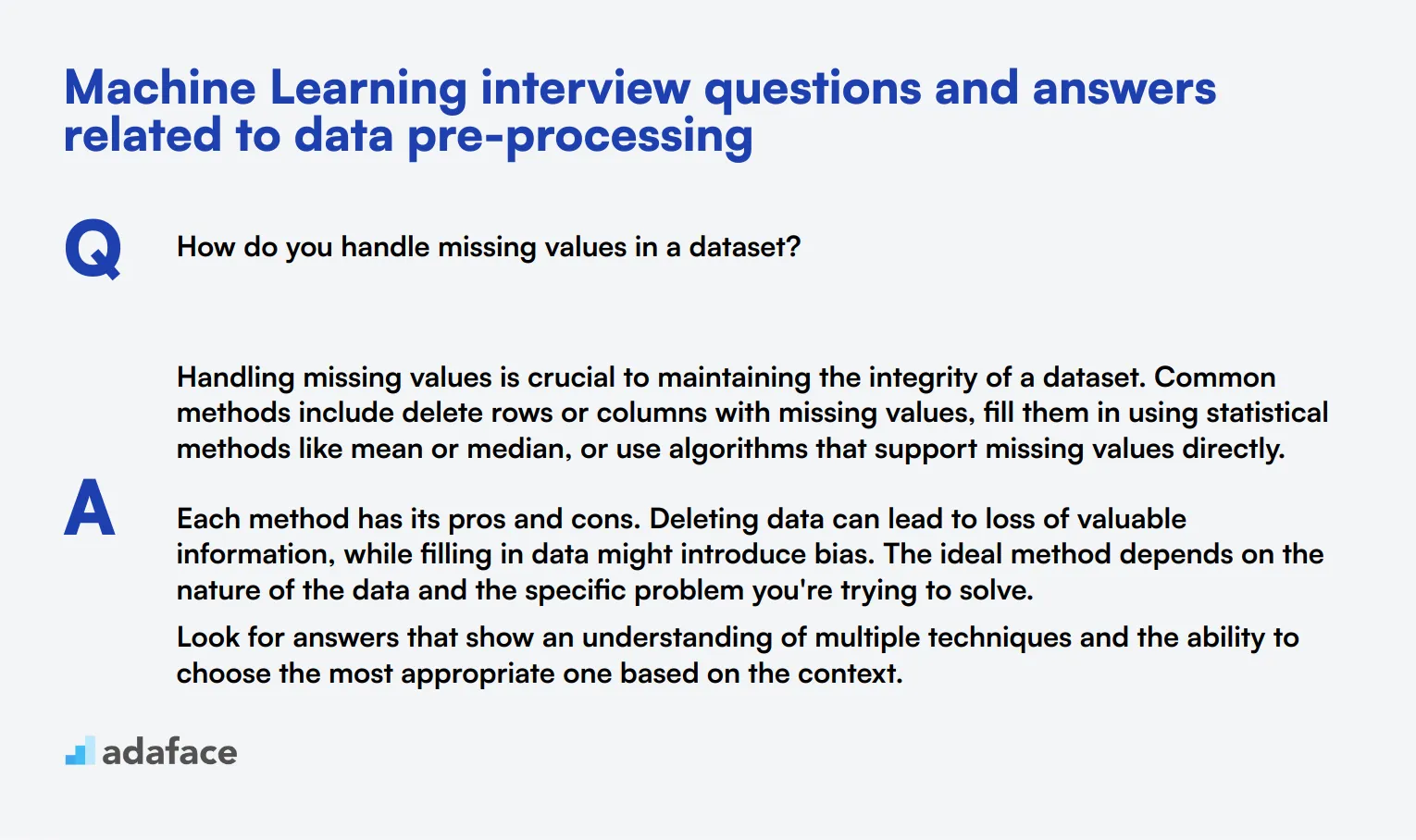

7 Machine Learning interview questions and answers related to data pre-processing

When you're interviewing candidates for a machine learning role, data pre-processing skills are critical. The following questions can help you identify whether applicants have the right knowledge and approach to handle real-world data challenges effectively.

1. How do you handle missing values in a dataset?

Handling missing values is crucial to maintaining the integrity of a dataset. Common methods include deleting rows or columns with missing values, filling them in using statistical methods like the mean or median, or using algorithms that support missing values directly.

Each method has its pros and cons. Deleting data can lead to loss of valuable information, while filling in data might introduce bias. The ideal method depends on the nature of the data and the specific problem you're trying to solve.

Look for answers that show an understanding of multiple techniques and the ability to choose the most appropriate one based on the context.
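The deletion and imputation options can be illustrated with a short sketch. Missing values are represented as `None` here, and the helper names and the toy `ages` column are assumptions made for the example.

```python
import statistics

def impute(column, strategy="median"):
    """Replace None entries with the mean or median of the observed values."""
    observed = [v for v in column if v is not None]
    if not observed:
        raise ValueError("cannot impute a column with no observed values")
    if strategy == "mean":
        fill = statistics.mean(observed)
    else:
        fill = statistics.median(observed)
    return [fill if v is None else v for v in column]

def drop_incomplete(rows):
    """Listwise deletion: keep only rows with no missing entries."""
    return [row for row in rows if all(v is not None for v in row)]

# Hypothetical column with two gaps and one extreme value (120).
ages = [25, None, 31, 40, None, 120]

mean_filled = impute(ages, "mean")      # the outlier pulls the fill value up to 54
median_filled = impute(ages, "median")  # the median (35.5) is less affected
complete = drop_incomplete([[25, 1.0], [None, 2.0], [31, None], [40, 3.0]])
print(mean_filled, median_filled, complete)
```

Note how deletion halves the toy table, while mean imputation is distorted by a single outlier. This is the kind of trade-off a candidate should be able to reason about.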

2. What techniques do you use for data normalization?

Data normalization is essential for ensuring that each feature has equal importance. Techniques include min-max scaling, which scales data to a fixed range, usually 0 to 1, and z-score normalization, which standardizes data based on mean and standard deviation.

Another method is decimal scaling, which moves the decimal point of values to bring them into a standard range. Each method has its use cases and limitations.

Candidates should demonstrate understanding of these techniques and the ability to choose the right one based on the specific dataset and problem.
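The three techniques named above can be sketched as follows. This is a minimal illustration on a hypothetical `incomes` column. The z-score version uses the population standard deviation, which is one of two common conventions.

```python
import statistics

def min_max_scale(values):
    """Rescale values linearly to the range 0 to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant column: nothing to scale
    return [(v - lo) / (hi - lo) for v in values]

def z_score(values):
    """Standardize to mean 0 and (population) standard deviation 1."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]

def decimal_scale(values):
    """Divide by the smallest power of 10 that brings every |value| below 1."""
    largest = max(abs(v) for v in values)
    power = 0
    while largest / (10 ** power) >= 1:
        power += 1
    return [v / (10 ** power) for v in values]

incomes = [20, 40, 60, 80, 100]  # hypothetical toy column

scaled = min_max_scale(incomes)
standardized = z_score(incomes)
shifted = decimal_scale(incomes)
print(scaled, standardized, shifted)
```

A useful follow-up is to ask which statistics (minimum, maximum, mean, standard deviation) must be computed on the training data only and then reused on new data.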

3. How do you handle categorical data in a machine learning model?

Categorical data can be handled in several ways, such as label encoding, which assigns a unique number to each category, and one-hot encoding, which creates binary columns for each category.

Other methods include ordinal encoding for ordered categories and using algorithms that can directly handle categorical data.

Strong candidates will discuss the pros and cons of each method and explain how they choose the most suitable one based on the specific dataset and model requirements.
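For reference, here is a minimal sketch of label, one-hot, and ordinal encoding. The helper names and the toy `colors` and size values are assumptions for illustration. Libraries offer equivalent, more complete encoders.

```python
def label_encode(values):
    """Map each category to an integer, using sorted order for reproducibility."""
    mapping = {cat: i for i, cat in enumerate(sorted(set(values)))}
    return [mapping[v] for v in values], mapping

def one_hot_encode(values):
    """Create one binary column per category."""
    categories = sorted(set(values))
    rows = [[1 if v == cat else 0 for cat in categories] for v in values]
    return rows, categories

def ordinal_encode(values, order):
    """Encode ordered categories using an explicitly supplied ranking."""
    rank = {cat: i for i, cat in enumerate(order)}
    return [rank[v] for v in values]

colors = ["red", "green", "blue", "green"]

codes, mapping = label_encode(colors)
onehot, categories = one_hot_encode(colors)
sizes = ordinal_encode(["M", "S", "L"], order=["S", "M", "L"])
print(codes, onehot, sizes)
```

Label encoding implies an order (blue < green < red here) that does not exist in the data, which is why one-hot encoding is often preferred for unordered categories despite the extra columns.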

4. What is data augmentation and when would you use it?

Data augmentation involves creating additional data points from existing data to improve model performance. This is commonly used in image and text data.

Techniques include flipping, rotating, and cropping images, or adding noise and embedding variations in text.

Candidates should explain that data augmentation helps improve model robustness and prevents overfitting, particularly in scenarios where acquiring new data is difficult or expensive.
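The image techniques mentioned above can be sketched on a tiny grayscale "image" stored as a nested list of pixel intensities between 0 and 1. This representation and the function names are assumptions for the example. Real pipelines typically use an image or tensor library.

```python
import random

def flip_horizontal(image):
    """Mirror each row left to right."""
    return [row[::-1] for row in image]

def rotate_90_clockwise(image):
    """Rotate the image by 90 degrees clockwise."""
    return [list(col) for col in zip(*image[::-1])]

def add_noise(image, scale=0.05, seed=0):
    """Add small Gaussian noise to every pixel, clipped to the range 0 to 1."""
    rng = random.Random(seed)
    return [
        [min(1.0, max(0.0, px + rng.gauss(0, scale))) for px in row]
        for row in image
    ]

image = [
    [0.0, 0.5],
    [1.0, 0.2],
]

flipped = flip_horizontal(image)
rotated = rotate_90_clockwise(image)
noisy = add_noise(image)
augmented_set = [image, flipped, rotated, noisy]  # four training examples from one
print(flipped, rotated)
```

Candidates should also recognize when an augmentation changes the label, for example flipping an image of a handwritten digit or a road sign.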

5. Can you describe the process of feature extraction and why it's important?

Feature extraction involves converting raw data into a set of features that can be used by a machine learning model. This process is critical as it directly impacts model performance.

Techniques vary depending on the data type, such as text, image, or numerical data, and can include methods like PCA, TF-IDF for text, or SIFT for images.

Look for candidates who can explain different techniques and understand the importance of choosing the right features to improve model accuracy and efficiency.
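As one concrete instance of feature extraction, the sketch below computes a basic TF-IDF weighting for a few short documents. It uses the plain textbook formula (term frequency times the log of inverse document frequency) with whitespace tokenization. Libraries generally apply smoothed variants, so their numbers will differ. The example sentences are hypothetical.

```python
import math
from collections import Counter

def tf_idf(documents):
    """Return one {term: weight} dict per document using unsmoothed TF-IDF."""
    tokenized = [doc.lower().split() for doc in documents]
    n_docs = len(tokenized)
    # Document frequency: in how many documents does each term appear?
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))

    vectors = []
    for tokens in tokenized:
        counts = Counter(tokens)
        vectors.append({
            term: (count / len(tokens)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return vectors

docs = [
    "the model overfits",
    "the model underfits",
    "the data is noisy",
]

vectors = tf_idf(docs)
print(vectors[0])
```

The word "the" appears in every document and gets a weight of zero, while the rarer "overfits" outweighs "model". This shows how the extracted features emphasize distinguishing terms.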

6. How do you ensure the quality and reliability of your data before modeling?

Ensuring data quality involves several steps, including data cleaning to remove inaccuracies, consistency checks to ensure uniformity, and validation to confirm that the data meets the required standards.

Techniques like schema and range validation, anomaly detection, and outlier analysis are commonly used to ensure data reliability.

An ideal candidate will have a systematic approach to data quality, including regular checks and balances, and will emphasize the importance of reliable data in building accurate machine learning models.

7. What is dimensionality reduction and why is it important?

Dimensionality reduction is the process of reducing the number of input variables in a dataset. This is important for simplifying models, reducing computational cost, and improving model performance.

Techniques include Principal Component Analysis (PCA) and t-distributed Stochastic Neighbor Embedding (t-SNE), among others.

Candidates should explain the benefits of dimensionality reduction, such as reduced risk of overfitting and enhanced model interpretability, and demonstrate an understanding of when and how to apply these techniques effectively.

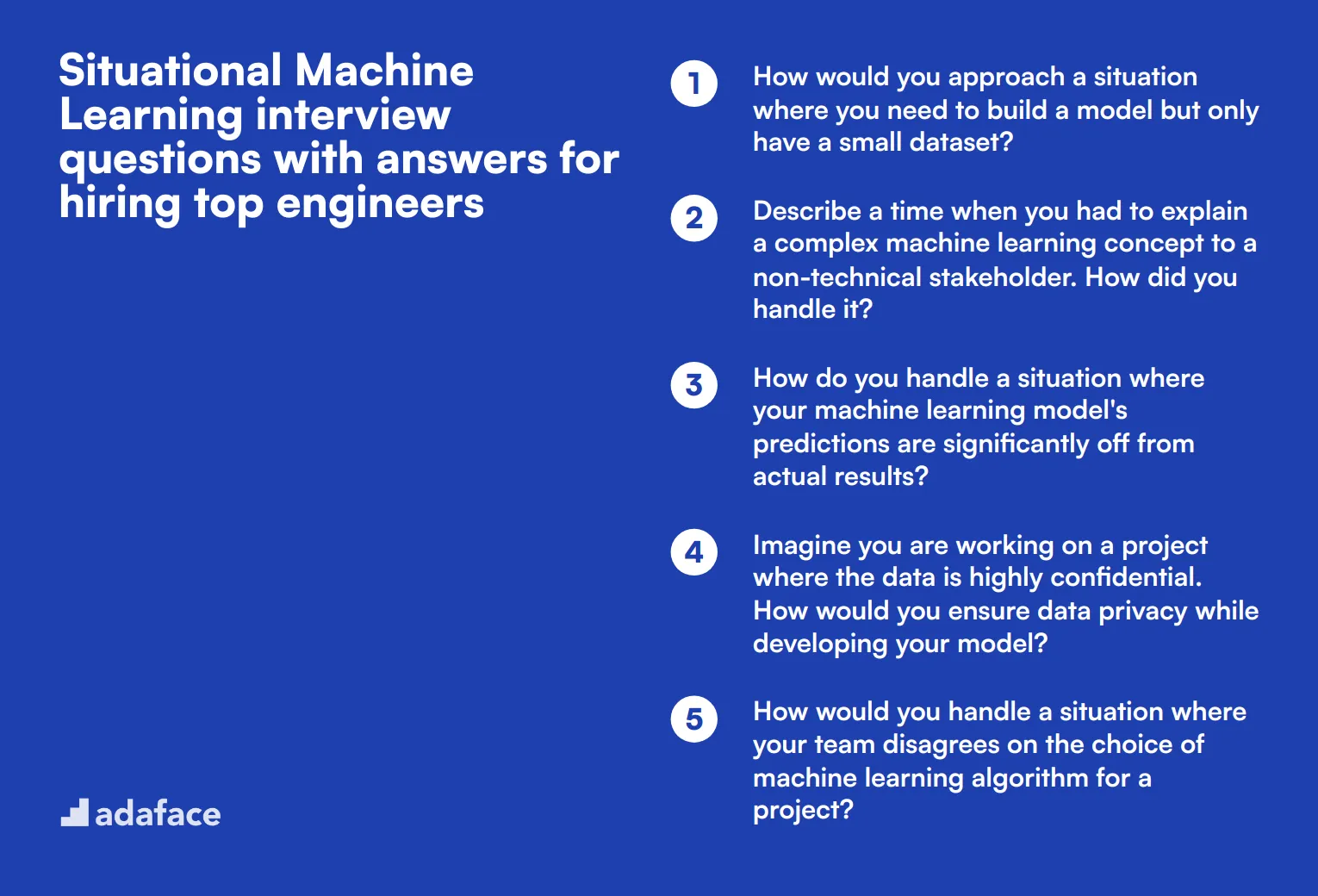

9 situational Machine Learning interview questions with answers for hiring top engineers

To hire top-tier machine learning engineers, you need to go beyond theoretical questions and dive into situational ones. These questions will help you gauge how candidates think on their feet, address real-world problems, and apply their skills in practical scenarios.

1. How would you approach a situation where you need to build a model but only have a small dataset?

When faced with a small dataset, I would first look at data augmentation to artificially increase the size of the dataset. Techniques like oversampling underrepresented cases and generating synthetic data can be useful.

I would also consider using simpler models that are less prone to overfitting and employ cross-validation to make the most out of the limited data. Transfer learning, where a model pre-trained on a large dataset is fine-tuned on the small dataset, can also be effective.

Look for candidates who mention creative data augmentation techniques, proper model selection, and ensuring the model's robustness through cross-validation. Follow up on specific examples they've encountered.
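To show why cross-validation makes the most of limited data, here is a minimal sketch of how k-fold splitting assigns every sample to a test fold exactly once. The function name is an assumption. A real project would usually shuffle first (and stratify for classification) using a library splitter.

```python
def k_fold_indices(n_samples, k):
    """Return a list of (train_indices, test_indices) pairs for k contiguous folds."""
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    indices = list(range(n_samples))

    folds = []
    start = 0
    for size in fold_sizes:
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        folds.append((train, test))
        start += size
    return folds

folds = k_fold_indices(n_samples=10, k=3)
for train, test in folds:
    print("train:", train, "test:", test)
```

With 10 samples and 3 folds, each model trains on 6 or 7 samples and every sample is used for evaluation once, instead of permanently setting aside a large hold-out set.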

2. Describe a time when you had to explain a complex machine learning concept to a non-technical stakeholder. How did you handle it?

I recall a project where I had to explain the concept of a neural network to a business executive. I used simple analogies, like comparing neurons to decision-making nodes that collectively learn patterns from data, similar to how humans make decisions based on experiences.

I also visualized the process with diagrams and ensured I avoided jargon. The key was to relate the technical details to the business impact, such as how the neural network could improve customer segmentation.

Candidates should demonstrate their ability to simplify complex concepts and communicate effectively with non-technical stakeholders. Look for examples where they successfully bridged the technical-business gap.

3. How do you handle a situation where your machine learning model's predictions are significantly off from actual results?

I would start by analyzing the data pipeline to ensure there are no data quality issues, such as missing values or incorrect data formats. Then, I would evaluate the model's assumptions and parameters, checking for any misconfigurations or biases.

I would also consider whether the model is overfitting or underfitting, and I might try different algorithms or feature engineering techniques. Finally, I would validate the model using different datasets to see if the issue persists.

Look for candidates who follow a structured debugging process and are open to exploring multiple avenues to identify the root cause of the issue. Follow up on how they verify data quality and model assumptions.

4. Imagine you are working on a project where the data is highly confidential. How would you ensure data privacy while developing your model?

To ensure data privacy, I would use techniques such as data anonymization and encryption. Anonymization involves removing personally identifiable information, while encryption ensures data is securely stored and transmitted.

I would also implement strict access controls and use differential privacy techniques where possible. Differential privacy adds calibrated 'noise' to the data or to query results, which limits how much anyone can learn about an individual record.

Ideal candidates should mention specific methods for protecting sensitive data and demonstrate an understanding of the principles behind data privacy. Follow up on any real-world examples they provide.
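When following up on differential privacy, it can help to have a concrete picture of the noise being described. The sketch below applies the Laplace mechanism to a counting query: because adding or removing one person changes a count by at most 1, noise with scale 1/epsilon is added to the result. The function name, the toy `ages` data, and the choice of a counting query are assumptions for illustration, and this is not a production-grade privacy implementation.

```python
import random

def private_count(values, predicate, epsilon, seed=None):
    """Return a noisy count of values matching predicate (Laplace mechanism, sensitivity 1)."""
    rng = random.Random(seed)
    true_count = sum(1 for v in values if predicate(v))
    scale = 1.0 / epsilon
    # The difference of two independent exponential draws with mean `scale`
    # follows a Laplace distribution centred on 0 with that scale.
    noise = rng.expovariate(1 / scale) - rng.expovariate(1 / scale)
    return true_count + noise

ages = [23, 35, 41, 52, 67, 29]  # hypothetical confidential records

# Smaller epsilon means more noise and stronger privacy.
noisy = private_count(ages, lambda age: age >= 40, epsilon=1.0, seed=42)
print(noisy)
```

The true answer here is 3, and individual noisy answers vary around it. Candidates with hands-on experience should be able to discuss how the privacy budget (epsilon) is chosen and how repeated queries consume it.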

5. How would you handle a situation where your team disagrees on the choice of machine learning algorithm for a project?

First, I would gather all relevant data and metrics that support each algorithm's strengths and weaknesses. Then, I would organize a meeting to present these findings, fostering an open discussion about the pros and cons of each option.

I would also propose running a few experiments to empirically compare the performance of the different algorithms on our specific dataset. This evidence-based approach usually helps in reaching a consensus.

Look for candidates who emphasize collaboration, evidence-based decision-making, and the ability to mediate discussions. Follow up on how they ensure all team members feel heard and valued.

6. Describe a situation where you had to deal with imbalanced data. What approach did you take?

In a project with imbalanced data, I used techniques like resampling (oversampling the minority class or undersampling the majority class) and synthetic data generation methods like SMOTE (Synthetic Minority Over-sampling Technique).

I also experimented with different algorithms that handle imbalanced data better, such as decision trees or ensemble methods. Additionally, I used evaluation metrics that are more suited for imbalanced datasets, like the F1 score, precision-recall curves, and ROC-AUC.

Candidates should show a clear understanding of the challenges posed by imbalanced data and various strategies to address them. Follow up on the impact these methods had on their project outcomes.
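The reason accuracy is a poor guide on imbalanced data can be shown with a small worked example. The sketch below computes precision, recall, and F1 by hand on hypothetical labels where only 5% of cases are positive.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Compute precision, recall, and F1 for the given positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

def accuracy(y_true, y_pred):
    return sum(1 for t, p in zip(y_true, y_pred) if t == p) / len(y_true)

# Hypothetical labels: 95 negatives followed by 5 positives.
y_true = [0] * 95 + [1] * 5

# A "model" that always predicts the majority class.
always_negative = [0] * 100
# A model with 2 false positives, 3 true positives, and 2 false negatives.
some_model = [0] * 93 + [1] * 2 + [1] * 3 + [0] * 2

baseline_acc = accuracy(y_true, always_negative)
baseline_prf = precision_recall_f1(y_true, always_negative)
model_acc = accuracy(y_true, some_model)
model_prf = precision_recall_f1(y_true, some_model)
print(baseline_acc, baseline_prf, model_acc, model_prf)
```

The always-negative baseline scores 95% accuracy with an F1 of zero, while the second model is only one point more accurate but actually finds most of the positive cases.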

7. How do you ensure your machine learning model remains up-to-date with new data and trends?

I implement a continuous monitoring system to track the model's performance over time. If the performance metrics degrade, it may indicate that the model needs retraining with new data.

I also schedule regular updates where the model is retrained with the latest data. Using techniques like online learning can help the model adapt to new data incrementally without needing a full retraining.

Look for candidates who emphasize the importance of ongoing model evaluation and updates. Follow up on how they monitor model performance and manage data pipelines.
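A continuous monitoring system can be as simple in principle as tracking accuracy over a rolling window of recent, labelled predictions. The class below is a minimal sketch under that assumption. The class name, window size, and threshold are all hypothetical, and production systems usually track several metrics plus input drift.

```python
from collections import deque

class AccuracyMonitor:
    """Track accuracy over the most recent `window` labelled predictions."""

    def __init__(self, window=100, threshold=0.8):
        self.outcomes = deque(maxlen=window)  # old outcomes fall off automatically
        self.threshold = threshold

    def record(self, prediction, actual):
        self.outcomes.append(prediction == actual)

    def accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self):
        # Only raise the flag once the window is full, to avoid noisy early alerts.
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.accuracy() < self.threshold

monitor = AccuracyMonitor(window=10, threshold=0.8)

for _ in range(10):
    monitor.record(prediction=1, actual=1)  # model is right on older data
healthy = monitor.needs_retraining()

for _ in range(5):
    monitor.record(prediction=1, actual=0)  # model starts missing on newer data
degraded = monitor.needs_retraining()
print(healthy, degraded)
```

After the five misses, rolling accuracy drops to 0.5 and the retraining flag is raised. A good follow-up is to ask how the candidate obtains ground-truth labels in production, since they often arrive with a delay.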

8. Have you ever had to convince stakeholders to approve a machine learning project? How did you do it?

Yes, in one instance, I had to convince stakeholders about the value of a predictive maintenance model. I prepared a detailed presentation that included the potential cost savings and efficiency improvements.

I also showcased a small pilot project that demonstrated early success, providing tangible evidence of the benefits. By aligning the project goals with the business objectives, I was able to gain their approval.

Candidates should illustrate their ability to align technical projects with business goals and effectively communicate the value proposition to stakeholders. Follow up on the results achieved post-approval.

9. How would you handle a situation where your model's predictions are biased against a particular group?

Firstly, I would investigate the data to understand if there are any underlying biases. This could involve checking for imbalanced representation of different groups in the dataset and examining the feature importance.

I would then consider techniques to mitigate the bias, such as re-sampling the data, using fairness-aware algorithms, or transforming the features. Additionally, I would ensure that the evaluation metrics reflect fairness considerations.

Look for candidates who recognize the ethical implications of biased models and demonstrate proactive steps to address bias. Follow up on how they ensure fairness in their models.
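One way to make "evaluation metrics that reflect fairness considerations" concrete is to compare how often the model predicts the positive outcome for each group. The sketch below computes per-group selection rates and their gap, often called the demographic parity difference. This is only one of several fairness definitions, and the group labels and predictions are hypothetical.

```python
from collections import defaultdict

def selection_rates(predictions, groups):
    """Share of positive (1) predictions within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {group: positives[group] / totals[group] for group in totals}

def demographic_parity_gap(predictions, groups):
    """Difference between the highest and lowest group selection rates."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())

# Hypothetical loan-approval predictions for two groups of four applicants each.
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(predictions, groups)
gap = demographic_parity_gap(predictions, groups)
print(rates, gap)
```

Group "a" is approved 75% of the time and group "b" only 25%, giving a gap of 0.5. Candidates should be able to explain that a gap like this is a signal to investigate rather than proof of unfair treatment, and that other criteria, such as comparing error rates across groups, may be more appropriate depending on the use case.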

Which Machine Learning skills should you evaluate during the interview phase?

In a single interview, assessors may not capture every aspect of a candidate's skills, particularly in the complex field of Machine Learning. However, focusing on a few core skills can provide insights into a candidate's potential to succeed in this role, ensuring a more informed hiring decision.

Programming Skills

To gauge programming skills effectively, consider utilizing an assessment test that includes relevant MCQs. This approach can streamline the initial screening process and help identify candidates with strong coding capabilities. For instance, you might explore the Python test in our library.

Additionally, targeted interview questions can reveal deeper insights into a candidate's programming proficiency. One effective question could be:

Can you describe a project where you implemented a Machine Learning algorithm and the programming challenges you faced?

When asking this question, look for specific examples that highlight the candidate's problem-solving abilities and understanding of code complexity. Pay attention to how they articulate their thought process and whether they can demonstrate adaptability when faced with challenges.

Statistical Knowledge

To assess this skill, consider using MCQs that test statistical concepts relevant to Machine Learning. This can help filter candidates who possess a solid understanding of these principles.

You might also ask candidates to explain how they would approach a specific statistical problem. A suitable question could be:

How would you assess whether a dataset is normally distributed?

Look for answers that demonstrate a familiarity with statistical tests (like the Shapiro-Wilk test) and a clear understanding of the implications of normality on model assumptions.
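Formal tests such as Shapiro-Wilk are usually run through a statistics library. As a rough first check that needs nothing beyond the standard library, a candidate might also look at sample skewness and excess kurtosis, both of which are close to zero for normally distributed data. The sketch below uses the simple population-moment formulas, and the two toy samples are hypothetical.

```python
import statistics

def skewness_and_excess_kurtosis(values):
    """Return (skewness, excess kurtosis) using population moments."""
    n = len(values)
    mean = statistics.fmean(values)
    m2 = sum((v - mean) ** 2 for v in values) / n
    m3 = sum((v - mean) ** 3 for v in values) / n
    m4 = sum((v - mean) ** 4 for v in values) / n
    skewness = m3 / m2 ** 1.5
    excess_kurtosis = m4 / m2 ** 2 - 3.0
    return skewness, excess_kurtosis

symmetric = [1, 2, 3, 4, 5]
right_skewed = [1, 1, 1, 2, 10]  # one large value creates a long right tail

sym_skew, sym_kurt = skewness_and_excess_kurtosis(symmetric)
skewed_skew, skewed_kurt = skewness_and_excess_kurtosis(right_skewed)
print(sym_skew, skewed_skew)
```

The symmetric sample has zero skewness, while the second sample is clearly right-skewed. Strong answers combine numeric checks like these with visual tools such as histograms or Q-Q plots, and note that small samples give unreliable estimates.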

Data Pre-processing

Utilizing an assessment that includes MCQs on data pre-processing can help filter candidates who are proficient in these practices. Testing for knowledge in this area is essential for identifying suitable candidates.

To further explore this sub-skill, you may want to ask candidates the following:

Can you walk me through your process for handling missing data in a dataset?

Expect candidates to discuss various strategies, such as imputation methods or discarding missing values, and to justify their choices based on the context of the dataset.

Maximizing the Effectiveness of Machine Learning Interview Questions

Before putting your newfound knowledge into practice, consider these tips to enhance your Machine Learning interview process. These strategies will help you make the most of your interviews and select the best candidates.

1. Implement Skills Tests Before Interviews

Skills tests provide an objective measure of a candidate's abilities before the interview stage. This approach saves time and ensures you're interviewing candidates with the required technical skills.

For Machine Learning roles, consider using tests that evaluate algorithmic thinking, data analysis, and programming skills. Our Machine Learning Online Test and Data Science Test are designed to assess these key areas.

By using these tests, you can shortlist candidates more effectively and focus your interviews on deeper technical discussions and cultural fit. This process allows you to make more informed hiring decisions and improves the overall quality of your candidate pool.

2. Carefully Select Interview Questions

Time is limited during interviews, so choosing the right questions is key. Focus on questions that assess critical thinking, problem-solving, and practical application of Machine Learning concepts.

Consider including questions about related fields such as data structures, algorithms, and statistics. These areas are fundamental to Machine Learning and can provide insights into a candidate's overall technical proficiency.

Don't forget to assess soft skills like communication and teamwork. These are crucial for success in any technical role, especially in collaborative Machine Learning projects.

3. Ask Insightful Follow-up Questions

Prepared questions are a good starting point, but follow-up questions reveal a candidate's true depth of knowledge. They help you distinguish between memorized answers and genuine understanding.

For example, if you ask about feature selection methods, a follow-up could be, "How would you handle feature selection in a high-dimensional dataset?" This probes the candidate's practical problem-solving skills and experience with real-world Machine Learning challenges.

Use Machine Learning interview questions and skills tests to hire talented engineers

If you're looking to hire someone with strong Machine Learning skills, it’s important to ensure they possess the necessary expertise. The most accurate way to verify these skills is by using skills tests, such as the Machine Learning Online Test.

After utilizing this test, you'll be able to shortlist the best applicants and invite them for interviews. To take the next step, consider signing up for our platform via Adaface to access a wide range of assessments.

Machine Learning Interview Questions FAQs

Common questions include topics on algorithms, data preprocessing, technical concepts, and situational scenarios.

Ask questions that cover fundamental concepts and problem-solving abilities in Machine Learning.

These questions present hypothetical scenarios to assess how candidates would approach and solve real-world problems.

Data preprocessing is crucial for cleaning and preparing data, which significantly affects the performance of Machine Learning models.

Combine technical questions with situational and behavioral questions to get a well-rounded assessment of the candidate.

Look for a strong understanding of concepts, clear problem-solving skills, and the ability to apply knowledge to practical situations.
