Linux skills are in high demand, which makes Linux professionals valuable assets in today's IT landscape, and companies want to be sure they hire the best. As with any technical role, verifying that candidates have solid troubleshooting skills is as important as checking their grasp of Linux concepts.

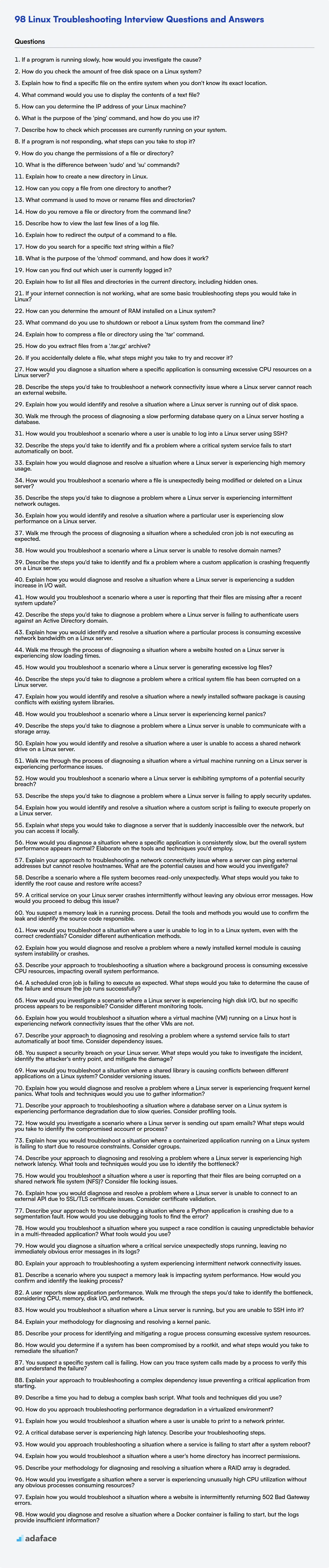

This blog post offers a categorized list of Linux troubleshooting interview questions designed to assess candidates at different experience levels, from basic to expert. It also includes a set of multiple-choice questions (MCQs) for quick evaluations.

These questions help you gauge a candidate's problem-solving and system administration abilities. To screen candidates faster before the interview, you can use Adaface's Linux online test.


Basic Linux Troubleshooting interview questions

1. If a program is running slowly, how would you investigate the cause?

To investigate a slow-running program, I'd start by gathering data. I'd use profiling tools (like perf on Linux or built-in profilers in languages like Python or Java) to identify the hotspots: functions or code blocks consuming the most CPU time. Alternatively, I might examine system resource utilization (CPU, memory, disk I/O, network I/O) using tools like top, htop, or iostat to see if there's a bottleneck outside the program's code itself. Debugging can also help by inspecting the state of variables and data structures at runtime to uncover inefficiencies in algorithms or data handling.

Next, I'd analyze the collected data. If profiling points to specific code, I'd examine the algorithm's complexity, consider optimizing data structures, or refactor inefficient code. If resource utilization is high, I'd investigate the source of the load and optimize accordingly (e.g., reduce memory consumption, optimize database queries, or improve network communication). For example, optimizing database queries with EXPLAIN or rewriting inefficient loops can be solutions. Understanding system design and the intended workload is important for identifying inefficiencies.
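For Python code, the standard library's cProfile and pstats modules produce this kind of hotspot report. The sketch below is illustrative only: slow_sum and profile_call are hypothetical names, and the profiled function is deliberately wasteful so that it stands out in the report.

```python
import cProfile
import io
import pstats


def slow_sum(n: int) -> int:
    # Deliberately wasteful: builds a full list before summing it.
    return sum([i * i for i in range(n)])


def profile_call(func, *args, top: int = 5) -> str:
    """Run func(*args) under cProfile and return the `top` entries
    sorted by cumulative time, as formatted by pstats."""
    profiler = cProfile.Profile()
    profiler.enable()
    func(*args)
    profiler.disable()
    buffer = io.StringIO()
    stats = pstats.Stats(profiler, stream=buffer)
    stats.sort_stats("cumulative").print_stats(top)
    return buffer.getvalue()


if __name__ == "__main__":
    print(profile_call(slow_sum, 1_000_000))
```

Without changing any code, python -m cProfile -s cumulative your_script.py prints a similar report for a whole script (your_script.py is a placeholder).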

2. How do you check the amount of free disk space on a Linux system?

To check the amount of free disk space on a Linux system, you can use the df command. The df command displays disk space usage information. A common usage is df -h, which shows the disk space in a human-readable format (e.g., KB, MB, GB).

Alternatively, the du command can be used to estimate file space usage. For example, du -sh will show the total disk usage of the current directory in human-readable format. To see the disk usage of a specific directory: du -sh /path/to/directory.
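To check free space from a script instead of parsing df output, Python's shutil.disk_usage returns the total, used, and free bytes for the filesystem that contains a given path. This is a minimal sketch; human_readable and free_space_report are hypothetical helpers, and their rounding will not match df -h exactly.

```python
import shutil


def human_readable(num_bytes: float) -> str:
    """Format a byte count with binary (1024-based) units, in the spirit of df -h."""
    for unit in ("B", "K", "M", "G", "T"):
        if num_bytes < 1024:
            return f"{num_bytes:.1f}{unit}"
        num_bytes /= 1024
    return f"{num_bytes:.1f}P"


def free_space_report(path: str = "/") -> str:
    """Summarize the filesystem that contains `path`."""
    usage = shutil.disk_usage(path)  # named tuple (total, used, free), in bytes
    return (
        f"{path}: size={human_readable(usage.total)} "
        f"used={human_readable(usage.used)} "
        f"free={human_readable(usage.free)}"
    )


if __name__ == "__main__":
    print(free_space_report("/"))
```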

3. Explain how to find a specific file on the entire system when you don't know its exact location.

To find a file when you don't know its exact location, you can use the find command in Unix-like systems. The basic syntax is find / -name "filename". This command starts searching from the root directory (/) and looks for files or directories matching "filename". Replace "filename" with the actual name (or a pattern using wildcards like *) you're searching for. For example, find / -name "*.txt" would find all .txt files. Be mindful that searching from root can take a long time, so narrowing the search path (e.g., find /home -name "*.txt") will significantly improve speed.

Alternatively, the locate command can be faster, but it relies on a pre-built database. Before using locate, update the database with updatedb (usually run with sudo). Then, locate filename will quickly search the database for matching filenames. Note that locate will not reflect file system changes made since the database was last updated. When you need up-to-date results, or need to search paths the database does not cover, use find instead.
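At its core, find / -name "*.txt" is a recursive directory walk plus a shell-style name match. As a rough Python sketch of that idea (find_by_name is a hypothetical helper that supports only -name style matching), os.walk and fnmatch can express the same search:

```python
import fnmatch
import os
from typing import Iterator


def find_by_name(root: str, pattern: str) -> Iterator[str]:
    """Yield paths under `root` whose final component matches a shell-style
    pattern, roughly like `find ROOT -name PATTERN` (root itself is not tested).

    os.walk silently skips directories it cannot read (no onerror callback
    is given), which is similar to running find with 2>/dev/null.
    """
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            # fnmatchcase is case-sensitive, like find -name.
            if fnmatch.fnmatchcase(name, pattern):
                yield os.path.join(dirpath, name)


if __name__ == "__main__":
    for match in find_by_name("/etc", "*.conf"):
        print(match)
```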

4. What command would you use to display the contents of a text file?

To display the contents of a text file, you would typically use the cat command.

For example, to view the contents of a file named my_file.txt, you would use the following command:

cat my_file.txt

Other commands such as less and more can also be used, especially for large files, as they allow for scrolling and paging through the content.

5. How can you determine the IP address of your Linux machine?

You can determine the IP address of your Linux machine using several commands. The most common are:

- ip addr or ip a: This command displays detailed network interface information, including IP addresses.
- ifconfig: (May not be installed by default on newer systems) Displays information about network interfaces. Look for the inet field to find the IP address.
- hostname -I: (Capital 'I') Prints the IP address(es) of the machine's interfaces on a single line, separated by spaces.

6. What is the purpose of the 'ping' command, and how do you use it?

The ping command is a network utility used to test the reachability of a host on an Internet Protocol (IP) network. It works by sending Internet Control Message Protocol (ICMP) echo request packets to the target host and listening for ICMP echo reply packets. Essentially, it verifies if a host is online and responsive.

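To use ping, type ping followed by the hostname or IP address of the target, for example ping google.com or ping 8.8.8.8. On Linux, ping keeps sending packets until you press Ctrl+C; add -c to limit the count (e.g., ping -c 4 8.8.8.8). The output shows the round-trip time for each packet, indicating network latency, followed by a packet-loss summary. A successful ping confirms network connectivity to the target, while a failed ping suggests a problem such as the host being down, network congestion, or a firewall. Keep in mind that some hosts and firewalls block ICMP entirely, so a failed ping does not always mean the host is unreachable.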

7. Describe how to check which processes are currently running on your system.

To check which processes are currently running on a system, you can use different commands depending on the operating system. On Linux and macOS, the ps command is commonly used. For example, ps aux displays a comprehensive list of processes with details like user, PID, CPU usage, and memory usage. Another command is top, which provides a real-time, dynamic view of running processes, sorted by CPU usage by default.

On Windows, you can use the tasklist command in the command prompt. It displays a list of currently running processes, including their PID and memory usage. Alternatively, the Task Manager (accessible by pressing Ctrl+Shift+Esc) provides a graphical interface to view and manage running processes.

8. If a program is not responding, what steps can you take to stop it?

If a program is not responding, the first step is usually to try closing it gracefully. This can often be done by clicking the 'X' button or selecting 'File' -> 'Exit' from the application's menu. Give the application a reasonable amount of time to respond before assuming it's truly frozen.

If the application remains unresponsive, the next step is to force quit it. On Windows, open the Task Manager (Ctrl+Shift+Esc), select the unresponsive program under the 'Processes' or 'Details' tab, and click 'End Task'. On macOS, use the Activity Monitor (found in Applications/Utilities) or the Force Quit Applications window (Command+Option+Esc) to select the application and force it to quit. On Linux, find the process ID (PID) with ps or top, then run kill <PID>, which sends SIGTERM and asks the program to exit cleanly. If that fails, kill -9 <PID> sends SIGKILL, which the program cannot catch or ignore. Use kill -9 only as a last resort, since it gives the program no chance to clean up.
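The escalation described above, SIGTERM first and SIGKILL only after a grace period, can be scripted. This is a Linux-only sketch under stated assumptions: is_running and stop_process are hypothetical helpers, the 5-second grace period is an arbitrary default, and /proc/<pid>/stat is read so that a zombie (a process that has exited but has not yet been reaped by its parent) counts as stopped.

```python
import os
import signal
import time


def is_running(pid: int) -> bool:
    """True if pid exists and is not a zombie (Linux-specific /proc check)."""
    try:
        with open(f"/proc/{pid}/stat") as handle:
            # The state letter follows the parenthesised command name.
            state = handle.read().rsplit(")", 1)[1].split()[0]
    except (OSError, IndexError):
        return False
    return state != "Z"


def stop_process(pid: int, grace_seconds: float = 5.0) -> str:
    """Send SIGTERM, wait up to grace_seconds, then fall back to SIGKILL.

    Returns "not running", "terminated", or "killed".
    """
    if not is_running(pid):
        return "not running"
    try:
        os.kill(pid, signal.SIGTERM)  # what `kill <PID>` sends
    except ProcessLookupError:
        return "terminated"
    deadline = time.monotonic() + grace_seconds
    while time.monotonic() < deadline:
        if not is_running(pid):
            return "terminated"
        time.sleep(0.1)
    try:
        os.kill(pid, signal.SIGKILL)  # what `kill -9 <PID>` sends; cannot be caught
    except ProcessLookupError:
        return "terminated"
    return "killed"
```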

9. How do you change the permissions of a file or directory?

To change the permissions of a file or directory in a Unix-like operating system (like Linux or macOS), you typically use the chmod command. chmod modifies file permissions using either symbolic or numeric (octal) modes. For example, chmod u+x myfile.txt adds execute permission for the user who owns the file, while chmod 755 mydirectory sets read, write, and execute permissions for the owner, and read and execute permissions for the group and others.

The related chown command changes a file's or directory's owner and group rather than its permissions (e.g., chown user:group myfile.txt).

10. What is the difference between 'sudo' and 'su' commands?

The su command (substitute user) switches the current shell's user identity. By default it switches to the root user, but you can specify another user, and it requires the target user's password. Running su - <username> (or su -l) starts a login shell, which loads the target user's environment and profile; plain su keeps most of the current environment. It's mainly used to work as another user for an extended session.

sudo (substitute user do) executes a single command as another user (typically root) without switching the shell's user identity. It authenticates with the invoking user's own password (or no password, depending on the sudo configuration). Because access is controlled by rules in the /etc/sudoers file, sudo is commonly used to grant limited administrative privileges, allowing users to run specific commands as root or another user without knowing the root password.

11. Explain how to create a new directory in Linux.

To create a new directory in Linux, you use the mkdir command followed by the name of the directory you want to create. For example, mkdir my_new_directory will create a directory named my_new_directory in your current working directory.

You can also create multiple directories at once using mkdir dir1 dir2 dir3. If you need to create nested directories (e.g., parent/child) and the parent directory doesn't exist, you can use the -p option: mkdir -p parent/child. This will create both the parent and child directories.

12. How can you copy a file from one directory to another?

You can copy a file from one directory to another using command-line tools or programming languages.

For command-line, you can use cp on Linux/macOS or copy on Windows. For example, in Linux/macOS, cp /path/to/source/file.txt /path/to/destination/. In Python, you can use the shutil module: import shutil; shutil.copy('/path/to/source/file.txt', '/path/to/destination/'). Remember to handle potential exceptions like FileNotFoundError.

13. What command is used to move or rename files and directories?

The mv command is used to move or rename files and directories.

For example, mv file1.txt file2.txt renames file1.txt to file2.txt. mv file.txt /path/to/new/directory/ moves file.txt to the specified directory.

14. How do you remove a file or directory from the command line?

To remove a file from the command line, use the rm command. For example, rm filename deletes the file named 'filename'. An empty directory can be removed with rmdir directoryname. For a directory with contents, use rm with the -r option, which stands for recursive and removes the directory and everything inside it. Adding -f (force) suppresses prompts and errors about missing files. For instance, rm -rf directoryname forcefully removes the directory named 'directoryname' and everything within it. Use rm -rf with caution: it asks for no confirmation, and the data does not go to a trash folder.

15. Describe how to view the last few lines of a log file.

To view the last few lines of a log file, you can use the tail command in most Unix-like operating systems (Linux, macOS, etc.). By default, tail displays the last 10 lines of a file.

To view a specific number of lines (e.g., the last 20 lines), use the -n option: tail -n 20 filename.log. If you want to follow the log file as it's being written to, use the -f option: tail -f filename.log. This will continuously display new lines as they are added to the file.
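tail -n is easy to mimic when a script needs the last lines of a log. In this minimal Python sketch (tail_lines is a hypothetical helper), a collections.deque with maxlen keeps only the most recent lines in memory. Unlike tail, which seeks backward from the end of a regular file, it reads the whole file once, so it is simpler but slower on very large logs.

```python
from collections import deque
from typing import List


def tail_lines(path: str, n: int = 10) -> List[str]:
    """Return the last n lines of a text file, like `tail -n N FILE`.

    The deque discards older lines as new ones arrive, so memory use
    stays bounded by n lines regardless of file size.
    """
    with open(path, "r", errors="replace") as handle:
        return list(deque(handle, maxlen=n))


if __name__ == "__main__":
    for line in tail_lines("/var/log/syslog", 20):
        print(line, end="")
```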

16. Explain how to redirect the output of a command to a file.

To redirect the output of a command to a file in a Unix-like environment (Linux, macOS), you can use the redirection operators. The most common operator is > which overwrites the file if it exists or creates it if it doesn't. For example, ls -l > file.txt redirects the output of the ls -l command to a file named file.txt.

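If you want to append the output to an existing file, use the >> operator. For instance, echo "Hello" >> file.txt appends the string "Hello" to file.txt. You can also redirect standard error using 2> (e.g., command 2> error.log), and redirect both standard output and standard error using &> (supported by bash but not by POSIX sh) or 2>&1 (e.g., command &> output.log or command > output.log 2>&1). Order matters with 2>&1: it must come after the > redirection, because it points standard error at wherever standard output points at that moment.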
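When a program launches commands itself, these redirections have direct equivalents. In Python's subprocess module, passing an open file as stdout and subprocess.STDOUT as stderr mirrors command > file 2>&1, and opening the file in append mode mirrors >>. The helper run_to_log is a hypothetical name used for illustration.

```python
import subprocess
from typing import List


def run_to_log(cmd: List[str], log_path: str, append: bool = False) -> int:
    """Run cmd with stdout and stderr both written to log_path.

    Equivalent to `cmd > log 2>&1`, or `cmd >> log 2>&1` when append=True.
    Returns the command's exit status.
    """
    mode = "a" if append else "w"
    with open(log_path, mode) as log:
        completed = subprocess.run(cmd, stdout=log, stderr=subprocess.STDOUT)
    return completed.returncode


if __name__ == "__main__":
    status = run_to_log(["ls", "-l", "/no/such/dir"], "output.log")
    print("exit status:", status)
```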

17. How do you search for a specific text string within a file?

The most common way to search for a specific text string within a file is with command-line tools like grep (on Unix-like systems) or findstr (on Windows). With grep, you'd use grep "your_string" filename.txt; the findstr equivalent is findstr "your_string" filename.txt. Both commands print every line that contains the specified string.

Alternatively, text editors like VS Code, Sublime Text, or Notepad++ offer powerful search using Ctrl+F (or Cmd+F on macOS), with options for case-insensitivity, whole-word matching, and regular expressions. Programming languages also provide file reading and string searching; in Python, for example, you can open the file in a with block, loop over its lines, and print each line for which "your_string" in line is true.
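Expanding on the Python approach, a grep -n style search with optional case-insensitivity takes only a few lines. This sketch uses a hypothetical helper named grep_file; note that Python's re syntax differs in places from the basic regular expressions grep uses by default.

```python
import re
from typing import Iterator, Tuple


def grep_file(path: str, pattern: str, ignore_case: bool = False) -> Iterator[Tuple[int, str]]:
    """Yield (line_number, line) for lines matching a regular expression,
    roughly like `grep -n PATTERN FILE` (or `grep -in` when ignore_case=True)."""
    flags = re.IGNORECASE if ignore_case else 0
    regex = re.compile(pattern, flags)
    with open(path, "r", errors="replace") as handle:
        for number, line in enumerate(handle, start=1):
            if regex.search(line):
                yield number, line.rstrip("\n")


if __name__ == "__main__":
    for number, line in grep_file("filename.txt", "your_string"):
        print(f"{number}:{line}")
```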

18. What is the purpose of the 'chmod' command, and how does it work?

The chmod command is used to change the permissions of files or directories in Unix-like operating systems. It controls who can read, write, and execute a file. Permissions are defined for three classes of users: the owner of the file, the group associated with the file, and others (all other users).

chmod works by modifying the file's mode bits. These bits represent the read (r), write (w), and execute (x) permissions for each user class. It can be used in two ways: symbolic mode (e.g., chmod u+x file.txt adds execute permission for the owner) or octal mode (e.g., chmod 755 file.txt sets read/write/execute for the owner, and read/execute for group and others). Symbolic mode is often easier to understand, while octal mode is more concise for setting multiple permissions simultaneously.
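The octal digits map directly onto the rwx bits: each digit is the sum of read (4), write (2), and execute (1) for one user class, so 7 is rwx and 5 is r-x. The Python sketch below makes that mapping explicit and performs a symbolic-style change; mode_to_rwx and add_owner_execute are hypothetical helpers, and the special bits (setuid, setgid, sticky) are ignored.

```python
import os
import stat


def mode_to_rwx(mode: int) -> str:
    """Convert the permission bits of a mode such as 0o755 into the
    nine-character rwx string ls -l shows (without the file-type character)."""
    symbols = ""
    for shift in (6, 3, 0):  # owner, group, others
        bits = (mode >> shift) & 0o7
        symbols += "r" if bits & 0o4 else "-"
        symbols += "w" if bits & 0o2 else "-"
        symbols += "x" if bits & 0o1 else "-"
    return symbols


def add_owner_execute(path: str) -> None:
    """Python equivalent of `chmod u+x PATH`: keep existing bits, add owner execute."""
    current = stat.S_IMODE(os.stat(path).st_mode)
    os.chmod(path, current | stat.S_IXUSR)


if __name__ == "__main__":
    print(mode_to_rwx(0o755))  # rwxr-xr-x
```

The standard library's stat.filemode() performs the same conversion, including the leading file-type character.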

19. How can you find out which user is currently logged in?

The method for determining the currently logged-in user depends on the context (operating system, application framework, etc.).

- Operating System (Linux/Unix): Use the whoami command in the terminal, or examine environment variables like $USER or $LOGNAME. To list every user with an active login session, use who or w.
- Operating System (Windows): Use the echo %USERNAME% command in the command prompt, or examine the USERNAME environment variable.
- Web Application (Python/Flask): Access the user object from the session, e.g., session['user'] (assuming user data is stored in the session upon login).
- Web Application (JavaScript/Browser): Rely on server-side logic to expose the user information, often stored in a cookie or session. This data can then be accessed using document.cookie or by making an API call to retrieve user information.

20. Explain how to list all files and directories in the current directory, including hidden ones.

To list all files and directories (including hidden ones) in the current directory, you can use the ls command with the -a flag in Unix-like systems (Linux, macOS).

ls -a

The -a option tells ls to include all entries, even those starting with a . (dot), which are typically hidden. The output will then display all files and directories in the current location.

21. If your internet connection is not working, what are some basic troubleshooting steps you would take in Linux?

First, I'd check the physical connection: ensuring the Ethernet cable is properly plugged in or that Wi-Fi is enabled. Next, I'd check the network configuration with ip addr to confirm that an IP address has been assigned, and with ip route to find the default gateway. Then I'd use ping to test connectivity to the gateway and to a public IP address such as 8.8.8.8. If the ping to the gateway fails, the issue is likely local; if it succeeds but the public address is unreachable, the problem is likely upstream. If 8.8.8.8 responds but hostnames do not, the problem is DNS. If DHCP is used, restarting the interface can help: sudo ifdown <interface> followed by sudo ifup <interface> on systems that use ifupdown, or nmcli connection down <name> followed by nmcli connection up <name> with NetworkManager. Finally, checking the NetworkManager status (systemctl status NetworkManager) can reveal issues with the network service itself.

22. How can you determine the amount of RAM installed on a Linux system?

You can determine the amount of RAM installed on a Linux system using several commands. The most common and easiest to remember is free -h, which displays the total, used, and free amount of RAM in a human-readable format (for example, in Mi or Gi units). Another option is cat /proc/meminfo, which shows much more detailed memory information, including MemTotal: the total usable RAM, which is slightly less than the physically installed amount because the kernel reserves some memory for itself. You can also use vmstat -s to get a summary of various system statistics, including total memory.
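/proc/meminfo is plain text in the form Key: value kB, so MemTotal is easy to read from a script. A minimal sketch, with parse_meminfo and total_ram_gib as hypothetical helpers; the kernel's "kB" unit here means 1024 bytes.

```python
from typing import Dict


def parse_meminfo(text: str) -> Dict[str, int]:
    """Parse /proc/meminfo content into {field: value}.

    Most lines look like 'MemTotal:       16318480 kB' (value in kB);
    a few, such as HugePages_Total, are plain counts with no unit.
    """
    info = {}
    for line in text.splitlines():
        key, _, rest = line.partition(":")
        parts = rest.split()
        if parts:
            info[key.strip()] = int(parts[0])
    return info


def total_ram_gib(path: str = "/proc/meminfo") -> float:
    """Return MemTotal converted from kB to GiB."""
    with open(path) as handle:
        kib = parse_meminfo(handle.read())["MemTotal"]
    return kib / (1024 * 1024)


if __name__ == "__main__":
    print(f"Usable RAM: {total_ram_gib():.1f} GiB")
```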

23. What command do you use to shutdown or reboot a Linux system from the command line?

To shut down a Linux system from the command line, use the shutdown command; for example, sudo shutdown now initiates an immediate shutdown. To reboot, use sudo reboot or sudo shutdown -r now.

Alternatively, you could use systemctl poweroff to shut down or systemctl reboot to reboot the system. These commands interact with systemd, the system and service manager for Linux, and are generally preferred on systems that use systemd.

24. Explain how to compress a file or directory using the 'tar' command.

To compress a file or directory using the tar command, you typically combine it with a compression algorithm like gzip or bzip2. For example, to create a gzipped tar archive, you can use the -czvf options: tar -czvf archive_name.tar.gz directory_or_file. This command creates an archive named archive_name.tar.gz of the specified directory_or_file using gzip compression.

For bzip2 compression, use -cjvf: tar -cjvf archive_name.tar.bz2 directory_or_file. In these options, c creates an archive, z uses gzip, j uses bzip2, v is verbose (shows files being processed), and f specifies the archive file name, so f should come last in the option cluster. Replace archive_name and directory_or_file with the desired archive name and the path to what you want to compress; a trailing slash on a directory name is not required.
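Python's tarfile module can produce the same kind of archive as tar -czf, which is handy when archiving from inside a script. In this sketch, make_targz is a hypothetical helper; mode "w:gz" selects gzip, and "w:bz2" would select bzip2 instead. Normalizing the path also shows that a trailing slash on the directory name makes no difference.

```python
import os
import tarfile
from typing import List


def make_targz(archive_path: str, source: str) -> List[str]:
    """Create a gzip-compressed tar archive, like `tar -czf ARCHIVE SOURCE`,
    and return the member names stored in it (similar to `tar -tzf ARCHIVE`)."""
    # normpath strips a trailing slash, so "proj/" and "proj" give the same name.
    top_name = os.path.basename(os.path.normpath(source))
    with tarfile.open(archive_path, "w:gz") as tar:
        # arcname stores relative member paths instead of the full source path;
        # directories are added recursively by default.
        tar.add(source, arcname=top_name)
    with tarfile.open(archive_path, "r:gz") as tar:
        return tar.getnames()


if __name__ == "__main__":
    print(make_targz("archive_name.tar.gz", "directory_or_file"))
```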

25. How do you extract files from a '.tar.gz' archive?

To extract files from a .tar.gz archive, you can use the tar command with the following options:

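tar -xzvf archive_name.tar.gz

Where:

- -x stands for extract.
- -z indicates that the archive is compressed with gzip.
- -v means verbose, listing the files being extracted.
- -f specifies the archive file name. Because -f takes the next argument as the file name, keep it last in the option cluster; tar -xzfv archive_name.tar.gz would treat "v" as the archive name.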

26. If you accidentally delete a file, what steps might you take to try and recover it?

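If I accidentally delete a file, the first thing I would do is check the Recycle Bin (Windows) or Trash (macOS and Linux desktop environments). Files deleted through a graphical file manager are usually moved there rather than permanently deleted, and can simply be restored. On Linux, however, files removed with rm on the command line bypass the Trash entirely.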

If the file isn't in the Recycle Bin/Trash, or if I emptied it recently, I would consider using data recovery software. These tools scan the hard drive for deleted files and attempt to recover them. Some popular options include Recuva (Windows) or TestDisk (cross-platform). It's important to stop using the drive as much as possible after realizing the file is deleted to prevent it from being overwritten. Finally, if a backup solution such as Time Machine (macOS) or a cloud backup service was in place, I would restore the file from the latest backup.

Intermediate Linux Troubleshooting interview questions

1. How would you diagnose a situation where a specific application is consuming excessive CPU resources on a Linux server?

First, I'd use top or htop, which give a real-time view of system processes, to identify the process consuming the most CPU. If it's indeed the application in question, I'd use pidstat -p <process_id> 1 to get a more detailed CPU usage breakdown over time. Next, I'd identify the specific threads causing the high CPU usage with ps -Lp <process_id> -o pid,tid,%cpu,%mem,cmd, which lists each thread along with its CPU and memory usage. After identifying the problematic thread, I'd use jstack <process_id> (for a Java application; jstack shows native thread IDs in hexadecimal as nid=0x..., so convert the TID to match) or gdb -p <process_id> (for native applications) to get stack traces and see which code is actively running. Analyzing the stack traces should reveal the root cause, such as a tight loop, an inefficient algorithm, or a busy-wait polling loop. I would also check the application logs for errors or warnings that might explain the high CPU usage. Finally, I'd check for scheduled tasks or cron jobs related to the application, and for external factors such as a surge in network requests, that could contribute to the problem.
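Tools like top compute a process's CPU percentage from the utime and stime counters in /proc/<pid>/stat, sampled twice. Below is a Linux-only sketch of that calculation, with cpu_ticks and cpu_percent as hypothetical helpers. Per-thread files under /proc/<pid>/task/<tid>/stat use the same format, so the same parser works for individual threads.

```python
import os
import time
from typing import Tuple


def cpu_ticks(stat_line: str) -> Tuple[int, int]:
    """Extract (utime, stime) in clock ticks from a /proc/<pid>/stat line.

    The command name (field 2) is wrapped in parentheses and may contain
    spaces or ')', so split after the last ')'. utime and stime are fields
    14 and 15 in proc(5) numbering, i.e. indexes 11 and 12 after the split.
    """
    after_comm = stat_line.rsplit(")", 1)[1].split()
    return int(after_comm[11]), int(after_comm[12])


def cpu_percent(pid: int, interval: float = 1.0) -> float:
    """Sample a process twice and return its CPU usage over the interval,
    where 100.0 means one fully busy core (the convention top uses)."""
    def read_total() -> int:
        with open(f"/proc/{pid}/stat") as handle:
            utime, stime = cpu_ticks(handle.read())
        return utime + stime

    ticks_per_second = os.sysconf("SC_CLK_TCK")
    before = read_total()
    time.sleep(interval)
    after = read_total()
    return (after - before) / ticks_per_second / interval * 100


if __name__ == "__main__":
    print(f"{cpu_percent(os.getpid()):.1f}%")
```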

2. Describe the steps you'd take to troubleshoot a network connectivity issue where a Linux server cannot reach an external website.

First, I'd verify the basic network configuration on the server using ip addr, route -n (or ip route), and cat /etc/resolv.conf to check the IP address, gateway, and DNS settings. Next, I'd use ping to test connectivity to the gateway and to an external IP address such as 8.8.8.8. If pinging the gateway fails, the problem is likely on the local network or in the server's configuration. If the gateway responds but 8.8.8.8 does not, the issue lies upstream: a routing problem or a firewall blocking outbound traffic (DNS is not involved here, because the target is an IP address). I'd then use traceroute or tracepath to see where packets stop along the path to the external website, and nslookup or dig to confirm that the website's hostname resolves to a valid IP address. If DNS resolution works but traffic stops at a particular hop, I'd review the firewall rules on the server and on any network firewalls to ensure outbound traffic on ports 80/443 is allowed. I'd also use tcpdump or Wireshark to capture and analyze the packets being sent and received to further isolate the issue.
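Part of this triage can be scripted: a TCP connection attempt separates a name-resolution failure from a connection that times out (often dropped by a firewall) or is actively refused. A minimal Python sketch; check_tcp is a hypothetical helper and the 3-second timeout is an arbitrary choice.

```python
import socket


def check_tcp(host: str, port: int = 443, timeout: float = 3.0) -> str:
    """Classify a TCP connection attempt, separating DNS failures from
    network or firewall failures."""
    try:
        addresses = socket.getaddrinfo(host, port, type=socket.SOCK_STREAM)
    except socket.gaierror as exc:
        return f"dns failure: {exc}"
    last_error = None
    # Try each resolved address (IPv4 and/or IPv6) until one connects.
    for family, socktype, proto, _, sockaddr in addresses:
        try:
            with socket.socket(family, socktype, proto) as sock:
                sock.settimeout(timeout)
                sock.connect(sockaddr)
                return f"ok: connected to {sockaddr[0]}:{sockaddr[1]}"
        except socket.timeout:
            last_error = "timeout (packets dropped; often a firewall or routing issue)"
        except ConnectionRefusedError:
            last_error = "refused (host reachable, but nothing accepted the connection)"
        except OSError as exc:
            last_error = f"error: {exc}"
    return last_error or "no addresses returned"


if __name__ == "__main__":
    print(check_tcp("example.com", 443))
```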

3. Explain how you would identify and resolve a situation where a Linux server is running out of disk space.

First, I'd use df -h to identify which partitions are nearing full capacity. Then, du -hsx /* | sort -rh | head -10 would help pinpoint the largest directories consuming space within the problematic partition. From there, I'd investigate those directories to determine the cause – it could be excessive logs, large temporary files, or unexpectedly large user data. Once identified, I'd take appropriate action, such as deleting unnecessary files, compressing logs, moving data to another storage location, or increasing the partition size if feasible.
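The du | sort | head pipeline can also be approximated in Python when the result is needed inside a monitoring script. This sketch (tree_size and largest_entries are hypothetical helpers) sums apparent file sizes and skips other mounted filesystems, much like du -x. Because du counts allocated disk blocks and counts hard-linked files only once, its numbers can differ.

```python
import os
from typing import List, Tuple


def tree_size(path: str, device: int) -> int:
    """Sum apparent file sizes under path without crossing onto other
    filesystems, similar in spirit to `du -sx --apparent-size`."""
    total = 0
    for dirpath, dirnames, filenames in os.walk(path):
        kept = []
        for name in dirnames:
            try:
                if os.lstat(os.path.join(dirpath, name)).st_dev == device:
                    kept.append(name)
            except OSError:
                pass  # vanished or unreadable; skip it
        dirnames[:] = kept  # prune mount points so os.walk stays on one filesystem
        for name in filenames:
            try:
                total += os.lstat(os.path.join(dirpath, name)).st_size
            except OSError:
                pass
    return total


def largest_entries(parent: str, top: int = 10) -> List[Tuple[int, str]]:
    """Return (size_in_bytes, path) for the `top` biggest entries directly
    under parent, largest first, like `du -sx PARENT/* | sort -rn | head`."""
    device = os.lstat(parent).st_dev
    sizes = []
    with os.scandir(parent) as entries:
        for entry in entries:
            try:
                st = entry.stat(follow_symlinks=False)
            except OSError:
                continue
            if st.st_dev != device:
                continue  # another filesystem is mounted here
            if entry.is_dir(follow_symlinks=False):
                size = tree_size(entry.path, device)
            else:
                size = st.st_size
            sizes.append((size, entry.path))
    return sorted(sizes, reverse=True)[:top]


if __name__ == "__main__":
    for size, path in largest_entries("/var"):
        print(f"{size:>15,}  {path}")
```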

To prevent recurrence, I'd implement monitoring and alerting for disk space usage. Tools like Nagios, Zabbix, or even simple shell scripts with cron jobs can be used to trigger alerts when disk space reaches a predefined threshold. Log rotation policies should also be configured appropriately to prevent excessive log file growth.

4. Walk me through the process of diagnosing a slow performing database query on a Linux server hosting a database.

Diagnosing a slow database query involves a systematic approach. First, identify the slow query. Use database-specific tools like slow query logs (slow_query_log in MySQL, auto_explain in PostgreSQL) or performance monitoring tools to pinpoint the problematic query. Once identified, use the database's EXPLAIN command (e.g., EXPLAIN SELECT ... in MySQL/PostgreSQL) to understand the query execution plan. This reveals how the database is accessing tables, using indexes, and performing joins. Look for full table scans, missing indexes, or inefficient join strategies.

Next, analyze the Linux server itself. Use tools like top, htop, iostat, and vmstat to monitor CPU usage, memory utilization, disk I/O, and network activity. High CPU usage might indicate inefficient query processing, while high disk I/O could point to slow data retrieval. Insufficient memory can lead to swapping, further slowing down performance. Examine the network latency between the application server and the database server as network issues can manifest as perceived database slowness. Based on these observations, optimize the query (e.g., add indexes, rewrite the query), tune database configuration parameters (e.g., shared_buffers in PostgreSQL), or upgrade server resources (CPU, memory, disk).

5. How would you troubleshoot a scenario where a user is unable to log into a Linux server using SSH?

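First, verify basic connectivity using ping <server_ip>. If ping fails, troubleshoot network issues (firewall, routing, DNS). If ping succeeds, check the SSH server status with systemctl status sshd (the unit is named ssh on Debian and Ubuntu). Look for errors in the logs (/var/log/auth.log or /var/log/secure) that point to authentication failures, such as incorrect passwords, key issues, or account lockouts, and ensure the user exists locally or via the configured authentication source (e.g., LDAP). On the client side, ssh -v user@server prints debugging output showing which authentication methods were tried and where the exchange failed. For key-based logins, also check permissions: by default, sshd refuses a key if the user's ~/.ssh directory or authorized_keys file is writable by the group or by other users.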
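To summarize failed attempts in /var/log/auth.log or /var/log/secure, a short script can count them by user and source address. This sketch assumes the common OpenSSH message wording shown in the comments, which can vary between versions and configurations; failed_logins is a hypothetical helper.

```python
import re
from collections import Counter
from typing import Iterable

# Assumed OpenSSH log formats, for example:
#   sshd[812]: Failed password for alice from 198.51.100.7 port 51234 ssh2
#   sshd[812]: Failed password for invalid user admin from 203.0.113.5 port 22 ssh2
FAILED = re.compile(r"Failed (?:password|publickey) for (?:invalid user )?(\S+) from (\S+)")


def failed_logins(lines: Iterable[str]) -> Counter:
    """Count failed SSH authentication attempts per (user, source address)."""
    counts = Counter()
    for line in lines:
        match = FAILED.search(line)
        if match:
            counts[(match.group(1), match.group(2))] += 1
    return counts


if __name__ == "__main__":
    with open("/var/log/auth.log") as log:  # reading it usually requires root
        for (user, address), count in failed_logins(log).most_common(5):
            print(f"{count:5} {user} from {address}")
```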

6. Describe the steps you'd take to identify and fix a problem where a critical system service fails to start automatically on boot.

First, I'd check the system logs (journalctl on Linux, or the Event Viewer on Windows) for error messages related to the service, focusing on messages logged around boot time; on systemd systems, journalctl -b -u <service-name> shows the service's messages from the current boot. These logs can pinpoint the reason for the failure, such as missing dependencies, incorrect configuration, or file permission issues. I would also try starting the service manually (e.g., systemctl start <service-name>), which can expose error messages that don't appear during the automatic boot process.

Next, I'd examine the service's configuration file for errors and verify that its dependencies are correctly installed and configured. I'd also confirm the service is enabled to start on boot using systemctl is-enabled <service-name> (on systemd systems), and enable it with systemctl enable <service-name> if needed. If dependencies are the issue, I'd ensure they start before the critical service (in a systemd unit, through directives such as After=, Requires=, or Wants=). After implementing any fixes, I'd reboot the system to confirm the service starts automatically as expected. If it still fails, I'd repeat the process, checking whether the applied fix introduced other issues.

7. Explain how you would diagnose and resolve a situation where a Linux server is experiencing high memory usage.

To diagnose high memory usage on a Linux server, I'd start with free -m for a quick overview of total, used, free, shared, buff/cache, and available memory. The available column matters most, since Linux uses otherwise idle memory for the page cache and reclaims it when applications need it. Then I'd use top or htop to identify the processes consuming the most memory, paying close attention to the RES (resident memory) and VIRT (virtual memory) columns. I might also use vmstat 1 to observe memory and swap statistics in real time.

Once I've identified the culprit processes, I'd investigate further. If it's a Java application, I'd analyze heap dumps. For other processes, I'd use pmap -x <pid> to examine the process's memory map and identify potential memory leaks or inefficient memory usage. Solutions could range from restarting the problematic service, optimizing application code (e.g., fixing memory leaks), increasing swap space (as a temporary measure), or upgrading the server's RAM.
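To rank processes by resident memory from a script, you can read VmRSS from each /proc/<pid>/status file. This is a Linux-only sketch with hypothetical helpers parse_vmrss and top_memory. Keep in mind that RSS counts shared pages in every process that maps them, so summing RSS across processes overstates total usage.

```python
import os
from typing import List, Optional, Tuple


def parse_vmrss(status_text: str) -> Optional[int]:
    """Return VmRSS in kB from /proc/<pid>/status text, or None
    (kernel threads have no VmRSS line)."""
    for line in status_text.splitlines():
        if line.startswith("VmRSS:"):
            return int(line.split()[1])
    return None


def top_memory(limit: int = 5) -> List[Tuple[int, int, str]]:
    """Return (rss_kb, pid, name) for the processes with the largest resident
    set size, roughly the RES column in top. Linux-only."""
    rows = []
    for entry in os.listdir("/proc"):
        if not entry.isdigit():
            continue
        try:
            with open(f"/proc/{entry}/status") as handle:
                text = handle.read()
        except OSError:
            continue  # process exited or is not readable
        rss = parse_vmrss(text)
        if rss is None:
            continue
        name = next((line.split(":", 1)[1].strip()
                     for line in text.splitlines() if line.startswith("Name:")), "?")
        rows.append((rss, int(entry), name))
    return sorted(rows, reverse=True)[:limit]


if __name__ == "__main__":
    for rss_kb, pid, name in top_memory():
        print(f"{rss_kb:>10} kB  {pid:>7}  {name}")
```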

8. How would you troubleshoot a scenario where a file is unexpectedly being modified or deleted on a Linux server?

To troubleshoot unexpected file modifications or deletions on a Linux server, I'd start by checking the system logs (/var/log/syslog, /var/log/auth.log, and /var/log/audit/audit.log if auditd is enabled) for clues about the user or process responsible. I'd use auditd to monitor access, modification, and deletion attempts on the affected files or directories; for example, auditctl -w /path/to/file -p wa -k file_watch records writes and attribute changes, and ausearch -k file_watch retrieves the matching events. stat shows the file's last access, modification, and change times, ls -l shows its owner and permissions, and lsof lists any processes that currently hold it open.

Additionally, I'd investigate cron jobs and scheduled tasks for any unexpected scripts or commands that might be modifying or deleting the file. Network shares and remotely mounted filesystems should also be investigated, as changes could be originating from another system. Regularly backing up critical files can mitigate data loss while troubleshooting.

9. Describe the steps you'd take to diagnose a problem where a Linux server is experiencing intermittent network outages.

To diagnose intermittent network outages on a Linux server, I'd start by gathering information. First, I'd check the system logs (/var/log/syslog, /var/log/kern.log, /var/log/messages) for any network-related errors or warnings around the time of the outages. I'd also use tools like ping and traceroute to test connectivity to external resources and identify where the connection is failing. tcpdump or wireshark can be used to capture network traffic and analyze packets for anomalies.

Next, I'd examine the server's network configuration: the interface settings (ifconfig or ip addr), the routing table (route -n), and the DNS configuration (/etc/resolv.conf). ip -s link shows per-interface error and drop counters, which can point to a faulty cable, NIC, or switch port. It's also crucial to check for resource exhaustion (CPU, memory, disk I/O) using tools like top, vmstat, and iostat, as high load can sometimes manifest as network issues. I'd check the firewall rules (iptables -L or nft list ruleset) to make sure no rules are blocking traffic unexpectedly. Finally, I'd analyze switch and router logs for issues that affect the server.

10. Explain how you would identify and resolve a situation where a particular user is experiencing slow performance on a Linux server.

To identify and resolve slow performance for a specific user on a Linux server, I'd start by checking resource utilization. I would use tools like top, htop, or ps to monitor CPU, memory, and I/O usage specifically attributed to that user's processes. iotop could help isolate I/O bottlenecks. Commands like ps -u <username> -o %cpu,%mem,pid,comm show resource consumption by a specific user. If resource exhaustion is the issue, I'd investigate which processes are consuming the most resources and consider options like optimizing the application, limiting resource usage (using ulimit), or adding more resources to the server.

If resources aren't the primary bottleneck, I'd investigate network latency. ping and traceroute can help identify network issues. I'd also check disk I/O using iostat and look for signs of slow disk performance or disk contention. If the user is accessing a database, I'd examine the database logs and query performance. Another key thing to check would be the user's processes for any deadlocks, infinite loops or extensive blocking calls. Finally, review relevant application logs for user-specific errors or warnings that might provide clues.

11. Walk me through the process of diagnosing a situation where a scheduled cron job is not executing as expected.

When a cron job fails, I start by verifying its configuration with crontab -l (run as the user who owns the job, or crontab -u <user> -l as root) to ensure the schedule and command are as expected, and I check /etc/crontab and /etc/cron.d/ for system-wide jobs. I then check the system logs (/var/log/syslog or /var/log/cron) for error messages related to the job, specifically failures, permission issues, or command not found errors. I also verify that the script has execute permission.

Next, I ensure the cron daemon is running with systemctl status cron (the unit is named crond on RHEL-based systems) and start it with systemctl start cron if it isn't. I also run the script manually as the user the job is configured to run as, keeping in mind that cron uses a much more minimal environment, including a short PATH, than an interactive shell does. I make sure any necessary environment variables are set within the crontab or the script itself, and I redirect the script's output to a file with > /path/to/logfile 2>&1 in the crontab entry to capture any errors. Finally, I check for unescaped % characters in the crontab command, since cron converts them into newlines.
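Running a job by hand often succeeds only because an interactive shell has a richer environment than cron. The sketch below reruns a crontab command under /bin/sh with a deliberately minimal environment. The variables are an assumption based on Vixie-cron-derived implementations such as Debian's, whose crontab(5) documents SHELL=/bin/sh and PATH=/usr/bin:/bin, with HOME taken from /etc/passwd; run_like_cron and its /tmp default for HOME are hypothetical stand-ins.

```python
import subprocess

# Assumption: approximates the environment many cron daemons give a job.
# Check your own cron's documentation, since distributions differ.
CRON_LIKE_ENV = {"PATH": "/usr/bin:/bin", "SHELL": "/bin/sh"}


def run_like_cron(command: str, home: str = "/tmp") -> subprocess.CompletedProcess:
    """Run a crontab command line with /bin/sh and a minimal environment,
    capturing output so 'command not found' and similar errors are visible."""
    env = dict(CRON_LIKE_ENV, HOME=home)
    return subprocess.run(["/bin/sh", "-c", command], env=env,
                          capture_output=True, text=True)


if __name__ == "__main__":
    result = run_like_cron("/path/to/script.sh")  # placeholder path
    print("exit status:", result.returncode)
    print(result.stderr)
```

An exit status of 127 means the shell could not find the command, which usually points to a PATH difference.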

12. How would you troubleshoot a scenario where a Linux server is unable to resolve domain names?

First, I'd check the /etc/resolv.conf file to ensure that the nameserver entries are correct and pointing to valid DNS servers. I'd also use ping and traceroute to verify network connectivity to those DNS servers. Then, I would use nslookup or dig to query those nameservers directly for a known domain like google.com to isolate whether the problem is with DNS resolution specifically, and not a general network connectivity issue. I'd also verify that the /etc/nsswitch.conf file has 'dns' listed for hostname resolution. Another thing I would verify is that a local DNS resolver like systemd-resolved or dnsmasq is properly configured and running, if one is intended to be used. Finally, I'd review firewall rules to make sure DNS traffic (port 53, both TCP and UDP) isn't being blocked.
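Two checks from this answer are easy to script: listing the nameservers configured in resolv.conf, and resolving a name through the system resolver. socket.getaddrinfo follows /etc/nsswitch.conf (as getent hosts does), whereas dig and nslookup query DNS servers directly, so comparing the two helps separate local resolver configuration problems from DNS server problems. nameservers and resolves are hypothetical helpers.

```python
import socket
from typing import List


def nameservers(resolv_conf_text: str) -> List[str]:
    """Extract nameserver addresses from resolv.conf content.
    Lines starting with '#' or ';' are comments."""
    servers = []
    for line in resolv_conf_text.splitlines():
        stripped = line.strip()
        if not stripped or stripped[0] in "#;":
            continue
        parts = stripped.split()
        if parts[0] == "nameserver" and len(parts) >= 2:
            servers.append(parts[1])
    return servers


def resolves(hostname: str) -> bool:
    """True if the system resolver (which follows /etc/nsswitch.conf)
    returns at least one address for hostname."""
    try:
        return bool(socket.getaddrinfo(hostname, None))
    except socket.gaierror:
        return False


if __name__ == "__main__":
    with open("/etc/resolv.conf") as handle:
        print("nameservers:", nameservers(handle.read()))
    print("google.com resolves:", resolves("google.com"))
```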

13. Describe the steps you'd take to identify and fix a problem where a custom application is crashing frequently on a Linux server.

First, I'd gather information. I'd check system logs (/var/log/syslog, /var/log/messages, application-specific logs) for error messages, stack traces, and timestamps related to the crashes. I'd also use tools like top, htop, or vmstat to monitor CPU, memory, and I/O usage, looking for spikes or resource exhaustion leading up to a crash. dmesg can reveal kernel-level evidence, such as segfault reports or the OOM killer terminating the process.

Resource limits can also be the root cause. ulimit -a shows the limits of the current shell, while /proc/<pid>/limits shows the limits actually applied to the running application.

Next, based on the logs and system metrics, I'd try to pinpoint the cause. If there's a stack trace, I'd analyze it to identify the problematic code. I might use tools like gdb to attach to the running process and examine its state. If it seems like resource exhaustion, I'd investigate memory leaks or inefficient resource usage in the application code. I would also review recent code changes or updates to the application or the server environment, as these are often sources of new issues. After identifying the cause, I'd implement a fix, test it thoroughly in a staging environment, and then deploy it to production while monitoring the system for any further crashes.
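To make the dmesg check concrete, here is a Python sketch that scans kernel log text for two common signs that the kernel, rather than the application, ended the process: a segfault report and an OOM-killer message. The function name is hypothetical.

The patterns cover common message forms only; exact wording differs between kernel versions. Kernel messages also truncate the process name to 15 characters, so a long name must be truncated the same way before matching.

```python
import re

# Common forms of kernel messages; wording varies across kernel versions.
SEGFAULT_RE = re.compile(r"(?P<comm>\S+)\[(?P<pid>\d+)\]: segfault at (?P<addr>[0-9a-f]+)")
OOM_RE = re.compile(r"Out of memory: Kill(?:ed)? process (?P<pid>\d+) \((?P<comm>[^)]+)\)")


def crash_evidence(dmesg_text, program):
    """Return (kind, pid, line) tuples for kernel messages about `program`."""
    found = []
    for line in dmesg_text.splitlines():
        for kind, pattern in (("segfault", SEGFAULT_RE), ("oom-kill", OOM_RE)):
            m = pattern.search(line)
            if m and m.group("comm") == program:
                found.append((kind, int(m.group("pid")), line.strip()))
    return found
```

Feeding it the output of dmesg (or journalctl -k) and correlating the reported PIDs and timestamps with the application's crash times shows whether a crash was a memory error in the code or a kill by the kernel.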

14. Explain how you would diagnose and resolve a situation where a Linux server is experiencing a sudden increase in I/O wait.

To diagnose high I/O wait on a Linux server, I'd start with top or htop to confirm a high wa (I/O wait) percentage. Next, iostat -xz 1 provides detailed per-device statistics, showing which disks have high utilization, await times, and queue lengths.

To attribute the I/O to specific processes, I'd use iotop or pidstat -d 1. I'd also look for processes stuck in the D (uninterruptible sleep) state, which usually means they are waiting on I/O. Finally, I'd run vmstat 1 to check system-wide memory usage and paging activity, since excessive swapping can itself cause high I/O.

The fix depends on the root cause:

- If a specific process is causing high I/O, I'd investigate and optimize its I/O operations, for example by reducing the frequency or size of writes, using asynchronous I/O, or optimizing database queries.
- If the disk itself is the bottleneck, I'd consider upgrading to faster storage (e.g., SSDs), adding RAM to reduce swapping, or implementing caching.
- If the I/O goes to a network file system, the fix may lie in the network connection or the NFS server rather than the local machine.
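The wa figure that top reports comes from the kernel's CPU counters in /proc/stat. This Python sketch shows how it is derived from two snapshots; the function names are hypothetical.

It assumes the standard field order of the aggregate cpu line: user, nice, system, idle, iowait, irq, softirq, steal, guest, guest_nice.

```python
import time


def cpu_counters(stat_text):
    """Return the numeric fields of the aggregate 'cpu' line of /proc/stat."""
    for line in stat_text.splitlines():
        if line.startswith("cpu "):
            return [int(v) for v in line.split()[1:]]
    raise ValueError("no aggregate 'cpu' line found")


def iowait_percent(before_text, after_text):
    """Percentage of CPU time spent in iowait between two /proc/stat snapshots."""
    before, after = cpu_counters(before_text), cpu_counters(after_text)
    # Guest time is already included in user/nice, so only user..steal (8 fields) are summed.
    deltas = [a - b for b, a in zip(before[:8], after[:8])]
    total = sum(deltas)
    return 100.0 * deltas[4] / total if total else 0.0


def sample_iowait(interval=1.0, path="/proc/stat"):
    """Take two snapshots `interval` seconds apart (Linux only)."""
    with open(path) as f:
        first = f.read()
    time.sleep(interval)
    with open(path) as f:
        second = f.read()
    return iowait_percent(first, second)
```

iowait is a form of idle time: it only means the CPUs had nothing else to run while I/O was outstanding. Read it alongside iostat's per-device figures rather than on its own.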

15. How would you troubleshoot a scenario where a user is reporting that their files are missing after a recent system update?

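First, I'd gather information: When did the update happen? What types of files are missing? Are other users affected? Has the user checked the trash? I'd then check the update logs (e.g., /var/log/apt/history.log or /var/log/dnf.log) for errors or file migration information.

Files may have been moved or renamed during the update. For example, when a package replaces a modified configuration file, the package manager keeps the old version under a name ending in .rpmsave or .dpkg-old. Searching the filesystem for the missing files by name or modification time (e.g., with find) is essential. If filesystem snapshots are available (LVM, Btrfs, or ZFS), I would try to restore the files from a snapshot taken before the update.

I'd also verify that the user's home directory is the expected one and is actually mounted. A home directory on a separate partition or network share that failed to mount after the update can look like missing files.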

If these steps fail, a deeper dive is needed. I'd check disk health with smartctl and filesystem integrity with fsck (run on an unmounted filesystem), and review system logs for filesystem errors. The update may have coincided with a hardware failure or exposed an existing disk problem. I'd also examine backup logs to determine whether a recent backup can be restored. In rare cases, data recovery software might be needed as a last resort. I would always prioritize data safety by creating a disk image before attempting any potentially destructive recovery procedure.
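The search step can be sketched in Python: walk a directory tree and report two groups of files. The function name and the optional name_hint parameter are hypothetical.

- Files with suffixes that package managers use for set-aside configuration files.
- Files changed since the update time, which you would read from the package manager's log.

```python
import os

# Suffixes package managers use when they keep or set aside a configuration file.
LEFTOVER_SUFFIXES = (".rpmsave", ".rpmnew", ".dpkg-old", ".dpkg-dist", ".dpkg-new")


def find_candidates(root, since_epoch, name_hint=None):
    """Return (leftovers, recent): packaging leftovers, and files modified at or after `since_epoch`.

    `name_hint` is an optional substring of the missing file's name.
    """
    leftovers, recent = [], []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                mtime = os.lstat(path).st_mtime
            except OSError:
                continue  # vanished or unreadable
            if name.endswith(LEFTOVER_SUFFIXES):
                leftovers.append(path)
            elif mtime >= since_epoch and (name_hint is None or name_hint in name):
                recent.append(path)
    return sorted(leftovers), sorted(recent)
```

For example, find_candidates("/etc", update_time) would list configuration files that the update set aside or rewrote, where update_time is the update's start time as a Unix timestamp.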

16. Describe the steps you'd take to diagnose a problem where a Linux server is failing to authenticate users against an Active Directory domain.

To diagnose Active Directory authentication failures on a Linux server, I'd start by verifying basic network connectivity: ensuring the server can ping the domain controllers using both IP address and hostname, and that DNS resolution is functioning correctly. Next, I'd check the configuration files for the authentication service being used (e.g., sssd.conf for SSSD, krb5.conf for Kerberos). I'd specifically look for errors in the domain name, realm, or server addresses.

I would then examine the system logs (/var/log/auth.log, /var/log/secure, /var/log/messages, and logs specific to the authentication service) for error messages that indicate the nature of the failure. For Kerberos, kinit <user>@<REALM> tests whether a ticket can be obtained, and klist confirms that it was issued. When the logs are inconclusive, capturing authentication traffic with tcpdump or Wireshark can provide deeper insight.

Lastly, I'd confirm that the server's clock is synchronized with the domain controllers (via NTP). Kerberos rejects requests when clock skew exceeds its tolerance, which is five minutes by default.
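The configuration review can be partly automated. This Python sketch checks an sssd.conf for two mistakes: a domain listed in [sssd] that has no matching [domain/...] section, and a domain section without an id_provider. The function name is hypothetical.

It assumes the classic layout in which [sssd] lists its domains. Some SSSD versions can also enable a domain from its own section, so treat the result as a hint rather than a verdict.

```python
import configparser


def sssd_config_problems(text):
    """Return a list of structural problems found in sssd.conf text."""
    # interpolation=None: '%' is common in SSSD values and must not be expanded.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    if not parser.has_section("sssd"):
        return ["missing [sssd] section"]
    problems = []
    domains = [d.strip() for d in parser.get("sssd", "domains", fallback="").split(",") if d.strip()]
    if not domains:
        problems.append("[sssd] has no 'domains' entry")
    for name in domains:
        section = f"domain/{name}"
        if not parser.has_section(section):
            problems.append(f"domain '{name}' is listed but [{section}] is missing")
        elif not parser.get(section, "id_provider", fallback=""):
            problems.append(f"[{section}] has no id_provider")
    return problems
```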

17. Explain how you would identify and resolve a situation where a particular process is consuming excessive network bandwidth on a Linux server.

To identify a process consuming excessive network bandwidth, I would start with real-time tools. iftop shows bandwidth per connection, and nethogs groups it by process, which usually points directly at the culprit. ss -tnp maps the busy connections back to process IDs, and tcpdump can capture packets to analyze traffic patterns if a deeper investigation is needed. Once the offending process is identified, I would review its configuration and logs to understand why it's generating so much traffic.

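To resolve the issue, I would consider three options: rate-limiting the process's traffic with tc (traffic control), tuning its configuration to reduce unnecessary network activity, or, as a last resort, terminating it if it's not critical. For persistent issues, the application's network communication patterns may need to be reviewed and possibly redesigned.

For example, the following commands cap traffic sent from local port 80 at 1 Mbit/s, while all other traffic falls into a separate default class. They assume the interface is eth0 and must be run as root: tc qdisc add dev eth0 root handle 1: htb default 20; tc class add dev eth0 parent 1: classid 1:1 htb rate 10mbit; tc class add dev eth0 parent 1:1 classid 1:10 htb rate 1mbit ceil 1mbit; tc class add dev eth0 parent 1:1 classid 1:20 htb rate 9mbit ceil 10mbit; tc filter add dev eth0 protocol ip parent 1:0 prio 1 u32 match ip sport 80 0xffff flowid 1:10.

This configuration shapes only outgoing (egress) traffic. The 10mbit parent class also caps total egress on the interface at 10 Mbit/s.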
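HTB (Hierarchical Token Bucket) limits each class with token buckets. Tokens, measured here in bytes, refill at the configured rate up to a burst size, and data may be sent only when enough tokens have accumulated.

The Python sketch below simulates a single bucket to show the idea. It is not tc's implementation: real HTB also lets classes borrow up to their ceil, and it queues packets rather than rejecting them.

```python
class TokenBucket:
    """Single token bucket: `rate` bytes/s of tokens, holding at most `burst` bytes."""

    def __init__(self, rate, burst, now=0.0):
        self.rate = rate
        self.burst = burst
        self.tokens = float(burst)  # start with a full bucket
        self.last = now

    def allow(self, size, now):
        """Return True if `size` bytes may be sent at time `now` (seconds)."""
        # Refill for the time elapsed since the last call, capped at the bucket size.
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if size <= self.tokens:
            self.tokens -= size
            return True
        return False  # a real shaper would queue the packet until tokens accumulate
```

A bucket created as TokenBucket(rate=1000, burst=1500) lets a 1500-byte burst through immediately. After that, it admits only about 1000 bytes per second.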

18. Walk me through the process of diagnosing a situation where a website hosted on a Linux server is experiencing slow loading times.

To diagnose slow website loading times on a Linux server, I'd start with the basics. I'd check server resource utilization (CPU, memory, disk I/O) using top, htop, iostat, and vmstat, since high usage often indicates a bottleneck. I'd also check network connectivity to the server with ping and traceroute to identify latency issues. Next, I would review the web server logs (e.g., Apache or Nginx) for errors, and the database's slow query log if a database is involved.

To measure the request from the client side, I'd run curl -s -o /dev/null -w "%{time_connect} %{time_starttransfer} %{time_total}\n" <website_url>. This breaks the total time into connection setup, time to first byte, and full transfer, which helps separate network delay from server processing time. Finally, I would use the network waterfall in the browser developer tools to spot slow-loading or oversized resources, such as unoptimized images.

Further diagnosis would involve profiling the application code to pinpoint slow functions or database queries. Tools like strace or perf can provide insights into system call performance. If using a database, I'd analyze query execution plans using EXPLAIN to identify optimization opportunities. Caching mechanisms (e.g., using a CDN or server-side caching) would be evaluated to improve response times for static content. Finally, consider tools like tcpdump or Wireshark to analyze network traffic in more detail, if necessary.
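To find which pages are slow, it helps to rank request paths by their response times in the access log. This Python sketch does that; the function name is hypothetical.

It assumes a hypothetical log setup: the combined log format with the request duration in seconds appended as the last field, for example an Nginx log_format ending in $request_time. Adjust the regular expression to match your actual format.

```python
import math
import re
from collections import defaultdict

# Assumes the combined log format with the request time in seconds as the last field.
LINE_RE = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) .* (?P<seconds>\d+(?:\.\d+)?)$'
)


def slowest_paths(lines, top=5):
    """Return (path, count, mean_s, p95_s) tuples sorted by p95, slowest first."""
    durations = defaultdict(list)
    for line in lines:
        m = LINE_RE.search(line.rstrip())
        if m:
            path = m.group("path").split("?", 1)[0]  # group requests regardless of query string
            durations[path].append(float(m.group("seconds")))
    stats = []
    for path, times in durations.items():
        times.sort()
        p95 = times[max(0, math.ceil(0.95 * len(times)) - 1)]  # nearest-rank percentile
        stats.append((path, len(times), sum(times) / len(times), p95))
    stats.sort(key=lambda s: s[3], reverse=True)
    return stats[:top]
```

Ranking by a high percentile rather than the mean keeps a handful of very slow requests from hiding behind many fast ones.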

19. How would you troubleshoot a scenario where a Linux server is generating excessive log files?

First, identify the application or service generating the excessive logs. du -sh /var/log shows the total size, while du -ah /var/log | sort -h | tail lists the largest individual files, and journalctl --disk-usage reports how much space the systemd journal uses. To watch logs in real time and pinpoint the culprit, use tail -f /var/log/syslog or journalctl -f. Once the source is identified, investigate the root cause: debug logging may have been left enabled, an application error may be logging repeatedly, or the logging may be misconfigured.

Next, implement solutions to mitigate the issue. Consider adjusting the logging level of the application, fixing the underlying error causing the excessive logging, or implementing log rotation using logrotate. Configuring logrotate to compress and archive older logs can help manage disk space. Also, ensure adequate monitoring is in place to detect similar issues in the future. If verbose logging is needed temporarily, remember to disable it once troubleshooting is complete.
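The du | sort step can also be done in Python, for instance in a monitoring script. This sketch lists the largest regular files under a directory; the function name is hypothetical.

It ranks files by allocated space (st_blocks, in 512-byte units on Linux), as du does. Apparent size would overstate sparse files such as /var/log/lastlog.

```python
import os
import stat


def largest_files(root, top=10):
    """Return (allocated_bytes, apparent_bytes, path) for the biggest regular files under `root`."""
    sizes = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                st = os.lstat(path)
            except OSError:
                continue  # rotated away or unreadable
            if not stat.S_ISREG(st.st_mode):
                continue  # skip symlinks, sockets, etc.
            sizes.append((st.st_blocks * 512, st.st_size, path))
    sizes.sort(reverse=True)
    return sizes[:top]
```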

20. Describe the steps you'd take to diagnose a problem where a critical system file has been corrupted on a Linux server.

First, I would identify the corrupted file. This might involve reviewing system logs (/var/log/syslog, /var/log/messages, /var/log/audit/audit.log, etc.) for error messages or unusual activity preceding the malfunction, and dmesg for kernel-level errors. For files owned by a package, the package manager can check them against its recorded checksums: rpm -Va on Red Hat-based systems, or dpkg --verify (or debsums) on Debian-based systems. Once the file is identified, I'd determine the extent of the corruption, that is, whether the file is entirely unusable or only partially damaged.

Next, depending on the nature and criticality of the file, I would try to replace it from a known good source. This could be a backup, a replica from another server in the cluster, or from the original installation media/package. If it's a configuration file, I might be able to recreate it using default settings or previous known configurations. I would verify the replacement by running md5sum or sha256sum to compare it with the known good checksum if available, and then restart the relevant service. Finally, I'd implement preventative measures like regular backups and file system integrity checks (using tools like AIDE or Tripwire) to avoid future occurrences. A rootkit scan might be beneficial as well to rule out security compromises.
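The checksum comparison can be scripted. This Python sketch hashes files in chunks and checks them against a manifest in the format produced by sha256sum (hex digest, a space, then a second space or * for binary mode, then the filename). The function names are hypothetical.

```python
import hashlib
import os


def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in chunks so large files need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def check_manifest(manifest_text, base_dir="."):
    """Compare files against sha256sum-style lines; return a list of problems."""
    problems = []
    for line in manifest_text.splitlines():
        if not line.strip():
            continue
        expected, _, rest = line.partition(" ")
        name = rest[1:] if rest[:1] in (" ", "*") else rest  # '*' marks binary mode
        path = os.path.join(base_dir, name)
        if not os.path.exists(path):
            problems.append(f"missing: {name}")
        elif sha256_of(path) != expected.lower():
            problems.append(f"checksum mismatch: {name}")
    return problems
```

Produce the manifest on a known good machine or from a backup, then run the check on the suspect server. An empty list means every listed file matches.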

21. Explain how you would identify and resolve a situation where a newly installed software package is causing conflicts with existing system libraries.

First, I would identify the specific system libraries involved in the conflict. ldd lists the shared libraries the new binary depends on and the paths they resolve to (otool -L is the macOS equivalent). I can compare these with the versions already installed and spot any reported as "not found". I would also examine system logs (e.g., /var/log/syslog, /var/log/messages), along with the software's own logs if it has any, for errors or warnings that point to library conflicts.

To resolve the conflict, I'd explore several options:

- Containerization with a technology like Docker, to isolate the new software and its dependencies.
- For Python software, virtual environments (e.g., venv or Conda), which provide similar isolation.
- If the conflict is version-related, upgrading or downgrading the conflicting libraries. This must be approached carefully to avoid breaking other system components.
- As a last resort, statically linking the required libraries into the new software. This increases the binary's size, and the bundled copies no longer receive security fixes from system library updates.
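A before-and-after comparison of ldd output makes conflicts visible: it shows libraries that are missing, and libraries that now resolve to a different file, such as a copy bundled with the new package. This Python sketch parses ldd output and diffs two runs; the function names are hypothetical.

The ldd manual warns that ldd may execute the program it inspects, so avoid running it on untrusted binaries.

```python
import re

# Matches "libssl.so.3 => /lib/x86_64-linux-gnu/libssl.so.3 (0x...)" and "libfoo.so.1 => not found".
# Lines without "=>" (linux-vdso, the dynamic loader itself) are skipped.
LDD_LINE = re.compile(r"^\s*(\S+)\s+=>\s+(not found|\S+)")
ABSENT = "(not linked)"


def parse_ldd(output):
    """Map each library soname to the path it resolved to, or None if not found."""
    resolved = {}
    for line in output.splitlines():
        m = LDD_LINE.match(line)
        if m:
            soname, target = m.groups()
            resolved[soname] = None if target == "not found" else target
    return resolved


def compare_resolution(before, after):
    """Report libraries whose resolution changed between two parsed ldd runs."""
    changes = {}
    for soname in sorted(set(before) | set(after)):
        old, new = before.get(soname, ABSENT), after.get(soname, ABSENT)
        if old != new:
            changes[soname] = (old, new)
    return changes
```

Run ldd on an affected program before and after installing the new package and compare the results. Any entry whose new value is None is a missing library; a changed path suggests one library is shadowing another (for example, via LD_LIBRARY_PATH or an rpath).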

22. How would you troubleshoot a scenario where a Linux server is experiencing kernel panics?

Troubleshooting kernel panics on a Linux server involves several steps. First, capture the panic message from the console (physical or serial). Analyze the error messages, paying close attention to the call trace, which shows the functions that were executing when the panic occurred. This gives hints about the source of the problem: a driver, faulty hardware, or a kernel bug. The panic itself is often not written to disk. However, system logs (/var/log/syslog, /var/log/kern.log) or, with a persistent journal, journalctl -k -b -1 can show related errors from before the panic.

Next, if the kernel panic is reproducible, try booting into a previous kernel version from the bootloader (GRUB). If the older kernel works, the issue might be with the newer kernel or its modules. Investigate recent kernel updates, driver installations, or configuration changes. Tools like kdump can be configured to capture a memory dump of the kernel at the time of the panic, which can be analyzed offline using tools like crash to provide more detailed insights. Hardware diagnostics should also be performed (memory tests, disk checks) to rule out hardware failures.
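When reading a captured panic, the modules named in the call trace are often the quickest lead. This Python sketch extracts (function, module) frames from the first Call Trace block and lists the modules in order of appearance. The function names are hypothetical.

It assumes frames look like my_func+0x4a/0x120 [mymod], which is the usual x86 format. Exact layouts vary by kernel version and architecture.

```python
import re

# Frames look like "my_func+0x4a/0x120 [mymod]"; the module tag is absent for core kernel code.
FRAME_RE = re.compile(r"([A-Za-z_][\w.]*)\+0x[0-9a-f]+/0x[0-9a-f]+(?:\s+\[(\w+)\])?")


def parse_call_trace(panic_text):
    """Return (function, module) pairs from the first 'Call Trace:' block."""
    frames, in_trace = [], False
    for line in panic_text.splitlines():
        if "Call Trace:" in line:
            in_trace = True
            continue
        if not in_trace:
            continue
        if "</TASK>" in line or "---[ end" in line:
            break  # end of the trace block
        m = FRAME_RE.search(line)
        if m:
            frames.append((m.group(1), m.group(2)))
    return frames


def suspect_modules(frames):
    """Modules in the trace, in order of first appearance (top-most first)."""
    seen = []
    for _func, module in frames:
        if module and module not in seen:
            seen.append(module)
    return seen
```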

23. Describe the steps you'd take to diagnose a problem where a Linux server is unable to communicate with a storage array.

To diagnose a communication problem between a Linux server and a storage array, I'd start with a layered approach. First, I'd check the physical layer: cable connections, port status on both the server and the array, and ensure there are link lights. Then, I'd move to the network layer. I'd use ping and traceroute to verify basic network connectivity between the server and the storage array's IP address. If that fails, I'd investigate routing tables (route -n) and firewall rules (iptables -L or firewall-cmd --list-all) on the server to ensure traffic isn't being blocked. Also, confirming the correct subnet mask and gateway settings is crucial.

Next, I'd look at the storage protocol layer (e.g., iSCSI, Fibre Channel):

- For iSCSI, iscsiadm -m discovery -t sendtargets -p <portal_ip> discovers targets, and iscsiadm -m session shows the current sessions.
- For Fibre Channel, systool -c fc_host -v or lsscsi shows connected devices and their states.

I'd also examine the storage array's logs for error messages related to the server's connection attempts, and check that the server's HBA driver and firmware are correctly installed and supported by the array. Finally, I'd inspect any multipathing software for configuration errors and path failures (e.g., with Device Mapper Multipath, multipath -ll lists the state of each path).
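The Fibre Channel port states that systool reports come from sysfs, so they can also be read directly, for example from a health-check script. This Python sketch does that; the function names are hypothetical.

It assumes the standard /sys/class/fc_host/hostN/ layout. Which attributes exist depends on the HBA driver.

```python
import os


def fc_host_status(sysfs_root="/sys/class/fc_host"):
    """Return {host: {attribute: value}} for each Fibre Channel host adapter."""
    status = {}
    if not os.path.isdir(sysfs_root):
        return status  # no FC HBAs, or the driver is not loaded
    for host in sorted(os.listdir(sysfs_root)):
        attrs = {}
        for attr in ("port_state", "port_name", "speed"):
            try:
                with open(os.path.join(sysfs_root, host, attr)) as f:
                    attrs[attr] = f.read().strip()
            except OSError:
                attrs[attr] = None  # attribute not provided by this driver
        status[host] = attrs
    return status


def offline_ports(status):
    """Hosts whose port is not reported as Online (e.g. Linkdown)."""
    return [host for host, attrs in status.items() if attrs.get("port_state") != "Online"]
```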

24. Explain how you would identify and resolve a situation where a user is unable to access a shared network drive on a Linux server.

First, I would verify the user's credentials and network connectivity: whether the user can ping the server, whether their username and password are correct, and whether their account is locked or disabled.

Next, I'd investigate the server-side configuration:

- Is the Samba (or NFS) service running?
- Is the share properly defined in /etc/samba/smb.conf (or /etc/exports for NFS), with the correct permissions for the user?
- Does the firewall allow the necessary ports? These are 139 and 445 for Samba; 2049 for NFSv4, plus 111 for rpcbind and the mountd port for NFSv3.

testparm validates smb.conf, smbclient -L //<server> -U <user> tests access from a client, and showmount -e <server> lists NFS exports. I would also check the logs (/var/log/samba/log.smbd, /var/log/syslog) for error messages.

To resolve the issue, I would correct any misconfigurations found in these steps. For a permission issue, I'd use chmod and chown to adjust file permissions or modify the Samba share configuration. On SELinux-enabled systems, the shared directory also needs an appropriate context (for example, samba_share_t for Samba). For a firewall issue, I'd open the required ports with iptables or firewalld. I would then apply the changes by reloading or restarting the Samba service, or by running exportfs -ra for NFS exports. If the issue persists, I would analyze the logs in more depth or consult other team members.
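To check whether a given client should have NFS access at all, this Python sketch parses /etc/exports text and evaluates a client IP against it. The function names are hypothetical.

It applies two rules from the exports(5) man page:

- Access is read-only unless rw is given.
- A single host takes priority over a network, which takes priority over the anonymous * entry, whatever their order on the line.

The sketch is deliberately simplified. Hostnames, wildcards, netgroups, and line continuations are not handled.

```python
import ipaddress


def parse_exports(text):
    """Map each exported path to a list of (client, options) pairs."""
    exports = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()
        if not line:
            continue
        path, *clients = line.split()
        entries = []
        for client in clients:
            host, _, opts = client.partition("(")
            options = opts.rstrip(")").split(",") if opts else []
            entries.append((host or "*", options))  # no host means every client
        exports[path] = entries
    return exports


def _priority(host):
    # Single hosts beat networks, which beat the anonymous '*' entry.
    if host == "*":
        return 2
    return 1 if "/" in host else 0


def client_access(exports, path, client_ip):
    """Return 'rw', 'ro', or None for `client_ip` (IP-based entries only)."""
    ip = ipaddress.ip_address(client_ip)
    for host, options in sorted(exports.get(path, []), key=lambda e: _priority(e[0])):
        if host == "*":
            matched = True
        else:
            try:
                matched = ip in ipaddress.ip_network(host, strict=False)
            except ValueError:
                matched = False  # hostnames, wildcards and netgroups are not handled here
        if matched:
            return "rw" if "rw" in options else "ro"  # ro is the default
    return None
```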

25. Walk me through the process of diagnosing a situation where a virtual machine running on a Linux server is experiencing performance issues.

To diagnose VM performance issues on a Linux server, I'd start by checking the host's resource utilization (CPU, memory, disk I/O, network) with top, htop, iostat, and vmstat. If the host is saturated, the VM's performance will suffer. Next, I'd run top or htop inside the VM to identify resource-intensive processes, and check the VM's own logs for errors.

A high st (steal) value in the VM's top output means the hypervisor is not scheduling the VM's virtual CPUs as often as they need, which points to contention on the host. If the VM's own CPU or memory usage is high, it may indicate an application issue or insufficient resources allocated to the VM.

After these basic checks, I'd investigate virtual disk I/O performance. iotop inside the VM identifies processes consuming disk I/O. On a KVM host, each VM runs as a QEMU process, so iotop on the host shows which VMs are generating the most I/O. Network performance can be assessed with iftop or tcpdump to find bottlenecks or unusually high traffic. Finally, hypervisor-level tools give a more holistic view of resource allocation and performance across all VMs on the host: for example, virsh domstats or virt-top for KVM/libvirt, or VMware's own monitoring.
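On a libvirt/KVM host, per-VM CPU usage can be derived from two snapshots of virsh domstats --cpu-total. This Python sketch parses the output and computes the usage; the function names are hypothetical.

It assumes two things:

- The output format is a Domain: 'name' line followed by indented key=value lines.
- cpu.time is cumulative CPU time in nanoseconds.

Check both against your libvirt version.

```python
def parse_domstats(text):
    """Parse `virsh domstats` output into {domain: {key: value}}."""
    stats, current = {}, None
    for line in text.splitlines():
        line = line.strip()
        if line.startswith("Domain:"):
            current = line.split(":", 1)[1].strip().strip("'\"")
            stats[current] = {}
        elif "=" in line and current is not None:
            key, value = line.split("=", 1)
            stats[current][key] = value
    return stats


def cpu_percent(before, after, interval_s):
    """Percent of one host CPU used by each VM between two samples (cpu.time in ns)."""
    usage = {}
    for name, now in after.items():
        prev = before.get(name)
        if prev and "cpu.time" in prev and "cpu.time" in now:
            delta_ns = int(now["cpu.time"]) - int(prev["cpu.time"])
            usage[name] = 100.0 * delta_ns / (interval_s * 1e9)
    return usage
```

Values above 100 mean the VM kept more than one host CPU busy, which is normal for VMs with several vCPUs.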

26. How would you troubleshoot a scenario where a Linux server is exhibiting symptoms of a potential security breach?

First, isolate the system from the network to prevent further damage. Then gather information:

- Check system logs (/var/log/auth.log, /var/log/syslog, /var/log/secure).
- Review running processes (ps aux).
- Examine network connections: ss -tulnp for listening sockets and ss -tnp for established connections (netstat offers equivalent options).

Look for suspicious activity such as unusual user logins, unauthorized processes, or unexpected network connections. Keep in mind that an attacker may have replaced system binaries, so tools run from trusted media are more reliable. Use tools like chkrootkit or rkhunter to scan for rootkits.

Next, analyze the gathered data. Correlate log entries with process and network information to identify the source and scope of the breach. Investigate any suspicious files or processes by checking their checksums against known good versions or submitting them to online analysis services like VirusTotal. Finally, based on the findings, implement appropriate remediation steps, such as removing malware, patching vulnerabilities, and restoring from backups. Consider engaging security professionals for assistance.
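For the log review, a quick summary of SSH authentication events often shows brute-force sources and unexpected successful logins. This Python sketch counts failed attempts per source IP and lists accepted logins; the function name is hypothetical.

It assumes the usual sshd message forms, "Failed <method> for [invalid user ]<user> from <ip> port <n>" and "Accepted <method> for <user> from <ip> port <n>". On a compromised host, remember that the logs themselves may have been tampered with.

```python
import re
from collections import Counter

FAILED_RE = re.compile(r"Failed \S+ for (?:invalid user )?(\S+) from (\S+) port \d+")
ACCEPTED_RE = re.compile(r"Accepted \S+ for (\S+) from (\S+) port \d+")


def summarize_sshd(lines):
    """Return (failures_by_ip, [(user, ip), ...] of accepted logins)."""
    failures = Counter()
    accepted = []
    for line in lines:
        if m := FAILED_RE.search(line):
            failures[m.group(2)] += 1
        elif m := ACCEPTED_RE.search(line):
            accepted.append((m.group(1), m.group(2)))
    return failures, accepted
```

Accepted logins from an IP that also appears among many failures deserve immediate attention.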

27. Describe the steps you'd take to diagnose a problem where a Linux server is failing to apply security updates.

To diagnose why a Linux server isn't applying security updates, I'd start by checking the update configuration files (e.g., /etc/apt/sources.list for Debian/Ubuntu or /etc/yum.repos.d/ for Red Hat/CentOS) to ensure the repositories are correctly defined and accessible. Then, I'd examine the logs of the package manager (/var/log/apt/history.log, /var/log/yum.log, /var/log/dnf.log) for any error messages or failed update attempts. I would also manually attempt an update using the package manager (e.g., sudo apt update && sudo apt upgrade or sudo yum update or sudo dnf upgrade) to see any immediate error output.

Next, I'd rule out network problems reaching the update servers, using tools like ping or traceroute. I would also check for disk space issues, particularly on the /boot and / partitions, since insufficient space can prevent updates. Finally, I'd look for anything blocking the package manager itself: conflicting packages, unmet dependencies, packages held back from upgrades (apt-mark showhold on Debian/Ubuntu), or a lock held by another package manager process. The package manager's error messages usually name the blocking package or lock.
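The disk space check is easy to automate before an update run. This Python sketch reports filesystems with little free space; the function name is hypothetical.

The 500 MiB threshold and the default path list are arbitrary assumptions. Size them to your distribution's kernel and package sizes.

```python
import shutil


def low_space(paths=("/", "/boot", "/var"), min_free_bytes=500 * 1024 * 1024):
    """Return (path, free_bytes) for each existing path with less than `min_free_bytes` free."""
    low = []
    for path in paths:
        try:
            usage = shutil.disk_usage(path)
        except FileNotFoundError:
            continue  # e.g. no /boot directory inside a container
        if usage.free < min_free_bytes:
            low.append((path, usage.free))
    return low
```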

28. Explain how you would identify and resolve a situation where a custom script is failing to execute properly on a Linux server.

To identify and resolve a failing custom script, I would first check the script's own logs, if any, for error messages or unusual behavior. I'd also examine system logs (/var/log/syslog, /var/log/messages) for related errors around the time it ran.

To pinpoint the problem, I would run the script manually with tracing enabled: bash -x script.sh prints each command as it executes, and bash -n script.sh checks syntax without running it. For a Python script, I'd use a debugger like pdb to step through the code and inspect variable values. For permission issues, I would use ls -l to check file permissions and ownership, and correct them with chmod or chown if necessary.

After identifying the root cause, whether it's a syntax error, missing dependency, incorrect file permissions, or an environment issue, I'd apply the appropriate fix. This could involve editing the script, installing missing packages using apt or yum, adjusting file permissions, or modifying environment variables. Finally, I'd test the script thoroughly after applying the fix to ensure it's functioning correctly and monitor it for any recurrence of the issue.
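Several frequent causes of "the script won't run" can be checked mechanically. This Python sketch, with a hypothetical function name, looks for:

- A missing shebang.
- A shebang line ending in a carriage return, which happens when the file was saved with Windows line endings and breaks the interpreter path.
- A missing or non-executable interpreter.
- A missing owner execute bit.

```python
import os
import stat


def script_problems(path):
    """Return a list of common reasons a script fails to run when executed directly."""
    problems = []
    with open(path, "rb") as f:
        data = f.read()
    first_line = data.split(b"\n", 1)[0]
    if not first_line.startswith(b"#!"):
        problems.append("no shebang line; it may be run by /bin/sh instead of the intended interpreter")
    else:
        if first_line.endswith(b"\r"):
            problems.append("shebang line ends with a carriage return (Windows line endings)")
        parts = first_line[2:].strip().split()
        interpreter = parts[0].decode() if parts else ""
        if not interpreter or not os.access(interpreter, os.X_OK):
            problems.append(f"interpreter {interpreter!r} is missing or not executable")
    if b"\r\n" in data:
        problems.append("file contains CRLF line endings; convert with dos2unix or equivalent")
    if not os.stat(path).st_mode & stat.S_IXUSR:
        problems.append("owner execute bit is not set (chmod u+x)")
    return problems
```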

29. Explain what steps you would take to diagnose a server that is suddenly inaccessible over the network, but you can access it locally.

First, I would check the server's network configuration with ip addr and route -n (or ip route) to verify its IP address, gateway, and DNS settings, and use ping to test that the gateway is reachable. I'd also examine the server's firewall rules (iptables -L, firewall-cmd --list-all) to ensure incoming traffic isn't being blocked. Then I'd verify that the network service is running with systemctl status <network_service> (e.g., systemctl status networking or systemctl status NetworkManager).

I'd also use ss -tulnp (or netstat -tulnp) to confirm that the service I'm trying to reach is listening on the correct port and address. A service bound only to 127.0.0.1 is reachable locally but not over the network, which matches this symptom exactly.

Next, I would focus on network connectivity outside the server. I would use traceroute or mtr from a different machine on the network to identify where the connection is failing. I will check the switch and router configurations for any access control lists (ACLs) or firewall rules that might be blocking traffic to the server. If the server is in a different subnet, I would check the routing tables on the intermediate routers.
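The "bound only to loopback" check can be automated. This Python sketch parses the output of ss -tln and reports ports whose listening sockets are all on loopback addresses; the function names are hypothetical.

It assumes the column layout of ss -tln, where the first column is State and the fourth is Local Address:Port. With -u added, ss prepends a Netid column and the indices shift.

```python
import ipaddress

WILDCARDS = {"*", "0.0.0.0", "::"}


def listening_sockets(ss_output):
    """Parse `ss -tln` output into (address, port) pairs."""
    sockets = []
    for line in ss_output.splitlines():
        fields = line.split()
        if len(fields) < 5 or fields[0] != "LISTEN":
            continue  # header or non-listening line
        addr, _, port = fields[3].rpartition(":")
        addr = addr.strip("[]").split("%", 1)[0]  # drop IPv6 brackets and "%iface" suffixes
        sockets.append((addr, int(port)))
    return sockets


def is_loopback(addr):
    if addr in WILDCARDS:
        return False
    try:
        return ipaddress.ip_address(addr).is_loopback
    except ValueError:
        return False


def loopback_only_ports(ss_output):
    """Ports whose every listening socket is bound to a loopback address."""
    by_port = {}
    for addr, port in listening_sockets(ss_output):
        by_port.setdefault(port, []).append(is_loopback(addr))
    return sorted(port for port, flags in by_port.items() if all(flags))
```

If the port you expect to reach remotely appears in this list, the fix is in the service's bind address (e.g., listen_addresses in PostgreSQL or bind in Redis), not in the network.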

Advanced Linux Troubleshooting interview questions

1. How would you diagnose a situation where a specific application is consistently slow, but the overall system performance appears normal? Elaborate on the tools and techniques you'd employ.

To diagnose a consistently slow application despite normal system performance, I'd focus on application-specific bottlenecks. First, I'd use application performance monitoring (APM) tools like New Relic, Dynatrace, or even built-in profiling tools (if available) to pinpoint slow code execution paths, database query performance, and external API call latency. These tools help identify the exact functions or transactions causing delays. Next, I'd examine application logs for error messages, warnings, or unusual patterns that could indicate underlying problems, such as resource leaks or configuration issues.

If APM isn't available, I'd use system-level tools in a more targeted way. For example, strace on Linux or Process Monitor on Windows can reveal slow I/O operations or contention on specific resources; strace -f -T -p <pid> shows each system call with the time spent in it. Database query logs can highlight inefficient queries, prompting index optimization or query rewriting.

It is also important to check application-specific configuration for suboptimal settings or resource limits, such as the Java heap size (-Xmx) for Java applications or the connection pool size for database connections. Finally, I'd measure network latency between the application and its dependencies (e.g., databases, external APIs) with tools like ping, traceroute, or mtr to rule out network-related issues.
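Some networks block ICMP, so timing a TCP handshake to the dependency's actual port (for example, a database on 5432) can be a more faithful latency measurement than ping. This Python sketch does that; the function names are hypothetical.

If a hostname is passed, the first measurement also includes DNS resolution time.

```python
import socket
import statistics
import time


def tcp_connect_times(host, port, attempts=5, timeout=2.0):
    """Return TCP connect times in milliseconds; failed attempts are skipped."""
    times = []
    for _ in range(attempts):
        start = time.perf_counter()
        try:
            with socket.create_connection((host, port), timeout=timeout):
                pass  # handshake completed; close immediately
        except OSError:
            continue  # refused, unreachable, or timed out
        times.append((time.perf_counter() - start) * 1000.0)
    return times


def summarize(times):
    """Min/median/max of a list of timings, or None if every attempt failed."""
    if not times:
        return None
    return {"min_ms": min(times), "median_ms": statistics.median(times), "max_ms": max(times)}
```

Comparing the median with the application's observed per-query latency shows how much of the delay is network versus server processing.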

2. Explain your approach to troubleshooting a network connectivity issue where a server can ping external addresses but cannot resolve hostnames. What are the potential causes and how would you investigate?

When a server can ping external addresses but can't resolve hostnames, the primary suspect is a DNS issue. The server has basic network connectivity, confirmed by successful pings, but isn't translating domain names into IP addresses. I'd first check the configured DNS server settings on the server (e.g., in /etc/resolv.conf on Linux or in the network adapter settings on Windows) to ensure they're correct and pointing to a valid, functioning DNS server.

Next, I would use nslookup or dig to query the configured DNS server directly and see whether it resolves hostnames. If the query fails, the problem lies with the DNS server itself or with the server's ability to reach it. Querying a different resolver (e.g., dig @<other_dns_server> example.com) helps tell the two apart. I'd then investigate network connectivity to the DNS server (ping, traceroute), the DNS server's configuration, and firewall rules that might be blocking DNS traffic (port 53, UDP and TCP).

Other potential causes include:

- A stale local DNS cache, which I would flush (for example, with resolvectl flush-caches when systemd-resolved is in use).
- An incorrect search domain list (the search line in /etc/resolv.conf), which affects how short hostnames are expanded.
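To see what dig @server does at the protocol level, here is a Python sketch that builds a minimal RFC 1035 query and sends it over UDP straight to a chosen server, bypassing /etc/resolv.conf. The function names are hypothetical.

It handles IPv4 servers and ASCII names only, and reads just the response header. That is enough to distinguish NOERROR, NXDOMAIN, SERVFAIL, and REFUSED.

```python
import secrets
import socket
import struct

RCODES = {0: "NOERROR", 1: "FORMERR", 2: "SERVFAIL", 3: "NXDOMAIN", 4: "NOTIMP", 5: "REFUSED"}


def build_query(name, qtype=1):
    """Encode a minimal query for `name` (qtype 1 = A record, class IN)."""
    txid = secrets.randbits(16)
    header = struct.pack("!HHHHHH", txid, 0x0100, 1, 0, 0, 0)  # RD flag set, one question
    qname = b""
    for label in name.rstrip(".").split("."):
        encoded = label.encode("ascii")
        if not 0 < len(encoded) < 64:
            raise ValueError(f"invalid label in {name!r}")
        qname += bytes([len(encoded)]) + encoded
    return txid, header + qname + b"\x00" + struct.pack("!HH", qtype, 1)


def parse_header(packet):
    """Decode the 12-byte DNS header fields that matter for troubleshooting."""
    txid, flags, _qd, answers, _ns, _ar = struct.unpack("!HHHHHH", packet[:12])
    return {
        "id": txid,
        "is_response": bool(flags & 0x8000),
        "rcode": RCODES.get(flags & 0x000F, str(flags & 0x000F)),
        "answers": answers,
    }


def query(server, name, timeout=2.0):
    """Send one UDP query directly to `server` and return its parsed header."""
    txid, packet = build_query(name)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(packet, (server, 53))
        data, _addr = sock.recvfrom(4096)
    header = parse_header(data)
    if header["id"] != txid:
        raise ValueError("response ID does not match the query")
    return header
```

If query(...) raises a timeout error, suspect connectivity or a firewall. A SERVFAIL or REFUSED rcode points at the DNS server's own configuration.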

3. Describe a scenario where a file system becomes read-only unexpectedly. What steps would you take to identify the root cause and restore write access?

A file system might unexpectedly become read-only for several reasons. The most common is that the kernel detects file system corruption or an I/O error and remounts the file system read-only to prevent further damage (ext4 does this when mounted with errors=remount-ro). Underlying disk errors (bad sectors) and a mount option such as ro in /etc/fstab can also be the cause. A full disk, by contrast, produces "No space left on device" errors rather than a read-only mount. It is still worth ruling out, because the symptoms can look similar.

To identify the root cause and restore write access, I would first check the system logs (/var/log/syslog or similar) and run dmesg to look for disk I/O errors or file system corruption reports. Since the affected file system may no longer accept writes, dmesg is often the more reliable source. Next, I'd use df -h and df -i to rule out a full disk or exhausted inodes.

If the file system is corrupted, fsck must be run on it while it is unmounted (for the root file system, from a rescue environment or at boot). If the disk has errors, smartctl (where SMART is supported) reports on its health. After addressing the root cause, I'd remount the file system read-write with mount -o remount,rw /mount/point. If disk errors are apparent, replacing the failing hardware is generally the best course of action.
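To confirm which file systems are currently mounted read-only, you can read /proc/mounts. This Python sketch filters it; the function name is hypothetical.

The list of ignored pseudo and inherently read-only file system types is an assumption; adjust it to your system. /proc/mounts escapes spaces in paths as \040, which the sketch decodes.

```python
# Pseudo and inherently read-only filesystems skipped by default (assumed list).
IGNORED_FSTYPES = {"proc", "sysfs", "devtmpfs", "devpts", "tmpfs", "cgroup", "cgroup2",
                   "securityfs", "pstore", "bpf", "tracefs", "debugfs", "squashfs", "iso9660"}


def readonly_mounts(mounts_text, ignored=IGNORED_FSTYPES):
    """Return (mount_point, device, fstype) for read-only mounts in /proc/mounts text."""
    result = []
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 4:
            continue
        device, mount_point, fstype, options = fields[:4]
        if fstype in ignored:
            continue
        if "ro" in options.split(","):  # exact option match, so "errors=remount-ro" is not counted
            result.append((mount_point.replace("\\040", " "), device, fstype))
    return result
```

Calling readonly_mounts(open("/proc/mounts").read()) on the affected server lists the file systems that need attention.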

4. A critical service on your Linux server crashes intermittently without leaving any obvious error messages. How would you proceed to debug this issue?

First, I'd ensure the system captures enough debugging information:

- Check /var/log/syslog and /var/log/messages for entries around the crash times.
- Review journalctl -u <service> and the exit status reported by systemctl status <service>.
- Enable persistent journald storage if it isn't already enabled.

dmesg is worth checking too, because a process killed by the OOM killer often leaves no trace in its own logs. Next, I'd examine resource usage (CPU, memory, disk I/O) with tools like top, vmstat, and iostat to identify potential resource exhaustion.

If the service is crashing without explicit errors, I'd use strace (e.g., strace -f -o /tmp/trace.log -p <pid>) to record the system calls the service makes leading up to the crash, which can reveal a failing call. Another valuable tool is gdb. If a core dump is generated, I'd analyze it with gdb to find the exact point of failure. To make sure core dumps are produced, check /proc/sys/kernel/core_pattern and ensure core dumps are enabled; on systems using systemd-coredump, coredumpctl list and coredumpctl gdb retrieve them. I'd also add more verbose logging to the service itself if possible, and set up monitoring that alerts when the service goes down and collects performance statistics.
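When a process dies silently, its exit status is often the first real clue. Shells, and many tools that follow the same convention, report death by signal N as exit code 128+N. systemctl status names the signal directly (for example signal=SEGV).

This Python sketch decodes such codes; the function name is hypothetical.

```python
import signal


def describe_exit_code(code):
    """Interpret a shell-style exit code, where 128+N means 'terminated by signal N'."""
    if code == 0:
        return "exited successfully"
    if code > 128:
        try:
            sig = signal.Signals(code - 128)
        except ValueError:
            return f"exited with status {code}"  # not a valid signal number
        return f"terminated by {sig.name}"
    return f"exited with status {code}"
```

For example, 139 decodes to SIGSEGV (a crash worth a core dump), while 137 decodes to SIGKILL, which often means the OOM killer or an external supervisor ended the process.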

5. You suspect a memory leak in a running process. Detail the tools and methods you would use to confirm the leak and identify the source code responsible.

To confirm a memory leak, I'd start with tools like top, htop, or ps to observe the process's memory consumption over time. A resident set size (RSS) or virtual memory size (VSZ) that keeps rising and never levels off indicates a potential leak.

Then I'd use memory profiling tools. If the program can be rerun or recompiled, Valgrind's Memcheck or AddressSanitizer (ASan) are good choices. For a process that must keep running, pmap -x shows which memory mappings are growing, and heaptrack can attach to the live process. These tools help pinpoint allocation sites whose memory is never freed. For Java applications, tools like VisualVM or Java Mission Control can analyze the heap and identify leaking objects.

Once I've identified the allocation sites, I'd examine the corresponding source code. I'd look for allocations without corresponding deallocations, objects added to long-lived collections or caches but never removed, and reference cycles in reference-counted code (e.g., C++ std::shared_ptr) that keep objects alive. Static analysis tools can also flag potential leaks, and code reviews focused on memory management help find their source.
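"Steadily increasing" can be made objective by sampling VmRSS from /proc/<pid>/status and fitting a least-squares slope. This Python sketch does that; the function names are hypothetical.

A positive slope sustained over a long window suggests a leak. Short-term growth can simply be caches warming up.

```python
import time


def vmrss_kib(status_text):
    """Extract VmRSS (reported in kB) from /proc/<pid>/status text."""
    for line in status_text.splitlines():
        if line.startswith("VmRSS:"):
            return int(line.split()[1])
    raise ValueError("VmRSS not found (kernel threads have none)")


def growth_rate(samples):
    """Least-squares slope of (seconds, kib) samples, in KiB per second."""
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    n = len(samples)
    mean_t = sum(t for t, _ in samples) / n
    mean_m = sum(m for _, m in samples) / n
    num = sum((t - mean_t) * (m - mean_m) for t, m in samples)
    den = sum((t - mean_t) ** 2 for t, _ in samples)
    if den == 0:
        raise ValueError("samples must be taken at different times")
    return num / den


def sample_rss(pid, count=10, interval=60.0):
    """Collect (elapsed_seconds, VmRSS KiB) pairs for `pid` (Linux only)."""
    samples, start = [], time.monotonic()
    for _ in range(count):
        with open(f"/proc/{pid}/status") as f:
            samples.append((time.monotonic() - start, vmrss_kib(f.read())))
        time.sleep(interval)
    return samples
```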

6. How would you troubleshoot a situation where a user is unable to log in to a Linux system, even with the correct credentials? Consider different authentication methods.

First, I'd verify the user's account status with passwd -S <username> to check whether the account is locked or disabled. I'd also check /var/log/auth.log (or /var/log/secure, depending on the distribution) for authentication failures. These might point to the cause, such as an invalid shell, PAM configuration issues, or brute-force attempts.

If SSH keys are used, I'd confirm that ~/.ssh/authorized_keys is correctly configured and that permissions are restrictive enough: 700 for .ssh and 600 for authorized_keys, with a home directory that is not writable by group or others. Otherwise, sshd's StrictModes check refuses to use the keys. For password authentication, I'd check whether the password has expired with chage -l <username>.

Finally, I would test whether sudo su - <username> works. Because it bypasses password authentication, success there suggests the problem lies in authentication rather than in the user's shell or home directory.

If the issue persists, I'd investigate the PAM (Pluggable Authentication Modules) configuration in /etc/pam.d/, which controls authentication policies. The key files are common-auth, common-account, and common-session on Debian-based systems, and system-auth and password-auth on Red Hat-based systems. An incorrect PAM configuration can prevent logins even with correct credentials.

For systems using network authentication (like LDAP or Active Directory), I would verify network connectivity and the status of the authentication server. If the directory is correctly configured, id <username> or getent passwd <username> should return the user's details from it.
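The permission rules for key-based login can be checked mechanically. This Python sketch flags a home directory, .ssh directory, or authorized_keys file that is writable by group or others, which is the write-bit condition sshd's StrictModes rejects. The function name is hypothetical.

StrictModes also checks ownership, which this sketch does not.

```python
import os
import stat


def ssh_permission_problems(home):
    """Report missing SSH files, or ones writable by group/others, under `home`."""
    problems = []
    checks = [
        (home, "home directory"),
        (os.path.join(home, ".ssh"), ".ssh directory"),
        (os.path.join(home, ".ssh", "authorized_keys"), "authorized_keys"),
    ]
    for path, label in checks:
        try:
            mode = os.stat(path).st_mode
        except FileNotFoundError:
            problems.append(f"{label} is missing: {path}")
            continue
        if mode & (stat.S_IWGRP | stat.S_IWOTH):
            problems.append(f"{label} is writable by group or others ({stat.filemode(mode)})")
    return problems
```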

7. Explain how you would diagnose and resolve a problem where a newly installed kernel module is causing system instability or crashes.

To diagnose system instability after installing a new kernel module, I'd first try to reproduce the issue consistently, then check the kernel messages (dmesg, /var/log/syslog) for errors or warnings related to the module. Next, I'd confirm whether it is indeed the culprit by unloading it with rmmod if possible, or by blacklisting it in /etc/modprobe.d/ and rebooting if not. I'd also run modinfo on the module to check its dependencies and the kernel version it was built for (vermagic). Finally, I'd check /proc/sys/kernel/tainted, which records whether out-of-tree or unsigned modules have been loaded.

If the module is the problem, I would inspect its source code for potential bugs or memory leaks. Kernel code cannot run under user-space tools like Valgrind, so I'd use the kernel's own debugging features in a test environment: KASAN (Kernel Address Sanitizer) for memory-safety bugs and kmemleak for leaks. Both require a kernel built with those options. If a bug is found, I'd fix it and rebuild the module. If no bug is apparent, I would consider compatibility issues with other hardware or software on the system, and consult the relevant documentation or forums for known issues.
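The value in /proc/sys/kernel/tainted is a bitmask. This Python sketch decodes it using the flag letters and meanings listed for bits 0 to 17 in the kernel's "Tainted kernels" documentation; the function name is hypothetical. Newer kernels define additional bits, which are reported here as unknown.

```python
# Bits 0-17 as described in the kernel's "Tainted kernels" documentation.
TAINT_FLAGS = [
    ("P", "proprietary module loaded"),
    ("F", "module force-loaded"),
    ("S", "kernel running on an out-of-specification system"),
    ("R", "module force-unloaded"),
    ("M", "processor reported a machine check exception"),
    ("B", "bad page referenced or unexpected page flags"),
    ("U", "taint requested by user space"),
    ("D", "kernel died recently (OOPS or BUG)"),
    ("A", "ACPI table overridden"),
    ("W", "kernel issued a warning"),
    ("C", "staging driver loaded"),
    ("I", "workaround for platform firmware bug applied"),
    ("O", "externally built (out-of-tree) module loaded"),
    ("E", "unsigned module loaded"),
    ("L", "soft lockup occurred"),
    ("K", "kernel live-patched"),
    ("X", "auxiliary taint (distribution-defined)"),
    ("T", "kernel built with the struct randomization plugin"),
]


def decode_taint(value):
    """Return (letter, meaning) for every bit set in a tainted bitmask."""
    flags = []
    bit = 0
    while value >> bit:
        if (value >> bit) & 1:
            flags.append(TAINT_FLAGS[bit] if bit < len(TAINT_FLAGS) else ("?", f"unknown bit {bit}"))
        bit += 1
    return flags
```

A value of 12288, for example, decodes to O and E, meaning an out-of-tree, unsigned module has been loaded. That fits the scenario of a newly installed third-party module.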

8. Describe your approach to troubleshooting a situation where a background process is consuming excessive CPU resources, impacting overall system performance.

My approach to troubleshooting high CPU usage by a background process involves several steps. First, I'd identify the process with top, htop, or ps (e.g., ps -eo pid,comm,%cpu --sort=-%cpu | head). Once it's identified, I'd review the process's logs and configuration to understand its function and any recent changes. I'd then profile it: perf top -p <pid> or perf record -g -p <pid> shows which functions consume the most CPU time, while strace -c -p <pid> summarizes which system calls it is making.

Next, I would consider potential causes, such as inefficient algorithms, infinite loops, excessive I/O, or resource contention. Based on the profiling data, I would optimize the code, lower the process's priority with renice, cap its CPU usage with a cgroup quota (e.g., systemd's CPUQuota= setting for a service), or reschedule it to off-peak hours. If the issue persists, I would investigate external factors such as database queries, network activity, or hardware limitations. I'd monitor the system closely after any change to ensure the issue is resolved and doesn't recur.
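The %CPU figure that top shows for a process can be reproduced from /proc/<pid>/stat. Take utime plus stime (fields 14 and 15, in clock ticks) in two snapshots and divide the difference by the elapsed time.

This Python sketch does exactly that; the function names are hypothetical. It splits after the last ) because the command name in field 2 may itself contain spaces or parentheses.

```python
import os
import time


def cpu_ticks(stat_text):
    """Return utime + stime (clock ticks) from one /proc/<pid>/stat line."""
    # Fields from 3 onwards follow the last ')' that closes the command name.
    rest = stat_text.rpartition(")")[2].split()
    utime, stime = int(rest[11]), int(rest[12])  # fields 14 and 15
    return utime + stime


def cpu_percent(before, after, interval_s, clk_tck=None):
    """CPU usage between two snapshots; 100.0 means one full CPU."""
    if clk_tck is None:
        clk_tck = os.sysconf("SC_CLK_TCK")
    seconds = (cpu_ticks(after) - cpu_ticks(before)) / clk_tck
    return 100.0 * seconds / interval_s


def measure(pid, interval_s=2.0):
    """Sample /proc/<pid>/stat twice and return the process's CPU usage (Linux only)."""
    path = f"/proc/{pid}/stat"
    with open(path) as f:
        before = f.read()
    time.sleep(interval_s)
    with open(path) as f:
        after = f.read()
    return cpu_percent(before, after, interval_s)
```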

9. A scheduled cron job is failing to execute as expected. What steps would you take to determine the cause of the failure and ensure the job runs successfully?

First, I'd check the cron job configuration with crontab -l to verify the schedule is correct and hasn't been accidentally modified. Next, I'd examine system logs (e.g., /var/log/syslog, /var/log/cron) for error messages related to the job's execution. These often reveal why the job failed, such as incorrect file paths, missing dependencies, or permission issues. I would also capture what the job writes to standard output and standard error by redirecting it to a file, for example with a crontab entry such as * * * * * /path/to/script.sh > /tmp/cron.log 2>&1.

To ensure the job runs successfully, I would manually execute the script as the same user the cron job runs as (sudo -u <user> /path/to/script.sh) to reproduce the error and debug it in real time. Because cron runs jobs with a minimal environment (typically a PATH of only /usr/bin:/bin and no interactive shell profile), I'd also test with a stripped-down environment and make the script use absolute paths. I'd add error handling and logging within the script itself to provide more detailed information in case of future failures. Finally, I'd double-check file permissions and ensure all necessary dependencies are installed and accessible to the user running the cron job.
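Many "works in my shell, fails in cron" problems come from cron's sparse environment. This sketch (run_like_cron is a hypothetical helper) runs a command through /bin/sh -c with only HOME, SHELL, and PATH set, approximating common cron defaults. The exact variables differ between cron implementations, so treat them as an assumption rather than a faithful copy of any particular cron.

```python
import os
import subprocess


def run_like_cron(command, shell="/bin/sh", path="/usr/bin:/bin"):
    """Run `command` via `sh -c` with a tiny, cron-like environment.

    Assumption: HOME, SHELL and PATH approximate what many cron daemons set;
    nothing from the caller's environment (aliases, profile, PATH) leaks in.
    """
    env = {
        "HOME": os.path.expanduser("~"),
        "SHELL": shell,
        "PATH": path,
    }
    completed = subprocess.run(
        [shell, "-c", command],
        env=env,
        capture_output=True,
        text=True,
    )
    return completed.returncode, completed.stdout, completed.stderr
```

Running it as the job's owner (for example, under sudo -u <user>) reproduces both the user context and the environment. A rough shell equivalent is env -i HOME=/home/<user> PATH=/usr/bin:/bin /bin/sh -c '/path/to/script.sh'.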

10. How would you investigate a scenario where a Linux server is experiencing high disk I/O, but no specific process appears to be responsible? Consider different monitoring tools.

To investigate high disk I/O on a Linux server when no single process seems responsible, I'd start with iotop to get a real-time view of I/O usage by process (iotop -oa shows only processes actually doing I/O and accumulates totals over time). If iotop doesn't pinpoint a specific process, I'd suspect kernel activity such as filesystem journaling (jbd2), writeback (kworker threads), or swapping. I'd then use iostat -xz 1 to analyze per-device utilization, including %util (percentage of time the device is busy) and await (average time, in milliseconds, for I/O requests to be served). I'd also check the pswpin and pswpout counters in /proc/vmstat to detect swapping activity, which can generate heavy disk I/O. Finally, perf can trace block-layer events (for example, perf record -e block:block_rq_issue -a) to attribute I/O requests to the processes or kernel threads that issued them.

If the above steps don't reveal the cause, I would look for resource contention or underlying storage issues. I'd examine system logs (/var/log/syslog, /var/log/kern.log) for disk errors or related warnings. I'd consider whether any scheduled tasks (cron jobs) or system daemons are intermittently causing the high I/O. Network file systems (NFS) or other remote storage can also be a source, so I'd list remote mounts with findmnt -t nfs,nfs4, check per-mount statistics with nfsiostat, and verify network connectivity to the storage server. For specific filesystems (e.g., XFS, ext4), filesystem-specific tools might offer more granular diagnostics. If LVM is in use, I'd map the dm-N devices reported by iostat back to their logical volumes (for example, with lsblk -o NAME,KNAME) so the load can be attributed to a specific volume.
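The %util and await figures in iostat are derived from counters in /proc/diskstats, and reproducing the calculation makes the numbers easier to interpret. A minimal sketch with hypothetical helper names: %util is the change in the "milliseconds spent doing I/Os" counter divided by the elapsed time, and await is the time spent on reads and writes divided by the number of completed reads and writes, roughly as iostat computes them. It ignores the discard and flush counters that newer kernels append.

```python
import os
import time


def read_diskstats(text):
    """Parse /proc/diskstats into {device: (ios, io_ms, busy_ms)}."""
    stats = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 14:
            continue
        name = fields[2]
        reads, read_ms = int(fields[3]), int(fields[6])      # reads completed, ms reading
        writes, write_ms = int(fields[7]), int(fields[10])   # writes completed, ms writing
        busy_ms = int(fields[12])                             # ms spent doing I/O
        stats[name] = (reads + writes, read_ms + write_ms, busy_ms)
    return stats


def util_and_await(before, after, interval_ms):
    """Return {device: (%util, await_ms)} between two snapshots."""
    result = {}
    for name, (ios1, io_ms1, busy1) in after.items():
        if name not in before:
            continue
        ios0, io_ms0, busy0 = before[name]
        completed = ios1 - ios0
        util = 100.0 * (busy1 - busy0) / interval_ms
        await_ms = (io_ms1 - io_ms0) / completed if completed else 0.0
        result[name] = (util, await_ms)
    return result


if __name__ == "__main__" and os.path.exists("/proc/diskstats"):
    start = time.monotonic()
    with open("/proc/diskstats") as f:
        first = read_diskstats(f.read())
    time.sleep(1)
    with open("/proc/diskstats") as f:
        second = read_diskstats(f.read())
    elapsed_ms = (time.monotonic() - start) * 1000
    for dev, (util, wait) in sorted(util_and_await(first, second, elapsed_ms).items()):
        print(f"{dev:<12} util={util:5.1f}%  await={wait:.2f} ms")
```

A device near 100% util with low await is busy but coping; high await on its own suggests requests are queuing behind slow storage.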

11. Explain how you would troubleshoot a situation where a virtual machine (VM) running on a Linux host is experiencing network connectivity issues that the other VMs are not.

First, I'd verify the VM's network configuration (IP address, subnet mask, gateway, DNS) using ip addr, ip route, and /etc/resolv.conf, and ping the gateway and other VMs on the same network to check basic reachability. Next, I'd examine the VM's firewall rules using iptables -L (or nft list ruleset) or firewall-cmd --list-all to ensure traffic isn't blocked. I would also check the VM's network interface configuration files (e.g., /etc/network/interfaces or files in /etc/sysconfig/network-scripts/) for errors, and confirm the interface is up with ip link show, bringing it up with ip link set <interface> up if needed.

If all of that looks correct, I'd move to the Linux host: confirm that the VM's virtual interface (e.g., a tap or vnet device) exists and is attached to the correct bridge (bridge link show), check for routing, bridging, or firewall rules on the host that affect only that VM, and look for a duplicated MAC or IP address. Finally, I'd review the hypervisor's configuration for that VM's network adapter (for example, with virsh domiflist <domain> on libvirt/KVM) to make sure it is connected to the intended network.
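Part of the first check, confirming that the IP address, subnet mask, and gateway are consistent with each other, is easy to automate with Python's standard ipaddress module. A minimal sketch with a hypothetical helper and example addresses; in practice the values would come from ip -4 addr show and ip route show default inside the VM.

```python
import ipaddress


def check_ipv4_config(interface_cidr, gateway):
    """Return a list of problems found in a static IPv4 configuration."""
    problems = []
    iface = ipaddress.ip_interface(interface_cidr)   # e.g. "192.168.10.25/24"
    net = iface.network
    gw = ipaddress.ip_address(gateway)
    if gw not in net:
        problems.append(f"gateway {gw} is outside {net}")
    if iface.ip == gw:
        problems.append("interface address equals the gateway")
    # /31 and /32 networks have no separate network/broadcast addresses.
    if net.prefixlen < 31 and iface.ip in (net.network_address, net.broadcast_address):
        problems.append(f"{iface.ip} is the network or broadcast address of {net}")
    return problems
```

For example, check_ipv4_config("192.168.10.25/24", "192.168.1.1") flags the gateway as unreachable on-link, a typo that would break only that one VM while its neighbors work fine.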

12. Describe your approach to diagnosing and resolving a problem where a systemd service fails to start automatically at boot time. Consider dependency issues.

When a systemd service fails to start automatically at boot, I first check the service status using systemctl status <service_name>, which shows recent log lines and the reason for the failure, and confirm the service is actually enabled with systemctl is-enabled <service_name>. I then examine the logs for the current boot (journalctl -b -u <service_name>) for more detailed information. Dependency issues are a common cause, so I'd inspect the Requires=, Wants=, After=, and Before= directives in the service unit file. Note that Requires= only pulls another unit in; it does not order startup, so it usually needs a matching After=. If a required service fails to start, the dependent service will fail too.

To resolve dependency problems, I ensure that all dependencies are correctly configured and enabled. The systemctl list-dependencies <service_name> command is invaluable for understanding the service's dependency tree, and systemd-analyze critical-chain <service_name> shows what the service waited on during boot. If a circular ordering dependency exists, systemd logs a "Found ordering cycle" message and breaks the cycle by dropping one of the jobs, so the unit files need modification: I carefully adjust the After= and Requires= directives to remove the loop. If the service needs network access, it should declare both Wants=network-online.target and After=network-online.target, because After= alone does not pull the target in. Finally, I'd try manually starting each dependency in the correct order to pinpoint the exact point of failure and address that specific issue before re-enabling the main service.
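systemd detects ordering cycles itself, but the idea behind the check is a depth-first search over the After= graph. This sketch uses hypothetical unit names and takes the graph as a plain dictionary (in practice it could be filled from systemctl show -p After <unit>); it returns the first cycle it finds, which points to the After= or Requires= lines worth revisiting.

```python
def find_ordering_cycle(after):
    """Return one cycle in an After= graph as a list of units, or None.

    `after` maps a unit name to the list of units it is ordered after.
    """
    WHITE, GREY, BLACK = 0, 1, 2   # unvisited, on the current path, finished
    color = {}
    path = []

    def visit(unit):
        color[unit] = GREY
        path.append(unit)
        for dep in after.get(unit, ()):
            state = color.get(dep, WHITE)
            if state == GREY:
                # dep is already on the current path: we walked back into it.
                return path[path.index(dep):] + [dep]
            if state == WHITE:
                cycle = visit(dep)
                if cycle:
                    return cycle
        path.pop()
        color[unit] = BLACK
        return None

    for unit in list(after):
        if color.get(unit, WHITE) == WHITE:
            cycle = visit(unit)
            if cycle:
                return cycle
    return None
```

With {"a.service": ["b.service"], "b.service": ["c.service"], "c.service": ["a.service"]} it returns the loop a.service, b.service, c.service, a.service; removing any one of those edges breaks it.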

13. You suspect a security breach on your Linux server. What steps would you take to investigate the incident, identify the attacker's entry point, and mitigate the damage?

First, isolate the server to prevent further damage by disconnecting it from the network, but avoid rebooting it, because volatile evidence such as running processes and open connections would be lost. Then, gather evidence: examine system logs (/var/log/auth.log, /var/log/syslog, /var/log/secure), web server logs (if applicable), and application logs. Check for unusual processes and network connections using tools like ps, top, and netstat or ss. Review user accounts for any unauthorized or recently created accounts, check authorized_keys files for unfamiliar SSH keys, and check .bash_history files for unusual commands (keeping in mind that an attacker may have cleared them).

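To identify the entry point, analyze the logs for suspicious or failed SSH login attempts and for signs of exploited vulnerabilities (for example, unusual requests in web server logs). Check for unauthorized file modifications using find with time filters (e.g., find / -xdev -mtime -3 lists files modified in the last three days) or the package manager's integrity checks (rpm -Va on RPM-based systems, debsums on Debian-based systems). Once the entry point is identified, patch the vulnerability, remove any malware or backdoors, and preferably restore the system from a clean backup or rebuild it, since a compromised system can't be fully trusted. Change all compromised passwords, rotate SSH keys and other credentials, and implement multi-factor authentication. Finally, analyze the root cause to prevent future incidents and improve security measures, such as implementing intrusion detection systems and regular security audits.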
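A quick way to review failed SSH logins is to count them per source address and per attempted user name. A minimal sketch, assuming OpenSSH's usual "Failed password for ... from ... port ..." messages in /var/log/auth.log (Debian/Ubuntu) or /var/log/secure (RHEL); key-based failures and other message types are not counted, and failed_ssh_logins is a hypothetical helper. Reading these logs normally requires root.

```python
import re
from collections import Counter

# Matches both "Failed password for root from ..." and
# "Failed password for invalid user admin from ...".
FAILED_RE = re.compile(
    r"Failed password for (?:invalid user )?(?P<user>\S+) from (?P<ip>\S+) port \d+"
)


def failed_ssh_logins(lines):
    """Count failed SSH password attempts per source IP and per user name."""
    by_ip, by_user = Counter(), Counter()
    for line in lines:
        match = FAILED_RE.search(line)
        if match:
            by_ip[match.group("ip")] += 1
            by_user[match.group("user")] += 1
    return by_ip, by_user


if __name__ == "__main__":
    for path in ("/var/log/auth.log", "/var/log/secure"):
        try:
            with open(path, errors="replace") as log:
                ips, users = failed_ssh_logins(log)
        except OSError:
            continue  # missing on this distribution, or not readable
        print(path, ips.most_common(10), users.most_common(10))
```

A burst of failures from one address followed by an "Accepted" line from the same address is a strong hint about the entry point.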

14. How would you troubleshoot a situation where a shared library is causing conflicts between different applications on a Linux system? Consider versioning issues.

When a shared library causes conflicts between applications, especially due to versioning, I'd start by running ldd on each affected application to see which shared libraries it loads and the exact path each one resolves to. Then, I'd determine which version each path actually is: ls -l shows which versioned file a symlink such as libfoo.so.1 points to, and objdump -p or readelf -d shows the library's SONAME.

To resolve the conflicts, I'd consider these approaches:

- Symbol and SONAME versioning: A versioned SONAME (e.g., libfoo.so.1 vs. libfoo.so.2) lets incompatible major versions be installed side by side, and symbol versioning lets a single library keep old symbol versions for existing binaries while adding new ones.

- Using different library paths: Setting LD_LIBRARY_PATH for individual applications (for example, in a wrapper script) to point to the correct library version. Caution: use this carefully, since it affects every library the program loads and is inherited by its child processes.

- Containerization: Isolating applications and their dependencies in containers (e.g., Docker) to prevent conflicts.

- Static linking: Linking the library statically into each application, eliminating the need for a shared library altogether (if feasible and the license allows).
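To find which library resolves to different files for different applications, the ldd output of each program can be compared. A minimal sketch, assuming the output was already captured (for example with subprocess.run(["ldd", path], capture_output=True, text=True).stdout); the function names and library names are hypothetical. Note that ldd may execute the program it inspects, so it should not be run on untrusted binaries.

```python
import re

# "\tlibfoo.so.1 => /usr/lib/libfoo.so.1 (0x00007f...)" or "libbar.so.2 => not found"
LDD_RE = re.compile(r"^\s*(\S+)\s+=>\s+(.+?)(?:\s+\(0x[0-9a-f]+\))?\s*$")


def parse_ldd(output):
    """Map each library name in `ldd` output to the path it resolved to."""
    libs = {}
    for line in output.splitlines():
        match = LDD_RE.match(line)
        if match:  # lines without "=>" (vdso, the dynamic loader) are skipped
            libs[match.group(1)] = match.group(2)
    return libs


def conflicting_libraries(ldd_by_app):
    """Return {library: {path: [apps]}} for libraries resolved to more than one path."""
    seen = {}
    for app, output in ldd_by_app.items():
        for lib, path in parse_ldd(output).items():
            seen.setdefault(lib, {}).setdefault(path, []).append(app)
    return {lib: paths for lib, paths in seen.items() if len(paths) > 1}
```

Any library reported here is a candidate for the fixes listed above, and a "not found" entry shows up as its own path value, so missing libraries surface too.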

15. Explain how you would diagnose and resolve a problem where a Linux server is experiencing frequent kernel panics. What tools and techniques would you use to gather information?

To diagnose frequent kernel panics on a Linux server, I'd gather information with several tools and techniques. First, I would examine the kernel messages from the boot that crashed. dmesg only covers the current boot, so for the previous one I'd use /var/log/kern.log, /var/log/syslog, or journalctl -k -b -1 (which requires a persistent journal). These logs often contain valuable clues about the cause of the panic, such as driver issues, hardware errors, or memory corruption, but the final panic message frequently never reaches disk. That is why I would also configure kdump to capture a memory dump (vmcore) when a kernel panic occurs. This allows offline analysis using tools like crash or gdb to pinpoint the exact code location and state that triggered the panic.

Next, I'd analyze the kernel crash dump using crash or gdb, examining the call stack, registers, and other relevant memory regions to identify the root cause. Potential culprits include faulty hardware (memory, CPU), buggy kernel modules or drivers, and kernel configuration issues. To rule out hardware problems, I'd run memory tests (e.g., Memtest86+) and monitor CPU temperatures and voltages. If a specific driver is suspected, I'd try updating or removing it. Reviewing recent system changes, such as kernel updates or configuration modifications, can also help identify potential causes. Finally, I'd enable the magic SysRq key (kernel.sysrq=1) so that a system that hangs without panicking can still be forced to crash and produce a dump; separately, echo c > /proc/sysrq-trigger (during a maintenance window, since it crashes the machine) is a standard way to test that kdump actually captures a vmcore.
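kdump only helps if a crash kernel is actually loaded when the next panic happens, so a quick readiness check is worthwhile. This sketch (hypothetical helper names) checks two things: that /proc/cmdline reserves memory with a crashkernel= parameter, and that /sys/kernel/kexec_crash_loaded reports 1. It does not verify the dump target or the kdump service configuration.

```python
import re


def kdump_readiness(cmdline, kexec_crash_loaded):
    """Return a list of reasons kdump would not capture a vmcore."""
    problems = []
    if not re.search(r"(?:^|\s)crashkernel=\S+", cmdline):
        problems.append("no crashkernel= reservation on the kernel command line")
    if kexec_crash_loaded.strip() != "1":
        problems.append("no crash kernel loaded (kexec_crash_loaded != 1)")
    return problems


def read_text(path, default=""):
    """Read a small /proc or /sys file, returning `default` if it is missing."""
    try:
        with open(path) as handle:
            return handle.read()
    except OSError:
        return default


if __name__ == "__main__":
    issues = kdump_readiness(
        read_text("/proc/cmdline"),
        read_text("/sys/kernel/kexec_crash_loaded", "0"),
    )
    print("kdump looks ready" if not issues else "\n".join(issues))
```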

16. Describe your approach to troubleshooting a situation where a database server on a Linux system is experiencing performance degradation due to slow queries. Consider profiling tools.

When troubleshooting slow database queries on Linux, I'd start by identifying the problematic queries using mysqladmin processlist (for MySQL) or the pg_stat_activity view (for PostgreSQL). I would also look at the database server's slow query log, if enabled. After identifying slow queries, I'd use EXPLAIN (or EXPLAIN ANALYZE, which actually executes the query and reports real timings) to analyze the query execution plan and identify bottlenecks like missing indexes or full table scans. pt-query-digest (MySQL) or pgBadger (PostgreSQL) can aggregate slow query logs for easier analysis.

On the system level, I'd monitor resource usage using tools like top, vmstat, and iostat to check for CPU, memory, or disk I/O bottlenecks, and check network latency between the application and the database with ping or traceroute. If system resources are constrained, I'd investigate the root cause and consider adding more resources or tuning the database configuration, for example increasing innodb_buffer_pool_size (MySQL) or shared_buffers (PostgreSQL) so more of the working set stays in memory. Finally, while a slow query is running, I'd attach strace -f -p <pid> to the database server process (for PostgreSQL, the backend process handling that query) to see which system calls it is waiting on.
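Tools like pt-query-digest group queries that differ only in their literal values before ranking them. The sketch below shows that idea in a much simplified form (it is not pt-query-digest's actual fingerprinting algorithm): it assumes the slow log has already been parsed into (query, duration_seconds) pairs, replaces string and numeric literals with ?, and ranks the resulting fingerprints by total time. The helper names are hypothetical.

```python
import re
from collections import defaultdict


def fingerprint(query):
    """Normalize a SQL statement so queries differing only in literals group together."""
    q = query.strip().lower()
    q = re.sub(r"'(?:[^'\\]|\\.)*'", "?", q)        # string literals
    q = re.sub(r"\b\d+(?:\.\d+)?\b", "?", q)         # numeric literals
    q = re.sub(r"\s+", " ", q)                       # collapse whitespace
    q = re.sub(r"in \((?:\?,\s*)*\?\)", "in (?)", q)  # IN lists of any length
    return q


def summarize(entries):
    """Return [(fingerprint, count, total_seconds)] sorted by total time, descending."""
    totals = defaultdict(lambda: [0, 0.0])
    for query, seconds in entries:
        bucket = totals[fingerprint(query)]
        bucket[0] += 1
        bucket[1] += seconds
    rows = [(fp, count, total) for fp, (count, total) in totals.items()]
    return sorted(rows, key=lambda row: row[2], reverse=True)
```

The fingerprint at the top of the list is usually the best candidate for EXPLAIN, since fixing it saves the most total time even if no single execution looks dramatic.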

17. How would you investigate a scenario where a Linux server is sending out spam emails? What steps would you take to identify the compromised account or process?

To investigate a Linux server sending spam, I'd start by examining the mail logs (/var/log/mail.log or similar) for patterns, sending IPs, and timestamps related to the spam. I'd use tools like grep, awk, and tail to filter and analyze these logs. I'd also check the mail queue using mailq or postqueue -p to identify the messages and their senders.

Next, I'd try to identify the compromised account or process. On a Postfix server, sasl_username= entries in the log show which authenticated account submitted each message, while pickup entries show the local uid behind mail injected with the sendmail command (a web server uid often points to a compromised script). I'd also look for suspicious cron jobs, examine running processes with top or ps aux for unusual resource usage, and review user login history (/var/log/auth.log or /var/log/secure) for unauthorized access. Tools like netstat or ss (for example, ss -tnp '( dport = :25 )') can identify processes connecting directly to external mail servers. I'd also check for recently installed packages. Once the account or process is identified, I'd secure it (e.g., changing passwords or disabling the account) and investigate how the compromise occurred.
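Counting those two kinds of Postfix log lines quickly shows where the spam is coming from. A minimal sketch, assuming Postfix's default log format: smtpd lines carrying sasl_username= (mail submitted by an authenticated account) and pickup lines carrying uid= and from=<...> (mail injected locally). The regular expressions, helper name, and sample lines are illustrative; other MTAs log differently.

```python
import re
from collections import Counter

SASL_RE = re.compile(r"sasl_username=(?P<user>[^,\s]+)")
PICKUP_RE = re.compile(
    r"postfix/pickup\[\d+\]: \w+: uid=(?P<uid>\d+) from=<(?P<sender>[^>]*)>"
)


def mail_sources(lines):
    """Count messages per authenticated account and per local (uid, sender)."""
    authenticated, local = Counter(), Counter()
    for line in lines:
        match = SASL_RE.search(line)
        if match:
            authenticated[match.group("user")] += 1
            continue
        match = PICKUP_RE.search(line)
        if match:
            local[(match.group("uid"), match.group("sender"))] += 1
    return authenticated, local
```

A uid from the local counts can be mapped back to an account with getent passwd <uid>; one account or uid dominating the counts is usually the compromised one.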

18. Explain how you would troubleshoot a situation where a containerized application running on a Linux system is failing to start due to resource constraints. Consider cgroups.

First, I'd check the container logs (docker logs <container_id>) and system logs (e.g., journalctl) for error messages indicating resource exhaustion (CPU, memory, disk I/O). Then, I'd use docker stats or kubectl top (if using Kubernetes) to monitor the resource usage of the failing container and other containers on the same host. docker inspect <container_id> shows the configured limits (for example, HostConfig.Memory and HostConfig.NanoCpus) and whether the container was killed for exceeding its memory limit (State.OOMKilled). If the container is hitting its configured limits, I would adjust them in the container orchestration system (Docker Compose, Kubernetes resource requests and limits, etc.) or in the docker run command. I would also check the host's resource usage with tools like top, htop, or free -m to identify overall system resource pressure. If the host itself is constrained, I would consider scaling up the host, moving containers to other hosts, or optimizing resource usage by other applications.

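To investigate cgroup limits directly, I would navigate to the container's cgroup directory. On cgroup v1 hosts this is typically /sys/fs/cgroup/memory/docker/<container_id> or /sys/fs/cgroup/cpu/docker/<container_id>, where memory.limit_in_bytes shows the memory limit, cpu.cfs_quota_us shows the hard CPU cap, and cpu.shares shows the relative CPU weight. On cgroup v2 hosts using Docker's systemd cgroup driver, the directory is typically /sys/fs/cgroup/system.slice/docker-<container_id>.scope, and the equivalent files are memory.max and cpu.max. Misconfigured or unexpected values in these files can point to the root cause. It's also possible that the kernel OOM-killed the container's process, which shows up in dmesg as "Killed process" messages.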
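On cgroup v2 hosts, the limits that actually apply to a container can be read straight from its cgroup directory. The sketch below (hypothetical helper names) parses the three files most relevant here: memory.max (a byte count, or max for unlimited), cpu.max (a quota and a period in microseconds, or max), and memory.events, whose oom_kill counter records how many processes in the cgroup were OOM-killed. It assumes cgroup v2; the v1 files have different names and formats.

```python
import os


def parse_memory_max(text):
    """Return the memory limit in bytes, or None when unlimited ('max')."""
    value = text.strip()
    return None if value == "max" else int(value)


def parse_cpu_max(text):
    """Return the CPU limit as a number of CPUs, or None when unlimited."""
    quota, period = text.split()          # e.g. "50000 100000" or "max 100000"
    return None if quota == "max" else int(quota) / int(period)


def oom_kill_count(memory_events_text):
    """Return the oom_kill counter from a memory.events file."""
    for line in memory_events_text.splitlines():
        key, _, value = line.partition(" ")
        if key == "oom_kill":
            return int(value)
    return 0


def container_limits(cgroup_dir):
    """Read the limits for one cgroup v2 directory (path supplied by the caller)."""
    def read(name):
        with open(os.path.join(cgroup_dir, name)) as handle:
            return handle.read()

    return {
        "memory_limit_bytes": parse_memory_max(read("memory.max")),
        "cpu_limit": parse_cpu_max(read("cpu.max")),
        "oom_kills": oom_kill_count(read("memory.events")),
    }
```

A cpu_limit of 0.5 means the container may use half of one CPU per period; a non-zero oom_kills count confirms that the memory limit, not the application, is what keeps stopping it.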

19. Describe your approach to diagnosing and resolving a problem where a Linux server is experiencing high network latency. What tools and techniques would you use to identify the bottleneck?

To diagnose high network latency on a Linux server, I'd start by confirming the issue with ping or traceroute to different destinations, both internal and external. I'd then use tools to identify the bottleneck. tcpdump or Wireshark can capture network traffic for analysis, looking for retransmissions, delays, or unusual packet sizes. iftop or nload helps monitor network interface utilization to see if saturation is occurring. ethtool can check for interface errors or speed/duplex mismatches.

Further investigation would involve examining server resource usage with tools like top or htop to rule out CPU or memory contention affecting network performance. netstat or ss can reveal established connections and their states, helping to identify specific applications or hosts contributing to the latency. I'd also check system logs (/var/log/syslog, /var/log/kern.log) for relevant error messages. If the issue persists, I'd analyze the network path, checking switches and routers for congestion or misconfiguration using their respective monitoring tools or command-line interfaces.
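Because retransmissions are one of the clearest signs of a lossy or congested path, it can help to measure how often they happen across the whole host. The sketch below (hypothetical helper names) reads the TCP counters from /proc/net/snmp twice and computes the share of sent segments that were retransmissions during the interval. It is a coarse, host-wide figure; tcpdump or ss -ti is still needed to find which connections are affected.

```python
import os
import time


def parse_snmp_tcp(text):
    """Return the 'Tcp:' counters from /proc/net/snmp as a dict."""
    # The file has a header row and a value row for each protocol.
    rows = [line.split() for line in text.splitlines() if line.startswith("Tcp:")]
    header, values = rows[0][1:], rows[1][1:]
    return dict(zip(header, (int(v) for v in values)))


def retransmit_ratio(before, after):
    """Fraction of TCP segments sent between two snapshots that were retransmits."""
    sent = after["OutSegs"] - before["OutSegs"]
    retransmitted = after["RetransSegs"] - before["RetransSegs"]
    return retransmitted / sent if sent else 0.0


if __name__ == "__main__" and os.path.exists("/proc/net/snmp"):
    with open("/proc/net/snmp") as f:
        first = parse_snmp_tcp(f.read())
    time.sleep(1)
    with open("/proc/net/snmp") as f:
        second = parse_snmp_tcp(f.read())
    print(f"TCP retransmission rate: {100 * retransmit_ratio(first, second):.2f}%")
```

A rate that stays well above zero while latency is high points toward the network path rather than the server's CPU or memory.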

20. How would you troubleshoot a situation where a user is reporting that their files are being corrupted on a shared network file system (NFS)? Consider file locking issues.