When evaluating potential hires for Kafka-related roles, asking the right interview questions ensures you identify candidates with the necessary skills and experience. These questions are crucial for roles where understanding distributed systems, message processing, and real-time data streaming is critical.

In this post, we provide a comprehensive list of Kafka interview questions curated for different levels and scenarios to help you evaluate candidates effectively. Our collection includes questions for initiating interviews, assessing junior developers, understanding message processing, exploring distributed systems, and situational queries aimed at top-tier talent.

Using this guide can streamline your interview process and help you pinpoint the best candidates for your Kafka-related roles. Pair your interview process with our Kafka skills test to pre-screen candidates and ensure you interview only the best.


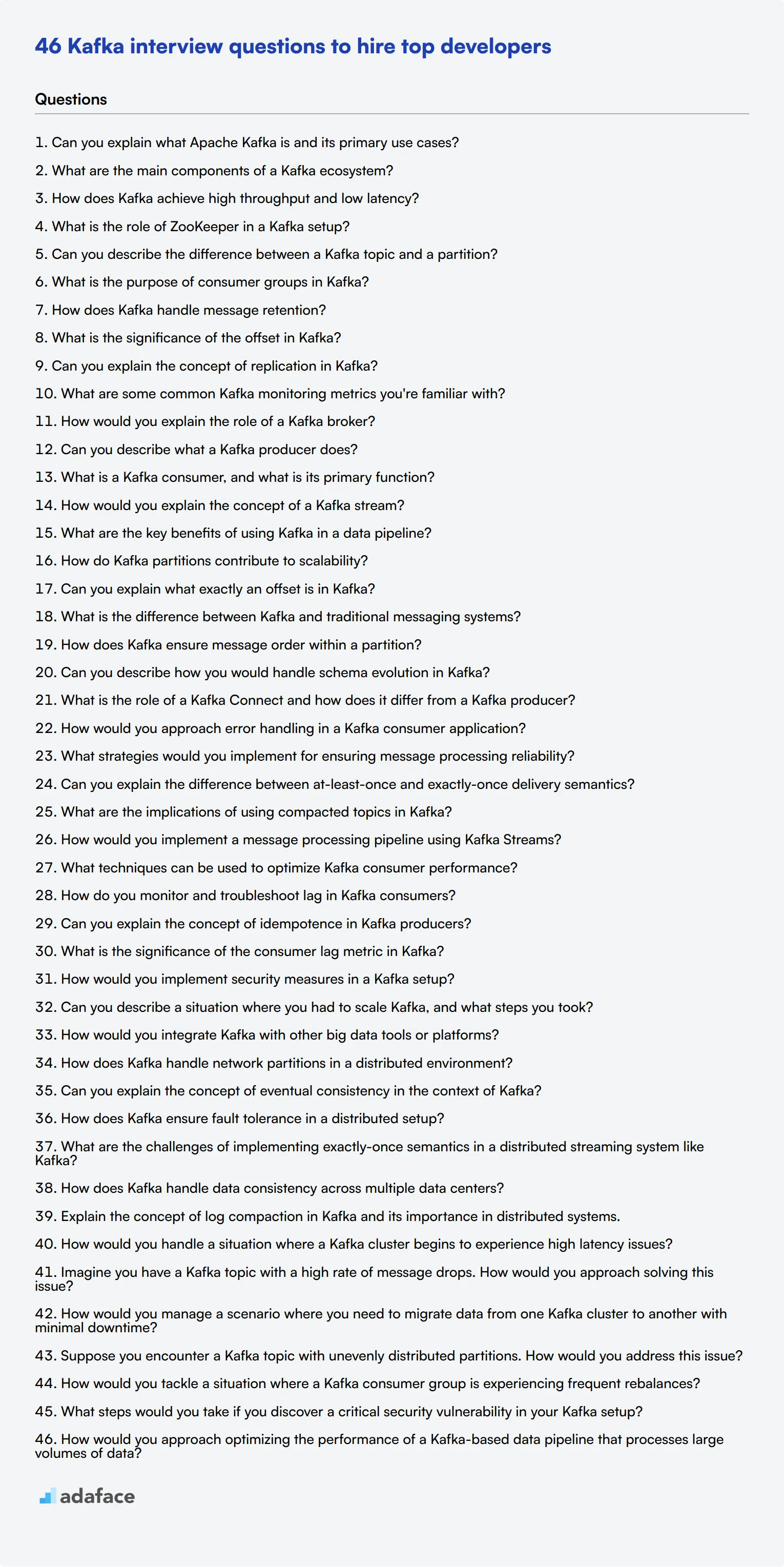

10 Kafka interview questions to initiate the interview

To kickstart your interview process for a Kafka engineer position, use these straightforward questions. They're designed to gauge a candidate's basic understanding of Kafka and its core concepts, helping you quickly assess their foundational knowledge.

- Can you explain what Apache Kafka is and its primary use cases?

- What are the main components of a Kafka ecosystem?

- How does Kafka achieve high throughput and low latency?

- What is the role of ZooKeeper in a Kafka setup, and what replaces it in KRaft mode?

- Can you describe the difference between a Kafka topic and a partition?

- What is the purpose of consumer groups in Kafka?

- How does Kafka handle message retention?

- What is the significance of the offset in Kafka?

- Can you explain the concept of replication in Kafka?

- What are some common Kafka monitoring metrics you're familiar with?

8 Kafka interview questions and answers to evaluate junior developers

To evaluate whether junior developers have a foundational understanding of Kafka, consider using these specific interview questions. They are designed to gauge the candidate's grasp of essential concepts without diving too deep into technical specifics, which makes them well suited to determining whether applicants are ready to grow in your team.

1. How would you explain the role of a Kafka broker?

A Kafka broker is essentially a server that stores and serves Kafka messages. It handles all the data exchange between producers and consumers, making sure the data flows smoothly and efficiently.

An ideal candidate should mention that brokers are responsible for maintaining the data and ensuring fault tolerance within the Kafka cluster. Look for candidates who understand the importance of brokers in managing partitions and ensuring data replication.

2. Can you describe what a Kafka producer does?

A Kafka producer is responsible for sending records to Kafka topics. Producers push data to Kafka, ensuring the messages are delivered to the correct topic and partition.

Look for candidates who explain the various configurations and settings, such as acks and retries, that producers can use to ensure message delivery reliability. This indicates a deeper understanding of Kafka's robust data handling capabilities.

3. What is a Kafka consumer, and what is its primary function?

A Kafka consumer reads records from Kafka topics. Consumers subscribe to topics and process the messages in real time or in batches.

Candidates should mention how consumers can be part of consumer groups, so that the load is distributed and each partition is read by only one consumer within the group. Strong candidates will note that this is not the same as exactly-once processing, which depends on how offsets are committed. This highlights their understanding of Kafka's scalability and fault tolerance mechanisms.
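To make the consumer group idea concrete in an interview, a small model can help. The following is a minimal Python sketch, loosely modelled on round-robin assignment; it is not Kafka's actual assignor, and the function name `assign_round_robin` is hypothetical.

```python
def assign_round_robin(partitions, consumers):
    """Give every partition exactly one owner within a single consumer group."""
    members = sorted(consumers)            # deterministic order for the sketch
    assignment = {m: [] for m in members}  # idle consumers keep an empty list
    for i, partition in enumerate(sorted(partitions)):
        assignment[members[i % len(members)]].append(partition)
    return assignment


print(assign_round_robin(range(6), ["c1", "c2", "c3"]))
# {'c1': [0, 3], 'c2': [1, 4], 'c3': [2, 5]}
```

A useful follow-up is to ask what happens when there are more consumers than partitions: in this model the extra consumers simply receive nothing to read.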

4. How would you explain what Kafka Streams is?

Kafka Streams is a client library for building applications and microservices, where the input and output data are stored in Kafka clusters. It's used for processing real-time data streams.

Look for candidates who can discuss how Kafka Streams allows for complex transformations and aggregations on the data flowing through Kafka. This shows they understand how to utilize Kafka for real-time data processing.

5. What are the key benefits of using Kafka in a data pipeline?

Kafka offers high throughput, low latency, scalability, durability, and reliability, making it a strong choice for real-time data pipelines. It can handle large volumes of data and preserves the order of records within each partition.

Candidates should highlight Kafka's ability to integrate with various systems and its support for both stream processing and batch processing. This indicates they understand the versatility and robustness Kafka brings to data pipelines.

6. How do Kafka partitions contribute to scalability?

Kafka partitions allow topics to be split into several smaller parts, enabling parallel processing. Each partition can be hosted on different brokers, thus distributing the load.

Look for candidates who explain that partitions help in achieving higher throughput since multiple consumers can read from different partitions concurrently. This understanding is crucial for scaling Kafka clusters efficiently.
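The link between keys and partitions can be illustrated with a short sketch. This Python example assumes a simple "hash the key, take it modulo the partition count" scheme; it uses CRC32 as a stand-in hash (Kafka's default partitioner for keyed records uses murmur2), and `partition_for` is a hypothetical name.

```python
import zlib


def partition_for(key: bytes, num_partitions: int) -> int:
    """Map a record key to a partition index.

    Stand-in hash: CRC32 is used here for illustration only.
    """
    return zlib.crc32(key) % num_partitions


# The same key always lands on the same partition, which is what
# preserves per-key ordering while other keys are processed in parallel.
for key in [b"user-1", b"user-2", b"user-1"]:
    print(key, "->", partition_for(key, 6))
```

Candidates who understand this mapping can usually explain why changing the partition count moves existing keys to different partitions.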

7. Can you explain what exactly an offset is in Kafka?

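An offset in Kafka is a sequential identifier assigned to each record within a partition, and it is unique only within that partition. Consumers track their position by recording the offset of the next record to read, which lets them consume each partition in order.

Candidates should mention that committed offsets enable consumers to resume reading from where they left off after a failure or rebalance. Depending on when offsets are committed relative to processing, a restart can lead to reprocessed or skipped records, so look for candidates who connect offsets to delivery guarantees. This demonstrates an understanding of Kafka's reliability and fault tolerance features.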
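A toy model of a partition can make the offset discussion easier to follow. The sketch below is plain Python with hypothetical names (`PartitionLog`, `read_from`); it assumes the committed offset is the position of the next record to read and does not use any Kafka client.

```python
class PartitionLog:
    """An append-only list where the list index plays the role of the offset."""

    def __init__(self):
        self.records = []

    def append(self, value):
        self.records.append(value)
        return len(self.records) - 1  # offset assigned to the new record

    def read_from(self, offset):
        # Return (offset, value) pairs starting at the requested position.
        return list(enumerate(self.records))[offset:]


log = PartitionLog()
for value in ["a", "b", "c", "d"]:
    log.append(value)

committed = 0
for offset, value in log.read_from(committed)[:2]:
    committed = offset + 1  # commit the position of the next record to read

# Simulated restart: the consumer resumes from the committed offset.
resumed = [value for _, value in log.read_from(committed)]
print(resumed)  # ['c', 'd']
```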

8. What is the difference between Kafka and traditional messaging systems?

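Kafka is designed for high throughput, fault tolerance, and horizontal scalability, whereas many traditional message queues remove a message once it has been consumed and are harder to scale to very large volumes. Kafka stores messages in a distributed, partitioned log that is retained according to policy, so multiple consumers can read or replay the same data in parallel.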

Strong candidates should highlight Kafka's ability to handle both real-time and batch data, its support for stream processing, and its robust data retention policies. This shows they understand the advantages of using Kafka for modern data needs.

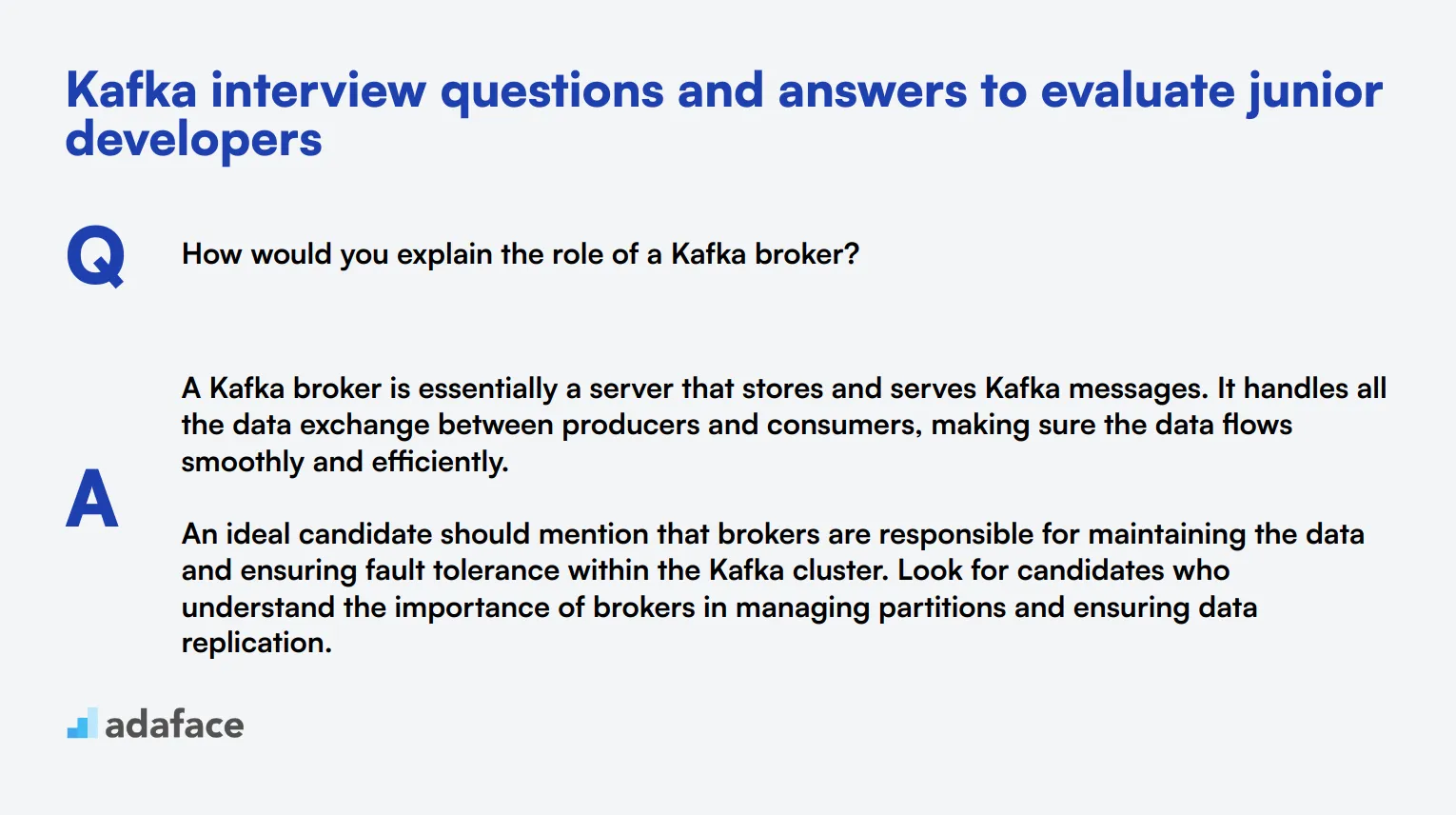

15 Kafka questions related to message processing

To evaluate whether candidates possess the necessary skills for effective message processing in Kafka, consider using this curated list of interview questions. These questions can be incorporated into your hiring process for roles such as a Kafka engineer or related positions to assess technical proficiency and problem-solving abilities.

- How does Kafka ensure message order within a partition?

- Can you describe how you would handle schema evolution in Kafka?

- What is the role of Kafka Connect, and how does it differ from a Kafka producer?

- How would you approach error handling in a Kafka consumer application?

- What strategies would you implement for ensuring message processing reliability?

- Can you explain the difference between at-least-once and exactly-once delivery semantics?

- What are the implications of using compacted topics in Kafka?

- How would you implement a message processing pipeline using Kafka Streams?

- What techniques can be used to optimize Kafka consumer performance?

- How do you monitor and troubleshoot lag in Kafka consumers?

- Can you explain the concept of idempotence in Kafka producers?

- What is the significance of the consumer lag metric in Kafka?

- How would you implement security measures in a Kafka setup?

- Can you describe a situation where you had to scale Kafka, and what steps you took?

- How would you integrate Kafka with other big data tools or platforms?

6 Kafka interview questions and answers related to distributed systems

When interviewing for Kafka roles related to distributed systems, it's crucial to assess candidates' understanding of complex architectures. These questions will help you gauge applicants' knowledge of Kafka's distributed nature and how it interacts with other systems. Use them to identify candidates who can navigate the intricacies of large-scale data processing and streaming.

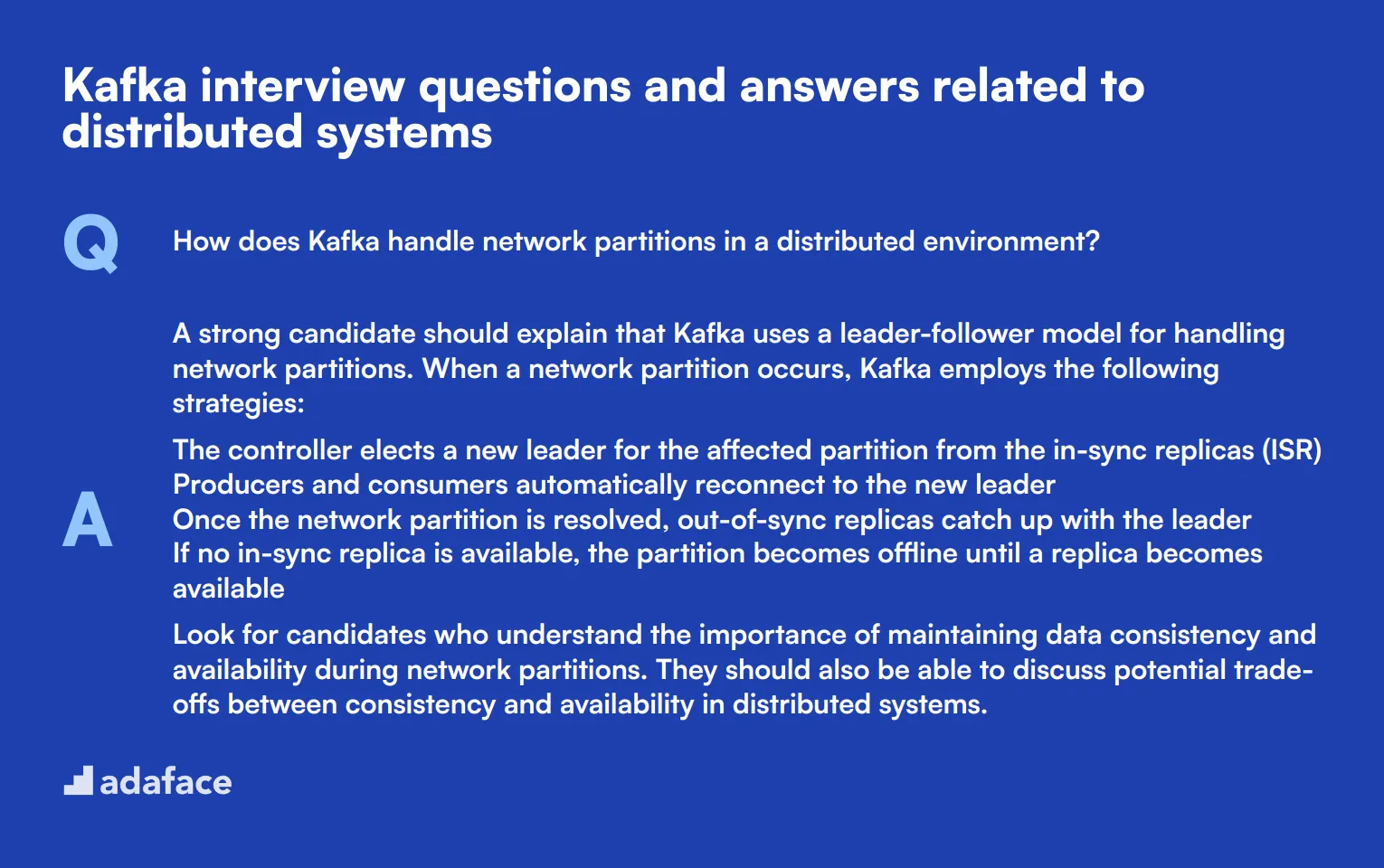

1. How does Kafka handle network partitions in a distributed environment?

A strong candidate should explain that Kafka uses a leader-follower model for handling network partitions. When a network partition occurs, Kafka employs the following strategies:

- The controller elects a new leader for the affected partition from the in-sync replicas (ISR)

- Producers and consumers automatically reconnect to the new leader

- Once the network partition is resolved, out-of-sync replicas catch up with the leader

- If no in-sync replica is available, the partition goes offline until one returns, unless unclean leader election is enabled, which trades possible data loss for availability

Look for candidates who understand the importance of maintaining data consistency and availability during network partitions. They should also be able to discuss potential trade-offs between consistency and availability in distributed systems.
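The election steps above can be sketched in a few lines. This is a simplified Python model, not the controller's real logic: it assumes every replica other than the failed leader is reachable, and `elect_leader` and `allow_unclean` are hypothetical names (the latter mirrors the idea behind the `unclean.leader.election.enable` setting).

```python
def elect_leader(replicas, isr, failed_leader, allow_unclean=False):
    """Pick a new leader for one partition after its leader fails."""
    # Prefer replicas that were fully caught up (in-sync) with the old leader.
    in_sync = [r for r in replicas if r in isr and r != failed_leader]
    if in_sync:
        return in_sync[0]
    if allow_unclean:
        # Availability over consistency: an out-of-sync replica may lack records.
        others = [r for r in replicas if r != failed_leader]
        return others[0] if others else None
    return None  # partition stays offline


print(elect_leader([1, 2, 3], isr={1, 3}, failed_leader=1))  # 3
print(elect_leader([1, 2, 3], isr={1}, failed_leader=1))     # None
```

The second call shows the consistency-versus-availability trade-off directly: with no in-sync replica left, the partition is unavailable unless the operator accepts possible data loss.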

2. Can you explain the concept of eventual consistency in the context of Kafka?

Eventual consistency in Kafka refers to the guarantee that all replicas of a partition will eventually have the same data, given enough time without updates. This concept is crucial for distributed systems like Kafka.

In Kafka:

- Producers write to the leader partition

- Followers asynchronously replicate data from the leader

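- Consumers read from the partition leader by default and only see records that have been replicated to all in-sync replicas; reading from followers is an optional configuration in newer Kafka versions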

- Over time, all replicas converge to the same state

A strong candidate should be able to explain the trade-offs between consistency and performance. They should also discuss scenarios where eventual consistency is acceptable and where stronger consistency guarantees might be necessary.
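One way to ground this discussion is the high watermark, the offset up to which records are visible to consumers. The Python sketch below assumes the high watermark is the smallest log end offset among the in-sync replicas; the broker names and the function `high_watermark` are illustrative only.

```python
def high_watermark(log_end_offsets, isr):
    """Smallest log end offset among the in-sync replicas."""
    return min(log_end_offsets[broker] for broker in isr)


log_end_offsets = {"leader": 10, "follower-1": 10, "follower-2": 7}

# While follower-2 is still in sync, consumers can only read up to offset 7.
print(high_watermark(log_end_offsets, {"leader", "follower-1", "follower-2"}))  # 7

# Once follower-2 falls out of the ISR, the watermark can advance.
print(high_watermark(log_end_offsets, {"leader", "follower-1"}))  # 10
```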

3. How does Kafka ensure fault tolerance in a distributed setup?

Kafka ensures fault tolerance through several mechanisms:

- Replication: Each topic partition is replicated across multiple brokers

- Leader-Follower Model: One broker is the leader for a partition, others are followers

- In-Sync Replicas (ISR): Kafka tracks which followers are up-to-date

- Automatic Leader Election: If a leader fails, a new one is elected from the ISR

- Rack Awareness: Replicas can be distributed across different racks or data centers

Look for candidates who can explain these concepts in detail and discuss how they contribute to Kafka's reliability. They should also be able to talk about potential failure scenarios and how Kafka handles them.
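A candidate who knows these mechanisms should be able to reason about when a write is accepted. The sketch below is a simplified Python model of how `acks=all` interacts with `min.insync.replicas`; the function name `write_accepted` is hypothetical, and real brokers report this condition through an error returned to the producer.

```python
def write_accepted(acks, isr_size, min_insync_replicas):
    """Decide whether a produce request can succeed for one partition."""
    if acks == "all":
        # Every in-sync replica must confirm, and there must be enough of them.
        return isr_size >= min_insync_replicas
    # With weaker acks settings, a live leader is all that is required.
    return isr_size >= 1


print(write_accepted("all", isr_size=3, min_insync_replicas=2))  # True
print(write_accepted("all", isr_size=1, min_insync_replicas=2))  # False
print(write_accepted("1", isr_size=1, min_insync_replicas=2))    # True
```

The third case is worth discussing: the write succeeds, but it is stored on a single broker until followers catch up.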

4. What are the challenges of implementing exactly-once semantics in a distributed streaming system like Kafka?

Implementing exactly-once semantics in Kafka involves addressing several challenges:

- Idempotent Producers: Ensuring that duplicate messages are not introduced

- Transactional Guarantees: Allowing atomic writes across multiple partitions

- Consumer Offsets: Managing consumer progress to avoid reprocessing

- Network Failures: Handling scenarios where messages might be sent but not acknowledged

- Performance Trade-offs: Balancing exactly-once guarantees with system throughput

A strong candidate should be able to explain these challenges and discuss Kafka's solutions, such as idempotent producers and transactional APIs. They should also be aware of the performance implications and scenarios where exactly-once semantics are crucial.
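The idempotent producer point can be illustrated with a small broker-side model. This Python sketch assumes each producer has an ID and attaches an increasing sequence number to its records, which is the general idea behind Kafka's idempotence; the class `PartitionWithDedup` is hypothetical and much simpler than the real broker logic.

```python
class PartitionWithDedup:
    """Append-only log that ignores retried records it has already stored."""

    def __init__(self):
        self.log = []
        self.last_seq = {}  # producer_id -> last sequence number appended

    def append(self, producer_id, seq, value):
        last = self.last_seq.get(producer_id, -1)
        if seq <= last:
            return False  # duplicate caused by a retry: do not append again
        if seq != last + 1:
            raise ValueError("gap in sequence numbers")
        self.log.append(value)
        self.last_seq[producer_id] = seq
        return True


partition = PartitionWithDedup()
partition.append(producer_id=1, seq=0, value="order-created")
partition.append(producer_id=1, seq=0, value="order-created")  # retry after a lost ack
partition.append(producer_id=1, seq=1, value="order-paid")
print(partition.log)  # ['order-created', 'order-paid']
```

This covers only duplicate writes to one partition. Atomic writes across partitions and consumer offset handling need transactions on top.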

5. How does Kafka handle data consistency across multiple data centers?

Kafka typically handles data across multiple data centers with MirrorMaker, a tool that ships with Kafka and replicates data between clusters in different locations. This replication is asynchronous, so the destination cluster can lag behind the source. The process involves:

- Setting up a separate Kafka cluster in each data center

- Using MirrorMaker to consume messages from the source cluster

- Producing these messages to the destination cluster

- Configuring topics for cross-data center replication

- Managing potential conflicts and latency issues

Look for candidates who can discuss the challenges of multi-data center setups, such as network latency, conflict resolution, and ensuring consistency. They should also be able to explain scenarios where multi-data center replication is necessary and potential alternatives or optimizations.

6. Explain the concept of log compaction in Kafka and its importance in distributed systems.

Log compaction is a feature in Kafka that retains at least the latest value for each key in a log and discards older values for that key over time. This is particularly important for distributed systems that use Kafka as a backing store or for event sourcing.

Key aspects of log compaction:

- Retains at least the last known value for each message key

- Reduces storage requirements while maintaining data integrity

- Useful for rebuilding application state after crashes

- Enables faster recovery and reloading of caches in distributed systems

A strong candidate should be able to explain scenarios where log compaction is beneficial, such as in key-value stores or for maintaining configuration data. They should also discuss potential trade-offs and limitations of log compaction.
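The effect of compaction is easy to show on a small log. The following Python sketch keeps the latest record per key and preserves the original offsets; it is a simplification that ignores details such as delete markers and the fact that compaction runs in the background on older log segments. The function name `compact` is hypothetical.

```python
def compact(log):
    """Keep only the most recent record for each key.

    log: list of (offset, key, value) tuples in offset order.
    """
    latest = {}
    for offset, key, value in log:
        latest[key] = (offset, key, value)  # later records overwrite earlier ones
    return sorted(latest.values())          # offsets are unique, so this sorts by offset


log = [
    (0, "user-1", "alice@old.example"),
    (1, "user-2", "bob@example"),
    (2, "user-1", "alice@new.example"),
    (3, "user-3", "carol@example"),
    (4, "user-2", "bob@new.example"),
]
print(compact(log))
# [(2, 'user-1', 'alice@new.example'), (3, 'user-3', 'carol@example'), (4, 'user-2', 'bob@new.example')]
```

Replaying the compacted log is enough to rebuild the current state for every key, which is why it suits caches and configuration topics.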

7 situational Kafka interview questions with answers for hiring top developers

To identify developers who can effectively navigate real-world challenges in Kafka environments, use these situational interview questions. They are designed to reveal how candidates approach problem-solving and decision-making under complex scenarios.

1. How would you handle a situation where a Kafka cluster begins to experience high latency issues?

Candidates should explain that they would start by identifying the root cause of the latency. This involves checking the Kafka metrics for any unusual spikes in resource usage, looking at network bandwidth, and examining the performance of the Kafka brokers and, in ZooKeeper-based deployments, the ZooKeeper nodes.

An ideal candidate should highlight steps like optimizing producer and consumer configurations, considering hardware upgrades, or tuning JVM parameters if necessary. They should also mention monitoring tools they have used in the past to diagnose such issues.

Look for responses that showcase a systematic approach to troubleshooting and familiarity with Kafka's monitoring and performance metrics.

2. Imagine you have a Kafka topic with a high rate of message drops. How would you approach solving this issue?

First, candidates might mention that they would look into the producer settings, such as the buffer size, batch size, and the acks configuration. They might also investigate the consumer lag to ensure that consumers are keeping up with the message rate.

They should also consider whether the issue is related to broker performance, possibly due to resource constraints or suboptimal configurations. Exploring Kafka logs for errors and warnings can provide additional insights.

Strong answers will demonstrate a thorough understanding of Kafka's producer and consumer configurations, as well as experience with monitoring and log analysis tools.
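Since consumer lag comes up in this answer, it helps to agree on how it is calculated. The Python sketch below assumes lag per partition is the log end offset minus the group's committed offset, and treats a partition with no committed offset as starting from 0, which is an assumption made for this example. The name `consumer_lag` is hypothetical.

```python
def consumer_lag(log_end_offsets, committed_offsets):
    """Records not yet consumed, per partition."""
    return {
        partition: end - committed_offsets.get(partition, 0)
        for partition, end in log_end_offsets.items()
    }


log_end_offsets = {0: 120, 1: 95, 2: 300}
committed_offsets = {0: 120, 1: 90, 2: 150}

lag = consumer_lag(log_end_offsets, committed_offsets)
print(lag)                # {0: 0, 1: 5, 2: 150}
print(sum(lag.values()))  # 155
```

In this example partition 2 carries almost all the lag, which points to a slow consumer or a hot partition rather than a cluster-wide problem.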

3. How would you manage a scenario where you need to migrate data from one Kafka cluster to another with minimal downtime?

Candidates should mention using tools like MirrorMaker to replicate data between Kafka clusters. They might also talk about setting up the new cluster to run concurrently with the old one, gradually redirecting traffic to ensure minimal disruption.

They should emphasize the importance of testing the new cluster thoroughly before the migration and having a rollback plan in case of issues. Ensuring data consistency and handling potential conflicts should also be part of their strategy.

Look for answers that reflect a strong understanding of Kafka migration tools and strategies, as well as risk mitigation techniques.

4. Suppose you encounter a Kafka topic with unevenly distributed partitions. How would you address this issue?

Candidates might start by examining the partition key strategy used by the producers to check that it distributes records evenly. They could also mention reassigning partitions across brokers, either manually or with Kafka's partition reassignment tooling.

The response should include steps like reassigning partitions to different brokers or increasing the partition count to balance the load, along with the caveat that adding partitions changes which partition a given key maps to. They might also discuss the need to rework the producer logic if the current key strategy is causing the imbalance.

An ideal response will show a clear, methodical approach to diagnosing and addressing partition imbalance, as well as familiarity with Kafka's partitioning mechanisms.
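A quick way to test a candidate's diagnosis is to ask how they would measure the imbalance. The Python sketch below counts records per partition for a list of keys and reports a skew ratio (busiest partition divided by the mean). It uses CRC32 as a stand-in for Kafka's real key hashing, and both function names are hypothetical.

```python
import zlib
from collections import Counter


def partition_counts(keys, num_partitions):
    """Count how many records each partition would receive."""
    counts = Counter(zlib.crc32(k.encode()) % num_partitions for k in keys)
    return [counts.get(p, 0) for p in range(num_partitions)]


def skew_ratio(counts):
    """1.0 means perfectly even; higher values mean one partition dominates."""
    mean = sum(counts) / len(counts)
    return max(counts) / mean if mean else 0.0


# A single hot key sends every record to one partition.
hot = partition_counts(["tenant-42"] * 8, num_partitions=4)
print(hot, skew_ratio(hot))
```

With one hot key the ratio equals the number of partitions, the worst case for this metric.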

5. How would you tackle a situation where a Kafka consumer group is experiencing frequent rebalances?

Candidates should explain that they would first check for issues such as network instability, consumer crashes, or inefficient consumer configurations. They might mention adjusting the session and heartbeat timeouts to reduce the likelihood of unnecessary rebalances.

They could also talk about optimizing the consumer logic to ensure it's processing messages efficiently and not causing excessive lag. Implementing proper error handling and retry mechanisms could also help stabilize the consumer group.

Look for responses that indicate a deep understanding of Kafka consumer configurations and strategies to minimize rebalancing disruptions.
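The timeout discussion can be made concrete with a small model of how a group decides that a member is gone. This Python sketch assumes a member is removed when its last heartbeat is older than the session timeout, which is the idea behind the `session.timeout.ms` setting; the function name `expired_members` and the timestamps are hypothetical.

```python
def expired_members(last_heartbeat_ms, now_ms, session_timeout_ms):
    """Return the consumers whose heartbeats are older than the session timeout."""
    return sorted(
        member
        for member, seen_at in last_heartbeat_ms.items()
        if now_ms - seen_at > session_timeout_ms
    )


last_heartbeat_ms = {"consumer-1": 9_000, "consumer-2": 2_000}

# consumer-2 has been silent for 8 seconds, longer than the 6 second timeout,
# so it would be removed and the group would rebalance.
print(expired_members(last_heartbeat_ms, now_ms=10_000, session_timeout_ms=6_000))
# ['consumer-2']
```

Raising the timeout in this model avoids the rebalance, at the cost of taking longer to notice a consumer that has really crashed.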

6. What steps would you take if you discover a critical security vulnerability in your Kafka setup?

Candidates should mention immediate steps like isolating the vulnerable components and applying security patches or updates. They should also discuss the importance of conducting a thorough security audit to identify any other potential weaknesses.

They might bring up the need to update security configurations, such as enabling encryption, authentication, and access control mechanisms. Additionally, monitoring and logging should be enhanced to detect any suspicious activities.

Strong candidates will emphasize the importance of a proactive security posture and continuous monitoring to prevent future vulnerabilities.

7. How would you approach optimizing the performance of a Kafka-based data pipeline that processes large volumes of data?

Candidates should discuss strategies like optimizing producer and consumer settings, partitioning topics effectively, and ensuring efficient data serialization formats. They might also mention the importance of tuning JVM parameters and Kafka broker configurations.

They could also talk about scaling the Kafka cluster by adding more brokers or using techniques like tiered storage to handle large volumes of data more efficiently. Monitoring and profiling tools can help identify bottlenecks and areas for improvement.

Look for answers that reflect a comprehensive understanding of Kafka performance tuning and the ability to handle large-scale data processing challenges.

Which Kafka skills should you evaluate during the interview phase?

While it's impossible to fully assess a candidate's capabilities in a single interview, focusing on a few core Kafka skills can provide significant insights. For Kafka roles, these skills are not only directly related to daily tasks but are also indicative of a candidate's overall ability to contribute to your team's success.

Kafka Architecture Understanding

A deep understanding of Kafka's architecture is fundamental for any developer working in this domain. Knowledge of concepts such as topics, partitions, brokers, producers, consumers, and the role of ZooKeeper (or KRaft in newer deployments) is critical for effective system design and troubleshooting.

A good first step in evaluating a candidate's grasp of Kafka architecture is an MCQ assessment. Consider using the Kafka Online Test to assess this knowledge efficiently before proceeding to more in-depth discussions.

To delve further into their understanding, you can also pose specific interview questions.

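Can you explain how Kafka uses ZooKeeper?

Look for detailed explanations that connect ZooKeeper's role in storing cluster metadata and electing the controller with Kafka's fault tolerance. Candidates should demonstrate a clear understanding of how Kafka components coordinate through ZooKeeper, and ideally know that newer Kafka versions replace it with the built-in KRaft mode.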

Data Processing with Kafka Streams

Proficiency in Kafka Streams is essential for building real-time streaming applications. This skill signifies a developer's ability to handle data processing pipelines effectively within Kafka.

Assessing practical expertise can be done through targeted interview questions.

How would you design a Kafka Streams application to aggregate real-time data from multiple sources?

Expect detailed architecture plans including considerations for state management, fault tolerance, and scalability. The candidate's response should reflect an understanding of stream processing patterns.
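To anchor the conversation, it can help to write down the aggregation itself. The following is a plain Python sketch of a tumbling-window count per key, similar in spirit to a windowed count in a stream processor. It does not use the Kafka Streams API (a Java library), and the function name and event format are assumptions for illustration.

```python
from collections import defaultdict


def tumbling_window_counts(events, window_ms):
    """Count events per (window start, key).

    events: iterable of (timestamp_ms, key) pairs.
    """
    counts = defaultdict(int)
    for timestamp_ms, key in events:
        window_start = timestamp_ms - (timestamp_ms % window_ms)
        counts[(window_start, key)] += 1
    return dict(counts)


events = [(1_000, "sensor-a"), (1_500, "sensor-a"), (2_100, "sensor-b"), (61_000, "sensor-a")]
print(tumbling_window_counts(events, window_ms=60_000))
# {(0, 'sensor-a'): 2, (0, 'sensor-b'): 1, (60000, 'sensor-a'): 1}
```

The `counts` dictionary is the application's state. Asking where that state lives and how it survives a crash leads naturally into state stores and fault tolerance.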

Performance Tuning and Optimization

Performance tuning and optimization are essential for managing large data flows efficiently. Candidates must be familiar with configuring, troubleshooting, and optimizing Kafka setups to ensure data integrity and low latency.

A preliminary MCQ assessment can be useful here. Consider adding a Kafka skills test at this stage to filter candidates.

Subsequently, specific questions can verify their practical expertise.

What strategies would you employ to optimize a Kafka producer's performance?

Successful answers should include methods like batch sizing, data compression, and tuning producer configurations to enhance throughput and reduce latency.
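Batch sizing is easier to discuss with a concrete model. The Python sketch below groups records into batches by a byte limit, roughly the idea behind the producer's `batch.size` setting. It ignores time-based flushing (`linger.ms`) and compression, and the function name `batch_records` is hypothetical.

```python
def batch_records(records, batch_size_bytes):
    """Group records into batches that stay within a byte limit."""
    batches, current, current_bytes = [], [], 0
    for record in records:
        size = len(record)
        # Close the current batch if this record would push it over the limit.
        if current and current_bytes + size > batch_size_bytes:
            batches.append(current)
            current, current_bytes = [], 0
        current.append(record)
        current_bytes += size
    if current:
        batches.append(current)
    return batches


records = [b"x" * 40 for _ in range(5)]
batches = batch_records(records, batch_size_bytes=100)
print([len(batch) for batch in batches])  # [2, 2, 1]
```

Five records become three requests instead of five. A larger limit would cut the request count further, at the cost of more memory and potentially higher latency per record.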

Enhance Your Team with Expert Kafka Candidates Using Adaface

When aiming to hire someone with Kafka skills, confirming that candidates genuinely possess the required abilities is fundamental. It's important that they demonstrate both theoretical understanding and practical competence.

The best way to assess these skills is through targeted skills tests. Consider utilizing the Kafka Online Test, designed to evaluate a candidate's proficiency efficiently before the interview process.

After candidates complete the skills test, you can effectively shortlist the top performers. This allows you to focus your interviewing efforts on candidates who have already demonstrated a high level of competence in Kafka.

To move forward, register on the Adaface platform. You can sign up directly or review the pricing plans and features to find the best fit for your hiring needs.


Kafka Interview Questions FAQs

A Kafka developer should have strong knowledge of distributed systems, experience with message processing, and good command of programming languages like Java or Scala.

You can use targeted questions about Kafka architecture, message processing, and real-world scenarios to understand their experience and problem-solving abilities.

Kafka provides a reliable and scalable platform for handling real-time data streams, which is essential for modern distributed systems and microservices architecture.

Advanced topics can include Kafka Streams, ksqlDB (formerly KSQL), exactly-once semantics, and integration with other big data tools like Spark and Hadoop.

Focus on basic Kafka concepts, such as producers, consumers, topics, and partitioning, and ask them to explain these concepts in their own words.
