Hiring a Spark Developer can be a game-changer for companies looking to harness the power of big data analytics. However, many recruiters stumble when it comes to identifying the right talent. The key is to understand that Spark Developers aren't just coders; they're data architects who can transform raw information into actionable insights. It's not just about finding someone who knows the technology. It's about finding a problem-solver who can drive your data strategy forward.

This comprehensive guide will walk you through the process of hiring a top-notch Spark Developer. We'll cover everything from crafting an effective job description to conducting technical interviews. For a deeper dive into the specific skills to look for, check out our detailed breakdown of Spark Developer skills.


What does a Spark Developer do?

A Spark Developer is responsible for developing large-scale data processing applications using Apache Spark. They transform high volumes of data into actionable insights, helping companies make informed decisions.

On a daily basis, a Spark Developer works on various tasks:

- Designing and implementing data pipelines using Apache Spark

- Writing complex Spark SQL queries to manipulate data

- Optimizing Spark jobs for performance and scalability

- Collaborating with data engineers and data scientists to integrate Spark applications

- Ensuring data quality and troubleshooting any issues in Spark environments

For a comprehensive look at the skills required for a Spark Developer, refer to our blog.

Spark Developer Hiring Process

Hiring a Spark Developer can be a streamlined process if you follow the right steps. Typically, the entire process takes around 1-2 months.

- Craft a clear job description: Ensure your job description highlights the necessary skills and experience for a Spark Developer. Post it on platforms that tech talent frequents.

- Resume screening: Collect resumes within the first week. Look for candidates with experience in Spark, Scala, and big data technologies.

- Skills assessment: Utilize technical screening tools to test the candidate's Spark-related skills. This helps in narrowing down the list to the most competent candidates.

- Interview stage: Conduct interviews for shortlisted candidates. Focus on technical competency as well as cultural fit within your team.

- Final selection and offer: Choose the best candidate based on their performance in assessments and interviews. Proceed with the offer letter and onboarding process.

This process helps you hire a Spark Developer who meets your technical demands and fits your team's culture. Let's look at each step in more detail.

Key Skills and Qualifications for Hiring a Spark Developer

When hiring a Spark Developer, crafting the ideal candidate profile can be challenging. What may seem crucial for one organization might only be optional for another. It's important to clearly differentiate between required and preferred qualifications to find the best fit for your team.

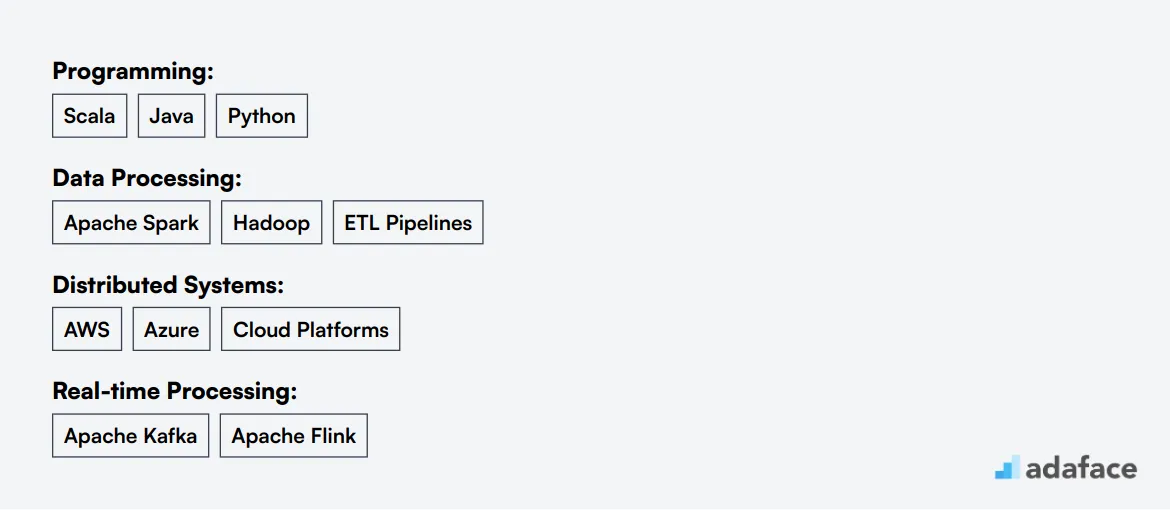

Here are some common skills and qualifications to consider when evaluating candidates for this role:

- Required Skills:

  - Proven experience in developing with Apache Spark and the Hadoop ecosystem

  - In-depth understanding of Spark architecture and performance tuning

  - Strong programming skills in Scala, Java, or Python

  - Experience with data processing and ETL pipeline design

  - Knowledge of distributed systems and cloud platforms like AWS or Azure

- Preferred Skills:

  - Experience with real-time data processing using Apache Kafka or Flink

  - Familiarity with data warehousing solutions like Snowflake or Redshift

  - Experience with machine learning libraries and frameworks

  - Understanding of CI/CD processes and tools

  - Strong communication skills for collaboration with cross-functional teams


How to Write an Effective Spark Developer Job Description

Once you've identified the ideal candidate profile for your Spark Developer role, the next step is crafting a compelling job description to attract top talent. Here are some key tips to make your Spark Developer job description stand out:

- Highlight specific responsibilities: Clearly outline the day-to-day tasks and projects the Spark Developer will handle, such as developing data processing pipelines or optimizing big data workflows.

- Balance technical requirements: List must-have skills like proficiency in Scala or Java, experience with the Spark ecosystem, and familiarity with distributed computing concepts, and keep nice-to-have skills clearly separate.

- Emphasize soft skills: Include qualities like problem-solving abilities, teamwork, and communication skills to find well-rounded candidates.

- Showcase your company's appeal: Mention unique projects, learning opportunities, or company culture to attract passionate Spark Developers.

Top Platforms to Hire Spark Developers

Once you have your job description ready, it's time to source candidates. Listing your Spark Developer role on various job platforms will help you reach a broader audience and attract the right talent.

LinkedIn

LinkedIn is ideal for listing full-time Spark Developer roles due to its vast professional network and recruitment tools.

Indeed

Indeed is popular for full-time job listings across various industries, including tech, with a wide candidate reach.

Upwork

Upwork is a leading platform to hire freelance Spark Developers for specific projects or short-term work.

Beyond these, Toptal is another option for sourcing freelance Spark Developers for project-based work. Glassdoor pairs job listings with insight into company culture, and Hired connects you with vetted tech candidates who are actively seeking opportunities.

How to screen Spark Developer resumes?

In the world of tech recruitment, resume screening is a key step in narrowing down a vast pool of candidates to find the ideal Spark Developer. This initial screening helps identify resumes that align with your job requirements, saving time and effort in the interview process.

Manually screening resumes involves spotting specific keywords related to Spark development. Look for mentions of Apache Spark, Hadoop ecosystem, Scala, Java, or Python. Other important keywords might include ETL pipeline design, distributed systems, and cloud platforms like AWS or Azure. These skills are fundamental in assessing a candidate's fit for the role.

You can also use LLMs to automate and speed up the screening process. Tools like Claude or ChatGPT can scan resumes for matching keywords and highlight potential candidates, giving you a quick, consistent first pass through a large number of applications.

Here's a sample prompt you can use to streamline your process:

TASK: Screen resumes to match job description for Spark Developer role

INPUT: Resumes

OUTPUT: For each resume, provide the following information:

- Email id

- Name

- Matching keywords

- Score (out of 10 based on keywords matched)

- Recommendation (detailed recommendation of whether to shortlist this candidate or not)

- Shortlist (Yes, No or Maybe)

RULES:

- If you are unsure about a candidate's fit, put the candidate as Maybe instead of No

- Keep recommendation crisp and to the point.

KEYWORDS DATA:

- Apache Spark, Hadoop ecosystem

- Programming (Scala, Java, Python)

- Distributed systems (AWS, Azure)
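If you would rather prototype the keyword-matching part yourself before handing it to an LLM, the scoring rule in the prompt can be sketched in a few lines of Python. This is a minimal illustration, not a production screener: the flat keyword list is derived from the KEYWORDS DATA above, while the function names, the integer 0 to 10 scale, and the Yes/Maybe/No thresholds are assumptions made for the example.

```python
import re

# Keywords derived from the KEYWORDS DATA section of the prompt above.
KEYWORDS = [
    "apache spark", "hadoop",
    "scala", "java", "python",
    "distributed systems", "aws", "azure",
]


def match_keywords(resume_text):
    """Return the sorted keywords that appear as whole words or phrases."""
    text = resume_text.lower()
    found = []
    for kw in KEYWORDS:
        # Word boundaries keep "java" from matching inside "javascript".
        if re.search(r"\b" + re.escape(kw) + r"\b", text):
            found.append(kw)
    return sorted(found)


def score_resume(resume_text):
    """Score a resume out of 10 by the share of keywords matched."""
    matched = match_keywords(resume_text)
    score = 10 * len(matched) // len(KEYWORDS)
    # Thresholds are illustrative assumptions; borderline cases go to
    # "Maybe" rather than "No", mirroring the RULES in the prompt.
    if score >= 7:
        shortlist = "Yes"
    elif score >= 4:
        shortlist = "Maybe"
    else:
        shortlist = "No"
    return {"matched": matched, "score": score, "shortlist": shortlist}
```

Calling `score_resume(text)` on the plain text of a resume returns the matched keywords, the score, and the shortlist decision. Keyword matching like this only checks whether terms are mentioned, so treat the score as a sorting aid rather than a verdict.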

Recommended Skills Tests for Assessing Spark Developers

Skills tests are a great way to evaluate Spark developers objectively. They help you assess candidates' practical abilities beyond what's listed on their resumes. Let's look at the top tests to use when hiring Spark developers.

Spark Online Test: This Spark test evaluates a candidate's proficiency in Apache Spark fundamentals, RDD operations, and Spark SQL. It's ideal for assessing core Spark skills required for data processing and analysis tasks.

PySpark Test: For roles involving Python with Spark, a PySpark assessment is valuable. It checks the candidate's ability to use PySpark for data manipulation, machine learning, and streaming applications.

Scala Online Test: Since Spark is written in Scala, a Scala test can be useful. It assesses a developer's proficiency in Scala programming, which is often used for complex Spark applications.

Java Online Test: Many Spark applications are written in Java. A Java assessment helps evaluate a candidate's Java skills, which are often necessary for Spark development and maintenance.

Data Engineer Test: A broader data engineering assessment can be helpful. It covers various aspects of data processing, including Spark, and helps evaluate a candidate's overall data engineering capabilities.

Case Study Assignments for Hiring Spark Developers

Case study assignments can be effective for evaluating Spark developers, but they come with drawbacks. They're often time-consuming, leading to lower completion rates and potentially losing good candidates. Despite these challenges, well-designed case studies can provide valuable insights into a candidate's skills.

Data Pipeline Optimization: This case study asks candidates to optimize a Spark data pipeline for better performance. They might need to identify bottlenecks, implement partitioning strategies, or use appropriate Spark APIs to improve efficiency. This assignment tests practical Spark knowledge and problem-solving skills.

Real-time Stream Processing: Candidates are tasked with designing a real-time streaming application using Spark's streaming APIs (Structured Streaming, or the older DStream-based Spark Streaming). They should propose an architecture, handle data ingestion, implement windowing operations, and ensure fault tolerance. This case study assesses understanding of streaming concepts and Spark's streaming capabilities.

Machine Learning with Spark: This assignment involves building a machine learning pipeline using Spark MLlib. Candidates might need to preprocess data, select features, train a model, and evaluate its performance at scale. It tests both Spark and machine learning skills, which are often required for Spark developer roles.

Structuring Technical Interviews for Spark Developers

After candidates pass the initial Spark Developer skills tests, it's time for technical interviews. These interviews are crucial for assessing a candidate's deep understanding and practical experience with Spark. They allow you to evaluate problem-solving skills and technical knowledge in real-world scenarios.

Here are some key questions to ask in Spark Developer interviews:

- How would you optimize a Spark job that's running slowly?

- Can you explain the difference between an RDD and a DataFrame in Spark?

- What's your experience with Spark Streaming for real-time data processing?

- How do you handle data skew in Spark?

- Can you describe a complex ETL pipeline you've built using Spark?

These questions help assess the candidate's practical experience, problem-solving skills, and deep understanding of Spark's core concepts and best practices.
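For interviewers who want a reference point for the data skew question, one answer candidates often give is key salting: appending a random suffix to a hot key so its records spread across several partitions. The sketch below simulates the idea in plain Python with no Spark dependency. The CRC-based partitioner and the function names are stand-ins invented for this illustration; they are not Spark APIs.

```python
import random
import zlib
from collections import Counter


def partition_of(key, num_partitions):
    """Deterministic stand-in for a hash partitioner (not Spark's own)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def partition_sizes(keys, num_partitions):
    """Count how many records land in each partition."""
    counts = Counter(partition_of(k, num_partitions) for k in keys)
    return [counts.get(p, 0) for p in range(num_partitions)]


def salt_keys(keys, hot_key, num_salts, seed=0):
    """Append a random salt to the hot key only; other keys are unchanged."""
    rng = random.Random(seed)
    return [
        f"{k}_{rng.randrange(num_salts)}" if k == hot_key else k
        for k in keys
    ]


# One key ("hot") accounts for 90% of the records.
keys = ["hot"] * 9000 + [f"user{i}" for i in range(1000)]

print("before salting:", partition_sizes(keys, 8))
print("after salting: ", partition_sizes(salt_keys(keys, "hot", 8), 8))
```

Before salting, every "hot" record lands in a single partition; after salting, those records are split across several. A strong candidate should also point out the cost: an aggregation over salted keys needs a second pass that strips the salt and combines the partial results.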

What's the difference between a Spark Developer and a Spark Data Engineer?

The roles of a Spark Developer and a Spark Data Engineer can often be confused due to their overlapping areas in big data projects. However, the core focus and responsibilities of these two positions are distinct.

A Spark Developer primarily focuses on application development using Apache Spark. They typically have skills in Spark, Scala, and Java, and work on projects that involve creating Spark applications and analytics tools. They are responsible for batch and real-time processing, but their knowledge of cloud platforms is usually basic.

In contrast, a Spark Data Engineer is more concerned with the data pipeline and infrastructure. Their core skills include Spark, Hadoop, SQL, and NoSQL, which are essential for large-scale data processing and ETL (Extract, Transform, Load) tasks. They possess advanced expertise in cloud platforms like AWS, Azure, and GCP, and focus on cluster and job optimization as well as advanced data modeling skills.

| | Spark Developer | Spark Data Engineer |
|---|---|---|
| Primary Focus | Application development | Data pipeline and infrastructure |
| Core Skills | Spark, Scala, Java | Spark, Hadoop, SQL, NoSQL |
| Data Processing | Batch and real-time processing | Large-scale data processing and ETL |
| Cloud Platforms | Basic knowledge | Advanced expertise (AWS, Azure, GCP) |
| Performance Optimization | Application-level optimization | Cluster and job optimization |
| Data Modeling | Basic understanding | Advanced data modeling skills |
| Typical Projects | Spark applications, analytics tools | Data lakes, warehouses, pipelines |
| Team Collaboration | Works with data scientists | Works with data architects, DBAs |

What are the ranks of Spark Developers?

When hiring Spark Developers, it's important to differentiate between various ranks as their responsibilities and expertise can vary significantly. This helps in aligning the right talent with your project's specific needs.

- Junior Spark Developer: A Junior Spark Developer is typically an entry-level position. They usually have a foundational understanding of Spark and its ecosystem but need guidance from more experienced developers to complete complex tasks.

- Spark Developer: A mid-level Spark Developer possesses substantial experience with Spark and can independently handle a variety of projects. They are expected to write efficient code, optimize performance, and collaborate with data engineers and data scientists.

- Senior Spark Developer: Senior Spark Developers are highly skilled professionals who lead the development of complex data processing solutions. They are responsible for architectural decisions, mentoring junior staff, and ensuring best practices in coding and performance optimization.

- Lead Spark Developer: The Lead Spark Developer oversees the entire Spark development team. They not only guide the technical direction but also manage project timelines, coordinate with other teams, and ensure that the Spark solutions align with business goals.

Hire the Best Spark Developers

In this post, we covered everything from understanding the role of a Spark Developer to structuring technical interviews effectively. We explored the key skills and qualifications needed, how to write a compelling job description, and where to find top talent. Additionally, we looked at how to screen resumes and the differences between Spark Developers and Spark Data Engineers.

To ensure you hire the most suitable Spark Developers, it's critical to focus on crafting precise job descriptions and utilizing relevant skills tests. Consider using a Spark Online Test to assess candidates accurately and make informed hiring decisions. Remember, the right tools and processes can significantly enhance the quality of your recruitment.


FAQs

What qualifications should I look for in a Spark Developer?

Look for candidates with a strong background in computer science or related fields, experience with big data technologies, proficiency in Scala or Java, and familiarity with distributed computing concepts. Certifications in Apache Spark or related technologies can be a plus.

How can I assess a Spark Developer's technical skills?

Use a combination of technical interviews, coding challenges, and Spark-specific skills assessments. Ask candidates to solve real-world problems using Spark and evaluate their approach to data processing and analysis.

Where can I find Spark Developers?

Look for Spark Developers on specialized job boards, tech-focused social media platforms, data science conferences, and through referrals from your current tech team. Online communities focused on big data and Apache Spark can also be good sources.

What should I include in a Spark Developer job description?

Include required technical skills (Spark, Scala/Java, SQL), desired experience with big data technologies, specific responsibilities, and any industry-specific knowledge. Highlight exciting projects or challenges they'll work on. You can find a sample Spark Developer job description here.

How should I structure a technical interview for a Spark Developer?

Start with questions about Spark architecture and concepts, move on to problem-solving scenarios involving large datasets, and include a coding exercise using Spark. Ask about their experience with data pipelines and integrating Spark with other technologies.

What is the difference between a junior and a senior Spark Developer?

Senior Spark Developers typically have 5+ years of experience, can architect complex data solutions, optimize Spark jobs for performance, and lead teams. Junior developers may have 1-3 years of experience and focus more on implementing existing designs and basic Spark operations.

How important is cloud computing knowledge for a Spark Developer?

Cloud computing knowledge is increasingly important as many Spark applications run on cloud platforms. Familiarity with services like AWS EMR, Azure HDInsight, or Google Dataproc can be a significant advantage for a Spark Developer.
