Hiring a Kafka Engineer is a strategic decision for businesses leveraging real-time data streaming to gain competitive advantages. Many companies struggle with pinpointing the exact skills required for the role, often underestimating the complexity of managing Kafka at scale. Kafka Engineers need a deep understanding of distributed systems, troubleshooting, and integration with various data sources, which is where many hiring processes falter.

This article guides you through hiring a Kafka Engineer, from understanding why this role is essential to structuring interviews and identifying the right skills and platforms to find the best candidates. For detailed insights into the specific skills required, you can check out our Kafka Engineer Interview Questions.

Why Hire a Kafka Engineer?

To determine if you need a Kafka Engineer, start by identifying data streaming challenges in your organization. For example, you might be struggling with real-time data processing for a large-scale IoT application or facing issues with high-volume message queuing in a microservices architecture.

Consider these common scenarios where a Kafka Engineer can add value:

- Implementing fault-tolerant, scalable data pipelines

- Optimizing system performance for high-throughput event streaming

- Designing and maintaining complex data integration workflows

If these challenges align with your business needs, it may be time to hire a full-time Kafka Engineer. However, if you're just starting to explore Kafka's potential, working with a consultant or service provider can be a good first step before building an in-house team.

Kafka Engineer Hiring Process

Hiring a Kafka Engineer is a critical step for organizations looking to harness the power of distributed systems. If you're not sure where to start, let's walk through the process together.

- Draft a comprehensive job description: Start by creating a detailed Kafka Engineer job description that outlines the necessary skills and responsibilities.

- Post the job and collect resumes: Use relevant platforms to attract potential candidates. You should start receiving applications within the first week.

- Shortlist and conduct skill tests: Evaluate candidates with role-specific skill assessments to filter out the best fit.

- Conduct interviews: Engage in technical and behavioral interviews to assess candidate compatibility and expertise.

- Make your decision: After thorough evaluation, make an offer to the candidate who best meets your criteria.

The process generally takes about 4-6 weeks, depending on your pace. In the following sections, we'll break down each step further with detailed insights and tips to ensure a smooth hiring experience.

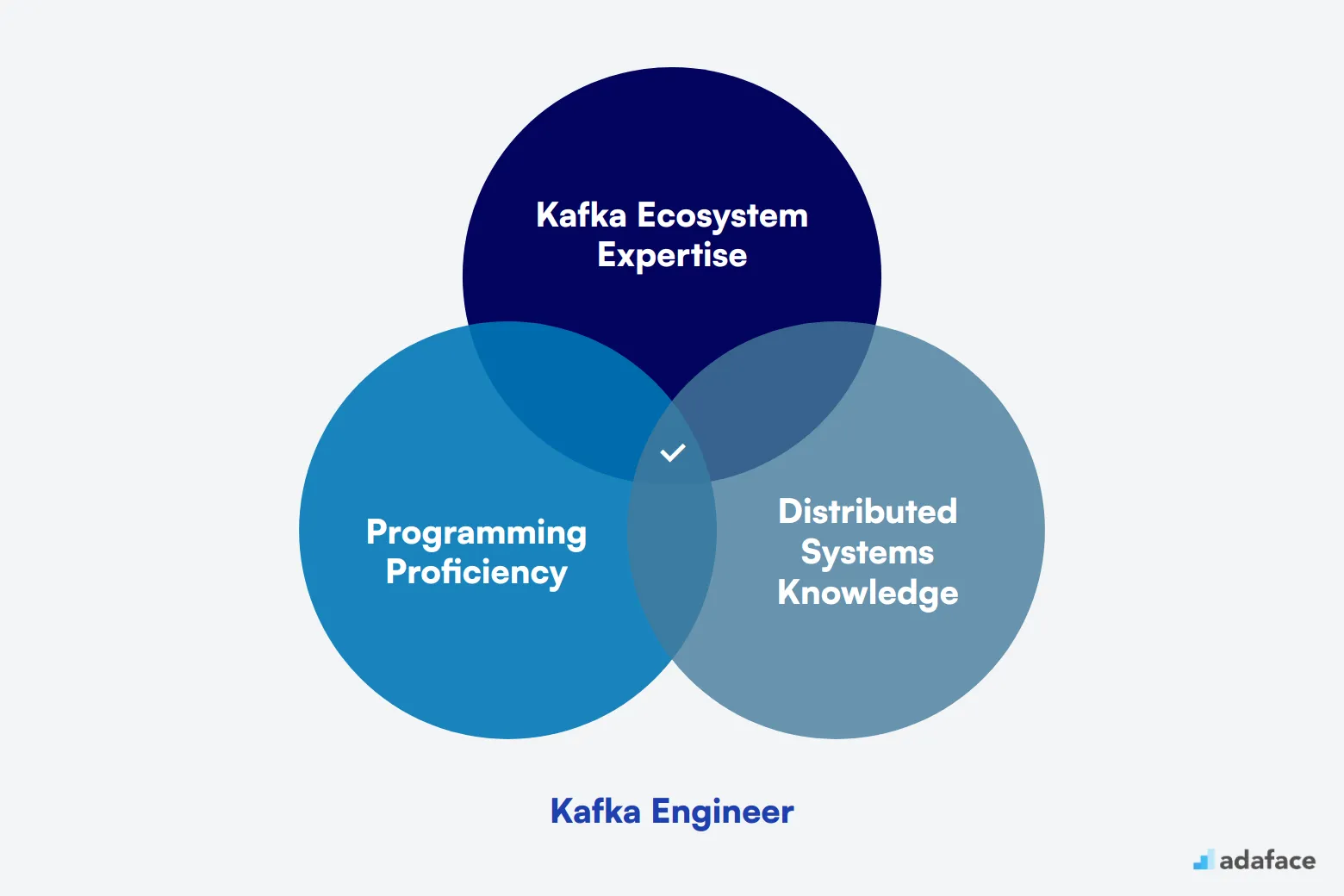

Skills and qualifications to look for in a Kafka Engineer

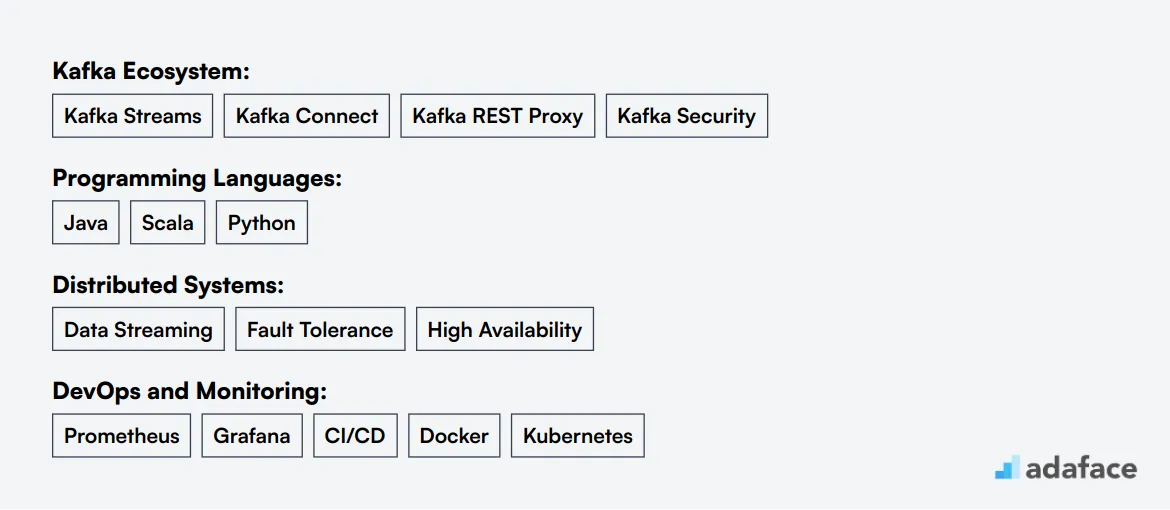

When hiring a Kafka Engineer, it's easy to get tangled up in the myriad skills and qualifications that candidates might possess. The challenge lies in distinguishing between what is truly required and what might simply be a nice-to-have for your specific team setup and project stage. Not every skill is required by default; for instance, experience with Kafka Streams might be crucial for one team but less relevant for another.

To create a candidate profile that aligns with your company's needs, it's essential to define the line between required and preferred skills. Required skills typically include experience with Apache Kafka, proficiency in Java, Scala, or Python, and a solid understanding of distributed systems. On the preferred side, familiarity with cloud platforms or monitoring tools like Prometheus can be advantageous.

Setting up an effective assessment test to evaluate these skills can streamline the hiring process and help you find the best fit for your team.

| Required skills and qualifications | Preferred skills and qualifications |
|---|---|
| Three or more years of experience working with Apache Kafka | Experience with cloud platforms such as AWS, Azure, or Google Cloud |
| Strong proficiency in Java, Scala, or Python programming languages | Knowledge of other messaging systems like RabbitMQ or Apache Pulsar |
| Experience with distributed systems and data streaming | Hands-on experience with monitoring tools like Prometheus or Grafana |
| Understanding of Kafka ecosystem, including Kafka Streams, Kafka Connect, and Kafka REST Proxy | Familiarity with DevOps practices and CI/CD pipelines |
| Proficient in troubleshooting and optimizing Kafka clusters | Strong communication skills and experience in collaborative, team-oriented environments |

10 platforms to hire Kafka Engineers

Now that we have a detailed job description, it's time to explore platforms to list and source candidates. Utilizing popular job listing sites can significantly enhance your chances of finding skilled Kafka Engineers who fit your requirements.

LinkedIn

LinkedIn is widely used for professional networking and recruiting full-time employees, making it a prime platform for hiring Kafka Engineers.

Indeed

Indeed is a mainstream job listing website suitable for full-time positions, providing a vast talent pool including Kafka Engineers.

Stack Overflow Jobs

An excellent resource for tech-specific roles, Stack Overflow Jobs is ideal for targeting developers such as Kafka Engineers.

Start with well-known platforms such as LinkedIn and Indeed for a broad reach. For more tech-specific needs, consider Stack Overflow Jobs or Hired. Don't overlook freelance platforms like Upwork and Toptal, which can be great for short-term projects. Finally, niche sites like AngelList for startups and Dice for tech roles can also yield excellent candidates.

Keywords to Look for in Kafka Engineer Resumes

Resume screening is a time-saver when hiring Kafka Engineers. It helps you quickly identify candidates with the right skills and experience before moving to interviews.

When manually screening resumes, focus on key Kafka-related terms. Look for 'Apache Kafka', 'Kafka Streams', 'Kafka Connect', and 'Kafka REST Proxy'. Also, check for programming languages like Java, Scala, or Python, and experience with distributed systems.

AI tools can speed up resume screening. You can use ChatGPT or Claude with a custom prompt to analyze resumes against your job requirements. This skills-based hiring approach can help you shortlist candidates more effectively.

Here's a sample prompt for AI-assisted resume screening:

TASK: Screen resumes for Kafka Engineer role

INPUT: Resumes

OUTPUT:

- Candidate Name

- Matching keywords

- Score (out of 10)

- Shortlist recommendation

KEYWORDS:

- Apache Kafka, Kafka Streams, Kafka Connect

- Java, Scala, Python

- Distributed systems, Data streaming

- Cloud platforms (AWS, Azure, GCP)

- Monitoring tools (Prometheus, Grafana)
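If you prefer a quick local check before sending resumes to an AI tool, the same keyword list can be applied with a few lines of Python. This is a minimal sketch, not a full screening system: the weights, the `screen_resume` function name, and the shortlist cutoff of 5 are illustrative assumptions, and keyword matching alone says nothing about depth of experience.

```python
import re

# Keywords come from the sample prompt; the weights are illustrative assumptions.
KEYWORDS = {
    "Apache Kafka": 3, "Kafka Streams": 2, "Kafka Connect": 2,
    "Java": 1, "Scala": 1, "Python": 1,
    "distributed systems": 1, "data streaming": 1,
    "AWS": 1, "Azure": 1, "GCP": 1,
    "Prometheus": 1, "Grafana": 1,
}

def screen_resume(text, cutoff=5.0):
    """Return matched keywords, a score out of 10, and a shortlist flag."""
    matched = []
    for keyword in KEYWORDS:
        # Whole-term match, so "Java" does not match "JavaScript"
        # and "Scala" does not match "scalable".
        pattern = r"(?<!\w)" + re.escape(keyword) + r"(?!\w)"
        if re.search(pattern, text, re.IGNORECASE):
            matched.append(keyword)
    raw = sum(KEYWORDS[k] for k in matched)
    score = round(10 * raw / sum(KEYWORDS.values()), 1)
    # The cutoff is a hypothetical threshold; tune it to your applicant pool.
    return {"matched": matched, "score": score, "shortlist": score >= cutoff}

sample = ("Built Apache Kafka pipelines with Kafka Streams in Java on AWS; "
          "JavaScript frontends and scalable design.")
result = screen_resume(sample)
print(result["matched"], result["score"], result["shortlist"])
```

Treat the score as a sorting aid rather than a verdict: a resume that misses the cutoff may still deserve a manual look.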

Recommended Skills Tests for Assessing Kafka Engineers

Skills tests are a reliable way to evaluate Kafka Engineers beyond their resumes. They provide objective insights into a candidate's technical abilities and problem-solving skills. Here are five recommended tests to assess potential Kafka Engineers:

Kafka Skills Test: This Kafka online test evaluates a candidate's proficiency in Apache Kafka, including topics like producers, consumers, brokers, and stream processing. It's essential for gauging their core Kafka knowledge.

Java Skills Test: Kafka is written in Java and Scala and runs on the JVM, so a strong foundation in Java is crucial. A Java assessment helps determine if candidates can effectively work with Kafka's codebase and develop custom components.

Data Engineering Test: A Data Engineer test assesses skills in data processing, ETL operations, and distributed systems. These are key for Kafka Engineers who often work with large-scale data pipelines.

Cloud Computing Test: Many Kafka deployments run on cloud platforms. A cloud computing test helps evaluate a candidate's understanding of cloud infrastructure and services, which is valuable for Kafka cluster management.

DevOps Skills Test: Kafka Engineers often need DevOps skills for deployment and maintenance. A DevOps test can assess their ability to automate processes, manage configurations, and ensure system reliability.

Case Study Assignments to Hire Kafka Engineers

Case study assignments can be a useful tool in evaluating Kafka Engineers, although they come with some drawbacks such as being time-consuming and potentially leading to lower candidate participation. It's important to balance the depth of evaluation with the candidate experience. Here are a few case study examples tailored for assessing Kafka Engineers.

The Distributed Messaging System Design assignment asks candidates to design a scalable messaging system using Kafka. It assesses a candidate's ability to architect a system that handles large-scale data processing. A solid grasp of Kafka fundamentals is critical here, so state that expectation in the Kafka Engineer job description.

The Data Stream Processing Exercise involves processing real-time data streams to identify patterns or anomalies. This case study helps in assessing the candidate's proficiency in leveraging Kafka's stream processing capabilities and integrating it with other data processing tools.
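To make the Data Stream Processing Exercise concrete, here is one possible shape for a reference solution. It is a minimal sketch that flags readings far from the mean of the preceding window. The `detect_anomalies` name, the window size, and the threshold are assumptions for illustration. The readings are a plain Python list so the example runs without a broker; in a real exercise they would arrive from a Kafka consumer.

```python
from collections import deque
from statistics import mean, stdev

def detect_anomalies(readings, window=5, threshold=3.0):
    """Yield (index, value) for readings that deviate strongly from recent history."""
    recent = deque(maxlen=window)  # sliding window of the latest readings
    for index, value in enumerate(readings):
        if len(recent) == window:
            mu = mean(recent)
            sigma = stdev(recent)
            # Flag values more than `threshold` standard deviations from the window mean.
            if sigma > 0 and abs(value - mu) > threshold * sigma:
                yield index, value
        recent.append(value)

# Made-up sensor readings with one obvious spike.
readings = [10.0, 10.2, 9.9, 10.1, 10.0, 25.0, 10.1]
print(list(detect_anomalies(readings)))
```

When reviewing submissions, look at how candidates handle the cases this sketch ignores, such as late or out-of-order events and state that must survive a restart.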

For evaluating debugging skills, the Kafka Cluster Troubleshooting case study can be employed. Candidates are asked to resolve issues within a Kafka cluster, which tests their problem-solving and technical expertise in managing Kafka infrastructure.
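A common starting point in a troubleshooting exercise is consumer lag: the gap between a partition's log end offset and the offset a consumer group has committed. The sketch below computes it from two dictionaries of made-up offsets. The function names and the alert threshold are hypothetical, and on a real cluster the numbers would come from tooling such as `kafka-consumer-groups.sh --describe`.

```python
def consumer_lag(end_offsets, committed_offsets):
    """Return lag per partition: log end offset minus committed offset."""
    # Simplifying assumption: a partition with no committed offset counts as fully unread.
    return {
        partition: end - committed_offsets.get(partition, 0)
        for partition, end in end_offsets.items()
    }

def lagging_partitions(lag, max_lag=1000):
    """Return partitions whose lag exceeds a hypothetical alert threshold."""
    return sorted(p for p, n in lag.items() if n > max_lag)

# Made-up offsets for a three-partition topic.
end_offsets = {0: 5000, 1: 5200, 2: 4800}
committed = {0: 4990, 1: 2100}

lag = consumer_lag(end_offsets, committed)
print(lag)
print(lagging_partitions(lag))
```

A strong candidate will go beyond the number itself and ask why lag is growing, for example slow processing, too few consumers for the partition count, or frequent rebalances.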

Structuring Technical Interviews for Kafka Engineer Candidates

After candidates pass the initial Kafka Engineer skills tests, it's time for technical interviews. These interviews are key to assessing a candidate's hard skills and problem-solving abilities. While skills tests help filter out unqualified applicants, technical interviews reveal the best-suited candidates for the role.

Here are some example interview questions for Kafka Engineers:

- Explain Kafka's architecture and its main components.

- How does Kafka ensure fault tolerance and high availability?

- Describe the process of data replication in Kafka.

- What strategies would you use to optimize Kafka's performance?

- How would you handle message ordering and exactly-once delivery in Kafka?

- Can you explain Kafka's offset management and its importance?
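For the message ordering question, it helps interviewers to have a mental model of what a good answer covers: Kafka preserves order within a partition, and records with the same key go to the same partition. The sketch below simulates that routing in plain Python. The `partition_for` and `route` names are hypothetical, and CRC32 is a stand-in hash chosen for simplicity; Kafka's default partitioner uses murmur2.

```python
import zlib
from collections import defaultdict

def partition_for(key, num_partitions):
    """Map a key to a partition. CRC32 is a stand-in; Kafka's default uses murmur2."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions

def route(events, num_partitions=3):
    """Append each (key, value) event to the log of its key's partition."""
    partitions = defaultdict(list)
    for key, value in events:
        partitions[partition_for(key, num_partitions)].append((key, value))
    return partitions

# Made-up events for two orders, interleaved as they might arrive.
events = [
    ("order-1", "created"), ("order-2", "created"),
    ("order-1", "paid"), ("order-2", "cancelled"),
    ("order-1", "shipped"),
]

logs = route(events)
p = partition_for("order-1", 3)
print([value for key, value in logs[p] if key == "order-1"])
```

Because every `order-1` event lands in one partition, a consumer reading that partition sees them in the order they were produced. Ordering across different keys is not guaranteed, which is a useful follow-up to probe.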

What's the difference between a Kafka Developer and a Kafka Administrator?

People often see Kafka Developer and Kafka Administrator roles as interchangeable because both work within the Apache Kafka ecosystem. However, they focus on different aspects of the Kafka platform and require distinct skill sets.

A Kafka Developer primarily concentrates on building and optimizing Kafka applications. This role involves tasks like developing producers and consumers, integrating APIs, and solving application logic issues. Proficiency in programming languages such as Java and Scala, and in frameworks such as Spring, is key. The focus is on event streaming, and the growth path leans towards software architecture.

In contrast, a Kafka Administrator is responsible for the management and maintenance of the Kafka infrastructure. Their work includes cluster configuration, tuning, and ensuring performance and reliability. Familiarity with tools like ZooKeeper (or KRaft, which replaces it in newer Kafka versions) and Kafka Connect is important, as well as a solid understanding of scaling and security. The growth path here leans towards systems architecture.

For a closer look at the skills required for a Kafka Engineer, see our dedicated resources, which can help you identify the right fit for your team.

| | Kafka Developer | Kafka Administrator |
|---|---|---|
| Primary Focus | Develop Kafka applications | Manage Kafka infrastructure |
| Skillset | Programming, API Integration | System Administration, Monitoring |
| Tools Familiarity | Java, Scala, Spring | Zookeeper, Kafka Connect |
| Responsibilities | Design and implement producers/consumers | Cluster configuration and tuning |
| Problem Solving | Application logic issues | Performance and reliability |
| Experience Level | 2+ years in development | 2+ years in administration |
| Growth Path | Software Architect | Systems Architect |
| Keywords | Development, API, Event Streaming | Infrastructure, Scaling, Security |

What are the ranks of Kafka Engineers?

Many organizations struggle to differentiate between various data engineering roles. For Kafka Engineers, there's a clear progression path that reflects increasing expertise and responsibilities.

- Junior Kafka Engineer: This entry-level position involves basic Kafka operations, monitoring, and troubleshooting. They work under supervision and focus on learning the fundamentals of Kafka architecture and data streaming.

- Kafka Engineer: At this mid-level, engineers design and implement Kafka-based solutions. They have a deep understanding of Kafka internals and can optimize performance for various use cases.

- Senior Kafka Engineer: These professionals lead Kafka initiatives and mentor junior team members. They architect complex Kafka ecosystems, integrate with other big data technologies, and make high-level design decisions.

- Principal Kafka Engineer: At the top of the technical ladder, Principal Engineers shape the overall Kafka strategy for an organization. They often collaborate with data architects to design enterprise-wide data streaming solutions.

- Kafka Engineering Manager: This role combines technical expertise with people management skills. They oversee Kafka engineering teams, set priorities, and align Kafka initiatives with business goals.

Streamline Your Kafka Engineer Hiring Process

We've covered the key aspects of hiring Kafka Engineers, from understanding their role to structuring interviews and assessing skills. The process involves careful consideration of qualifications, technical expertise, and cultural fit.

The most important takeaway is to use well-crafted job descriptions and skills tests to make your hiring decisions more accurate. These tools help you identify top talent efficiently and ensure you're bringing the right Kafka Engineers on board to drive your data streaming projects forward.

FAQs

What does a Kafka Engineer do?

A Kafka Engineer is responsible for designing, implementing, and maintaining Kafka clusters. They work on ensuring data is processed and streamed efficiently and securely across systems. This role requires optimizing Kafka performance and troubleshooting any issues that arise.

What skills should a Kafka Engineer have?

Key skills include experience with distributed systems, proficiency in programming languages like Java or Scala, understanding of data streaming, message systems, and experience with cloud platforms. For a comprehensive list, see our skills required for Kafka Engineer.

Where can I find Kafka Engineers?

You can find Kafka Engineers on platforms like LinkedIn, Stack Overflow, and specialized tech job boards. Networking within tech communities and attending data engineering conferences can also be effective ways to find talent.

How can I assess a Kafka Engineer's technical skills?

Technical skills can be assessed using coding tests and real-world case study assignments tailored to Kafka. You can explore our Kafka online test for effective assessments.

What is the difference between a Kafka Developer and a Kafka Administrator?

A Kafka Developer focuses on building and deploying applications using Kafka, while a Kafka Administrator manages and maintains the Kafka infrastructure, ensuring its stability and performance.

How should I structure the interview process?

The interview process should include a mix of technical assessments, problem-solving exercises, and behavioral questions. Focus on the candidate's experience with distributed systems and their problem-solving approach. For detailed guidance, refer to our section on structuring technical interviews.

What are the ranks of Kafka Engineers?

Ranks can range from junior to senior levels, with lead and architect roles for those with significant experience. Responsibilities and expectations increase with seniority, focusing more on strategic implementation and system-wide optimization.
