Hiring a Hadoop Developer can be a complex task for recruiters and hiring managers. Many organizations struggle to find candidates with the right mix of technical skills and big data expertise. The challenge often lies in identifying professionals who can not only work with Hadoop ecosystems but also understand business needs and translate them into effective data solutions.

This guide will walk you through the process of hiring a Hadoop Developer, from understanding the role to conducting effective interviews. We'll cover key skills to look for, where to find top talent, and how to assess candidates effectively. For a comprehensive overview of Hadoop Developer skills, see our guide to the skills required for a Hadoop Developer.

Hiring Process for Hadoop Developer Role

The hiring process for a Hadoop Developer typically spans about 1-2 months. It involves a series of steps to ensure you find the right candidate for your team.

- Define the role: Craft a clear and concise job description outlining the skills and responsibilities required for the Hadoop Developer position. Posting it on relevant platforms is crucial to attract suitable candidates.

- Resume screening: Expect to start receiving resumes within the first 3-4 days. Review the applications to shortlist candidates based on their experience and relevant skills.

- Skill assessment: After shortlisting, invite candidates to participate in skill testing. This could include practical tests or case studies specific to Hadoop development. Allocate about a week for candidates to complete these assessments.

- Interviews: Conduct interviews with the top candidates from the skill assessment stage. This is your opportunity to evaluate their technical expertise and cultural fit within your team.

- Offer stage: Finally, extend an offer to the candidate who best meets your needs. Be prepared for negotiations and discussions regarding salary and benefits.

In summary, the entire hiring process can be streamlined into these steps. Expect some variations in timeline based on your specific organization and speed of decision-making. Now, let’s explore each of these steps in greater detail to provide you with useful resources and checklists.

Skills and Qualifications to Look for in a Hadoop Developer

Hiring a Hadoop Developer can be tricky, especially when it comes to defining the right candidate profile. What might be essential for one organization could simply be a nice-to-have for yours. Clarifying what's required versus what is preferred can streamline your hiring process and ensure you attract the best talent.

When outlining the skills and qualifications, consider distinguishing between required skills—those that are non-negotiable—and preferred skills, which can enhance a candidate's fit but are not mandatory. This approach not only helps in narrowing down candidates but also opens the field to those who bring diverse experiences.

Here are the required and preferred skills and qualifications to consider when hiring a Hadoop Developer:

| Required skills and qualifications | Preferred skills and qualifications |
|---|---|
| Three or more years of experience with the Hadoop ecosystem | Experience with cloud services such as AWS or Azure |
| Proficiency in Hadoop-related tools such as HDFS, MapReduce, Hive, and Pig | Knowledge of Spark, Kafka, or other big data tools |
| Strong programming skills in Java or Python | Experience with data visualization tools like Tableau or Power BI |
| Experience with data warehousing and ETL processing | Familiarity with machine learning concepts |
| Solid understanding of distributed computing principles | Proven success in cross-functional team environments |

Top 10 Platforms to Hire Hadoop Developers

Now that you have crafted a detailed job description, the next step is to post it on job listing sites to attract potential candidates. Finding the right platform is key to sourcing skilled Hadoop Developers who meet your project's specific needs.

LinkedIn

LinkedIn is ideal for finding and hiring full-time Hadoop Developers, thanks to its professional network and comprehensive candidate profiles.

Indeed

Indeed is a broad job listing platform that's great for reaching a large pool of candidates for full-time positions.

Upwork

Upwork is a leading platform for hiring freelance Hadoop Developers for project-based or short-term work.

The remaining platforms include Freelancer and AngelList (whose talent platform now operates as Wellfound) for startups or freelance arrangements, Dice for tech-focused roles, and Toptal and FlexJobs for remote hiring, which lets you tap into a global pool of talented developers. GitHub Jobs and Stack Overflow Jobs were long-standing options for highly technical positions, but both job boards have since been discontinued, so rely on the others for active listings. Explore more about harnessing these platforms in our resource on remote hiring.

Keywords to Look for in a Hadoop Developer Resume

Resume screening helps recruiters quickly identify promising Hadoop Developer candidates from a large applicant pool. It's a time-saving first step before moving on to more in-depth evaluation methods.

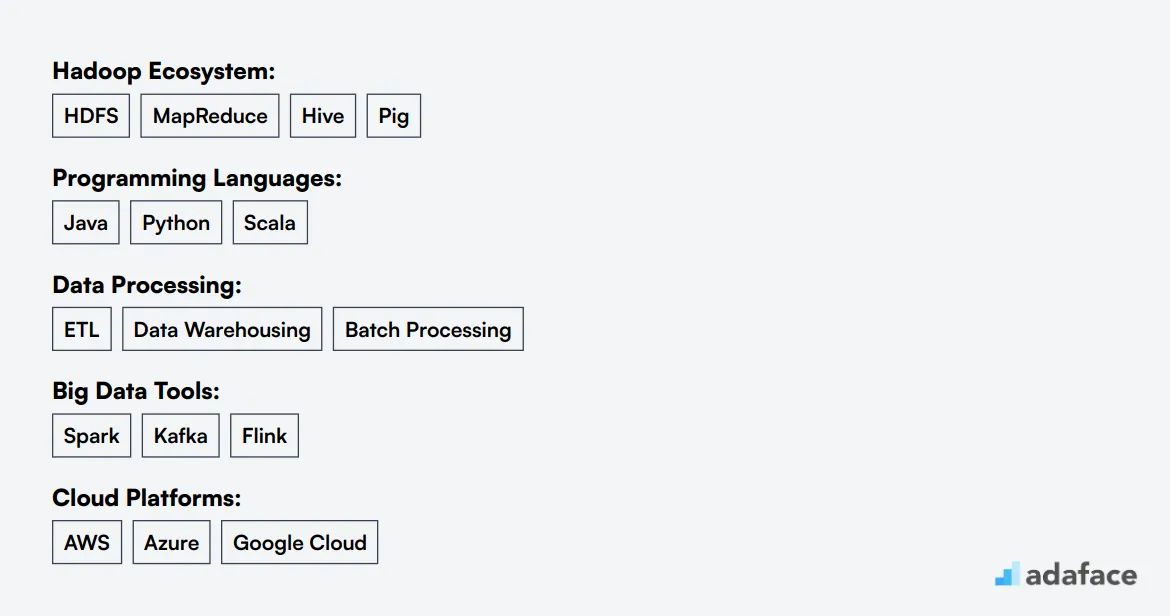

When manually screening resumes, focus on key Hadoop skills and tools. Look for experience with HDFS, MapReduce, Hive, and Pig, as well as programming languages like Java or Python. Familiarity with cloud platforms like AWS or Azure is also valuable.

AI-powered resume screening can streamline this process even further. Large language models such as GPT can analyze resumes against a set of predefined criteria, helping you quickly surface the most qualified candidates.

Here's a sample prompt for AI-assisted Hadoop Developer resume screening:

TASK: Screen resumes for Hadoop Developer role

INPUT: Resumes

OUTPUT: For each resume, provide:

- Email

- Name

- Matching keywords

- Score (out of 10)

- Recommendation

- Shortlist (Yes/No/Maybe)

KEYWORDS:

- Hadoop ecosystem (HDFS, MapReduce, Hive, Pig)

- Programming (Java, Python)

- Big data tools (Spark, Kafka)

- Cloud platforms (AWS, Azure)

- Data processing (ETL, data warehousing)
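If you would rather run a first pass without an AI tool, the same keyword list can drive a simple script. The sketch below is a minimal illustration, not a production screener: the function names, the scoring formula (share of keywords matched, scaled to 10), and the shortlist thresholds are all assumptions made for this example.

```python
import re

# Keyword groups copied from the sample prompt above.
KEYWORD_GROUPS = {
    "Hadoop ecosystem": ["HDFS", "MapReduce", "Hive", "Pig"],
    "Programming": ["Java", "Python"],
    "Big data tools": ["Spark", "Kafka"],
    "Cloud platforms": ["AWS", "Azure"],
    "Data processing": ["ETL", "data warehousing"],
}


def match_keywords(resume_text):
    """Return the keywords found in the resume, grouped by category."""
    matches = {}
    for group, keywords in KEYWORD_GROUPS.items():
        found = [
            kw for kw in keywords
            # Whole-word match so "Java" does not match "JavaScript".
            if re.search(r"\b" + re.escape(kw) + r"\b", resume_text, re.IGNORECASE)
        ]
        if found:
            matches[group] = found
    return matches


def score_resume(resume_text):
    """Score out of 10: the share of all keywords that appear (assumed formula)."""
    total = sum(len(kws) for kws in KEYWORD_GROUPS.values())
    hits = sum(len(kws) for kws in match_keywords(resume_text).values())
    return round(10 * hits / total)


def shortlist(score):
    """Map a score to Yes/Maybe/No using hypothetical thresholds."""
    if score >= 7:
        return "Yes"
    if score >= 4:
        return "Maybe"
    return "No"


if __name__ == "__main__":
    sample = "Built ETL pipelines on HDFS with Hive and Spark using Java on AWS."
    result = score_resume(sample)
    print(match_keywords(sample), result, shortlist(result))
```

Keyword matching only shows that a term appears on the page, so treat the score as a way to order the pile rather than a verdict on the candidate.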

Recommended Skills Tests for Assessing Hadoop Developers

Skills tests are an effective way to evaluate Hadoop Developer candidates beyond their resumes. They provide objective insights into a candidate's technical abilities and problem-solving skills. Here are the top tests we recommend for assessing Hadoop Developers:

Hadoop Test: This Hadoop online test evaluates a candidate's understanding of core Hadoop concepts, HDFS, and MapReduce. It helps gauge their ability to work with large-scale distributed data processing.

MapReduce Test: The MapReduce online test assesses a candidate's proficiency in writing and optimizing MapReduce jobs. This is key for processing and analyzing big data efficiently in Hadoop ecosystems.

Hive Test: A Hive test evaluates the candidate's skills in using Hive for data warehousing and SQL-like queries on Hadoop. It's important for developers who need to work with structured data in Hadoop.

Pig Test: The Pig online test checks a candidate's ability to use Pig Latin for scripting and data flow. This is useful for assessing skills in high-level data processing on Hadoop.

Data Engineer Test: A comprehensive Data Engineer test can evaluate broader skills relevant to Hadoop development. It covers aspects like data modeling, ETL processes, and big data technologies, providing a well-rounded assessment of a candidate's capabilities.
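To give a sense of what a practical MapReduce exercise might look like, here is a small word-count sketch. It is an assumption-laden illustration: it simulates the map, shuffle, and reduce phases in plain Python rather than running on a Hadoop cluster, and the function names are hypothetical, not taken from any of the tests above.

```python
from collections import defaultdict


def map_phase(lines):
    """Map: emit a (word, 1) pair for every word in every input line."""
    for line in lines:
        for word in line.lower().split():
            yield word, 1


def shuffle(pairs):
    """Shuffle: group all emitted values by key, as the framework would."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Reduce: sum the counts collected for each word."""
    return {key: sum(values) for key, values in grouped.items()}


def word_count(lines):
    """Run the three phases in sequence on an in-memory list of lines."""
    return reduce_phase(shuffle(map_phase(lines)))


if __name__ == "__main__":
    print(word_count(["big data", "Big hadoop data"]))
```

A candidate who can explain why the shuffle step exists, and what changes when the input no longer fits on one machine, is showing the understanding these tests aim to measure.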

Structuring the Interview Stage for Hiring Hadoop Developers

Once candidates have successfully passed the skills tests, it's crucial to bring them into the technical interview stage where their hard skills are further assessed. Skills tests are excellent for filtering out those who do not meet the basic requirements, but technical interviews ensure you find the best fit for the Hadoop Developer role. This phase allows you to evaluate candidates' problem-solving abilities and their hands-on experience.

Here are some sample interview questions to consider:

1. Can you explain the major components of the Hadoop ecosystem? This helps assess their overall understanding of Hadoop.

2. How do you handle data input and output in Hadoop? This question sheds light on their data management skills.

3. What are some optimization techniques you implement in Hadoop jobs? Through this, you evaluate their efficiency in managing resources.

4. Describe a challenging project you worked on using Hadoop. This question provides insight into their problem-solving skills and experience.

For more specialized skills, you could consider exploring Hadoop Developer Interview Questions.

What is the cost of hiring a Hadoop Developer?

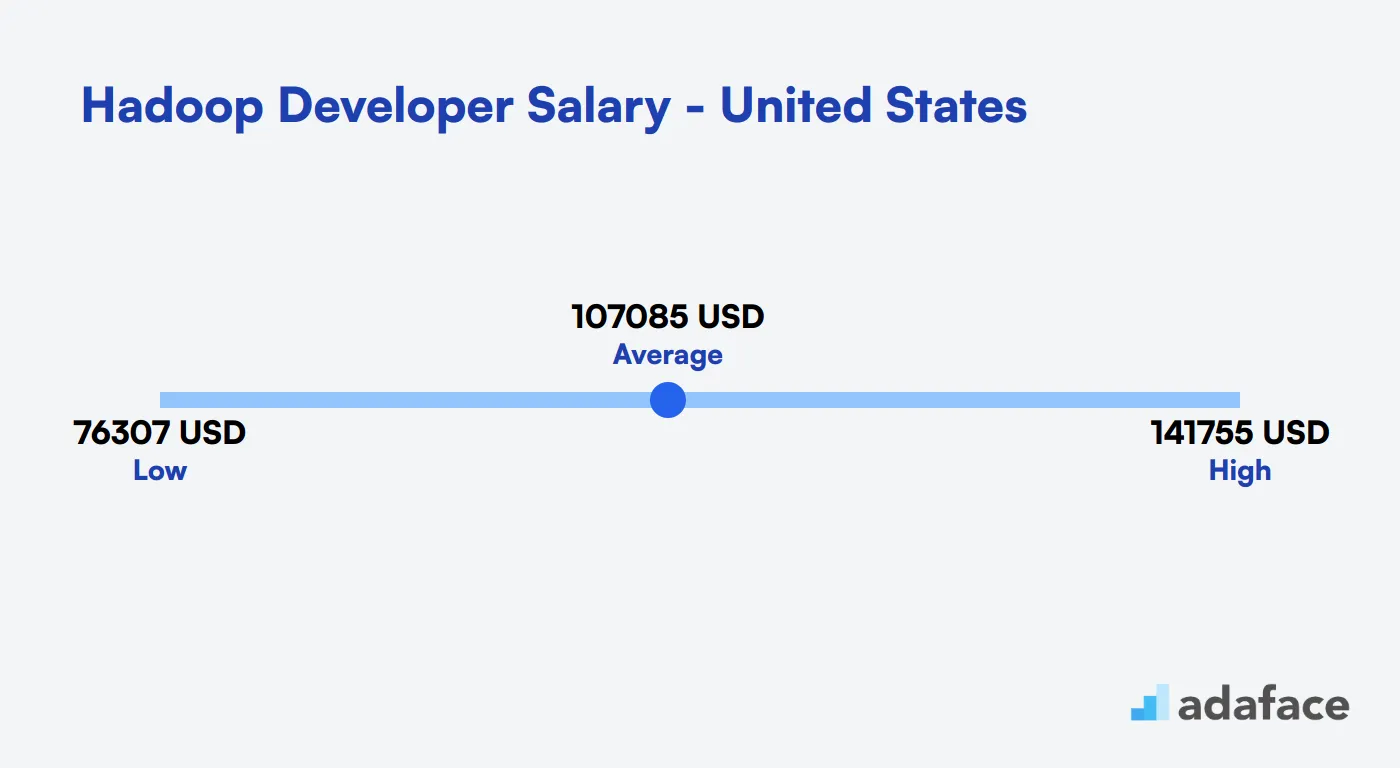

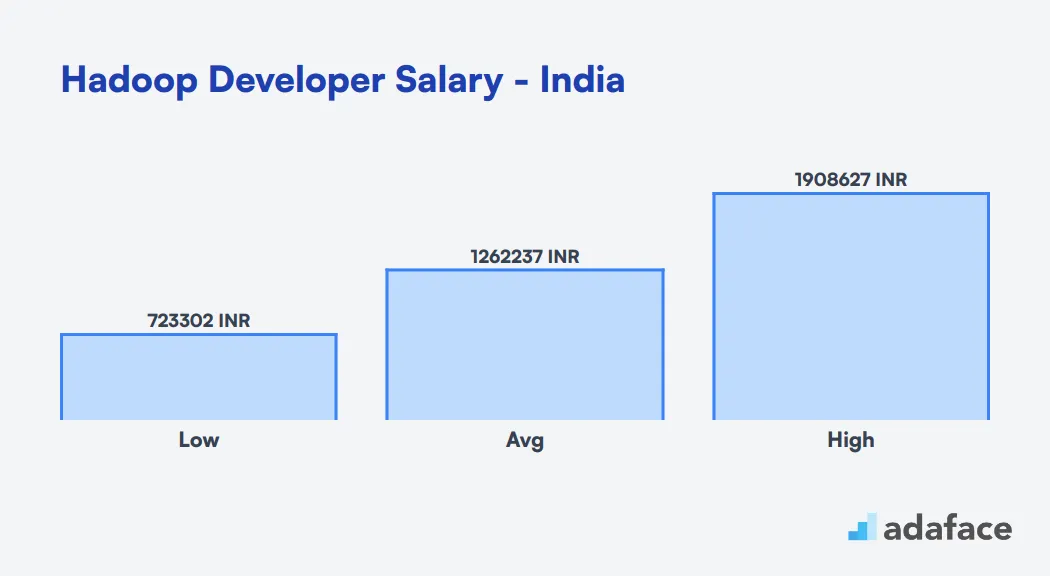

The cost of hiring a Hadoop Developer varies significantly based on factors such as geography, experience level, and the specific skills required. In the United States, salaries range from $76,307 to $141,755, while in India, the average annual salary is approximately INR 1,262,237, with a range from INR 723,303 to INR 1,908,627. Understanding these variations will help you set a competitive salary that attracts the right talent.
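When benchmarking an offer, it can help to see where a figure sits within the quoted range. The sketch below is illustrative only: `SALARY_BANDS` simply restates the ranges from this article, and `position_in_band` is a hypothetical helper written for this example.

```python
# Salary ranges quoted in this article (annual, local currency).
SALARY_BANDS = {
    "US": (76_307, 141_755),         # USD
    "India": (723_303, 1_908_627),   # INR
}


def position_in_band(offer, country):
    """Return where an offer falls in the band: 0.0 is the minimum, 1.0 the maximum."""
    low, high = SALARY_BANDS[country]
    return (offer - low) / (high - low)


if __name__ == "__main__":
    # The Indian average quoted above, expressed as a position in the range.
    print(f"{position_in_band(1_262_237, 'India'):.0%}")
```

A value well below 0.5 suggests the offer sits in the lower half of the quoted range, which may matter more for senior candidates than for junior ones.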

Hadoop Developer Salary in the United States

Salaries for Hadoop Developers in the United States range from $76,307 to $141,755, with a median of $104,004. This variation depends on factors like location, experience, and company size.

Top-paying cities for Hadoop Developers include Washington, DC, with a median salary of $130,001, and San Jose, CA, offering up to $130,157. Other tech hubs like New York, NY, and Dallas, TX, report salaries in the $70,000 to $80,000 range.

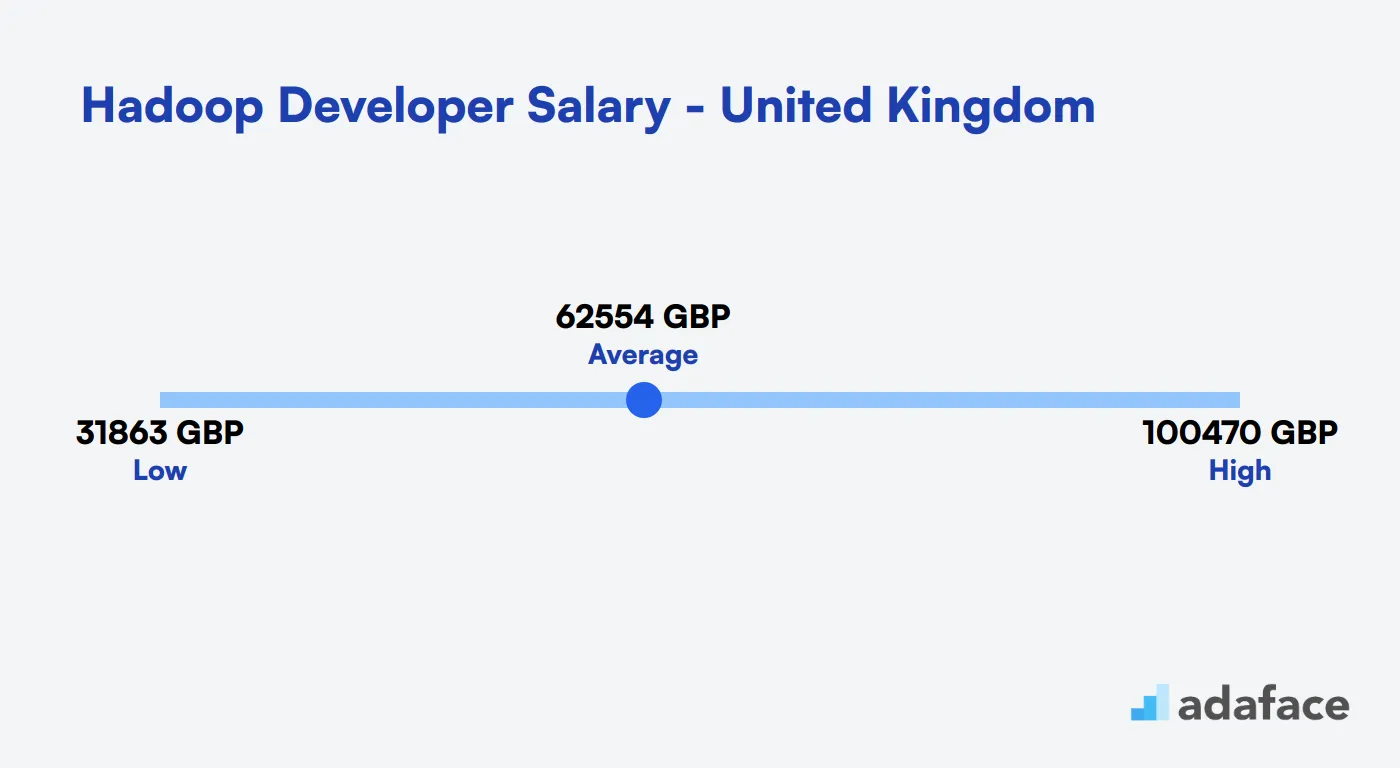

Hadoop Developer Salary in the United Kingdom

The average salary for a Hadoop Developer in the United Kingdom is approximately £55,000 annually. Entry-level positions may start at around £40,000, while experienced professionals can earn upwards of £80,000. These figures can vary based on factors such as location, experience, and the complexity of projects handled.

Hadoop Developer Salary in India

The average salary for a Hadoop Developer in India is approximately INR 1,262,237 annually. Salaries can range from a minimum of INR 723,303 to a maximum of about INR 1,908,627, depending on experience and location.

Key cities such as New Delhi and Bengaluru report averages around INR 1,514,667 and INR 1,250,000 respectively. Other cities like Pune and Chennai have lower average salaries, closer to INR 833,503 and INR 1,052,993 respectively.

What's the difference between a Hadoop Developer and a Hadoop Administrator?

Many people confuse Hadoop Developers and Hadoop Administrators due to their shared involvement with Hadoop ecosystems. However, these roles have distinct responsibilities and skill sets that set them apart in the big data landscape.

Hadoop Developers focus on creating applications that process and analyze large datasets. They work primarily with programming languages like Java and use tools such as MapReduce, Hive, Pig, and Spark. Their main goal is to improve data analysis efficiency and develop data-centric solutions.

On the other hand, Hadoop Administrators manage and maintain the Hadoop infrastructure. They are responsible for cluster configuration, monitoring, and load balancing. Their toolkit includes Linux, Shell Scripting, HBase, Ambari, and ZooKeeper. Administrators aim to ensure system reliability and uptime.

While Developers typically work within development teams to create data processing jobs, Administrators collaborate with operations teams to keep the Hadoop ecosystem running smoothly. Understanding these differences is key for recruiters to find the right fit for their organization's Hadoop needs.

| | Hadoop Developer | Hadoop Administrator |
|---|---|---|
| Primary Role | Develop Hadoop applications | Manage Hadoop clusters |
| Focus Area | Application development | Cluster configuration |
| Technical Skills | Java, MapReduce, Hive | Linux, Shell Scripting, HBase |
| Responsibilities | Data processing, Writing jobs | Cluster monitoring, Load balancing |
| Tools | Pig, Spark | Ambari, ZooKeeper |
| Problem Solving | Data-centric solutions | Infrastructure-centric solutions |
| Work Environment | Development teams | Operations teams |
| Key Objective | Data analysis efficiency | System reliability and uptime |

What are the ranks of Hadoop Developers?

Hiring managers are often unsure how the various roles within the Hadoop ecosystem differ. Understanding the different ranks of Hadoop developers is essential for effective recruitment, as each level comes with its own skills and responsibilities.

- Junior Hadoop Developer: This entry-level position is typically filled by recent graduates or professionals who are new to the Hadoop environment. Junior developers often assist in basic coding tasks, data processing, and learning the Hadoop tools and frameworks.

- Hadoop Developer: A mid-level Hadoop developer usually has a few years of experience and is capable of working independently. They are responsible for designing, developing, and implementing Hadoop solutions, along with optimizing performance and ensuring data quality.

- Senior Hadoop Developer: This role requires extensive experience and a deep understanding of Hadoop architecture. Senior developers lead projects, mentor junior team members, and are often involved in strategic planning and technology choices related to Big Data.

- Hadoop Architect: The Hadoop architect is responsible for the overall design and architecture of Hadoop solutions. They have advanced skills in system integration and ensure that all components work together efficiently, often working closely with other IT teams.

- Hadoop Administrator: While not a developer in the traditional sense, the Hadoop administrator plays a vital role in managing and maintaining Hadoop clusters. They handle installation, configuration, and performance tuning, ensuring system reliability.

For more insights on the responsibilities and requirements of a Hadoop developer, you may want to refer to our detailed Hadoop Developer Job Description.

Streamline Your Hadoop Developer Hiring Process

In this post, we've covered the key aspects of hiring Hadoop Developers, from crafting job descriptions to conducting interviews. We've explored the essential skills, qualifications, and platforms to find top talent in this field.

The most important takeaway is to use accurate job descriptions and skills assessments to make your hiring process more effective. Consider using a Hadoop online test to evaluate candidates' technical abilities objectively. This approach will help you identify the best Hadoop Developers who can contribute to your data-driven projects.

FAQs

Key skills for a Hadoop Developer include proficiency in Java, Python, or Scala, experience with Hadoop ecosystem tools (HDFS, MapReduce, Hive, Pig), knowledge of SQL and NoSQL databases, and understanding of big data concepts. Soft skills like problem-solving and communication are also important.

You can assess Hadoop skills through a combination of technical interviews, coding challenges, and skills assessments. Consider using our Hadoop online test to evaluate candidates' practical knowledge and problem-solving abilities in Hadoop environments.

Good sources for finding Hadoop Developers include specialized job boards, LinkedIn, GitHub, tech conferences, and big data meetups. You can also consider partnering with universities offering big data programs or using recruitment agencies specializing in tech talent.

A job description for a Hadoop Developer should include required technical skills, experience level, specific responsibilities, projects they'll work on, and any industry-specific knowledge needed. For a detailed template, refer to our Hadoop Developer job description guide.

While there's overlap, Hadoop Developers specifically focus on building and maintaining Hadoop-based big data solutions. Data Engineers have a broader scope, working with various data technologies beyond Hadoop. For more on Data Engineer roles, see our Data Engineer job description.

Ask a mix of technical and situational questions. Cover Hadoop architecture, MapReduce concepts, data processing scenarios, and problem-solving approaches. For a comprehensive list, check our Hadoop Developer interview questions article.

Besides technical skills, assess cultural fit and soft skills. Look for candidates who demonstrate good communication, teamwork, and adaptability. Consider involving team members in the interview process and possibly running a trial project to see how the candidate collaborates in real scenarios.
