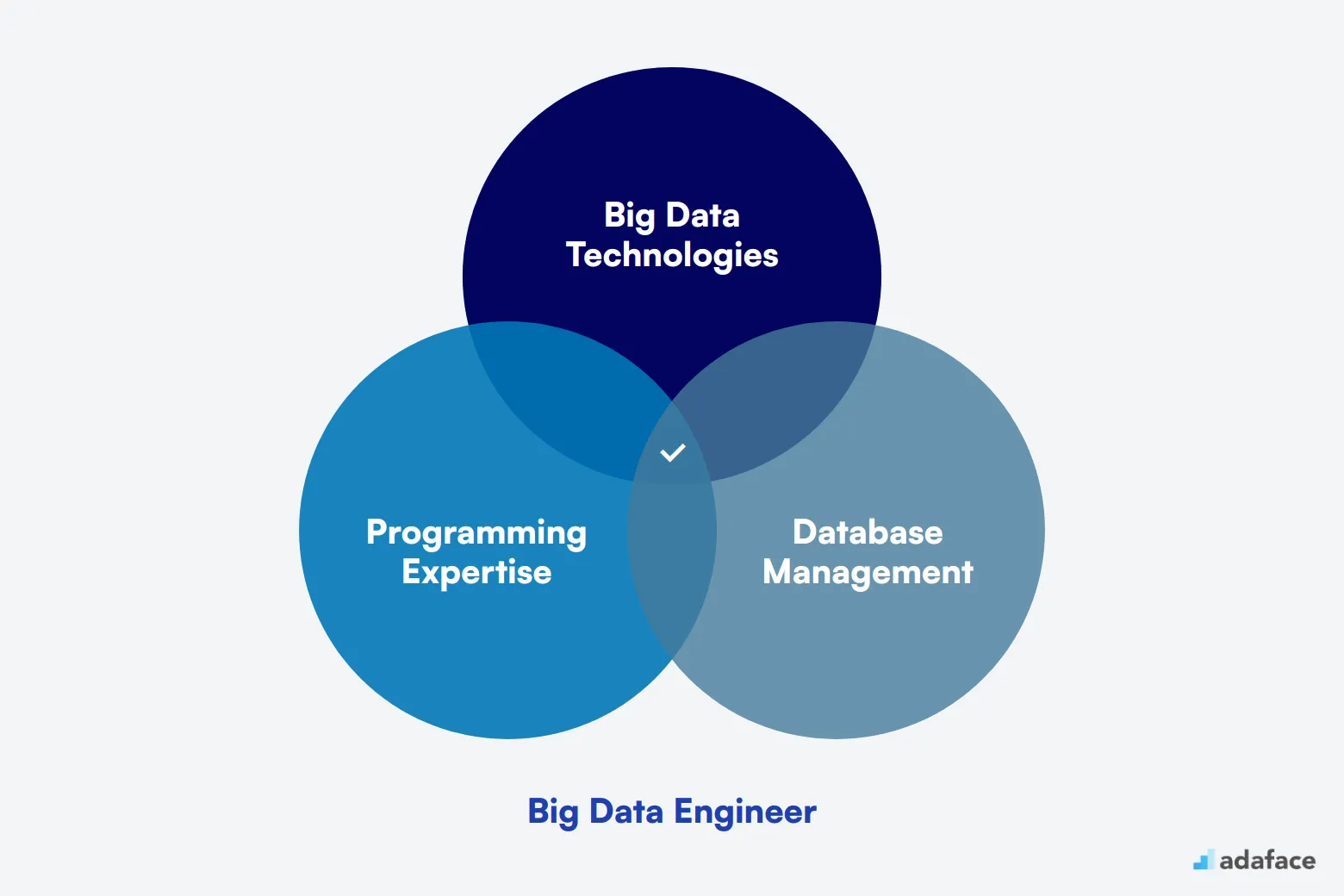

In today's data-driven world, hiring a Big Data Engineer is critical for organizations looking to harness the power of big data to drive business decisions. However, many companies struggle to identify the right talent due to a lack of understanding of what truly defines an exceptional Big Data Engineer. These professionals must not only have deep technical expertise but also the ability to understand business needs and translate them into actionable data insights.

This article is a guide for recruiters and hiring managers on how to hire the best Big Data Engineers for their teams. We cover the hiring process, the skills to look for, the platforms where you can find candidates, and how to assess their capabilities effectively. For a detailed sample job description, see our Big Data Engineer Job Description.


Why Hire a Big Data Engineer?

To determine if your company needs a Big Data Engineer, start by identifying data-related challenges or opportunities. For instance, you might be struggling to process large volumes of customer data for personalized marketing campaigns.

Consider use cases where a Big Data Engineer could add value:

- Optimizing data pipelines for real-time analytics

- Implementing distributed computing solutions for faster data processing

- Designing scalable data storage systems to handle growing datasets

If these tasks align with your business goals, it may be time to hire a Big Data Engineer. For smaller projects or to test the waters, consider working with a consultant before committing to a full-time hire.

Big Data Engineer Hiring Process

The hiring process for a Big Data Engineer role typically takes around 1-2 months. Here's a brief overview of the timeline:

- Job Description: Post an accurate and detailed job description on relevant job boards and career sites.

- Resume Screening: Expect resumes to start pouring in within the first 3-4 days. Shortlist candidates based on their qualifications and experience.

- Skill Assessment: Evaluate the shortlisted candidates' technical skills through coding tests or case studies. This process may take about a week.

- Interviews: Conduct technical and behavioral interviews with the top candidates to assess their fit for the role.

- Offer Stage: Extend an offer to the most suitable candidate.

While the overall process may take 1-2 months, the timeline can vary depending on the urgency of the hire and the availability of suitable candidates. We'll dive deeper into each step to ensure a smooth and efficient hiring process.

Skills and Qualifications to Look for in a Big Data Engineer

When hiring a Big Data Engineer, crafting the right candidate profile can be tricky. For instance, while many engineers might have experience with Hadoop, your company could prioritize other frameworks like Spark. It's important to clearly differentiate between required skills and those that are simply nice to have.

Start with foundational requirements such as a Bachelor's degree in Computer Science or a related field and three or more years of experience in big data engineering or software development. Candidates should also be proficient in programming languages like Python or Java and have experience with big data frameworks such as Hadoop or Spark.

While a Master's degree and expertise in cloud platforms like AWS or Google Cloud can be advantageous, these are not necessarily deal-breakers. Familiarity with real-time data processing tools like Kafka or knowledge of data warehousing solutions can also set a candidate apart. For more insights, our tech recruitment blog offers additional guidance.

| Required skills and qualifications | Preferred skills and qualifications |
|---|---|
| Bachelor's degree in Computer Science, Engineering, or a related field | Master's degree in Computer Science or a related field |
| Three or more years of experience in big data engineering or software development | Experience with cloud platforms like AWS, Google Cloud, or Azure |
| Proficiency in programming languages such as Python, Java, or Scala | Knowledge of real-time data processing tools like Kafka or Storm |
| Experience with big data frameworks such as Hadoop and Spark | Familiarity with data warehousing solutions such as Redshift or Snowflake |
| Strong SQL skills and experience with databases like MySQL, PostgreSQL, or MongoDB | Ability to work in an agile and collaborative environment |

How to Write a Big Data Engineer Job Description

Once you have a candidate profile ready, the next step is to capture that information in a job description that attracts the right candidates. A well-crafted job description is key to finding the right Big Data Engineer for your organization.

- Highlight key responsibilities and impact: Clearly outline the specific tasks the Big Data Engineer will own, such as designing data processing systems or developing data models. Emphasizing how their work contributes to business goals will attract candidates who are looking for meaningful roles.

- Balance technical skills with soft skills: While it's important to specify technical expertise in tools like Hadoop or Spark, don't neglect soft skills such as teamwork and critical thinking. A well-rounded engineer who can collaborate and communicate effectively will likely integrate better into your team.

- Showcase your company’s unique selling points: Communicate what sets your company apart, whether that’s innovative projects, opportunities for growth, or a strong team culture. These aspects can entice top-tier talent and make your role stand out from similar openings in the market.

For a detailed understanding, refer to the Big Data Engineer job description.

Top Platforms to Find Big Data Engineers

Now that you have a well-crafted job description, it's time to post it on job listing sites to attract qualified Big Data Engineers. The right platform can significantly impact the quality and quantity of applicants you receive. Let's explore some of the best options available.

LinkedIn Jobs

Ideal for posting full-time positions and reaching a large pool of professional Big Data Engineers. Offers robust search and filtering options for recruiters.

Indeed

Widely used job board with a large user base. Suitable for posting various types of Big Data Engineer positions and reaching a diverse candidate pool.

Dice

Specialized in tech jobs, making it excellent for targeting Big Data Engineers. Offers tools for precise skill-based searching and candidate matching.

Other notable options include the Stack Overflow and Kaggle communities, which reach developers and data science specialists; Wellfound (formerly AngelList Talent), which focuses on startups; and FlexJobs, which focuses on remote work. Choosing the right mix of platforms for your company's needs and the role's requirements will help you find a strong Big Data Engineer for your team.

Keywords to Look for in Big Data Engineer Resumes

Resume screening is a critical first step in hiring Big Data Engineers. It helps you quickly identify candidates with the right skills and experience before investing time in interviews.

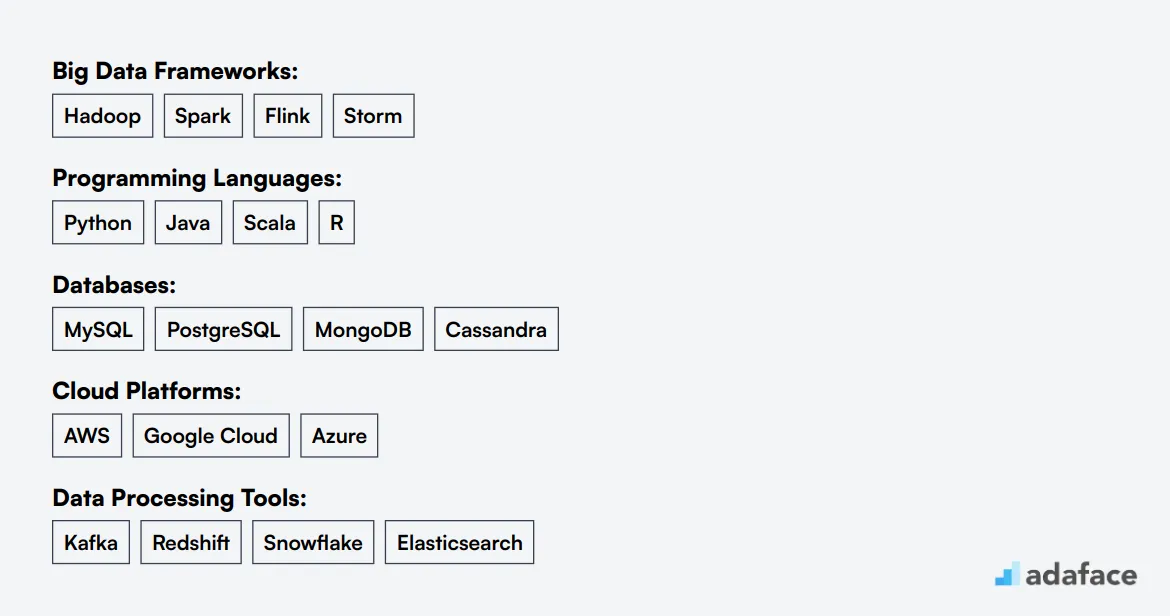

When manually screening resumes, focus on key technical skills like Hadoop, Spark, Python, Java, and SQL. Look for experience with cloud platforms (AWS, Google Cloud, Azure) and data processing tools (Kafka, Redshift). Our Big Data Engineer skills guide can help you identify must-have competencies.

AI-powered tools can streamline the screening process. You can use large language models like GPT or Claude with a custom prompt to analyze resumes against your job requirements. This saves time and helps keep the evaluation consistent across applications.

Here's a sample prompt for AI resume screening:

TASK: Screen resumes for Big Data Engineer role

INPUT: Resumes

OUTPUT:

- Candidate name and email

- Matching keywords

- Score (0-10)

- Shortlist recommendation (Yes/No/Maybe)

KEYWORDS:

- Big Data: Hadoop, Spark, Flink

- Languages: Python, Java, Scala

- Databases: SQL, MongoDB, Cassandra

- Cloud: AWS, GCP, Azure

- Tools: Kafka, Redshift, Snowflake
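If you want a deterministic first pass before (or alongside) an LLM, the keyword groups from the prompt above can be applied directly in code. The sketch below is a minimal, hypothetical Python implementation: the function names (`screen_resume`, `has_keyword`), the "first non-empty line is the candidate's name" rule, the scoring (two points per keyword group with at least one match) and the Yes/Maybe/No cut-offs are all assumptions for illustration, not features of any particular screening tool.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Keyword groups copied from the sample prompt above.
KEYWORDS: dict[str, list[str]] = {
    "Big Data": ["Hadoop", "Spark", "Flink"],
    "Languages": ["Python", "Java", "Scala"],
    "Databases": ["SQL", "MongoDB", "Cassandra"],
    "Cloud": ["AWS", "GCP", "Azure"],
    "Tools": ["Kafka", "Redshift", "Snowflake"],
}

EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


@dataclass
class ScreeningResult:
    name: str
    email: Optional[str]
    matches: dict[str, list[str]]
    score: int
    recommendation: str


def has_keyword(text: str, keyword: str) -> bool:
    # Whole-word, case-insensitive match, so "SQL" does not fire on "MySQL"
    # and "Java" does not fire on "JavaScript".
    pattern = r"(?<![A-Za-z0-9])" + re.escape(keyword) + r"(?![A-Za-z0-9])"
    return re.search(pattern, text, re.IGNORECASE) is not None


def screen_resume(text: str) -> ScreeningResult:
    # Assumption (hypothetical): the first non-empty line holds the name.
    lines = [line.strip() for line in text.splitlines() if line.strip()]
    name = lines[0] if lines else ""
    email_match = EMAIL_RE.search(text)
    email = email_match.group(0) if email_match else None

    # Collect the matching keywords for each group.
    matches = {
        group: [kw for kw in kws if has_keyword(text, kw)]
        for group, kws in KEYWORDS.items()
    }

    # Score 0-10 by the share of groups with at least one hit.
    groups_hit = sum(1 for found in matches.values() if found)
    score = round(10 * groups_hit / len(KEYWORDS))

    # Hypothetical cut-offs; tune them to your own pipeline.
    if score >= 8:
        recommendation = "Yes"
    elif score >= 4:
        recommendation = "Maybe"
    else:
        recommendation = "No"
    return ScreeningResult(name, email, matches, score, recommendation)


if __name__ == "__main__":
    sample = "Jane Doe\njane.doe@example.com\nBuilt Spark and Kafka pipelines on AWS in Python."
    print(screen_resume(sample))
```

Whole-word matching is a deliberate choice here: a plain substring search would count "MySQL" as "SQL" and "JavaScript" as "Java", inflating scores. A keyword hit only shows that a term appears on the resume, so treat the score as a triage aid and review shortlisted resumes yourself.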

Which skills tests should you use to assess Big Data Engineers?

Skills tests let you measure the actual abilities of Big Data Engineers beyond what their resumes claim. They help you objectively evaluate candidates' skills in managing and processing large datasets, so you can find the right fit for your projects.

The Data Engineer Test is tailored to assess a candidate's ability to design, build, and manage scalable data pipelines. It checks whether candidates can handle data integration and processing with technologies like SQL and NoSQL databases.

Use the Hadoop Online Test to gauge your candidates’ knowledge of Hadoop's ecosystem and their ability to work with complex distributed systems. This is important for roles that process vast amounts of data using MapReduce and HDFS.

The Apache NiFi Online Test helps you understand whether candidates can efficiently design and maintain data flows. It suits roles that require reliable data movement and transformation across diverse systems.

Consider the Spark Online Test to assess proficiency with Apache Spark for data processing tasks. It is well suited to evaluating skills in both batch and real-time (streaming) processing, which are central to most big data projects.

Finally, evaluate candidates’ messaging and stream-processing capabilities with the Kafka Online Test. It measures their ability to design applications on top of Kafka, which is essential for high-throughput data handling.

Effective Case Study Assignments for Hiring Big Data Engineers

Case study assignments are valuable tools for assessing Big Data Engineers, but they come with challenges. They can be time-consuming and may deter candidates, so you risk losing top talent. Despite these drawbacks, they provide deep insight into a candidate’s problem-solving abilities and technical skills. Let's look at a few recommended case study assignments.

Data Pipeline Design: This assignment involves creating a data pipeline to process large datasets. It's recommended because it evaluates a candidate’s ability to design scalable and efficient pipelines, a key skill for Big Data Engineers. Review the Big Data Engineer interview questions to align your case study with industry standards.

Real-time Data Processing: In this case study, candidates are tasked with setting up a system for real-time data processing using technologies like Apache Kafka or Spark. This tests their proficiency in handling real-time data streams and implementing solutions that require low-latency processing.

Data Storage Optimization: This assignment challenges candidates to optimize data storage solutions for cost and performance. It is crucial for evaluating their understanding of various storage solutions and their ability to implement effective storage strategies.

How to Structure the Interview Stage When Hiring Big Data Engineers

After candidates pass the initial skills tests, bring them into technical interviews where their hard skills can be evaluated in more depth. Skills tests are excellent for filtering out unsuitable candidates, but on their own they may not identify the best-suited person for the role. In these interviews, focus on questions that probe practical knowledge and problem-solving ability.

Here are some example questions to consider during a Big Data Engineer interview:

1. What are the key differences between Hadoop and Spark, and when would you use each? This question assesses their understanding of popular big data tools.

2. Can you describe a time you optimized a complex data processing task? The answer shows hands-on experience with improving workflows.

3. How do you ensure data quality and integrity within a large dataset? This reveals their approach to data management.

4. Describe your experience with NoSQL databases like Cassandra or MongoDB. This tests their familiarity with NoSQL development.

5. How do you handle real-time data streaming? This shows whether they can manage the high-velocity data streams that are central to big data engineering.

What are the ranks of Big Data Engineers?

Big Data Engineers often have a career progression that reflects their growing expertise and responsibilities. Understanding these ranks can help recruiters and hiring managers better target their search for the right talent level.

- Junior Big Data Engineer: This entry-level position is for those new to the field. They typically work on smaller projects under supervision, learning the ropes of big data technologies and best practices.

- Big Data Engineer: At this mid-level rank, engineers take on more complex projects independently. They have a solid grasp of big data tools and can design and implement data pipelines.

- Senior Big Data Engineer: These professionals lead projects and mentor junior team members. They have deep expertise in multiple big data technologies and can architect large-scale data solutions.

- Lead Big Data Engineer: This role involves overseeing entire big data initiatives. They collaborate with stakeholders to align data strategies with business goals and manage teams of engineers.

- Big Data Architect: At the top of the technical ladder, architects design enterprise-wide big data ecosystems. They make high-level decisions about technologies and methodologies used across the organization.

When hiring, it's important to align the rank with your project needs and team structure. You can use Big Data Engineer interview questions to assess candidates at different levels effectively.

Hire the Best Big Data Engineers for Your Team

We've covered the key aspects of hiring Big Data Engineers, from understanding their role to crafting job descriptions and conducting interviews. The process involves identifying essential skills, using the right platforms, and assessing candidates through various methods.

The most important takeaway is to use accurate job descriptions and skills tests to make your hiring process more effective. By focusing on these elements, you'll be better equipped to find and hire top-tier Big Data Engineers who can drive your data initiatives forward.


FAQs

What skills should you look for in a Big Data Engineer?

Look for technical proficiency in tools like Hadoop and Spark, experience with databases, programming skills in languages like Python or Java, and strong problem-solving abilities.

What does a Big Data Engineer do?

A Big Data Engineer designs, builds, and maintains scalable data systems, ensuring efficient data processing and storage to enable data-driven insights.

How do you assess a Big Data Engineer's skills?

Use technical assessments and practical case study assignments to evaluate candidates' technical knowledge and problem-solving skills. Consider tests such as the Data Engineer Test.

Where can you find Big Data Engineers?

Post job openings on tech job boards, engage with niche communities, and consider platforms like LinkedIn or specialized recruitment agencies focused on tech talent.

How do you write a Big Data Engineer job description?

Clearly outline job expectations, required skills, and qualifications. Highlight the specific tools and technologies your team uses. For sample job descriptions, refer to our Big Data Engineer Job Description guide.

What are the common interview stages for Big Data Engineers?

Common stages include an initial screening, technical interviews focused on problem-solving and coding, and final-round interviews that evaluate cultural fit and collaboration skills.

Which skills tests should you use for Big Data Engineers?

Skills tests should assess data processing capabilities, database management, and programming skills. Consider tools like the Data Engineer Test and the Spark Online Test.
