Hiring data professionals who are skilled in Hive can be difficult without the right screening process. Preparing a list of questions ensures that you can evaluate candidates thoroughly and understand their expertise in Hive.

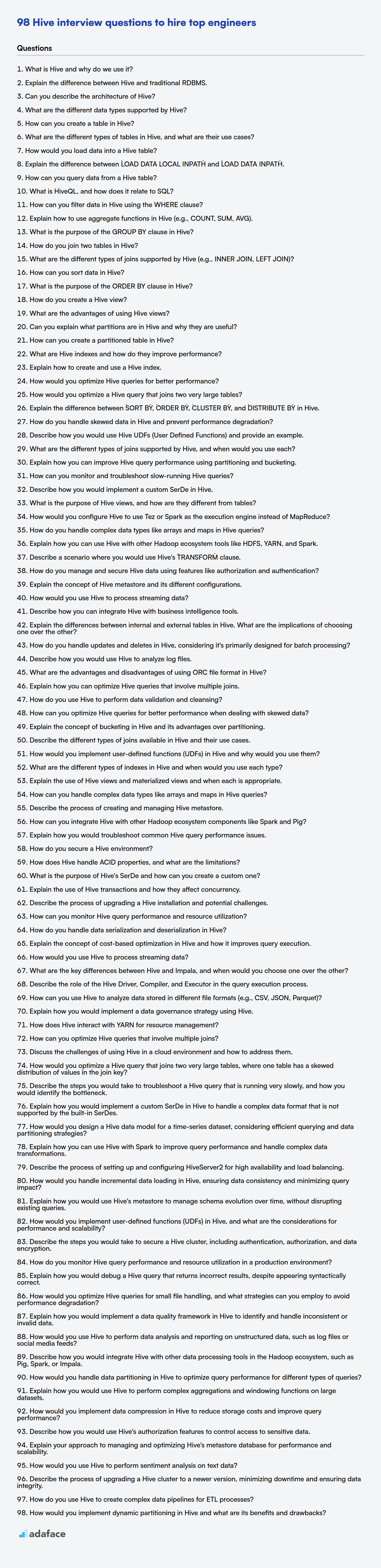

This blog post provides a list of interview questions categorized by skill level, starting from basic concepts to expert-level knowledge, including multiple-choice questions (MCQs). The questions are designed to assess a candidate's understanding of Hive, its architecture, and its practical applications, similar to what we cover when assessing big data engineers.

By using these questions, you can streamline your interview process and identify top talent for your team or even use a ready-made Hive test to screen candidates.

Table of contents

Basic Hive interview questions

1. What is Hive and why do we use it?

Hive is a data warehouse system built on top of Hadoop for providing data query and analysis. It provides an SQL-like interface (HiveQL) to query data stored in Hadoop Distributed File System (HDFS) or other compatible storage systems like Amazon S3. Hive translates these queries into MapReduce jobs, enabling users familiar with SQL to easily process and analyze large datasets without needing in-depth knowledge of Java or MapReduce programming.

We use Hive because it simplifies the process of querying and analyzing large datasets stored in Hadoop. It offers several benefits, including:

- SQL-like Interface: Reduces the learning curve for users familiar with SQL.

- Scalability: Leverages Hadoop's distributed processing capabilities to handle large volumes of data.

- Data Summarization, Query, and Analysis: Enables efficient data analysis through aggregation and filtering.

- Integration with Hadoop Ecosystem: Seamlessly integrates with other Hadoop components like HDFS and YARN.

2. Explain the difference between Hive and traditional RDBMS.

Hive and traditional RDBMS (like MySQL, PostgreSQL, Oracle) differ significantly in their architecture and intended use. RDBMS are designed for online transaction processing (OLTP), emphasizing data integrity, atomicity, consistency, isolation, and durability (ACID) properties. They typically store structured data in a normalized format and are optimized for fast, random access to individual rows or small sets of rows via SQL queries with indexes.

Hive, on the other hand, is built for online analytical processing (OLAP) on large datasets stored in distributed storage systems like Hadoop. It trades ACID compliance for scalability and fault tolerance. Hive converts SQL-like queries (HQL) into MapReduce jobs, which perform batch processing on the entire dataset. Hive supports schema-on-read, allowing it to work with various data formats (e.g., CSV, JSON, Parquet) and is suitable for data warehousing and large-scale data analysis rather than real-time transactions. Therefore, RDBMS are row oriented, while Hive is column oriented, by default.

3. Can you describe the architecture of Hive?

Hive's architecture is built around enabling SQL-like queries on Hadoop data. It consists of several key components. The Hive client submits queries. These queries are processed by the Driver, which parses, plans, and optimizes the query. Metadata about tables and data is stored in the Metastore (typically a relational database). The Compiler translates the HiveQL query into a MapReduce (or other execution engine like Spark or Tez) job. The Execution Engine then executes this job on the Hadoop cluster. Results are fetched and returned to the client.

In essence, Hive acts as a bridge between SQL-familiar users and the underlying Hadoop ecosystem. It provides an abstraction layer, allowing users to query and analyze large datasets using SQL-like syntax without needing to write complex MapReduce jobs directly. The metastore is crucial for managing table schemas and data locations, ensuring that Hive knows how to access and interpret the data stored in HDFS or other storage systems.

4. What are the different data types supported by Hive?

Hive supports both primitive and complex data types.

Primitive data types include: TINYINT, SMALLINT, INT, BIGINT, BOOLEAN, FLOAT, DOUBLE, STRING, BINARY, TIMESTAMP, DATE, VARCHAR, and CHAR. These represent basic data values like integers, decimals, text strings, and dates.

Complex data types are:

ARRAY: An ordered collection of elements of the same type.MAP: An unordered collection of key-value pairs, where keys are of the same type and values are of the same type.STRUCT: A record type that encapsulates a fixed set of named fields, each of which can have a different type.UNION: Can hold a value of one of several specified types. (Less commonly used).

5. How can you create a table in Hive?

You can create a table in Hive using the CREATE TABLE statement. The basic syntax involves specifying the table name, column names and their data types, and optionally, details about the data format, storage location, and other table properties.

Here's a simple example:

CREATE TABLE employees (

id INT,

name STRING,

salary DOUBLE

)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY ','

STORED AS TEXTFILE;

This creates a table named employees with three columns: id (integer), name (string), and salary (double). It also specifies that the data is delimited by commas and stored as a text file.

6. What are the different types of tables in Hive, and what are their use cases?

Hive supports several table types, each suited for different use cases. Managed (Internal) Tables are managed entirely by Hive. Hive controls the data's lifecycle; when a managed table is dropped, both the metadata and the data are deleted. These are suitable for temporary or intermediate data that doesn't need to be preserved outside of Hive.

External Tables, on the other hand, only have their metadata managed by Hive. The data resides in an external location (e.g., HDFS, cloud storage). When an external table is dropped, only the metadata is removed; the data remains untouched. This is ideal for data that is also used by other tools or processes, or when you need to preserve the data even after the table is no longer needed in Hive. Other types include Partitioned Tables (data is divided into partitions based on column values, improving query performance), Bucketed Tables (data is divided into buckets, enabling efficient sampling and joining), View (virtual tables based on a query), Materialized View (precomputed view data which is stored physically) and Temporary Tables (only visible to the current session).

7. How would you load data into a Hive table?

Data can be loaded into a Hive table using several methods:

- LOAD DATA statement: This is the most common and straightforward method. You can load data from a local file system or HDFS into a Hive table. The

LOAD DATAcommand can optionally specifyLOCALto load from the local file system or omit it to load from HDFS. You can also specifyOVERWRITEto replace existing data or omit it to append. For example:LOAD DATA LOCAL INPATH '/path/to/local/file.txt' OVERWRITE INTO TABLE my_table; LOAD DATA INPATH '/path/to/hdfs/file.txt' INTO TABLE my_table; - INSERT statement: Data can also be inserted into a Hive table using the

INSERTstatement, often from the results of aSELECTquery from another table or a subquery. This is useful for transforming and loading data simultaneously:INSERT INTO TABLE my_table SELECT * FROM another_table WHERE condition; - External Tables: Creating an external table allows Hive to recognize data already present in HDFS without moving it. The

LOCATIONclause in theCREATE EXTERNAL TABLEstatement specifies the HDFS path where the data resides.CREATE EXTERNAL TABLE my_external_table (col1 STRING, col2 INT) ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' LOCATION '/path/to/hdfs/data'; - Sqoop: While technically not a Hive command, Sqoop can be used to import data from relational databases directly into Hive tables.

8. Explain the difference between `LOAD DATA LOCAL INPATH` and `LOAD DATA INPATH`.

LOAD DATA INPATH loads data from a location on the Hadoop Distributed File System (HDFS) into a Hive table. The data must reside in HDFS. In contrast, LOAD DATA LOCAL INPATH loads data from the local file system of the machine where the Hive client is running. This command first copies the data from the local file system to a temporary location in HDFS before loading it into the Hive table. Security considerations may restrict the usage of LOCAL, as enabling hive.security.authorization.enabled may disable LOAD DATA LOCAL INPATH.

9. How can you query data from a Hive table?

Data can be queried from a Hive table using HiveQL, which is similar to SQL. The primary way to query data is using the SELECT statement. For example:

SELECT * FROM my_table WHERE column1 = 'value';

This statement retrieves all columns from the table my_table where the value of column1 is 'value'. Other standard SQL clauses like GROUP BY, ORDER BY, JOIN, and LIMIT are also supported. You can execute HiveQL queries via the Hive CLI, Beeline, or programmatically using JDBC/ODBC drivers.

10. What is HiveQL, and how does it relate to SQL?

HiveQL is a SQL-like query language for Apache Hive, a data warehouse system built on top of Hadoop. It allows users to query and manage large datasets residing in distributed storage, such as HDFS or object stores, using a familiar SQL syntax.

The relationship between HiveQL and SQL is that HiveQL is inspired by SQL and shares many of the same keywords and concepts (e.g., SELECT, FROM, WHERE, GROUP BY). However, HiveQL is not a full implementation of the SQL standard. It is designed to work with the MapReduce paradigm and has some limitations and extensions specific to the Hadoop ecosystem. For example, it supports custom functions written in Java or Python and has specific constructs for handling data partitioning and bucketing for efficient querying.

11. How can you filter data in Hive using the WHERE clause?

In Hive, you can filter data using the WHERE clause in your SQL-like queries. The WHERE clause is used to specify conditions that rows must satisfy to be included in the result set. It's similar to how it functions in standard SQL.

For example, if you have a table named employees with columns like employee_id, name, department, and salary, you can filter the data to retrieve only employees in the 'Sales' department with a salary greater than 50000 using the following query:

SELECT employee_id, name, salary

FROM employees

WHERE department = 'Sales' AND salary > 50000;

12. Explain how to use aggregate functions in Hive (e.g., COUNT, SUM, AVG).

Aggregate functions in Hive compute a single result from multiple input rows. COUNT returns the number of rows, SUM returns the sum of values, and AVG returns the average. You typically use them with GROUP BY to calculate aggregates for each group. If GROUP BY is omitted, the aggregate function is applied to all rows. For example, SELECT COUNT(*) FROM table_name; will count all rows. SELECT department, AVG(salary) FROM employees GROUP BY department; calculates the average salary for each department. DISTINCT can be used with some aggregate functions to consider only unique values, such as COUNT(DISTINCT column_name). Commonly used aggregate functions include MIN, MAX, and VARIANCE as well.

Here's how to calculate the average of the 'sales' column:

SELECT AVG(sales) FROM sales_table;

And this will display the number of records for each region:

SELECT region, COUNT(*) FROM sales_table GROUP BY region;

13. What is the purpose of the GROUP BY clause in Hive?

The GROUP BY clause in Hive is used to group rows with the same values in one or more columns into a summary row. It allows you to perform aggregate functions (like COUNT, SUM, AVG, MIN, MAX) on these groups, providing summarized results for each unique combination of grouping column values.

Essentially, it transforms a table into a summarized version based on the specified columns. Think of it as applying a 'fold' or 'reduce' operation to your data where data rows with identical columns are grouped together.

14. How do you join two tables in Hive?

In Hive, you join two tables using the JOIN clause, similar to SQL. The basic syntax is SELECT ... FROM table1 JOIN table2 ON table1.column = table2.column;. You can specify the join type, such as INNER JOIN, LEFT OUTER JOIN, RIGHT OUTER JOIN, or FULL OUTER JOIN. If no join type is specified, INNER JOIN is used by default.

Example:

SELECT a.name, b.city FROM employees a JOIN departments b ON a.dept_id = b.id;

You can also perform more complex joins using multiple conditions or joining more than two tables. Hive also supports LEFT SEMI JOIN, CROSS JOIN and MAP JOIN which has different usage scenarios. MAP JOIN is useful for joining a large table with a smaller table, where the smaller table can fit into memory.

15. What are the different types of joins supported by Hive (e.g., INNER JOIN, LEFT JOIN)?

Hive supports several types of joins, similar to SQL. These include:

INNER JOIN: Returns rows only when there is a match in both tables.LEFT JOIN(orLEFT OUTER JOIN): Returns all rows from the left table and the matching rows from the right table. If there's no match in the right table, it returnsNULLfor the right table's columns.RIGHT JOIN(orRIGHT OUTER JOIN): Returns all rows from the right table and the matching rows from the left table. If there's no match in the left table, it returnsNULLfor the left table's columns.FULL OUTER JOIN: Returns rows when there is a match in either the left or right table. If there is no match for a row, the missing side will containNULL.LEFT SEMI JOIN: Returns rows from the left table if they match a row in the right table, but only includes columns from the left table. It's like anINNER JOINbut without the right table's columns and does not return duplicates from the left table even if there are multiple matches in the right table.CROSS JOIN: Returns the Cartesian product of the two tables (every row in the first table combined with every row in the second table).

16. How can you sort data in Hive?

In Hive, you can sort data using the ORDER BY clause for total ordering and SORT BY for sorting within partitions. ORDER BY guarantees a global order but is usually slow because it uses a single reducer (unless hive.enforce.sorting=false is set). SORT BY sorts data within each reducer, leading to faster sorting, especially for large datasets, but doesn't guarantee global order.

For example:

SELECT * FROM table_name ORDER BY column_name;

SELECT * FROM table_name SORT BY column_name;

If you want to control the number of reducers for SORT BY, you can use SET mapreduce.job.reduces=<number_of_reducers>; before the SORT BY query. CLUSTER BY is a shorthand for DISTRIBUTE BY and SORT BY when the sort and distribute keys are the same.

17. What is the purpose of the ORDER BY clause in Hive?

The ORDER BY clause in Hive is used to sort the result set of a query in ascending or descending order based on one or more columns. It ensures a global order across all partitions in the table, but it's important to note that this global ordering can be computationally expensive, especially for large datasets, as it forces all the data through a single reducer.

Unlike SORT BY, which sorts data within each reducer, ORDER BY guarantees a fully sorted result. You can specify the sort order for each column using ASC for ascending (default) or DESC for descending. When using ORDER BY, a single reducer is typically used which can cause performance issues for large datasets. For example:

SELECT * FROM employee ORDER BY salary DESC;

18. How do you create a Hive view?

You create a Hive view using the CREATE VIEW statement. The basic syntax is CREATE VIEW view_name AS query; where query is a valid HiveQL SELECT statement.

For example:

CREATE VIEW employee_salaries AS

SELECT emp_id, emp_name, salary

FROM employees

WHERE department = 'Sales';

This creates a view named employee_salaries that displays the emp_id, emp_name, and salary from the employees table, but only for employees in the 'Sales' department. Views are essentially stored queries; they don't store data themselves.

19. What are the advantages of using Hive views?

Hive views offer several advantages. Primarily, they simplify complex queries by encapsulating the logic. This allows users to query the view as if it were a regular table, without needing to understand the underlying complexity. This abstraction improves code readability and maintainability. Also, using views can enhance data security by exposing only necessary columns or aggregated data to certain users.

Furthermore, views provide a layer of insulation between the physical schema and the user. If the underlying table structure changes (e.g., adding or renaming columns), you can modify the view definition to accommodate these changes without affecting the applications that use the view. This promotes flexibility and reduces the impact of schema evolution. They also optimize queries by pre-calculating or filtering data.

20. Can you explain what partitions are in Hive and why they are useful?

In Hive, partitions are a way to divide a table into smaller parts based on the values of one or more columns. Essentially, they allow you to organize your data within the Hive table's directory structure. This is different from sharding because partitions are based on the actual data within the column.

Partitions are useful because they drastically improve query performance. When you query a partitioned table, Hive can filter out irrelevant partitions based on the WHERE clause, scanning only the relevant data. This reduces the amount of data that needs to be read, processed, and speeds up query execution significantly. For example, if a table is partitioned by date, a query for data from a specific month will only scan the partition for that month. Without partitioning, the whole table would be scanned. You can think of them like indexes, but at the level of the filesystem structure.

21. How can you create a partitioned table in Hive?

To create a partitioned table in Hive, you use the PARTITIONED BY clause in the CREATE TABLE statement. This clause specifies the column(s) on which the table will be partitioned. Partition columns are not part of the data itself but are metadata used to improve query performance by filtering data based on partition values.

Here's an example:

CREATE TABLE my_table (

id INT,

name STRING

)

PARTITIONED BY (city STRING)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY ','

STORED AS TEXTFILE;

In this example, the my_table is partitioned by the city column. When you insert data, you'll specify the partition value:

INSERT INTO TABLE my_table PARTITION (city='New York') VALUES (1, 'Alice');

22. What are Hive indexes and how do they improve performance?

Hive indexes are used to speed up query performance on large tables. Without indexes, Hive must perform a full table scan to find relevant data, which can be slow. Indexes allow Hive to quickly locate the rows that match the query criteria.

Indexes improve performance by creating a pointer to the location of data in the table, thus avoiding full table scans. However, creating and maintaining indexes adds overhead, so it's important to choose columns that are frequently used in WHERE clause for indexing.

23. Explain how to create and use a Hive index.

To create a Hive index, you use the CREATE INDEX statement. For example: CREATE INDEX employee_id_idx ON TABLE employee(id) AS 'org.apache.hadoop.hive.ql.index.compact.CompactIndexHandler' WITH DEFERRED REBUILD; This creates an index named employee_id_idx on the id column of the employee table. The WITH DEFERRED REBUILD clause means the index won't be populated immediately. To populate it, use ALTER INDEX employee_id_idx ON employee REBUILD; After rebuilding the index, Hive can automatically use it to speed up queries that filter on the indexed column. To drop an index, use DROP INDEX employee_id_idx ON employee;

24. How would you optimize Hive queries for better performance?

To optimize Hive queries, consider several strategies. Partitioning tables by frequently used filter columns drastically reduces the amount of data scanned. Bucketing can further improve performance when joining tables on bucketed columns, as it limits the join to matching buckets. File format selection matters; using optimized formats like ORC or Parquet improves storage efficiency and query speed due to features like columnar storage and predicate pushdown. Compressing data using Snappy or Gzip minimizes I/O overhead. Enable vectorization to process data in batches, improving CPU utilization. Adjust Hive configuration parameters like hive.exec.parallel to allow parallel execution of tasks. Using EXPLAIN can help identify bottlenecks and optimize query execution plans.

Intermediate Hive interview questions

1. How would you optimize a Hive query that joins two very large tables?

To optimize a Hive query joining two very large tables, consider several strategies. First, optimize the join operation itself. Use a MAPJOIN if one table is significantly smaller than the other; this loads the smaller table into memory on each mapper, avoiding shuffling. If both tables are large, ensure proper partitioning and bucketing on the join keys. This allows Hive to only read relevant partitions/buckets, drastically reducing I/O.

Second, optimize data access and processing. Consider using appropriate file formats like ORC or Parquet for efficient storage and retrieval. Enable vectorized query execution to process data in batches. Increase the number of reducers to leverage parallel processing, but avoid over-partitioning, which adds overhead. Finally, analyze the query execution plan using EXPLAIN to identify bottlenecks and adjust configurations accordingly. Hive configuration parameters such as hive.optimize.cp, hive.vectorized.execution.enabled, and hive.exec.reducers.max can be tuned for optimal performance.

2. Explain the difference between `SORT BY`, `ORDER BY`, `CLUSTER BY`, and `DISTRIBUTE BY` in Hive.

ORDER BY performs a global sort on all data using a single reducer, which can be slow for large datasets. SORT BY performs a local sort within each reducer, providing faster results but not a globally sorted output. CLUSTER BY is a shorthand for DISTRIBUTE BY followed by SORT BY, ensuring that rows with the same value in the specified column are sent to the same reducer and then sorted locally within that reducer. DISTRIBUTE BY controls how rows are distributed among reducers based on the specified column, but it doesn't guarantee any sorting.

3. How do you handle skewed data in Hive and prevent performance degradation?

Skewed data in Hive can significantly degrade performance. Here's how to handle it:

- Identify Skew: Analyze your data to find columns where certain values occur disproportionately more often than others. Use

COUNT DISTINCTor sampling techniques. - Data Skew Optimization techniques:

hive.optimize.skewjoin: Set totrue. Hive will split the skewed keys into separate files and join them individually.hive.skewjoin.key: Define the threshold for skew. If the number of rows for a key exceeds this, it's considered skewed.- Salting: Add a random number to skewed keys. This distributes the skewed keys across more reducers. Use

distribute by rand()before the join.

Example using salting:

SELECT /*+ MAPJOIN(small_table) */

a.*,

b.*

FROM

(SELECT *, round(rand() * 10) AS salt FROM big_table) a

JOIN

small_table b ON a.key = b.key and a.salt = b.salt;

4. Describe how you would use Hive UDFs (User Defined Functions) and provide an example.

Hive UDFs allow you to extend Hive's built-in functionality by creating custom functions. I would use them when I need to perform operations on data that are not natively supported by Hive's built-in functions, or when I need to encapsulate complex logic for reusability. This makes queries cleaner and more maintainable.

For example, suppose I need to implement a custom string encoding/decoding function. I could write a Java class with a method annotated with @UDFType, and then register this class as a UDF in Hive. Here's a simplified example:

import org.apache.hadoop.hive.ql.exec.UDF;

import org.apache.hadoop.hive.ql.udf.UDFType;

@UDFType(deterministic = true)

public class SimpleEncoder extends UDF {

public String evaluate(final String s) {

if (s == null) { return null; }

return java.util.Base64.getEncoder().encodeToString(s.getBytes());

}

}

Then, in Hive, I'd add the jar containing this class and create a temporary function:

ADD JAR /path/to/my/jar/simple-encoder.jar;

CREATE TEMPORARY FUNCTION simple_encode AS 'SimpleEncoder';

SELECT simple_encode(my_string_column) FROM my_table;

5. What are the different types of joins supported by Hive, and when would you use each?

Hive supports several types of joins, each suited for different scenarios:

- INNER JOIN: Returns rows when there is a match in both tables.

- LEFT OUTER JOIN: Returns all rows from the left table and the matched rows from the right table. If there is no match in the right table, it returns NULLs for the right table's columns.

- RIGHT OUTER JOIN: Returns all rows from the right table and the matched rows from the left table. If there is no match in the left table, it returns NULLs for the left table's columns.

- FULL OUTER JOIN: Returns all rows when there is a match in one of the tables. If there are unmatched rows, it returns NULLs for the columns of the table without a match.

- LEFT SEMI JOIN: Returns rows from the left table if there's a match in the right table. It only returns columns from the left table and is more efficient than

INNER JOINif you only need columns from the left table. - CROSS JOIN: Returns the Cartesian product of two tables, which means every row from the first table is combined with every row from the second table. Use it with caution because the result set can be very large. It's typically used when you need all possible combinations of rows from two tables or for generating test data.

6. Explain how you can improve Hive query performance using partitioning and bucketing.

Partitioning and bucketing are key techniques to optimize Hive query performance by reducing the amount of data scanned. Partitioning divides a table into smaller parts based on a specific column (e.g., date), storing each partition in a separate directory. This allows Hive to only read the relevant partitions when a query includes a filter on the partitioning column, significantly reducing I/O. For instance, if a table is partitioned by date, a query for data from a specific month will only scan the corresponding month's partition.

Bucketing further refines data organization within partitions. Bucketing divides data within a partition into a fixed number of buckets based on the hash of one or more columns. This helps in efficient sampling and joining of data. When joining two bucketed tables on the bucketing column, Hive can perform a map-side join, which is much faster since it only compares data from corresponding buckets, thus improving performance.

7. How can you monitor and troubleshoot slow-running Hive queries?

To monitor and troubleshoot slow-running Hive queries, several strategies can be employed. First, enable Hive's query logging to track query execution times and resource consumption. Utilize tools like Hive's Explain Plan to understand the query execution plan and identify potential bottlenecks such as full table scans or inefficient joins. Furthermore, Hadoop's monitoring tools like YARN Resource Manager and Ganglia can help assess cluster resource utilization (CPU, memory, disk I/O) and identify resource constraints impacting query performance.

For troubleshooting, examine Hive's query logs for errors and warnings. Analyze the execution plan for opportunities to optimize the query, such as adding appropriate indexes in underlying storage, rewriting the query using more efficient joins, or partitioning the data. Check for data skewness, which can significantly impact performance. Consider increasing the resources allocated to the Hive job (e.g., increasing the number of mappers/reducers) if the cluster has available capacity. Using tools like jstack or Java profilers on the HiveServer2 process may help to identify deadlocks or long running processes.

8. Describe how you would implement a custom SerDe in Hive.

To implement a custom SerDe in Hive, you need to create a Java class that implements the org.apache.hadoop.hive.serde2.SerDe interface. This interface requires implementing three core methods: initialize(), deserialize(), and serialize(). The initialize() method handles initialization and configuration based on table properties. The deserialize() method converts the input data (typically from a Hadoop InputFormat) into Hive objects. The serialize() method converts Hive objects into a format suitable for storage (e.g., a delimited string).

After implementing the SerDe class, you would package it into a JAR file and add it to Hive's classpath using the ADD JAR command. Then, you'd create a Hive table, specifying the fully qualified name of your SerDe class using the ROW FORMAT SERDE clause in the CREATE TABLE statement. For example: CREATE TABLE my_table (col1 STRING, col2 INT) ROW FORMAT SERDE 'com.example.MyCustomSerDe';. You will also need to add the relevant properties needed by your SerDe during table creation using the WITH SERDEPROPERTIES clause.

9. What is the purpose of Hive views, and how are they different from tables?

Hive views are virtual tables based on the result-set of a query. They simplify complex queries by encapsulating them, allowing users to interact with the view as if it were a regular table. This promotes code reusability and improves query readability. Views don't store data physically.

The key differences from tables are:

- Data Storage: Tables store data physically, while views only store the query definition.

- Updatability: Tables can be updated directly, while views are typically read-only (although some views can be updated under certain conditions).

- Schema: Tables have a fixed schema defined at creation. Views inherit their schema from the underlying query.

- Materialization: Tables are materialized meaning they exist as physical files. Views are virtual and only materialized when queried.

10. How would you configure Hive to use Tez or Spark as the execution engine instead of MapReduce?

To configure Hive to use Tez or Spark as the execution engine instead of MapReduce, you need to set the hive.execution.engine property in the Hive configuration.

For Tez, set hive.execution.engine=tez. For Spark, set hive.execution.engine=spark. This can be done either in the hive-site.xml file, through the Hive command line using the SET command (e.g., SET hive.execution.engine=tez;), or within a Hive script. It's also important to ensure that Tez or Spark are properly installed and configured in your Hadoop environment for Hive to utilize them. Additionally, configure Tez or Spark specific properties (like memory settings) for optimal performance.

11. How do you handle complex data types like arrays and maps in Hive queries?

Hive provides built-in support for handling complex data types like arrays and maps. For arrays, you can use functions like size() to get the array length, array[index] to access elements, and explode() to flatten the array into multiple rows. For maps, you can use map['key'] to access values, map_keys() and map_values() to get lists of keys and values respectively. explode() can also be used on maps to create rows of key-value pairs.

Example using explode with arrays:

SELECT value FROM my_table LATERAL VIEW explode(my_array_column) AS value;

Example using explode with maps:

SELECT key, value FROM my_table LATERAL VIEW explode(my_map_column) AS key, value;

These functions and the lateral view enable efficient querying and manipulation of complex data types in Hive.

12. Explain how you can use Hive with other Hadoop ecosystem tools like HDFS, YARN, and Spark.

Hive is a data warehousing tool built on top of Hadoop that allows querying and managing large datasets residing in distributed storage. It interacts seamlessly with several Hadoop ecosystem components.

- HDFS: Hive primarily uses HDFS (Hadoop Distributed File System) as its underlying storage layer. Hive tables are, by default, stored as files in HDFS. Hive reads data from HDFS for query processing and writes output data back to HDFS. The metadata about the Hive tables, schemas, and partitions are stored in metastore (typically a relational database like MySQL or Postgres).

- YARN: Hive leverages YARN (Yet Another Resource Negotiator) for resource management and job scheduling. When a Hive query is submitted, it's translated into MapReduce jobs (or Tez or Spark jobs). YARN allocates resources (CPU, memory) to these jobs across the Hadoop cluster.

- Spark: Hive can integrate with Spark as its execution engine. Instead of using MapReduce, Hive can execute queries using Spark, which can significantly improve query performance, especially for complex queries. This is done using Hive on Spark. We can set

hive.execution.engine=sparkin Hive configuration to use spark as the execution engine. You can use the spark context inside your hive jobs. - Other tools: Hive can also work with other tools like Pig (although it is less common now with the rise of Spark), HBase (for direct querying of HBase tables), and Kafka (for streaming data ingestion).

13. Describe a scenario where you would use Hive's `TRANSFORM` clause.

I would use Hive's TRANSFORM clause when I need to process data with an external script or program that is not directly supported by Hive's built-in functions. For instance, imagine needing to perform sentiment analysis on text data stored in Hive using a Python script that leverages a specific NLP library.

In this scenario, the TRANSFORM clause allows me to pass the text data from Hive to the Python script, execute the sentiment analysis using the script, and then ingest the results back into Hive. This is useful when you need to apply custom logic or integrate external tools into your Hive data processing pipeline. For example:

SELECT

TRANSFORM (text_column) USING 'python /path/to/sentiment_analyzer.py'

AS sentiment

FROM

my_table;

14. How do you manage and secure Hive data using features like authorization and authentication?

Hive data security can be managed through authorization and authentication. Authentication verifies user identity, often using Kerberos integration. Authorization controls user access to Hive objects (databases, tables, views, functions) using Hive's SQL-standard authorization or the more fine-grained Ranger integration.

Specifically, we can grant or revoke privileges using GRANT and REVOKE statements in HiveQL. For example, GRANT SELECT ON TABLE my_table TO user1; allows user1 to only read data from my_table. Using Apache Ranger allows for centralized security administration, auditing, and masking/filtering sensitive data within Hive. Data encryption in transit (using TLS) and at rest (using Hadoop's encryption features) further enhances security.

15. Explain the concept of Hive metastore and its different configurations.

The Hive metastore is a central repository that stores metadata about Hive tables, partitions, schemas, columns, and their locations in HDFS. It acts as a directory for Hive, allowing it to discover and access data. It's crucial for Hive's operation as it provides the necessary information for query planning and execution.

Different metastore configurations exist, primarily:

- Embedded Metastore: Uses Derby as the database and is suitable for single-user testing due to its simplicity. Data is stored on disk.

- Local Metastore: Uses an external database like MySQL or PostgreSQL. Multiple Hive instances can connect, but only one can write at a time.

- Remote Metastore: Uses a separate database server (like MySQL, PostgreSQL or Oracle) and one or more metastore servers. This is the most common and robust configuration for production environments, allowing multiple Hive instances to connect and providing better scalability and reliability. The metastore service is independent of the Hive service.

16. How would you use Hive to process streaming data?

Hive isn't inherently designed for real-time streaming data processing due to its batch-oriented nature. However, you can process near real-time data using a combination of Hive and other streaming technologies. One approach is to use a streaming platform like Kafka or Flume to ingest the data, then store it in HDFS in small batches. Hive can then periodically query these batches as they arrive.

Specifically, you'd use a combination of technologies. The streaming data will be loaded into HDFS as small, manageable chunks. You can then create a Hive table over that data. Finally, schedule a cron job (or use a tool like Oozie) to run Hive queries against the data at regular intervals, say every few minutes. Each query would process newly arrived data and store the results into another Hive table for further analysis or reporting. Although not true real-time processing, this simulates real-time for many use cases.

17. Describe how you can integrate Hive with business intelligence tools.

Integrating Hive with business intelligence (BI) tools typically involves using a JDBC or ODBC driver to connect the BI tool to the HiveServer2. The BI tool can then query Hive tables and views using SQL. The results are retrieved and visualized within the BI tool.

Specifically, you'd configure the BI tool (e.g., Tableau, Power BI, Looker) to use the Hive JDBC/ODBC driver. This involves providing the HiveServer2 hostname, port, database name, and authentication credentials. Once the connection is established, users can browse Hive metadata, construct SQL queries, and build dashboards and reports based on the data stored in Hive.

18. Explain the differences between internal and external tables in Hive. What are the implications of choosing one over the other?

Internal tables in Hive have their data managed entirely by Hive. When you drop an internal table, both the metadata in the metastore and the data in HDFS are deleted. External tables, on the other hand, only have their metadata managed by Hive. The data resides in an external location specified during table creation. Dropping an external table only removes the metadata; the data remains untouched in HDFS.

The implication of choosing one over the other depends on your data management strategy. Use internal tables when you want Hive to fully manage the lifecycle of your data. They are suitable for temporary or intermediate data. Use external tables when the data is shared with other tools or processes, or when you want to preserve the data even if the table definition is dropped. External tables are ideal for data that originates outside of Hive or needs to be accessible to other systems.

19. How do you handle updates and deletes in Hive, considering it's primarily designed for batch processing?

Hive traditionally lacked direct support for updates and deletes due to its design for batch processing and reliance on HDFS's immutable nature. However, newer versions introduce mechanisms for handling these operations, primarily through ACID (Atomicity, Consistency, Isolation, Durability) transactions.

To enable updates and deletes, you need to configure your Hive tables as ACID tables. This involves setting properties like transactional=true and using the ORC file format. With ACID enabled, you can use UPDATE, DELETE, and INSERT statements (with some restrictions) similar to relational databases. Be aware that enabling ACID features can impact performance, so it's crucial to consider the use cases and data volume before implementing them. Alternative methods for updating or 'deleting' data might involve creating new tables with the desired changes and replacing the original table. This could be more efficient than ACID transactions in certain batch scenarios.

20. Describe how you would use Hive to analyze log files.

To analyze log files using Hive, I would first create a Hive table that maps to the structure of the log files. This might involve defining columns based on the log format (e.g., timestamp, log level, message). I would then load the log data into Hive, possibly from HDFS or another data source, using the LOAD DATA INPATH command. The file format would typically be defined as TEXTFILE with appropriate delimiters.

Once the data is in Hive, I would use SQL-like queries to analyze the logs. For example, I might count the number of errors within a specific time range, identify the most frequent error messages, or correlate events based on timestamp and other log attributes. I can leverage Hive's built-in functions for string manipulation, date/time operations, and aggregations to extract valuable insights from the log data. Finally, the query output can be used to identify trends or anomalies to help with debugging and system monitoring.

21. What are the advantages and disadvantages of using ORC file format in Hive?

ORC (Optimized Row Columnar) file format offers several advantages in Hive. Firstly, it significantly improves query performance due to its columnar storage, predicate pushdown, and efficient compression techniques. Secondly, ORC reduces storage space requirements compared to row-based formats like Text or Sequence files. Finally, ORC supports complex data types and ACID properties.

However, ORC also has some disadvantages. The primary drawback is the higher write complexity and CPU overhead during data ingestion and transformation, which can make write operations slower. Also, ORC files are not as human-readable as text files, making debugging and manual data inspection more challenging. Initial creation of ORC files may also take a substantial amount of time for large datasets.

22. Explain how you can optimize Hive queries that involve multiple joins.

Optimizing Hive queries with multiple joins involves several techniques. Firstly, leverage join order optimization. Hive's cost-based optimizer can intelligently reorder joins to minimize data shuffled between mappers and reducers, prioritizing smaller tables on the right-hand side of the join. You can influence this with hints like /*+ STREAMTABLE(table_name) */ to specify the streaming table. Secondly, use bucketed joins when joining tables bucketed on the join keys. This avoids the shuffle phase as matching buckets reside on the same nodes. If the data fits into memory, use map joins (SET hive.auto.convert.join=true;), which perform the join in the mapper, reducing the need for a reducer. Finally, ensure proper data partitioning to reduce the amount of data read during the joins. Always analyze query execution plans (EXPLAIN <query>) to identify bottlenecks and refine optimization strategies.

23. How do you use Hive to perform data validation and cleansing?

Hive can be used for data validation and cleansing through several techniques. We can leverage Hive's SQL-like interface to write queries that identify and correct data inconsistencies, errors, and missing values.

Specific techniques include: checking for null values using IS NULL, verifying data types with functions like CASE WHEN or regular expressions (RLIKE), validating against acceptable ranges or sets of values using WHERE clauses, and deduplicating data with ROW_NUMBER() and partitioning. For example, you could check if a date column contains valid dates or if a numerical column falls within an expected range. Data cleansing can involve replacing invalid values with defaults, trimming whitespace with TRIM(), or transforming data using UDFs (User Defined Functions). These processes often involve creating new, cleansed tables based on the validated data. Below is an example of a Hive query to check for null values in a table:

SELECT *

FROM your_table

WHERE column1 IS NULL OR column2 IS NULL;

Advanced Hive interview questions

1. How can you optimize Hive queries for better performance when dealing with skewed data?

When dealing with skewed data in Hive, several optimization techniques can be employed. One effective approach is using SET hive.optimize.skewjoin = true; This enables Hive to optimize joins where one table has skewed data. Additionally, specifying the skewed values using SET hive.skewjoin.key = (key1, key2, ...); and SET hive.skewjoin.mapjoin.map.tasks = 10000; can help. The hive.skewjoin.mapjoin.map.tasks property controls the number of map tasks used for the skewed join. Setting hive.optimize.union.all = true can also help in some cases.

Another strategy is to pre-filter the skewed values into separate tables and then join these smaller tables with the larger table. Bucketing can also improve performance. Consider using CLUSTERED BY (column_name) INTO N BUCKETS to distribute the data more evenly. Furthermore, consider data sampling. If the skewed values are relatively small compared to the whole data, consider data sampling the skewed keys with 'hive.sample.key.percent' to avoid full table scan.

2. Explain the concept of bucketing in Hive and its advantages over partitioning.

Bucketing in Hive is a data organization technique within a partition. It divides data into a fixed number of buckets based on the hash of one or more columns. Unlike partitioning, which creates separate directories for each distinct value of the partition key, bucketing distributes data within the same directory. This makes queries faster when joining tables on the bucketing column because it limits the amount of data that needs to be scanned.

Advantages of bucketing over partitioning include: * Improved query performance: When joining tables on the bucketed column, Hive can efficiently compare only the corresponding buckets, reducing I/O and processing time. * Sampling: Bucketing facilitates data sampling, allowing you to retrieve a representative subset of the data for analysis or testing. * Even data distribution: Bucketing can help distribute data more evenly across nodes in a cluster, preventing data skew and improving overall performance. Partitioning can lead to data skew when a partition key has a disproportionately large number of records. * Number of buckets are fixed: A fixed number of buckets are created during table creation. This is good in cases where number of distinct values in a column increase rapidly over time - which can lead to too many partitions.

3. Describe the different types of joins available in Hive and their use cases.

Hive supports several types of joins, mirroring those found in SQL: INNER JOIN, LEFT OUTER JOIN, RIGHT OUTER JOIN, FULL OUTER JOIN, LEFT SEMI JOIN, and CROSS JOIN. INNER JOIN returns rows when there's a match in both tables. LEFT OUTER JOIN returns all rows from the left table and matched rows from the right, filling in NULLs if no match exists. RIGHT OUTER JOIN does the opposite, returning all rows from the right table and matched rows from the left. FULL OUTER JOIN combines both left and right outer joins, returning all rows and filling in NULLs where there are no matches.

LEFT SEMI JOIN is similar to an inner join but only returns columns from the left table, and only one row for each match. It's optimized for IN or EXISTS subqueries. CROSS JOIN returns the Cartesian product of the tables, meaning every row from the left table is paired with every row from the right table. This can result in very large tables and should be used cautiously. Example of INNER JOIN:

SELECT a.val, b.val FROM a JOIN b ON (a.key = b.key);

4. How would you implement user-defined functions (UDFs) in Hive and why would you use them?

To implement UDFs in Hive, you'd typically write a Java class that extends the UDF class (or GenericUDF for more complex scenarios). This class would contain a method named evaluate() which takes arguments as input and returns a value. You then compile this Java code into a JAR file. Finally, you register the JAR file with Hive using ADD JAR <jar_location> and create a temporary or permanent function using CREATE TEMPORARY FUNCTION <function_name> AS '<java_class_name>'. The temporary function is only available for the current session, while the permanent function stays until dropped.

You'd use UDFs when built-in Hive functions don't provide the functionality you need. For example, you might have a specific string transformation or data validation routine that is custom to your business requirements. UDFs allow you to extend Hive's capabilities and perform complex data manipulations within your Hive queries.

5. What are the different types of indexes in Hive and when would you use each type?

Hive supports several index types, each suited for different use cases. Compact Index is the default and stores the index data in a separate table, useful for medium-sized tables where quick lookups are needed. Bitmap Index uses bitmaps to represent the presence of values, ideal for columns with low cardinality (few distinct values) and equality predicates.

When to use which? Use Compact index when you want generic purpose indexing and table size is not huge. Bitmap indexes are best suited for columns with a small number of distinct values, like gender or boolean flags, and are very efficient for WHERE clauses using equality (=). They excel in scenarios involving multiple conditions combined with AND or OR. Bitmap indexes are generally faster, but consume more disk space as compared to compact indexes.

6. Explain the use of Hive views and materialized views and when each is appropriate.

Hive views are virtual tables defined by a query. They don't store data; instead, the query is executed every time the view is accessed. They are useful for simplifying complex queries, providing a layer of abstraction, and controlling data access by exposing only necessary columns. Use them when you need a logical view of data without the overhead of storing it physically and when the underlying data changes frequently.

Materialized views, on the other hand, store the results of the query physically. They are useful for improving query performance, especially for frequently accessed or computationally expensive queries. The data in a materialized view can be refreshed periodically or on demand. Use them when query performance is critical, the underlying data doesn't change too often, and you can tolerate some staleness in the data.

7. How can you handle complex data types like arrays and maps in Hive queries?

Hive provides built-in support for complex data types like arrays and maps. You can work with arrays using functions like size() to get the array length, array[index] to access specific elements, and explode() to flatten the array into multiple rows. For maps, you can use map['key'] to access values by key, size() to get the map size, and explode() to transform the map into key-value pairs as separate rows.

Specifically:

- Arrays: Use

array[index]to access elements,size(array)to get the size,explode(array)to convert to rows. Example:SELECT explode(my_array) AS element FROM my_table; - Maps: Use

map['key']to access values,size(map)to get the size,explode(map)to convert to rows. Example:SELECT explode(my_map) AS (key, value) FROM my_table;

8. Describe the process of creating and managing Hive metastore.

Creating and managing a Hive metastore involves several steps. Initially, you'll choose a metastore type, such as the embedded Derby database (for single-user testing), a local MySQL or PostgreSQL instance, or a remote metastore service. The choice depends on factors like scalability and multi-user access. Configuration is done primarily through hive-site.xml, where you specify the JDBC connection string, database credentials, and metastore server URI (if using a remote metastore). For example, you might configure javax.jdo.option.ConnectionURL and javax.jdo.option.ConnectionUserName.

After configuring the metastore, the Hive service creates the necessary database schemas and tables within the chosen database. Managing the metastore involves regular backups of the metastore database to prevent data loss. For production environments, consider using a dedicated metastore service and implementing proper security measures, such as access controls and encryption. Additionally, regularly update the metastore schema to benefit from new features and improvements in Hive. Remember to version control hive-site.xml to track configuration changes.

9. How can you integrate Hive with other Hadoop ecosystem components like Spark and Pig?

Hive integrates with Spark and Pig through various mechanisms. For Spark, you can use the SparkSession to read data directly from Hive tables using the Hive metastore. This allows Spark to leverage Hive's data warehouse capabilities and SQL interface. For example, using spark.sql("SELECT * FROM hive_table") in Spark will execute a Hive query.

For Pig, you can use the HCatLoader and HCatStorer to read data from and write data to Hive tables, respectively. Pig's integration with Hive through HCatalog allows Pig scripts to interact with Hive's metadata, simplifying data access and storage. Also, Pig scripts can be translated into MapReduce jobs that execute against data stored in Hive.

10. Explain how you would troubleshoot common Hive query performance issues.

To troubleshoot Hive query performance, I'd start by analyzing the query execution plan using EXPLAIN <query>. This helps identify bottlenecks like full table scans or inefficient joins. I'd then focus on optimizing these areas. Common strategies include:

- Data partitioning: Divide the data into smaller, manageable chunks based on a frequently used filter column.

- Bucketing: Further divide partitions into buckets for faster data retrieval.

- File format optimization: Using formats like ORC or Parquet for efficient storage and retrieval and enabling compression can make a huge difference.

- Join optimization: Ensure proper join order (smaller table first) and consider using broadcast joins for small tables.

- Increase resources: Check if the Hadoop cluster has enough resources allocated to Hive. Increase memory for map and reduce tasks by adjusting

hive.tez.container.sizeormapreduce.map.memory.mbandmapreduce.reduce.memory.mb. - Vectorization and Cost Based Optimization (CBO): Enable

hive.vectorized.executionandhive.cbo.enablefor performance improvements. - Check for skewed data: Identify skewed data using

ANALYZE TABLE <table_name> COMPUTE STATISTICS FOR COLUMNS <column_name>and handle withhive.optimize.skewjoin. - Avoid using

SELECT *: Select only the required columns. - Filter data as early as possible: Apply filters in the

WHEREclause to reduce the amount of data processed.

I'd also monitor query execution using tools like HiveServer2 Web UI or YARN Resource Manager to identify resource bottlenecks and adjust configurations accordingly.

11. How do you secure a Hive environment?

Securing a Hive environment involves several layers. Authentication is key, and can be achieved through Kerberos integration. Authorization is also crucial. Hive supports SQL standards-based authorization using GRANT and REVOKE statements to control user access to databases, tables, and views. Row-level filtering and data masking can be implemented through views or user-defined functions (UDFs) to restrict access to sensitive data. Encryption, both at rest and in transit, protects data from unauthorized access. At-rest encryption can be implemented through HDFS encryption, while in-transit encryption uses SSL/TLS for communication.

Regular auditing and monitoring are also critical. Enable Hive's audit logging to track user activity and detect suspicious behavior. Furthermore, securing the underlying Hadoop infrastructure, which Hive relies on, is paramount. This includes securing HDFS, YARN, and other Hadoop components, as vulnerabilities in these components can compromise the entire Hive environment. Finally, keeping Hive and all related software up-to-date with the latest security patches is necessary for mitigating known vulnerabilities.

12. How does Hive handle ACID properties, and what are the limitations?

Hive provides ACID (Atomicity, Consistency, Isolation, Durability) properties through Hive ACID transactions. These transactions allow for reliable updates and deletes within Hive tables. To enable ACID, you need to configure Hive to use a transactional manager and specify the table property transactional=true. However, Hive's ACID support has limitations. It is not fully ACID compliant like traditional relational databases, especially when dealing with concurrent writes to the same partitions. Also, full ACID is only supported for ORC formatted tables. Other formats may have partial or no ACID support. Performance can also be a concern as ACID operations can be slower than non-ACID operations due to the overhead of transaction management.

13. What is the purpose of Hive's SerDe and how can you create a custom one?

SerDe in Hive (Serializer/Deserializer) is an interface that handles the serialization and deserialization of data. It translates data between Hive's internal representation and the format of the data source (e.g., delimited text, JSON, Avro). This allows Hive to read and write data in various formats.

To create a custom SerDe:

- Implement the

org.apache.hadoop.hive.serde2.SerDeinterface. This involves implementing theinitialize,deserialize, andserializemethods. - In

initialize, you configure the SerDe (e.g., read properties). deserializeconverts the input data stream to a Hive object (usually aWritable).serializeconverts Hive objects into a format suitable for storage.- Package your custom SerDe implementation into a JAR file and add it to the Hive classpath. Finally, specify your SerDe in the

CREATE TABLEstatement using theROW FORMAT SERDEclause. For example:CREATE TABLE my_table (...) ROW FORMAT SERDE 'com.example.MySerDe';

14. Explain the use of Hive transactions and how they affect concurrency.

Hive transactions, enabled through ACID properties, ensure data consistency and reliability, especially when multiple users or processes are concurrently accessing and modifying the same data. Without transactions, concurrent operations could lead to data corruption or inconsistencies.

Transactions in Hive affect concurrency by providing mechanisms like locking and atomicity. When a transaction begins, Hive acquires locks on the tables or partitions involved, preventing other transactions from modifying the same data concurrently. This ensures that each transaction operates on a consistent snapshot of the data. If a transaction fails, the changes are rolled back, maintaining data integrity. This serialized access reduces concurrency but guarantees data consistency. SET hive.support.concurrency=true; and using an appropriate transaction manager is required to enable this.

15. Describe the process of upgrading a Hive installation and potential challenges.

Upgrading a Hive installation generally involves the following steps: backing up the Hive metastore database, stopping the Hive services (e.g., HiveServer2, metastore), upgrading the metastore schema using the schematool command provided with the new Hive version, upgrading the Hive binaries on all nodes in the cluster, and then restarting the Hive services. Important considerations include carefully reviewing the release notes for breaking changes and compatibility issues. Also test the upgraded environment thoroughly with sample queries and workflows before putting it into production.

Potential challenges include metastore schema upgrade failures (due to inconsistencies or database compatibility issues), configuration changes required by the new Hive version (e.g., deprecated properties), and compatibility issues with existing UDFs or custom SerDes. Downgrading may not always be supported so a proper rollback plan should be in place. Insufficient disk space or memory during the upgrade can also lead to problems. Another frequent issue is client driver compatibility after the server has been updated so proper driver upgrade plan needs to be available.

16. How can you monitor Hive query performance and resource utilization?

To monitor Hive query performance and resource utilization, several tools and techniques can be employed. The Hive Web UI provides a basic overview of query execution, including query plans and execution statistics. For more detailed monitoring, tools like Hadoop's Resource Manager UI can be used to track resource consumption (CPU, memory, disk I/O) by Hive jobs.

Furthermore, Hive provides built-in logging capabilities that can be analyzed to identify slow-running tasks or bottlenecks. Consider enabling verbose logging and examining the logs for details on task execution times. Metrics can also be collected using custom scripts or monitoring tools like Ganglia or Prometheus, by exposing Hive's JMX metrics and collecting them over time. These metrics can include query execution time, number of rows processed, and resource utilization, facilitating performance analysis and optimization.

17. How do you handle data serialization and deserialization in Hive?

Hive handles data serialization and deserialization using SerDe (Serializer/Deserializer) interfaces. SerDe allows Hive to read data from and write data to various data formats. Hive uses different SerDe implementations to handle different data formats like text files, CSV, JSON, Avro, Parquet, and ORC.

When reading data (deserialization), the SerDe interprets the raw bytes in a file and converts them into Hive's internal row format. When writing data (serialization), the SerDe transforms Hive's internal row format into the desired output format. You can specify the SerDe to use when creating a table using the SERDEPROPERTIES clause, and by specifying the ROW FORMAT SERDE clause in the CREATE TABLE statement. Example: ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.JsonSerDe'

18. Explain the concept of cost-based optimization in Hive and how it improves query execution.

Cost-based optimization (CBO) in Hive is a query optimization strategy that uses statistical data about the data to estimate the cost of different query execution plans and chooses the plan with the lowest estimated cost. Unlike rule-based optimization (RBO), which relies on predefined rules, CBO leverages statistics like table and column sizes, data distribution, and number of distinct values to make informed decisions. This leads to potentially faster and more efficient query execution.

CBO improves query execution by considering multiple factors, such as join orders, join algorithms, and the selection of appropriate indexes (if they exist). By accurately estimating the cost (resource consumption, time) associated with each possible plan, Hive's CBO can select the most optimal one. This results in reduced resource utilization, faster query completion times, and improved overall cluster performance, especially for complex queries involving large datasets.

19. How would you use Hive to process streaming data?

Hive is primarily designed for batch processing of data at rest, not real-time streaming data. It's built on top of Hadoop and uses MapReduce (or Tez/Spark) for processing, which inherently involves latency. However, you can use Hive in conjunction with other technologies to process streaming data in a near real-time fashion using a micro-batching approach.

Here's the general approach:

- Ingest Streaming Data: Use a streaming platform like Apache Kafka or Apache Flume to ingest the streaming data.

- Store in HDFS/Object Store: Configure Kafka/Flume to write the data into HDFS or a compatible object store (e.g., S3) in small batches (e.g., every few minutes).

- Create External Hive Table: Create an external Hive table that points to the directory where the streaming data is being written. The table schema should match the format of the data being written (e.g., JSON, CSV, Parquet).

- Query the Table Periodically: Use Hive to query the external table. Since it's an external table, Hive will read the latest data available in the specified directory. You can schedule a job to run these queries periodically (e.g., every few minutes) to get near real-time insights.

Keep in mind, this is not true real-time processing, but rather micro-batch processing with Hive operating on relatively small, recently added batches of data. For true real-time processing, consider technologies like Apache Spark Streaming, Apache Flink, or Apache Storm.

20. What are the key differences between Hive and Impala, and when would you choose one over the other?

Hive and Impala are both SQL-like query engines for data stored in Hadoop, but they differ significantly in performance and use cases. Hive translates SQL-like queries into MapReduce jobs, making it suitable for batch processing of large datasets, complex transformations, and ETL tasks. It emphasizes scalability and fault tolerance. In contrast, Impala is an MPP (Massively Parallel Processing) query engine that processes data directly in memory, offering much faster query execution times, especially for interactive queries and real-time analytics. Impala is often preferred when low latency is crucial.

The key differences lie in their architecture and execution model. Hive uses MapReduce, leading to higher latency, whereas Impala leverages a distributed, in-memory processing approach for speed. Thus, choose Hive for large-scale batch processing, complex transformations, and scenarios where latency is not a primary concern. Opt for Impala when you need fast query response times for interactive analysis, real-time dashboards, and exploratory data analysis on Hadoop data.

21. Describe the role of the Hive Driver, Compiler, and Executor in the query execution process.

The Hive Driver acts as the entry point for Hive queries. It receives the query, performs authentication and authorization checks, and then passes the query to the Compiler. The Compiler is responsible for parsing the query, performing semantic analysis, and generating an execution plan. This plan is essentially a series of MapReduce or Spark jobs.

The Executor takes the execution plan generated by the Compiler and executes it. This involves submitting the MapReduce or Spark jobs to the Hadoop cluster, monitoring their progress, and retrieving the results. It then returns the final results to the user through the Driver. Essentially, the Driver is the interface, the Compiler is the planner, and the Executor is the worker.

22. How can you use Hive to analyze data stored in different file formats (e.g., CSV, JSON, Parquet)?

Hive can analyze data stored in different file formats by using SerDe (Serializer/Deserializer). When creating a Hive table, you specify the ROW FORMAT and STORED AS clauses to define how Hive should read and write the data. For example, for a CSV file, you might use ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' and STORED AS TEXTFILE. For JSON data, you can use a JSON SerDe such as org.apache.hadoop.hive.serde2.JsonSerDe or a custom one and specify STORED AS TEXTFILE. For Parquet files, you simply use STORED AS PARQUET.

Then, Hive uses the specified SerDe to understand the structure of the data and allows you to query it using SQL-like queries. Hive converts these queries into MapReduce, Tez, or Spark jobs that process the data accordingly. For optimized storage formats like Parquet or ORC, Hive can leverage features like predicate pushdown to improve query performance.

23. Explain how you would implement a data governance strategy using Hive.

Implementing a data governance strategy in Hive involves several key aspects. First, we would establish data ownership by defining roles and responsibilities for data stewards responsible for data quality and access control. Next, implement a robust metadata management system by leveraging Hive's metastore to capture and maintain information about tables, schemas, and data lineage. We can use tools like Atlas integrated with Ranger for centralized metadata management.

Then, enforce data quality through data validation rules, profiling, and monitoring. Hive supports CHECK constraints and custom UDFs for data validation. Access control can be enforced using Apache Ranger to define fine-grained policies based on roles or attributes. Finally, data lineage can be tracked using Atlas to understand the origin and transformation of data, ensuring accountability and compliance. We can store sensitive data using encryption techniques.

24. How does Hive interact with YARN for resource management?

Hive relies on YARN for managing resources in a Hadoop cluster. When a Hive query is submitted, Hive translates it into a series of MapReduce jobs (or other execution engines like Tez or Spark). Hive then requests resources from YARN to execute these jobs. YARN's ResourceManager allocates containers (resources like CPU and memory) to Hive's jobs, which are executed by YARN's NodeManagers on the cluster's worker nodes.

Specifically, Hive's execution engine (e.g., MapReduce) acts as a YARN application. It requests containers from YARN's ResourceManager, and once allocated, it launches tasks (e.g., mappers and reducers) within those containers. YARN monitors the resource usage of these containers and reclaims them upon completion of the tasks. This allows Hive to efficiently utilize the cluster's resources and scale its queries based on the available capacity managed by YARN.

25. How can you optimize Hive queries that involve multiple joins?

To optimize Hive queries with multiple joins, several strategies can be employed. First, ensure proper data partitioning and bucketing based on the join keys to minimize data scanned. Enable cost-based optimization (hive.cbo.enable=true) so Hive can choose the most efficient join order. Consider using broadcast joins (hive.auto.convert.join=true and hive.mapjoin.smalltable.filesize) for smaller tables to avoid shuffle operations. Always filter data as early as possible in the query to reduce the size of the datasets being joined. Check skew joins using hive.optimize.skewjoin. Finally, if dealing with large tables, explore using common table expressions (CTEs) or materialized views to pre-compute and store intermediate results.

For example, instead of writing a complex nested query, use CTEs:

WITH table1_filtered AS (

SELECT * FROM table1 WHERE condition

),

table2_filtered AS (

SELECT * FROM table2 WHERE condition

)

SELECT * FROM table1_filtered JOIN table2_filtered ON table1_filtered.id = table2_filtered.id;

26. Discuss the challenges of using Hive in a cloud environment and how to address them.

Using Hive in a cloud environment presents several challenges. Data locality, traditionally a strength of Hadoop, becomes less relevant in cloud environments where storage and compute are often decoupled. This can lead to increased network traffic and latency as Hive queries access data stored remotely in object storage like AWS S3 or Azure Blob Storage. To address this, consider using optimized file formats like Parquet or ORC which reduce data size and improve query performance. Also, leverage cloud-native metastores like AWS Glue or Azure Data Catalog for improved metadata management and scalability.

Another challenge is managing compute resources. In on-premise deployments, Hive typically runs on a dedicated Hadoop cluster. In the cloud, it's often more cost-effective to use transient or auto-scaling clusters. Efficiently managing these resources requires proper configuration of Hive on Spark or Tez execution engines to optimize resource utilization. Furthermore, consider using cloud-specific features like AWS EMR step concurrency or Azure Synapse Analytics Serverless SQL pools to optimize cost and performance. Security and access control are also critical, and should be managed using cloud-native IAM roles and policies to restrict access to data and resources.

Expert Hive interview questions

1. How would you optimize a Hive query that joins two very large tables, where one table has a skewed distribution of values in the join key?

When joining two large Hive tables with a skewed distribution in the join key of one table, optimization is crucial. A standard join can lead to a single reducer handling a disproportionately large amount of data, causing performance bottlenecks. Several strategies can be employed. First, consider using hive.optimize.skewjoin and associated parameters (e.g., hive.skewjoin.key, hive.skewjoin.mapjoin.map.tasks). These parameters instruct Hive to handle skewed keys by splitting them into separate map-join tasks, allowing for better parallelism.

Alternatively, you can pre-filter the skewed key values in one table and broadcast the smaller, filtered table to all map tasks. This way, the join operation only needs to process the remaining non-skewed keys using a regular join. For example, using a WHERE clause to filter out skewed values and then performing a MAPJOIN with a smaller table containing only those skewed values improves performance dramatically. This approach reduces data skewness in the main join operation, leading to better resource utilization and faster query execution.

2. Describe the steps you would take to troubleshoot a Hive query that is running very slowly, and how you would identify the bottleneck.

To troubleshoot a slow Hive query, I'd start by understanding the query's execution plan using EXPLAIN <query>. This reveals how Hive intends to process the data, highlighting potential full table scans, joins, or sorting operations. I'd then check Hive configurations like hive.exec.parallel to ensure parallel execution is enabled, and hive.vectorized.execution.enabled for vectorized query execution. Also, verify sufficient memory allocation for Hive and YARN. I would examine the data using DESCRIBE FORMATTED <table_name> to understand data skew and partitions. Skewed data can cause bottlenecks in mappers/reducers.

To identify the bottleneck, I would analyze the YARN ResourceManager UI to see resource utilization of the Hive jobs. Look for straggling tasks (long-running mappers/reducers) which indicate a bottleneck. HiveServer2 logs can also reveal specific errors or performance issues. If the bottleneck is data skew, strategies like bucketing, salting, or using different join strategies (e.g., MAPJOIN for smaller tables) might be needed. If the bottleneck is the query plan, consider rewriting the query, adding appropriate indexes (if using a storage format that supports them like ORC), or using more efficient file formats like Parquet or ORC that support predicate pushdown.

3. Explain how you would implement a custom SerDe in Hive to handle a complex data format that is not supported by the built-in SerDes.

To implement a custom SerDe in Hive, I would first identify the data format and its structure. Then, I'd write a Java class implementing the org.apache.hadoop.hive.serde2.SerDe interface. This class will contain the logic for serialization (converting Hive data types to the custom format) and deserialization (converting the custom format to Hive data types). The key methods to implement are initialize(), deserialize(), and serialize(). The initialize() method sets up the SerDe with configuration parameters. The deserialize() method takes a Writable (Hadoop's data serialization format) representing a row in the custom format and converts it into a Hive Object. The serialize() method does the reverse, taking a Hive Object and converting it into a Writable. Error handling and schema definition are crucial aspects to consider within these methods.

After compiling the Java code into a JAR file, I would add the JAR to Hive's classpath using the ADD JAR command. Finally, I would create a Hive table specifying the custom SerDe using the ROW FORMAT SERDE clause, providing the fully qualified name of my SerDe class. For example:

CREATE TABLE my_table (

col1 STRING,

col2 INT

) ROW FORMAT SERDE 'com.example.CustomSerDe'

WITH SERDEPROPERTIES (

'field.delimiter' = ','

) STORED AS TEXTFILE;

This allows Hive to read and write data in the custom format using my defined SerDe.

4. How would you design a Hive data model for a time-series dataset, considering efficient querying and data partitioning strategies?

For a time-series dataset in Hive, I would design a data model partitioned by time. A common approach is to partition by year, month, and day. This allows for efficient querying when filtering by specific time ranges. The table format would typically be ORC or Parquet for optimized storage and query performance.

Specifically, the table DDL would include PARTITIONED BY (year INT, month INT, day INT). When loading data, you'd need to specify the partition values. For querying, WHERE year = 2023 AND month = 10 would efficiently target only the relevant partitions. Consider bucketing within partitions if further query optimization on a particular column is required; for example, CLUSTERED BY (sensor_id) INTO 100 BUCKETS.

5. Explain how you can use Hive with Spark to improve query performance and handle complex data transformations.

Hive and Spark can be integrated to leverage the strengths of both systems. Hive provides a SQL-like interface and metastore for managing structured data, while Spark offers a powerful and fast processing engine for data transformations and analysis. By using Spark as the execution engine for Hive queries, we can significantly improve query performance compared to Hive's default MapReduce engine. This is because Spark processes data in memory and utilizes optimized execution plans. We can configure Hive to use Spark by setting the spark.master and hive.execution.engine properties in hive-site.xml.