Hiring the right Hadoop professionals is crucial for organizations dealing with big data challenges. Asking the right interview questions helps assess candidates' knowledge and experience effectively.

This blog post provides a comprehensive list of Hadoop interview questions for different experience levels and areas of expertise. We cover general Hadoop concepts, questions for junior data engineers, processing frameworks, and storage mechanisms.

Using these questions will help you identify top Hadoop talent for your team. Consider combining them with a pre-interview Hadoop skills assessment for a more thorough evaluation process.


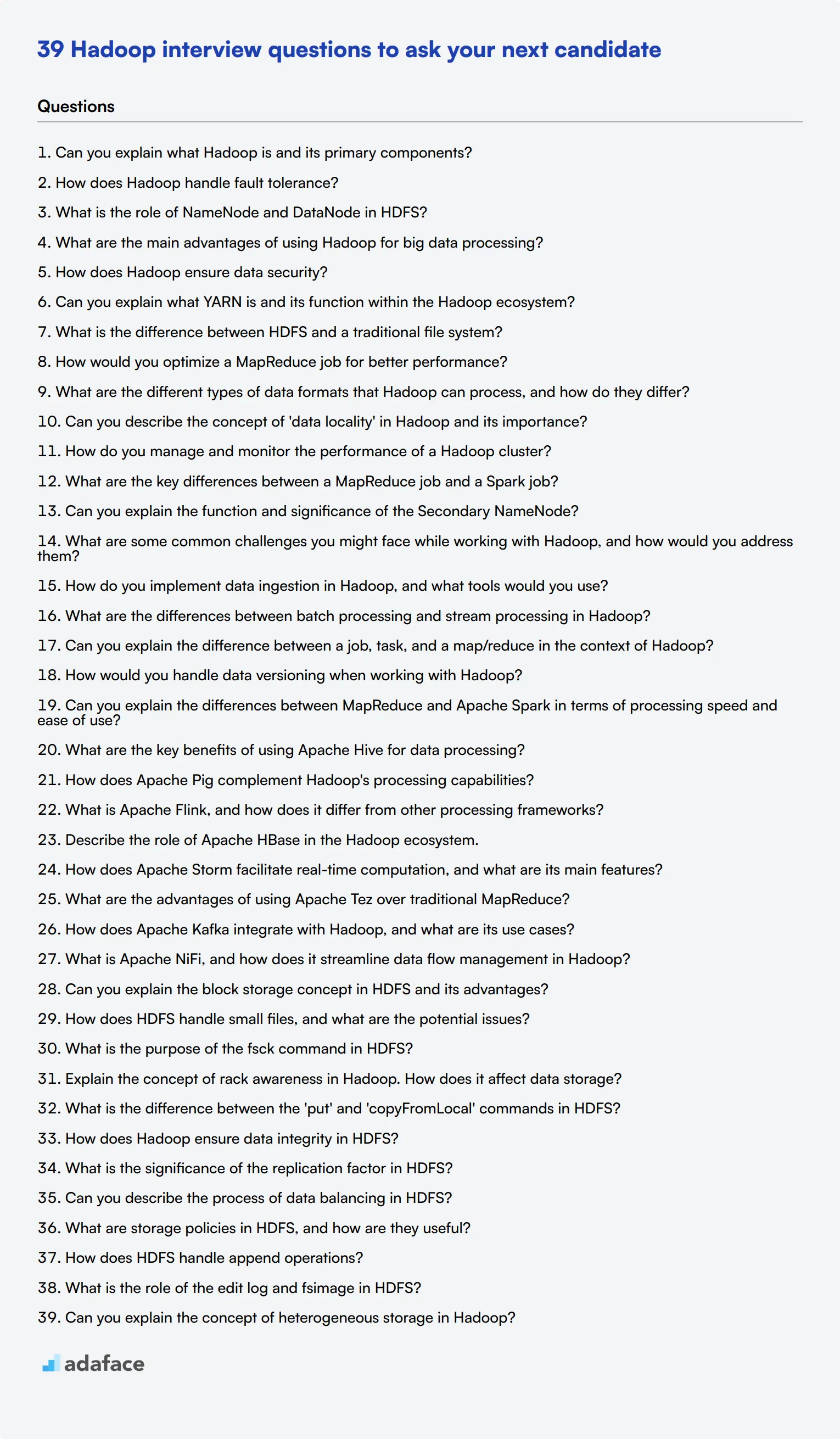

8 general Hadoop interview questions and answers to assess candidates

If you're looking to assess a candidate's understanding and practical knowledge of Hadoop, you're in the right place! These questions are designed to give you a clear picture of their Hadoop expertise and how they can contribute to your data projects.

1. Can you explain what Hadoop is and its primary components?

Hadoop is an open-source framework for distributed storage and processing of large datasets across clusters of commodity machines using simple programming models. Its core modules are the Hadoop Distributed File System (HDFS) for storage, YARN for resource management and job scheduling, MapReduce for processing, and Hadoop Common, the shared libraries and utilities the other modules depend on.

An ideal candidate should be able to describe each module and how they work together, and may also mention ecosystem projects built on top of them, such as Hive, HBase, or Spark. Look for candidates who can explain these concepts clearly and succinctly, indicating a solid understanding of the framework.
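
To make the "simple programming models" point concrete, here is a minimal, single-process Python sketch of the map, shuffle, and reduce phases for a word count. It only simulates what the framework does across a cluster; the function names are hypothetical and are not part of any Hadoop API.

```python
from collections import defaultdict
from typing import Iterable, Iterator


def map_phase(line: str) -> Iterator[tuple[str, int]]:
    """Mapper: emit (word, 1) for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    """Shuffle and sort: group values by key, which the framework does between phases."""
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(sorted(groups.items()))


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    """Reducer: combine all values for one key into a single result."""
    return key, sum(values)


def word_count(lines: list[str]) -> dict[str, int]:
    mapped = (pair for line in lines for pair in map_phase(line))
    return dict(reduce_phase(key, values) for key, values in shuffle(mapped).items())


if __name__ == "__main__":
    print(word_count(["big data on Hadoop", "Hadoop stores big files"]))
```

Running it prints `{'big': 2, 'data': 1, 'files': 1, 'hadoop': 2, 'on': 1, 'stores': 1}`. A candidate who can explain which of these steps the developer writes (map and reduce) and which the framework handles (splitting input, shuffling, retrying tasks) understands the model well.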

2. How does Hadoop handle fault tolerance?

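Hadoop ensures fault tolerance primarily through data replication. In HDFS, each data block is replicated across multiple DataNodes (three copies by default). If one node fails, the data is still accessible from another node that holds a replica, and the NameNode schedules new copies to restore the configured replication factor.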

Candidates should also mention that Hadoop's MapReduce framework has mechanisms to reassign tasks from failed nodes to other nodes. An impressive answer will include examples of how this mechanism works in real-world scenarios. Look for candidates who can articulate the importance of fault tolerance in big data processing.
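
The replication idea can be sketched in a few lines of Python. This is a simplified illustration, assuming round-robin placement instead of HDFS's real rack-aware policy; `place_blocks` and `read_source` are hypothetical helpers, not HDFS APIs.

```python
REPLICATION_FACTOR = 3  # HDFS default for dfs.replication


def place_blocks(block_ids: list[str], nodes: list[str], rf: int = REPLICATION_FACTOR) -> dict[str, list[str]]:
    """Put each block on `rf` distinct nodes using simple round-robin.

    Real HDFS uses a rack-aware placement policy; this only shows that copies land on different nodes.
    """
    if rf > len(nodes):
        raise ValueError("replication factor cannot exceed the number of nodes")
    return {
        block: [nodes[(i + r) % len(nodes)] for r in range(rf)]
        for i, block in enumerate(block_ids)
    }


def read_source(block: str, placement: dict[str, list[str]], failed: set[str]) -> str:
    """Return the first replica holder that is still alive; fail only if every copy is lost."""
    for node in placement[block]:
        if node not in failed:
            return node
    raise RuntimeError(f"all replicas of {block} are unavailable")


if __name__ == "__main__":
    placement = place_blocks(["blk_1", "blk_2"], ["dn1", "dn2", "dn3", "dn4"])
    print(placement)
    print(read_source("blk_1", placement, failed={"dn1"}))  # dn2 serves the read
```

With three replicas, a block only becomes unreadable if all three holders fail before the NameNode re-replicates it, which is why the default factor is a reasonable trade-off between storage cost and durability.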

3. What is the role of NameNode and DataNode in HDFS?

In HDFS, the NameNode acts as the master server that manages the metadata and file system namespace. The DataNodes are responsible for storing the actual data blocks. The NameNode keeps track of where data is stored across the DataNodes.

A strong candidate will explain how the NameNode coordinates client access to the data and how DataNodes report their status to the NameNode through periodic heartbeats and block reports. Look for clarity in their explanation and a good understanding of how these components interact within the HDFS architecture.
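
DataNodes keep the NameNode informed with periodic heartbeats and with block reports listing the blocks they store. The toy model below is a hypothetical Python sketch of that bookkeeping, not the real Java classes. The timing constants use the stock defaults (`dfs.heartbeat.interval` of 3 seconds and a 5-minute `dfs.namenode.heartbeat.recheck-interval`), which give the familiar 630-second dead-node timeout.

```python
from dataclasses import dataclass, field

HEARTBEAT_INTERVAL_S = 3   # dfs.heartbeat.interval default
RECHECK_INTERVAL_S = 300   # dfs.namenode.heartbeat.recheck-interval default (5 minutes)
# A DataNode is declared dead after 2 * recheck + 10 * heartbeat = 630 seconds of silence.
DEAD_NODE_TIMEOUT_S = 2 * RECHECK_INTERVAL_S + 10 * HEARTBEAT_INTERVAL_S


@dataclass
class ToyNameNode:
    """Hypothetical, simplified model of the NameNode's view of its DataNodes."""
    last_heartbeat: dict[str, float] = field(default_factory=dict)
    block_map: dict[str, set[str]] = field(default_factory=dict)  # block id -> DataNodes holding it

    def heartbeat(self, datanode: str, now: float) -> None:
        self.last_heartbeat[datanode] = now

    def block_report(self, datanode: str, blocks: list[str], now: float) -> None:
        """A DataNode lists the blocks it stores; the NameNode records where each block lives."""
        self.heartbeat(datanode, now)
        for block in blocks:
            self.block_map.setdefault(block, set()).add(datanode)

    def dead_nodes(self, now: float) -> set[str]:
        return {dn for dn, t in self.last_heartbeat.items() if now - t > DEAD_NODE_TIMEOUT_S}

    def under_replicated(self, now: float, target: int = 3) -> set[str]:
        """Blocks whose live replica count dropped below the target, i.e. candidates for re-replication."""
        dead = self.dead_nodes(now)
        return {b for b, holders in self.block_map.items() if len(holders - dead) < target}
```

For example, if three DataNodes report `blk_1` at time 0 and only two of them keep sending heartbeats, the third is declared dead after 630 seconds and `blk_1` shows up as under-replicated.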

4. What are the main advantages of using Hadoop for big data processing?

Hadoop offers several advantages for big data processing: scalability, flexibility, cost-efficiency, and fault tolerance. It can scale out to accommodate increasing data volumes by adding more nodes. Its flexibility allows it to process structured and unstructured data.

Candidates should also highlight the cost benefits of using commodity hardware and the robustness of the system due to its fault tolerance capabilities. Look for responses that demonstrate a clear understanding of how these advantages apply in practical scenarios.

5. How does Hadoop ensure data security?

Hadoop secures data through several mechanisms, including Kerberos authentication, HDFS file permissions, and data encryption. Kerberos provides strong authentication for users and services. HDFS file permissions, modeled on POSIX, control access at the file and directory level.

Candidates should also mention encrypting data in transit (for example, RPC and block data transfer encryption) and at rest (for example, HDFS transparent encryption with encryption zones) to protect sensitive information. An ideal response will include a discussion of best practices for implementing these security measures in a Hadoop environment.
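
HDFS file permissions follow the POSIX owner/group/other model. The sketch below shows that check in plain Python; it is illustrative only. The superuser name `hdfs` is an assumption (in HDFS the superuser is whichever user started the NameNode), and Kerberos and encryption are not modeled.

```python
from dataclasses import dataclass

READ, WRITE, EXECUTE = 4, 2, 1


@dataclass(frozen=True)
class FileStatus:
    owner: str
    group: str
    mode: int  # octal permission bits, e.g. 0o640


def has_access(user: str, user_groups: set[str], status: FileStatus,
               wanted: int, superuser: str = "hdfs") -> bool:
    """POSIX-style check: owner bits if the user owns the file, else group bits, else other bits."""
    if user == superuser:
        return True  # the superuser bypasses permission checks
    if user == status.owner:
        bits = (status.mode >> 6) & 0o7
    elif status.group in user_groups:
        bits = (status.mode >> 3) & 0o7
    else:
        bits = status.mode & 0o7
    return (bits & wanted) == wanted
```

With mode `0o640`, the owner can read and write, members of the file's group can only read, and everyone else is denied, the same behavior `hdfs dfs -chmod 640` would produce.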

6. Can you explain what YARN is and its function within the Hadoop ecosystem?

YARN (Yet Another Resource Negotiator) is a core component of Hadoop that handles resource management and job scheduling. It allows multiple data processing engines such as MapReduce, Spark, and others to run and process data stored in HDFS.

An effective answer will explain how YARN separates cluster-wide resource management (the ResourceManager and the per-node NodeManagers) from application-specific logic (a per-application ApplicationMaster), enabling better scalability and resource utilization. Look for candidates who can discuss the benefits of this architecture in managing large-scale data processing jobs.
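
As a rough mental model of resource negotiation, the Python sketch below places container requests from different applications onto NodeManagers by memory and vcores. It is a deliberately naive first-fit stand-in; YARN's actual schedulers (such as the Capacity and Fair schedulers) add queues, priorities, and data locality, and all names here are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class NodeManager:
    """Free resources a NodeManager reports to the ResourceManager."""
    name: str
    free_mb: int
    free_vcores: int


@dataclass(frozen=True)
class ContainerRequest:
    app_id: str  # e.g. a MapReduce or Spark application sharing the cluster
    mb: int
    vcores: int


def allocate(requests: list[ContainerRequest], nodes: list[NodeManager]) -> list[Optional[str]]:
    """First-fit: give each request to the first node with enough memory and vcores.

    Returns the chosen node name per request, or None if no node can host it right now.
    """
    placements: list[Optional[str]] = []
    for req in requests:
        chosen = None
        for node in nodes:
            if node.free_mb >= req.mb and node.free_vcores >= req.vcores:
                node.free_mb -= req.mb
                node.free_vcores -= req.vcores
                chosen = node.name
                break
        placements.append(chosen)
    return placements
```

Because the engine-specific logic lives in each application, a MapReduce job and a Spark job can request containers from the same pool; a request that does not fit (`None` here) would simply wait until resources are freed.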

7. What is the difference between HDFS and a traditional file system?

HDFS is designed to handle large datasets in a distributed manner across multiple nodes, providing high throughput and fault tolerance. In contrast, traditional file systems are not optimized for the distributed storage and processing of large volumes of data.

Candidates should emphasize the scalability, fault tolerance, and data locality advantages of HDFS. Look for answers that demonstrate an understanding of why HDFS is more suitable for big data applications compared to traditional file systems.
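
One concrete difference is how HDFS lays out a file: it splits it into large, fixed-size blocks (128 MB by default in Hadoop 2.x and later, set by `dfs.blocksize`) that can be stored on different DataNodes and processed in parallel. The hypothetical helpers below work through that arithmetic, assuming the default block size and a replication factor of 3.

```python
DEFAULT_BLOCK_SIZE = 128 * 1024 * 1024  # dfs.blocksize default in Hadoop 2.x and later


def split_into_blocks(file_size: int, block_size: int = DEFAULT_BLOCK_SIZE) -> list[int]:
    """Return the size of each HDFS block for a file; the last block may be smaller."""
    if file_size == 0:
        return []
    full, remainder = divmod(file_size, block_size)
    return [block_size] * full + ([remainder] if remainder else [])


def raw_storage(file_size: int, replication: int = 3) -> int:
    """Bytes consumed across the cluster: every block is stored `replication` times."""
    return file_size * replication
```

A 300 MB file becomes three blocks of 128 MB, 128 MB, and 44 MB; the last block only occupies 44 MB on disk, not a full 128 MB. With three replicas the file consumes 900 MB of raw cluster capacity, a useful number for candidates to reason about when sizing a cluster.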

8. How would you optimize a MapReduce job for better performance?

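To optimize a MapReduce job, one can tune configuration parameters such as the number of reducers and the input split size (which determines how many mappers run), use combiners to reduce the amount of data shuffled between mappers and reducers, and choose an efficient input format. A custom partitioner can spread skewed keys more evenly across reducers, and compressing intermediate map output cuts disk and network I/O.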

Candidates should mention practical techniques like using counters for performance monitoring and adjusting the block size for optimal data distribution. Look for specific examples from their past experience where they successfully optimized a MapReduce job.
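
The effect of a combiner is easy to quantify. The Python simulation below counts how many records leave the mappers for a word count, with and without map-side pre-aggregation; the helper names are hypothetical. In the Java API, a combiner is registered with `Job.setCombinerClass`, and because the framework may run it zero or more times, it must be safe to apply repeatedly, as summation is.

```python
from collections import Counter


def mapper_output(split: list[str]) -> list[tuple[str, int]]:
    """(word, 1) pairs a word-count mapper emits for one input split."""
    return [(word, 1) for line in split for word in line.split()]


def combine(pairs: list[tuple[str, int]]) -> list[tuple[str, int]]:
    """Map-side pre-aggregation. Safe here because summing is associative and commutative."""
    totals: Counter = Counter()
    for key, value in pairs:
        totals[key] += value
    return sorted(totals.items())


def shuffled_records(splits: list[list[str]], use_combiner: bool) -> int:
    """Number of records sent over the network from all mappers to the reducers."""
    total = 0
    for split in splits:
        pairs = mapper_output(split)
        total += len(combine(pairs) if use_combiner else pairs)
    return total


if __name__ == "__main__":
    splits = [["a a a b"], ["a b b"]]
    print(shuffled_records(splits, use_combiner=False))  # 7
    print(shuffled_records(splits, use_combiner=True))   # 4
```

On real data with many repeated keys per split, the reduction is usually far larger than in this tiny example.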

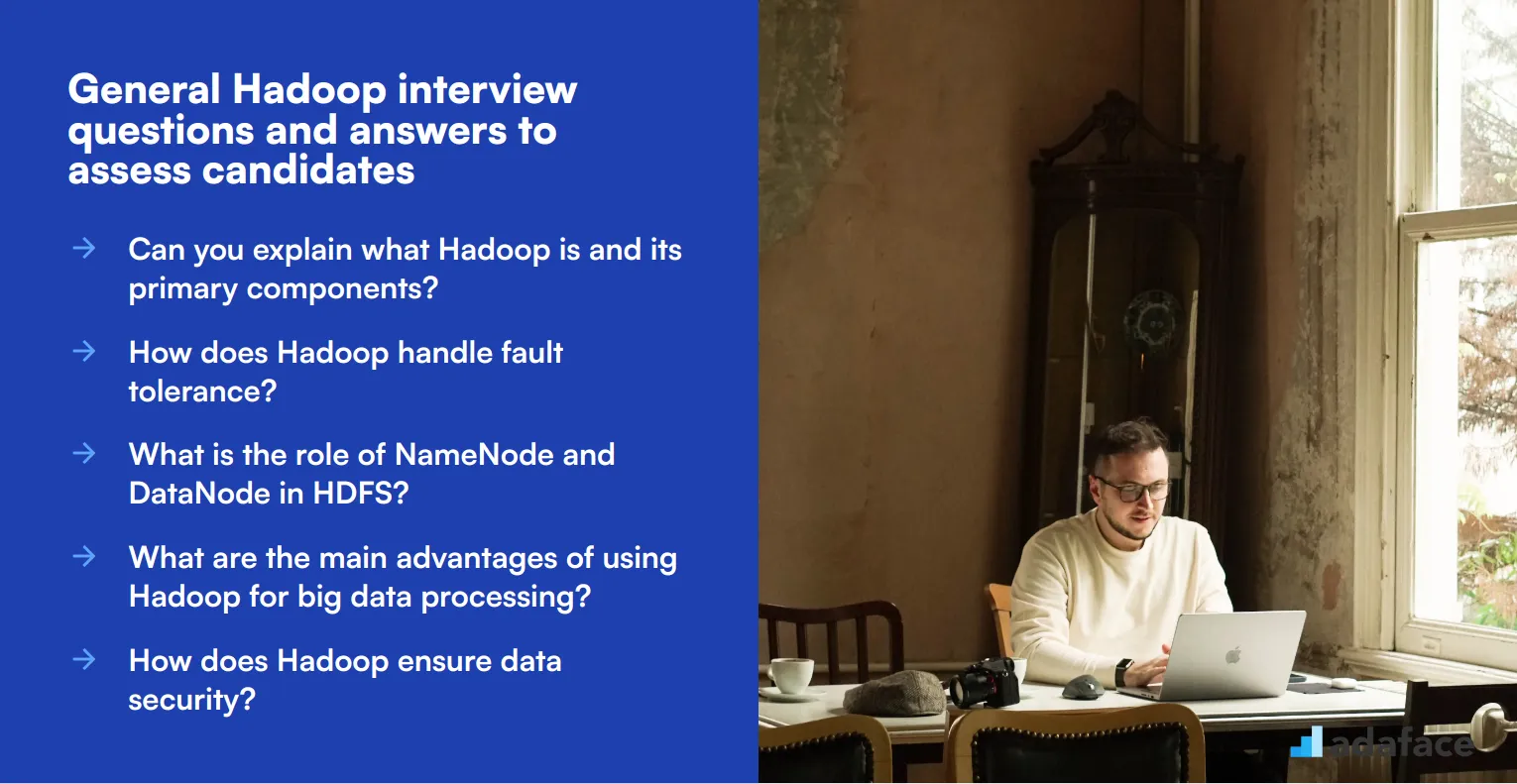

10 Hadoop interview questions to ask junior data engineers

To ensure your candidates possess the necessary skills and understanding of Hadoop, utilize this list of targeted questions. These inquiries are designed to help you gauge their technical knowledge and problem-solving abilities, making your hiring process more effective. For a comprehensive understanding of the role, consider reviewing the Hadoop developer job description.

- What are the different types of data formats that Hadoop can process, and how do they differ?

- Can you describe the concept of 'data locality' in Hadoop and its importance?

- How do you manage and monitor the performance of a Hadoop cluster?

- What are the key differences between a MapReduce job and a Spark job?

- Can you explain the function and significance of the Secondary NameNode?

- What are some common challenges you might face while working with Hadoop, and how would you address them?

- How do you implement data ingestion in Hadoop, and what tools would you use?

- What are the differences between batch processing and stream processing in Hadoop?

- Can you explain the relationship between a job, its tasks, and the map and reduce phases in Hadoop?

- How would you handle data versioning when working with Hadoop?

9 Hadoop interview questions and answers related to processing frameworks

When it comes to evaluating candidates for Hadoop roles, understanding their grasp of various processing frameworks is crucial. Use this list of questions to gauge their practical knowledge and problem-solving skills in a business context.

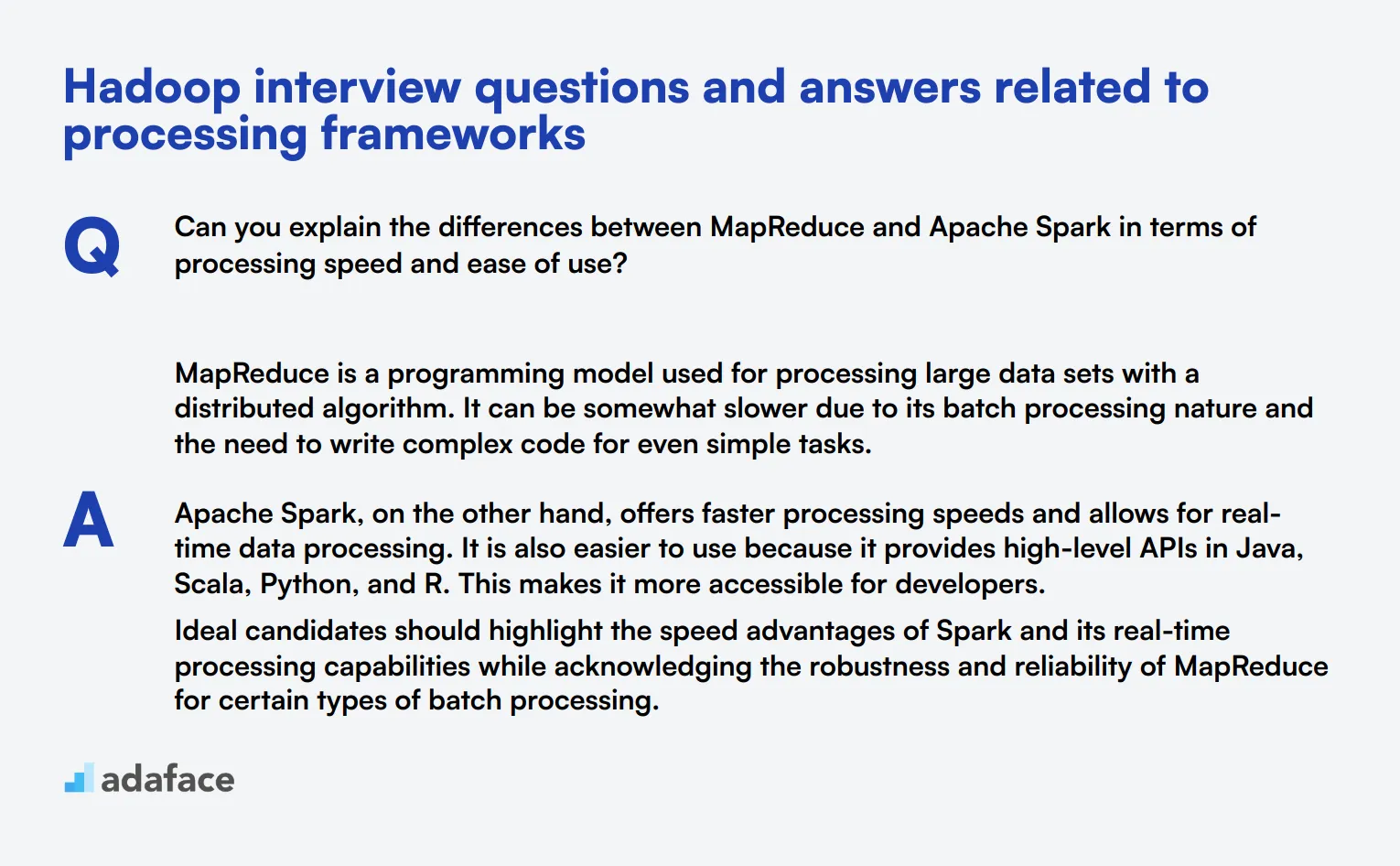

1. Can you explain the differences between MapReduce and Apache Spark in terms of processing speed and ease of use?

MapReduce is a programming model for processing large datasets with a distributed algorithm. It tends to be slower because it writes intermediate results to disk between the map and reduce phases and between chained jobs, and even simple tasks often require verbose code.

Apache Spark, on the other hand, keeps intermediate data in memory where possible, which makes it considerably faster for iterative and multi-stage workloads, and it supports near-real-time stream processing. It is also easier to use because it provides high-level APIs in Java, Scala, Python, and R, which makes it more accessible to developers.

Ideal candidates should highlight the speed advantages of Spark and its real-time processing capabilities while acknowledging the robustness and reliability of MapReduce for certain types of batch processing.

2. What are the key benefits of using Apache Hive for data processing?

Apache Hive is a data warehouse software that facilitates reading, writing, and managing large datasets residing in distributed storage. It is built on top of Hadoop and provides an SQL-like interface to query data.

The key benefits of using Hive include its familiar SQL-like query language (HiveQL), which lowers the barrier for analysts, and its tight integration with Hadoop: Hive compiles queries into MapReduce, Tez, or Spark jobs, so they run on the cluster's distributed computing resources.

Look for candidates who can articulate how Hive simplifies complex queries and integrates seamlessly with the larger Hadoop ecosystem, making it a powerful tool for data analysts and engineers.
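
To see what Hive saves you from writing, the Python sketch below spells out, conceptually, the map, shuffle, and reduce steps behind a one-line `GROUP BY` query. It is an illustration of the idea, not Hive's actual generated plan, and the function name is hypothetical.

```python
from collections import defaultdict

# Conceptual equivalent of the HiveQL query:
#   SELECT dept, COUNT(*) FROM employees GROUP BY dept;


def group_by_count(rows: list[dict[str, str]], key: str) -> dict[str, int]:
    # Map: emit (grouping key, 1) for every row
    mapped = [(row[key], 1) for row in rows]
    # Shuffle: rows with the same key are routed to the same reducer
    partitions: dict[str, list[int]] = defaultdict(list)
    for k, one in mapped:
        partitions[k].append(one)
    # Reduce: COUNT(*) is the number of values collected for each key
    return {k: len(ones) for k, ones in sorted(partitions.items())}
```

An analyst writes only the SQL; the mapper, shuffle, and reducer logic is produced by Hive's compiler and executed on the cluster.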

3. How does Apache Pig complement Hadoop's processing capabilities?

Apache Pig is a high-level platform for creating programs that run on Hadoop. It provides a scripting language called Pig Latin, which simplifies the process of writing complex data transformations.

Pig is particularly useful for ETL (Extract, Transform, Load) processes and handles both structured and semi-structured data. Because Pig compiles each script into a series of MapReduce (or Tez) jobs, developers get distributed execution without writing those jobs by hand.

Candidates should discuss how Pig's high-level language can make data processing tasks easier and more efficient, especially for those who are not familiar with Java-based MapReduce programming.

4. What is Apache Flink, and how does it differ from other processing frameworks?

Apache Flink is a distributed stream processing framework (it can also run batch workloads) that processes data in real time with low latency. Unlike batch processing frameworks like MapReduce, Flink can process data as it arrives, making it suitable for applications that require real-time insights.

Flink offers features like stateful stream processing, which allows it to maintain state across events, and exactly-once processing guarantees, which ensure data accuracy even in the case of failures.

Ideal candidates should emphasize Flink's real-time processing capabilities and its advantages over other frameworks in terms of latency and state management. They should also be able to discuss scenarios where Flink would be the preferred choice.
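
Stateful stream processing means the operator remembers something (here, per-key counts) between events and emits results as soon as they are complete. The plain-Python generator below sketches a keyed tumbling-window count. It is not Flink's API, and it assumes events arrive in timestamp order; watermarks, late data, and the checkpointing behind Flink's exactly-once guarantees are not modeled.

```python
from collections import defaultdict
from typing import Iterable, Iterator, Optional


def tumbling_counts(events: Iterable[tuple[int, str]], window_ms: int) -> Iterator[tuple[int, str, int]]:
    """Emit (window_start, key, count) as soon as each window closes.

    `events` are (timestamp_ms, key) pairs, assumed to arrive in timestamp order.
    """
    open_window: Optional[int] = None
    counts: dict[str, int] = defaultdict(int)  # per-key state for the current window
    for ts, key in events:
        start = ts - ts % window_ms
        if open_window is not None and start != open_window:
            for k in sorted(counts):  # the previous window is complete: emit and reset state
                yield open_window, k, counts[k]
            counts.clear()
        open_window = start
        counts[key] += 1
    if open_window is not None:  # flush the final window when the stream ends
        for k in sorted(counts):
            yield open_window, k, counts[k]
```

Results for the first window are produced the moment an event from the next window arrives, rather than after the whole dataset has been read, which is the key contrast with a batch job.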

5. Describe the role of Apache HBase in the Hadoop ecosystem.

Apache HBase is a distributed, scalable NoSQL database that runs on top of the Hadoop Distributed File System (HDFS).

HBase supports random, real-time read/write access to large datasets, making it suitable for applications that require quick lookups and updates, something HDFS alone does not offer. It is modeled after Google's Bigtable and is particularly useful for the sparse datasets common in many big data use cases, because empty cells consume no storage.

When answering, candidates should focus on HBase's ability to handle large volumes of data with real-time access and its integration with the Hadoop ecosystem, which allows it to leverage Hadoop's storage and computational capabilities.
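
HBase's data model (sorted row keys, `family:qualifier` columns, and timestamped cell versions) can be sketched with ordinary Python structures. The class below is a hypothetical teaching model, not the HBase client API; it stores only cells that were written, keeps the newest versions, and supports a row-key range scan.

```python
import bisect
from collections import defaultdict
from typing import Optional


class ToyHTable:
    """Simplified HBase data model: row key -> 'family:qualifier' -> versioned cells."""

    def __init__(self, max_versions: int = 1):
        self.rows: dict[bytes, dict[str, list[tuple[int, bytes]]]] = {}
        self.sorted_keys: list[bytes] = []  # rows kept in lexicographic order, as in HBase
        self.max_versions = max_versions

    def put(self, row: bytes, column: str, value: bytes, ts: int) -> None:
        if row not in self.rows:
            bisect.insort(self.sorted_keys, row)
            self.rows[row] = defaultdict(list)
        cells = self.rows[row][column]
        cells.append((ts, value))
        cells.sort(reverse=True)        # newest version first
        del cells[self.max_versions:]   # keep only the configured number of versions

    def get(self, row: bytes, column: str) -> Optional[bytes]:
        """Latest value of one cell, or None if it was never written (sparse storage)."""
        cells = self.rows.get(row, {}).get(column)
        return cells[0][1] if cells else None

    def scan(self, start: bytes, stop: bytes) -> list[bytes]:
        """Row keys in [start, stop), the range-scan pattern sorted keys make cheap."""
        lo = bisect.bisect_left(self.sorted_keys, start)
        hi = bisect.bisect_left(self.sorted_keys, stop)
        return self.sorted_keys[lo:hi]
```

Because rows are sorted, row-key design (for example, prefixing keys like `user#001`) directly determines which lookups and scans are fast, a point strong candidates usually raise.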

6. How does Apache Storm facilitate real-time computation, and what are its main features?

Apache Storm is a distributed real-time computation system for processing unbounded streams of data. The project cites benchmarks of over a million tuples processed per second per node, and it is known for its low latency and high throughput.

Key features of Storm include its distributed nature, fault-tolerance, and ease of use. It also supports a wide range of programming languages, making it accessible to a broader audience.

Look for candidates who can explain Storm's real-time processing capabilities and its suitability for applications like real-time analytics, machine learning, and continuous computation. They should also mention its fault-tolerance and scalability.
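
Storm's fault tolerance rests on tracking every tuple derived from a spout tuple; if that "tuple tree" is not fully processed within a timeout, the spout replays it. Storm's acker does this with a single 64-bit value per tree that every tuple id is XORed into twice, once when emitted and once when acked. The Python class below is a simplified sketch of that idea, not Storm's implementation.

```python
import random


class ToyAcker:
    """Sketch of XOR-based tuple-tree tracking (Storm-style guaranteed processing).

    Each tuple id is XORed into the tree's value when emitted and again when acked,
    so the value returns to 0 exactly when every tuple in the tree has been processed.
    """

    def __init__(self, seed: int = 0):
        self.ack_vals: dict[int, int] = {}
        self.rng = random.Random(seed)

    def new_id(self) -> int:
        """Random 64-bit ids make an accidental zero before completion extremely unlikely."""
        return self.rng.getrandbits(64)

    def emit(self, root: int, tuple_id: int) -> None:
        self.ack_vals[root] = self.ack_vals.get(root, 0) ^ tuple_id

    def ack(self, root: int, tuple_id: int) -> bool:
        """Ack one tuple; return True when the whole tree rooted at `root` is complete."""
        self.ack_vals[root] ^= tuple_id
        if self.ack_vals[root] == 0:
            del self.ack_vals[root]
            return True
        return False
```

The appeal of this design is its constant memory per spout tuple, no matter how many downstream tuples a bolt emits, which is worth asking a candidate to explain.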

7. What are the advantages of using Apache Tez over traditional MapReduce?

Apache Tez is an application framework built on Hadoop YARN that supports more complex and efficient data processing workflows than traditional MapReduce. It is designed to avoid the overhead of chaining multiple MapReduce jobs, each of which must be launched separately and write its intermediate output to HDFS.

Tez allows for more flexible and efficient data processing by enabling Directed Acyclic Graphs (DAGs) of tasks, which can improve performance and resource utilization. It also supports features like data pipelining and in-memory data sharing.

Candidates should highlight Tez's ability to optimize data processing workflows and its performance advantages over traditional MapReduce, making it a powerful tool for complex data processing tasks.
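
A Tez job is described as a DAG of processing vertices. The Python sketch below uses the standard library's `graphlib` (Python 3.9+) to group a hypothetical query plan into "waves" of vertices whose inputs are ready; it illustrates DAG scheduling in general, not Tez's own scheduler, and the vertex names are made up.

```python
from graphlib import TopologicalSorter


def execution_waves(dag: dict[str, set[str]]) -> list[list[str]]:
    """Group DAG vertices into waves; all vertices in one wave can run in parallel.

    `dag` maps each vertex to the set of vertices whose output it consumes.
    """
    sorter = TopologicalSorter(dag)
    sorter.prepare()  # raises graphlib.CycleError if the plan is not acyclic
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


if __name__ == "__main__":
    plan = {
        "scan_orders": set(),
        "scan_users": set(),
        "join": {"scan_orders", "scan_users"},
        "aggregate": {"join"},
        "sort": {"aggregate"},
    }
    print(execution_waves(plan))
```

In classic MapReduce, a plan like this would typically run as several separate jobs with HDFS writes in between; expressed as one DAG, intermediate data can flow directly from vertex to vertex.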

8. How does Apache Kafka integrate with Hadoop, and what are its use cases?

Apache Kafka is a distributed streaming platform that can handle high-throughput, low-latency data feeds. It is often used to collect and distribute real-time data streams to various systems, including Hadoop for storage and processing.

Kafka can integrate with Hadoop through connectors and streaming APIs, allowing it to serve as a data ingestion layer that feeds data into Hadoop for batch processing or real-time analytics.

Ideal answers should discuss Kafka's role in enabling real-time data pipelines and its use cases, such as log aggregation, real-time analytics, and stream processing applications. Candidates should also mention how Kafka's integration with Hadoop enhances its capabilities.
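
Kafka's usefulness as an ingestion layer comes from its model: a topic is a set of append-only, partitioned logs, and consumers track their own offsets, so a downstream job (for example, one that lands data in HDFS) can read at its own pace or re-read. The toy class below sketches that model in Python. It hashes keys with CRC32 purely for illustration; Kafka's default partitioner uses murmur2.

```python
import zlib


class ToyTopic:
    """Simplified Kafka topic: N append-only partitions addressed by offset."""

    def __init__(self, partitions: int):
        self.logs: list[list[bytes]] = [[] for _ in range(partitions)]

    def produce(self, key: bytes, value: bytes) -> tuple[int, int]:
        """Append to the key's partition (same key -> same partition); return (partition, offset)."""
        p = zlib.crc32(key) % len(self.logs)  # CRC32 is an illustrative stand-in for murmur2
        self.logs[p].append(value)
        return p, len(self.logs[p]) - 1

    def consume(self, partition: int, offset: int, max_records: int = 100) -> list[bytes]:
        """Read from a given offset; reading does not delete records, so they can be replayed."""
        return self.logs[partition][offset:offset + max_records]
```

Because records with the same key land in the same partition, their relative order is preserved, and a consumer that crashes can resume from its last committed offset.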

9. What is Apache NiFi, and how does it streamline data flow management in Hadoop?

Apache NiFi is a data integration tool that helps automate the movement of data between different systems. It provides a user-friendly interface for designing data flows and supports real-time data ingestion, routing, and transformation.

NiFi excels at managing data flow with features like backpressure, data provenance, and ease of use. It is particularly useful for scenarios where data needs to be ingested from multiple sources and routed to various destinations, including Hadoop.

Candidates should explain how NiFi simplifies data flow management and enhances data integration processes in Hadoop environments. They should also mention its real-time capabilities and ease of use, which make it a valuable tool for data engineers.
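
Backpressure is the feature candidates most often struggle to explain. In NiFi, each connection between processors has thresholds (by default 10,000 FlowFiles or 1 GB), and once a queue reaches them, the upstream processor stops being scheduled. The Python sketch below models only the object-count threshold, with a deliberately small value; the class and function names are hypothetical.

```python
from collections import deque


class Connection:
    """A queue between two processors with a back-pressure object threshold."""

    def __init__(self, object_threshold: int):
        self.queue: deque[str] = deque()
        self.object_threshold = object_threshold

    def back_pressure(self) -> bool:
        return len(self.queue) >= self.object_threshold


def run_tick(source: list[str], conn: Connection, sink: list[str], sink_rate: int) -> None:
    """One scheduling round: upstream runs only while the connection is below its threshold."""
    while source and not conn.back_pressure():
        conn.queue.append(source.pop(0))
    for _ in range(min(sink_rate, len(conn.queue))):
        sink.append(conn.queue.popleft())
```

With a slow destination (one FlowFile per tick here), the queue never grows past the threshold; the remaining data waits at the source instead of exhausting memory or disk, which is exactly why backpressure matters when feeding Hadoop.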

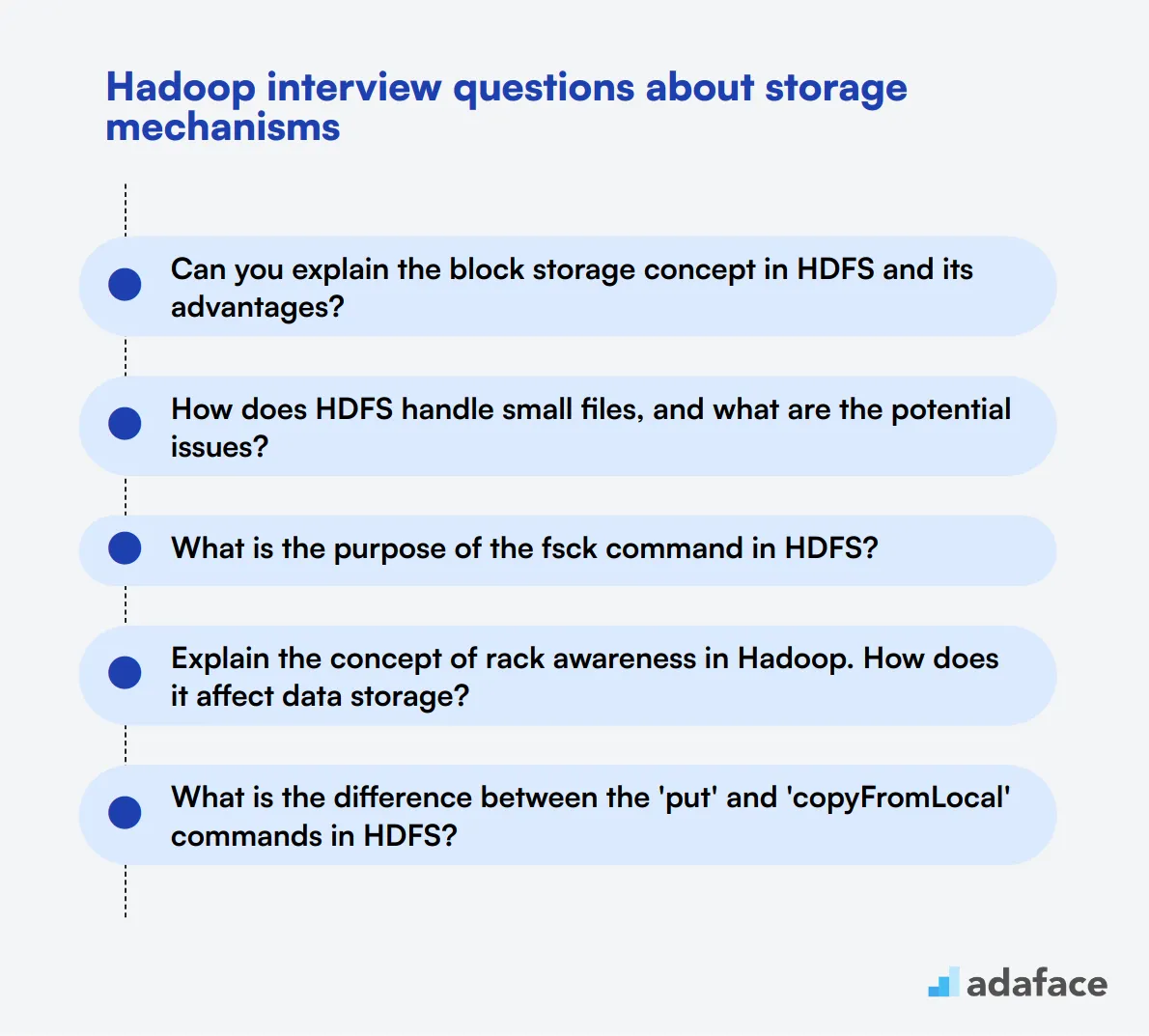

12 Hadoop interview questions about storage mechanisms

To assess a candidate's understanding of Hadoop's storage mechanisms, use these 12 interview questions. They cover key concepts and practical aspects of Hadoop's storage capabilities, helping you evaluate the applicant's expertise in managing and optimizing data storage within Hadoop ecosystems.

- Can you explain the block storage concept in HDFS and its advantages?

- How does HDFS handle small files, and what are the potential issues?

- What is the purpose of the fsck command in HDFS?

- Explain the concept of rack awareness in Hadoop. How does it affect data storage?

- What is the difference between the 'put' and 'copyFromLocal' commands in HDFS?

- How does Hadoop ensure data integrity in HDFS?

- What is the significance of the replication factor in HDFS?

- Can you describe the process of data balancing in HDFS?

- What are storage policies in HDFS, and how are they useful?

- How does HDFS handle append operations?

- What is the role of the edit log and fsimage in HDFS?

- Can you explain the concept of heterogeneous storage in Hadoop?

Which Hadoop skills should you evaluate during the interview phase?

Assessing a candidate's Hadoop skills in a single interview is a challenging task. While it's impossible to gauge every aspect of their expertise, focusing on core skills will help you make informed hiring decisions.

Hadoop Ecosystem Knowledge

You can use an assessment test that includes relevant MCQs to gauge a candidate's familiarity with the Hadoop ecosystem. For example, our Hadoop assessment contains questions specifically designed to evaluate this skill.

Additionally, targeted interview questions can help assess their knowledge further. One effective question to consider is:

Can you explain the differences between HDFS and traditional file systems?

When asking this question, look for candidates to mention aspects such as data replication, fault tolerance, and the ability to handle large files, which are fundamental to how HDFS operates compared to conventional file systems.

Data Processing Frameworks

To evaluate their knowledge in this area, consider using an assessment that features MCQs focused on data processing frameworks. Our Hadoop assessment includes relevant questions to help you filter candidates with strong skills.

You can also ask specific interview questions. A relevant question could be:

What are the advantages of using Spark over MapReduce?

When posed with this question, look for candidates to discuss Spark's speed, ease of use, and its ability to handle both batch and real-time processing, highlighting their understanding of why one framework may be preferred over another.

Data Storage Techniques

To assess this skill, you can use an assessment test featuring MCQs. The Hadoop assessment can provide insights into a candidate's familiarity with data storage mechanisms.

A targeted interview question could be:

How do you choose between different data formats like Avro and Parquet for a Hadoop job?

When asking this question, observe if candidates discuss factors such as schema evolution, compression, and read/write efficiency, which are important considerations in selecting the appropriate data format for specific use cases.
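
A quick way to frame the Avro versus Parquet trade-off is row-oriented versus column-oriented layout. The Python sketch below uses JSON as a stand-in encoding (both real formats are binary, with their own encodings and compression) to show how much data must be read to answer a query that touches a single column.

```python
import json

Row = dict[str, object]


def row_layout(rows: list[Row]) -> list[bytes]:
    """Row-oriented (Avro-like): each record is serialized as one unit."""
    return [json.dumps(r, sort_keys=True).encode() for r in rows]


def columnar_layout(rows: list[Row]) -> dict[str, bytes]:
    """Column-oriented (Parquet-like): all values of a column are stored together."""
    columns = list(rows[0]) if rows else []
    return {c: json.dumps([r[c] for r in rows]).encode() for c in columns}


def bytes_read_for_column(rows: list[Row], column: str) -> tuple[int, int]:
    """Bytes scanned to read one column: (row layout, columnar layout)."""
    row_bytes = sum(len(b) for b in row_layout(rows))  # every full record must be read
    col_bytes = len(columnar_layout(rows)[column])      # only that column's chunk is read
    return row_bytes, col_bytes
```

For a table with a wide `payload` column, reading only `country` touches a small fraction of the bytes in the columnar layout, and storing similar values side by side also compresses better. Avro's row layout, in turn, suits write-heavy pipelines and record-at-a-time processing, and its schema evolution support is a common reason to choose it.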

Hire the Best Hadoop Candidates with Adaface

If you are looking to hire someone with Hadoop skills, it's important to verify those skills accurately.

The best way to verify these skills is by using targeted skill tests. Check out our Hadoop online test and data engineer test.

Once you use these tests, you can shortlist the top applicants and proceed to interview them.

For your next steps, you can sign up here or explore our online assessment platform for more information.



Hadoop Interview Questions FAQs

Begin with questions about the candidate's understanding of Hadoop ecosystems, its components, and basic functionalities.

A junior data engineer should understand core Hadoop concepts, basic HDFS commands, and have some experience with MapReduce.

Ask questions about different processing frameworks like Hive, Pig, and Spark, and how they are used within the Hadoop ecosystem.

Inquire about the candidate's knowledge of HDFS, data replication, file formats, and how Hadoop handles large datasets.

Yes, familiarity with both MapReduce and Spark is beneficial as they are key to processing large datasets in Hadoop.

Start with general questions, then move to specific topics like processing frameworks and storage mechanisms, ending with problem-solving scenarios.
