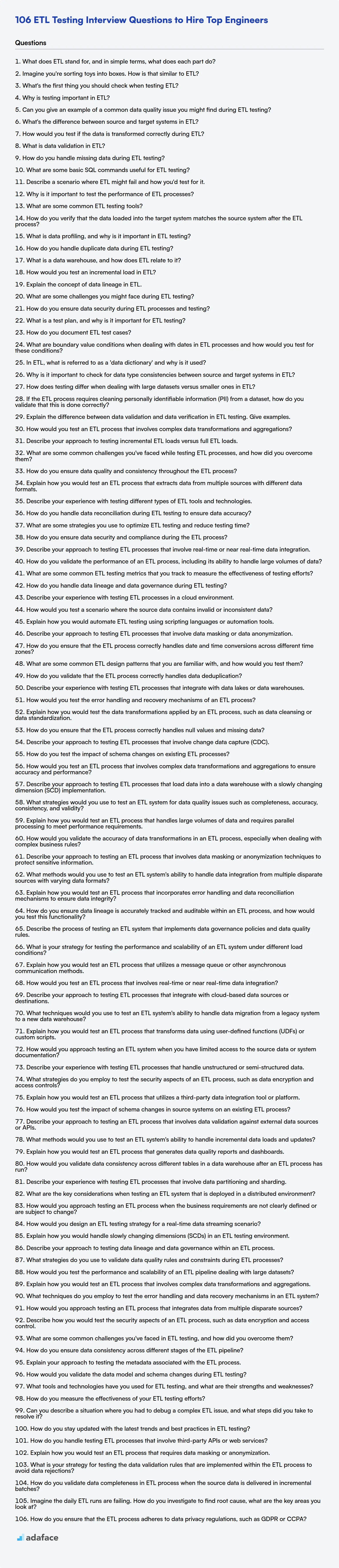

When evaluating ETL (Extract, Transform, Load) developers and testers, it helps to have a ready list of questions that separate strong candidates from weak ones. Probing candidates effectively matters as much to an interviewer as moving data reliably does to a data engineer.

This blog post provides a curated list of ETL testing interview questions, categorized by difficulty level. You'll find basic, intermediate, advanced, and expert-level questions, along with a set of multiple-choice questions to comprehensively assess a candidate's ETL testing expertise.

By using these questions, you can streamline your hiring process and identify top-tier ETL testing talent; to further enhance your evaluation, consider using Adaface's ETL online test before interviews.


Basic ETL Testing interview questions

1. What does ETL stand for, and in simple terms, what does each part do?

ETL stands for Extract, Transform, and Load. It's a process used in data warehousing to get data from different sources into a single, consistent data store.

- Extract: This is the process of reading data from various sources. These sources can be databases, flat files, APIs, etc. The data is often in different formats.

- Transform: This is where the data is cleaned, validated, and transformed into a consistent format. This might involve data type conversions, filtering, data enrichment, and applying business rules, for example converting date formats or resolving inconsistencies in address data.

- Load: This is the final step, where the transformed data is loaded into the target data warehouse or database. This needs to be done efficiently to avoid performance bottlenecks.
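The three stages can be sketched in a few lines of Python. This is a minimal illustration rather than a production pipeline: the CSV content, the `customers` table, and the function names are all assumptions made for the example, and an in-memory SQLite database stands in for the target warehouse.

```python
import csv
import io
import sqlite3
from datetime import datetime

# Hypothetical source: a CSV export with US-style dates.
SOURCE_CSV = "id,name,signup_date\n1,Ann,03/15/2024\n2,Bob,12/01/2023\n"

def extract(raw_csv):
    """Extract: read raw rows from the source."""
    return list(csv.DictReader(io.StringIO(raw_csv)))

def transform(rows):
    """Transform: convert types and normalize dates to YYYY-MM-DD."""
    out = []
    for row in rows:
        signup = datetime.strptime(row["signup_date"], "%m/%d/%Y")
        out.append((int(row["id"]), row["name"].strip(), signup.strftime("%Y-%m-%d")))
    return out

def load(conn, records):
    """Load: write the transformed records to the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers "
        "(id INTEGER PRIMARY KEY, name TEXT, signup_date TEXT)"
    )
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", records)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(conn, transform(extract(SOURCE_CSV)))
loaded = conn.execute(
    "SELECT id, name, signup_date FROM customers ORDER BY id"
).fetchall()
print(loaded)
```

Keeping each stage in its own function makes it possible to test extraction, transformation, and loading separately.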

2. Imagine you're sorting toys into boxes. How is that similar to ETL?

Sorting toys into boxes is a great analogy for ETL (Extract, Transform, Load). Just like ETL, you have a source (a pile of toys) that needs to be organized into a target (boxes).

- Extract: Picking up toys from the pile is like extracting data from various sources (databases, files, APIs).

- Transform: Cleaning the toys, categorizing them (e.g., by color, type), or deciding which box they belong to is similar to transforming data (cleaning, filtering, aggregating).

- Load: Placing the toys into the correct boxes is like loading the transformed data into a target data warehouse or database. Each box serves a purpose; it is possible to have boxes for different purposes, with different types of toys in each.

3. What's the first thing you should check when testing ETL?

The first thing to check when testing ETL is whether the ETL process completed successfully. Examine the logs for any errors, warnings, or exceptions. Verify the ETL tool's monitoring dashboard or status reports to confirm that all steps in the pipeline finished without failure.

Specifically, look for error messages related to data source connections, transformation logic, or data destination write operations. Pay close attention to the timestamps to identify when and where the issues occurred. If the ETL process failed, addressing the root cause is the top priority before proceeding with any data validation or quality checks.

4. Why is testing important in ETL?

Testing is crucial in ETL (Extract, Transform, Load) processes because these processes are complex and prone to errors. Data inaccuracies introduced during extraction, transformation, or loading can have serious consequences for downstream reporting, analytics, and decision-making. Thorough testing helps ensure data quality, reliability, and consistency.

Specifically, ETL testing verifies that transformations are performed correctly, that data is loaded accurately into the target system, and that the overall process performs as expected. This reduces data corruption, supports compliance, and lowers the chance of having to rerun the entire process from the source. Testing can take many forms, from data reconciliation to checks on data types, value ranges, and completeness.

5. Can you give an example of a common data quality issue you might find during ETL testing?

A common data quality issue in ETL testing is data type mismatch. For example, a field defined as an integer in the source system might be incorrectly loaded as a string in the target system.

Another example is incorrect data transformation. This can happen when applying a formula or function during ETL, such as converting a date format or calculating a derived value. A flaw in the conversion logic or a misunderstanding of the data's original format can lead to inaccurate results in the target system.

6. What's the difference between source and target systems in ETL?

In ETL (Extract, Transform, Load), the source system is the origin of the data. It's where the data resides before the ETL process begins. This could be anything from databases, flat files, APIs, or even cloud storage. The data in the source system is often in a raw or unprocessed format.

The target system, on the other hand, is the destination of the data after the ETL process. It's where the transformed and cleaned data is loaded for reporting, analysis, or other downstream purposes. Target systems are commonly data warehouses, data marts, or other analytical databases. The target system is optimized for querying and reporting.

7. How would you test if the data is transformed correctly during ETL?

To test if data is transformed correctly during ETL, I'd use a multi-faceted approach. First, I would perform data profiling on both the source and destination data to understand its characteristics (e.g., data types, distributions, null values). Then, I'd write SQL queries or use data comparison tools to validate data transformations, ensuring that data is being correctly mapped, cleaned, and aggregated, including checking for data completeness and accuracy.

Specifically, I would implement checks such as verifying that data types are as expected, calculations are accurate, and data integrity constraints are met. Some methods could include:

- Count verification: Ensure the number of records in the target matches the source after applying filters or aggregations.

- Data sampling: Compare a subset of records from the source to the target after the transformation.

- Schema validation: Verify that the target schema matches the expected schema after transformation.

- Business rule validation: Validate that the transformed data adheres to defined business rules.
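Two of these checks can be expressed directly as small functions. The sketch below assumes a hypothetical transformation that keeps only active orders and derives `total = unit_price * quantity`; the sample rows and field names are invented for illustration.

```python
# Hypothetical source rows and the rows an ETL job wrote to the target.
source = [
    {"order_id": 1, "status": "active", "unit_price": 10.0, "quantity": 3},
    {"order_id": 2, "status": "cancelled", "unit_price": 5.0, "quantity": 1},
    {"order_id": 3, "status": "active", "unit_price": 2.5, "quantity": 4},
]
target = [
    {"order_id": 1, "total": 30.0},
    {"order_id": 3, "total": 10.0},
]

def check_counts(source_rows, target_rows):
    """Count verification: target count must equal the filtered source count."""
    expected = sum(1 for r in source_rows if r["status"] == "active")
    return expected == len(target_rows)

def find_rule_violations(source_rows, target_rows):
    """Business rule validation: total must equal unit_price * quantity."""
    by_id = {r["order_id"]: r for r in source_rows}
    bad = []
    for row in target_rows:
        src = by_id.get(row["order_id"])
        if src is None or abs(row["total"] - src["unit_price"] * src["quantity"]) > 1e-9:
            bad.append(row["order_id"])
    return bad

print(check_counts(source, target))          # count check result
print(find_rule_violations(source, target))  # order_ids that break the rule
```

Recomputing the expected value from the source, rather than trusting the target, is what lets the check catch a faulty transformation.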

8. What is data validation in ETL?

Data validation in ETL (Extract, Transform, Load) is the process of ensuring data quality and accuracy as it moves from source systems to a target data warehouse or data lake. It involves verifying that the data conforms to defined rules, formats, and constraints. This helps to identify and handle errors, inconsistencies, or missing values before they impact downstream processes or analytics.

Common data validation techniques include:

- Data Type Validation: Checking if data adheres to the expected data type (e.g., integer, string, date).

- Range Validation: Verifying that data falls within acceptable value ranges.

- Format Validation: Ensuring data follows a specific format (e.g., email address, phone number).

- Constraint Validation: Checking data against predefined rules or business constraints (e.g., uniqueness, referential integrity).

- Completeness Validation: Identifying missing or null values.

- Consistency Validation: Ensuring data is consistent across multiple fields or records.
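Several of these techniques can be combined into a single record-level validator. The following is a sketch under assumed rules: the field names, the 0 to 120 age range, and the deliberately simple email pattern are illustrative choices, not a standard.

```python
import re
from datetime import datetime

EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")  # deliberately simple pattern

def validate_record(record):
    """Return a list of rule violations for one record (empty list means valid)."""
    errors = []
    # Completeness: required fields must be present and non-empty.
    for field in ("customer_id", "email", "age", "signup_date"):
        if record.get(field) in (None, ""):
            errors.append(f"{field}: missing")
    # Data type: customer_id must be an integer.
    if record.get("customer_id") is not None and not isinstance(record["customer_id"], int):
        errors.append("customer_id: not an integer")
    # Range: age must fall within an assumed plausible range.
    age = record.get("age")
    if isinstance(age, int) and not 0 <= age <= 120:
        errors.append("age: out of range")
    # Format: email must look like an address; date must be YYYY-MM-DD.
    email = record.get("email")
    if email and not EMAIL_RE.match(email):
        errors.append("email: bad format")
    signup = record.get("signup_date")
    if signup:
        try:
            datetime.strptime(signup, "%Y-%m-%d")
        except ValueError:
            errors.append("signup_date: bad format")
    return errors

good = {"customer_id": 1, "email": "a@example.com", "age": 30, "signup_date": "2024-01-31"}
bad = {"customer_id": "1", "email": "not-an-email", "age": 150, "signup_date": "31/01/2024"}
print(validate_record(good))
print(validate_record(bad))
```

Returning a list of violations instead of raising on the first one makes it easier to report every problem in a rejected record.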

9. How do you handle missing data during ETL testing?

When handling missing data during ETL testing, I focus on validating the ETL process's behavior regarding null or empty values. This involves checking if the ETL process correctly identifies, flags, or substitutes missing data based on predefined business rules. The actions taken depend on the specific requirements; for example, a default value might be applied, the record might be rejected, or the missing value might be propagated downstream.

Specifically, I would test scenarios such as ensuring numeric columns don't receive empty strings, date columns handle null dates appropriately, and required fields are validated. I would also verify that any data transformations involving missing data produce expected results. This may involve creating test cases with various missing data patterns and verifying the output using SQL queries or data comparison tools to confirm data integrity and consistency across the ETL pipeline.
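The three policies mentioned above (apply a default, reject the record, or propagate the missing value) can be modeled as per-column rules and then tested with crafted inputs. The rule table and column names below are hypothetical.

```python
# Hypothetical per-column rules for missing values.
RULES = {
    "customer_id": "reject",            # required field: reject the record
    "country": ("default", "UNKNOWN"),  # substitute a default value
    "middle_name": "propagate",         # keep as None downstream
}

def is_missing(value):
    """Treat None and blank strings as missing."""
    return value is None or (isinstance(value, str) and value.strip() == "")

def apply_missing_rules(rows):
    """Split rows into (loaded, rejected) according to RULES."""
    loaded, rejected = [], []
    for row in rows:
        clean = dict(row)
        reject = False
        for column, rule in RULES.items():
            if not is_missing(clean.get(column)):
                continue
            if rule == "reject":
                reject = True
            elif rule == "propagate":
                clean[column] = None
            else:  # ("default", value)
                clean[column] = rule[1]
        (rejected if reject else loaded).append(clean)
    return loaded, rejected

rows = [
    {"customer_id": 1, "country": "", "middle_name": None},
    {"customer_id": None, "country": "DE", "middle_name": "J"},
]
loaded, rejected = apply_missing_rules(rows)
print(loaded)
print(rejected)
```

A test suite would feed in one row per missing-data pattern and assert which bucket it lands in and what values it carries.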

10. What are some basic SQL commands useful for ETL testing?

Several basic SQL commands are useful for ETL testing. These commands help validate data transformations, data loading, and data quality.

Useful SQL commands include:

- `SELECT`: To query and inspect data in source, staging, and target tables to ensure data is transformed and loaded correctly.

- `COUNT`: To verify the number of records in tables after ETL processes.

- `SUM`, `AVG`, `MIN`, `MAX`: To validate aggregated data transformations.

- `WHERE`: To filter data based on specific conditions to ensure that only the right data is transformed or loaded.

- `JOIN`: To validate the data integrated from multiple sources.

- `INSERT`, `UPDATE`, `DELETE`: To test data insertion, updates, and deletion processes within the ETL pipeline.

- `CREATE TABLE`: To set up staging tables for testing purposes.

- `TRUNCATE TABLE`: To clear staging tables before a test run.

- `EXISTS` / `NOT EXISTS`: To check for the presence or absence of certain records based on specific criteria.

- `DISTINCT`: To identify unique values.

For example, to compare the count of records in a source table and a target table after an ETL process, you can use `SELECT COUNT(*) FROM source_table;` and `SELECT COUNT(*) FROM target_table;`.

Similarly, use `SELECT column1, column2 FROM table WHERE condition;` to check specific values.
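To show how such queries fit into an automated check, here is a small sketch that runs them from Python against an in-memory SQLite database. The table contents are made up, and the `scalar` helper is a convenience defined for this example only.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE source_table (id INTEGER, amount REAL);
    CREATE TABLE target_table (id INTEGER, amount REAL);
    INSERT INTO source_table VALUES (1, 10.0), (2, 20.0), (3, 30.0);
    INSERT INTO target_table VALUES (1, 10.0), (2, 20.0);
""")

def scalar(sql):
    """Run a query that returns a single value."""
    return conn.execute(sql).fetchone()[0]

source_count = scalar("SELECT COUNT(*) FROM source_table")
target_count = scalar("SELECT COUNT(*) FROM target_table")

# NOT EXISTS pinpoints which source rows never reached the target.
missing_ids = [row[0] for row in conn.execute("""
    SELECT s.id FROM source_table s
    WHERE NOT EXISTS (SELECT 1 FROM target_table t WHERE t.id = s.id)
""")]

print(source_count, target_count, missing_ids)
```

A count mismatch says that something is wrong; the `NOT EXISTS` query says which rows to investigate.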

11. Describe a scenario where ETL might fail and how you'd test for it.

An ETL process might fail during the data transformation stage if, for example, a source column expected to contain numerical data unexpectedly contains null values or strings. This could cause a data type conversion error and halt the ETL pipeline.

To test for this, I would implement data profiling and validation steps early in the ETL process. Specifically, I'd use automated checks to:

- Verify data types and formats.

- Check for missing values in required fields.

- Validate data against predefined rules (e.g., checking that dates are within a valid range).

- Implement error handling to capture and log any conversion failures, so the source data or the transformation logic can be investigated and corrected instead of letting the entire job fail.

When reading the data with pandas in Python, I would also wrap conversions in a try-except block:

```python
import pandas as pd

# Sample frame for illustration; the third value is not numeric.
df = pd.DataFrame({"string_column": ["1", "2", "abc"]})

try:
    # to_numeric raises ValueError on a value it cannot parse.
    df["numeric_column"] = pd.to_numeric(df["string_column"])
except ValueError as e:
    print(f"Error converting column: {e}")
```

12. Why is it important to test the performance of ETL processes?

Testing the performance of ETL processes is crucial for several reasons. Slow or inefficient ETL processes can become bottlenecks, delaying data availability for business intelligence, reporting, and other downstream applications. This can impact decision-making and overall business agility.

Specifically, performance testing helps identify: areas where optimization is needed (e.g., inefficient data transformations or slow database queries), potential scalability issues as data volumes grow, and whether the ETL process meets predefined service level agreements (SLAs) related to data loading times. By proactively addressing these issues, you can ensure data is delivered reliably and on time, maximizing its value to the organization. Performance issues can cause downstream processes to error out or run with stale data.

13. What are some common ETL testing tools?

Several tools are available for ETL testing, both open-source and commercial. Some common options include:

- QuerySurge: A dedicated data testing solution designed for automating the testing of data warehouses and big data implementations.

- Datagaps ETL Validator: A commercial tool specifically built for ETL testing, focusing on data quality and validation.

- Talend: While primarily an ETL tool, Talend also offers data quality features that can be used for testing.

- Informatica Data Validation Option (DVO): Part of the Informatica suite, DVO is designed for data validation and testing within ETL processes.

- Open source tools: Some general-purpose testing tools or scripting languages like Python with libraries like pandas can be used, often in conjunction with SQL for data validation.

14. How do you verify that the data loaded into the target system matches the source system after the ETL process?

Data verification after ETL involves several checks to ensure accuracy and completeness. Primarily, I would implement data reconciliation by comparing record counts between source and target tables. Additionally, I would perform data profiling and statistical analysis to compare summary statistics (e.g., min, max, average, standard deviation) of key fields. Data sampling and manual validation of randomly selected records are also crucial.

Beyond basic checks, I would use techniques such as checksums to verify data integrity. Running md5sum on text files, or comparing aggregated hashes after a complex transformation, can identify issues. For numerical data, I might sum specific columns in both systems and compare the results. Finally, automated data quality checks that run as part of the ETL pipeline, apply defined rules, and alert on anomalies can greatly enhance the verification process.
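The aggregated-hash idea can be sketched as follows. This example uses SHA-256 from Python's `hashlib`, though MD5 would work the same way for change detection. The row representation (values joined with `|`, `None` rendered as an empty string) is an assumption of this sketch; it cannot tell a null from an empty string, so a real check would need an explicit null marker.

```python
import hashlib

def row_hash(row):
    """Hash one row using a stable, delimiter-separated representation."""
    text = "|".join("" if v is None else str(v) for v in row)
    return hashlib.sha256(text.encode("utf-8")).hexdigest()

def table_fingerprint(rows):
    """Order-independent fingerprint: hash of the sorted row hashes."""
    digest = hashlib.sha256()
    for h in sorted(row_hash(r) for r in rows):
        digest.update(h.encode("ascii"))
    return digest.hexdigest()

source_rows = [(1, "Ann", 10.5), (2, "Bob", None)]
target_rows = [(2, "Bob", None), (1, "Ann", 10.5)]  # same data, different order
corrupted = [(1, "Ann", 10.5), (2, "Bob", 0.0)]     # one value changed

print(table_fingerprint(source_rows) == table_fingerprint(target_rows))
print(table_fingerprint(source_rows) == table_fingerprint(corrupted))
```

Sorting the row hashes before combining them keeps the fingerprint stable when source and target return rows in different orders.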

15. What is data profiling, and why is it important in ETL testing?

Data profiling is the process of examining data to collect statistics and informative summaries about it. It involves analyzing data sources to understand their structure, content, relationships, and quality. This can include identifying data types, value ranges, patterns, missing values, and potential data quality issues.

In ETL testing, data profiling is crucial because it helps testers understand the source data before designing test cases. This understanding ensures that test cases are comprehensive and effectively validate the ETL process. Specifically, it helps in:

- Identifying data quality issues early.

- Defining accurate test data.

- Validating data transformations and mappings.

- Ensuring data consistency and integrity after ETL.
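A basic column profile needs very little code. The sketch below is a simplified stand-in for what dedicated profiling tools report; the `profile_column` function and the sample `ages` column are invented for illustration.

```python
from collections import Counter

def profile_column(values):
    """Summarize one column: counts, nulls, distinct values, types, and range."""
    present = [v for v in values if v is not None]
    numeric = [v for v in present
               if isinstance(v, (int, float)) and not isinstance(v, bool)]
    return {
        "count": len(values),
        "nulls": len(values) - len(present),
        "distinct": len(set(present)),
        "types": dict(Counter(type(v).__name__ for v in present)),
        "min": min(numeric) if numeric else None,
        "max": max(numeric) if numeric else None,
    }

ages = [34, 27, None, 27, "unknown", 131]
profile = profile_column(ages)
print(profile)
```

Even this small profile surfaces three things worth a test case: a null, a string in a numeric column, and an implausible maximum.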

16. How do you handle duplicate data during ETL testing?

During ETL testing, handling duplicate data is crucial. I typically employ several strategies. First, I'd check the ETL mapping documents to understand how the ETL process is designed to handle duplicates. Then I'd perform data profiling on the source data to identify potential duplicate records before the ETL process. I would create test cases that specifically target duplicate data scenarios, ensuring the ETL process either removes the duplicates, updates existing records appropriately, or flags them as errors, based on the business requirements.

Validating duplicate handling often involves SQL queries on the target database to count distinct records and compare them with the source data. For example:

```sql
SELECT column1, column2, COUNT(*)
FROM target_table
GROUP BY column1, column2
HAVING COUNT(*) > 1;
```

This query helps identify duplicate combinations of column1 and column2. I also verify that any implemented deduplication mechanisms (e.g., using primary keys or merge/purge processes) work as expected.
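The same grouping logic can be applied to source rows in Python before the load runs, which is useful when profiling for duplicates up front. The `find_duplicate_keys` helper and the sample rows are hypothetical.

```python
from collections import Counter

def find_duplicate_keys(rows, key_columns):
    """Return {key: occurrences} for every key that appears more than once."""
    counts = Counter(tuple(row[c] for c in key_columns) for row in rows)
    return {key: n for key, n in counts.items() if n > 1}

source_rows = [
    {"column1": "A", "column2": 1, "amount": 10},
    {"column1": "A", "column2": 1, "amount": 12},
    {"column1": "B", "column2": 2, "amount": 7},
]
duplicates = find_duplicate_keys(source_rows, ["column1", "column2"])
print(duplicates)
```

Knowing which keys are duplicated in the source tells you what the target should contain once deduplication has run.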

17. What is a data warehouse, and how does ETL relate to it?

A data warehouse is a central repository for integrated data from one or more disparate sources. It's designed for analytical reporting and data analysis, storing historical data, which helps in making informed business decisions. The data in a data warehouse is usually transformed for analytical purposes. Data warehouses are structured for fast query and reporting using tools that are optimized for data retrieval and analysis.

ETL (Extract, Transform, Load) is the process of populating a data warehouse. Extract involves pulling data from various source systems. Transform cleanses, transforms, and integrates the extracted data into a consistent format suitable for the data warehouse. This might include data type conversions, data cleansing (handling missing values, duplicates), and data aggregation. Load moves the transformed data into the data warehouse. ETL ensures that the data in the warehouse is reliable, consistent, and ready for analysis. For example, a transformation step might involve converting all dates to a common YYYY-MM-DD format or calculating derived metrics like profit_margin = (revenue - cost) / revenue.

18. How would you test an incremental load in ETL?

To test an incremental load in ETL, focus on validating that only new or modified data is processed correctly in each run. This involves several key checks:

- Data completeness: Verify that all new or modified records from the source system are loaded into the target system. You'll want to write queries to compare row counts and identify any missing data, focusing specifically on records modified after the last successful load.

- Data accuracy: Ensure that the transformed data is accurate and consistent with the source data. This involves validating the transformation logic for the incremental data set. Pay close attention to how updates and deletes are handled.

- Performance: Check that the incremental load completes within the expected time frame. Monitor load times and resource utilization, especially as the volume of incremental data grows.

- Boundary conditions: Test the first-ever incremental load, runs where there are no changes, and late-arriving data.

- Data lineage: Verify that the ETL process updates the watermark or timestamp to indicate the last processed transaction.
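The watermark mechanism and a few of the boundary cases can be illustrated with a small in-memory model. This is a sketch under assumptions: the `updated_at` column, the dictionary used as the target, and the `incremental_load` function are all invented for the example.

```python
def incremental_load(source_rows, target, watermark):
    """Upsert rows modified after `watermark`; return the new watermark."""
    changed = [r for r in source_rows if r["updated_at"] > watermark]
    for row in changed:
        target[row["id"]] = row  # insert new ids, overwrite existing ones
    # With no changes the watermark must stay where it was.
    return max((r["updated_at"] for r in changed), default=watermark)

# ISO-8601 timestamps in the same format compare correctly as strings.
source = [
    {"id": 1, "name": "Ann", "updated_at": "2024-01-01T00:00:00"},
    {"id": 2, "name": "Bob", "updated_at": "2024-01-02T00:00:00"},
]
target = {}

wm = incremental_load(source, target, watermark="")   # first run loads everything
source[0] = {"id": 1, "name": "Anne", "updated_at": "2024-01-03T00:00:00"}
wm = incremental_load(source, target, wm)             # picks up only the update
wm_after_noop = incremental_load(source, target, wm)  # nothing changed
print(target[1]["name"], wm, wm_after_noop)
```

Note that a strict timestamp watermark like this one would skip a late-arriving row whose `updated_at` is older than the current watermark, which is exactly why that scenario deserves its own test case.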

19. Explain the concept of data lineage in ETL.

Data lineage in ETL (Extract, Transform, Load) refers to tracking the origin, movement, and transformation of data as it flows through the ETL pipeline. It essentially provides a complete history of a data element, showing where it came from, what changes it underwent, and where it ended up. This traceability is crucial for data quality, auditing, and debugging.

It helps answer questions like: Where did this data come from? How was it transformed? Who has access to it? Why does this field have a specific value? By understanding the data's journey, organizations can improve data governance, ensure compliance with regulations, and quickly identify the root cause of data-related issues. For instance, if a report shows incorrect data, lineage can trace the error back to the source system or transformation step where it occurred.

20. What are some challenges you might face during ETL testing?

ETL testing presents several challenges. Data quality issues are common, including missing, inconsistent, or inaccurate data, requiring thorough validation and cleansing processes. Handling large volumes of data can also be challenging, impacting performance and requiring optimized testing strategies such as sampling or data sub-setting.

Another challenge lies in validating complex transformations. Ensuring data is accurately transformed according to business rules can be difficult, particularly with intricate logic or multiple source systems. Maintaining data lineage and audit trails to track data flow and transformations throughout the ETL process is also crucial but can be technically complex.

21. How do you ensure data security during ETL processes and testing?

Data security during ETL processes and testing is paramount. I employ several strategies, including data masking or anonymization to replace sensitive data with realistic but non-identifiable substitutes. Access is strictly enforced through role-based access control (RBAC) to limit who can reach data and systems. Encryption protects data in transit (e.g., using TLS/SSL) and at rest (e.g., using an algorithm such as AES-256). We also conduct regular security audits and vulnerability scans to identify and address potential weaknesses.

For testing specifically, I advocate for using synthetic or subsetted production data. This minimizes the risk of exposing sensitive information while still allowing for thorough testing of ETL logic. Data validation and integrity checks are implemented throughout the ETL pipeline to detect and prevent data corruption or unauthorized modifications. All security measures are documented and regularly reviewed to ensure compliance with relevant regulations and best practices.

22. What is a test plan, and why is it important for ETL testing?

A test plan is a detailed document outlining the strategy, objectives, schedule, estimation, deliverables, and resources required for testing a software product. For ETL (Extract, Transform, Load) testing, it's crucial because ETL processes are complex, involving data extraction from various sources, transformation based on business rules, and loading into a data warehouse. A well-defined test plan ensures all aspects of the ETL process are thoroughly validated, minimizing data quality issues and ensuring data integrity in the target system.

Importance in ETL testing stems from the need to verify data accuracy, completeness, and consistency during the ETL process. The test plan helps in defining test cases to validate data transformations, identify data errors or inconsistencies, and confirm that the data warehouse contains accurate and reliable information. It also guides the testing team in setting up the test environment, defining test data, and executing test cases effectively.

23. How do you document ETL test cases?

ETL test cases are documented to ensure comprehensive testing and maintainability. A common approach is to use a spreadsheet or a dedicated test management tool like TestRail or Zephyr. Each test case typically includes:

- Test Case ID: A unique identifier.

- Test Case Name: A descriptive name of the test.

- Description: Details of what the test verifies.

- Pre-conditions: The required state before execution (e.g., source data availability).

- Input Data: The data used for the test, often with sample values. For example: `{"customer_id": 123, "name": "John Doe"}`.

- Steps: Detailed actions to perform.

- Expected Result: The predicted outcome. This is often specified with example values and data validations. For example: 'Target table `customers` should contain a row with `customer_id = 123` and `name = John Doe`.'

- Actual Result: The outcome after execution.

- Status: Pass/Fail.

- Tester: The person who executed the test.

- Date Executed: Timestamp of test execution.

- Notes: Any relevant information.

For more complex ETL jobs, a data validation library or framework such as Great Expectations or dbt tests might be relevant. These produce automated test reports from code.

24. What are boundary value conditions when dealing with dates in ETL processes and how would you test for these conditions?

Boundary value conditions when dealing with dates in ETL processes refer to testing the limits or edges of the date ranges that your system is designed to handle. These are the dates that are most likely to cause errors or unexpected behavior. For example, consider the earliest allowable date, the latest allowable date, the start and end of fiscal years, leap years, and null or missing dates. Testing these boundary conditions ensures data integrity and system stability.

To test these conditions, you would typically include test cases that specifically target these boundary values. For example, you might insert records with dates equal to the earliest and latest allowed dates, dates on either side of a fiscal year boundary, or dates in leap years (February 29th). You'd then verify that the ETL process handles these dates correctly, ensuring accurate data transformation and loading, and that no errors or data loss occur. Consider also invalid dates (e.g., February 30th) to ensure they are handled gracefully, perhaps by rejection or default value assignment.
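A boundary-value test table for dates might look like the sketch below. The allowed range (1900-01-01 through 2099-12-31) and the `classify_date` function are assumptions for illustration; the real limits come from the target system's column definitions.

```python
from datetime import date, datetime

# Assumed limits for the target system; adjust to the real column range.
MIN_DATE = date(1900, 1, 1)
MAX_DATE = date(2099, 12, 31)

def classify_date(text):
    """Return 'ok', 'missing', 'invalid', or 'out_of_range' for a YYYY-MM-DD string."""
    if text is None or text == "":
        return "missing"
    try:
        parsed = datetime.strptime(text, "%Y-%m-%d").date()
    except ValueError:
        return "invalid"
    if not MIN_DATE <= parsed <= MAX_DATE:
        return "out_of_range"
    return "ok"

cases = ["1900-01-01", "1899-12-31", "2099-12-31", "2100-01-01",
         "2024-02-29", "2023-02-29", "2024-02-30", None]
results = {case: classify_date(case) for case in cases}
for case, outcome in results.items():
    print(case, outcome)
```

Each boundary is tested on both sides: the last valid value and the first invalid one, plus a leap day in a leap year and in a non-leap year.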

25. In ETL, what is referred to as a 'data dictionary' and why is it used?

In ETL, a data dictionary is a centralized repository containing metadata about the data being processed. It defines attributes like data types, lengths, descriptions, sources, relationships, and business rules for each data element within the ETL pipeline.

The data dictionary serves several important purposes. It ensures data consistency and quality by providing a single source of truth for data definitions. It aids in data governance and compliance by documenting data lineage and usage. It simplifies ETL development and maintenance by providing a clear understanding of the data structure and relationships, improving collaboration among developers and analysts, reducing ambiguity, and streamlining debugging.

26. Why is it important to check for data type consistencies between source and target systems in ETL?

Data type inconsistencies between source and target systems in ETL processes can lead to several critical issues. Primarily, data loss or corruption can occur if the target system cannot accurately represent the data from the source (e.g., trying to load a string into an integer field, or a date that exceeds target range). This compromises data integrity and can skew analysis and reporting.

Secondly, inconsistencies can cause ETL process failures. The transformation pipeline might halt if it encounters incompatible data types, requiring manual intervention and delaying data availability. This can impact downstream processes that rely on the transformed data. Additionally, if a character column in the target has a smaller maximum length than the corresponding source column, values can be truncated or rejected, depending on the target system's settings. Furthermore, implicit type conversions may lead to unexpected behaviors and inaccurate results. Therefore, validating and harmonizing data types is crucial for a reliable and accurate ETL process.
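One way to catch these problems before a load is to compare column metadata from both systems and then check the actual values against the target's limits. The schemas below are hypothetical and written as plain dictionaries; in practice this metadata would come from each database's catalog or a data dictionary.

```python
# Hypothetical column metadata: name -> (type, max length or None).
source_schema = {"id": ("INTEGER", None), "name": ("VARCHAR", 100), "code": ("VARCHAR", 10)}
target_schema = {"id": ("INTEGER", None), "name": ("VARCHAR", 50), "code": ("CHAR", 10)}

def compare_schemas(source, target):
    """List columns whose type differs or whose target length is too small."""
    issues = []
    for column, (src_type, src_len) in source.items():
        if column not in target:
            issues.append((column, "missing in target"))
            continue
        tgt_type, tgt_len = target[column]
        if src_type != tgt_type:
            issues.append((column, f"type {src_type} -> {tgt_type}"))
        if src_len is not None and tgt_len is not None and tgt_len < src_len:
            issues.append((column, f"length {src_len} -> {tgt_len} may truncate"))
    return issues

def find_truncation_risks(values, max_length):
    """Return the actual values that would not fit in the target column."""
    return [v for v in values if v is not None and len(v) > max_length]

issues = compare_schemas(source_schema, target_schema)
print(issues)
print(find_truncation_risks(["abc", "x" * 60, None], max_length=50))
```

The schema comparison flags potential problems, while the value-level check shows whether any current data would actually be affected.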

27. How does testing differ when dealing with large datasets versus smaller ones in ETL?

Testing ETL processes with large datasets differs significantly from testing with smaller datasets primarily in terms of scale, performance, and data integrity. With large datasets, performance testing becomes crucial to identify bottlenecks in the ETL pipeline. This includes assessing processing time, memory usage, and disk I/O. Data validation also becomes more complex; instead of simply examining the transformed data manually, automated checks are needed to ensure data accuracy and completeness, often relying on checksums, data profiling, and statistical analysis to find anomalies.

Furthermore, error handling and fault tolerance are more critical with large datasets. A single error can halt the entire ETL process or corrupt a large portion of the data. Testing should focus on how the system recovers from failures, handles invalid data, and ensures data consistency across different stages of the pipeline. Specifically, `SELECT COUNT(*)` queries on source and target tables, alongside data profiling tools, play a bigger role in confirming data consistency and finding outliers or discrepancies. In short, large datasets call for automated, aggregate-level validation and dedicated performance benchmarking rather than manual inspection.

28. If the ETL process requires cleaning personally identifiable information (PII) from a dataset, how do you validate that this is done correctly?

Validating PII removal in an ETL process requires a multi-faceted approach. First, implement automated checks using data profiling tools and regular expressions to scan the output dataset for patterns resembling PII (e.g., email addresses, phone numbers, credit card numbers). These checks should flag any potential PII found. Critically, establish a clear definition of PII relevant to the dataset and the applicable regulations.

Second, conduct manual sampling and review of the cleaned data. A small, randomly selected subset of records should be inspected by a data privacy expert or trained personnel to confirm the absence of PII and to verify that any anonymization or pseudonymization techniques (if used) were applied correctly. Consider using synthetic data or data masking during development and testing to avoid exposing real PII in non-production environments.
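The automated scan can be sketched with a handful of regular expressions. The patterns below are simplified for illustration (a US-style phone format and unformatted 13 to 16 digit card numbers); real PII detection needs broader rules and will still produce false positives and negatives, which is why the manual review remains necessary.

```python
import re

# Simplified patterns for illustration only.
PII_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_phone": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
    "card_number": re.compile(r"\b\d{13,16}\b"),  # unformatted digits only
}

def scan_for_pii(rows):
    """Return (row_index, column, pattern_name) for every suspected PII hit."""
    hits = []
    for i, row in enumerate(rows):
        for column, value in row.items():
            if not isinstance(value, str):
                continue
            for name, pattern in PII_PATTERNS.items():
                if pattern.search(value):
                    hits.append((i, column, name))
    return hits

cleaned = [
    {"id": 1, "contact": "***MASKED***", "note": "called on Monday"},
    {"id": 2, "contact": "jane.doe@example.com", "note": "call 555-123-4567"},
]
hits = scan_for_pii(cleaned)
print(hits)
```

Scanning every string column, including free-text fields such as notes, matters because PII often leaks through fields that were never meant to hold it.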

Intermediate ETL Testing interview questions

1. Explain the difference between data validation and data verification in ETL testing. Give examples.

Data validation ensures data conforms to predefined rules and constraints, checking for completeness, format, and data type correctness. For example, validating that a 'Date of Birth' field contains a valid date in 'YYYY-MM-DD' format or ensuring that a 'Product ID' is not null. Data verification, on the other hand, checks if the data is accurate and consistent with the source data or business requirements after transformations. An example is verifying that the sum of sales in a target table matches the sum of sales in the source table, or that a calculated 'Total Price' field is correctly derived from 'Unit Price' and 'Quantity'.

2. How would you test an ETL process that involves complex data transformations and aggregations?

Testing an ETL process with complex transformations and aggregations requires a multifaceted approach. I'd start with validating the source data to ensure its quality and consistency. Then, I'd focus on unit testing individual transformation steps using sample data and known outputs. This involves writing SQL queries or code snippets to verify each transformation logic (e.g., aggregation, filtering, data type conversions). Edge cases and boundary conditions should be given high priority.

Integration testing is critical to confirm that the entire ETL pipeline works as expected. This includes verifying data lineage, data completeness, and the accuracy of the aggregated results in the target data warehouse or data lake. I would also implement data quality checks at various stages of the pipeline to identify data inconsistencies or anomalies early on. Performance testing, which includes measuring the ETL process execution time with large datasets and resource utilization, is vital. Finally, user acceptance testing (UAT) can involve stakeholders validating that the data in the target system matches expectations. For example, an aggregation step can be unit tested in Python with a pandas DataFrame:

```python
import pandas as pd

def test_aggregation(input_df, expected_output):
    # Apply the aggregation under test: total value per category.
    actual_output = input_df.groupby('category')['value'].sum()
    # Raises AssertionError if the values, index, or dtype differ.
    pd.testing.assert_series_equal(actual_output, expected_output)
```

3. Describe your approach to testing incremental ETL loads versus full ETL loads.

Testing incremental ETL loads differs significantly from testing full loads. For incremental loads, the focus is on ensuring that only the new or updated data is processed correctly and that the existing data remains unchanged and consistent. This involves verifying data lineage and transformation logic for the delta data, as well as ensuring that the incremental updates are applied correctly to the existing data in the target system.

For full ETL loads, the entire dataset is processed and reloaded into the target system. Testing a full load requires verifying that the complete dataset is loaded correctly, including data accuracy, completeness, and consistency. It also involves performance testing to ensure that the full load completes within acceptable timeframes. Comparison of data in the source and target systems is critical in this scenario. In addition, tests can be written to ensure that there are no duplicate records and that all constraints are applied correctly.

4. What are some common challenges you've faced while testing ETL processes, and how did you overcome them?

Some common challenges I've faced while testing ETL processes include data quality issues, performance bottlenecks, and complex transformation logic. To overcome data quality issues, I've implemented data profiling and validation rules to identify and flag inconsistencies or errors in the source data. I've also worked with data owners to improve data quality at the source. For performance bottlenecks, I've used query optimization techniques, such as indexing and partitioning, and monitored resource utilization to identify areas for improvement.

Testing complex transformations often involves breaking the process into smaller, manageable units and writing detailed test cases for each transformation. I've also used data comparison tools to verify that the transformed data matches the expected output. When debugging or trying to understand a transformation, I use SQL or Python (depending on the ETL tooling) to extract the transformed data and check it manually, which helps confirm or rule out hypotheses about specific transformation steps.

5. How do you ensure data quality and consistency throughout the ETL process?

Ensuring data quality and consistency in ETL involves several strategies applied throughout the process. At the source, data profiling helps understand data characteristics and identify potential issues. During transformation, data cleansing, validation, and standardization rules are implemented. Data validation includes checks for data types, range, and uniqueness. Consistency is maintained by using standardized codes and mappings, and handling missing data appropriately.

After loading, data reconciliation compares source and target data to verify completeness and accuracy. Regular monitoring and auditing track data quality metrics and identify anomalies. For example, you can use SQL queries to validate data transformations, such as verifying that a calculated field's total matches the sum of its components by comparing the two sums: `SELECT SUM(total_field), SUM(field_a + field_b) FROM target_table;` (here `total_field` stands in for the calculated column).

6. Explain how you would test an ETL process that extracts data from multiple sources with different data formats.

Testing an ETL process involving multiple data sources with different formats requires a multi-faceted approach. I would start with data validation, focusing on schema validation, data type checks, and ensuring data completeness for each source. This can involve querying the source databases directly to verify the data before and after the ETL process.

Next, I'd implement transformation testing to confirm that data is correctly transformed and mapped according to the defined rules. This includes testing data cleansing, data enrichment, and data aggregation. Data quality testing is crucial, looking for duplicate records, null values, and adherence to business rules. Finally, I would perform performance testing to evaluate the ETL process's speed and scalability, particularly when handling large datasets. For example, to validate a date column, I'd use a regular expression to check whether each value adheres to the required format. This also involves profiling the data and looking for anomalies.
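
The date check mentioned above can be sketched as follows, assuming the required format is ISO `YYYY-MM-DD`. The function names are illustrative. A regular expression alone only checks the shape of the value, so this sketch also parses the value to reject impossible dates such as February 30:

```python
import re
from datetime import datetime

ISO_DATE = re.compile(r"\d{4}-\d{2}-\d{2}")

def is_valid_iso_date(value):
    """True if value is a string shaped like YYYY-MM-DD and is a real calendar date."""
    if not isinstance(value, str) or not ISO_DATE.fullmatch(value):
        return False
    try:
        datetime.strptime(value, "%Y-%m-%d")
    except ValueError:
        return False
    return True

def find_invalid_dates(values):
    """Return the values that fail the format or calendar check, in input order."""
    return [value for value in values if not is_valid_iso_date(value)]

sample = ["2023-01-15", "15/01/2023", "2023-02-30", None]
invalid = find_invalid_dates(sample)
```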

7. Describe your experience with testing different types of ETL tools and technologies.

My experience with ETL testing includes working with a variety of tools and technologies. I've tested commercial ETL platforms like Informatica PowerCenter and Talend, focusing on data validation, transformation accuracy, and performance. I also have experience testing open-source solutions like Apache NiFi and Pentaho Data Integration, where the emphasis was on custom scripting and ensuring data integrity through complex transformations. These tests often involved validating data against source systems, verifying data quality rules, and assessing overall ETL pipeline performance.

Specifically, my testing approach has involved using SQL queries to compare data between source and target systems, employing data profiling tools to identify data quality issues early in the process, and using scripting languages like Python to automate test cases and generate test data. I also have some experience writing unit tests for custom transformations and utilizing data comparison tools to highlight discrepancies and ensure data consistency. I have worked with different types of databases such as Oracle, MySQL, PostgreSQL, and Snowflake, and have tested different data formats like CSV, JSON, and XML.

8. How do you handle data reconciliation during ETL testing to ensure data accuracy?

Data reconciliation during ETL testing involves comparing the source data with the transformed and loaded data in the target system. This ensures data accuracy and completeness. Key techniques include: validating record counts between source and target tables, comparing checksums or hash values of columns, and performing data profiling to identify anomalies, null values, or inconsistencies. Direct data comparison using SQL queries, data comparison tools, or scripting is also crucial.

Specifically, I would:

- Identify key fields: Pinpoint fields critical for data accuracy.

- Implement automated scripts: Use scripts to compare data sets.

- Conduct row-level comparisons: Examine individual records for discrepancies.

- Perform aggregate comparisons: Verify the correctness of calculated fields.
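
The reconciliation steps above could be automated along these lines. This is a sketch under stated assumptions: both tables are reachable through one SQLite connection, and the table names (`src`, `tgt`), the `reconcile` helper, and its report keys are hypothetical:

```python
import hashlib
import sqlite3

def load_rows(conn, table, key_column, columns):
    """Return {key: (col1, col2, ...)} for the requested columns."""
    col_list = ", ".join([key_column] + columns)
    # Identifiers come from trusted test configuration, not user input.
    return {row[0]: row[1:] for row in conn.execute(f"SELECT {col_list} FROM {table}")}

def checksum(rows):
    """Order-independent checksum over all keys and values."""
    digest = hashlib.sha256()
    for key in sorted(rows):
        digest.update(repr((key, rows[key])).encode("utf-8"))
    return digest.hexdigest()

def reconcile(conn, source, target, key_column, columns):
    src = load_rows(conn, source, key_column, columns)
    tgt = load_rows(conn, target, key_column, columns)
    return {
        "source_count": len(src),
        "target_count": len(tgt),
        "checksums_match": checksum(src) == checksum(tgt),
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "unexpected_in_target": sorted(tgt.keys() - src.keys()),
        "mismatched": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE src (id INTEGER PRIMARY KEY, name TEXT, amount REAL);
CREATE TABLE tgt (id INTEGER PRIMARY KEY, name TEXT, amount REAL);
INSERT INTO src VALUES (1, 'a', 10.0), (2, 'b', 20.0), (3, 'c', 30.0);
INSERT INTO tgt VALUES (1, 'a', 10.0), (2, 'b', 25.0);
""")
report = reconcile(conn, "src", "tgt", "id", ["name", "amount"])
```

Counts and checksums are cheap first-pass signals; the row-level lists are what a tester would use to investigate once either of them disagrees.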

9. What are some strategies you use to optimize ETL testing and reduce testing time?

To optimize ETL testing and reduce testing time, I focus on several key strategies. First, I prioritize test cases based on risk and business impact, executing high-priority tests early and often. Data profiling and source data analysis are crucial upfront to understand data characteristics and identify potential issues before they propagate through the ETL pipeline. This allows for creating targeted test cases. Furthermore, I use data sampling techniques to reduce the volume of data processed during testing while still maintaining sufficient coverage. Automating test case generation and execution is also paramount, using frameworks and tools to validate data transformations, data quality, and data loading. This includes leveraging data comparison tools to efficiently identify discrepancies between source and target systems.

Another important aspect is optimizing the testing environment. This includes using representative test data sets that mimic production data, and ensuring the environment has adequate resources for testing. Also, I practice continuous testing and integration by integrating ETL tests into the CI/CD pipeline, facilitating early detection of issues, faster feedback loops, and quicker turnaround times. Finally, monitoring and analyzing test results is essential to identify bottlenecks or recurring issues, enabling targeted optimization and improvement of the ETL process.

10. How do you ensure data security and compliance during the ETL process?

Ensuring data security and compliance during the ETL process involves several measures. First, data encryption both in transit and at rest is crucial. We encrypt sensitive data fields before extraction, transmit them securely using protocols like HTTPS or TLS, and store them encrypted in the data warehouse or data lake. Access controls are also implemented to restrict data access based on roles and responsibilities.

Second, we adhere to compliance regulations such as GDPR, HIPAA, or CCPA by implementing data masking and anonymization techniques. Data auditing is performed to track data lineage and identify any unauthorized access or modification. Regular security assessments and penetration testing are conducted to identify and address vulnerabilities in the ETL pipeline. Code reviews help spot potential security flaws early on. Finally, we use tools for data loss prevention (DLP) to prevent sensitive data from leaving the organization's control.

11. Describe your approach to testing ETL processes that involve real-time or near real-time data integration.

Testing real-time or near real-time ETL processes requires a multi-faceted approach. First, I'd focus on validating data ingestion, transformation, and loading. This includes verifying data accuracy, completeness, and consistency as it flows through the pipeline. Tools and techniques like data profiling, schema validation, and data reconciliation between source and target systems are essential. I would simulate various data volumes and velocities, including peak load scenarios, to assess performance and identify potential bottlenecks.

Beyond functional testing, monitoring is crucial. Implementing real-time dashboards and alerts to track data latency, error rates, and system health allows for proactive identification and resolution of issues. I'd also incorporate automated regression tests to ensure that new code changes don't negatively impact the existing ETL process. Synthetic data generation helps to create specific test cases in near real time without waiting for production data to arrive.

12. How do you validate the performance of an ETL process, including its ability to handle large volumes of data?

To validate ETL performance, I'd focus on measuring key metrics like data throughput, latency, and resource utilization. Specifically, I would:

- Profile the ETL pipeline: Identify bottlenecks by monitoring CPU, memory, and I/O usage at each stage. Tools like `perf` (Linux) or profiling features in the ETL tool itself (e.g., Spark UI for Spark jobs) can be invaluable.

- Load test with varying data volumes: Start with a representative dataset and gradually increase its size to observe how performance scales. Track execution time, error rates, and resource consumption. Use synthetic data generation tools if necessary to create large datasets that mimic production data characteristics.

- Implement data quality checks: Verify data accuracy and completeness throughout the ETL process to ensure performance gains aren't achieved at the expense of data integrity. Track data quality metrics to identify potential issues, logging anomalies and errors during the validation process.

- Automate testing: Create automated scripts and workflows to execute performance tests regularly. Integrate these tests into the CI/CD pipeline to catch performance regressions early. Use assertion frameworks to validate the results of the ETL process. For example, in Python: `assert actual_row_count == expected_row_count`

13. What are some common ETL testing metrics that you track to measure the effectiveness of testing efforts?

Common ETL testing metrics focus on data quality, performance, and test coverage. Examples include:

- Data accuracy rate: Percentage of data that is accurate after ETL processes.

- Data completeness rate: Percentage of expected values that are populated (that is, not missing or null).

- Data duplication rate: Percentage of duplicate records.

- ETL processing time: Time taken for the ETL process to complete. High ETL times indicate performance bottlenecks.

- Error count: Number of errors or failures during ETL processes. Monitoring this helps identify potential issues.

- Test coverage: Percentage of data transformations covered by test cases. High test coverage minimizes the risk of defects.
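
As a rough illustration, two of these metrics can be computed directly from loaded rows. The sketch below assumes rows are plain dictionaries and that "completeness" means the share of required fields that are populated; the function names and sample data are hypothetical:

```python
def completeness_rate(rows, required_fields):
    """Percentage of required field values that are populated (not None or empty)."""
    total = len(rows) * len(required_fields)
    if total == 0:
        return 100.0
    populated = sum(
        1 for row in rows for field in required_fields
        if row.get(field) not in (None, "")
    )
    return 100.0 * populated / total

def duplication_rate(rows, key_fields):
    """Percentage of rows that repeat an already-seen key."""
    if not rows:
        return 0.0
    keys = [tuple(row.get(field) for field in key_fields) for row in rows]
    duplicates = len(keys) - len(set(keys))
    return 100.0 * duplicates / len(keys)

rows = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": None},             # missing email
    {"id": 2, "email": "b@example.com"},  # repeated id
    {"id": 3, "email": "c@example.com"},
]
completeness = completeness_rate(rows, ["id", "email"])
duplication = duplication_rate(rows, ["id"])
```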

14. How do you handle data lineage and data governance during ETL testing?

During ETL testing, data lineage is verified by tracing data flow from source to target, ensuring transformations are accurately applied and data integrity is maintained. This involves examining ETL logs, data dictionaries, and transformation mappings to confirm that data origins and modifications are correctly documented. Testing includes comparing source data to transformed data to validate the applied rules.

Data governance is addressed by verifying that ETL processes adhere to defined data quality standards, compliance requirements, and security policies. This encompasses validating data masking or anonymization techniques (if applicable), confirming proper data validation rules are in place, and ensuring access controls are appropriately configured. Test cases should specifically address these governance aspects to ensure data security and compliance.

15. Describe your experience with testing ETL processes in a cloud environment.

In my previous role, I extensively tested ETL processes within AWS, primarily using services like AWS Glue, S3, and Redshift. My testing strategy focused on validating data accuracy, completeness, and consistency throughout the entire pipeline. This involved writing SQL queries to compare source and target data, verifying data transformations, and ensuring data integrity constraints were enforced. I also used Python scripts with libraries like boto3 and pandas to automate data validation and perform data profiling.

Specifically, I focused on testing the performance and scalability of ETL jobs, identifying bottlenecks, and suggesting optimizations. I used tools such as CloudWatch to monitor resource utilization and identify potential issues. Furthermore, I implemented automated testing using frameworks like pytest to support continuous integration and delivery of the ETL pipelines. I also have experience testing delta-load and full-load implementations.

16. How would you test a scenario where the source data contains invalid or inconsistent data?

When testing scenarios with invalid or inconsistent source data, I would focus on ensuring the system handles these errors gracefully and provides informative feedback. This involves designing test cases that cover a range of invalid data types, formats, and values, as well as inconsistent relationships between data points.

Specifically, I'd create test data including:

- Invalid data types: e.g., inserting a string where a number is expected.

- Out-of-range values: e.g., negative values for a quantity that should be positive.

- Missing values: Ensuring that required fields are validated and handled appropriately.

- Inconsistent relationships: e.g., conflicting information across related tables or fields. The system should either reject such invalid data or transform it according to predefined rules.

- Boundary testing: Testing values near limits to check for off-by-one errors.

I'd verify that appropriate error messages are logged, alerts are raised, or default values are applied, depending on the expected behavior. The goal is to prevent data corruption and maintain system stability even when faced with flawed input. I'd also check that the error messages carry enough detail to debug the underlying problem.
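
A small sketch of how such test data might be exercised, assuming a hypothetical order record with a required `order_id` and a positive integer `quantity`. The rules and function names are illustrative, not taken from a specific system:

```python
def validate_record(record):
    """Return a list of human-readable errors; an empty list means the record is valid."""
    errors = []
    for field in ("order_id", "quantity"):
        if record.get(field) is None:
            errors.append(f"{field}: missing required value")
    quantity = record.get("quantity")
    if quantity is not None:
        # bool is a subclass of int in Python, so exclude it explicitly
        if isinstance(quantity, bool) or not isinstance(quantity, int):
            errors.append("quantity: expected an integer")
        elif quantity <= 0:
            errors.append("quantity: must be positive")
    return errors

def split_valid_and_rejected(records):
    """Route each record to the valid list or to a rejected list with its errors."""
    valid, rejected = [], []
    for record in records:
        errors = validate_record(record)
        if errors:
            rejected.append({"record": record, "errors": errors})
        else:
            valid.append(record)
    return valid, rejected

records = [
    {"order_id": 1, "quantity": 5},
    {"order_id": 2, "quantity": "five"},  # invalid data type
    {"order_id": 3, "quantity": -1},      # out-of-range value
    {"order_id": None, "quantity": 1},    # missing required value
    {"order_id": 4, "quantity": 1},       # boundary: smallest valid quantity
    {"order_id": 5, "quantity": 0},       # boundary: just below the valid range
]
valid, rejected = split_valid_and_rejected(records)
```

Keeping the error text alongside each rejected record makes it possible to assert on the message itself, which is how the "informative feedback" requirement can be tested.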

17. Explain how you would automate ETL testing using scripting languages or automation tools.

To automate ETL testing, I'd use a scripting language like Python with libraries such as pandas and PyTest. The process would involve extracting data from source systems, transforming it using pre-defined ETL logic (implemented potentially in SQL or stored procedures), and loading it into the target data warehouse or data lake.

My testing script would then validate the loaded data against the source, checking for data completeness (no missing rows), data accuracy (correct transformations), data consistency (no data type mismatches), and data quality (nulls, duplicates). Furthermore, I would automate the execution of SQL queries against the target database to confirm expected data aggregations and calculations, and raise alerts if the tests fail or thresholds are breached. I can also integrate data profiling tools to detect data anomalies early.

18. Describe your approach to testing ETL processes that involve data masking or data anonymization.

When testing ETL processes involving data masking or anonymization, my approach focuses on verifying both the effectiveness of the masking and the integrity of the data. I would first validate the masking/anonymization techniques by creating test data containing sensitive information and then running the ETL process. After the ETL process, I would analyze the output data to confirm that the sensitive information has been properly masked or anonymized according to the defined rules. This involves checking for patterns, identifiable information, or the possibility of reverse engineering the masked data.

Secondly, data integrity is crucial. I would compare aggregated statistics and key data points from the original dataset and the masked/anonymized dataset to ensure that the transformations haven't introduced significant data drift or inaccuracies. For instance, verifying the row count, distribution of key fields, and running data quality checks. This includes verifying that the data types are consistent and that no unexpected null values have been introduced.
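
The two checks above (masking effectiveness and data integrity) might look like this in a test. `mask_email` is a hypothetical stand-in for the masking step under test, here a salted SHA-256 hash, and the email pattern is deliberately loose:

```python
import hashlib
import re

EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")

def mask_email(email, salt="test-salt"):
    """Hypothetical masking rule: deterministic salted hash, truncated for readability."""
    digest = hashlib.sha256((salt + email).encode("utf-8")).hexdigest()
    return f"user_{digest[:12]}"

source = [
    {"id": 1, "email": "ann@example.com"},
    {"id": 2, "email": "bob@example.com"},
    {"id": 3, "email": "ann@example.com"},
]
masked = [{**row, "email": mask_email(row["email"])} for row in source]

# Effectiveness: nothing that still looks like an email, and no original value survives
leaked = [row for row in masked if EMAIL_PATTERN.search(row["email"])]
original_values = {row["email"] for row in source}
survivors = [row for row in masked if row["email"] in original_values]

# Integrity: row count and number of distinct values are preserved
distinct_before = len({row["email"] for row in source})
distinct_after = len({row["email"] for row in masked})
```

Because the hypothetical rule is deterministic, equal inputs map to equal outputs, so joins and distinct counts on the masked column still behave as they did on the original.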

19. How do you ensure that the ETL process correctly handles date and time conversions across different time zones?

To ensure correct date and time conversions in ETL across time zones, I'd first standardize all dates and times to UTC as early as possible in the process. This eliminates ambiguity. Then, I'd store the original time zone information if needed for reporting or analysis. Finally, at the reporting or destination stage, I'd convert the UTC time to the appropriate local time zone based on user preferences or system requirements.

This can be achieved using libraries or functions specific to the ETL tool or programming language. For example, in Python you can use `pytz` (or the standard-library `zoneinfo` module in Python 3.9+), and in Spark you can use `from_utc_timestamp()` and `to_utc_timestamp()`. Testing with a variety of time zones and edge cases, such as daylight saving time transitions, is also critical to ensure data accuracy.
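
A minimal sketch of the normalize-to-UTC approach, using Python's standard-library `zoneinfo` module (Python 3.9+) rather than `pytz`. The helper names `to_utc` and `from_utc` are illustrative, and the sketch assumes source timestamps arrive as naive local times with a known time zone name:

```python
from datetime import datetime, timezone
from zoneinfo import ZoneInfo

def to_utc(local_naive, tz_name):
    """Interpret a naive timestamp in the given zone and convert it to UTC."""
    return local_naive.replace(tzinfo=ZoneInfo(tz_name)).astimezone(timezone.utc)

def from_utc(utc_dt, tz_name):
    """Convert an aware UTC timestamp to the given local zone for reporting."""
    return utc_dt.astimezone(ZoneInfo(tz_name))

# Same wall-clock time in winter and summer: the UTC offset differs because of DST
winter = to_utc(datetime(2024, 1, 15, 12, 0), "America/New_York")
summer = to_utc(datetime(2024, 7, 15, 12, 0), "America/New_York")

# A zone with a half-hour offset makes a useful edge case
kolkata = to_utc(datetime(2024, 1, 15, 12, 0), "Asia/Kolkata")

# Round trip back to local time should recover the original wall-clock value
round_trip = from_utc(winter, "America/New_York").replace(tzinfo=None)
```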

20. What are some common ETL design patterns that you are familiar with, and how would you test them?

Some common ETL design patterns include: Full Load, Incremental Load, Change Data Capture (CDC), and Data Vault. Full Load is the simplest, truncating the target and reloading all data. Incremental Load only loads new or updated data based on timestamps or sequence numbers. CDC captures changes at the source (using triggers, transaction logs, etc.) and applies them to the target. Data Vault is a modeling approach focused on auditing and history.

To test these, I'd use a combination of techniques. For all patterns, I'd check data completeness (row counts, column counts), data accuracy (sampling data and comparing to source), data consistency (relationships between tables), and data timeliness (how long the ETL process takes). For incremental loads and CDC, I'd also test scenarios with different types of changes (inserts, updates, deletes) and ensure that data is correctly propagated to the target system. Testing also includes verifying the ETL job's performance and resource utilization, including error handling and logging capabilities.

Specific test examples:

- Full Load: Verify that the target table is truncated before loading.

- Incremental Load: Insert new rows in the source, run the ETL, and verify that only the new rows are added to the target.

- CDC: Update a row in the source, run the ETL, and check if the updated row is reflected in the target. Delete a row and confirm the deletion is propagated.

- Boundary testing with null values, special characters, and large data sets.

21. How do you validate that the ETL process correctly handles data deduplication?

To validate data deduplication in an ETL process, I'd implement several strategies. First, I'd establish a clear definition of what constitutes a 'duplicate' record based on the business requirements (e.g., matching on specific fields or a combination). Then, I'd create test datasets containing known duplicates, near duplicates, and unique records. The ETL process would then be run against these datasets, and the output would be analyzed to ensure that only unique records are present.

Specifically, I would:

- Count Records: Compare the record count before and after the deduplication process. The difference should match the number of duplicates in the test dataset.

- Data Profiling: Profile the output data to check for unexpected variations in the data.

- Data Comparison: Compare a sample set of records before and after deduplication. Use SQL queries or data comparison tools like `diff` to verify that the process correctly identifies and removes duplicates while preserving the integrity of the remaining data. For example, `SELECT COUNT(*) FROM table WHERE field1 = 'value1' AND field2 = 'value2';` would be run on both the pre- and post-deduplication tables. We would also check edge cases such as records with `NULL` values in the deduplication fields.
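
The record-count check can be sketched with a seeded test dataset. `deduplicate` below is a hypothetical stand-in for the ETL step under test (exact match on the key fields, keeping the first occurrence), and the sample rows are invented:

```python
def deduplicate(records, key_fields):
    """Hypothetical dedup step: keep the first record seen for each exact key."""
    seen = set()
    unique = []
    for record in records:
        key = tuple(record.get(field) for field in key_fields)
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique

test_data = [
    {"email": "a@example.com", "name": "Ann"},
    {"email": "a@example.com", "name": "Ann"},  # exact duplicate
    {"email": "A@example.com", "name": "Ann"},  # near duplicate (case differs)
    {"email": None, "name": "Bob"},
    {"email": None, "name": "Bob"},             # duplicate with a null key field
]
known_duplicates = 2  # seeded on purpose, so the expected drop is known in advance

output = deduplicate(test_data, key_fields=("email", "name"))
removed = len(test_data) - len(output)
```

Note that Python treats `None == None` as true, whereas SQL `NULL = NULL` is not true, so the null-key case is exactly where a Python test harness and a SQL-based dedup step can disagree. The near-duplicate row survives here because the stand-in matches exactly; whether it should is a business-rule decision.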

22. Describe your experience with testing ETL processes that integrate with data lakes or data warehouses.

I have experience testing ETL processes that integrate with both data lakes and data warehouses. My testing strategy typically involves validating data at various stages of the ETL pipeline. This includes source data validation (ensuring data quality and completeness before ingestion), transformation validation (verifying that data transformations are applied correctly according to business rules), and target data validation (comparing the data in the data lake or data warehouse with the source data, confirming accurate loading and aggregation).

Specifically, I've used tools like SQL for querying and comparing data, Python with libraries like Pandas for data profiling and validation, and data comparison tools to identify discrepancies between source and target systems. I also have experience writing test cases to cover various scenarios, including data type validation, null value handling, duplicate record detection, and performance testing to ensure the ETL process meets specified SLAs.

23. How would you test the error handling and recovery mechanisms of an ETL process?

To test error handling and recovery in an ETL process, I'd focus on injecting various types of errors at different stages and verifying that the process responds as expected. This involves:

- Data Errors: Introduce invalid data (incorrect data types, missing values, out-of-range values) in the source and verify if the ETL process correctly identifies, logs, and handles these errors (e.g., rejects the record, applies default values, or moves to a quarantine area).

- Connectivity Issues: Simulate network outages or database unavailability during data extraction, transformation, and loading. Verify the ETL process retries failed operations, uses appropriate timeout mechanisms, and gracefully recovers or terminates with informative error messages.

- Resource Constraints: Test the ETL process under heavy load or limited resources (e.g., CPU, memory, disk space). Observe how the process handles resource exhaustion and whether it degrades gracefully or fails with appropriate error messages. Validate logging and monitoring for alerts.

- Data Duplicates: Deliberately create duplicate records in the source data and ensure the ETL process correctly handles them according to the predefined business rules (e.g., deduplication logic, update existing records, or reject duplicates).

- Process Interruptions: Simulate unexpected process terminations (e.g., power failures, system crashes) during ETL execution and verify the recovery mechanisms. Ensure the process can resume from the last consistent state, avoiding data loss or corruption. This may involve checkpointing functionality. Logging is critical.

- Schema Changes: Test how the ETL handles schema changes in the source or destination systems. These changes could be adding new columns, modifying data types, or dropping columns. Ensure the ETL adapts gracefully or provides appropriate alerts and error handling.

Each test should involve validating log files, error reports, and the state of the data in the destination system to confirm that the error handling and recovery mechanisms are working correctly. Also test that notifications (email, SMS, etc.) are triggered when issues occur.
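
For the connectivity scenario, a test can inject failures with a fake source and observe the retry behavior. This is a sketch under assumptions: `run_with_retries` and `FlakySource` are hypothetical, and exponential backoff is just one possible retry policy:

```python
import time

def run_with_retries(operation, max_attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call operation(), retrying on ConnectionError with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except ConnectionError:
            if attempt == max_attempts:
                raise  # retries exhausted: surface the error to the caller
            sleep(base_delay * 2 ** (attempt - 1))

class FlakySource:
    """Test double that fails a fixed number of times before succeeding."""
    def __init__(self, failures):
        self.failures = failures
        self.calls = 0

    def extract(self):
        self.calls += 1
        if self.calls <= self.failures:
            raise ConnectionError("simulated outage")
        return ["row-1", "row-2"]

# Record the requested delays instead of actually sleeping
delays = []
flaky = FlakySource(failures=2)
rows = run_with_retries(flaky.extract, max_attempts=3, sleep=delays.append)
```

Passing `sleep` as a parameter keeps the test fast and lets it assert on the backoff schedule as well as on the final result.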

24. Explain how you would test the data transformations applied by an ETL process, such as data cleansing or data standardization.

To test ETL data transformations, I'd first focus on defining clear test cases covering various scenarios, including valid data, invalid data, boundary conditions, and edge cases. For data cleansing, tests would verify the removal of duplicates, handling of missing values (e.g., imputation or deletion), and correction of errors (e.g., misspelled names). For data standardization, tests would ensure consistent formatting (e.g., date formats, address formats) and unit conversions.

I'd use SQL queries or scripting languages like Python with libraries such as Pandas or PySpark to write test scripts. These scripts would compare the transformed data against the expected output based on the defined test cases. For example:

```python
import pandas as pd

# Example: testing date standardization for a date column
data = {'date': ['2023-01-01', '01/01/2023', 'Jan 1, 2023']}
df = pd.DataFrame(data)

# Apply the transformation (an inline lambda stands in for a standardize_date function)
df['date_standardized'] = df['date'].apply(lambda x: pd.to_datetime(x).strftime('%Y-%m-%d'))

# All three inputs represent the same day, so exactly one distinct value should remain
assert df['date_standardized'].nunique() == 1
print("All dates are standardized correctly.")
```

Specifically, I would check for data type correctness, data range validity, referential integrity (if applicable), and data completeness after transformation. The entire testing process would include executing these test scripts, logging the results, and reporting any discrepancies or failures.

25. How do you ensure that the ETL process correctly handles null values and missing data?

Handling null values and missing data in ETL processes is crucial for data quality. Strategies include:

- Identification: First, identify nulls using `IS NULL` checks in SQL or equivalent functions in other ETL tools.

- Replacement/Imputation: Replace nulls with default values (e.g., 0, 'Unknown'), mean/median values (for numerical data), or values derived from other related fields. Consider using `COALESCE` in SQL or similar functions in other tools for default value replacement. For example: `COALESCE(column_name, 'default_value')`. More complex imputation techniques might involve statistical modeling.

- Filtering: Remove records with excessive or critical missing data if imputation isn't feasible and the data isn't essential.

- Data Type Considerations: Ensure data types are appropriately defined to avoid unintentional null conversions.

- Validation and Monitoring: Implement validation rules to check for nulls at various stages of the ETL pipeline. Monitor the frequency of nulls to detect potential data quality issues.

- Error Handling: Implement robust error handling to capture and log errors caused by unexpected null values. Document the chosen approach to handle null values within the ETL process.
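
The replacement and monitoring strategies above can be sketched in plain Python. The `coalesce` helper mirrors the behavior of SQL `COALESCE`; the field names, defaults, and other function names are hypothetical:

```python
def coalesce(*values):
    """Return the first value that is not None, like SQL COALESCE."""
    for value in values:
        if value is not None:
            return value
    return None

def null_rate(rows, field):
    """Fraction of rows where the field is missing or None."""
    if not rows:
        return 0.0
    return sum(1 for row in rows if row.get(field) is None) / len(rows)

def apply_defaults(rows, defaults):
    """Return new rows with None values replaced by the configured defaults."""
    cleaned = []
    for row in rows:
        new_row = dict(row)
        for field, default in defaults.items():
            new_row[field] = coalesce(row.get(field), default)
        cleaned.append(new_row)
    return cleaned

rows = [
    {"id": 1, "country": "US", "amount": 10.0},
    {"id": 2, "country": None, "amount": None},
    {"id": 3, "country": "DE", "amount": 5.0},
    {"id": 4, "country": None, "amount": 0.0},  # a real zero, not a null
]
rate_before = null_rate(rows, "country")
cleaned = apply_defaults(rows, {"country": "Unknown", "amount": 0.0})
rate_after = null_rate(cleaned, "country")
```

Checking `is not None` rather than truthiness matters here: a legitimate `0.0` amount must not be mistaken for missing data.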

26. Describe your approach to testing ETL processes that involve change data capture (CDC).

When testing ETL processes with CDC, my approach focuses on verifying data accuracy, completeness, and consistency throughout the pipeline. This involves several key steps. First, I validate that the CDC mechanism correctly identifies and captures changes from the source system. Then, I verify that these changes are accurately transformed and loaded into the target system, including handling different change types (inserts, updates, deletes). I also check for data integrity issues like duplicates or data loss and reconcile the data between source and target. I leverage tools like data comparison utilities, SQL queries, and custom scripts to automate data validation. Specifically, I would:

- Source Data Validation: Verify captured changes against the source data to confirm accuracy of the CDC process.

- Data Transformation Validation: Ensure transformation logic is correctly applied to changed data.

- Target Data Validation: Validate data in the target to match source data changes.

- Performance Testing: Measure CDC processing time and optimize if needed.
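
To illustrate target validation for the different change types, the sketch below applies a list of change events to an in-memory target and compares it with the expected source state. The event shape (`op`, `key`, `row`) and the `apply_changes` function are assumptions for illustration, not the format of any particular CDC tool:

```python
def apply_changes(target, events):
    """Apply insert, update, and delete events to a {key: row} target in order."""
    for event in events:
        op, key = event["op"], event["key"]
        if op in ("insert", "update"):
            target[key] = event["row"]
        elif op == "delete":
            target.pop(key, None)  # deleting an absent key is a no-op
        else:
            raise ValueError(f"unknown operation: {op}")
    return target

target = {
    1: {"name": "Ann", "city": "Oslo"},
    2: {"name": "Bob", "city": "Rome"},
}
events = [
    {"op": "insert", "key": 3, "row": {"name": "Cy", "city": "Lima"}},
    {"op": "update", "key": 1, "row": {"name": "Ann", "city": "Bergen"}},
    {"op": "delete", "key": 2, "row": None},
]
expected_source_state = {
    1: {"name": "Ann", "city": "Bergen"},
    3: {"name": "Cy", "city": "Lima"},
}

result = apply_changes(dict(target), events)
# Replaying the same events should not change the outcome (idempotency check)
replayed = apply_changes(dict(result), events)
```

The replay check is worth including because many CDC pipelines deliver events at least once, so the apply step has to tolerate duplicates.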

27. How do you test the impact of schema changes on existing ETL processes?

To test the impact of schema changes on existing ETL processes, I'd first analyze the changes to identify potentially affected ETL jobs and downstream systems. Then, I would create a testing strategy that includes unit tests for individual components, integration tests to verify data flow between components, and end-to-end tests to validate the entire process and data quality. Specifically, I'd pay close attention to data type compatibility, null handling, data truncation, and data validation against the new schema. Data profiling tools would be used to compare the data before and after schema changes.

Crucially, I'd set up a non-production environment mirroring production to execute these tests, avoiding disruption to live data. The testing strategy might involve:

- Data Validation: Check for data type mismatches, constraints violations, and data loss.

- Performance Testing: Assess if the schema changes impact ETL processing time.

- Regression Testing: Ensure that existing ETL functionality remains unaffected.

- Data lineage: Verify data transformation accuracy.

- Error Handling: Validate that the ETL process correctly handles any new exceptions or errors introduced by the schema changes.

Automating these tests and using version control for ETL code are crucial for maintainability and repeatability.
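
One way to start the impact analysis is to diff the old and new schemas before running any ETL job. The sketch assumes each schema is available as a simple column-to-type mapping; `diff_schemas` and the sample columns are hypothetical:

```python
def diff_schemas(old, new):
    """Compare two {column: type} mappings and report what changed."""
    shared = set(old) & set(new)
    return {
        "added": sorted(set(new) - set(old)),
        "removed": sorted(set(old) - set(new)),
        "type_changed": sorted(col for col in shared if old[col] != new[col]),
    }

old_schema = {"id": "INTEGER", "name": "VARCHAR(50)", "amount": "INTEGER", "legacy_flag": "CHAR(1)"}
new_schema = {"id": "INTEGER", "name": "VARCHAR(100)", "amount": "DECIMAL(10,2)", "created_at": "TIMESTAMP"}

changes = diff_schemas(old_schema, new_schema)
# Removed columns and type changes are the ones most likely to break existing jobs
breaking = bool(changes["removed"] or changes["type_changed"])
```

The resulting lists can drive which regression and data validation tests to run first, for example truncation checks on columns whose type changed.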

Advanced ETL Testing interview questions

1. How would you design an ETL testing strategy for a real-time data streaming scenario, considering the challenges of data velocity and volume?

For real-time ETL testing, I'd focus on a strategy incorporating data profiling, validation, and performance checks. Key aspects include:

- Data Profiling and Contract Testing: Define clear data contracts and use automated profiling to ensure incoming data conforms to these contracts regarding format, completeness, and expected values. Implement tests that compare data samples against expected schemas and distributions.

- Real-time Validation: Implement micro-batch testing on incoming streams. Sample data for validation against business rules and data quality metrics. Use tools to automatically detect anomalies and data drift as soon as the data hits the ETL pipelines. Focus on testing transformations and aggregations in real time using small batches.

- Performance and Scalability: Monitor latency, throughput, and resource utilization. Simulate peak loads to identify bottlenecks and ensure the ETL pipeline can handle the data velocity without data loss or degradation in performance. Test scalability by incrementally increasing the data volume. Verify that the system honors its message delivery guarantees (at-least-once or exactly-once).

- Data Reconciliation: Implement mechanisms for data reconciliation by comparing the data from the source to the target after transformation to see if there is any data loss.
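
The contract and micro-batch checks above can be sketched without a streaming framework. Here an ordinary Python iterator stands in for the stream, and the contract, field names, and error-rate threshold are all assumptions for illustration:

```python
from itertools import islice

# Hypothetical data contract: required fields and their expected Python types
CONTRACT = {"event_id": int, "user_id": str, "amount": float}

def micro_batches(stream, size):
    """Yield lists of up to `size` events until the stream is exhausted."""
    iterator = iter(stream)
    while True:
        batch = list(islice(iterator, size))
        if not batch:
            return
        yield batch

def contract_violations(event):
    """Return a list of contract problems for one event."""
    problems = []
    for field, expected_type in CONTRACT.items():
        if field not in event:
            problems.append(f"{field}: missing")
        elif not isinstance(event[field], expected_type):
            problems.append(f"{field}: expected {expected_type.__name__}")
    return problems

def validate_stream(stream, batch_size, max_error_rate):
    """Validate each micro-batch and flag batches whose error rate exceeds the limit."""
    results = []
    for batch in micro_batches(stream, batch_size):
        bad = sum(1 for event in batch if contract_violations(event))
        results.append({"size": len(batch), "bad": bad, "ok": bad / len(batch) <= max_error_rate})
    return results

events = [
    {"event_id": 1, "user_id": "u1", "amount": 9.5},
    {"event_id": 2, "user_id": "u2", "amount": 3.0},
    {"event_id": 3, "user_id": "u3"},                   # missing amount
    {"event_id": "4", "user_id": "u4", "amount": 1.0},  # wrong type for event_id
    {"event_id": 5, "user_id": "u5", "amount": 2.0},
]
results = validate_stream(iter(events), batch_size=2, max_error_rate=0.25)
```

In a real pipeline the same per-batch result would typically feed an alert or a dead-letter queue rather than a list.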

2. Describe your approach to testing data lineage in a complex ETL environment with multiple source systems and transformations.

My approach to testing data lineage in a complex ETL environment involves a combination of automated and manual techniques. I would start by creating a data lineage map that visually represents the flow of data from source to target, documenting all transformations along the way. Then, I'd implement automated tests to validate that data transformations are applied correctly at each stage of the ETL process. This includes unit testing individual transformations, integration testing between stages, and end-to-end testing from source to target. Specific steps involve: verifying data type conversions, checking data aggregations and calculations, ensuring data completeness and accuracy, and validating data filtering and cleansing rules. I'd also use data profiling tools to identify any anomalies or inconsistencies in the data.

In addition to automated testing, I would also perform manual testing to verify data lineage and ensure that the data meets business requirements. This may involve tracing data back to its source, comparing data between different systems, and working with business users to validate the accuracy and completeness of the data. For complex transformations, I would use a combination of SQL queries and scripting languages (like Python) to verify the data lineage and transformations. The key is to ensure end-to-end traceability of data and that it can be validated at any stage.

3. Explain how you would validate data quality rules and constraints in an ETL process, especially when dealing with fuzzy matching and data cleansing.

Validating data quality in ETL, especially with fuzzy matching and cleansing, requires a multi-faceted approach. First, I'd define clear data quality rules and constraints (e.g., data type validation, required fields, acceptable value ranges) before the ETL process. During ETL, I would implement checks at various stages:

- Source Data Validation: Verify data types, lengths, and mandatory fields.

- Fuzzy Matching Validation: Evaluate the quality of fuzzy matches by analyzing match scores and performing spot checks to ensure accuracy. Implement thresholds for match scores, flagging matches below a certain threshold for manual review. Track the number of records that fuzzy matching could not resolve.

- Data Cleansing Validation: After cleansing, confirm that the data conforms to the defined rules and constraints. For example, verifying that date formats are standardized, and address formats are consistent.

- Post-Load Validation: Once data is loaded into the target system, perform final validation checks to ensure data integrity and completeness, such as record counts, and data summaries. I would incorporate data profiling to identify anomalies and data quality issues. All validation steps should generate detailed logs and reports for auditing and issue resolution.
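The match-score thresholds described above could be sketched as follows. This is only an illustration using Python's standard `difflib`; the `triage_matches` helper and the 0.90 / 0.70 cut-offs are assumptions, and a real project would tune them against manually reviewed samples.

```python
from difflib import SequenceMatcher


def match_score(a, b):
    """Similarity ratio in [0, 1] after light normalization."""
    return SequenceMatcher(None, a.strip().lower(), b.strip().lower()).ratio()


def triage_matches(pairs, auto_accept=0.90, manual_review=0.70):
    """Sort candidate pairs into accepted, review, and unresolved buckets."""
    buckets = {"accepted": [], "review": [], "unresolved": []}
    for a, b in pairs:
        score = match_score(a, b)
        if score >= auto_accept:
            buckets["accepted"].append((a, b, score))
        elif score >= manual_review:
            buckets["review"].append((a, b, score))  # flag for a human spot check
        else:
            buckets["unresolved"].append((a, b, score))
    return buckets


candidate_pairs = [
    ("Acme Corp", " acme corp"),   # identical after normalization
    ("Acme Corp", "Zenith Ltd"),
]
buckets = triage_matches(candidate_pairs)
unresolved_count = len(buckets["unresolved"])  # metric worth tracking per run
```

Tracking the size of each bucket from run to run gives an early signal when source data quality shifts.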

4. What are the key considerations when testing the performance and scalability of an ETL process that handles large datasets?

When testing the performance and scalability of an ETL process dealing with large datasets, several considerations are crucial. First, data volume and variety significantly impact performance; testing should use realistic data volumes and diverse data types to simulate real-world scenarios. Understanding the data distribution, including any skew or outliers, helps identify potential bottlenecks. Second, resource utilization needs careful monitoring. CPU usage, memory consumption, disk I/O, and network bandwidth should be tracked to identify resource constraints. Bottlenecks related to disk speed, available memory for transformations, or inefficient algorithms often become apparent only under load. Third, the ETL process design itself can cause scalability issues. Consider factors like parallel processing capabilities, efficient data transformations, and appropriate indexing. Can the process be scaled easily by adding more worker nodes? Code profiling and optimization help pinpoint performance bottlenecks.

Finally, infrastructure limitations (e.g., network latency, database server capacity) must be considered. You need to validate the underlying infrastructure can withstand the ETL process demands. Simulate concurrent users and processes accessing data to identify bottlenecks related to concurrency and resource contention. Establish performance baselines and set up monitoring alerts for deviations, so you can address problems proactively.
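A baseline check can be as simple as comparing measured throughput against a recorded value. The sketch below is a minimal example; `measure_throughput`, `is_regression`, and the 20% tolerance are hypothetical choices for illustration, not a standard.

```python
import time


def measure_throughput(load_fn, rows):
    """Run load_fn(rows) once and return rows processed per second."""
    start = time.perf_counter()
    load_fn(rows)
    elapsed = time.perf_counter() - start
    return len(rows) / elapsed if elapsed > 0 else float("inf")


def is_regression(baseline_rows_per_sec, observed_rows_per_sec, tolerance=0.20):
    """True when observed throughput falls more than `tolerance` below baseline."""
    return observed_rows_per_sec < baseline_rows_per_sec * (1 - tolerance)


# Stand-in for a real load step; replace with the ETL job under test.
def fake_load(rows):
    return [r * 2 for r in rows]


observed = measure_throughput(fake_load, list(range(100_000)))
```

In practice the baseline would be stored per environment and per data volume, since throughput rarely scales linearly.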

5. How would you approach testing an ETL process that involves data masking and anonymization techniques to ensure data privacy and compliance?

Testing an ETL process with data masking and anonymization requires a multi-faceted approach. First, I would validate the masking/anonymization rules themselves against the original data to confirm they are correctly implemented and effective in hiding sensitive information. This involves checking that Personally Identifiable Information (PII) is properly masked or anonymized using techniques such as tokenization, pseudonymization, or data redaction. I'd then verify that the transformed data meets compliance requirements like GDPR or HIPAA by assessing whether the anonymization is irreversible and prevents re-identification. Scenarios should include both positive tests (verifying that sensitive fields are masked correctly) and negative tests (verifying that non-sensitive fields are left unmasked and that no sensitive value slips through in clear text).

Second, I would focus on the data quality of the masked/anonymized data, ensuring it remains usable for downstream processes. This includes verifying data integrity (no data loss or corruption), consistency across different data sets, and validity against expected data types and formats. I would run various data quality checks such as null value analysis, range checks, and data type validation. Performance testing is also critical to ensure the masking/anonymization process does not introduce unacceptable latency into the ETL pipeline. Lastly, I'd implement monitoring to continuously validate the effectiveness of masking and anonymization strategies over time.
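To make the positive and negative checks concrete, here is a minimal sketch of a masking validation. The keyed-hash pseudonymization, the `user_<hex>@masked.example` token format, and the helper names are all assumptions made for the example; an actual pipeline would use whatever masking routine the ETL tool provides.

```python
import hashlib
import hmac
import re

SECRET_KEY = b"test-only-key"  # hypothetical key, for illustration only
MASKED_SHAPE = re.compile(r"^user_[0-9a-f]{16}@masked\.example$")


def pseudonymize_email(email, key=SECRET_KEY):
    """Deterministically replace an email with a keyed-hash token."""
    digest = hmac.new(key, email.lower().encode("utf-8"), hashlib.sha256).hexdigest()
    return f"user_{digest[:16]}@masked.example"


def check_masking(originals, masked):
    """Return a list of problems found when comparing raw and masked values."""
    problems = []
    for original, token in zip(originals, masked):
        if original.lower() in token.lower():
            problems.append(f"raw value leaked: {token}")
        if not MASKED_SHAPE.match(token):
            problems.append(f"unexpected format: {token}")
    return problems


originals = ["Alice@example.com", "bob@example.com"]
masked = [pseudonymize_email(e) for e in originals]
problems = check_masking(originals, masked)
```

Because the token is deterministic, the same email masks to the same value across tables, which keeps joins usable downstream.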

6. Describe your experience with testing ETL processes that use cloud-based data warehouses and data lakes.

My experience with testing ETL processes involving cloud data warehouses (like Snowflake, Redshift, BigQuery) and data lakes (like S3, Azure Data Lake Storage) centers around ensuring data quality, accuracy, and completeness throughout the pipeline. I've worked on validating data transformations, verifying data loading processes, and ensuring data integrity across different stages. This involves writing SQL queries to compare source and target data, implementing data profiling techniques to identify anomalies, and developing automated test suites using tools like Python and pytest to perform regression testing and continuous integration.

Specifically, I have experience in:

- Data Validation: Writing SQL queries to validate data transformations, ensuring data accuracy and completeness.

- Schema Validation: Verifying that the target schema matches the expected schema and that data types are correctly mapped.

- Performance Testing: Evaluating the performance of ETL processes and identifying bottlenecks using cloud-native monitoring tools.

- Data Quality Checks: Implementing data quality checks to identify and flag invalid or inconsistent data.

- Error Handling: Testing error handling mechanisms to ensure that errors are properly logged and handled.

- Security Testing: Ensuring that data is securely transferred and stored in the cloud data warehouse or data lake.

- Automation: Automating the testing process using CI/CD pipelines to ensure that new changes do not introduce regressions. An example:

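```python
import pytest


def test_data_quality(snowflake_connection):
    # snowflake_connection is assumed to be a pytest fixture (for example in
    # conftest.py) that yields an open DB-API connection to the warehouse.
    cursor = snowflake_connection.cursor()
    # Count null values in a specific column
    cursor.execute("SELECT COUNT(*) FROM my_table WHERE column_name IS NULL")
    null_count = cursor.fetchone()[0]
    assert null_count == 0, "Null values found in column_name"
```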

7. Explain how you would test the incremental data loading functionality of an ETL process, ensuring that only new or modified data is processed.

To test incremental data loading, I'd focus on verifying that only new or modified records are processed. This involves creating test datasets with various scenarios: new records, modified records, and unchanged records. I'd then run the ETL process and validate that only the new and modified data are loaded into the target system.

Specific tests would include:

- New Data: Verify new records are loaded correctly.

- Modified Data: Confirm existing records are updated with the changed values. Use a `timestamp` or `version` column to identify changes.

- Unchanged Data: Ensure unchanged records are not reprocessed.

- Edge Cases: Test scenarios with null values, empty strings, and boundary conditions.

- Data Integrity: Validate data accuracy and consistency after loading.
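The scenarios above can be exercised with a small in-memory test. The sketch below uses SQLite and a timestamp watermark; the `src` and `tgt` tables, the `updated_at` column, and the `incremental_load` function are hypothetical stand-ins for the real pipeline.

```python
import sqlite3


def incremental_load(conn, last_watermark):
    """Copy rows changed after last_watermark from src to tgt.

    Returns the number of rows processed so tests can assert on it.
    """
    changed = conn.execute(
        "SELECT id, name, updated_at FROM src WHERE updated_at > ?",
        (last_watermark,),
    ).fetchall()
    # INSERT OR REPLACE inserts new ids and overwrites existing ones.
    conn.executemany(
        "INSERT OR REPLACE INTO tgt (id, name, updated_at) VALUES (?, ?, ?)",
        changed,
    )
    conn.commit()
    return len(changed)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE src (id INTEGER PRIMARY KEY, name TEXT, updated_at TEXT)")
conn.execute("CREATE TABLE tgt (id INTEGER PRIMARY KEY, name TEXT, updated_at TEXT)")
conn.executemany("INSERT INTO src VALUES (?, ?, ?)", [
    (1, "alice", "2024-01-01"),        # unchanged since the last run
    (2, "bob-updated", "2024-02-10"),  # modified
    (3, "carol", "2024-02-15"),        # new
])
conn.executemany("INSERT INTO tgt VALUES (?, ?, ?)", [
    (1, "alice", "2024-01-01"),
    (2, "bob", "2024-01-05"),
])

processed = incremental_load(conn, "2024-01-31")
target = {row[0]: row[1] for row in conn.execute("SELECT id, name FROM tgt")}
```

Asserting on the processed row count, not just the final target state, is what proves unchanged records were skipped rather than silently rewritten.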

8. What are the challenges of testing ETL processes that integrate with third-party APIs and web services, and how would you address them?

Testing ETL processes that integrate with third-party APIs and web services presents several challenges. Primarily, the unpredictability and lack of control over these external systems are significant hurdles. API availability, response times, data formats, and rate limits can fluctuate, impacting ETL pipeline stability and data quality. Thoroughly handling these variations requires robust error handling, retry mechanisms, and circuit breakers within the ETL process. Additionally, simulating realistic third-party behavior for comprehensive testing is difficult. Consider tools like WireMock or mock APIs to simulate various scenarios, including slow responses, errors, and different data formats. Data validation also becomes more complex as you need to verify the data transformed from third-party APIs before and after loading to the target destination.

To address these challenges, a layered testing approach is beneficial. This includes unit tests for individual ETL components, integration tests focusing on the API interactions (using mocks where necessary), and end-to-end tests validating the entire pipeline. Implementing comprehensive logging and monitoring is also critical for identifying and resolving issues in production. Don't forget to validate data types and data quality rules before data lands in the target. Furthermore, pin the API versions your pipeline depends on so that tests and production run against the same contract, which limits exposure to breaking changes introduced by third-party providers.
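For the integration layer, a mock can stand in for the third-party API so that failures are reproducible. The following is a minimal sketch using `unittest.mock`; `TransientApiError` and `fetch_with_retry` are hypothetical names, and real code would normally add a backoff delay between attempts.

```python
from unittest.mock import Mock


class TransientApiError(Exception):
    """Hypothetical error raised for retryable API failures (e.g. HTTP 503)."""


def fetch_with_retry(fetch_page, max_attempts=3):
    """Call fetch_page until it succeeds or max_attempts is exhausted."""
    last_error = None
    for _ in range(max_attempts):
        try:
            return fetch_page()
        except TransientApiError as exc:
            last_error = exc  # a real implementation would sleep/back off here
    raise last_error


# Simulate an API that fails twice, then returns data.
flaky_api = Mock(side_effect=[
    TransientApiError("503"),
    TransientApiError("503"),
    {"records": [{"id": 1}]},
])
payload = fetch_with_retry(flaky_api)
```

The same pattern covers slow responses, malformed payloads, and rate-limit errors by changing the mock's `side_effect`.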

9. How would you validate the data transformation logic in an ETL process, especially when dealing with complex calculations and aggregations?

Validating ETL transformation logic, particularly with complex calculations, requires a multi-faceted approach. I'd start by using data profiling on the source data to understand its characteristics (min/max values, distributions, etc.) and then design test cases that cover various scenarios, including edge cases, null values, and boundary conditions. I would implement unit tests specifically targeting individual transformations or calculations. For example, if calculating a weighted average, a unit test would verify that the average is correctly computed for a small, manageable dataset with known weights and values.

Further validation involves using data reconciliation techniques to compare the output data with a trusted source or a manually calculated benchmark. Also, end-to-end testing should be carried out, where a subset of the source data is processed through the entire ETL pipeline, and the resulting data in the target system is rigorously checked against the expected values. SQL queries can be used to validate data consistency at different stages of the pipeline. Furthermore, introduce data quality checks and alerts within the ETL process to catch unexpected data issues automatically.
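The weighted-average unit test mentioned above might look like this. It is a sketch only: `weighted_average` is a hypothetical stand-in for the transformation under test, and the expected value is worked out by hand from the small dataset.

```python
import math


def weighted_average(values, weights):
    """Weighted mean; raises ValueError on mismatched or zero-sum weights."""
    if len(values) != len(weights):
        raise ValueError("values and weights must have the same length")
    total_weight = sum(weights)
    if total_weight == 0:
        raise ValueError("weights must not sum to zero")
    return sum(v * w for v, w in zip(values, weights)) / total_weight


def test_weighted_average_known_values():
    # (10*1 + 20*1 + 30*2) / (1 + 1 + 2) = 90 / 4 = 22.5
    assert math.isclose(weighted_average([10, 20, 30], [1, 1, 2]), 22.5)


def test_weighted_average_rejects_zero_weights():
    try:
        weighted_average([10, 20], [0, 0])
    except ValueError:
        return
    raise AssertionError("expected ValueError for zero-sum weights")
```

Keeping the dataset small enough to verify by hand is the point; larger volumes belong in reconciliation and end-to-end tests.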

10. Describe your approach to testing error handling and data recovery mechanisms in an ETL process.

My approach to testing error handling and data recovery in ETL processes involves several key steps. First, I would identify potential failure points, such as invalid data formats, network issues, database connection problems, and storage limitations. Then I'd create test cases specifically designed to trigger these errors, for example by injecting malformed data into a source system, simulating network outages during data transfer, or intentionally causing database connection timeouts.

Next, I'd verify that the ETL process correctly handles these errors. This includes checking that the process logs the errors appropriately, implements retry mechanisms where feasible, and either shuts down gracefully or continues processing, depending on the criticality of the failure. For data recovery, I would test that rollback mechanisms function correctly, that data is restored to a consistent state, and that failed data can be reprocessed without duplicating existing records. I would also write tests to confirm that alerts trigger as expected based on the severity of the error. Finally, I'd use monitoring tools to track the overall health of the ETL pipeline and ensure that error rates stay within acceptable thresholds.
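A rollback test can be sketched with an in-memory SQLite database. The `tgt` table and `load_batch` function below are hypothetical; the idea is to inject one malformed row and confirm that nothing from the failed batch persists, then confirm a corrected batch reloads without duplicates.

```python
import sqlite3


def load_batch(conn, rows):
    """Load all rows in one transaction; return False if the batch is rejected."""
    try:
        with conn:  # commits on success, rolls back if an exception escapes
            conn.executemany("INSERT INTO tgt (id, amount) VALUES (?, ?)", rows)
        return True
    except sqlite3.IntegrityError:
        return False


def row_count(conn):
    return conn.execute("SELECT COUNT(*) FROM tgt").fetchone()[0]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE tgt (id INTEGER PRIMARY KEY, amount REAL NOT NULL)")

bad_batch = [(1, 10.0), (2, None)]   # second row violates NOT NULL
first_attempt = load_batch(conn, bad_batch)
count_after_failure = row_count(conn)

good_batch = [(1, 10.0), (2, 12.5)]  # corrected data, reprocessed in full
second_attempt = load_batch(conn, good_batch)
count_after_retry = row_count(conn)
```

The check on `count_after_failure` is the important one: a partial load that leaves row 1 behind would cause a duplicate-key failure on reprocessing.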

11. How would you test an ETL process that involves data partitioning and sharding to improve performance and scalability?

To test an ETL process with data partitioning and sharding, I'd focus on data integrity, performance, and scalability. Key tests include validating data consistency across shards, ensuring correct data distribution based on the partitioning key, and verifying that data transformations are applied accurately to each partition.

Performance testing would involve measuring ETL process completion time with increasing data volumes and shard counts. Specifically, I'd test query performance across different shards, monitor resource utilization (CPU, memory, I/O) for each shard, and benchmark the impact of adding or removing shards on overall performance. Scalability tests will verify the system's ability to handle increasing data volumes and user loads by simulating production-like scenarios and measuring system response times and resource consumption. I'd also check for potential bottlenecks or limitations in the partitioning or sharding strategy.
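Checking data distribution across shards can be automated. The sketch below assumes a hash-based partitioning scheme; `shard_for`, `skewed_shards`, and the 20% tolerance are hypothetical and would need to mirror the partitioning function the pipeline really uses.

```python
import hashlib
from collections import Counter


def shard_for(key, shard_count):
    """Map a partitioning key to a shard number using a stable hash."""
    digest = hashlib.sha256(str(key).encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


def skewed_shards(keys, shard_count, tolerance=0.20):
    """Return shards whose row count deviates from the even share by more than tolerance."""
    counts = Counter(shard_for(k, shard_count) for k in keys)
    expected = len(keys) / shard_count
    return {
        shard: counts.get(shard, 0)
        for shard in range(shard_count)
        if abs(counts.get(shard, 0) - expected) > tolerance * expected
    }


keys = [f"customer-{i}" for i in range(10_000)]
skew = skewed_shards(keys, shard_count=4)
```

Running the same check with production-like keys is more revealing than synthetic ones, since real keys often cluster (for example, a few very large customers).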

12. Explain how you would validate the data consistency and integrity across different target systems after an ETL process.

After an ETL process, I'd validate data consistency and integrity by implementing several checks. First, I'd perform data reconciliation, comparing record counts and aggregate statistics (sums, averages, mins, maxes) between the source and target systems to ensure no data was lost or duplicated during the transfer. I'd also implement data quality checks on the target system, verifying that data conforms to expected formats, ranges, and business rules. For example, I would check if date fields are valid dates and if numerical fields fall within acceptable thresholds.
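The reconciliation step could be sketched like this, again with SQLite standing in for the real systems. The table names, the `amount_cents` column, and the `reconcile` helper are hypothetical; amounts are stored as integers to avoid floating-point noise in the comparison.

```python
import sqlite3


def reconcile(conn, source_table, target_table, column):
    """Return {metric: (source_value, target_value)} for metrics that differ."""
    # Names are interpolated directly, so pass trusted test identifiers only.
    query = "SELECT COUNT(*), COALESCE(SUM({c}), 0), MIN({c}), MAX({c}) FROM {t}"
    src = conn.execute(query.format(c=column, t=source_table)).fetchone()
    tgt = conn.execute(query.format(c=column, t=target_table)).fetchone()
    metrics = ("row_count", "sum", "min", "max")
    return {m: (s, t) for m, s, t in zip(metrics, src, tgt) if s != t}


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE src_orders (id INTEGER PRIMARY KEY, amount_cents INTEGER)")
conn.execute("CREATE TABLE tgt_orders (id INTEGER PRIMARY KEY, amount_cents INTEGER)")
rows = [(1, 1000), (2, 2550), (3, 400)]
conn.executemany("INSERT INTO src_orders VALUES (?, ?)", rows)
conn.executemany("INSERT INTO tgt_orders VALUES (?, ?)", rows)

clean = reconcile(conn, "src_orders", "tgt_orders", "amount_cents")

# Simulate a row lost during the load.
conn.execute("DELETE FROM tgt_orders WHERE id = 3")
after_loss = reconcile(conn, "src_orders", "tgt_orders", "amount_cents")
```

When source and target live in different systems, the same aggregates can be computed separately on each side and compared in the test.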