Data warehousing is a complex field that requires a unique blend of technical and analytical skills. To find the right talent for your organization, it's crucial to ask the right interview questions that assess a candidate's knowledge, experience, and problem-solving abilities in data warehousing.

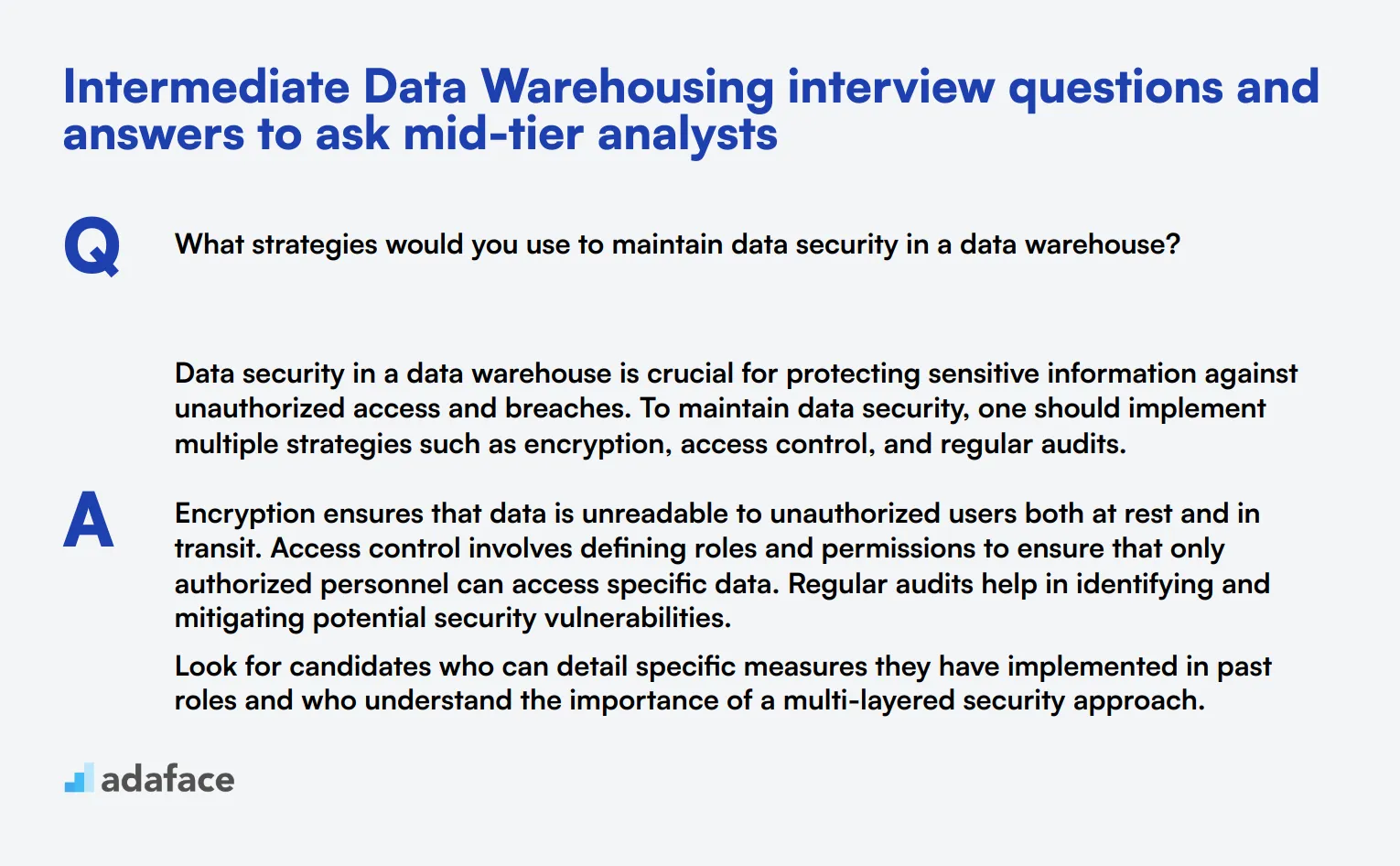

This blog post provides a comprehensive list of data warehousing interview questions tailored for different experience levels, from junior to senior analysts. We've categorized the questions into basic, intermediate, and advanced levels, as well as specific areas like data modeling, ETL processes, and situational scenarios.

By using these questions, you can effectively evaluate candidates' data warehousing expertise and make informed hiring decisions. Additionally, consider incorporating a data warehouse skills assessment before the interview stage to streamline your recruitment process and identify top talent more efficiently.

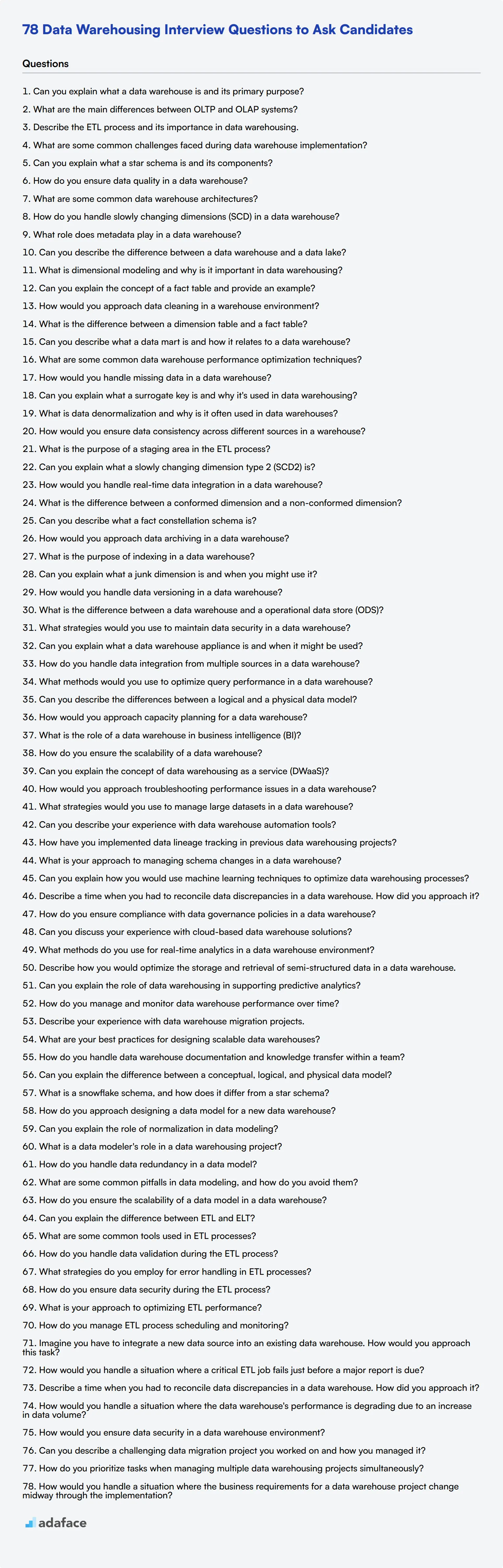

10 basic Data Warehousing interview questions and answers to assess applicants

To effectively assess whether a candidate possesses the fundamental skills and knowledge for a role in Data Warehousing, use these interview questions. They are designed to help you quickly gauge an applicant's understanding and see if they are the right fit for your team.

1. Can you explain what a data warehouse is and its primary purpose?

A data warehouse is a centralized repository that stores large volumes of data from multiple sources. Its primary purpose is to provide a coherent picture of the business at a point in time by aggregating and organizing data in a way that is optimized for query and analysis.

Look for candidates who can clearly articulate the role of a data warehouse in facilitating business intelligence activities such as reporting, data analysis, and decision support. They should mention that data warehouses are designed for read-heavy operations and are essential for historical data analysis.

2. What are the main differences between OLTP and OLAP systems?

OLTP (Online Transaction Processing) systems are designed for managing transactional data, involving a large number of short online transactions. They focus on speed, efficiency, and data integrity in processing day-to-day operations.

OLAP (Online Analytical Processing) systems, on the other hand, are designed for query and analysis rather than transaction processing. OLAP systems facilitate complex queries and data analysis, which helps in decision-making processes.

Strong candidates should be able to highlight these key differences and emphasize that OLTP systems are optimized for operational tasks, while OLAP systems are optimized for data analysis and reporting.

3. Describe the ETL process and its importance in data warehousing.

ETL stands for Extract, Transform, Load. It is the process of extracting data from various sources, transforming it into a suitable format or structure for querying and analysis, and then loading it into the data warehouse.

The ETL process is crucial in data warehousing because it ensures that data is accurate, consistent, and usable for business intelligence activities. It helps in integrating and consolidating data from different sources, making it easier to analyze and derive insights.

Look for candidates who can provide a clear and concise explanation of each step in the ETL process and understand its significance in maintaining the quality and reliability of data within a data warehouse.
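To make the three steps concrete, here is a minimal sketch of an ETL run using only Python's standard library. The CSV content, the `orders` table, and the function names are illustrative assumptions, and an in-memory SQLite database stands in for the warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical source extract: a CSV export with inconsistent formatting.
RAW_CSV = """order_id,customer,amount
1, alice ,10.50
2,BOB,n/a
3,carol,7.25
"""

def extract(text):
    """Extract: read raw rows from the source as dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: normalize names, cast amounts, drop rows that fail casting."""
    clean = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # a real pipeline would route this row to a reject table
        clean.append((int(row["order_id"]), row["customer"].strip().title(), amount))
    return clean

def load(conn, rows):
    """Load: write the cleaned rows into the warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")  # stand-in for the warehouse
load(conn, transform(extract(RAW_CSV)))
loaded = conn.execute(
    "SELECT order_id, customer, amount FROM orders ORDER BY order_id"
).fetchall()
print(loaded)
```

A candidate who can talk through a sketch like this, including what should happen to the row with the unparseable amount, likely understands each stage rather than just the acronym.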

4. What are some common challenges faced during data warehouse implementation?

Common challenges during data warehouse implementation include data integration issues, ensuring data quality, managing large volumes of data, and maintaining data security and privacy.

Other challenges may involve aligning the data warehouse design with business requirements, optimizing performance, and ensuring scalability to handle future data growth.

Ideal candidates should be able to discuss these challenges and provide examples of how they have addressed or mitigated them in their previous roles. They should also demonstrate an understanding of best practices for successful data warehouse implementation.

5. Can you explain what a star schema is and its components?

A star schema is a type of database schema that is commonly used in data warehousing. It consists of a central fact table that contains quantitative data (such as sales or revenue), surrounded by dimension tables that contain descriptive attributes related to the data in the fact table.

The fact table and dimension tables are connected by foreign key relationships, forming a star-like pattern when visualized, hence the name 'star schema'.

Candidates should be able to explain this clearly and ideally provide an example to illustrate their understanding. Look for a discussion on the benefits of using a star schema, such as simplicity, improved query performance, and ease of use in reporting and analysis.
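A small example of the kind a candidate might offer is sketched below. The table and column names (`fact_sales`, `dim_product`, `dim_date`) are assumptions chosen for illustration, and SQLite is used only so the snippet runs anywhere.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Dimension tables hold descriptive attributes.
CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, name TEXT, category TEXT);
CREATE TABLE dim_date (date_key INTEGER PRIMARY KEY, full_date TEXT, year INTEGER);
-- The fact table holds measures plus a foreign key to each dimension.
CREATE TABLE fact_sales (
    product_key INTEGER REFERENCES dim_product(product_key),
    date_key INTEGER REFERENCES dim_date(date_key),
    quantity INTEGER,
    revenue REAL
);
INSERT INTO dim_product VALUES (1, 'Laptop', 'Electronics'), (2, 'Desk', 'Furniture');
INSERT INTO dim_date VALUES (20240101, '2024-01-01', 2024), (20250101, '2025-01-01', 2025);
INSERT INTO fact_sales VALUES
    (1, 20240101, 2, 2000.0), (2, 20240101, 1, 300.0), (1, 20250101, 1, 1000.0);
""")

# A typical star-schema query: join the fact table to its dimensions, then aggregate.
revenue_by_category = conn.execute("""
    SELECT p.category, d.year, SUM(f.revenue)
    FROM fact_sales f
    JOIN dim_product p ON p.product_key = f.product_key
    JOIN dim_date d ON d.date_key = f.date_key
    GROUP BY p.category, d.year
    ORDER BY p.category, d.year
""").fetchall()
print(revenue_by_category)
```

Every dimension is one join away from the fact table, which is the property that keeps reporting queries simple.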

6. How do you ensure data quality in a data warehouse?

Ensuring data quality in a data warehouse involves several practices such as data profiling, data cleansing, data validation, and continuous monitoring.

Data profiling helps in understanding the data and identifying any inconsistencies or anomalies. Data cleansing involves correcting or removing inaccurate records from the data. Data validation ensures that the data meets the required standards and business rules.

Look for candidates who emphasize the importance of maintaining high data quality and describe specific techniques or tools they have used to achieve this. They should also mention the role of governance and regular audits in maintaining data quality.
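The profiling and validation practices above could look roughly like the following sketch. The sample records and the two business rules (an email must contain `@`, age must fall between 0 and 120) are assumptions made up for the example.

```python
from collections import Counter

# Hypothetical customer records; None marks a missing value.
records = [
    {"id": 1, "email": "a@example.com", "age": 34},
    {"id": 2, "email": None, "age": 29},
    {"id": 2, "email": "b@example.com", "age": -5},
    {"id": 3, "email": "not-an-email", "age": 41},
]

def profile(rows):
    """Profiling: count nulls per column and find duplicated ids."""
    nulls = Counter(col for row in rows for col, val in row.items() if val is None)
    id_counts = Counter(row["id"] for row in rows)
    duplicates = sorted(i for i, n in id_counts.items() if n > 1)
    return {"null_counts": dict(nulls), "duplicate_ids": duplicates}

def validate(row):
    """Validation: return the list of business rules this row violates."""
    problems = []
    if row["email"] is None or "@" not in row["email"]:
        problems.append("invalid email")
    if not 0 <= row["age"] <= 120:
        problems.append("age out of range")
    return problems

report = profile(records)
# Map each failing record's position to the rules it broke.
violations = {i: validate(r) for i, r in enumerate(records) if validate(r)}
print(report)
print(violations)
```

Cleansing would then act on these findings, for example by deduplicating on `id` or quarantining rows that fail validation.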

7. What are some common data warehouse architectures?

Common data warehouse architectures include the single-tier architecture, two-tier architecture, and three-tier architecture.

The single-tier architecture aims to minimize the amount of data stored by removing redundancy. The two-tier architecture physically separates the data warehouse from the operational source systems, so analytical queries do not compete with transactional workloads. The three-tier architecture, the most widely used, consists of a bottom tier (the warehouse database and the ETL processes that feed it), a middle tier (typically an OLAP server, sometimes alongside data marts), and a top tier of front-end reporting and analysis tools.

Candidates should be able to explain these architectures and discuss their advantages and disadvantages. They should also mention scenarios where each architecture might be appropriate.

8. How do you handle slowly changing dimensions (SCD) in a data warehouse?

Slowly Changing Dimensions (SCD) are dimensions whose attributes change infrequently and unpredictably, rather than on a regular schedule. There are several methods to handle SCD, commonly referred to as Type 1, Type 2, and Type 3.

Type 1 overwrites the old value with the new one, so no history is kept. Type 2 inserts a new record for each change, preserving the full history. Type 3 adds a column that holds the previous value alongside the current one, preserving only limited history.

Candidates should be able to explain these methods and discuss their pros and cons. They should also provide examples of when each method might be used and how they have implemented SCD handling in their previous projects.
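As one illustration, a Type 2 implementation might be sketched as follows. The `dim_customer` layout (surrogate key, validity dates, and an `is_current` flag) and the `apply_scd2` helper are assumptions for the example; real implementations vary in how they mark the current row.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE dim_customer (
        customer_key INTEGER PRIMARY KEY AUTOINCREMENT,  -- surrogate key
        customer_id TEXT,                                -- business key
        city TEXT,
        valid_from TEXT,
        valid_to TEXT,                                   -- NULL while current
        is_current INTEGER
    )
""")

def apply_scd2(conn, customer_id, city, change_date):
    """Close the current row if the city changed, then insert a new version."""
    current = conn.execute(
        "SELECT customer_key, city FROM dim_customer "
        "WHERE customer_id = ? AND is_current = 1",
        (customer_id,),
    ).fetchone()
    if current and current[1] == city:
        return  # nothing changed, keep the current version
    if current:
        conn.execute(
            "UPDATE dim_customer SET valid_to = ?, is_current = 0 WHERE customer_key = ?",
            (change_date, current[0]),
        )
    conn.execute(
        "INSERT INTO dim_customer (customer_id, city, valid_from, valid_to, is_current) "
        "VALUES (?, ?, ?, NULL, 1)",
        (customer_id, city, change_date),
    )

apply_scd2(conn, "C1", "Boston", "2023-01-01")
apply_scd2(conn, "C1", "Boston", "2023-06-01")  # no change, no new row
apply_scd2(conn, "C1", "Denver", "2024-03-15")  # change, new version

history = conn.execute(
    "SELECT city, valid_from, valid_to, is_current FROM dim_customer ORDER BY customer_key"
).fetchall()
print(history)
```

A Type 1 version of the same change would be a single `UPDATE` of `city`, which is simpler but loses the fact that the customer ever lived in Boston.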

9. What role does metadata play in a data warehouse?

Metadata in a data warehouse provides information about the data, including its source, structure, transformations, and usage. It helps in organizing, managing, and understanding the data stored in the warehouse.

Metadata is essential for data governance, data quality, and ensuring that users can find and use the data effectively. It includes technical metadata (details about the data model and ETL processes) and business metadata (definitions, rules, and descriptions).

Look for candidates who understand the importance of metadata and can explain how it is used to improve data management and usability. They should also mention any tools or practices they have used to manage metadata effectively.

10. Can you describe the difference between a data warehouse and a data lake?

A data warehouse is a centralized repository that stores structured data from various sources, optimized for query and analysis. It typically involves a predefined schema and is used for business intelligence activities.

A data lake, on the other hand, is a storage repository that can hold large volumes of raw data in its native format, including structured, semi-structured, and unstructured data. Data lakes are used for big data analytics, machine learning, and data exploration.

Candidates should be able to explain these differences and discuss scenarios where each might be appropriate. They should also mention the benefits and challenges associated with data warehouses and data lakes.

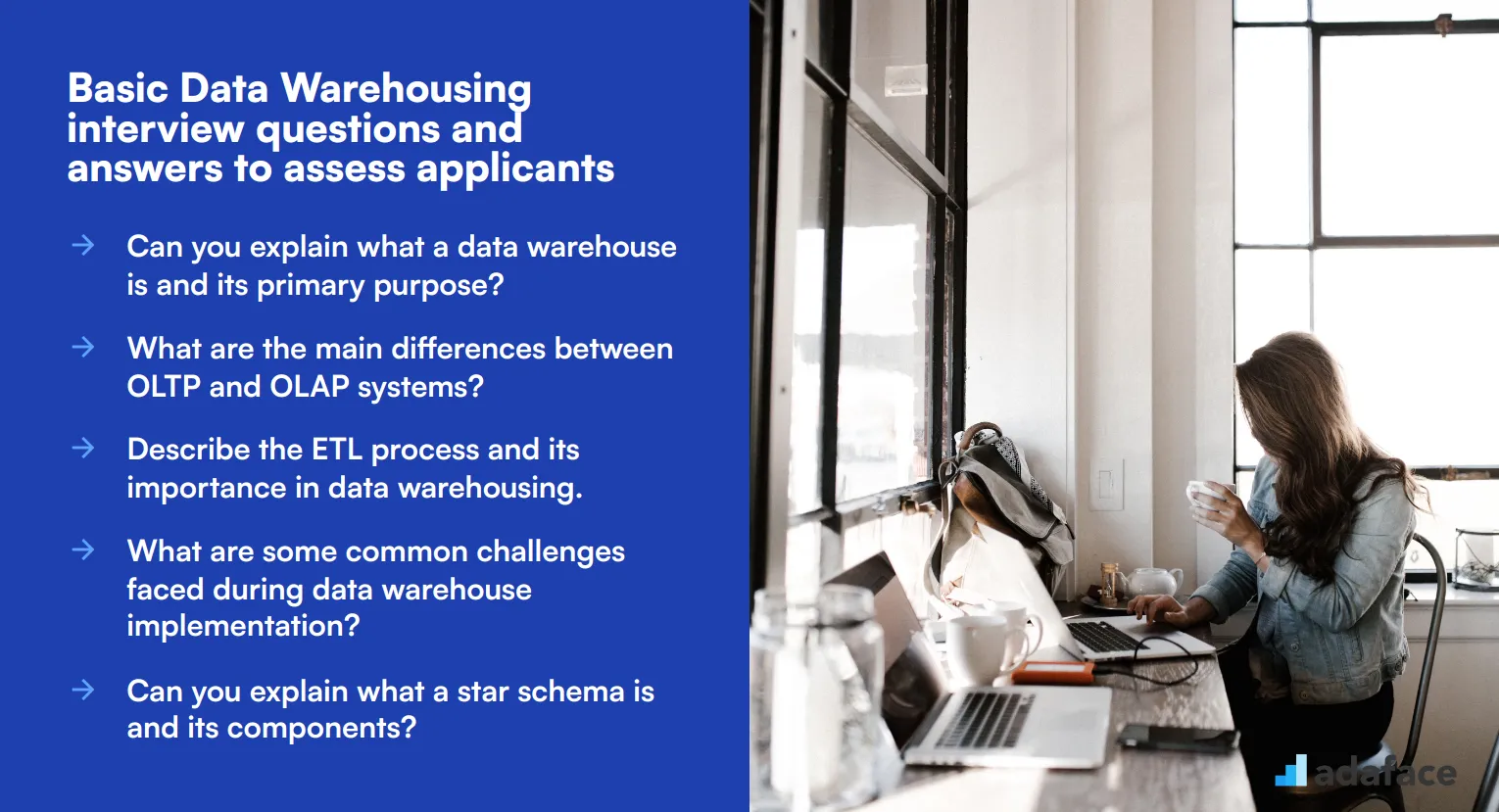

20 Data Warehousing interview questions to ask junior analysts

To assess the foundational knowledge of junior data analysts in data warehousing, consider using these 20 interview questions. These questions are designed to evaluate basic understanding and practical skills, helping you identify candidates with the potential to grow in this field.

- What is dimensional modeling and why is it important in data warehousing?

- Can you explain the concept of a fact table and provide an example?

- How would you approach data cleaning in a warehouse environment?

- What is the difference between a dimension table and a fact table?

- Can you describe what a data mart is and how it relates to a data warehouse?

- What are some common data warehouse performance optimization techniques?

- How would you handle missing data in a data warehouse?

- Can you explain what a surrogate key is and why it's used in data warehousing?

- What is data denormalization and why is it often used in data warehouses?

- How would you ensure data consistency across different sources in a warehouse?

- What is the purpose of a staging area in the ETL process?

- Can you explain what a slowly changing dimension type 2 (SCD2) is?

- How would you handle real-time data integration in a data warehouse?

- What is the difference between a conformed dimension and a non-conformed dimension?

- Can you describe what a fact constellation schema is?

- How would you approach data archiving in a data warehouse?

- What is the purpose of indexing in a data warehouse?

- Can you explain what a junk dimension is and when you might use it?

- How would you handle data versioning in a data warehouse?

- What is the difference between a data warehouse and an operational data store (ODS)?

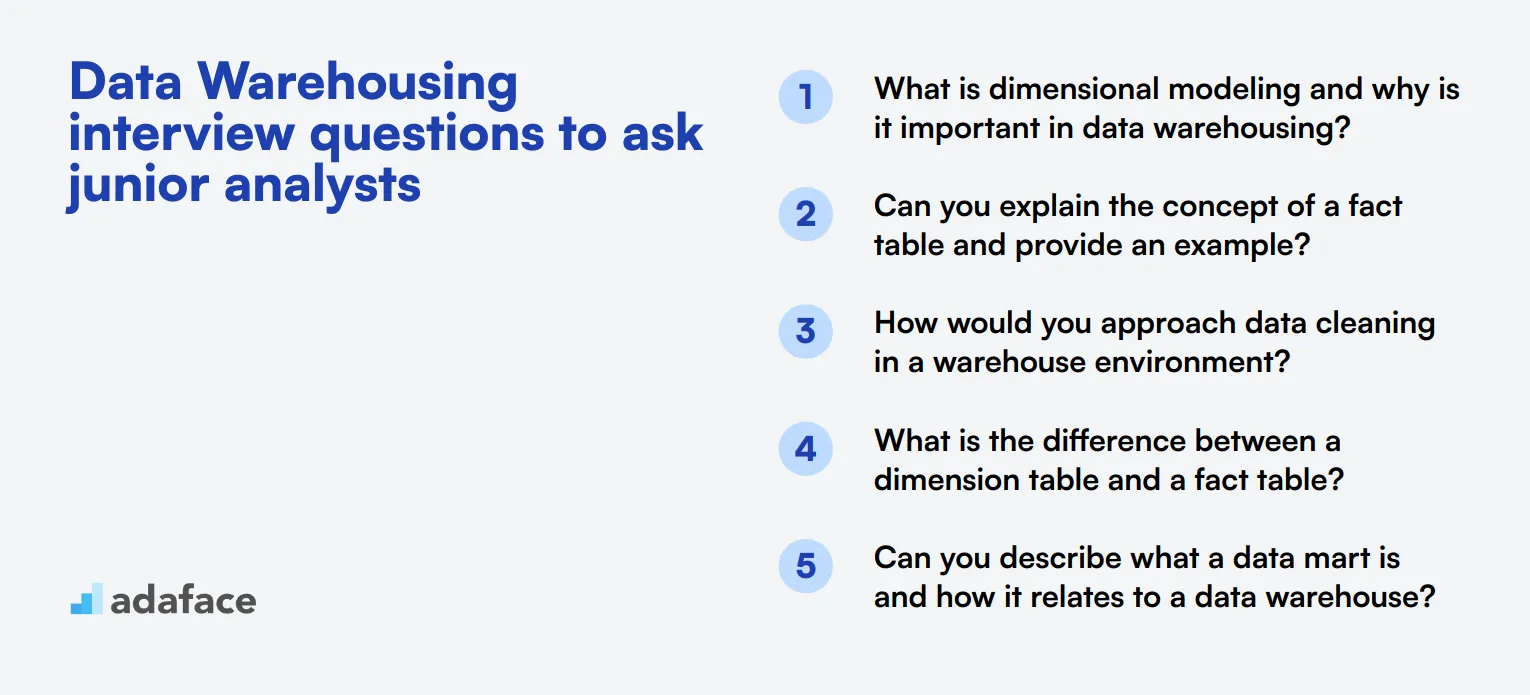

10 intermediate Data Warehousing interview questions and answers to ask mid-tier analysts

To ensure your mid-tier analysts are suitably skilled, these 10 intermediate Data Warehousing interview questions and answers will help you identify candidates with the right experience and knowledge. Use these questions to delve a bit deeper into their understanding and capabilities without getting too technical.

1. What strategies would you use to maintain data security in a data warehouse?

Data security in a data warehouse is crucial for protecting sensitive information against unauthorized access and breaches. To maintain data security, one should implement multiple strategies such as encryption, access control, and regular audits.

Encryption ensures that data is unreadable to unauthorized users both at rest and in transit. Access control involves defining roles and permissions to ensure that only authorized personnel can access specific data. Regular audits help in identifying and mitigating potential security vulnerabilities.

Look for candidates who can detail specific measures they have implemented in past roles and who understand the importance of a multi-layered security approach.

2. Can you explain what a data warehouse appliance is and when it might be used?

A data warehouse appliance is an integrated set of hardware and software designed specifically for data warehousing. It typically includes optimized storage, processing power, and database management systems tailored for high-performance data processing and analytics.

Data warehouse appliances are often used in environments requiring rapid deployment, scalability, and high performance with minimal configuration. Organizations choose these appliances to reduce the complexity of setting up and maintaining a data warehouse.

Ideal candidates should be able to discuss the benefits of using a data warehouse appliance and provide examples of scenarios where they have utilized or would recommend using one.

3. How do you handle data integration from multiple sources in a data warehouse?

Handling data integration from multiple sources involves combining data from different databases, applications, or systems into a single, unified warehouse. This process often relies on ETL (Extract, Transform, Load) tools to extract data from the source systems, transform it into a suitable format, and load it into the data warehouse.

Key steps include data profiling to understand the source data, data cleansing to ensure quality, and data mapping to align different data formats. It's also essential to schedule regular data refreshes to keep the warehouse updated.

An effective candidate should demonstrate familiarity with various ETL tools and methodologies and illustrate their ability to manage complex data integration projects successfully.

4. What methods would you use to optimize query performance in a data warehouse?

To optimize query performance in a data warehouse, several methods can be employed. Indexing, partitioning, and materialized views are commonly used techniques.

Indexing speeds up retrieval by letting the engine locate matching rows without scanning the entire table. Partitioning divides large tables into smaller, more manageable pieces, which can reduce the amount of data scanned during a query. Materialized views store precomputed results of queries and can significantly speed up complex calculations.

Look for candidates who can provide examples of how they have used these methods in past projects and who demonstrate an understanding of when and why each technique should be applied.
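The effect of an index can be demonstrated with a query plan, as in the rough sketch below. It uses SQLite, whose plan wording differs from other engines, and it emulates a materialized view with a precomputed summary table because SQLite has no native materialized views. The `sales` table and the `plan` helper are assumptions for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (sale_id INTEGER PRIMARY KEY, region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales (region, amount) VALUES (?, ?)",
    [("north" if i % 2 else "south", float(i)) for i in range(1000)],
)

def plan(conn, sql, params=()):
    """Return the human-readable detail column of SQLite's query plan."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params)
    return " | ".join(row[3] for row in rows)

query = "SELECT SUM(amount) FROM sales WHERE region = ?"
plan_before = plan(conn, query, ("north",))  # full table scan

conn.execute("CREATE INDEX idx_sales_region ON sales (region)")
plan_after = plan(conn, query, ("north",))   # search using the new index

# Emulate a materialized view: precompute the aggregate once, query it cheaply later.
conn.execute(
    "CREATE TABLE sales_by_region AS "
    "SELECT region, SUM(amount) AS total FROM sales GROUP BY region"
)
totals = dict(conn.execute("SELECT region, total FROM sales_by_region"))

print(plan_before)
print(plan_after)
print(totals)
```

The trade-off worth probing in an interview is that both the index and the summary table must be maintained as data loads, so they speed up reads at the cost of slower writes and extra storage.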

5. Can you describe the differences between a logical and a physical data model?

A logical data model represents the abstract structure of the data, focusing on the relationships and rules without considering how the data will be physically stored. It includes entities, attributes, and relationships and is used to define business requirements.

In contrast, a physical data model translates the logical model into a specific database schema. It considers the technical aspects, including tables, columns, data types, indexes, and constraints. The physical model is used to implement the database in a specific database management system.

Candidates should be able to explain the importance of both models and how they transition from one to the other. They should also provide examples of their experience with creating and using these models in data warehousing projects.

6. How would you approach capacity planning for a data warehouse?

Capacity planning for a data warehouse involves estimating the storage, processing, and memory requirements to ensure optimal performance and scalability. It includes analyzing current data volumes, growth trends, and the expected workload.

Key steps include assessing data retention policies, compression techniques, and future data growth. It also involves considering the performance requirements for queries and data loads. Regularly reviewing and adjusting the capacity plan is essential to accommodate changing needs.

Look for candidates who can discuss their experience with capacity planning and who demonstrate an understanding of balancing cost, performance, and scalability in their planning process.
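The growth estimate at the heart of capacity planning is simple compound arithmetic. The sketch below assumes a constant monthly growth rate and a flat compression ratio, both simplifications, and the starting figures are invented for the example.

```python
def projected_storage_gb(current_gb, monthly_growth_rate, months, compression_ratio=1.0):
    """Project storage after compound monthly growth, divided by a compression ratio."""
    raw = current_gb * (1 + monthly_growth_rate) ** months
    return raw / compression_ratio

def months_until_full(current_gb, monthly_growth_rate, capacity_gb):
    """Count whole months until projected storage first exceeds capacity."""
    if monthly_growth_rate <= 0:
        raise ValueError("growth rate must be positive for the projection to terminate")
    months = 0
    size = current_gb
    while size <= capacity_gb:
        size *= 1 + monthly_growth_rate
        months += 1
    return months

# Hypothetical warehouse: 1 TB today, growing 5% per month, 2 TB provisioned.
one_year = projected_storage_gb(1000, 0.05, 12)
runway = months_until_full(1000, 0.05, 2000)
print(round(one_year, 1), runway)
```

With these assumed numbers the warehouse reaches roughly 1.8 TB after a year and outgrows 2 TB in month 15, which is the kind of runway figure a capacity plan should make explicit.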

7. What is the role of a data warehouse in business intelligence (BI)?

A data warehouse plays a central role in business intelligence by providing a consolidated and consistent data source for reporting and analytics. It aggregates data from various sources, enabling organizations to gain insights and make informed decisions.

In BI, a data warehouse supports complex queries, historical data analysis, and trend identification. It serves as the foundation for data visualization tools, dashboards, and reporting systems, facilitating better data-driven decision-making.

Candidates should explain how they have used data warehouses to support BI initiatives and provide examples of how BI has benefited from a well-structured data warehousing environment.

8. How do you ensure the scalability of a data warehouse?

Ensuring the scalability of a data warehouse involves designing it to handle increasing data volumes and user loads without compromising performance. Key strategies include using scalable architectures, optimizing data storage, and implementing efficient indexing and partitioning techniques.

Cloud-based solutions can offer elastic scalability, allowing resources to be dynamically adjusted based on demand. Additionally, employing distributed computing and parallel processing can enhance scalability.

Look for candidates who can discuss their experience with scalable data warehousing technologies and provide examples of how they have scaled data warehouses to meet growing organizational needs.

9. Can you explain the concept of data warehousing as a service (DWaaS)?

Data Warehousing as a Service (DWaaS) is a cloud-based service that provides data warehousing capabilities without the need for on-premises infrastructure. It offers scalable storage, processing, and management of data, allowing organizations to focus on data analysis rather than infrastructure maintenance.

DWaaS solutions typically include ETL tools, data integration services, and analytics platforms. They provide flexibility, cost-efficiency, and ease of use, making them attractive for organizations looking to leverage data warehousing without significant upfront investments.

Candidates should explain the benefits of DWaaS, such as scalability, cost savings, and reduced maintenance, and provide examples of their experience with DWaaS platforms like Amazon Redshift or Google BigQuery.

10. How would you approach troubleshooting performance issues in a data warehouse?

Troubleshooting performance issues in a data warehouse requires a systematic approach to identify and resolve bottlenecks. Key steps include analyzing query performance, checking indexing strategies, and reviewing data storage and partitioning methods.

Monitoring tools can provide insights into resource utilization, query execution times, and system logs. Identifying and addressing inefficient queries, optimizing indexes, and ensuring proper data distribution are essential for improving performance.

Candidates should demonstrate their experience with troubleshooting tools and techniques and provide examples of how they have successfully resolved performance issues in past projects.

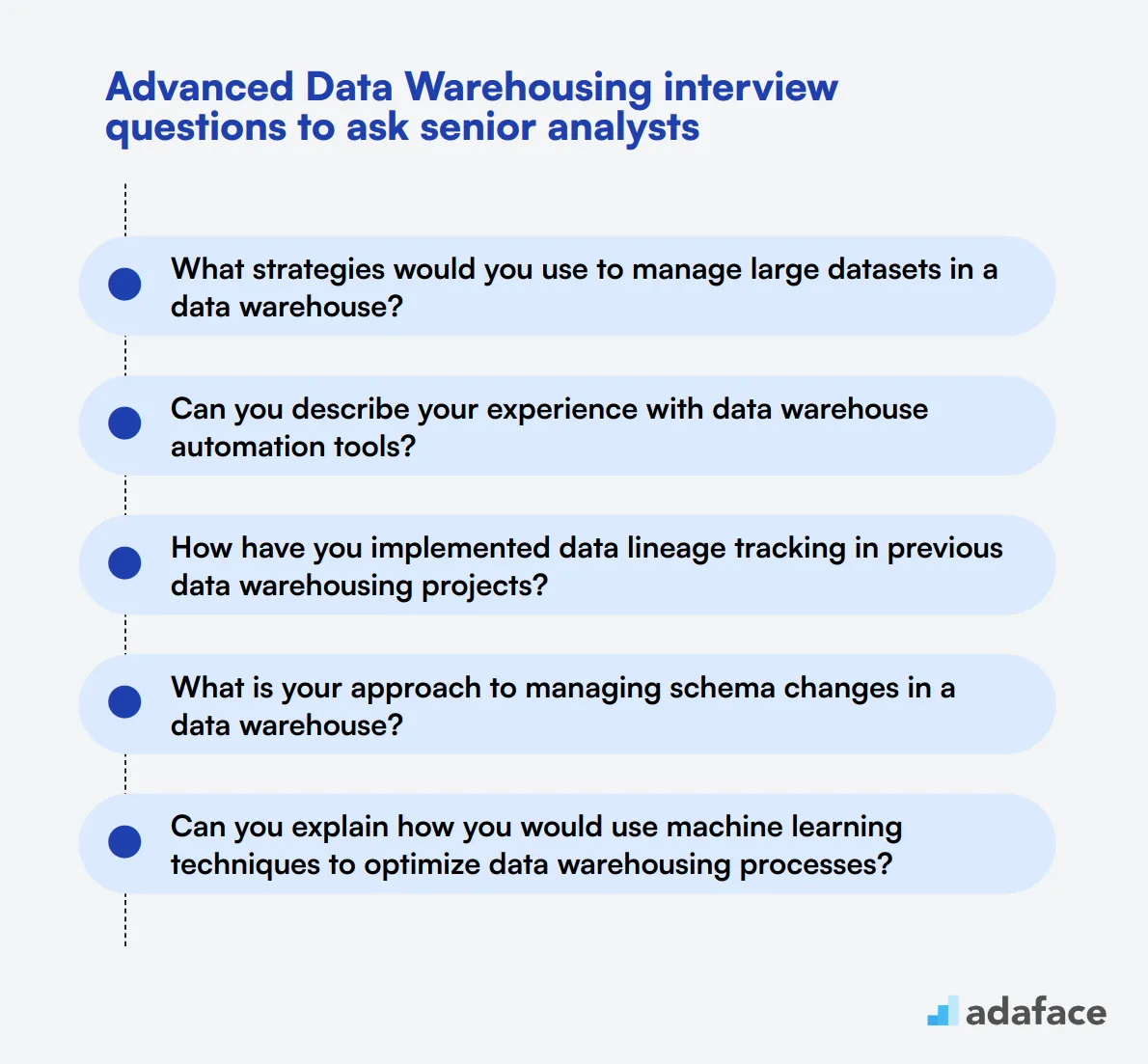

15 advanced Data Warehousing interview questions to ask senior analysts

To ensure you identify the most qualified candidates for senior analyst positions, use this list of advanced Data Warehousing interview questions. The questions are designed to examine a candidate's deep understanding and practical experience in data warehousing. For more insights into specific roles, you might also find the data architect job description useful.

- What strategies would you use to manage large datasets in a data warehouse?

- Can you describe your experience with data warehouse automation tools?

- How have you implemented data lineage tracking in previous data warehousing projects?

- What is your approach to managing schema changes in a data warehouse?

- Can you explain how you would use machine learning techniques to optimize data warehousing processes?

- Describe a time when you had to reconcile data discrepancies in a data warehouse. How did you approach it?

- How do you ensure compliance with data governance policies in a data warehouse?

- Can you discuss your experience with cloud-based data warehouse solutions?

- What methods do you use for real-time analytics in a data warehouse environment?

- Describe how you would optimize the storage and retrieval of semi-structured data in a data warehouse.

- Can you explain the role of data warehousing in supporting predictive analytics?

- How do you manage and monitor data warehouse performance over time?

- Describe your experience with data warehouse migration projects.

- What are your best practices for designing scalable data warehouses?

- How do you handle data warehouse documentation and knowledge transfer within a team?

8 Data Warehousing interview questions and answers related to data modeling

In the world of data warehousing, data modeling is a critical skill that ensures your data is structured efficiently for optimal access and analysis. Use these questions to gauge a candidate's understanding of data modeling concepts and their ability to apply them in practical scenarios.

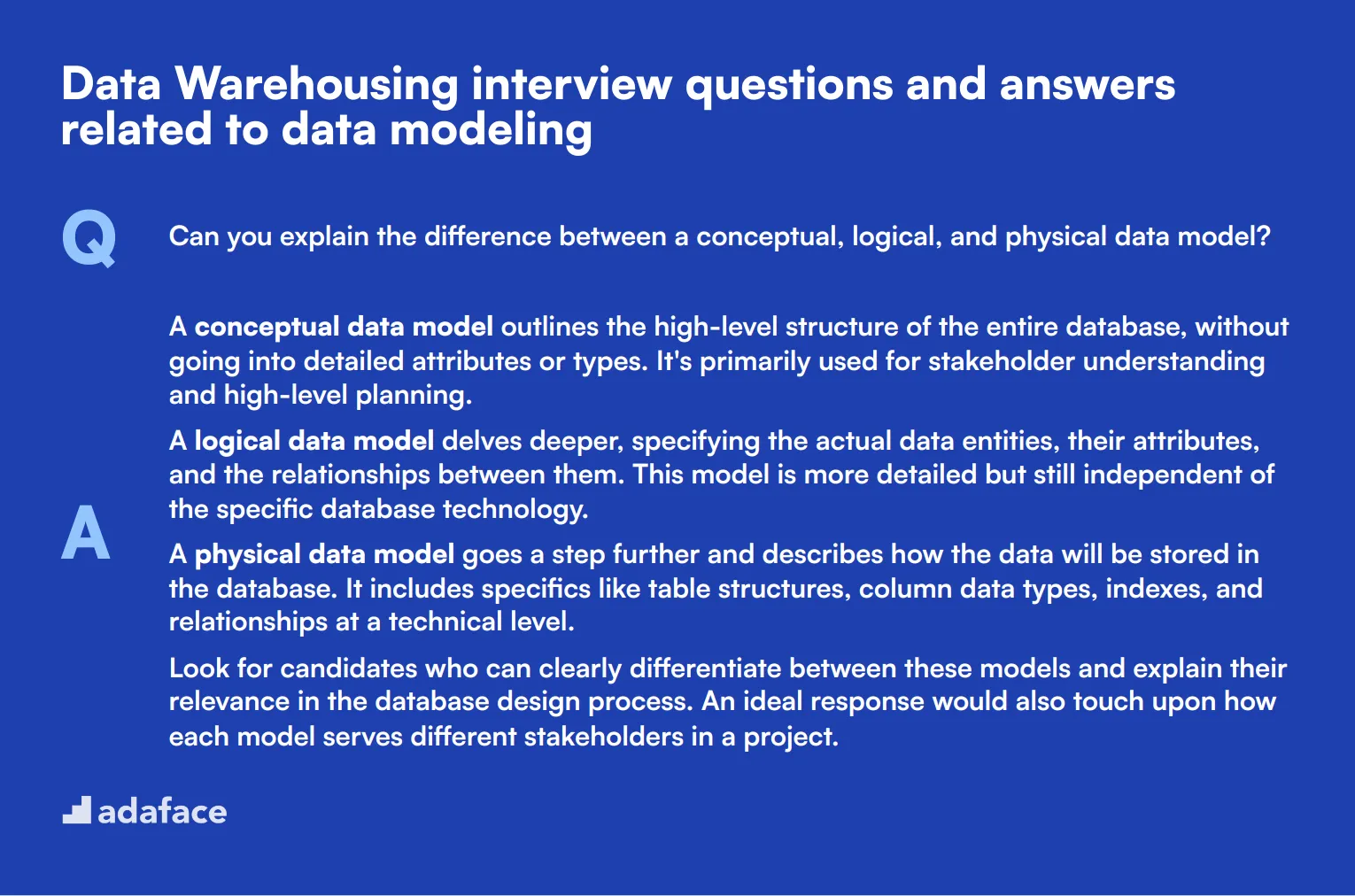

1. Can you explain the difference between a conceptual, logical, and physical data model?

A conceptual data model outlines the high-level structure of the entire database, without going into detailed attributes or types. It's primarily used for stakeholder understanding and high-level planning.

A logical data model delves deeper, specifying the actual data entities, their attributes, and the relationships between them. This model is more detailed but still independent of the specific database technology.

A physical data model goes a step further and describes how the data will be stored in the database. It includes specifics like table structures, column data types, indexes, and relationships at a technical level.

Look for candidates who can clearly differentiate between these models and explain their relevance in the database design process. An ideal response would also touch upon how each model serves different stakeholders in a project.

2. What is a snowflake schema, and how does it differ from a star schema?

A snowflake schema is an extension of the star schema where dimension tables are normalized into multiple related tables. This reduces redundancy and can make the database more organized, but it can also make queries more complex.

In contrast, a star schema consists of a central fact table surrounded by denormalized dimension tables, making it simpler and faster for queries. However, it can lead to data redundancy.

Candidates should be able to discuss the pros and cons of each schema type and provide examples of when each would be appropriate. An ideal response would also touch on performance impacts and maintenance considerations.
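The difference shows up directly in the SQL. In this sketch, the same question is answered against a star-style and a snowflake-style product dimension; all table names are assumptions for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Star: category is stored directly on the product dimension (denormalized).
CREATE TABLE star_dim_product (product_key INTEGER PRIMARY KEY, name TEXT, category TEXT);
-- Snowflake: category is moved to its own table (normalized).
CREATE TABLE snow_dim_category (category_key INTEGER PRIMARY KEY, category TEXT);
CREATE TABLE snow_dim_product (
    product_key INTEGER PRIMARY KEY,
    name TEXT,
    category_key INTEGER REFERENCES snow_dim_category(category_key)
);
CREATE TABLE fact_sales (product_key INTEGER, revenue REAL);

INSERT INTO star_dim_product VALUES (1, 'Laptop', 'Electronics'), (2, 'Phone', 'Electronics');
INSERT INTO snow_dim_category VALUES (10, 'Electronics');
INSERT INTO snow_dim_product VALUES (1, 'Laptop', 10), (2, 'Phone', 10);
INSERT INTO fact_sales VALUES (1, 1000.0), (2, 500.0);
""")

# Star schema: one join reaches the category.
star_result = conn.execute("""
    SELECT p.category, SUM(f.revenue) FROM fact_sales f
    JOIN star_dim_product p ON p.product_key = f.product_key
    GROUP BY p.category
""").fetchall()

# Snowflake schema: the same answer needs an extra join through the category table.
snowflake_result = conn.execute("""
    SELECT c.category, SUM(f.revenue) FROM fact_sales f
    JOIN snow_dim_product p ON p.product_key = f.product_key
    JOIN snow_dim_category c ON c.category_key = p.category_key
    GROUP BY c.category
""").fetchall()

print(star_result, snowflake_result)
```

The star version repeats the string `'Electronics'` on every product row, while the snowflake version stores it once and pays for that with the additional join.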

3. How do you approach designing a data model for a new data warehouse?

Designing a data model starts with understanding the business requirements. This involves identifying key entities, their attributes, and how they relate to each other based on the business processes.

Next comes a conceptual model that outlines the high-level structure, followed by a logical model that details the entities and relationships. The final step is creating a physical model to specify the actual database schema.

Look for candidates who emphasize the importance of iterative feedback and validation with stakeholders throughout the design process. An ideal response would also mention the use of tools and techniques for ensuring data integrity and performance optimization.

4. Can you explain the role of normalization in data modeling?

Normalization is the process of organizing data to reduce redundancy and improve data integrity. It involves dividing large tables into smaller, related tables and defining relationships between them.

The primary goal is to ensure that each piece of data is stored only once, which reduces the risk of anomalies and inconsistencies. Normalization typically follows a series of rules called normal forms, each addressing specific types of redundancy.

Candidates should be able to explain the trade-offs between normalization and denormalization, particularly in the context of performance and query efficiency in a data warehouse. An ideal response would demonstrate an understanding of when to apply each technique.

5. What is a data modeler's role in a data warehousing project?

A data modeler is responsible for defining the structure of the data warehouse, ensuring it meets business requirements and supports efficient data retrieval. This involves creating conceptual, logical, and physical data models.

They work closely with stakeholders to understand data needs, ensure data integrity, and optimize the database for performance. Their role also includes maintaining documentation and evolving the data model as business requirements change.

Look for candidates who can articulate the importance of collaboration with other team members, such as data architects and business analysts. An ideal response would highlight specific tools and techniques they have used in past projects.

6. How do you handle data redundancy in a data model?

Data redundancy can be minimized through normalization, which involves organizing data into multiple related tables and reducing duplicates. However, in a data warehouse, some level of redundancy might be acceptable for performance reasons.

Another approach is to use data deduplication techniques and ensure that master data management principles are followed to maintain a single source of truth.

Candidates should demonstrate an understanding of the balance between performance and storage efficiency. An ideal response would include examples of how they have managed redundancy in previous projects and any tools or methods they employed.

7. What are some common pitfalls in data modeling, and how do you avoid them?

Common pitfalls include over-normalization, which can lead to complex queries and reduced performance; under-normalization, resulting in data redundancy; and not involving stakeholders in the design process, leading to models that don't meet business needs.

To avoid these pitfalls, it's crucial to maintain a balance between normalization and performance, involve stakeholders early and often, and continuously validate the model against business requirements.

Look for candidates who can provide specific examples of how they have navigated these challenges in the past. An ideal response would also mention any best practices or frameworks they follow to ensure robust data models.

8. How do you ensure the scalability of a data model in a data warehouse?

Scalability can be ensured by designing the data model to handle increasing data volumes and user queries without significant performance degradation. This includes using efficient indexing, partitioning large tables, and optimizing query performance.

It's also important to consider future data growth and business changes during the initial design phase. Regularly updating the model and architecture to adapt to new requirements is crucial.

Candidates should demonstrate an understanding of both the technical and business aspects of scalability. An ideal response would include examples of how they have designed scalable models in the past, along with any specific techniques or tools they used.

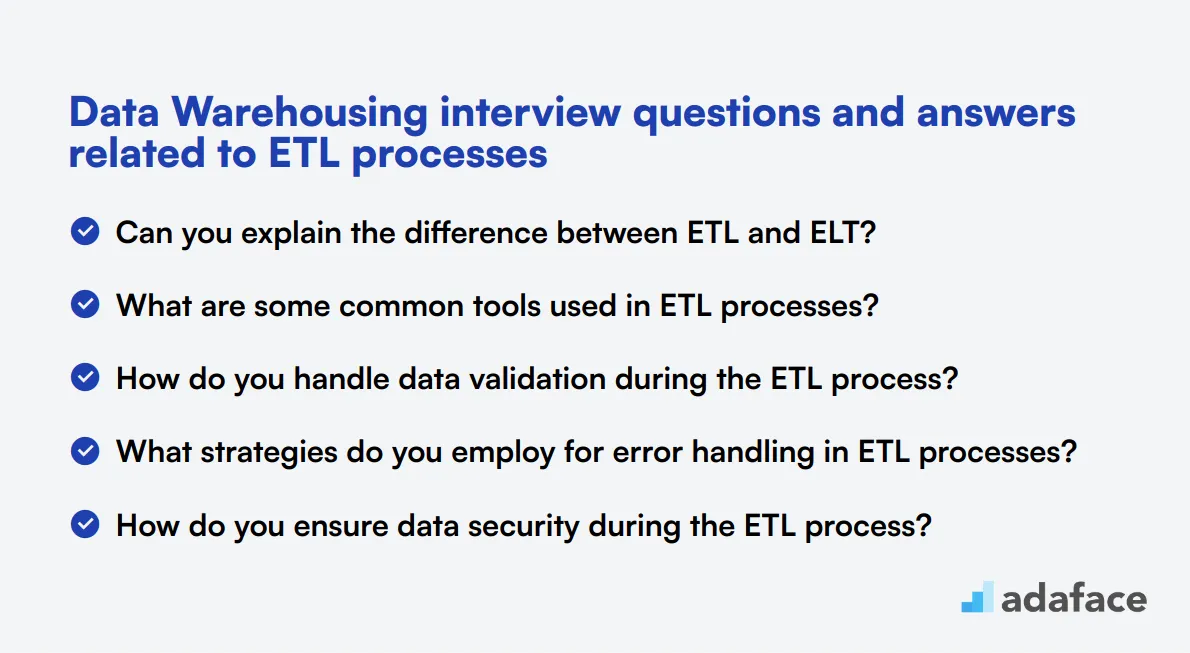

7 Data Warehousing interview questions and answers related to ETL processes

To determine whether your candidates have a firm grasp on ETL processes, ask them some of these interview questions. These queries will help you gauge their understanding of extracting, transforming, and loading data—crucial skills for any data warehousing role.

1. Can you explain the difference between ETL and ELT?

ETL stands for Extract, Transform, Load, and it involves extracting data from various sources, transforming it into a suitable format or structure, and then loading it into a data warehouse. ELT, on the other hand, stands for Extract, Load, Transform, where the data is first extracted and loaded into the data warehouse before any transformation takes place.

In ETL, the transformation occurs outside the data warehouse, whereas in ELT, it occurs within the data warehouse. This often makes ELT more suitable for large volumes of data, leveraging the power of the data warehouse's processing capabilities.

Look for candidates who understand the nuances and can articulate scenarios where one approach might be more beneficial than the other.
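The ordering difference can be shown side by side. In this sketch the ETL path cleans rows in application code before loading, while the ELT path loads raw strings into a staging table and transforms them with SQL inside the database. SQLite stands in for the warehouse, and the table names are assumptions.

```python
import sqlite3

raw_rows = [("1", " alice ", "10.50"), ("2", "BOB", "4.00")]

# ETL: transform in application code, then load the finished rows.
etl = sqlite3.connect(":memory:")
etl.execute("CREATE TABLE orders (order_id INTEGER, customer TEXT, amount REAL)")
etl.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(int(i), name.strip().lower(), float(amt)) for i, name, amt in raw_rows],
)

# ELT: load the raw strings first, then transform with SQL inside the warehouse.
elt = sqlite3.connect(":memory:")
elt.execute("CREATE TABLE raw_orders (order_id TEXT, customer TEXT, amount TEXT)")
elt.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", raw_rows)
elt.execute("""
    CREATE TABLE orders AS
    SELECT CAST(order_id AS INTEGER) AS order_id,
           LOWER(TRIM(customer)) AS customer,
           CAST(amount AS REAL) AS amount
    FROM raw_orders
""")

query = "SELECT order_id, customer, amount FROM orders ORDER BY order_id"
etl_rows = etl.execute(query).fetchall()
elt_rows = elt.execute(query).fetchall()
print(etl_rows == elt_rows)
```

Both paths end with the same `orders` table. What differs is where the compute happens and whether the untouched raw data remains available in the warehouse for later reprocessing.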

2. What are some common tools used in ETL processes?

Common ETL tools include Informatica PowerCenter, Talend, Apache NiFi, Microsoft SQL Server Integration Services (SSIS), and IBM InfoSphere DataStage. These tools help in automating the ETL processes, making data integration more efficient and reliable.

Candidates should also mention open-source options like Apache Camel or more specialized ones like Matillion for cloud-based ETL processes. The choice of tool often depends on the specific requirements of the project, such as data volume, complexity, and the existing tech stack.

Strong candidates will not only list tools but also provide insights into their strengths and weaknesses, helping you identify their practical experience with these technologies.

3. How do you handle data validation during the ETL process?

Data validation in ETL involves ensuring that the data being extracted, transformed, and loaded meets quality standards. This can be done through a variety of checks, such as verifying data formats, checking for missing values, and ensuring data consistency across sources.

Automated data validation tools and scripts are often used to streamline this process. For instance, using checksum or hash functions can help verify data integrity during transfer. Additionally, implementing validation rules within the ETL tool can catch errors early in the process.

Look for candidates who mention specific techniques and tools they have used for data validation, as well as their approach to handling errors when they are detected.
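The hash-based integrity check mentioned above might be sketched like this. The row layout and helper names are assumptions, and the approach shown (hashing sorted per-row fingerprints with SHA-256) is one of several reasonable designs.

```python
import hashlib

def row_fingerprint(row):
    """Hash one row's values in a stable, delimiter-separated form.

    Note: None is rendered as an empty string, so this sketch cannot
    distinguish NULL from ''. A production check would encode them differently.
    """
    joined = "\x1f".join("" if v is None else str(v) for v in row)
    return hashlib.sha256(joined.encode("utf-8")).hexdigest()

def dataset_checksum(rows):
    """Order-independent checksum: hash the sorted row fingerprints."""
    digest = hashlib.sha256()
    for fingerprint in sorted(row_fingerprint(r) for r in rows):
        digest.update(fingerprint.encode("ascii"))
    return digest.hexdigest()

source = [(1, "alice", 10.5), (2, "bob", 4.0)]
target_ok = [(2, "bob", 4.0), (1, "alice", 10.5)]     # same rows, different order
target_bad = [(1, "alice", 10.5), (2, "bob", 40.0)]   # one corrupted value

matches = dataset_checksum(source) == dataset_checksum(target_ok)
corrupted = dataset_checksum(source) != dataset_checksum(target_bad)
print(matches, corrupted)
```

Comparing per-row fingerprints instead of the single dataset checksum would additionally pinpoint which rows differ.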

4. What strategies do you employ for error handling in ETL processes?

Effective error handling strategies in ETL involve logging errors, identifying the root cause, and implementing corrective actions. This can include retry mechanisms, fallback procedures, and detailed error logging to capture the context of the error.

Some ETL tools have built-in error handling capabilities, such as Informatica's error handling transformations or SSIS's error output configurations. These can be used to redirect erroneous data to error tables or files for further investigation.

Candidates should demonstrate a systematic approach to error handling and provide examples of how they have resolved specific issues in past projects.
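Independent of any particular tool, the retry, logging, and error-redirection ideas can be sketched in plain Python. The `with_retry` and `load_rows` helpers and the flaky source are hypothetical, and the in-memory `rejected` list stands in for an error table.

```python
import logging
import time

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("etl")

def with_retry(func, attempts=3, delay_seconds=0.0):
    """Call func, retrying on any exception up to `attempts` times."""
    for attempt in range(1, attempts + 1):
        try:
            return func()
        except Exception as exc:
            log.warning("attempt %d/%d failed: %s", attempt, attempts, exc)
            if attempt == attempts:
                raise  # out of retries: surface the original error
            time.sleep(delay_seconds)

def load_rows(rows):
    """Split rows into loaded and rejected; rejected rows keep their error context."""
    loaded, rejected = [], []
    for row in rows:
        try:
            loaded.append({"id": int(row["id"]), "amount": float(row["amount"])})
        except (KeyError, ValueError) as exc:
            rejected.append({"row": row, "error": repr(exc)})
    return loaded, rejected

calls = {"count": 0}

def flaky_extract():
    """Hypothetical source that fails once before succeeding."""
    calls["count"] += 1
    if calls["count"] < 2:
        raise ConnectionError("source temporarily unavailable")
    return [{"id": "1", "amount": "9.99"}, {"id": "2", "amount": "oops"}]

loaded, rejected = load_rows(with_retry(flaky_extract))
print(loaded)
print(rejected)
```

The sketch separates two kinds of failure: transient ones (the connection error) are retried, while bad data (the unparseable amount) is set aside with context so the rest of the batch can still load.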

5. How do you ensure data security during the ETL process?

Ensuring data security during ETL involves encrypting data during transfer, implementing access controls, and following best practices for data handling. This includes using secure protocols like HTTPS or SFTP for data transfer and encrypting sensitive data fields.

Access controls should be enforced at multiple layers, including the ETL tool, source systems, and the data warehouse. Role-based access control (RBAC) can help ensure that only authorized users can access or modify the data.

Candidates should mention specific measures they have taken to secure data and any compliance standards they have adhered to, such as GDPR or HIPAA.

6. What is your approach to optimizing ETL performance?

Optimizing ETL performance involves several techniques, including efficient data extraction methods, parallel processing, and incremental loading. Reducing the amount of data being processed by filtering out unnecessary records early can also significantly improve performance.

Using database-specific optimizations, such as indexing and partitioning, can enhance the performance of the transformation and loading phases. Additionally, leveraging in-memory processing and distributed computing frameworks like Apache Spark can further boost performance.

Look for candidates who can discuss specific optimizations they have implemented and the impact these had on ETL performance.
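
Incremental loading, mentioned above, is often implemented with a "high-water mark": the pipeline remembers the latest change timestamp it has loaded and extracts only newer rows on the next run. The sketch below assumes a hypothetical `orders` source table with an `updated_at` column stored as ISO-8601 text, and uses two in-memory SQLite databases as stand-ins for the source system and the warehouse.

```python
import sqlite3

# Stand-in source system.
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL, updated_at TEXT)")
source.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [
        (1, 10.0, "2024-01-01T08:00:00"),
        (2, 20.0, "2024-01-02T09:30:00"),
        (3, 30.0, "2024-01-03T11:15:00"),
    ],
)

# Stand-in warehouse with a small table that stores the watermark per source table.
warehouse = sqlite3.connect(":memory:")
warehouse.execute("CREATE TABLE fact_orders (id INTEGER PRIMARY KEY, amount REAL, updated_at TEXT)")
warehouse.execute("CREATE TABLE etl_watermark (table_name TEXT PRIMARY KEY, last_loaded TEXT)")


def incremental_load(source, warehouse):
    """Copy only rows changed since the stored watermark; return how many were loaded."""
    row = warehouse.execute(
        "SELECT last_loaded FROM etl_watermark WHERE table_name = 'orders'"
    ).fetchone()
    watermark = row[0] if row else ""  # empty string sorts before any ISO timestamp

    changed = source.execute(
        "SELECT id, amount, updated_at FROM orders WHERE updated_at > ? ORDER BY updated_at",
        (watermark,),
    ).fetchall()
    if not changed:
        return 0

    # Upsert changed rows, then advance the watermark to the newest timestamp seen.
    warehouse.executemany("INSERT OR REPLACE INTO fact_orders VALUES (?, ?, ?)", changed)
    warehouse.execute(
        "INSERT OR REPLACE INTO etl_watermark VALUES ('orders', ?)", (changed[-1][2],)
    )
    warehouse.commit()
    return len(changed)


first_run = incremental_load(source, warehouse)  # initial load picks up every row

# Simulate activity in the source: one update and one new row.
source.execute("UPDATE orders SET amount = 25.0, updated_at = '2024-01-04T10:00:00' WHERE id = 2")
source.execute("INSERT INTO orders VALUES (4, 40.0, '2024-01-04T12:00:00')")

second_run = incremental_load(source, warehouse)  # only the two changed rows
print(first_run, second_run)
```

This approach misses hard deletes in the source and depends on `updated_at` being maintained reliably, which is a useful follow-up topic when a candidate proposes it.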

7. How do you manage ETL process scheduling and monitoring?

Scheduling and monitoring ETL processes typically involve using the scheduling features built into ETL tools or external schedulers like Apache Airflow or cron jobs. Effective monitoring includes setting up alerts for job failures and performance issues.

ETL tools often provide dashboards and logs to track the status of ETL jobs. Implementing automated notifications and retry mechanisms can help quickly address any issues that arise.

Candidates should demonstrate familiarity with scheduling tools and techniques for monitoring ETL processes, along with examples of how they have maintained ETL pipelines in previous roles.

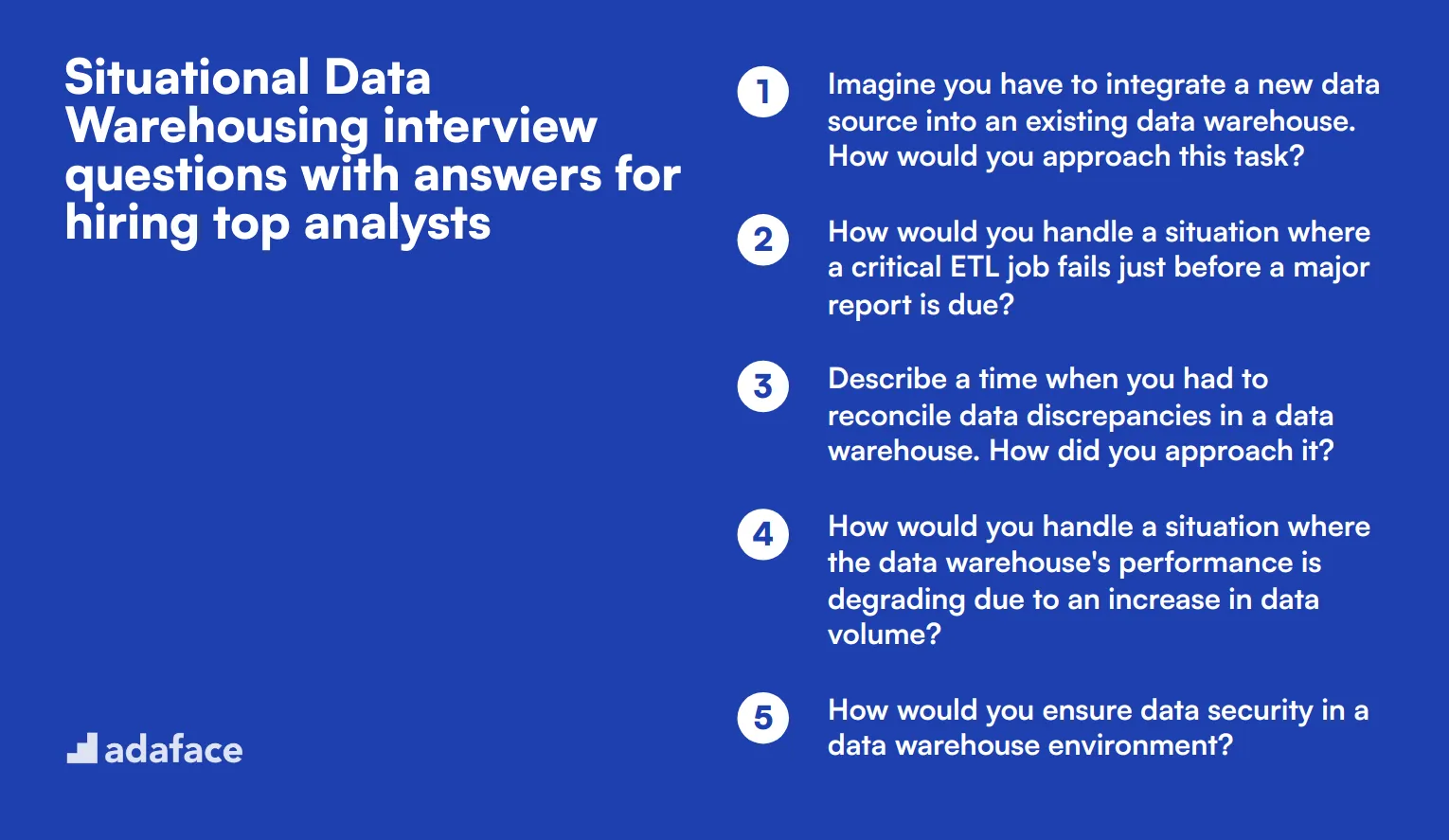

8 situational Data Warehousing interview questions with answers for hiring top analysts

To determine whether your candidates have the situational awareness and problem-solving skills needed for effective data warehousing, you can ask them these 8 specific interview questions. These questions will help you gauge how candidates handle real-world scenarios and challenges that may arise in your organization.

1. Imagine you have to integrate a new data source into an existing data warehouse. How would you approach this task?

First, I would conduct a thorough analysis of the new data source to understand its structure, quality, and relevance to the existing data warehouse. This involves identifying the key data elements and any potential discrepancies or conflicts with the current data model.

Next, I would establish a data integration plan that includes data mapping, transformation rules, and a clear ETL process. This plan would also account for data validation and testing to ensure that the new data integrates seamlessly without compromising the quality or integrity of the existing data.

Look for candidates who emphasize the importance of thorough analysis, meticulous planning, and rigorous testing in their approach. Follow up by asking about specific tools or methods they would use to handle data integration.

2. How would you handle a situation where a critical ETL job fails just before a major report is due?

In such a scenario, my first step would be to quickly diagnose the root cause of the failure—whether it's due to a data issue, system error, or performance bottleneck. I would check the logs and error messages to pinpoint the exact problem.

Once the issue is identified, I would either fix it immediately if it's a minor issue or implement a workaround to ensure the data needed for the report is available. If necessary, I would communicate with stakeholders to manage expectations and provide a revised timeline for the report delivery.

An ideal candidate will demonstrate a sense of urgency, effective problem-solving skills, and clear communication abilities. They should also highlight their experience with using monitoring and alerting tools to minimize the impact of such failures.

3. Describe a time when you had to reconcile data discrepancies in a data warehouse. How did you approach it?

When faced with data discrepancies, my first step is to identify the source and nature of the discrepancies. This involves comparing data across different systems or data sources to understand where and why the inconsistencies occur.

Next, I would implement a reconciliation process that includes data validation, correction, and documentation. This might involve writing scripts to automate the comparison and correction process or manually updating the data based on verified sources.

Recruiters should look for candidates who emphasize a systematic and thorough approach to data reconciliation. Follow up by asking about specific tools or techniques they have used to streamline the process.
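
A scripted comparison of the kind described above might look like the following minimal sketch. The `reconcile` function, the `id` key, and the sample rows are all hypothetical; in practice the two row sets would come from queries against the source system and the warehouse.

```python
def reconcile(source_rows, warehouse_rows, key="id"):
    """Compare two row sets by key and report what differs."""
    src = {row[key]: row for row in source_rows}
    wh = {row[key]: row for row in warehouse_rows}
    return {
        # Present in the source but never loaded.
        "missing_in_warehouse": sorted(src.keys() - wh.keys()),
        # Present in the warehouse with no matching source row.
        "unexpected_in_warehouse": sorted(wh.keys() - src.keys()),
        # Same key on both sides, but at least one column value differs.
        "mismatched": sorted(k for k in src.keys() & wh.keys() if src[k] != wh[k]),
    }


source_rows = [
    {"id": 1, "amount": 100.0},
    {"id": 2, "amount": 250.0},
    {"id": 3, "amount": 75.0},
]
warehouse_rows = [
    {"id": 1, "amount": 100.0},
    {"id": 2, "amount": 205.0},  # transposed digits
    {"id": 4, "amount": 60.0},   # no matching source row
]

report = reconcile(source_rows, warehouse_rows)
print(report)
```

For large tables, comparing row counts and per-partition checksums first and drilling into row-level differences only where they disagree is usually cheaper than a full comparison.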

4. How would you handle a situation where the data warehouse's performance is degrading due to an increase in data volume?

To address performance degradation, I would start by analyzing the current workload and identifying the bottlenecks. This could involve reviewing query performance, indexing strategies, and hardware utilization.

Based on the analysis, I would implement performance optimization techniques such as partitioning large tables, optimizing queries, and adding or updating indexes. Additionally, I might consider scaling the hardware resources or exploring cloud-based solutions to handle the increased data volume more efficiently.

Candidates should demonstrate a proactive approach to performance management and a solid understanding of various optimization techniques. Look for specific examples of how they have successfully improved data warehouse performance in the past.
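
To make the "identify the bottleneck, then add an index" step concrete, the sketch below inspects a query plan before and after creating an index. It uses SQLite purely as a stand-in, with a hypothetical `fact_sales` table; plan output and tuning options differ between engines, and many columnar warehouse platforms rely on partitioning or clustering rather than B-tree indexes.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE fact_sales (sale_id INTEGER PRIMARY KEY, sale_date TEXT, amount REAL)"
)
# 28 days of data, 50 sales per day, each worth the day number.
conn.executemany(
    "INSERT INTO fact_sales (sale_date, amount) VALUES (?, ?)",
    [(f"2024-01-{day:02d}", float(day)) for day in range(1, 29) for _ in range(50)],
)

QUERY = "SELECT SUM(amount) FROM fact_sales WHERE sale_date = ?"


def query_plan(conn, sql, params):
    """Return SQLite's plan description for a query as a single string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[3] for row in rows)  # the fourth column holds the detail text


plan_before = query_plan(conn, QUERY, ("2024-01-15",))  # full table scan
conn.execute("CREATE INDEX idx_fact_sales_date ON fact_sales (sale_date)")
plan_after = query_plan(conn, QUERY, ("2024-01-15",))   # index search

total = conn.execute(QUERY, ("2024-01-15",)).fetchone()[0]
print(plan_before)
print(plan_after)
print(total)
```

The query result is identical in both cases; only the access path changes, which is why comparing plans is a useful first check before reaching for more hardware.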

5. How would you ensure data security in a data warehouse environment?

Ensuring data security starts with implementing robust access controls to restrict data access to authorized users only. This involves setting up user roles and permissions and regularly reviewing access logs.

Additionally, I would ensure that data is encrypted both at rest and in transit. This includes using encryption protocols and secure data transfer methods. Regular security audits and vulnerability assessments are also critical to identify and mitigate potential security risks.

An ideal candidate will highlight their experience with various data security measures and emphasize the importance of continuous monitoring and auditing. Follow up by asking about specific security tools and protocols they have used.

6. Can you describe a challenging data migration project you worked on and how you managed it?

In a challenging data migration project, my first step was to develop a comprehensive migration plan that included data mapping, migration scripts, and a detailed timeline. This plan also accounted for data validation and testing to ensure accuracy and completeness.

Throughout the project, I maintained clear communication with all stakeholders to manage expectations and provide regular updates on progress. I also set up a rollback plan to address any issues that might arise during the migration process.

Look for candidates who demonstrate strong project management skills, attention to detail, and effective communication. Follow up by asking about specific challenges they faced and how they overcame them.

7. How do you prioritize tasks when managing multiple data warehousing projects simultaneously?

When managing multiple projects, I prioritize tasks based on their impact, urgency, and dependencies. I start by creating a detailed project plan for each project, outlining the key milestones, deliverables, and deadlines.

I use project management tools to track progress and ensure that all tasks are aligned with the overall project goals. Regular check-ins with the team and stakeholders help me stay on top of any potential issues and make adjustments as needed to keep everything on track.

Candidates should demonstrate strong organizational and time management skills. Follow up by asking about specific tools or methods they use to manage multiple projects effectively.

8. How would you handle a situation where the business requirements for a data warehouse project change midway through the implementation?

In such a situation, my first step would be to thoroughly understand the new requirements and assess their impact on the existing project plan. This involves engaging with stakeholders to clarify the changes and their implications.

Next, I would update the project plan to incorporate the new requirements, including revising timelines, resources, and deliverables. Clear communication with the team and stakeholders is crucial to ensure everyone is aligned and aware of the changes.

Look for candidates who emphasize flexibility, strong communication skills, and the ability to manage change effectively. Follow up by asking about specific instances where they successfully managed changing requirements.

Which Data Warehousing skills should you evaluate during the interview phase?

While it's impossible to assess every aspect of a candidate's Data Warehousing expertise in a single interview, focusing on core skills is crucial. These key areas will help you evaluate a candidate's proficiency and potential fit for your Data Warehousing team.

SQL

SQL is the backbone of Data Warehousing. It's essential for querying, manipulating, and managing large datasets within a data warehouse environment.

To evaluate SQL skills, consider using an SQL online test that includes relevant multiple-choice questions. This can help filter candidates based on their SQL proficiency.

During the interview, ask targeted questions to assess the candidate's SQL expertise. Here's an example:

Can you explain the difference between a LEFT JOIN and an INNER JOIN, and provide a scenario where you'd use each?

Look for a clear explanation of how these joins work and practical examples of their application in data warehousing scenarios. This will reveal the candidate's understanding of SQL fundamentals and their ability to apply them in real-world situations.
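
For reference when judging answers, here is a small sketch of the difference, run against an in-memory SQLite database. The `dim_customer` and `fact_orders` tables and their contents are made up for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE dim_customer (customer_id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE fact_orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
INSERT INTO dim_customer VALUES (1, 'Ada'), (2, 'Grace'), (3, 'Linus');
INSERT INTO fact_orders VALUES (10, 1, 50.0), (11, 1, 25.0), (12, 2, 40.0);
""")

# INNER JOIN keeps only customers that have at least one matching order.
inner_rows = conn.execute("""
    SELECT c.name, SUM(o.amount)
    FROM dim_customer AS c
    INNER JOIN fact_orders AS o ON o.customer_id = c.customer_id
    GROUP BY c.customer_id, c.name
    ORDER BY c.customer_id
""").fetchall()

# LEFT JOIN keeps every customer; those without orders get NULL, shown here as 0.
left_rows = conn.execute("""
    SELECT c.name, COALESCE(SUM(o.amount), 0)
    FROM dim_customer AS c
    LEFT JOIN fact_orders AS o ON o.customer_id = c.customer_id
    GROUP BY c.customer_id, c.name
    ORDER BY c.customer_id
""").fetchall()

print(inner_rows)  # Linus is absent
print(left_rows)   # Linus appears with a total of 0
```

A strong answer typically ties this to reporting: an INNER JOIN suits "revenue per active customer", while a LEFT JOIN suits "all customers, including those who have not ordered".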

Data Modeling

Data modeling is crucial for designing efficient and scalable data warehouse structures. It involves creating logical and physical representations of data to support business requirements.

To assess data modeling skills, you can use a data modeling test that covers key concepts and best practices.

During the interview, ask a question that tests the candidate's understanding of data modeling principles:

Describe the differences between star schema and snowflake schema in data warehouse design. When would you choose one over the other?

Look for a comprehensive explanation of both schemas, including their structure, advantages, and disadvantages. The candidate should demonstrate an understanding of when each schema is most appropriate based on specific business needs and data complexity.
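
The structural difference can be sketched with a toy example. Below, the same product dimension is modeled both ways in an in-memory SQLite database; all table names, columns, and values are hypothetical. The star version stores the category name directly on the product dimension, while the snowflake version normalizes it into a separate table.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Star schema: one denormalized product dimension.
CREATE TABLE star_dim_product (
    product_id INTEGER PRIMARY KEY,
    product_name TEXT,
    category_name TEXT
);

-- Snowflake schema: category is normalized into its own table.
CREATE TABLE snow_dim_category (category_id INTEGER PRIMARY KEY, category_name TEXT);
CREATE TABLE snow_dim_product (
    product_id INTEGER PRIMARY KEY,
    product_name TEXT,
    category_id INTEGER REFERENCES snow_dim_category (category_id)
);

-- The fact table is the same in both designs.
CREATE TABLE fact_sales (sale_id INTEGER PRIMARY KEY, product_id INTEGER, amount REAL);

INSERT INTO star_dim_product VALUES
    (1, 'Laptop', 'Electronics'), (2, 'Phone', 'Electronics'), (3, 'Desk', 'Furniture');
INSERT INTO snow_dim_category VALUES (1, 'Electronics'), (2, 'Furniture');
INSERT INTO snow_dim_product VALUES (1, 'Laptop', 1), (2, 'Phone', 1), (3, 'Desk', 2);
INSERT INTO fact_sales VALUES (1, 1, 1000.0), (2, 2, 600.0), (3, 3, 250.0);
""")

# Star: a single join reaches the category name.
star_totals = conn.execute("""
    SELECT p.category_name, SUM(f.amount)
    FROM fact_sales AS f
    JOIN star_dim_product AS p ON p.product_id = f.product_id
    GROUP BY p.category_name
    ORDER BY p.category_name
""").fetchall()

# Snowflake: the same question needs an extra join through the category table.
snowflake_totals = conn.execute("""
    SELECT c.category_name, SUM(f.amount)
    FROM fact_sales AS f
    JOIN snow_dim_product AS p ON p.product_id = f.product_id
    JOIN snow_dim_category AS c ON c.category_id = p.category_id
    GROUP BY c.category_name
    ORDER BY c.category_name
""").fetchall()

print(star_totals)
print(snowflake_totals)
```

Both queries return the same totals. The trade-off a candidate should articulate is simpler, fewer-join queries in the star design versus less redundancy and easier dimension maintenance in the snowflake design.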

ETL Processes

ETL (Extract, Transform, Load) processes are fundamental to data warehousing. They involve moving data from various sources into the warehouse while ensuring data quality and consistency.

To evaluate ETL skills, consider using an ETL online test that covers various aspects of the ETL process.

During the interview, ask a question that assesses the candidate's practical experience with ETL:

Describe a challenging ETL issue you've encountered and how you resolved it. What tools or techniques did you use?

Look for a detailed explanation of the problem, the approach taken to solve it, and the specific tools or techniques used. This will reveal the candidate's problem-solving skills and hands-on experience with ETL processes in real-world scenarios.

Tips for Effective Data Warehousing Interviews

Before you start applying what you've learned, here are some tips to make your Data Warehousing interviews more effective and insightful.

1. Incorporate Skills Tests in Your Screening Process

Skills tests can provide objective data on a candidate's abilities before the interview stage. They help filter out unqualified candidates and allow you to focus on the most promising ones.

For Data Warehousing roles, consider using tests that evaluate SQL skills, data modeling knowledge, and understanding of ETL processes. You might also want to assess their familiarity with specific tools like Teradata or Informatica.

By using these tests, you can create a shortlist of candidates who have demonstrated the technical skills required for the role. This approach saves time and ensures that your interviews are spent discussing more complex topics and assessing cultural fit.

2. Prepare a Balanced Set of Interview Questions

Time is limited during interviews, so it's crucial to choose questions that cover key aspects of Data Warehousing. Aim for a mix of technical, problem-solving, and experience-based questions.

Include questions about data modeling, ETL processes, and database optimization. Also, consider adding questions about business intelligence to assess their understanding of how data warehouses support decision-making.

Don't forget to assess soft skills like communication and teamwork, which are important for collaborating with stakeholders and other team members.

3. Master the Art of Follow-Up Questions

Prepared questions are a good start, but follow-up questions can reveal a candidate's true depth of knowledge and experience. They help you distinguish between candidates who have memorized answers and those who truly understand the concepts.

For example, after asking about data modeling techniques, you might follow up with a question about how they've handled a specific challenge in a past project. This approach allows you to assess their problem-solving skills and real-world application of knowledge.

Use Data Warehousing interview questions and skills tests to hire talented analysts

If you're looking to hire someone with Data Warehousing skills, you need to ensure they possess the necessary expertise. The best way to do this is by using skill tests such as our Data Warehouse Online Test or Data Modeling Test.

By using these tests, you can shortlist the most qualified applicants and invite them for interviews. To get started, you can sign up or explore our online assessment platform for more details.

Data Warehousing Interview Questions FAQs

Key skills include proficiency in SQL, experience with ETL processes, data modeling, and familiarity with data warehousing tools.

Ask questions about their previous data modeling projects, the tools they used, and their approach to solving complex data challenges.

Common ETL tools include Informatica, Talend, Microsoft SSIS, and Apache NiFi.

Situational questions reveal a candidate's problem-solving abilities, their approach to real-world scenarios, and how they handle pressure.

Combining technical and situational questions provides a holistic view of a candidate’s skills, experience, and theoretical knowledge.

Focus on advanced technical skills, leadership capabilities, project management experience, and their ability to mentor junior team members.
