Data mining is a critical skill for many roles in today's data-driven business world. As a recruiter or hiring manager, having a go-to list of targeted interview questions can help you effectively evaluate candidates' data mining expertise and find the right fit for your team.

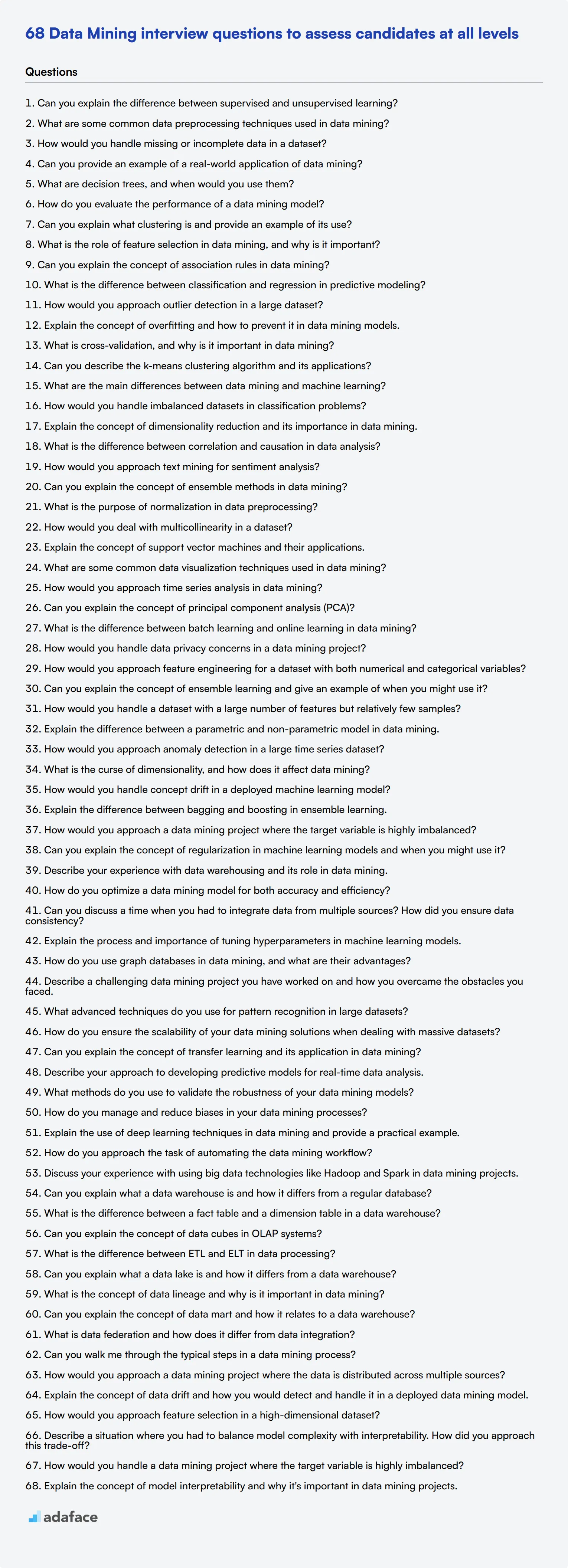

This post provides a comprehensive set of data mining interview questions, organized by experience level and topic area. From general concepts for junior analysts to advanced techniques for senior roles, you'll find questions to assess candidates at every stage of their data mining career.

Use these questions to gain deeper insights into applicants' skills and thought processes during interviews. For an even more thorough evaluation, consider incorporating a data mining skills assessment as part of your screening process.

8 general Data Mining interview questions and answers to assess applicants

To effectively assess whether your candidates possess a solid understanding of data mining concepts and can apply them in real-world scenarios, use these carefully curated interview questions. These questions are designed to gauge both their theoretical knowledge and practical skills in data mining.

1. Can you explain the difference between supervised and unsupervised learning?

Supervised learning involves training a model on labeled data, which means the data includes the input-output pairs. The model learns to predict the output from the input data based on this training.

Unsupervised learning, on the other hand, deals with unlabeled data. The model tries to identify patterns and relationships in the data without any specific output variable to guide it. Examples include clustering and association rule mining.

Look for candidates who can clearly articulate these differences and provide examples of algorithms used in each type. Follow up by asking for real-world applications to ensure they can contextualize the concepts.

2. What are some common data preprocessing techniques used in data mining?

Data preprocessing is crucial to prepare raw data for analysis. Common techniques include data cleaning, which involves handling missing values and outliers; data normalization or scaling, which ensures different data features are on a similar scale; and data transformation, which includes encoding categorical variables and reducing dimensionality through methods like PCA (Principal Component Analysis).

Understanding these techniques is essential for effective data mining, as they directly impact the quality of the results. Candidates should demonstrate familiarity with these methods and their importance in ensuring the accuracy and reliability of data mining outputs.

3. How would you handle missing or incomplete data in a dataset?

Handling missing data can be approached in several ways, depending on the context and the extent of the missing values. One option is to remove rows or columns with missing values, which is feasible when the data loss is minimal. Another is to impute missing values, either with simple statistics such as the mean, median, or mode, or with more sophisticated techniques like K-Nearest Neighbors (KNN) imputation.

Candidates should also be aware of the implications of each method on the dataset's integrity and the subsequent analysis. An ideal response would discuss the trade-offs involved and the importance of domain knowledge in making the best decision.
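As a reference point for this answer, here is a minimal sketch of mean and median imputation using only the Python standard library. The `impute` helper and the `ages` column are hypothetical, made up for illustration; a candidate would more likely reach for a data-frame library in practice.

```python
from statistics import mean, median

def impute(values, strategy="mean"):
    """Replace None entries with the mean or median of the observed values."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("cannot impute a column with no observed values")
    fill = mean(observed) if strategy == "mean" else median(observed)
    return [fill if v is None else v for v in values]

# Hypothetical column with two gaps and one extreme value (100).
ages = [25, None, 31, 40, None, 100]

mean_filled = impute(ages, "mean")      # gaps filled with 49
median_filled = impute(ages, "median")  # gaps filled with 35.5
```

The gap between the two fill values (49 versus 35.5) shows the trade-off a good answer should mention: the mean is pulled toward outliers, while the median is more robust.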

4. Can you provide an example of a real-world application of data mining?

One common application of data mining is in the retail industry for market basket analysis. This technique helps retailers understand the purchase behavior of customers by identifying associations between different products. For example, if customers often buy bread and butter together, stores can place these items close to each other to increase sales.

Other examples include fraud detection in banking, where data mining techniques are used to identify unusual patterns that may indicate fraudulent activity, and personalized marketing, where customer data is analyzed to tailor marketing campaigns to individual preferences.

Look for candidates who can provide clear, real-world examples and articulate the benefits and challenges of these applications. This demonstrates their ability to apply theoretical knowledge to practical situations.
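To make the bread-and-butter example concrete, the sketch below computes the three standard association-rule measures (support, confidence, and lift) over a handful of made-up transactions. The baskets and helper names are assumptions for illustration only.

```python
# Hypothetical shopping baskets; each set is one transaction.
transactions = [
    {"bread", "butter", "milk"},
    {"bread", "butter"},
    {"bread", "jam"},
    {"milk", "eggs"},
    {"bread", "butter", "eggs"},
]

def count(itemset):
    """Number of transactions that contain every item in itemset."""
    return sum(itemset <= t for t in transactions)

def support(itemset):
    """Fraction of all transactions that contain the itemset."""
    return count(itemset) / len(transactions)

def confidence(antecedent, consequent):
    """Of the baskets containing the antecedent, the share that also contain the consequent."""
    return count(antecedent | consequent) / count(antecedent)

def lift(antecedent, consequent):
    """Confidence relative to how common the consequent is on its own."""
    return confidence(antecedent, consequent) / support(consequent)

rule_support = support({"bread", "butter"})          # 3 of 5 baskets
rule_confidence = confidence({"bread"}, {"butter"})  # 3 of the 4 bread baskets
rule_lift = lift({"bread"}, {"butter"})              # above 1 means a positive association
```

A lift above 1 suggests the two items are bought together more often than chance alone would predict, which is the kind of signal a retailer would act on.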

5. What are decision trees, and when would you use them?

Decision trees are a type of predictive modeling algorithm used for classification and regression tasks. They work by splitting the data into subsets based on the value of input features, creating a tree-like structure of decisions. Each internal node represents a test on a feature, each branch represents an outcome of that test, and each leaf represents a predicted outcome.

Decision trees are particularly useful when you need a model that is easy to interpret and visualize. They handle both numerical and categorical data and can capture non-linear relationships. However, they can be prone to overfitting if not properly pruned.

An ideal candidate should demonstrate an understanding of both the strengths and limitations of decision trees and discuss scenarios where they are particularly effective, such as in customer segmentation or risk assessment.
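For interviewers who want to probe how a tree chooses its splits, the following sketch finds the best single threshold on one feature using Gini impurity, one common splitting criterion. The `income` and `defaulted` data are invented, and a real tree would repeat this search recursively across all features.

```python
def gini(labels):
    """Gini impurity: 0.0 for a pure group, 0.5 for an even two-class mix."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))

def best_split(xs, ys):
    """Try each candidate threshold and keep the one with the lowest weighted impurity."""
    best_threshold, best_impurity = None, float("inf")
    for threshold in sorted(set(xs))[:-1]:
        left = [y for x, y in zip(xs, ys) if x <= threshold]
        right = [y for x, y in zip(xs, ys) if x > threshold]
        weighted = (len(left) * gini(left) + len(right) * gini(right)) / len(ys)
        if weighted < best_impurity:
            best_threshold, best_impurity = threshold, weighted
    return best_threshold, best_impurity

# Hypothetical risk-assessment data: income in thousands, 1 = defaulted.
income = [20, 25, 30, 60, 70, 80]
defaulted = [1, 1, 1, 0, 0, 0]

threshold, impurity = best_split(income, defaulted)  # splits cleanly at income <= 30
```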

6. How do you evaluate the performance of a data mining model?

Evaluating the performance of a data mining model involves several metrics, depending on the type of task. For classification tasks, common metrics include accuracy, precision, recall, F1-score, and AUC-ROC. For regression tasks, metrics like Mean Absolute Error (MAE), Mean Squared Error (MSE), and R-squared are often used.
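The classification metrics named above can be computed directly from the confusion-matrix counts. This is a small illustrative sketch with made-up labels; the `classification_metrics` function is a hypothetical helper, not a library API.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 from paired labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }

# Hypothetical predictions: two true positives exist, the model finds only one.
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
y_pred = [1, 0, 0, 0, 0, 0, 0, 0, 0, 0]

metrics = classification_metrics(y_true, y_pred)
```

Here accuracy is 0.9 while recall is only 0.5, which illustrates why relying on a single metric can hide a model that misses half of the positive cases.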

Cross-validation is another important technique for model evaluation, as it helps ensure that the model generalizes well to unseen data. It involves dividing the dataset into multiple folds and training the model on different subsets while evaluating it on the remaining data.
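The fold-splitting idea can be sketched in a few lines. This simplified version assumes the data is already shuffled and only produces index lists; the `k_fold_indices` name is hypothetical.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) so each sample is held out exactly once."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, test
        start += size

folds = list(k_fold_indices(10, 3))
for train, test in folds:
    # In a real workflow: fit on `train`, score on `test`, then average the scores.
    print(f"train on {len(train)} samples, evaluate on {len(test)}")
```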

Candidates should understand the importance of using multiple metrics to get a comprehensive view of model performance and avoid overreliance on a single metric. Look for explanations that show a clear understanding of why these metrics matter and how they can be applied in practice.

7. Can you explain what clustering is and provide an example of its use?

Clustering is an unsupervised learning technique used to group similar data points into clusters based on their features. The goal is to maximize the similarity within clusters and minimize the similarity between different clusters. K-means and hierarchical clustering are popular algorithms used for this purpose.

A practical example of clustering can be found in customer segmentation, where businesses group customers based on purchasing behavior, demographics, or other attributes. This helps in tailoring marketing strategies and improving customer service.

Evaluate if the candidate can clearly explain the concept and discuss its practical applications. An ideal response would include how clustering helps in understanding data better and making informed business decisions.
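To illustrate the assign-then-update loop at the heart of k-means, here is a deliberately tiny one-dimensional sketch. The spending figures and the fixed starting centroids are assumptions chosen for clarity; real implementations work in many dimensions and use smarter initialization.

```python
def kmeans_1d(points, centroids, iterations=10):
    """Alternate between assigning points to the nearest centroid and re-averaging."""
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: abs(p - centroids[i]))
            clusters[nearest].append(p)
        # An empty cluster keeps its previous centroid.
        centroids = [
            sum(c) / len(c) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
    return centroids, clusters

# Hypothetical monthly spend per customer: a low-spend and a high-spend group.
spend = [12, 15, 14, 90, 95, 88]
centroids, clusters = kmeans_1d(spend, centroids=[0.0, 100.0])
# clusters -> [[12, 15, 14], [90, 95, 88]]
```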

8. What is the role of feature selection in data mining, and why is it important?

Feature selection involves choosing a subset of relevant features (variables) for use in model construction. This process helps in improving model performance by reducing overfitting, enhancing the model’s generalizability, and decreasing training time.

Important techniques for feature selection include filter methods (like correlation coefficients), wrapper methods (like recursive feature elimination), and embedded methods (like feature importance from tree-based models).

Candidates should emphasize the significance of feature selection in creating efficient and effective models. Look for detailed explanations of different techniques and scenarios where feature selection has notably improved their model outcomes.

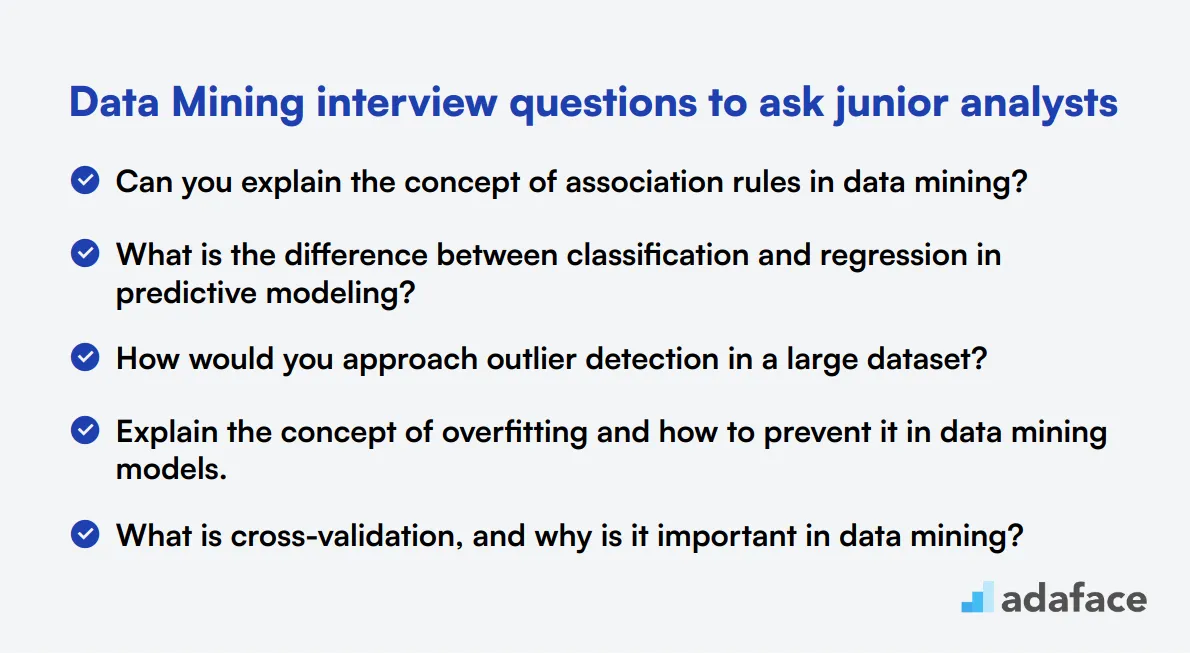

20 Data Mining interview questions to ask junior analysts

To assess junior data analysts effectively, use these 20 data mining interview questions. They help gauge fundamental understanding and practical skills in data mining techniques, ensuring you identify candidates with the right potential for your team.

- Can you explain the concept of association rules in data mining?

- What is the difference between classification and regression in predictive modeling?

- How would you approach outlier detection in a large dataset?

- Explain the concept of overfitting and how to prevent it in data mining models.

- What is cross-validation, and why is it important in data mining?

- Can you describe the k-means clustering algorithm and its applications?

- What are the main differences between data mining and machine learning?

- How would you handle imbalanced datasets in classification problems?

- Explain the concept of dimensionality reduction and its importance in data mining.

- What is the difference between correlation and causation in data analysis?

- How would you approach text mining for sentiment analysis?

- Can you explain the concept of ensemble methods in data mining?

- What is the purpose of normalization in data preprocessing?

- How would you deal with multicollinearity in a dataset?

- Explain the concept of support vector machines and their applications.

- What are some common data visualization techniques used in data mining?

- How would you approach time series analysis in data mining?

- Can you explain the concept of principal component analysis (PCA)?

- What is the difference between batch learning and online learning in data mining?

- How would you handle data privacy concerns in a data mining project?

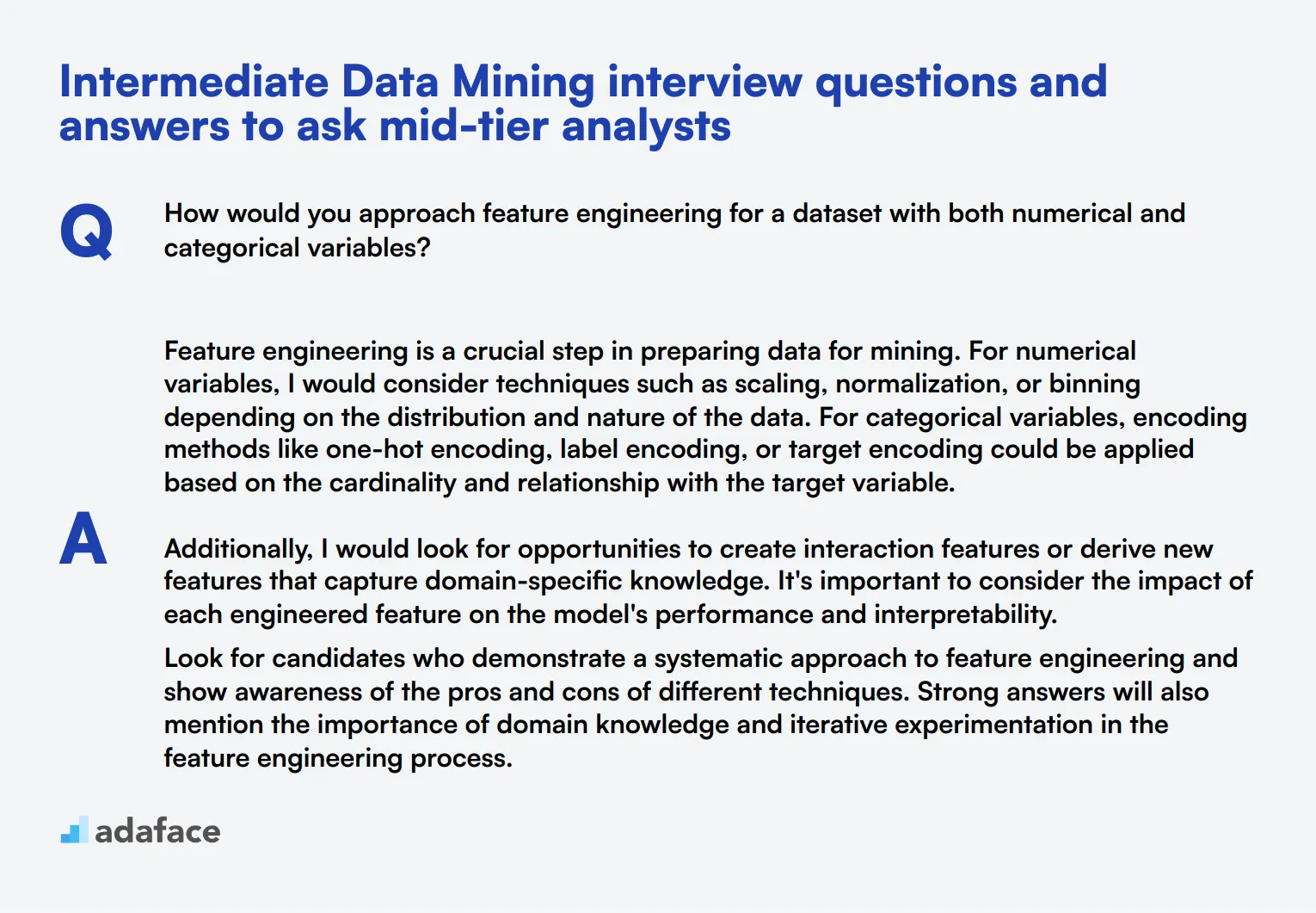

10 intermediate Data Mining interview questions and answers to ask mid-tier analysts

Ready to put your mid-tier data mining analysts to the test? These 10 intermediate questions will help you gauge their skills and understanding of key concepts. Whether you're conducting a face-to-face interview or a virtual assessment, these questions will give you insights into how candidates approach real-world data mining challenges.

1. How would you approach feature engineering for a dataset with both numerical and categorical variables?

Feature engineering is a crucial step in preparing data for mining. For numerical variables, I would consider techniques such as scaling, normalization, or binning depending on the distribution and nature of the data. For categorical variables, encoding methods like one-hot encoding, label encoding, or target encoding could be applied based on the cardinality and relationship with the target variable.

Additionally, I would look for opportunities to create interaction features or derive new features that capture domain-specific knowledge. It's important to consider the impact of each engineered feature on the model's performance and interpretability.

Look for candidates who demonstrate a systematic approach to feature engineering and show awareness of the pros and cons of different techniques. Strong answers will also mention the importance of domain knowledge and iterative experimentation in the feature engineering process.
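Two of the techniques mentioned in this answer, min-max scaling for numerical variables and one-hot encoding for categorical ones, can be sketched as follows. The `income` and `plan` columns are hypothetical.

```python
def min_max_scale(values):
    """Rescale numbers linearly into the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # a constant column carries no information
    return [(v - lo) / (hi - lo) for v in values]

def one_hot(values):
    """Turn each category into its own 0/1 indicator column."""
    categories = sorted(set(values))
    encoded = [[1 if v == c else 0 for c in categories] for v in values]
    return categories, encoded

# Hypothetical customer columns.
income = [30_000, 60_000, 90_000]
plan = ["basic", "premium", "basic"]

scaled_income = min_max_scale(income)   # [0.0, 0.5, 1.0]
categories, plan_encoded = one_hot(plan)
```

One-hot encoding adds one column per category, so a candidate should be able to explain why it becomes impractical for high-cardinality variables.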

2. Can you explain the concept of ensemble learning and give an example of when you might use it?

Ensemble learning is a technique that combines multiple models to create a more robust and accurate predictive model. The idea is that by aggregating the predictions of several models, we can reduce bias, variance, and overfitting, leading to better overall performance.

An example of when to use ensemble learning could be in a customer churn prediction project. We might combine decision trees, random forests, and gradient boosting machines to create a more reliable prediction of which customers are likely to churn. Each model might capture different aspects of the customer behavior, and the ensemble would provide a more comprehensive view.

Look for candidates who can explain the concept clearly and provide relevant examples. They should also be able to discuss common ensemble methods like bagging, boosting, and stacking, and understand when ensemble learning might be preferable to using a single model.

3. How would you handle a dataset with a large number of features but relatively few samples?

When dealing with a dataset that has many features but few samples, there's a risk of overfitting. To address this, I would consider several approaches:

- Feature selection: Use techniques like correlation analysis, mutual information, or LASSO regularization to identify the most relevant features.

- Dimensionality reduction: Apply methods such as PCA to reduce the number of features while retaining most of the variance. (t-SNE is also useful here, but mainly for visualizing the data rather than for producing model inputs.)

- Regularization: Implement regularization techniques in the model to prevent overfitting.

- Cross-validation: Use k-fold cross-validation to ensure the model generalizes well despite the limited sample size.

- Data augmentation: If applicable, generate synthetic samples to increase the dataset size.

A strong candidate should recognize the challenges of high dimensionality with limited samples and propose multiple strategies to address it. Look for answers that demonstrate an understanding of the bias-variance tradeoff and the importance of model validation in such scenarios.

4. Explain the difference between a parametric and non-parametric model in data mining.

Parametric models make strong assumptions about the data's underlying distribution and have a fixed number of parameters, regardless of the training set size. Examples include linear regression and logistic regression. These models are often simpler and faster to train but may not capture complex patterns in the data.

Non-parametric models, on the other hand, make fewer assumptions about the data distribution and can have a flexible number of parameters that grows with the training set size. Examples include decision trees, k-nearest neighbors, and kernel methods. These models can capture more complex relationships but may require more data and be prone to overfitting.

Look for candidates who can clearly articulate the differences and provide examples of each type. They should also be able to discuss the trade-offs between parametric and non-parametric models, such as interpretability, flexibility, and computational complexity.

5. How would you approach anomaly detection in a large time series dataset?

Anomaly detection in time series data requires a thoughtful approach. I would consider the following steps:

- Data preprocessing: Clean the data, handle missing values, and perform necessary transformations.

- Trend and seasonality analysis: Identify and remove any underlying trends or seasonal patterns.

- Statistical methods: Use techniques like moving averages, exponential smoothing, or ARIMA models to establish a baseline.

- Machine learning approaches: Consider unsupervised methods like isolation forests or autoencoders, or supervised methods if labeled data is available.

- Threshold setting: Determine appropriate thresholds for flagging anomalies, possibly using domain knowledge or statistical measures.

- Visualization: Create plots to help identify and validate detected anomalies.

A strong candidate should demonstrate knowledge of both statistical and machine learning approaches to anomaly detection. Look for answers that consider the specific challenges of time series data, such as temporal dependencies and concept drift.
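The statistical baseline and thresholding steps listed above can be combined into a simple rolling z-score detector. This is a minimal sketch under assumed settings (a window of 5 and a threshold of 3 standard deviations) with invented sensor readings; it does not handle trend or seasonality.

```python
from statistics import mean, stdev

def rolling_zscore_anomalies(series, window=5, threshold=3.0):
    """Flag indices whose value is far from the mean of the preceding window."""
    anomalies = []
    for i in range(window, len(series)):
        history = series[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and abs(series[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies

# Hypothetical sensor readings with one obvious spike at index 6.
readings = [10, 11, 10, 12, 11, 10, 50, 11, 10, 12]
anomalies = rolling_zscore_anomalies(readings)  # [6]
```

Note that once the spike enters the history window it inflates the standard deviation, which can mask later anomalies. That limitation is one reason candidates may propose more robust statistics or model-based methods.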

6. What is the curse of dimensionality, and how does it affect data mining?

The curse of dimensionality refers to the phenomenon where the performance of machine learning algorithms deteriorates as the number of features (dimensions) increases relative to the number of samples. This occurs because as the number of dimensions grows, the data becomes increasingly sparse in the feature space, making it harder to find meaningful patterns.

In data mining, this curse can lead to several issues:

- Increased computational complexity and memory requirements

- Overfitting, as models may fit noise in high-dimensional spaces

- Reduced effectiveness of distance-based algorithms

- Difficulty in visualizing and interpreting high-dimensional data

Look for candidates who can explain the concept clearly and discuss its implications for data mining tasks. Strong answers will also mention strategies to mitigate the curse of dimensionality, such as feature selection, dimensionality reduction techniques, and using appropriate algorithms for high-dimensional data.
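The effect on distance-based algorithms can be demonstrated with a small simulation. The sketch below measures the relative gap between the nearest and farthest random point from a query point; the point count and seed are arbitrary assumptions.

```python
import math
import random

def relative_contrast(dim, n_points=200, seed=0):
    """(farthest - nearest) / nearest distance from a random query point."""
    rng = random.Random(seed)
    query = [rng.random() for _ in range(dim)]
    dists = [
        math.dist(query, [rng.random() for _ in range(dim)])
        for _ in range(n_points)
    ]
    return (max(dists) - min(dists)) / min(dists)

low_dim = relative_contrast(2)
high_dim = relative_contrast(1000)
print(f"contrast in 2 dimensions:    {low_dim:.2f}")
print(f"contrast in 1000 dimensions: {high_dim:.2f}")
```

In high dimensions the contrast shrinks sharply, meaning the nearest and farthest neighbors are almost equally far away, which undermines methods such as k-nearest neighbors and k-means.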

7. How would you handle concept drift in a deployed machine learning model?

Concept drift occurs when the relationship between the input features and the target variable changes over time, potentially making a deployed model less accurate. To handle concept drift, I would consider the following approaches:

- Monitoring: Implement a system to continuously monitor model performance and data distribution.

- Retraining: Periodically retrain the model on recent data to capture new patterns.

- Online learning: Use algorithms that can adapt to changing data in real time.

- Ensemble methods: Maintain multiple models and weight them based on recent performance.

- Feature engineering: Develop time-aware features that can capture changing trends.

- Drift detection: Implement statistical tests to detect when significant drift has occurred.

A strong candidate should demonstrate awareness of the challenges posed by concept drift in real-world applications. Look for answers that propose a combination of proactive and reactive strategies, and show understanding of the trade-offs between model stability and adaptability.
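The monitoring step can be as simple as comparing a model's recent error rate against its error rate at deployment time. The following sketch assumes a hypothetical tolerance of 10 percentage points and made-up error logs (1 = wrong prediction); production systems typically use formal statistical tests instead.

```python
def drift_detected(reference_errors, recent_errors, tolerance=0.10):
    """Return True when the recent error rate exceeds the reference rate by more than tolerance."""
    reference_rate = sum(reference_errors) / len(reference_errors)
    recent_rate = sum(recent_errors) / len(recent_errors)
    return recent_rate - reference_rate > tolerance

# Hypothetical per-prediction error flags.
at_deployment = [0, 0, 1, 0, 0, 0, 0, 1, 0, 0]   # 20% errors
last_week     = [1, 0, 1, 1, 0, 1, 0, 1, 0, 1]   # 60% errors
quiet_week    = [0, 1, 0, 0, 0, 0, 1, 0, 0, 0]   # 20% errors

needs_retraining = drift_detected(at_deployment, last_week)   # True
still_healthy = not drift_detected(at_deployment, quiet_week)  # True
```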

8. Explain the difference between bagging and boosting in ensemble learning.

Bagging (Bootstrap Aggregating) and Boosting are both ensemble learning techniques, but they differ in their approach:

Bagging:

- Creates multiple subsets of the original dataset through random sampling with replacement

- Trains a separate model on each subset

- Combines predictions through voting (classification) or averaging (regression)

- Reduces variance and helps prevent overfitting

- Example: Random Forests

Boosting:

- Trains models sequentially, with each model trying to correct the errors of the previous ones

- Assigns higher weights to misclassified instances in subsequent iterations

- Combines models through weighted voting or averaging

- Primarily reduces bias (and can also reduce variance), but can be prone to overfitting if not carefully tuned

- Examples: AdaBoost, Gradient Boosting Machines

Look for candidates who can clearly explain the differences in how these techniques work and their impact on model performance. Strong answers will also discuss the trade-offs between bagging and boosting, such as interpretability, training time, and sensitivity to noisy data.
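The bagging steps described above (bootstrap sampling, one model per sample, majority vote) can be sketched with very simple threshold classifiers standing in for full decision trees. The data, the `train_stump` helper, and the choice of 25 models are all assumptions for illustration.

```python
import random
from collections import Counter

def bootstrap_sample(data, rng):
    """Draw len(data) rows at random with replacement."""
    return [rng.choice(data) for _ in data]

def train_stump(sample):
    """Fit a one-feature threshold halfway between the two class means."""
    pos = [x for x, y in sample if y == 1]
    neg = [x for x, y in sample if y == 0]
    if not pos or not neg:
        # Degenerate sample with a single class: always predict that class.
        only = 1 if pos else 0
        return lambda x: only
    threshold = (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2
    return lambda x: int(x > threshold)

def majority_vote(predictions):
    return Counter(predictions).most_common(1)[0][0]

# Hypothetical (feature, label) pairs: larger values belong to class 1.
data = [(1, 0), (2, 0), (3, 0), (4, 0), (6, 1), (7, 1), (8, 1), (9, 1)]

rng = random.Random(0)
models = [train_stump(bootstrap_sample(data, rng)) for _ in range(25)]

def bagged_predict(x):
    """Combine the individual models by majority vote."""
    return majority_vote([model(x) for model in models])
```

Boosting would differ in the training loop: models would be fitted one after another, with each new model focusing on the examples the previous ones got wrong.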

9. How would you approach a data mining project where the target variable is highly imbalanced?

When dealing with an imbalanced target variable, several strategies can be employed:

- Resampling techniques:

  - Oversampling the minority class (e.g., SMOTE)

  - Undersampling the majority class

  - Combination of both

- Algorithmic approaches:

  - Use algorithms that handle imbalanced data well (e.g., decision trees)

  - Adjust class weights or use cost-sensitive learning

- Ensemble methods:

  - Techniques like BalancedRandomForestClassifier or EasyEnsemble

- Evaluation metrics:

  - Use appropriate metrics like F1-score, ROC AUC, or precision-recall curve instead of accuracy

- Anomaly detection:

  - Frame the problem as anomaly detection if the imbalance is extreme

A strong candidate should recognize the challenges posed by imbalanced data and propose multiple strategies to address it. Look for answers that demonstrate an understanding of the limitations of standard approaches and the importance of choosing appropriate evaluation metrics for imbalanced datasets.
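As a baseline for the resampling strategies above, here is a sketch of plain random oversampling, which duplicates minority-class rows until the classes are balanced. This is a simpler stand-in for SMOTE (which synthesizes new points rather than copying existing ones); the transaction data and helper name are hypothetical.

```python
import random
from collections import Counter

def random_oversample(rows, label_of, rng):
    """Duplicate randomly chosen rows of smaller classes until all classes match the largest."""
    by_class = {}
    for row in rows:
        by_class.setdefault(label_of(row), []).append(row)
    target = max(len(group) for group in by_class.values())
    balanced = []
    for group in by_class.values():
        balanced.extend(group)
        balanced.extend(rng.choice(group) for _ in range(target - len(group)))
    return balanced

# Hypothetical transactions: 8 legitimate (label 0), 2 fraudulent (label 1).
rows = [(f"txn{i}", 0) for i in range(8)] + [("fraud0", 1), ("fraud1", 1)]

balanced = random_oversample(rows, label_of=lambda r: r[1], rng=random.Random(42))
counts = Counter(label for _, label in balanced)  # 8 rows of each class
```

A strong candidate will point out that resampling should be applied only to the training split, so that evaluation still reflects the real class distribution.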

10. Can you explain the concept of regularization in machine learning models and when you might use it?

Regularization is a technique used to prevent overfitting in machine learning models by adding a penalty term to the loss function. This penalty discourages the model from fitting the noise in the training data too closely, leading to better generalization on unseen data.

Common types of regularization include:

- L1 (Lasso): Adds the sum of the absolute values of the coefficients to the loss function, which can drive some coefficients to exactly zero and produce sparse models

- L2 (Ridge): Adds the sum of the squared coefficients, which shrinks coefficients toward zero without eliminating them

- Elastic Net: Combines L1 and L2 regularization

Regularization is particularly useful when:

- The model has high variance (overfitting)

- The dataset has a large number of features relative to the number of samples

- There's multicollinearity among features

- Feature selection is desired (especially with L1 regularization)

Look for candidates who can explain the concept clearly and discuss different types of regularization. Strong answers will also demonstrate understanding of how regularization affects model complexity and the bias-variance tradeoff.
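The different behavior of L1 and L2 penalties is easiest to see in a one-feature model with no intercept, where both have closed-form solutions. The sketch below assumes the loss is half the sum of squared errors, plus `(lam / 2) * w**2` for ridge or `lam * abs(w)` for lasso; the data is invented.

```python
import math

def ridge_weight(xs, ys, lam):
    """Minimizer of 0.5 * sum((y - w*x)**2) + 0.5 * lam * w**2."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)

def lasso_weight(xs, ys, lam):
    """Minimizer of 0.5 * sum((y - w*x)**2) + lam * abs(w) (soft thresholding)."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    shrunk = max(abs(sxy) - lam, 0.0)
    return math.copysign(shrunk, sxy) / sxx

# Hypothetical data where the unregularized slope is exactly 2.
xs = [1, 2, 3]
ys = [2, 4, 6]

unregularized = ridge_weight(xs, ys, lam=0)   # 2.0
ridge_shrunk = ridge_weight(xs, ys, lam=14)   # 1.0: smaller, but never exactly zero
lasso_zeroed = lasso_weight(xs, ys, lam=30)   # 0.0: the feature is dropped entirely
```

This is why L1 regularization doubles as a feature selection method, while L2 keeps every feature but with smaller weights.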

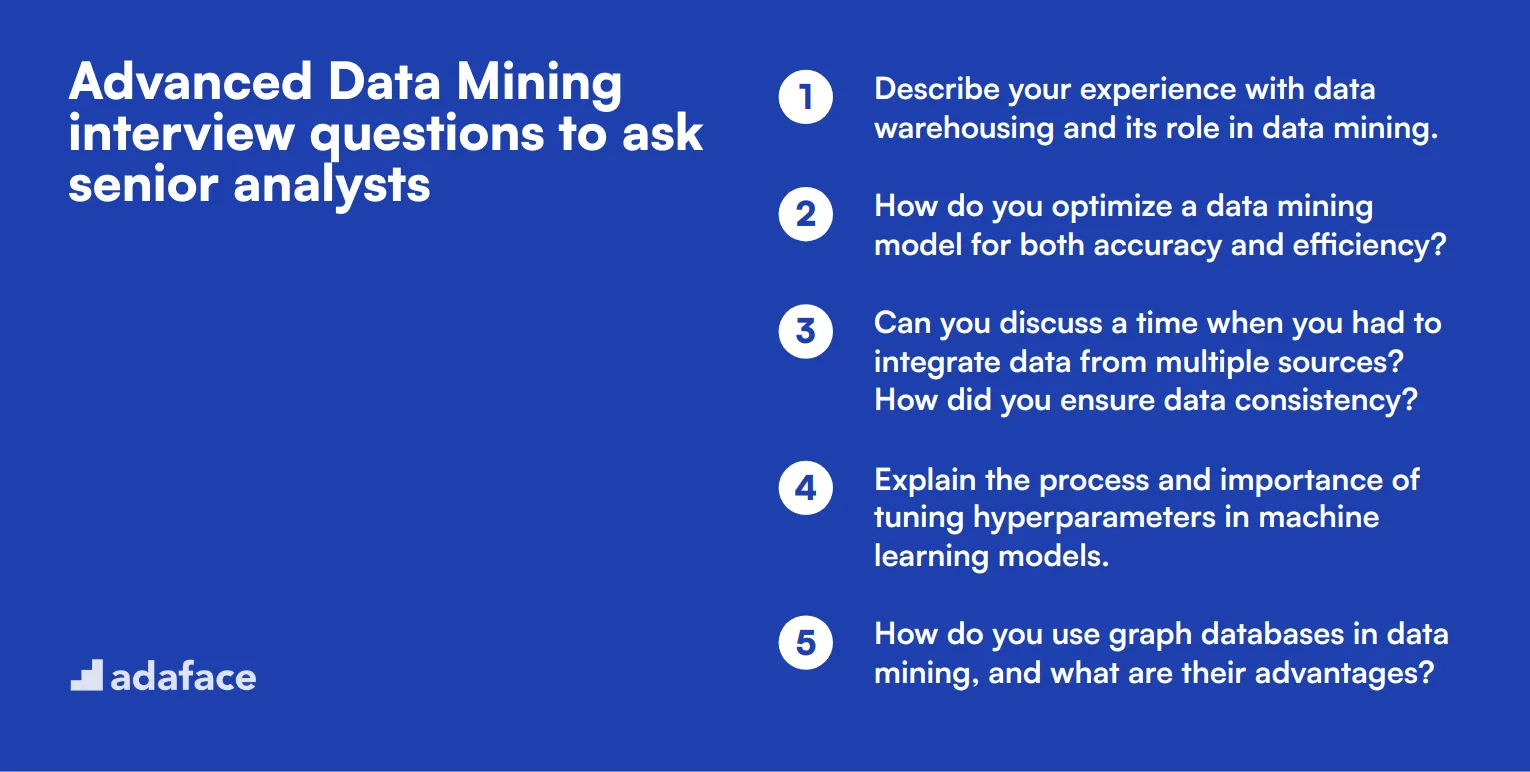

15 advanced Data Mining interview questions to ask senior analysts

To determine if your senior data analyst candidates possess the deep technical expertise required for advanced data mining tasks, consider using these 15 advanced interview questions. These questions are designed to challenge candidates and assess their ability to handle complex data mining scenarios, ensuring they are well-equipped for roles requiring high-level analytical skills. For more detailed role descriptions, you can refer to a data analyst job description.

- Describe your experience with data warehousing and its role in data mining.

- How do you optimize a data mining model for both accuracy and efficiency?

- Can you discuss a time when you had to integrate data from multiple sources? How did you ensure data consistency?

- Explain the process and importance of tuning hyperparameters in machine learning models.

- How do you use graph databases in data mining, and what are their advantages?

- Describe a challenging data mining project you have worked on and how you overcame the obstacles you faced.

- What advanced techniques do you use for pattern recognition in large datasets?

- How do you ensure the scalability of your data mining solutions when dealing with massive datasets?

- Can you explain the concept of transfer learning and its application in data mining?

- Describe your approach to developing predictive models for real-time data analysis.

- What methods do you use to validate the robustness of your data mining models?

- How do you manage and reduce biases in your data mining processes?

- Explain the use of deep learning techniques in data mining and provide a practical example.

- How do you approach the task of automating the data mining workflow?

- Discuss your experience with using big data technologies like Hadoop and Spark in data mining projects.

8 Data Mining interview questions and answers related to technical definitions

To assess candidates' understanding of data mining concepts and their ability to apply them in real-world scenarios, consider using these 8 technical definition questions. These questions will help you gauge the depth of a candidate's knowledge and their ability to explain complex concepts in simple terms, which is crucial for data scientists and analysts working with diverse teams.

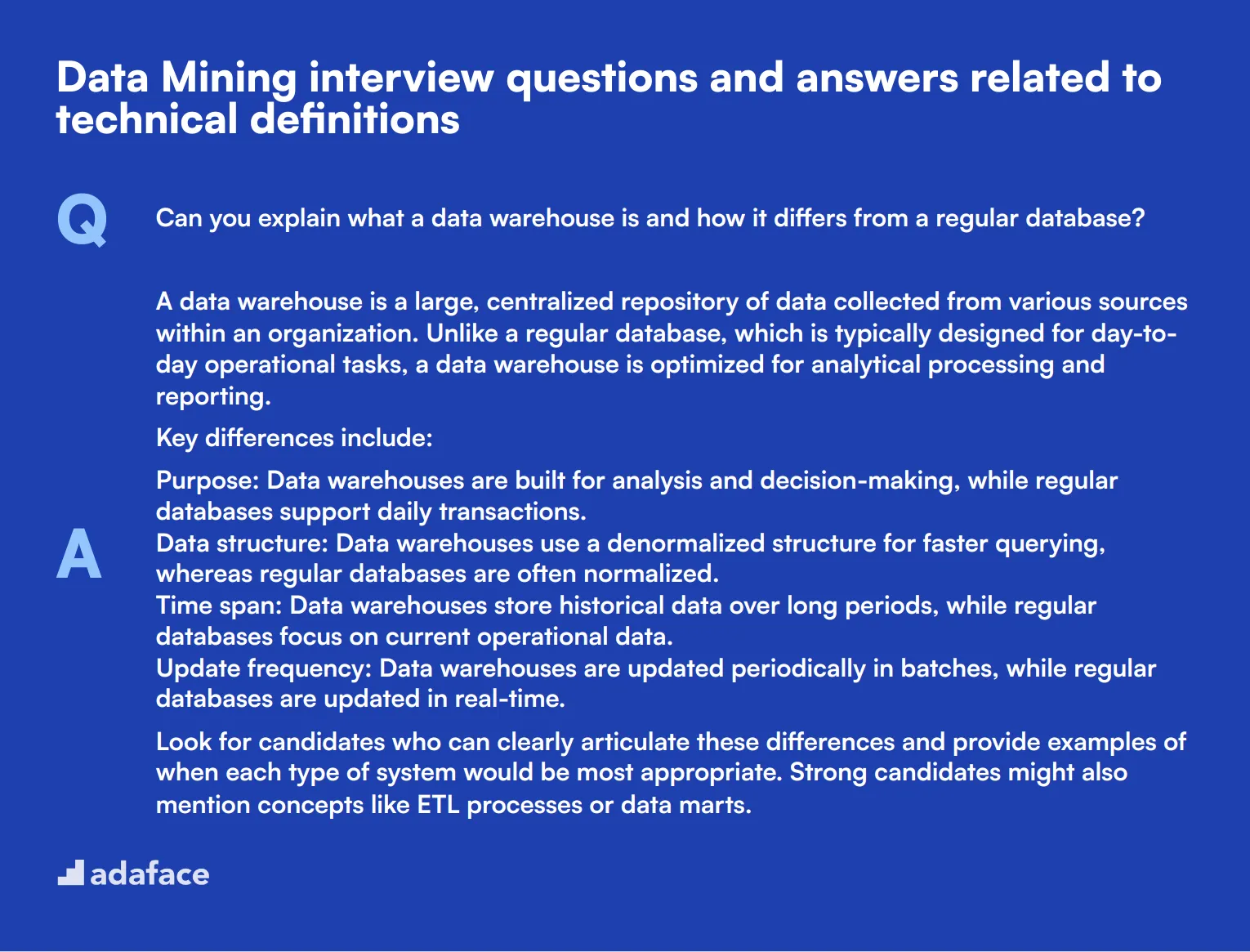

1. Can you explain what a data warehouse is and how it differs from a regular database?

A data warehouse is a large, centralized repository of data collected from various sources within an organization. Unlike a regular database, which is typically designed for day-to-day operational tasks, a data warehouse is optimized for analytical processing and reporting.

Key differences include:

- Purpose: Data warehouses are built for analysis and decision-making, while regular databases support daily transactions.

- Data structure: Data warehouses use a denormalized structure for faster querying, whereas regular databases are often normalized.

- Time span: Data warehouses store historical data over long periods, while regular databases focus on current operational data.

- Update frequency: Data warehouses are updated periodically in batches, while regular databases are updated in real time.

Look for candidates who can clearly articulate these differences and provide examples of when each type of system would be most appropriate. Strong candidates might also mention concepts like ETL processes or data marts.

2. What is the difference between a fact table and a dimension table in a data warehouse?

In a data warehouse, fact tables and dimension tables are two key components of the star schema, a common design pattern:

- Fact tables: Contain quantitative data about business processes (e.g., sales transactions, website visits).

- Dimension tables: Provide descriptive attributes that give context to the facts (e.g., product details, customer information, time periods).

Fact tables typically have many rows but few columns, mostly consisting of foreign keys to dimension tables and numerical measures. Dimension tables have fewer rows but more columns, providing rich descriptive information.

A strong candidate should be able to explain how these tables work together to facilitate efficient querying and analysis. They might also discuss the concept of granularity in fact tables or slowly changing dimensions.
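A tiny star schema makes the relationship concrete. The sketch below uses Python's built-in `sqlite3` module with an in-memory database; the table names, columns, and rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Dimension table: few rows, descriptive attributes.
    CREATE TABLE dim_product (
        product_id INTEGER PRIMARY KEY,
        name TEXT,
        category TEXT
    );
    -- Fact table: many rows, foreign keys plus numeric measures.
    CREATE TABLE fact_sales (
        sale_id INTEGER PRIMARY KEY,
        product_id INTEGER REFERENCES dim_product(product_id),
        quantity INTEGER,
        revenue REAL
    );
    INSERT INTO dim_product VALUES
        (1, 'Bread', 'Bakery'), (2, 'Butter', 'Dairy'), (3, 'Milk', 'Dairy');
    INSERT INTO fact_sales VALUES
        (1, 1, 2, 5.0), (2, 2, 1, 3.5), (3, 3, 4, 6.0), (4, 2, 2, 7.0);
""")

# Join facts to their dimension, then aggregate the measure by a descriptive attribute.
revenue_by_category = dict(conn.execute("""
    SELECT d.category, SUM(f.revenue)
    FROM fact_sales AS f
    JOIN dim_product AS d ON d.product_id = f.product_id
    GROUP BY d.category
""").fetchall())
# revenue_by_category -> {'Bakery': 5.0, 'Dairy': 16.5}
```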

3. Can you explain the concept of data cubes in OLAP systems?

A data cube is a multi-dimensional data structure used in Online Analytical Processing (OLAP) systems. It allows for fast analysis of data across multiple dimensions. Think of it as a way to pre-calculate and store aggregations of data along various dimensions.

For example, a sales data cube might have dimensions like time, product, and location. Users can then quickly retrieve aggregated data (e.g., total sales) for any combination of these dimensions, such as 'total sales of Product A in the Northeast region for Q2'.

Look for candidates who can explain how data cubes enable quick and flexible analysis. They should understand concepts like dimensions, measures, and hierarchies. Strong candidates might also discuss the trade-offs between storage space and query performance in OLAP systems.
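The pre-aggregation idea can be sketched by computing totals for every combination of dimensions up front, so that later lookups are simple dictionary reads. The sales rows and the `total` helper are hypothetical, and real OLAP engines use far more compact storage.

```python
from collections import defaultdict
from itertools import combinations

DIMENSIONS = ("quarter", "product", "region")

# Hypothetical sales records.
sales = [
    {"quarter": "Q1", "product": "A", "region": "Northeast", "amount": 100},
    {"quarter": "Q2", "product": "A", "region": "Northeast", "amount": 150},
    {"quarter": "Q2", "product": "A", "region": "West", "amount": 80},
    {"quarter": "Q2", "product": "B", "region": "Northeast", "amount": 60},
]

def build_cube(rows):
    """Pre-compute the total amount for every subset of dimensions."""
    cube = defaultdict(int)
    for size in range(len(DIMENSIONS) + 1):
        for dims in combinations(DIMENSIONS, size):
            for row in rows:
                key = tuple((d, row[d]) for d in dims)
                cube[key] += row["amount"]
    return cube

cube = build_cube(sales)

def total(**filters):
    """Look up a pre-computed aggregate, e.g. total(product='A', quarter='Q2')."""
    key = tuple((d, filters[d]) for d in DIMENSIONS if d in filters)
    return cube.get(key, 0)

q2_a_northeast = total(quarter="Q2", product="A", region="Northeast")  # 150
all_product_a = total(product="A")                                     # 330
grand_total = total()                                                  # 390
```

The number of stored aggregates grows quickly with the number of dimensions, which is the storage-versus-query-speed trade-off mentioned above.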

4. What is the difference between ETL and ELT in data processing?

ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) are two approaches to processing data for analytics:

- ETL: Data is extracted from source systems, transformed to fit the target schema, and then loaded into the data warehouse.

- ELT: Data is extracted from sources, loaded into the target system in its raw form, and then transformed as needed.

The key difference is the order and location of the transformation step. ETL transforms data before loading, often using a separate transformation engine. ELT loads raw data first and leverages the power of modern data warehouses to perform transformations.

Look for candidates who can explain the pros and cons of each approach. They should understand that ELT is becoming more popular with cloud-based data warehouses due to their ability to handle large-scale transformations. A strong candidate might discuss scenarios where one approach might be preferred over the other.
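The difference in ordering can be shown side by side. In this sketch, two in-memory SQLite databases stand in for the target warehouse; the raw rows and table names are made up. The ETL path cleans values in application code before loading, while the ELT path loads the raw strings and transforms them with SQL inside the database.

```python
import sqlite3

# Hypothetical raw extract: amounts arrive as untidy strings.
raw_rows = [("2024-01-05", " 19.99 "), ("2024-01-06", "5.00")]

# ETL: transform first (in Python), then load typed values.
etl = sqlite3.connect(":memory:")
etl.execute("CREATE TABLE sales (day TEXT, amount REAL)")
etl.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [(day, float(amount.strip())) for day, amount in raw_rows],
)

# ELT: load the raw data as-is, then transform inside the target system.
elt = sqlite3.connect(":memory:")
elt.execute("CREATE TABLE raw_sales (day TEXT, amount TEXT)")
elt.executemany("INSERT INTO raw_sales VALUES (?, ?)", raw_rows)
elt.execute("""
    CREATE VIEW sales AS
    SELECT day, CAST(TRIM(amount) AS REAL) AS amount FROM raw_sales
""")

etl_total = etl.execute("SELECT SUM(amount) FROM sales").fetchone()[0]
elt_total = elt.execute("SELECT SUM(amount) FROM sales").fetchone()[0]
```

Both paths arrive at the same total, but only the ELT database still holds the original raw values, which allows the transformation to be revised later without re-extracting.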

5. Can you explain what a data lake is and how it differs from a data warehouse?

A data lake is a large repository that stores raw data in its native format, whether that data is structured, semi-structured, or unstructured. Unlike a data warehouse, which stores structured data optimized for specific analytical tasks, a data lake can hold a vast amount of diverse data types without a predefined schema.

Key differences include:

- Data structure: Data lakes store raw data, while data warehouses store processed, structured data.

- Schema: Data lakes use a schema-on-read approach, whereas data warehouses use schema-on-write.

- Users: Data lakes are often used by data scientists for exploratory analysis, while data warehouses are typically used by business analysts for regular reporting.

- Flexibility: Data lakes offer more flexibility for storing and analyzing diverse data types, but require more skill to query effectively.

Look for candidates who understand the strengths and weaknesses of each approach. They should be able to discuss scenarios where a data lake might be preferred over a data warehouse, such as when dealing with large volumes of unstructured data or when the end use of the data is not yet defined.

6. What is the concept of data lineage and why is it important in data mining?

Data lineage refers to the life cycle of data, including its origins, movements, transformations, and where it is used. It provides a documented trail of data's journey through various systems and processes.

Data lineage is crucial in data mining for several reasons:

- Data quality: It helps in tracking and resolving data quality issues.

- Compliance: It's essential for regulatory compliance, especially in industries like finance and healthcare.

- Impact analysis: It allows data scientists to understand how changes in one part of the system might affect downstream processes.

- Troubleshooting: When issues arise, data lineage helps in quickly identifying the source of the problem.

- Trust: It increases confidence in data-driven decisions by providing transparency in data processing.

A strong candidate should be able to explain how data lineage tools work and provide examples of how they've used data lineage in their data mining projects. Look for understanding of both the technical and business implications of maintaining good data lineage.

7. Can you explain the concept of data mart and how it relates to a data warehouse?

A data mart is a subset of a data warehouse that focuses on a specific business line, department, or subject area. It's designed to serve the needs of a particular group of users, providing them with relevant data in a format that's easy to access and understand.

Key characteristics of data marts:

- Scope: Narrower focus compared to a data warehouse

- Size: Typically smaller and more manageable

- Users: Often designed for a specific group or department

- Implementation: Can be faster and less complex than a full data warehouse

- Data source: Can be sourced from a central data warehouse (dependent) or directly from operational systems (independent)

Look for candidates who can explain the relationship between data marts and data warehouses. They should understand when and why an organization might choose to implement data marts. Strong candidates might discuss the trade-offs between centralized (enterprise data warehouse) and decentralized (multiple data marts) approaches to data management.

8. What is data federation and how does it differ from data integration?

Data federation is an approach that allows an organization to view and access data from multiple disparate sources through a single, virtual database. Instead of physically moving or copying data into a central repository, data federation provides a unified view of data that remains in its original locations.

Key differences from data integration:

- Data movement: Federation doesn't move data, while integration often involves ETL processes.

- Real-time access: Federation typically provides real-time access to source data.

- Complexity: Federation can be less complex to set up initially but may have performance limitations for large-scale analytics.

- Use cases: Federation is often used for real-time operational decisions, while integration is typically used for historical analysis and reporting.

Look for candidates who can explain scenarios where data federation might be preferred over traditional data integration. They should understand the benefits (like reduced data duplication and real-time access) and challenges (like potential performance issues with complex queries) of federation. Strong candidates might discuss technologies used for data federation or hybrid approaches that combine federation and integration.
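As a loose analogy for federation, SQLite's `ATTACH DATABASE` statement lets one connection query two separate databases in a single statement without copying data between them. In this sketch the two in-memory databases stand in for independent source systems; the schemas and rows are hypothetical, and real federation tools work across different database engines and networks.

```python
import sqlite3

# The main database stands in for a CRM system.
conn = sqlite3.connect(":memory:")
# A second, separate database stands in for a billing system.
conn.execute("ATTACH DATABASE ':memory:' AS billing")

conn.execute("CREATE TABLE main.customers (id INTEGER, name TEXT)")
conn.execute("CREATE TABLE billing.invoices (customer_id INTEGER, amount REAL)")
conn.executemany("INSERT INTO main.customers VALUES (?, ?)", [(1, "Ada"), (2, "Grace")])
conn.executemany(
    "INSERT INTO billing.invoices VALUES (?, ?)",
    [(1, 100.0), (1, 50.0), (2, 20.0)],
)

# One query spans both sources; the data stays where it lives.
totals = dict(conn.execute("""
    SELECT c.name, SUM(i.amount)
    FROM main.customers AS c
    JOIN billing.invoices AS i ON i.customer_id = c.id
    GROUP BY c.name
""").fetchall())
# totals -> {'Ada': 150.0, 'Grace': 20.0}
```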

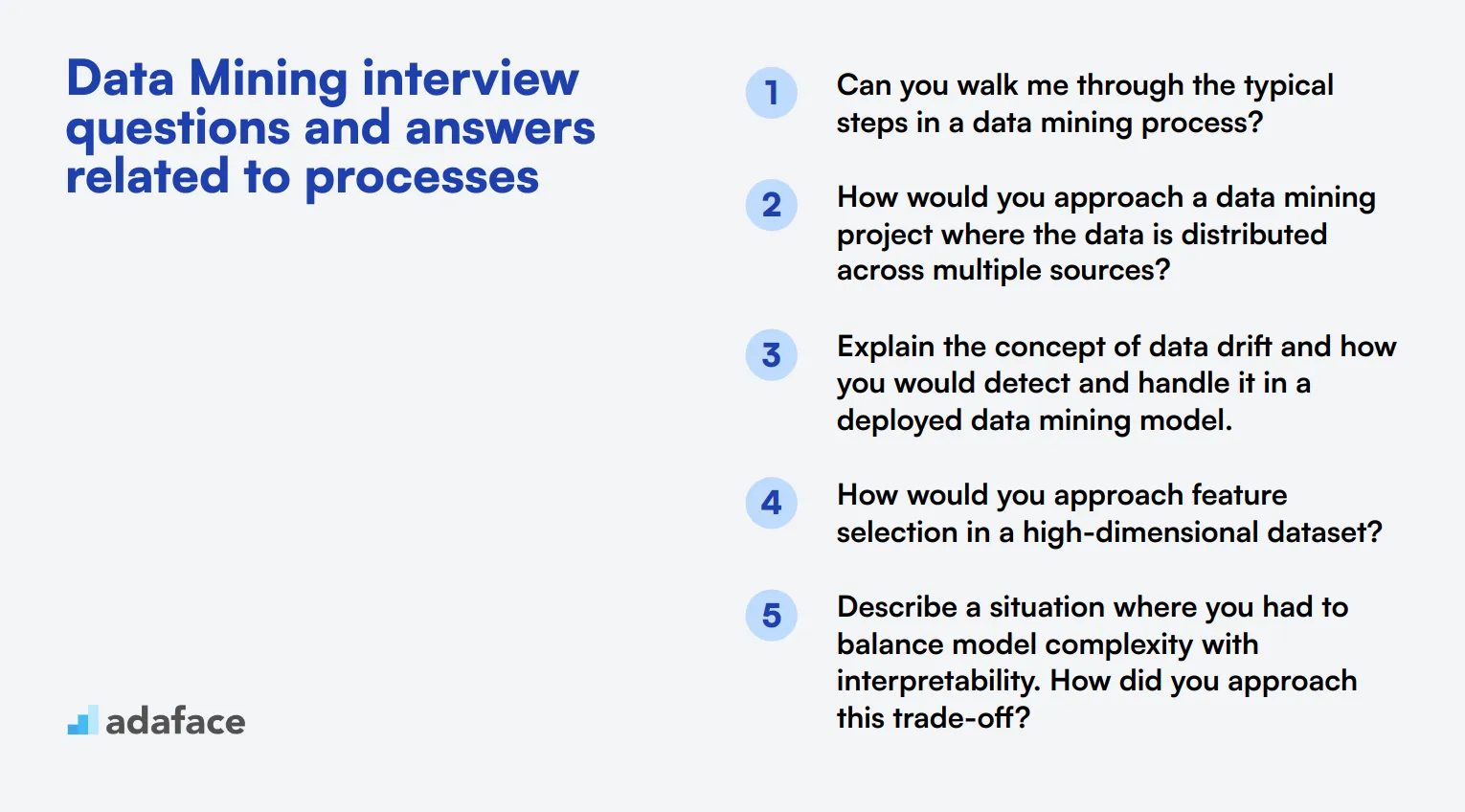

7 Data Mining interview questions and answers related to processes

To assess candidates' understanding of data mining processes and their practical application, consider using these 7 insightful questions. These queries will help you gauge a candidate's ability to navigate the complex world of data analysis and extract valuable insights. Remember, the best responses will demonstrate both theoretical knowledge and real-world problem-solving skills.

1. Can you walk me through the typical steps in a data mining process?

A strong candidate should be able to outline the main steps of the data mining process, which typically include:

- Problem definition: Clearly stating the business problem or objective

- Data collection: Gathering relevant data from various sources

- Data cleaning and preprocessing: Handling missing values, outliers, and inconsistencies

- Exploratory data analysis: Understanding data patterns and relationships

- Feature selection and engineering: Choosing relevant variables and creating new ones

- Model selection and training: Choosing appropriate algorithms and training models

- Model evaluation: Assessing performance using appropriate metrics

- Model deployment and monitoring: Implementing the model in a production environment

Look for candidates who can explain each step concisely and provide examples of how they've applied these steps in real-world projects. A great answer might also touch on the iterative nature of the process and the importance of data quality throughout.

2. How would you approach a data mining project where the data is distributed across multiple sources?

An ideal response should cover the following key points:

- Data integration strategy: Outline a plan to combine data from various sources, considering factors like data formats, schemas, and potential inconsistencies.

- ETL process: Describe the Extract, Transform, Load process to consolidate data into a central repository or data warehouse.

- Data quality assessment: Explain methods to ensure data consistency and accuracy across sources.

- Metadata management: Discuss the importance of maintaining clear documentation of data lineage and definitions.

- Technology considerations: Mention relevant tools or platforms for handling distributed data sources, such as Hadoop or cloud-based solutions.

Look for candidates who emphasize the importance of understanding the business context and requirements before diving into technical solutions. Strong answers might also touch on potential challenges like data privacy concerns or the need for real-time data integration.
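
To make the ETL point concrete, here is a minimal sketch that extracts records from two sources with different schemas, maps them onto one schema, and loads them into a central table. The sample data, the column names, and the "first source wins" conflict rule are assumptions made for illustration, not a recommended policy.

```python
import csv
import io
import json
import sqlite3

# Hypothetical extracts: a CSV export and a JSON feed with different schemas.
CSV_EXPORT = "customer_id,full_name,country\n1,Ada Lovelace,uk\n2,Alan Turing,UK\n"
JSON_FEED = (
    '[{"id": 2, "name": "Alan Turing", "country": "UK"},'
    ' {"id": 3, "name": "Grace Hopper", "country": "us"}]'
)


def extract():
    """Read raw records from both sources."""
    csv_rows = list(csv.DictReader(io.StringIO(CSV_EXPORT)))
    json_rows = json.loads(JSON_FEED)
    return csv_rows, json_rows


def transform(csv_rows, json_rows):
    """Map both schemas onto (id, name, country) and de-duplicate by id."""
    unified = {}
    for row in csv_rows:
        unified[int(row["customer_id"])] = (
            row["full_name"].strip(), row["country"].upper())
    for row in json_rows:
        # Assumption: on conflict, the first source seen wins.
        unified.setdefault(
            int(row["id"]), (row["name"].strip(), row["country"].upper()))
    return [(cid, name, country)
            for cid, (name, country) in sorted(unified.items())]


def load(records):
    """Write the unified records into a central SQLite table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, country TEXT)")
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", records)
    conn.commit()
    return conn


warehouse = load(transform(*extract()))
rows = warehouse.execute(
    "SELECT id, name, country FROM customers ORDER BY id").fetchall()
print(rows)
```

A candidate walking through something like this should be able to say where data quality checks and lineage documentation would attach to each stage.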

3. Explain the concept of data drift and how you would detect and handle it in a deployed data mining model.

A comprehensive answer should include the following elements:

- Definition: Data drift occurs when the statistical properties of the data a deployed model receives change over time relative to the data it was trained on, potentially degrading model performance.

- Types of drift: Concept drift (changes in the relationship between features and target) and feature drift (changes in the distribution of input features).

- Detection methods: Monitoring model performance metrics, statistical tests for distribution changes, or using specialized drift detection algorithms.

- Handling strategies: Regular model retraining, ensemble methods with different time windows, or adaptive learning techniques.

Strong candidates might also discuss the importance of setting up monitoring systems to alert stakeholders of significant drift, and the need to balance model stability with adaptability. Look for answers that demonstrate an understanding of the practical challenges in maintaining model performance in dynamic environments.
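
One of the "statistical tests for distribution changes" mentioned above is the two-sample Kolmogorov-Smirnov test. The sketch below computes only its test statistic in plain Python and compares it with an alert threshold. The threshold value, the feature, and the sample batches are hypothetical. In practice a library routine such as `scipy.stats.ks_2samp` would also provide a p-value.

```python
from bisect import bisect_right


def ks_statistic(reference, current):
    """Two-sample Kolmogorov-Smirnov statistic: the largest gap between
    the empirical CDFs of the two samples (0 = identical, 1 = disjoint)."""
    ref_sorted = sorted(reference)
    cur_sorted = sorted(current)
    largest_gap = 0.0
    for value in set(ref_sorted) | set(cur_sorted):
        ref_cdf = bisect_right(ref_sorted, value) / len(ref_sorted)
        cur_cdf = bisect_right(cur_sorted, value) / len(cur_sorted)
        largest_gap = max(largest_gap, abs(ref_cdf - cur_cdf))
    return largest_gap


# Hypothetical alert threshold; in practice it would be tuned per feature.
DRIFT_THRESHOLD = 0.3

training_ages = [23, 25, 31, 35, 38, 41, 44, 52, 57, 60]
stable_batch = [24, 26, 30, 36, 39, 40, 45, 51, 58, 61]
shifted_batch = [48, 51, 55, 58, 60, 62, 65, 67, 70, 73]

for name, batch in [("stable", stable_batch), ("shifted", shifted_batch)]:
    stat = ks_statistic(training_ages, batch)
    print(name, round(stat, 2), "drift" if stat > DRIFT_THRESHOLD else "ok")
```

This check covers feature drift only. Concept drift changes the relationship between features and target, so detecting it generally requires labeled outcomes and performance monitoring.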

4. How would you approach feature selection in a high-dimensional dataset?

A strong answer should cover various feature selection techniques and their applications:

- Filter methods: Using statistical measures like correlation, chi-squared test, or mutual information to select features.

- Wrapper methods: Evaluating subsets of features using the predictive model as a black box (e.g., recursive feature elimination).

- Embedded methods: Techniques that perform feature selection as part of the model training process (e.g., LASSO regression).

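- Dimensionality reduction: Methods like Principal Component Analysis (PCA) that create new, lower-dimensional feature spaces. Strictly speaking this is feature extraction rather than selection, and t-SNE is used mainly for visualization rather than for building model inputs.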

Look for candidates who can explain the trade-offs between different approaches, such as computational complexity versus model performance. A great answer might also touch on the importance of domain knowledge in feature selection and the need to validate the selected features' impact on model performance. Candidates who mention the skills required for data scientists in this context demonstrate a holistic understanding of the field.
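
A filter method is the simplest of these to sketch. The example below ranks features by the absolute Pearson correlation with the target and keeps the top `k`. The feature names and values are made up for illustration, and correlation only captures linear relationships, so this is one possible filter rather than a general recipe.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant column carries no linear signal
    return cov / sqrt(var_x * var_y)


def select_top_k(features, target, k):
    """Filter method: keep the k features with the largest |correlation|."""
    scores = {name: abs(pearson(col, target)) for name, col in features.items()}
    ranked = sorted(scores, key=scores.get, reverse=True)
    return ranked[:k], scores


# Hypothetical toy data: column names and values are made up.
features = {
    "ad_spend": [1.0, 2.0, 3.0, 4.0, 5.0, 6.0],
    "page_views": [10.0, 9.0, 14.0, 13.0, 18.0, 17.0],
    "store_id": [3.0, 1.0, 2.0, 3.0, 1.0, 2.0],
    "constant_flag": [1.0, 1.0, 1.0, 1.0, 1.0, 1.0],
}
revenue = [2.1, 3.9, 6.2, 8.0, 9.9, 12.1]

selected, scores = select_top_k(features, revenue, k=2)
print(selected)
```

Because the filter never trains a model, it is cheap on high-dimensional data, but it scores each feature in isolation and can miss interactions that wrapper or embedded methods would pick up.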

5. Describe a situation where you had to balance model complexity with interpretability. How did you approach this trade-off?

An ideal response should demonstrate the candidate's ability to navigate the common tension between model performance and explainability:

- Context: Describe a specific project or scenario where this trade-off was relevant.

- Stakeholder analysis: Explain how different stakeholders' needs (e.g., data scientists, business users, regulators) were considered.

- Model selection: Discuss the choice between more complex, high-performing models (e.g., deep learning, ensemble methods) and simpler, more interpretable models (e.g., linear regression, decision trees).

- Interpretability techniques: Mention methods used to explain complex models, such as SHAP values, LIME, or partial dependence plots.

- Evaluation criteria: Describe how the balance was assessed, potentially using a combination of performance metrics and interpretability measures.

Look for answers that show thoughtful consideration of the business impact and ethical implications of model choices. Strong candidates might also discuss strategies for gradually introducing more complex models while maintaining trust and understanding among stakeholders.

6. How would you handle a data mining project where the target variable is highly imbalanced?

A comprehensive answer should cover various strategies for dealing with imbalanced datasets:

- Data-level techniques:

  - Oversampling the minority class (e.g., SMOTE)

  - Undersampling the majority class

  - Combining oversampling and undersampling

- Algorithm-level techniques:

  - Using algorithms that handle imbalance well (e.g., decision trees)

  - Adjusting class weights or decision thresholds

- Ensemble methods:

  - Bagging or boosting with focus on minority class

  - Combining multiple resampling techniques

- Evaluation metrics:

  - Using appropriate metrics like F1-score, ROC AUC, or precision-recall curve instead of accuracy

Look for candidates who emphasize the importance of understanding the business context and the cost of different types of errors. Strong answers might also discuss the need to validate the chosen approach using cross-validation and to consider the potential introduction of bias or overfitting when applying resampling techniques.
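
The short sketch below shows why accuracy misleads on imbalanced data and what plain random oversampling does. The 95/5 class split is hypothetical, and the helper assumes binary labels. SMOTE, mentioned above, synthesizes new minority points instead of duplicating existing ones and is not shown here.

```python
import random


def precision_recall_f1(y_true, y_pred, positive=1):
    """Precision, recall, and F1 for the chosen positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1


def random_oversample(rows, labels, minority=1, seed=0):
    """Duplicate randomly chosen minority rows until both classes match in size.

    Assumes binary labels and at least one minority row.
    """
    rng = random.Random(seed)
    minority_rows = [r for r, y in zip(rows, labels) if y == minority]
    majority_count = len(labels) - len(minority_rows)
    extra = [rng.choice(minority_rows)
             for _ in range(majority_count - len(minority_rows))]
    return rows + extra, labels + [minority] * len(extra)


# Hypothetical fraud labels: 95 legitimate (0) and 5 fraudulent (1) cases.
y_true = [0] * 95 + [1] * 5
always_negative = [0] * 100  # a "model" that never predicts fraud

accuracy = sum(t == p for t, p in zip(y_true, always_negative)) / len(y_true)
print(accuracy, precision_recall_f1(y_true, always_negative))

rows = [[i] for i in range(100)]
balanced_rows, balanced_labels = random_oversample(rows, y_true)
print(balanced_labels.count(0), balanced_labels.count(1))
```

The never-predict-fraud model scores high on accuracy while catching no fraud at all, which is the gap precision, recall, and F1 expose. Resampling should be applied to the training split only, so duplicated rows never leak into the evaluation data.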

7. Explain the concept of model interpretability and why it's important in data mining projects.

A strong answer should cover the following key points:

- Definition: Model interpretability refers to the degree to which humans can understand the reasons behind a model's predictions or decisions.

- Importance:

  - Building trust in model outcomes

  - Identifying potential biases or errors in the model

  - Ensuring regulatory compliance (e.g., GDPR's 'right to explanation')

  - Facilitating model debugging and improvement

  - Enabling domain experts to validate and provide insights

- Techniques for improving interpretability:

  - Using inherently interpretable models (e.g., linear regression, decision trees)

  - Applying post-hoc explanation methods (e.g., SHAP values, LIME)

  - Feature importance analysis

  - Partial dependence plots

Look for candidates who can discuss the trade-offs between model performance and interpretability, and how to balance these factors based on the specific needs of a project. Strong answers might also touch on the challenges of interpreting complex models like deep neural networks and the ongoing research in this area. Candidates who mention the importance of interpretability in the context of ethical AI and responsible data science demonstrate a broader understanding of the field's implications.
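
Feature importance analysis can be illustrated with permutation importance, a model-agnostic technique: shuffle one feature at a time and measure how much accuracy drops. In the sketch below, the data is synthetic and `threshold_model` is a hypothetical stand-in for a trained model, so the numbers say nothing about any real system.

```python
import random


def accuracy(model, rows, labels):
    """Share of rows where the model's prediction matches the label."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(labels)


def permutation_importance(model, rows, labels, n_features, seed=0):
    """Accuracy drop when each feature column is shuffled in turn.

    A large drop suggests the model relies on that feature; a drop near
    zero suggests it does not.
    """
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        shuffled_col = [r[j] for r in rows]
        rng.shuffle(shuffled_col)
        permuted = [r[:j] + [v] + r[j + 1:]
                    for r, v in zip(rows, shuffled_col)]
        importances.append(baseline - accuracy(model, permuted, labels))
    return importances


# Synthetic data: feature 0 determines the label, feature 1 is pure noise.
data_rng = random.Random(42)
rows = [[data_rng.random(), data_rng.random()] for _ in range(500)]
labels = [int(r[0] > 0.5) for r in rows]


def threshold_model(row):
    """Stand-in for a trained model; it only looks at feature 0."""
    return int(row[0] > 0.5)


importances = permutation_importance(threshold_model, rows, labels, n_features=2)
print(importances)
```

Shuffling the feature the model depends on should cut accuracy sharply, while shuffling the ignored feature changes nothing. The same loop works with any model that exposes a prediction function, which is why the technique is popular for black-box models.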

Which Data Mining skills should you evaluate during the interview phase?

While a single interview may not reveal everything about a candidate's capabilities, focusing on specific core skills during the data mining interview can provide crucial insights into their potential effectiveness in the role. These skills are fundamental to their day-to-day responsibilities and success within your organization.

Statistical Analysis

Statistical analysis is the backbone of data mining, enabling analysts to interpret data and make predictions. Understanding statistical methods helps in extracting meaningful patterns and trends from large datasets, which is critical for data-driven decision making.

Consider employing a tailored MCQ test to evaluate a candidate's proficiency in statistical analysis. You can use the Adaface Statistical Data Analysis Test to filter candidates effectively.

To further assess this skill, you can ask candidates a specific question that challenges their understanding of statistics in the context of data mining.

Can you explain how you would use a p-value to determine the significance of your data mining results?

Look for answers that demonstrate a clear understanding of hypothesis testing and the ability to apply statistical significance in real-world data mining scenarios.
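
One way a candidate might ground this answer is a permutation test, which estimates a p-value directly from the data. The sketch below tests whether two groups differ in their mean. The group names and values are hypothetical, and the 0.05 threshold is only the conventional default.

```python
import random


def permutation_p_value(group_a, group_b, n_permutations=2000, seed=0):
    """Two-sided permutation test for a difference in means.

    Estimates the probability of seeing a gap at least as large as the
    observed one if group membership were assigned at random.
    """
    rng = random.Random(seed)
    observed = abs(sum(group_a) / len(group_a) - sum(group_b) / len(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    n_b = len(pooled) - n_a
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = abs(sum(pooled[:n_a]) / n_a - sum(pooled[n_a:]) / n_b)
        if diff >= observed:
            at_least_as_extreme += 1
    # Add-one correction keeps the estimate strictly above zero.
    return (at_least_as_extreme + 1) / (n_permutations + 1)


# Hypothetical basket sizes for customers outside and inside a mined segment.
baseline_segment = [10.1, 9.8, 10.3, 10.0, 9.9, 10.2, 10.1, 9.7]
mined_segment = [11.0, 11.2, 10.9, 11.1, 11.3, 10.8, 11.0, 11.2]

ALPHA = 0.05  # conventional significance level, fixed before looking at the data
p_value = permutation_p_value(baseline_segment, mined_segment)
print(p_value, "significant" if p_value < ALPHA else "not significant")
```

Data mining often tests many candidate patterns at once, so a strong answer would also mention correcting for multiple comparisons before treating any single small p-value as a real finding.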

Machine Learning

Machine learning techniques are integral to modern data mining, automating the extraction of insights and predictions from data. Proficiency in machine learning algorithms can significantly enhance the accuracy and efficiency of data analysis processes.

An assessment test that includes relevant MCQs can effectively gauge candidates' machine learning knowledge. The Adaface Machine Learning Test is designed to identify candidates with the necessary expertise.

To delve deeper into their practical skills, consider asking them the following question during the interview:

Describe a situation where you chose a specific machine learning model over others for a data mining project. What factors influenced your decision?

The answer should show their ability to not only apply appropriate models but also justify their choices based on the project's specific requirements and data characteristics.

Data Visualization

Data visualization is a key skill for data mining, as it allows analysts to present complex data insights in an understandable and actionable manner. Mastery of visualization tools and techniques is necessary to effectively communicate findings to stakeholders.

To assess this skill, consider using a structured MCQ test. The Adaface Data Visualization Test can help in evaluating the candidates' ability to interpret and visualize data.

During the interview, you can ask the following question to evaluate their data visualization capabilities:

What visualization techniques would you use to represent time series data from a marketing campaign's performance metrics?

Expect candidates to discuss a variety of charts and graphs, such as line graphs or heat maps, demonstrating knowledge of when and how to use different types based on the data's nature and the analysis goals.

Hire Top Data Mining Talent with Skills Tests and Targeted Interview Questions

When hiring for Data Mining positions, it's important to verify candidates' skills accurately. This ensures you bring on board professionals who can truly contribute to your data-driven projects and decision-making processes.

One of the most effective ways to assess Data Mining skills is through specialized tests. The Data Mining Test from Adaface is designed to evaluate candidates' proficiency in key areas of the field.

After using the test to shortlist top performers, you can invite them for interviews. The interview questions provided in this post will help you dig deeper into their knowledge and experience, allowing you to make informed hiring decisions.

Ready to streamline your Data Mining hiring process? Sign up for Adaface to access our comprehensive suite of assessment tools and find the perfect fit for your team.


Data Mining Interview Questions FAQs

Look for skills in statistical analysis, machine learning, programming (e.g., Python, R), database management, and data visualization. Problem-solving abilities and domain knowledge are also important.

Ask about specific projects they've worked on, challenges they've faced, and solutions they've implemented. Request examples of data mining techniques they've applied in real-world scenarios.

Be cautious of candidates who can't explain basic concepts clearly, lack hands-on experience, or show little interest in staying updated with the latest trends and technologies in the field.

Ask them to explain a complex data mining concept or process as if they were presenting it to a non-technical stakeholder. This will help assess their communication and simplification skills.
