Recruiting the perfect Data Architect can be challenging due to the specialized skills required for the role. However, asking the right questions in interviews can simplify the process and help you identify top talent quickly.

This blog post offers a curated list of questions that assess candidates on everything from basic knowledge to data modeling and integration processes. We've grouped the questions into categories, such as evaluating junior Data Architects and understanding data integration techniques.

Using these questions, you can make informed hiring decisions that bring in candidates with the right expertise. To enhance your recruitment process further, consider utilizing our data modeling and design tests before interviews.

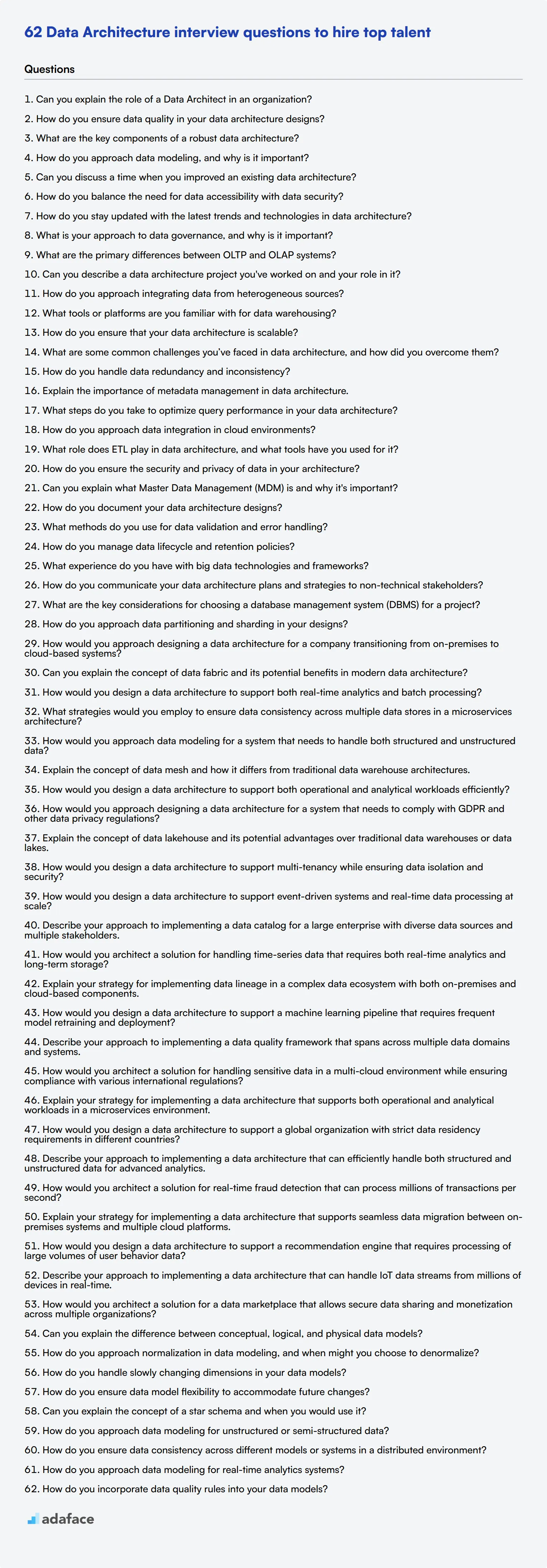

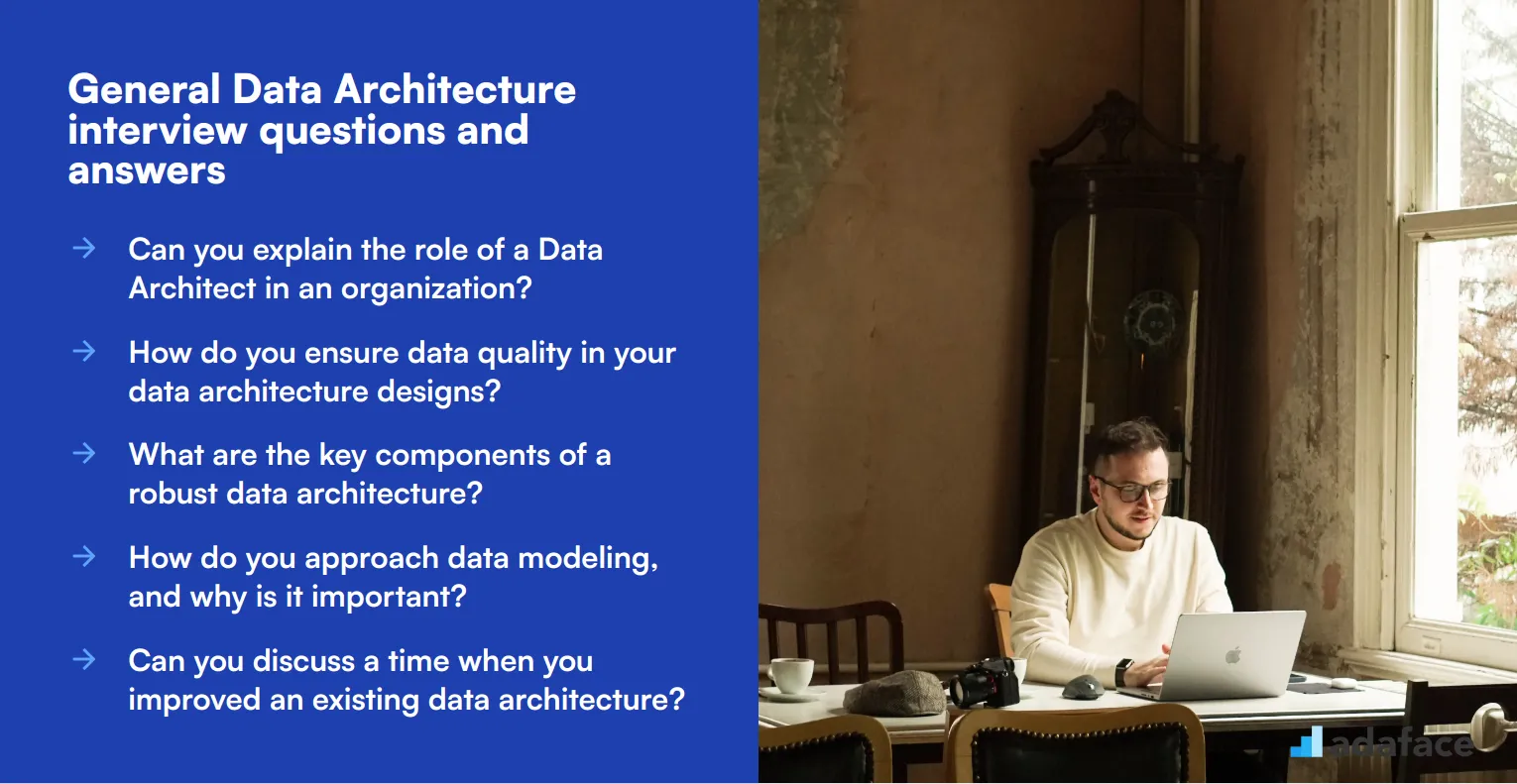

8 general Data Architecture interview questions and answers

Looking to find the perfect fit for your data architecture team? This list of eight general Data Architecture interview questions will help you assess candidates' understanding and skills without diving too deep into technical jargon. Perfect for face-to-face interviews, these questions will provide you with insights into their expertise and problem-solving capabilities.

1. Can you explain the role of a Data Architect in an organization?

A Data Architect is responsible for designing, creating, and managing the data architecture of an organization. They ensure data is organized, stored, and managed efficiently to support business goals and operations. Their role often involves collaborating with data engineers, analysts, and other stakeholders to align data strategies with business needs.

Look for candidates who can articulate the importance of a Data Architect in ensuring data integrity, security, and accessibility. They should also highlight their experience in working with cross-functional teams and their ability to translate business requirements into technical specifications.

2. How do you ensure data quality in your data architecture designs?

Ensuring data quality involves implementing processes and policies that guarantee data accuracy, completeness, consistency, and reliability. This can include data validation rules, data cleansing procedures, and regular audits. A Data Architect may also use tools and technologies designed to monitor and improve data quality.

Candidates should mention specific methodologies or tools they have used in the past to maintain high data quality. Look for answers that demonstrate a proactive approach to identifying and resolving data quality issues and an understanding of the impact of data quality on business operations.
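To make "data validation rules" concrete during the conversation, a small sketch like the one below can help. It is only an illustration: the `Rule` class, the rule names, and the sample customer records are all hypothetical and not taken from any particular data quality tool.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    """A named validation rule (hypothetical structure for illustration)."""
    name: str
    check: Callable[[dict], bool]


# Illustrative rules for an assumed "customer" record shape.
RULES = [
    Rule("email_present", lambda r: bool(r.get("email"))),
    Rule(
        "age_in_range",
        lambda r: isinstance(r.get("age"), int) and 0 <= r["age"] <= 130,
    ),
]


def validate(record: dict) -> list[str]:
    """Return the names of the rules that the record violates."""
    return [rule.name for rule in RULES if not rule.check(record)]


records = [
    {"email": "a@example.com", "age": 34},
    {"email": "", "age": 200},
]

# Map each record index to the list of rules it failed.
failures = {i: validate(r) for i, r in enumerate(records)}
```

A candidate with hands-on experience should be able to explain where checks like these would run (at ingestion, in the pipeline, or in scheduled audits) and what happens to records that fail.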

3. What are the key components of a robust data architecture?

A robust data architecture typically includes components such as data sources, data storage solutions (like databases and data warehouses), data integration tools, data processing and transformation mechanisms, and data governance frameworks. These components work together to ensure data is accessible, reliable, and secure.

Ideal candidates should provide a comprehensive overview of these components and explain their roles in creating a cohesive data ecosystem. They should also emphasize the importance of scalability, flexibility, and security in data architecture design.

4. How do you approach data modeling, and why is it important?

Data modeling involves creating visual representations of data and its relationships, which helps in designing databases and other data storage solutions. It is important because it provides a clear blueprint for how data is structured, stored, and accessed, ensuring consistency and efficiency in data management.

Candidates should discuss their experience with different data modeling techniques (such as ER diagrams and UML) and tools. They should also highlight the significance of data modeling in facilitating communication between technical and non-technical stakeholders and in supporting efficient data operations.

5. Can you discuss a time when you improved an existing data architecture?

In my previous role, I identified inefficiencies in our data architecture, such as redundant data storage and slow query performance. I proposed and implemented a new data model, optimized our data storage solutions, and introduced better data indexing techniques. As a result, we saw a significant improvement in data retrieval times and overall system performance.

Look for candidates who can provide specific examples of their contributions to enhancing data architectures. They should demonstrate problem-solving skills, the ability to identify and address inefficiencies, and a track record of successful project implementation.

6. How do you balance the need for data accessibility with data security?

Balancing data accessibility with security involves implementing role-based access controls, data encryption, and regular security audits. It's essential to ensure that data is readily available to authorized users while protecting it from unauthorized access and breaches.

Candidates should discuss their experience with security best practices and tools, as well as their approach to designing data architectures that prioritize both accessibility and security. Look for a clear understanding of the importance of protecting sensitive data while enabling efficient data use.
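As a reference point for the role-based access control idea mentioned above, here is a minimal sketch. The role names and permission strings are assumptions made up for this example; a real system would usually rely on the access control features of the database or platform.

```python
# Hypothetical mapping of roles to the permissions they grant.
ROLE_PERMISSIONS = {
    "analyst": {"sales.read"},
    "engineer": {"sales.read", "sales.write"},
}


def is_allowed(role: str, permission: str) -> bool:
    """Return True if the role grants the permission; unknown roles get nothing."""
    return permission in ROLE_PERMISSIONS.get(role, set())


analyst_can_read = is_allowed("analyst", "sales.read")
analyst_can_write = is_allowed("analyst", "sales.write")
```

The design choice worth probing is the default: unknown roles and unlisted permissions are denied, which keeps data accessible to authorized users without opening it to everyone else.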

7. How do you stay updated with the latest trends and technologies in data architecture?

I stay updated by regularly attending industry conferences, participating in webinars, and reading technical blogs and publications. I also engage with online communities and forums where professionals discuss the latest trends and share insights. Continuous learning and professional development are crucial in keeping up with the rapidly evolving field of data architecture.

Candidates should demonstrate a proactive approach to learning and staying informed about industry developments. Look for mentions of specific resources, communities, or professional networks they engage with to stay current with trends and technologies.

8. What is your approach to data governance, and why is it important?

Data governance involves establishing policies, procedures, and standards to ensure data is managed effectively and responsibly throughout its lifecycle. It is important because it ensures data quality, security, compliance with regulations, and alignment with business objectives. A robust data governance framework helps organizations make informed decisions and maintain trust in their data.

Candidates should provide a clear explanation of their data governance strategies and highlight their experience in implementing governance frameworks. Look for an understanding of the importance of data governance in achieving organizational goals and ensuring regulatory compliance.

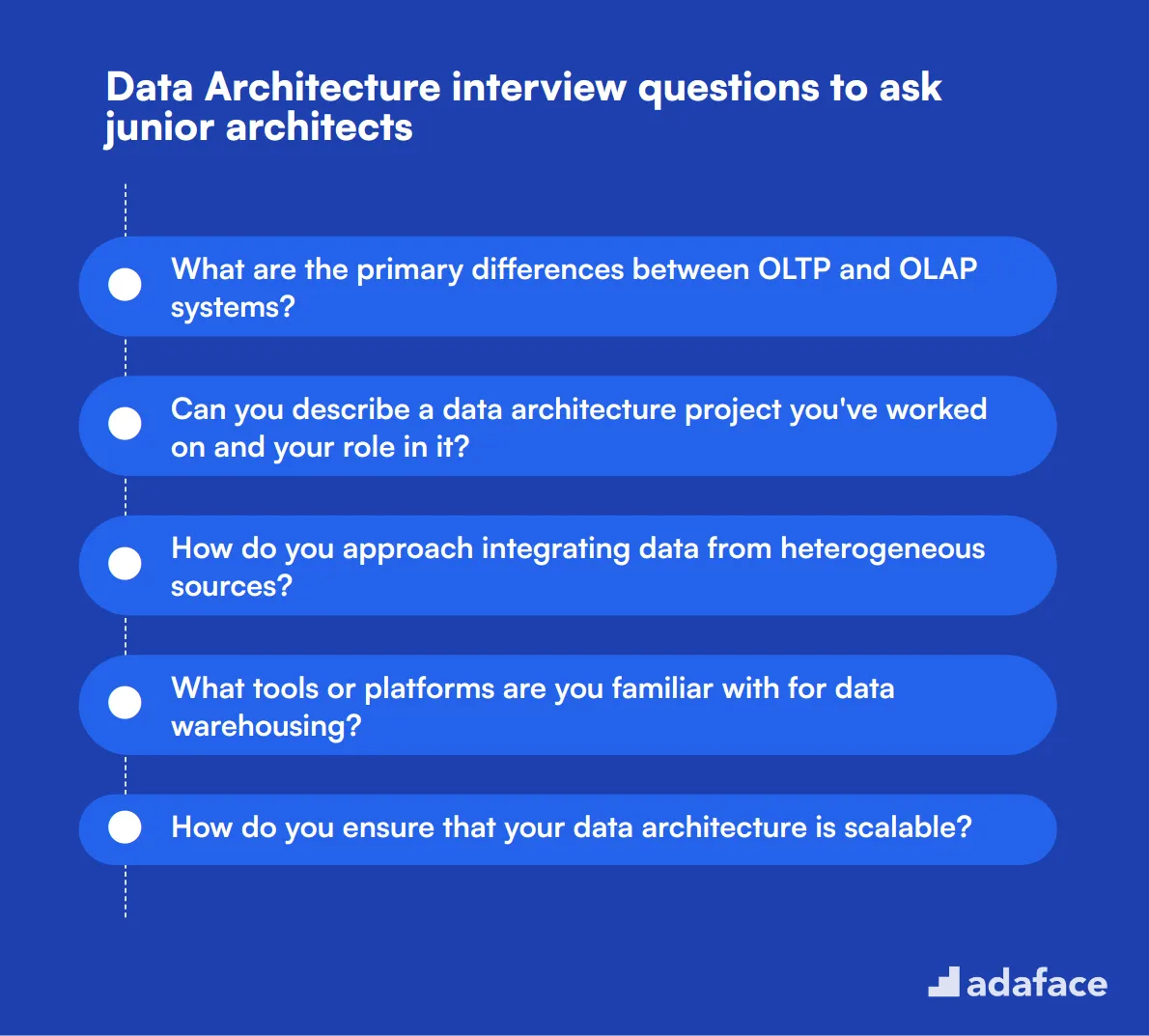

20 Data Architecture interview questions to ask junior architects

To determine if a candidate possesses the foundational skills for a Data Architect role, ask them some of these targeted interview questions. This list is designed for hiring managers to use during interviews to gauge the depth of a junior architect's understanding and practical experience. Find out more about what skills are critical for a Data Architect job.

- What are the primary differences between OLTP and OLAP systems?

- Can you describe a data architecture project you've worked on and your role in it?

- How do you approach integrating data from heterogeneous sources?

- What tools or platforms are you familiar with for data warehousing?

- How do you ensure that your data architecture is scalable?

- What are some common challenges you’ve faced in data architecture, and how did you overcome them?

- How do you handle data redundancy and inconsistency?

- Explain the importance of metadata management in data architecture.

- What steps do you take to optimize query performance in your data architecture?

- How do you approach data integration in cloud environments?

- What role does ETL play in data architecture, and what tools have you used for it?

- How do you ensure the security and privacy of data in your architecture?

- Can you explain what Master Data Management (MDM) is and why it's important?

- How do you document your data architecture designs?

- What methods do you use for data validation and error handling?

- How do you manage data lifecycle and retention policies?

- What experience do you have with big data technologies and frameworks?

- How do you communicate your data architecture plans and strategies to non-technical stakeholders?

- What are the key considerations for choosing a database management system (DBMS) for a project?

- How do you approach data partitioning and sharding in your designs?

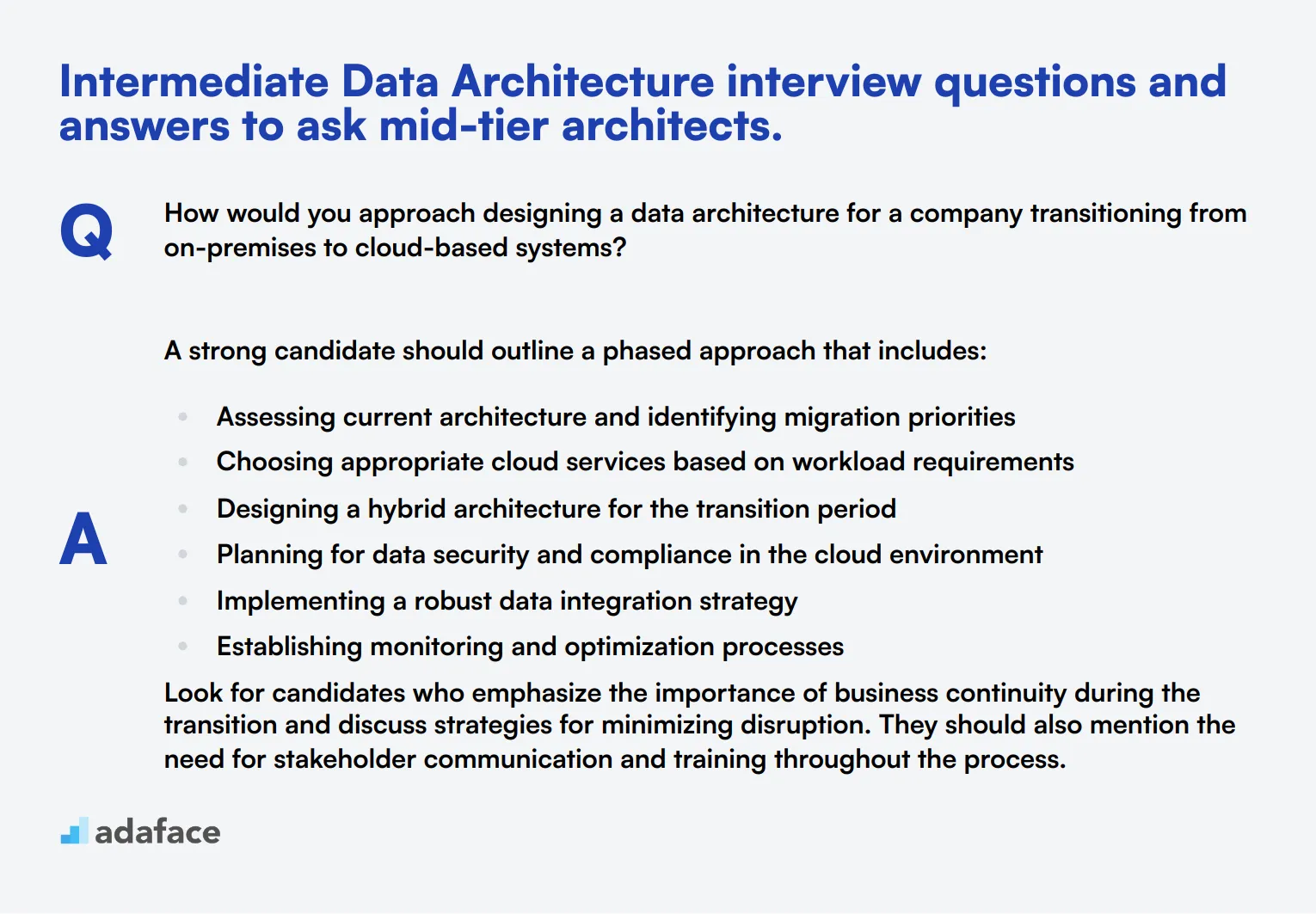

10 intermediate Data Architecture interview questions and answers to ask mid-tier architects

Ready to level up your data architecture interviews? These 10 intermediate questions are perfect for assessing mid-tier architects. They'll help you dig deeper into a candidate's understanding of data management principles and problem-solving skills. Use them to spark insightful discussions and uncover the true potential of your applicants.

1. How would you approach designing a data architecture for a company transitioning from on-premises to cloud-based systems?

A strong candidate should outline a phased approach that includes:

- Assessing current architecture and identifying migration priorities

- Choosing appropriate cloud services based on workload requirements

- Designing a hybrid architecture for the transition period

- Planning for data security and compliance in the cloud environment

- Implementing a robust data integration strategy

- Establishing monitoring and optimization processes

Look for candidates who emphasize the importance of business continuity during the transition and discuss strategies for minimizing disruption. They should also mention the need for stakeholder communication and training throughout the process.

2. Can you explain the concept of data fabric and its potential benefits in modern data architecture?

Data fabric is an architectural approach that provides a unified, consistent user experience and access to data across a distributed environment. It aims to simplify data management and integration in complex, multi-cloud, and hybrid infrastructures.

Key benefits of data fabric include:

- Improved data accessibility and discoverability

- Enhanced data governance and security

- Reduced data silos and improved data quality

- Increased agility in data operations

- Better support for real-time analytics and AI/ML applications

Look for candidates who can explain how data fabric differs from traditional data integration approaches and can discuss potential challenges in implementing a data fabric architecture. Strong answers will also touch on how data fabric supports data coordination across diverse environments.

3. How would you design a data architecture to support both real-time analytics and batch processing?

An effective architecture for supporting both real-time analytics and batch processing typically involves a lambda or kappa architecture approach. Key components might include:

- Stream processing layer for real-time data ingestion and analysis

- Batch processing layer for historical data analysis

- Serving layer to combine results from the stream and batch layers

- Data lake or data warehouse for data storage

- Message queue system for managing data flow

- APIs for data access and integration

Evaluate candidates based on their ability to explain the trade-offs between different architectural choices and their understanding of technologies that support both processing paradigms. Look for discussions on scalability, fault tolerance, and data consistency challenges in such hybrid systems.
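If you want a simple mental model of the serving layer in a lambda-style design, the sketch below may help. It assumes a page-view counting use case with made-up numbers: `batch_view` stands in for results precomputed by the batch layer, and `speed_view` for counts from events that arrived since the last batch run.

```python
from collections import Counter

# Assumed precomputed totals from the batch layer (historical data).
batch_view = Counter({"page_a": 1000, "page_b": 250})

# Assumed incremental counts from the stream layer since the last batch run.
speed_view = Counter({"page_a": 12, "page_c": 3})


def serve(key: str) -> int:
    """Answer a query by combining the batch result with the recent increments."""
    return batch_view[key] + speed_view[key]


total_page_a = serve("page_a")
total_page_c = serve("page_c")
```

In this toy version the two views never overlap in time. A good follow-up is to ask how the candidate would avoid double counting when a batch run catches up with events the stream layer has already served.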

4. What strategies would you employ to ensure data consistency across multiple data stores in a microservices architecture?

Ensuring data consistency in a microservices architecture is challenging but crucial. Candidates should discuss strategies such as:

- Implementing event-driven architecture with a message broker

- Using the Saga pattern for distributed transactions

- Applying the CQRS (Command Query Responsibility Segregation) pattern

- Implementing eventual consistency with compensating transactions

- Utilizing a distributed cache for frequently accessed data

- Employing a change data capture (CDC) mechanism

Look for candidates who understand the complexities of maintaining consistency in distributed systems. They should be able to explain the trade-offs between strong consistency and eventual consistency, and discuss how to choose the right approach based on business requirements.
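The Saga pattern with compensating transactions can be hard to picture, so here is a simplified, in-process sketch. The step names and the `run_saga` helper are hypothetical; in a real microservices setup each step would be a call to a separate service, usually coordinated through a message broker.

```python
from typing import Callable

# Each step is (name, action, compensation). All names here are illustrative.
Step = tuple[str, Callable[[], None], Callable[[], None]]


def run_saga(steps: list[Step]) -> list[str]:
    """Run steps in order; on failure, undo completed steps in reverse order."""
    log: list[str] = []
    done: list[Step] = []
    for step in steps:
        name, action, _ = step
        try:
            action()
            log.append(f"done:{name}")
            done.append(step)
        except Exception:
            log.append(f"failed:{name}")
            for prev_name, _, compensate in reversed(done):
                compensate()
                log.append(f"compensated:{prev_name}")
            break
    return log


def fail() -> None:
    raise RuntimeError("payment declined")


def noop() -> None:
    pass


log = run_saga([
    ("reserve_stock", noop, noop),
    ("charge_card", fail, noop),
    ("ship_order", noop, noop),
])
```

Here the second step fails, so the first step is compensated and the third never runs. Candidates should be able to explain why this gives eventual consistency rather than the atomicity of a single database transaction.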

5. How would you approach data modeling for a system that needs to handle both structured and unstructured data?

A comprehensive approach to modeling both structured and unstructured data typically involves:

- Using a combination of relational databases for structured data and NoSQL databases for unstructured data

- Implementing a data lake to store raw, unstructured data

- Creating a metadata layer to describe and categorize unstructured data

- Utilizing schema-on-read approaches for flexibility

- Implementing data virtualization to provide a unified view of diverse data types

- Considering graph databases for handling complex relationships

Assess candidates based on their understanding of different data modeling techniques and their ability to choose appropriate solutions for various data types. Look for discussions on how to maintain data quality and consistency across different data stores and how to enable efficient querying and analysis of mixed data types.
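Schema-on-read is easier to discuss with a small example. The sketch below assumes raw events are stored as JSON lines (the event shape and field names are invented) and applies a schema only when the data is read.

```python
import json

# Assumed raw storage: one JSON document per line, with inconsistent shapes.
raw_events = [
    '{"user": "u1", "action": "click", "meta": {"x": 10}}',
    '{"user": "u2", "action": "view"}',
    "not json at all",
]


def read_with_schema(lines, fields):
    """Project each parsable JSON object onto the requested fields at read time."""
    for line in lines:
        try:
            doc = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip malformed lines instead of failing the whole read
        if isinstance(doc, dict):
            yield {field: doc.get(field) for field in fields}


rows = list(read_with_schema(raw_events, ["user", "action"]))
```

Nothing was validated at write time, so the reader decides which fields matter and how to treat bad records. That flexibility is the benefit, and the quality risk it carries is a good topic for follow-up questions.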

6. Explain the concept of data mesh and how it differs from traditional data warehouse architectures.

Data mesh is a decentralized approach to data architecture that treats data as a product and applies domain-driven design principles to data management. Key characteristics include:

- Domain-oriented, decentralized data ownership and architecture

- Data as a product, with each domain responsible for the quality and usability of its data

- Self-serve data infrastructure as a platform

- Federated computational governance to ensure interoperability and compliance

Look for candidates who can contrast data mesh with traditional centralized data warehouse architectures. They should be able to discuss the potential benefits of data mesh, such as improved scalability, faster time-to-market for data products, and better alignment with business domains. Also, assess their understanding of the challenges in implementing a data mesh, such as ensuring data consistency and managing cross-domain data products.

7. How would you design a data architecture to support both operational and analytical workloads efficiently?

An effective architecture for supporting both operational and analytical workloads might include:

- Operational data store (ODS) for real-time transactional data

- Data warehouse for historical and aggregated data

- Data lake for storing raw, unprocessed data

- ETL/ELT processes for data integration and transformation

- OLAP cubes or in-memory analytics engines for fast query processing

- Data virtualization layer for unified data access

- Caching mechanisms to improve performance for frequently accessed data

Evaluate candidates based on their ability to explain how to balance the needs of transactional systems with those of analytical systems. Look for discussions on data replication strategies, real-time data integration techniques, and approaches to minimize the impact of analytical queries on operational systems. Strong candidates will also mention the importance of data governance and metadata management in such hybrid architectures.

8. How would you approach designing a data architecture for a system that needs to comply with GDPR and other data privacy regulations?

Designing a GDPR-compliant data architecture involves several key considerations:

- Implementing data classification and tagging mechanisms

- Designing data flows with privacy by design principles

- Incorporating data masking and encryption for sensitive data

- Implementing robust access control and authentication mechanisms

- Creating data lineage and audit trail capabilities

- Designing mechanisms for data retention and deletion

- Implementing processes for handling data subject rights (e.g., right to be forgotten)

Look for candidates who demonstrate a thorough understanding of data privacy principles and their architectural implications. They should be able to discuss strategies for data minimization, purpose limitation, and data protection. Strong answers will also touch on the challenges of maintaining compliance across different geographical regions and the importance of regular audits and assessments.
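For the data masking point, a short sketch can anchor the discussion. It shows two assumed techniques: keyed hashing to pseudonymize an identifier, and display masking of an email address. The key value and function names are placeholders, and this alone does not make a system compliant.

```python
import hashlib
import hmac

# Placeholder key for illustration only; a real key would live in a secrets manager.
SECRET_KEY = b"demo-key-rotate-in-practice"


def pseudonymize(value: str) -> str:
    """Replace an identifier with a keyed SHA-256 digest (stable for joins)."""
    return hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_email(email: str) -> str:
    """Keep the first character and the domain, hide the rest of the local part."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"


token = pseudonymize("alice@example.com")
masked = mask_email("alice@example.com")
```

Strong candidates will point out that the same input always produces the same token, which keeps joins working but also means pseudonymized data still needs protection and a deletion strategy.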

9. Explain the concept of data lakehouse and its potential advantages over traditional data warehouses or data lakes.

A data lakehouse is an architectural pattern that combines the best features of data warehouses and data lakes. It aims to provide the structure and performance of a data warehouse with the flexibility and scalability of a data lake.

Key advantages of a data lakehouse include:

- Support for both structured and unstructured data

- ACID transactions on large datasets

- Schema enforcement and governance

- BI and ML workload support without data movement

- Open storage formats (e.g., Parquet)

- Improved data freshness and reduced data silos

- Potential for cost savings by eliminating separate systems

Evaluate candidates based on their understanding of the limitations of traditional data warehouses and data lakes, and how data lakehouses address these issues. Look for discussions on technologies that enable data lakehouse architectures (e.g., Delta Lake, Iceberg) and potential challenges in implementing and managing a data lakehouse.

10. How would you design a data architecture to support multi-tenancy while ensuring data isolation and security?

Designing a multi-tenant data architecture requires careful consideration of data isolation, security, and performance. Key strategies include:

- Implementing a shared database with separate schemas for each tenant

- Using row-level security for fine-grained access control

- Encrypting data at rest and in transit

- Implementing robust authentication and authorization mechanisms

- Designing efficient data partitioning strategies

- Using database views or materialized views for tenant-specific data access

- Implementing audit logging and monitoring for all data access

Look for candidates who can discuss the trade-offs between different multi-tenancy models (shared database, shared schema, separate databases) and their implications for scalability, maintenance, and cost. Strong answers will also address strategies for handling tenant-specific customizations, data migration between tenants, and ensuring performance isolation.
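The shared-table model can be illustrated with a tiny example. This sketch uses Python's built-in `sqlite3` module, which has no native row-level security, so tenant isolation is emulated by forcing every query through a function that always filters on `tenant_id`. The table and tenant names are made up.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders ("
    "id INTEGER PRIMARY KEY, tenant_id TEXT NOT NULL, total REAL)"
)
conn.executemany(
    "INSERT INTO orders (tenant_id, total) VALUES (?, ?)",
    [("acme", 10.0), ("acme", 5.5), ("globex", 99.0)],
)


def orders_for(tenant_id: str) -> list[tuple]:
    """Return only the rows that belong to the given tenant."""
    cur = conn.execute(
        "SELECT id, total FROM orders WHERE tenant_id = ? ORDER BY id",
        (tenant_id,),
    )
    return cur.fetchall()


acme_orders = orders_for("acme")
globex_orders = orders_for("globex")
```

Relying on application code for the filter is fragile, which is why candidates often prefer database-enforced row-level security or separate schemas. Ask them where they would draw that line.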

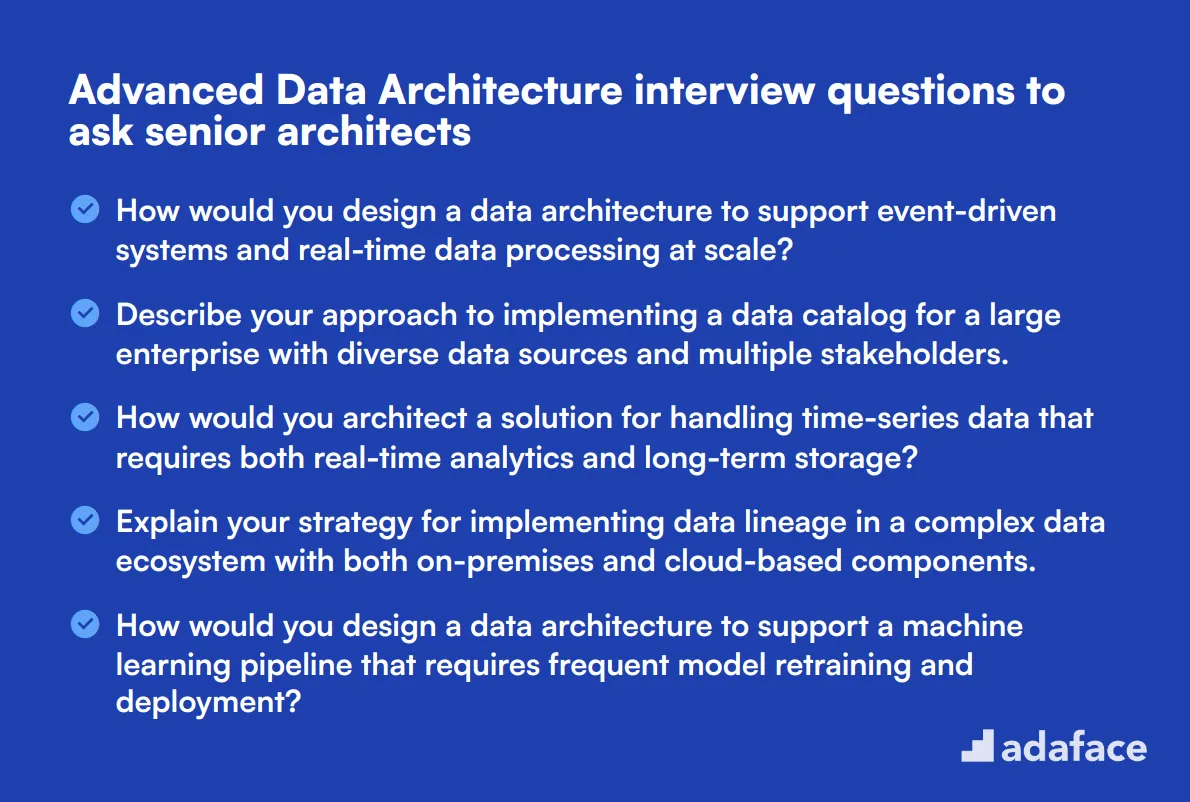

15 advanced Data Architecture interview questions to ask senior architects

To assess the advanced capabilities of senior data architects, use these 15 in-depth questions. They're designed to probe complex problem-solving skills and strategic thinking in data architecture. Use them to evaluate candidates' expertise in handling sophisticated data challenges and their ability to drive innovation in your organization.

- How would you design a data architecture to support event-driven systems and real-time data processing at scale?

- Describe your approach to implementing a data catalog for a large enterprise with diverse data sources and multiple stakeholders.

- How would you architect a solution for handling time-series data that requires both real-time analytics and long-term storage?

- Explain your strategy for implementing data lineage in a complex data ecosystem with both on-premises and cloud-based components.

- How would you design a data architecture to support a machine learning pipeline that requires frequent model retraining and deployment?

- Describe your approach to implementing a data quality framework that spans across multiple data domains and systems.

- How would you architect a solution for handling sensitive data in a multi-cloud environment while ensuring compliance with various international regulations?

- Explain your strategy for implementing a data architecture that supports both operational and analytical workloads in a microservices environment.

- How would you design a data architecture to support a global organization with strict data residency requirements in different countries?

- Describe your approach to implementing a data architecture that can efficiently handle both structured and unstructured data for advanced analytics.

- How would you architect a solution for real-time fraud detection that can process millions of transactions per second?

- Explain your strategy for implementing a data architecture that supports seamless data migration between on-premises systems and multiple cloud platforms.

- How would you design a data architecture to support a recommendation engine that requires processing of large volumes of user behavior data?

- Describe your approach to implementing a data architecture that can handle IoT data streams from millions of devices in real-time.

- How would you architect a solution for a data marketplace that allows secure data sharing and monetization across multiple organizations?

9 Data Architecture interview questions and answers related to data modeling

Ready to dive into the world of data modeling? These 9 data architecture interview questions will help you assess candidates' understanding of this crucial aspect. Use them to gauge how well applicants can translate business needs into effective data structures, ensuring your data architect can build a solid foundation for your organization's data ecosystem.

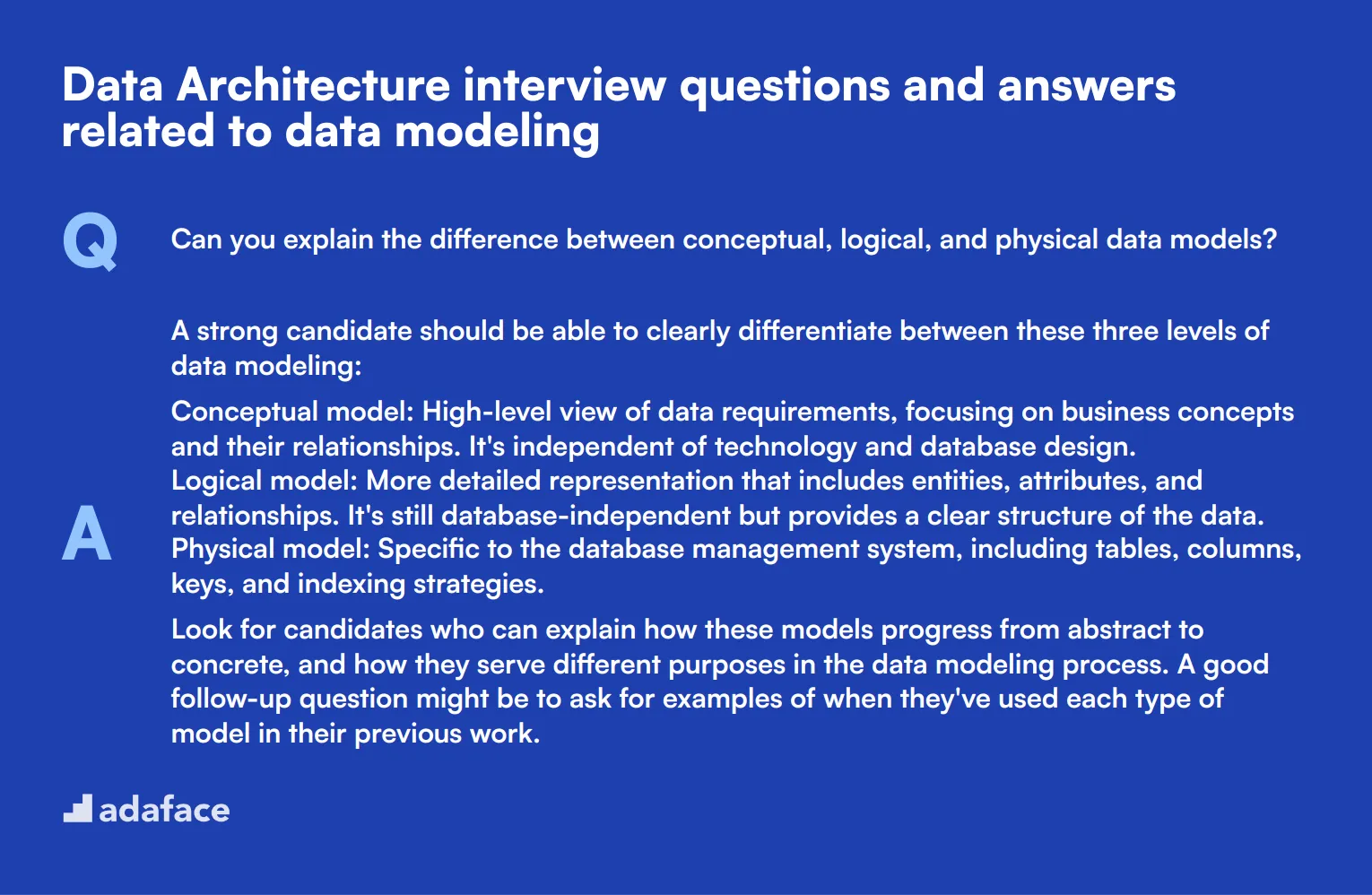

1. Can you explain the difference between conceptual, logical, and physical data models?

A strong candidate should be able to clearly differentiate between these three levels of data modeling:

- Conceptual model: High-level view of data requirements, focusing on business concepts and their relationships. It's independent of technology and database design.

- Logical model: More detailed representation that includes entities, attributes, and relationships. It's still database-independent but provides a clear structure of the data.

- Physical model: Specific to the database management system, including tables, columns, keys, and indexing strategies.

Look for candidates who can explain how these models progress from abstract to concrete, and how they serve different purposes in the data modeling process. A good follow-up question might be to ask for examples of when they've used each type of model in their previous work.

2. How do you approach normalization in data modeling, and when might you choose to denormalize?

An ideal response should cover the basics of normalization and its benefits:

- Normalization reduces data redundancy and improves data integrity

- It typically involves breaking down tables into smaller, more focused tables

- The process usually aims for at least Third Normal Form (3NF)

Regarding denormalization, candidates should recognize that it's sometimes necessary for performance reasons:

- Denormalization can improve query performance by reducing the need for joins

- It's often used in data warehousing and reporting systems

- The decision to denormalize should balance performance gains against the increased complexity of data management

Look for candidates who can articulate the trade-offs involved and provide examples of when they've made these decisions in real-world scenarios. Their approach should demonstrate a nuanced understanding of data modeling principles.
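The trade-off is easy to demonstrate with a toy schema. The sketch below, using Python's built-in `sqlite3` module and invented table names, answers the same question from a normalized pair of tables (with a join) and from a denormalized reporting table (without one).

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Normalized: customer names are stored once
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE orders (
    id INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(id),
    total REAL NOT NULL
);
INSERT INTO customers VALUES (1, 'Ada'), (2, 'Grace');
INSERT INTO orders VALUES (1, 1, 20.0), (2, 1, 5.0), (3, 2, 7.5);

-- Denormalized: the customer name is copied onto every order row
CREATE TABLE order_report AS
SELECT o.id AS order_id, c.name AS customer_name, o.total
FROM orders o JOIN customers c ON c.id = o.customer_id;
""")

normalized = conn.execute(
    "SELECT c.name, SUM(o.total) FROM orders o "
    "JOIN customers c ON c.id = o.customer_id "
    "GROUP BY c.name ORDER BY c.name"
).fetchall()

denormalized = conn.execute(
    "SELECT customer_name, SUM(total) FROM order_report "
    "GROUP BY customer_name ORDER BY customer_name"
).fetchall()
```

Both queries return the same totals. The denormalized table avoids the join, but a customer rename now has to be applied to every copied row, which is exactly the maintenance cost candidates should weigh.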

3. How do you handle slowly changing dimensions in your data models?

A competent candidate should be familiar with the concept of slowly changing dimensions (SCDs) and be able to explain different strategies for handling them:

- Type 1: Overwrite the old value with the new value

- Type 2: Add a new row with the changed data, keeping historical records

- Type 3: Add new columns to track changes

- Type 4: Use a separate history table

- Type 6: Combine Type 1, 2, and 3 approaches

They should be able to discuss the pros and cons of each approach and when to use them. For example, Type 2 is great for maintaining a full history but can lead to table bloat, while Type 1 is simple but loses historical data.

Look for candidates who can provide real-world examples of implementing SCDs and explain how they chose the appropriate type based on business requirements and system constraints. A good follow-up question might be to ask about their experience with tools or techniques for efficiently managing SCDs in large datasets.
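To check that a candidate really understands Type 2, it helps to have a reference in mind. The following is a minimal in-memory sketch, not a production pattern: the row layout (`key`, `attrs`, `valid_from`, `valid_to`) and the `apply_scd2` helper are assumptions for illustration.

```python
from datetime import date


def apply_scd2(rows: list[dict], key: str, new_attrs: dict, as_of: date) -> None:
    """Close the current row for `key` if its attributes changed, then add a new one."""
    current = next(
        (r for r in rows if r["key"] == key and r["valid_to"] is None),
        None,
    )
    if current is not None:
        if current["attrs"] == new_attrs:
            return  # nothing changed, keep the current row open
        current["valid_to"] = as_of  # end-date the old version
    rows.append(
        {"key": key, "attrs": dict(new_attrs), "valid_from": as_of, "valid_to": None}
    )


dim_customer: list[dict] = []
apply_scd2(dim_customer, "c1", {"city": "Oslo"}, date(2023, 1, 1))
apply_scd2(dim_customer, "c1", {"city": "Bergen"}, date(2024, 6, 1))
apply_scd2(dim_customer, "c1", {"city": "Bergen"}, date(2024, 7, 1))  # no change
```

After these calls the dimension holds two rows for `c1`: a closed Oslo row and an open Bergen row. A Type 1 approach would have kept a single row and lost the Oslo history.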

4. How do you ensure data model flexibility to accommodate future changes?

A strong answer should touch on several key points:

- Use of abstraction layers to separate business logic from physical implementation

- Implementing a modular design that allows for easy additions or modifications

- Avoiding hard-coding of business rules directly into the data model

- Utilizing metadata-driven approaches for increased adaptability

- Considering extensible schemas or NoSQL solutions for highly variable data

Candidates should also mention the importance of thorough documentation and version control for managing model evolution over time. Look for responses that demonstrate foresight and an understanding of the balance between current needs and future scalability.

A good candidate might also discuss their experience with agile data modeling techniques or how they've successfully adapted models to accommodate unforeseen business changes in past projects.

5. Can you explain the concept of a star schema and when you would use it?

A comprehensive answer should cover the following points:

- A star schema consists of one or more fact tables referencing any number of dimension tables

- Fact tables contain quantitative data (metrics), while dimension tables contain descriptive attributes

- It's called a star schema because the diagram resembles a star, with the fact table at the center

- Star schemas are typically used in data warehousing and business intelligence applications

Candidates should be able to explain the benefits of star schemas:

- Simplified queries and improved query performance

- Easier for business users to understand and navigate

- Efficient for aggregating large datasets

Look for answers that also mention potential drawbacks, such as data redundancy and the challenges of maintaining data integrity across denormalized structures. A strong candidate might discuss their experience in implementing star schemas and how they've balanced performance needs with data management considerations.
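A small worked example can make the star shape tangible. This sketch uses Python's built-in `sqlite3` module with hypothetical table names (`fact_sales`, `dim_product`, `dim_date`) and a handful of made-up rows.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Dimension tables hold descriptive attributes
CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, category TEXT);
CREATE TABLE dim_date (date_id INTEGER PRIMARY KEY, year INTEGER);

-- The fact table holds metrics plus a key into each dimension
CREATE TABLE fact_sales (
    product_id INTEGER REFERENCES dim_product(product_id),
    date_id INTEGER REFERENCES dim_date(date_id),
    amount REAL
);

INSERT INTO dim_product VALUES (1, 'books'), (2, 'games');
INSERT INTO dim_date VALUES (10, 2023), (11, 2024);
INSERT INTO fact_sales VALUES (1, 10, 12.0), (1, 11, 8.0), (2, 11, 30.0);
""")

# A typical star-schema query: join the fact to its dimensions, then aggregate.
sales_by_category_year = conn.execute("""
SELECT p.category, d.year, SUM(f.amount)
FROM fact_sales f
JOIN dim_product p ON p.product_id = f.product_id
JOIN dim_date d ON d.date_id = f.date_id
GROUP BY p.category, d.year
ORDER BY p.category, d.year
""").fetchall()
```

Every join goes from the central fact table straight to a dimension, which is what keeps queries simple and easy for business users to follow.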

6. How do you approach data modeling for unstructured or semi-structured data?

A good response should acknowledge the challenges of modeling non-relational data and discuss various approaches:

- Using NoSQL databases like document stores (e.g., MongoDB) or key-value stores

- Implementing data lakes to store raw, unstructured data

- Utilizing schema-on-read approaches for flexibility

- Considering graph databases for highly interconnected data

- Employing JSON or XML data types within relational databases

Candidates should also mention strategies for integrating unstructured data with structured data, such as:

- Creating hybrid models that combine relational and non-relational elements

- Using ETL processes to extract structured information from unstructured sources

- Implementing metadata management systems to track and categorize diverse data types

Look for answers that demonstrate adaptability and a willingness to explore non-traditional modeling techniques. A strong candidate might discuss their experience with specific tools or frameworks designed for handling unstructured data in big data environments.

7. How do you ensure data consistency across different models or systems in a distributed environment?

A comprehensive answer should address several key strategies:

- Implementing a single source of truth for critical data elements

- Using master data management (MDM) systems to maintain consistent reference data

- Employing data governance practices to establish data ownership and quality standards

- Utilizing event-driven architectures or change data capture (CDC) for real-time synchronization

- Implementing data validation and reconciliation processes

Candidates should also discuss the challenges of maintaining consistency in distributed systems:

- Dealing with network latency and potential failures

- Balancing consistency with availability and partition tolerance (CAP theorem)

- Managing eventual consistency in large-scale systems

Look for answers that demonstrate practical experience in addressing these challenges. A strong candidate might discuss specific tools or technologies they've used for data integration and synchronization, as well as their approach to designing resilient data architectures that can handle inconsistencies gracefully.

8. How do you approach data modeling for real-time analytics systems?

A strong answer should cover the following aspects:

- Emphasis on low-latency data ingestion and processing

- Use of stream processing technologies (e.g., Apache Kafka, Apache Flink)

- Implementing time-series data models for efficient storage and querying

- Consideration of in-memory databases for faster data access

- Designing for high concurrency and scalability

Candidates should also discuss strategies for balancing real-time requirements with historical analysis:

- Using lambda or kappa architectures to combine batch and stream processing

- Implementing data tiering to manage hot, warm, and cold data

- Considering approximation algorithms for real-time aggregations on large datasets

Look for answers that demonstrate an understanding of the trade-offs involved in real-time systems, such as consistency vs. availability. A strong candidate might discuss their experience with specific real-time analytics projects and how they've optimized data models to meet stringent performance requirements.

9. How do you incorporate data quality rules into your data models?

An ideal response should cover several key strategies:

- Defining and implementing constraints (e.g., primary keys, foreign keys, check constraints)

- Using triggers or stored procedures to enforce complex business rules

- Implementing data validation at the application layer

- Designing for data profiling and monitoring

- Incorporating data cleansing and standardization processes

Candidates should also discuss the importance of:

- Collaborating with business stakeholders to define data quality standards

- Implementing metadata management to document data quality rules

- Setting up automated data quality checks and alerts

- Establishing processes for handling exceptions and data remediation

Look for answers that demonstrate a proactive approach to data quality. A strong candidate might discuss their experience with data quality tools or frameworks, and how they've integrated data quality considerations throughout the data lifecycle, from ingestion to reporting.
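Constraints are the most direct way to build quality rules into a model. The sketch below uses Python's built-in `sqlite3` module with invented tables to show a foreign key and a check constraint rejecting bad rows; the `try_insert` helper is hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
conn.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT NOT NULL UNIQUE);
CREATE TABLE orders (
    id INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(id),
    quantity INTEGER NOT NULL CHECK (quantity > 0)
);
INSERT INTO customers VALUES (1, 'a@example.com');
""")


def try_insert(sql: str, params: tuple) -> bool:
    """Attempt an insert; return False if a constraint rejects it."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(sql, params)
        return True
    except sqlite3.IntegrityError:
        return False


INSERT_ORDER = "INSERT INTO orders (customer_id, quantity) VALUES (?, ?)"
ok = try_insert(INSERT_ORDER, (1, 2))            # valid row
bad_quantity = try_insert(INSERT_ORDER, (1, 0))  # violates the CHECK constraint
bad_customer = try_insert(INSERT_ORDER, (99, 1)) # violates the foreign key
```

Rules enforced this way hold no matter which application writes the data. Candidates should be able to say which rules belong in the database and which are better handled in pipelines or the application layer.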

Which Data Architecture skills should you evaluate during the interview phase?

While a single interview may not reveal every facet of a candidate’s capabilities, focusing on the key skills specific to Data Architecture can streamline the selection process. These core competencies are worth assessing because they directly affect the success of any data architecture project.

Data Modeling

Data modeling is crucial for creating data structures that effectively support the business's needs. It involves conceptualizing and visualizing data relationships and flows, which are fundamental to building efficient databases and data warehouses.

To preliminarily assess a candidate's expertise in data modeling, consider utilizing a data modeling test from our library. This test includes relevant MCQs to help pinpoint candidates with the necessary skills.

In addition to an assessment test, asking targeted interview questions can help further evaluate a candidate's practical understanding of data modeling.

Can you describe the approach you would take to design a database for a new inventory management system?

Listen for a clear, methodical approach in their answer, highlighting their ability to translate business requirements into a coherent database design. Understanding of normalization and entity-relationship diagrams (ERDs) is also critical.
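To make the normalization point concrete, here is one possible shape a strong answer might arrive at, sketched with Python's built-in sqlite3 module. The `product`, `warehouse`, and `stock_level` tables and the sample rows are assumptions made up for this illustration; a real design would follow from the business requirements the candidate gathers.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Hypothetical normalized schema: products and warehouses are separate entities,
# and stock_level resolves the many-to-many relationship between them.
conn.executescript("""
CREATE TABLE product (
    product_id INTEGER PRIMARY KEY,
    sku        TEXT NOT NULL UNIQUE,
    name       TEXT NOT NULL
);
CREATE TABLE warehouse (
    warehouse_id INTEGER PRIMARY KEY,
    name         TEXT NOT NULL
);
CREATE TABLE stock_level (
    product_id   INTEGER NOT NULL REFERENCES product(product_id),
    warehouse_id INTEGER NOT NULL REFERENCES warehouse(warehouse_id),
    quantity     INTEGER NOT NULL CHECK (quantity >= 0),
    PRIMARY KEY (product_id, warehouse_id)  -- one row per product per warehouse
);
INSERT INTO product VALUES (1, 'SKU-001', 'Widget'), (2, 'SKU-002', 'Gadget');
INSERT INTO warehouse VALUES (1, 'North'), (2, 'South');
INSERT INTO stock_level VALUES (1, 1, 10), (1, 2, 5), (2, 1, 7);
""")

# Total stock per SKU across all warehouses, derived by joining the entities.
stock_by_sku = dict(conn.execute("""
    SELECT p.sku, SUM(s.quantity)
    FROM product AS p
    JOIN stock_level AS s ON s.product_id = p.product_id
    GROUP BY p.sku
""").fetchall())
```

Because product details live in one place and quantities in another, renaming a product or adding a warehouse touches a single row, which is the kind of benefit a candidate should be able to explain when they mention normalization.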

Data Integration

Data integration is key in ensuring that disparate data sources can be effectively combined to provide a unified view. This skill is essential for developing systems that provide comprehensive analytics and insights across various data environments.

For preliminary screening, consider using an assessment test that covers data integration scenarios. Although one is not available directly in our library, you can explore related assessments or develop customized questions.

Consider asking detailed questions on data integration to gain insight into the candidate's ability to handle complex data from multiple sources.

Explain a challenging data integration project you've worked on and how you addressed the challenges.

Look for answers that demonstrate the candidate’s problem-solving abilities and their experience with various data integration tools and methodologies. Their ability to identify and overcome specific challenges should also be evident.

SQL

Proficiency in SQL is essential for data architects as it is the primary language used for interacting with databases. Candidates must be adept at writing efficient, complex queries to manage and manipulate data effectively.

You can gauge a candidate's SQL skills efficiently through our SQL test, which includes a variety of MCQs tailored to assess their command of SQL.

To further explore their SQL capabilities, consider including practical interview questions that require in-depth knowledge.

Write a SQL query to find the second highest salary from the 'employee' table.

Evaluate the candidate's ability to understand SQL functions and their approach to solving common SQL problems. Effective use of subqueries or window functions can also indicate a strong grasp of SQL.
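For interviewers who want a reference answer, the sketch below shows two common approaches, run through Python's built-in sqlite3 module so it can be tried locally. The question only names the 'employee' table, so the `salary` column, the other columns, and the sample rows are assumptions for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Hypothetical table layout and sample data; note the tie for the top salary.
conn.executescript("""
CREATE TABLE employee (
    id     INTEGER PRIMARY KEY,
    name   TEXT NOT NULL,
    salary INTEGER NOT NULL
);
INSERT INTO employee (name, salary) VALUES
    ('Ana', 90000), ('Ben', 120000), ('Cho', 120000), ('Dee', 75000);
""")

# Approach 1 (subquery): the largest salary strictly below the overall maximum.
via_subquery = conn.execute("""
    SELECT MAX(salary)
    FROM employee
    WHERE salary < (SELECT MAX(salary) FROM employee)
""").fetchone()[0]

# Approach 2 (DISTINCT + OFFSET): skip the top distinct salary and take the next.
row = conn.execute("""
    SELECT DISTINCT salary
    FROM employee
    ORDER BY salary DESC
    LIMIT 1 OFFSET 1
""").fetchone()
via_offset = row[0] if row else None  # no row when fewer than two distinct salaries exist
```

Good follow-up prompts are the edge cases: what happens when two employees share the top salary, and what the query returns when the table has fewer than two distinct salaries. Candidates may also propose a ranking window function such as DENSE_RANK, which is a reasonable alternative on databases that support it.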

Hire top talent with Data Architecture skills assessments and the right interview questions

If you are looking to hire someone with Data Architecture skills, it is important to ensure they possess the relevant expertise.

The most accurate way to do this is by using skill tests. Check out our Data Architecture Tests to assess candidates thoroughly.

Once you use these tests, you can shortlist the best applicants and invite them for interviews.

Ready to get started? Head over to our sign-up page to begin assessing candidates.

Data Architecture Interview Questions FAQs

A Data Architect should have strong analytical skills, knowledge of database systems, data modeling expertise, understanding of data integration processes, and familiarity with data governance principles.

Ask questions about different data modeling techniques, normalization, denormalization, and have them explain how they would approach modeling a specific business scenario.

Junior roles typically focus on basic data modeling and database design, while senior roles involve complex system architecture, data strategy, and leadership in large-scale projects.

Data integration is a key aspect of Data Architecture. It ensures smooth data flow between systems and is critical for creating a unified view of organizational data.
