Hiring the right Cassandra developer can be a challenge for recruiters and hiring managers. Asking the right interview questions is key to assessing a candidate's knowledge and skills effectively.

This blog post provides a comprehensive list of Cassandra interview questions, ranging from basic to advanced levels. We cover topics like data modeling, performance tuning, and situational scenarios to help you evaluate candidates at different experience levels.

By using these questions, you can gain valuable insights into a candidate's Cassandra expertise and problem-solving abilities. Consider pairing these interview questions with a Cassandra skills assessment for a more thorough evaluation process.


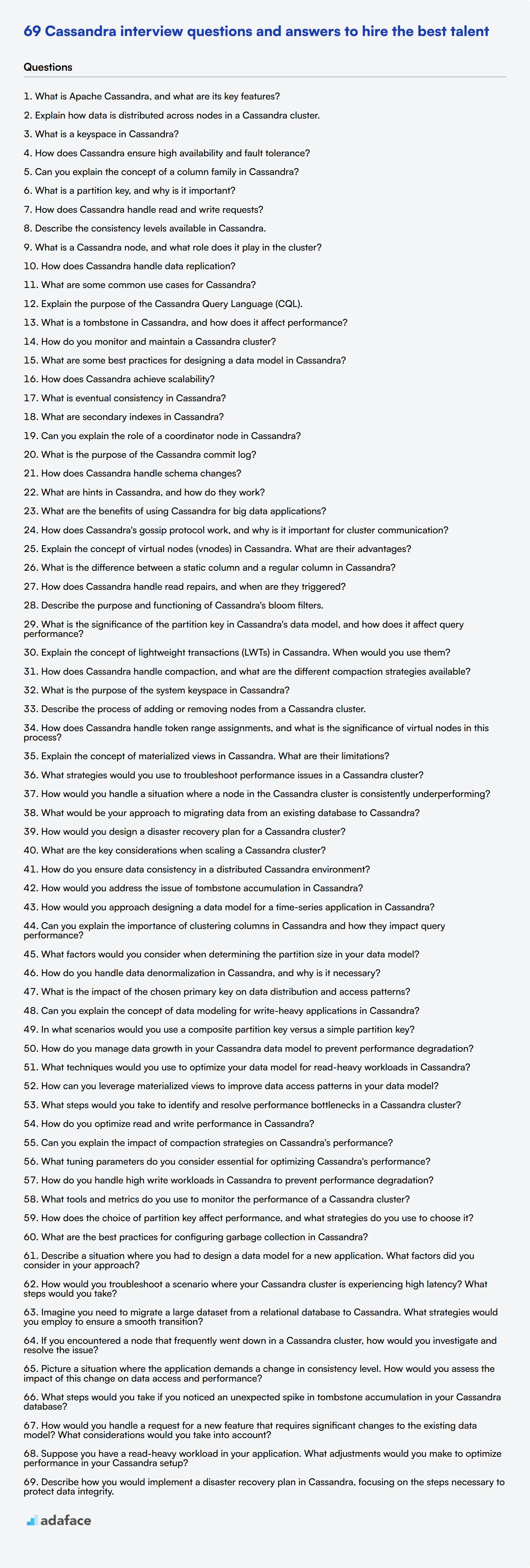

15 basic Cassandra interview questions to assess candidates

To determine if your candidates have a solid understanding of basic Cassandra concepts, consider using this list of 15 essential interview questions. These questions will help you assess their technical skills and determine if they are the right fit for your team.

- What is Apache Cassandra, and what are its key features?

- Explain how data is distributed across nodes in a Cassandra cluster.

- What is a keyspace in Cassandra?

- How does Cassandra ensure high availability and fault tolerance?

- Can you explain the concept of a column family in Cassandra?

- What is a partition key, and why is it important?

- How does Cassandra handle read and write requests?

- Describe the consistency levels available in Cassandra.

- What is a Cassandra node, and what role does it play in the cluster?

- How does Cassandra handle data replication?

- What are some common use cases for Cassandra?

- Explain the purpose of the Cassandra Query Language (CQL).

- What is a tombstone in Cassandra, and how does it affect performance?

- How do you monitor and maintain a Cassandra cluster?

- What are some best practices for designing a data model in Cassandra?

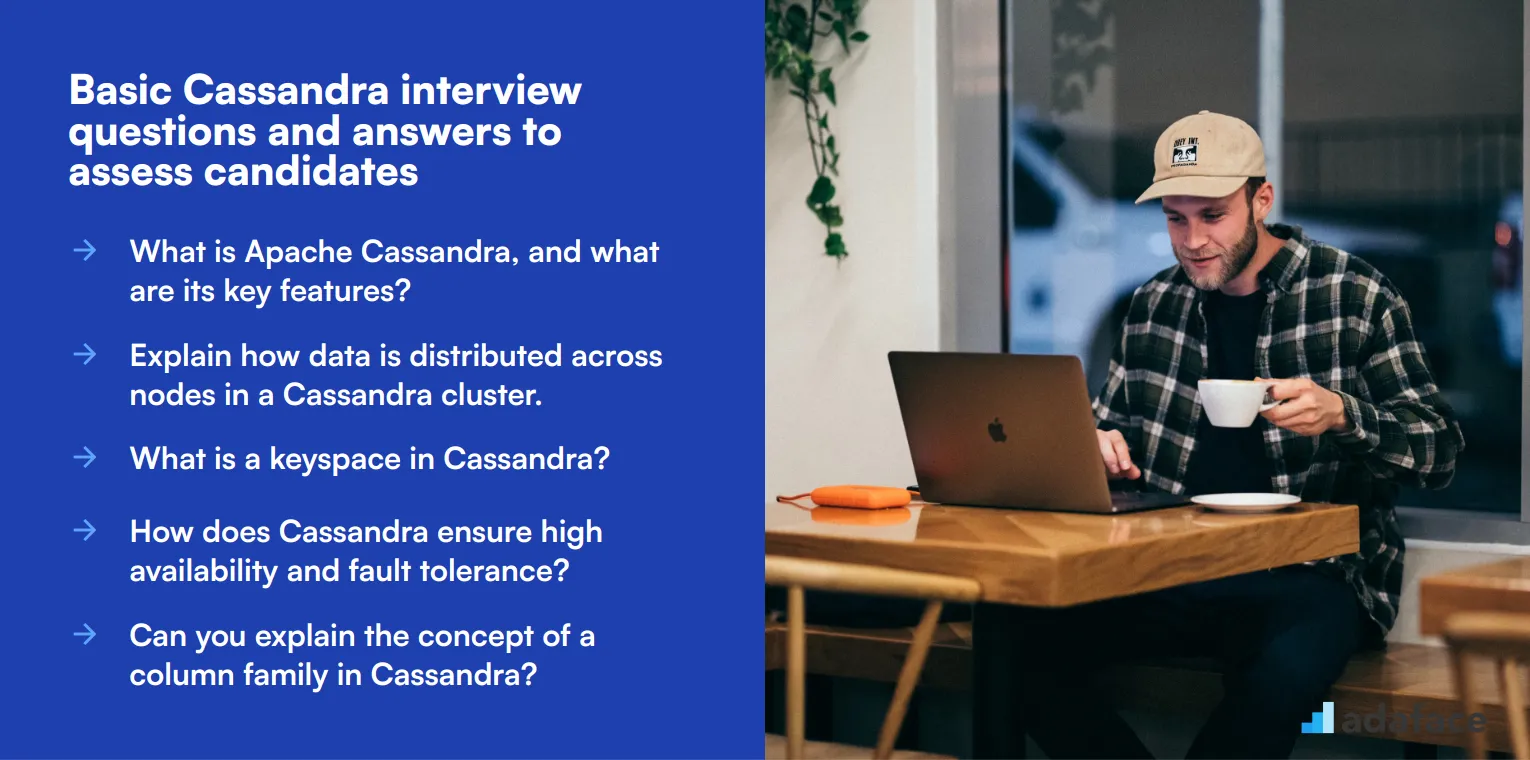

8 Cassandra interview questions and answers to evaluate junior developers

To evaluate whether junior developers have a solid grasp of Apache Cassandra, consider using some of these insightful interview questions. They are designed to test candidates on their understanding and practical knowledge, ensuring you find the right fit for your team.

1. How does Cassandra achieve scalability?

Cassandra achieves scalability through a masterless, peer-to-peer architecture in which every node plays the same role, so there is no single point of failure. Data is spread across nodes by partition key, so adding nodes adds capacity roughly in proportion. Read and write throughput tends to grow close to linearly as the cluster grows, provided the data model distributes load evenly.

Look for candidates who can explain the concept of adding nodes to a cluster and how data is distributed evenly across all nodes. They should mention that this setup allows for easy scaling without extensive reconfiguration or performance loss.
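The following toy consistent-hashing ring in Python shows how data can be spread evenly. It is a simplified model, not Cassandra's implementation. It uses MD5 from the standard library as a stand-in for Cassandra's default Murmur3 partitioner, and it gives each node a hypothetical number of virtual-node tokens. Replicas are placed by walking clockwise from the key's token, in the spirit of SimpleStrategy.

```python
import bisect
import hashlib


def token(value: str) -> int:
    """Map a string to a ring position (MD5 here is a stand-in for Murmur3)."""
    return int.from_bytes(hashlib.md5(value.encode()).digest()[:8], "big")


class TokenRing:
    def __init__(self, nodes: list[str], vnodes_per_node: int = 8):
        # Each node owns several tokens (virtual nodes) scattered around the ring.
        self.ring = sorted(
            (token(f"{node}#{i}"), node)
            for node in nodes
            for i in range(vnodes_per_node)
        )
        self.tokens = [t for t, _ in self.ring]

    def replicas(self, partition_key: str, rf: int) -> list[str]:
        """Walk clockwise from the key's token and collect rf distinct nodes."""
        start = bisect.bisect_left(self.tokens, token(partition_key))
        chosen: list[str] = []
        for step in range(len(self.ring)):
            _, node = self.ring[(start + step) % len(self.ring)]  # wrap around
            if node not in chosen:
                chosen.append(node)
            if len(chosen) == rf:
                break
        return chosen


ring = TokenRing(["node-a", "node-b", "node-c", "node-d"])
print(ring.replicas("user:42", rf=3))  # three distinct nodes
```

When each node has more tokens, ownership tends to even out across nodes. That is the motivation behind virtual nodes.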

2. What is eventual consistency in Cassandra?

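Eventual consistency means that an update may not be visible on every replica immediately. If no new updates are made, however, all replicas will eventually converge on the same value. Cassandra converges through hinted handoff, read repair, and anti-entropy repair. Its tunable consistency levels let you choose, per request, how many replicas must respond, so you can make a read reflect the latest acknowledged write when you need that guarantee.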

Ideal candidates should mention that Cassandra allows for adjusting the consistency level based on requirements, balancing between consistency, availability, and performance. They should understand the implications of different consistency levels on read and write operations.
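The usual rule of thumb behind tunable consistency can be checked with simple arithmetic. Let W be the number of replicas that acknowledge a write, R the number consulted on a read, and RF the replication factor. If R + W > RF, every read set overlaps every write set. This sketch covers only the single-datacenter levels ONE, TWO, THREE, QUORUM, and ALL, and the helper names are illustrative.

```python
def replicas_required(level: str, rf: int) -> int:
    """Replica responses a single-datacenter consistency level waits for."""
    fixed = {"ONE": 1, "TWO": 2, "THREE": 3}
    if level in fixed:
        return fixed[level]
    if level == "QUORUM":
        return rf // 2 + 1  # a majority of replicas
    if level == "ALL":
        return rf
    raise ValueError(f"unsupported level in this sketch: {level}")


def reads_see_latest_write(read_level: str, write_level: str, rf: int) -> bool:
    """True when every read replica set must overlap every write replica set."""
    r = replicas_required(read_level, rf)
    w = replicas_required(write_level, rf)
    return r + w > rf


for read, write in [("ONE", "ONE"), ("QUORUM", "QUORUM"), ("ONE", "ALL")]:
    print(read, write, reads_see_latest_write(read, write, rf=3))
```

With RF = 3, ONE/ONE gives no overlap guarantee. QUORUM/QUORUM and ONE/ALL do, but they trade off latency and availability in different ways.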

3. What are secondary indexes in Cassandra?

Secondary indexes in Cassandra let you query data by non-primary-key columns. Unlike a typical SQL index, each node indexes only the data it stores locally. As a result, a query that does not restrict the partition key may have to contact many nodes, so secondary indexes should be used judiciously.

Candidates should acknowledge the limitations of secondary indexes, such as the extra work they add to writes and their poor efficiency on high-cardinality columns. Look for an understanding of when and how to use secondary indexes effectively.

4. Can you explain the role of a coordinator node in Cassandra?

In Cassandra, the coordinator node is the node that receives the client's read or write request. It acts as a proxy and is responsible for routing the request to the correct node(s) that hold the required data.

Candidates should describe the coordinator’s role in query processing, including how it waits for the number of replica responses the consistency level requires before answering the client. They should also note that the role is assigned per request: any node can act as a coordinator.

5. What is the purpose of the Cassandra commit log?

The commit log in Cassandra is a crash-recovery mechanism used to ensure data durability. Every write operation is first written to the commit log before being applied to the memtable. This ensures that data is not lost in case of a sudden crash.

Ideal answers should mention that the commit log provides durability and recovery capabilities, allowing Cassandra to restore data after a failure. Look for an understanding of how it works in conjunction with the memtable and SSTables.
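The write path can be modelled in a few lines. This is a toy, in-process sketch, not Cassandra's storage engine, and the class and method names are hypothetical. The "commit log" is a Python list standing in for an append-only file, and SSTables are plain dicts. The sketch shows why appending to the log first lets a restarted node rebuild the memtable contents it lost. In a real node, how much survives a crash also depends on how often the log is synced to disk (the commitlog_sync setting).

```python
from typing import Optional


class ToyNode:
    def __init__(self, memtable_limit: int = 3):
        self.commit_log: list[tuple[str, str]] = []  # stand-in for the on-disk log
        self.memtable: dict[str, str] = {}           # in memory, lost on a crash
        self.sstables: list[dict[str, str]] = []     # immutable flushed "files"
        self.memtable_limit = memtable_limit

    def write(self, key: str, value: str) -> None:
        self.commit_log.append((key, value))  # 1. append to the log first
        self.memtable[key] = value            # 2. then update the memtable
        if len(self.memtable) >= self.memtable_limit:
            self.flush()

    def flush(self) -> None:
        # Persist the memtable as an SSTable; in this single-table toy the
        # whole log is then obsolete and can be discarded.
        self.sstables.append(dict(self.memtable))
        self.memtable.clear()
        self.commit_log.clear()

    def crash_and_restart(self) -> None:
        self.memtable = {}                  # memory contents are lost...
        for key, value in self.commit_log:  # ...but replaying the log restores them
            self.memtable[key] = value

    def read(self, key: str) -> Optional[str]:
        if key in self.memtable:
            return self.memtable[key]
        for table in reversed(self.sstables):  # in this toy, later SSTables are newer
            if key in table:
                return table[key]
        return None


node = ToyNode()
node.write("a", "1")
node.write("b", "2")
node.crash_and_restart()
print(node.read("a"))  # prints 1, recovered from the commit log
```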

6. How does Cassandra handle schema changes?

Each node advertises its schema version through gossip. When a schema change is made, it is pushed to the other nodes, which apply it to their local schema. Schema agreement is reached when all live nodes report the same schema version, which is visible, for example, in the output of nodetool describecluster.

Candidates should explain the importance of schema agreement and how Cassandra ensures that all nodes eventually have a consistent view of the schema. They should also discuss any potential challenges or delays in schema propagation.

7. What are hints in Cassandra, and how do they work?

Hints in Cassandra help replicas catch up after a temporary outage. When a write targets a replica that is down, the coordinator stores a hint for it. When the replica comes back, the hint is replayed so the replica receives the missed write. Hints are only recorded for a limited window (three hours by default), so a node that stays down longer needs a repair to become consistent.

Look for candidates who can effectively explain how hinted handoff works and its role in maintaining data consistency during node outages. They should also mention any potential drawbacks, such as increased storage requirements and replay delays.
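This toy model of hinted handoff makes several simplifying assumptions: a single coordinator, hints held in memory, and time measured in seconds. The three-hour constant mirrors Cassandra's default hint window. Everything else, including the class and method names and the timing model, is hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional

HINT_WINDOW_SECONDS = 3 * 60 * 60  # mirrors the 3-hour default hint window


@dataclass
class Replica:
    name: str
    up: bool = True
    down_since: Optional[float] = None  # when the replica went down, if it is down
    data: dict = field(default_factory=dict)


@dataclass
class Coordinator:
    hints: dict = field(default_factory=dict)  # replica name -> [(key, value), ...]

    def write(self, replicas: list, key: str, value: str, now: float) -> None:
        for replica in replicas:
            if replica.up:
                replica.data[key] = value
            elif now - replica.down_since <= HINT_WINDOW_SECONDS:
                # Down, but still inside the window: keep a hint for later.
                self.hints.setdefault(replica.name, []).append((key, value))
            # Otherwise no hint is stored; only a repair will deliver this write.

    def replica_recovered(self, replica: Replica) -> None:
        replica.up = True
        replica.down_since = None
        for key, value in self.hints.pop(replica.name, []):  # replay stored hints
            replica.data[key] = value


a = Replica("a")
b = Replica("b", up=False, down_since=0.0)
coordinator = Coordinator()
coordinator.write([a, b], "k1", "v1", now=60.0)           # 1 minute in: hint stored
coordinator.write([a, b], "k2", "v2", now=4 * 60 * 60.0)  # 4 hours in: no hint
coordinator.replica_recovered(b)
print(b.data)  # {'k1': 'v1'}
```

Replica `b` is still missing `k2` after recovery. That gap is why hints complement regular repairs rather than replace them.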

8. What are the benefits of using Cassandra for big data applications?

Cassandra offers several benefits for big data applications, including high availability, fault tolerance, and scalability. Its distributed architecture has no single point of failure, which makes it well suited to storing large volumes of data across multiple data centers.

Candidates should highlight how Cassandra’s write-optimized storage engine (commit log, memtables, and SSTables) sustains high write throughput, which suits big data workloads. They should also touch on its wide-column data model and its ability to serve low-latency reads and writes for real-time applications.

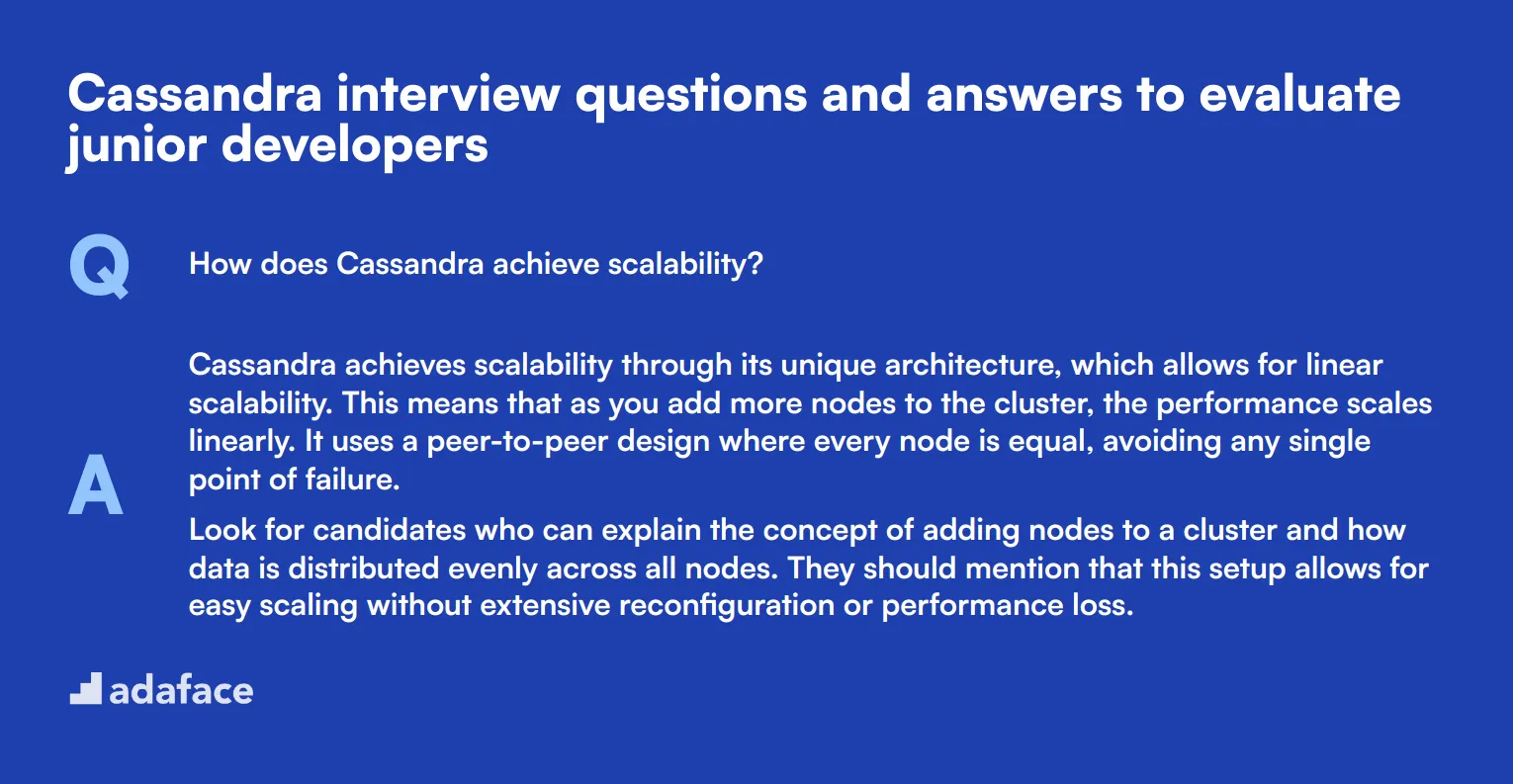

12 intermediate Cassandra interview questions to ask mid-tier developers

To assess the intermediate-level skills of Cassandra developers, use these 12 targeted interview questions. These questions are designed to evaluate a candidate's deeper understanding of Cassandra's architecture, data modeling, and performance optimization techniques.

- How does Cassandra's gossip protocol work, and why is it important for cluster communication?

- Explain the concept of virtual nodes (vnodes) in Cassandra. What are their advantages?

- What is the difference between a static column and a regular column in Cassandra?

- How does Cassandra handle read repairs, and when are they triggered?

- Describe the purpose and functioning of Cassandra's bloom filters.

- What is the significance of the partition key in Cassandra's data model, and how does it affect query performance?

- Explain the concept of lightweight transactions (LWTs) in Cassandra. When would you use them?

- How does Cassandra handle compaction, and what are the different compaction strategies available?

- What is the purpose of the system keyspace in Cassandra?

- Describe the process of adding or removing nodes from a Cassandra cluster.

- How does Cassandra handle token range assignments, and what is the significance of virtual nodes in this process?

- Explain the concept of materialized views in Cassandra. What are their limitations?

7 advanced Cassandra interview questions and answers to evaluate senior developers

To truly vet senior developers, you need questions that dig deeper into their understanding and real-world application of Cassandra. Use this list of advanced Cassandra interview questions to identify candidates who possess both the experience and the strategic thinking needed for complex data management tasks.

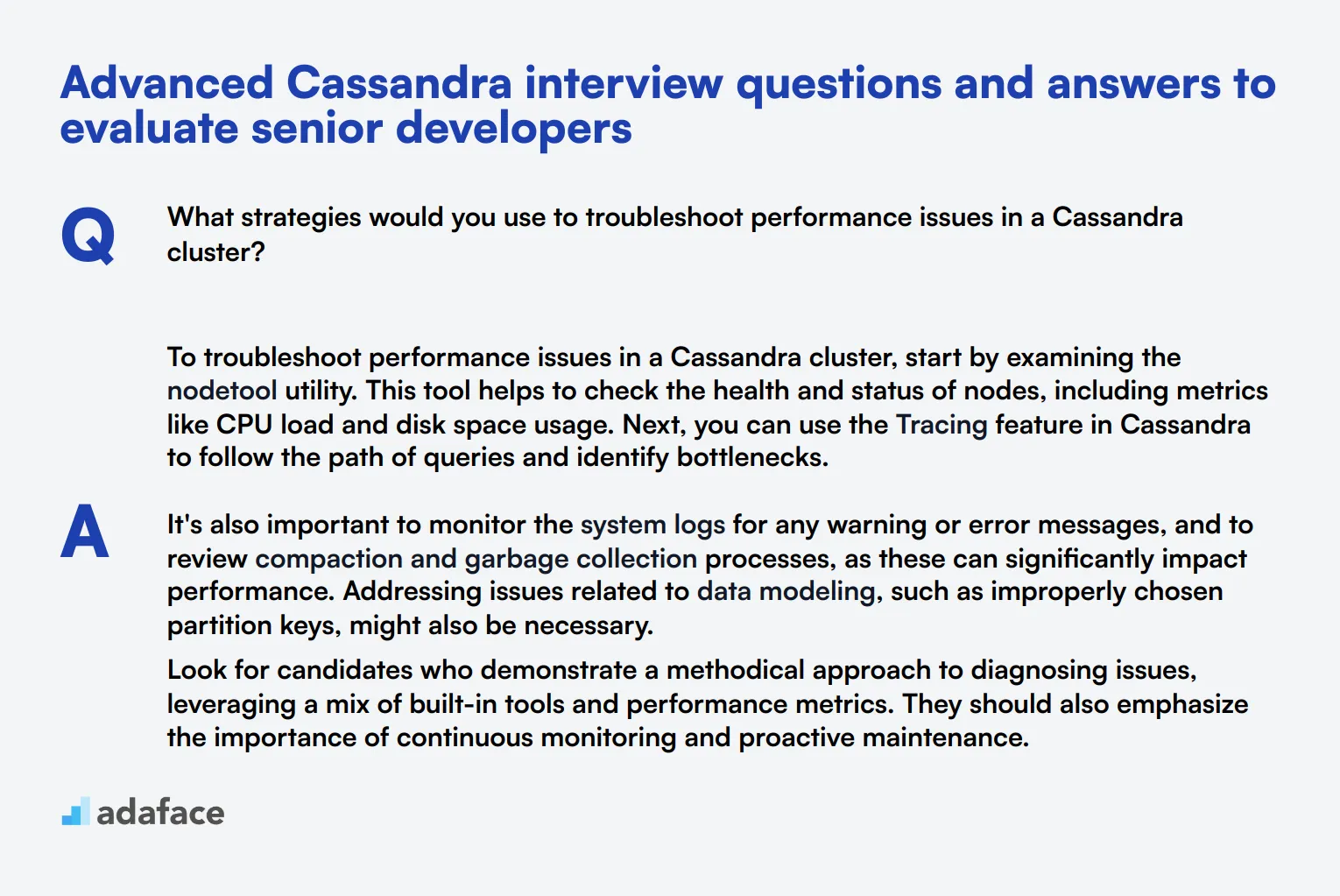

1. What strategies would you use to troubleshoot performance issues in a Cassandra cluster?

To troubleshoot performance issues in a Cassandra cluster, start with the nodetool utility. Commands such as nodetool status, tpstats, tablestats, and tablehistograms show node state and data load, thread-pool backlogs and dropped messages, and per-table latency and partition-size statistics. Next, use query tracing (for example, TRACING ON in cqlsh) to follow the path of individual queries and identify bottlenecks.

It's also important to monitor the system logs for warning or error messages. Review compaction activity and JVM garbage collection as well, since both can significantly affect performance. Fixing data modeling issues may also be necessary, such as poorly chosen partition keys that create large or hot partitions.

Look for candidates who demonstrate a methodical approach to diagnosing issues, leveraging a mix of built-in tools and performance metrics. They should also emphasize the importance of continuous monitoring and proactive maintenance.

2. How would you handle a situation where a node in the Cassandra cluster is consistently underperforming?

When a node in a Cassandra cluster is underperforming, the first step is to identify if the issue is related to hardware limitations or software misconfigurations. Checking for resource constraints such as CPU, memory, and disk I/O can help pinpoint the cause.

Once hardware issues are ruled out, review the node's configuration. You may need to adjust JVM options (such as heap size and garbage collector settings) and settings in cassandra.yaml. Additionally, check that data and token ownership are balanced across nodes to prevent hotspots.

An ideal candidate will showcase a thorough understanding of both hardware and software factors that can affect node performance. They should also highlight their experience with tuning configurations and rebalancing data to maintain cluster health.

3. What would be your approach to migrating data from an existing database to Cassandra?

Migrating data to Cassandra involves several key steps. Start by designing a data model that takes full advantage of Cassandra's distributed nature. This might require denormalizing data for optimal query performance.

Next, create a plan for extracting data from the source database and transforming it to match the Cassandra schema. Tools like Apache NiFi or custom ETL scripts can facilitate this process. Finally, load the data into Cassandra, planning the cutover to minimize downtime and verifying that the loaded data matches the source.

Candidates should exhibit a clear methodology for data migration, emphasizing both planning and execution phases. Look for familiarity with data migration tools and a strong grasp of data modeling principles tailored to Cassandra's strengths.
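Here is a hypothetical illustration of the transformation step. Normalized relational rows (users and orders joined by user_id) are reshaped into one denormalized row set per query, for example "orders by user" and "orders by date". The table names, fields, and in-memory lists are assumptions standing in for an extract from the source database. A real pipeline would then write the output with a loading tool or ETL job.

```python
from collections import defaultdict

# Hypothetical extract from a relational source.
users = [{"user_id": 1, "name": "Ada"}, {"user_id": 2, "name": "Lin"}]
orders = [
    {"order_id": 10, "user_id": 1, "order_date": "2024-05-01", "total": 30.0},
    {"order_id": 11, "user_id": 2, "order_date": "2024-05-01", "total": 12.5},
    {"order_id": 12, "user_id": 1, "order_date": "2024-05-02", "total": 8.0},
]


def denormalize(users, orders):
    """Build one row set per query table, copying user fields into each order row."""
    names = {u["user_id"]: u["name"] for u in users}  # replaces the SQL join
    orders_by_user = defaultdict(list)  # would be partitioned by user_id
    orders_by_date = defaultdict(list)  # would be partitioned by order_date
    for o in sorted(orders, key=lambda o: o["order_id"]):
        row = {**o, "user_name": names[o["user_id"]]}  # duplicated on purpose
        orders_by_user[o["user_id"]].append(row)
        orders_by_date[o["order_date"]].append(row)
    return dict(orders_by_user), dict(orders_by_date)


by_user, by_date = denormalize(users, orders)
print([r["order_id"] for r in by_user[1]])              # [10, 12]
print([r["user_name"] for r in by_date["2024-05-01"]])  # ['Ada', 'Lin']
```

Each query reads a single partition without joins. The price is that the user's name is stored once per order in every table that needs it.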

4. How would you design a disaster recovery plan for a Cassandra cluster?

Designing a disaster recovery plan for Cassandra starts with setting up regular backups of the data. This can be done using nodetool snapshot or third-party tools that integrate with cloud storage solutions. Storing backups in a geographically separate location protects against the loss of an entire site.

Next, implement a replication strategy that aligns with your desired level of fault tolerance. Having a multi-datacenter setup can help maintain availability even in the event of a regional outage. Also, test your restore procedures regularly to ensure data integrity and recovery speed.

Strong candidates will emphasize the importance of regular testing and validation of the disaster recovery plan. They should also be able to discuss specific tools and strategies they have used in past experiences to safeguard data.
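Choosing replication factors per datacenter is partly arithmetic. This sketch uses hypothetical datacenter names and assumes per-datacenter replication factors, as with NetworkTopologyStrategy. It computes how many replicas of a partition each datacenter can lose while LOCAL_QUORUM requests there still succeed. It also checks whether the data survives the loss of a whole datacenter.

```python
def local_quorum(rf: int) -> int:
    """Replicas LOCAL_QUORUM waits for within one datacenter."""
    return rf // 2 + 1


def tolerated_replica_losses(rf_per_dc: dict[str, int]) -> dict[str, int]:
    """Replicas per partition each DC can lose while LOCAL_QUORUM still succeeds there."""
    return {dc: rf - local_quorum(rf) for dc, rf in rf_per_dc.items()}


def survives_dc_loss(rf_per_dc: dict[str, int], lost_dc: str) -> bool:
    """True if some other DC still holds a replica of every partition."""
    return any(rf > 0 for dc, rf in rf_per_dc.items() if dc != lost_dc)


rf = {"dc-east": 3, "dc-west": 3}  # hypothetical datacenter names
print(tolerated_replica_losses(rf))    # {'dc-east': 1, 'dc-west': 1}
print(survives_dc_loss(rf, "dc-east"))  # True
```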

5. What are the key considerations when scaling a Cassandra cluster?

When scaling a Cassandra cluster, start by ensuring that the data model can handle increased data volumes and query loads. Properly chosen partition keys and clustering columns are crucial for maintaining performance.

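Next, add new nodes to the cluster one at a time. A joining node takes ownership of its token ranges and streams the corresponding data from existing replicas; Cassandra does not rebalance data in the background afterwards. Once the new nodes have joined, run nodetool cleanup on the existing nodes to remove data they no longer own. Monitor the cluster closely during this process to identify any issues with data distribution or node performance.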

Look for candidates who understand the importance of both data modeling and node management in scaling scenarios. They should also demonstrate experience with monitoring and adjusting the cluster during scaling operations to ensure a smooth transition.
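You can see why adding a node does not reshuffle the whole dataset by counting which keys change owner. The toy ring below uses MD5 as a stand-in for Murmur3 and a hypothetical 16 tokens per node, and it models primary ownership only, ignoring replication. It adds a fifth node to four existing ones and measures the fraction of keys that move.

```python
import bisect
import hashlib


def token(value: str) -> int:
    """Ring position for a string (MD5 as a stand-in for Murmur3)."""
    return int.from_bytes(hashlib.md5(value.encode()).digest()[:8], "big")


def build_ring(nodes, vnodes=16):
    return sorted((token(f"{n}#{i}"), n) for n in nodes for i in range(vnodes))


def owner(ring, key):
    """Primary owner: the first token at or after the key's token, wrapping around."""
    tokens = [t for t, _ in ring]
    idx = bisect.bisect_left(tokens, token(key)) % len(ring)
    return ring[idx][1]


keys = [f"key-{i}" for i in range(10_000)]
before = build_ring(["n1", "n2", "n3", "n4"])
after = build_ring(["n1", "n2", "n3", "n4", "n5"])

moved = [k for k in keys if owner(before, k) != owner(after, k)]
print(f"{len(moved) / len(keys):.1%} of keys moved")  # roughly a fifth
print({owner(after, k) for k in moved})  # {'n5'}: only the new node gains keys
```

Because the new node only inserts tokens between existing ones, every key that moves goes to the new node. The existing nodes keep their other ranges, which is why old copies must be removed with a cleanup rather than by a rebalance.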

6. How do you ensure data consistency in a distributed Cassandra environment?

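Ensuring data consistency in Cassandra involves choosing the appropriate consistency level for read and write operations. Levels like QUORUM or ALL provide stronger guarantees at the cost of latency and availability. When the number of replicas written plus the number read exceeds the replication factor (for example, QUORUM writes and QUORUM reads), reads return the latest acknowledged write.

Additionally, running repairs regularly resolves inconsistencies across replicas. Tools like nodetool repair keep data synchronized across nodes. Repairs should complete on every node within gc_grace_seconds so that deleted data is not resurrected.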

Candidates should demonstrate a clear understanding of the trade-offs between consistency and performance. They should also emphasize the role of regular maintenance tasks, such as repairs, in maintaining data integrity in a distributed environment.
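When replicas disagree, Cassandra reconciles values by write timestamp: the version with the newest timestamp wins (last write wins). Read repair and anti-entropy repair then push that winner to stale replicas. This sketch models the rule for a single cell. The replica names and data layout are hypothetical, and real tie-breaking and tombstone handling are omitted.

```python
from typing import NamedTuple


class Cell(NamedTuple):
    value: str
    timestamp: int  # write timestamp in microseconds


def reconcile(responses: dict[str, Cell]) -> Cell:
    """Pick the newest version of a cell from the replicas' responses."""
    return max(responses.values(), key=lambda cell: cell.timestamp)


def repair(responses: dict[str, Cell]) -> dict[str, Cell]:
    """Map each stale replica to the cell it should receive."""
    winner = reconcile(responses)
    return {name: winner for name, cell in responses.items() if cell != winner}


responses = {
    "replica-1": Cell("blue", 1_700_000_000_000_002),
    "replica-2": Cell("green", 1_700_000_000_000_001),
    "replica-3": Cell("blue", 1_700_000_000_000_002),
}
print(reconcile(responses).value)  # blue
print(repair(responses))           # only replica-2 needs the update
```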

7. How would you address the issue of tombstone accumulation in Cassandra?

Tombstone accumulation can be addressed by optimizing compaction strategies. Using the right compaction strategy, such as Leveled Compaction or Time Window Compaction, helps to manage the number of tombstones effectively.

Additionally, running nodetool compact (a major compaction) can purge tombstones older than gc_grace_seconds. With SizeTieredCompactionStrategy, however, it produces one very large SSTable, so it should be used sparingly. Lowering gc_grace_seconds shortens how long tombstones are kept. This is only safe if repairs reliably complete within the shorter window; otherwise deleted data can reappear.

Strong candidates will be familiar with different compaction strategies and their impact on tombstone management. They should also understand the trade-offs involved in adjusting compaction settings and gc_grace_seconds.
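The gc_grace_seconds trade-off can be expressed as two simple checks; the function names are hypothetical. In this simplified model, compaction may drop a tombstone only once it is older than gc_grace_seconds, which defaults to 864000 seconds (10 days). The second check flags the risk of setting that value at or below the interval between full repairs. Real compaction applies further conditions, such as whether SSTables outside the compaction still hold data the tombstone shadows.

```python
DEFAULT_GC_GRACE_SECONDS = 864_000  # 10 days, Cassandra's default
DAY = 86_400


def tombstone_purgeable(deleted_at: int, now: int,
                        gc_grace_seconds: int = DEFAULT_GC_GRACE_SECONDS) -> bool:
    """Simplified rule: eligible for removal once gc_grace_seconds have passed."""
    return now - deleted_at > gc_grace_seconds


def resurrection_risk(gc_grace_seconds: int, repair_interval_seconds: int) -> bool:
    """Deleted data can reappear if replicas are not repaired within gc_grace_seconds."""
    return repair_interval_seconds >= gc_grace_seconds


print(tombstone_purgeable(deleted_at=0, now=5 * DAY))   # False
print(tombstone_purgeable(deleted_at=0, now=11 * DAY))  # True
print(resurrection_risk(gc_grace_seconds=2 * DAY,
                        repair_interval_seconds=7 * DAY))  # True
```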

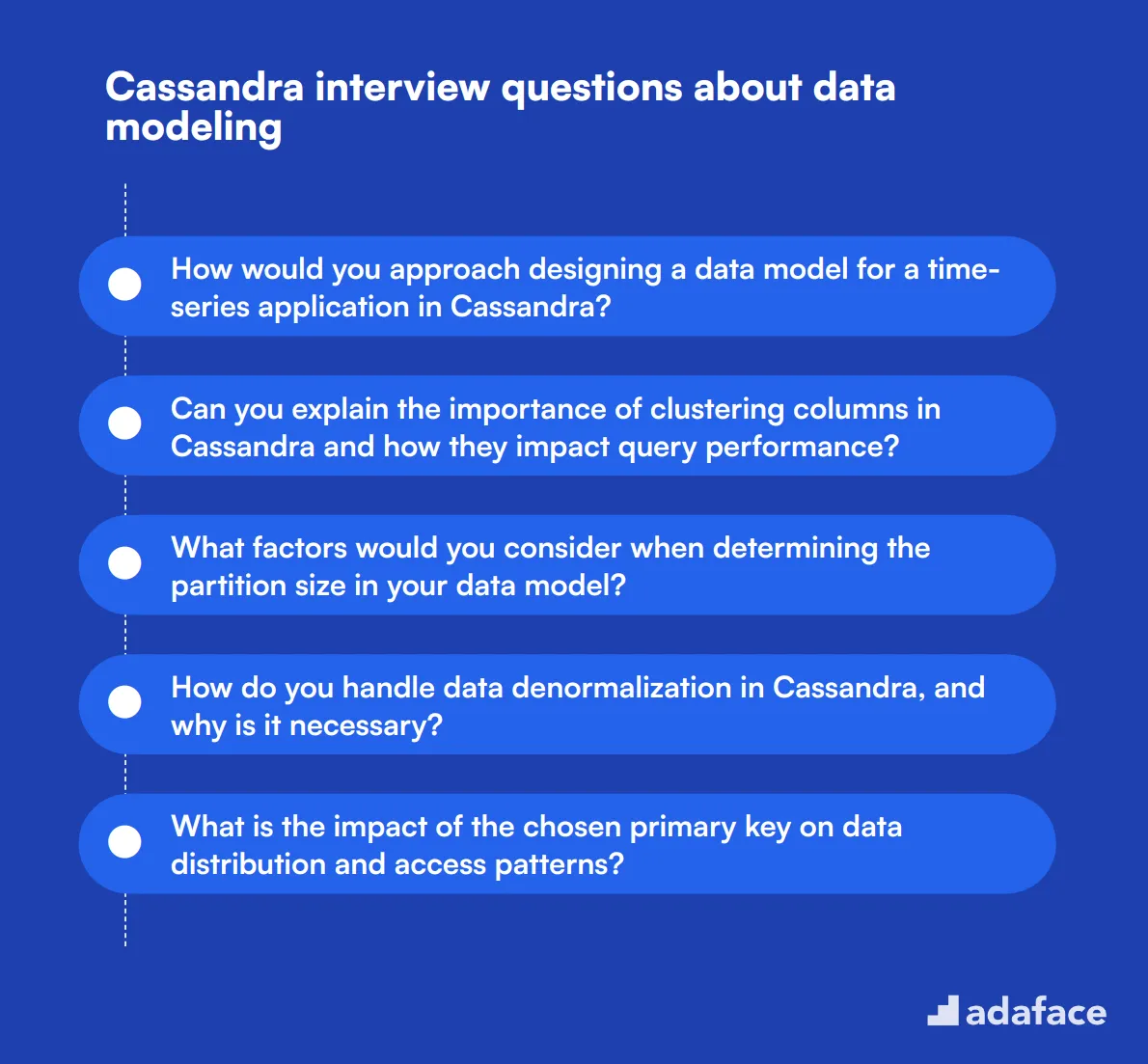

10 Cassandra interview questions about data modeling

To assess whether candidates have the necessary data modeling skills in Cassandra, consider asking some of these targeted interview questions. They will help you gauge a candidate's grasp of fundamental principles and practical applications, whether you are hiring for a developer, data scientist, or database administrator role.

- How would you approach designing a data model for a time-series application in Cassandra?

- Can you explain the importance of clustering columns in Cassandra and how they impact query performance?

- What factors would you consider when determining the partition size in your data model?

- How do you handle data denormalization in Cassandra, and why is it necessary?

- What is the impact of the chosen primary key on data distribution and access patterns?

- Can you explain the concept of data modeling for write-heavy applications in Cassandra?

- In what scenarios would you use a composite partition key versus a simple partition key?

- How do you manage data growth in your Cassandra data model to prevent performance degradation?

- What techniques would you use to optimize your data model for read-heavy workloads in Cassandra?

- How can you leverage materialized views to improve data access patterns in your data model?

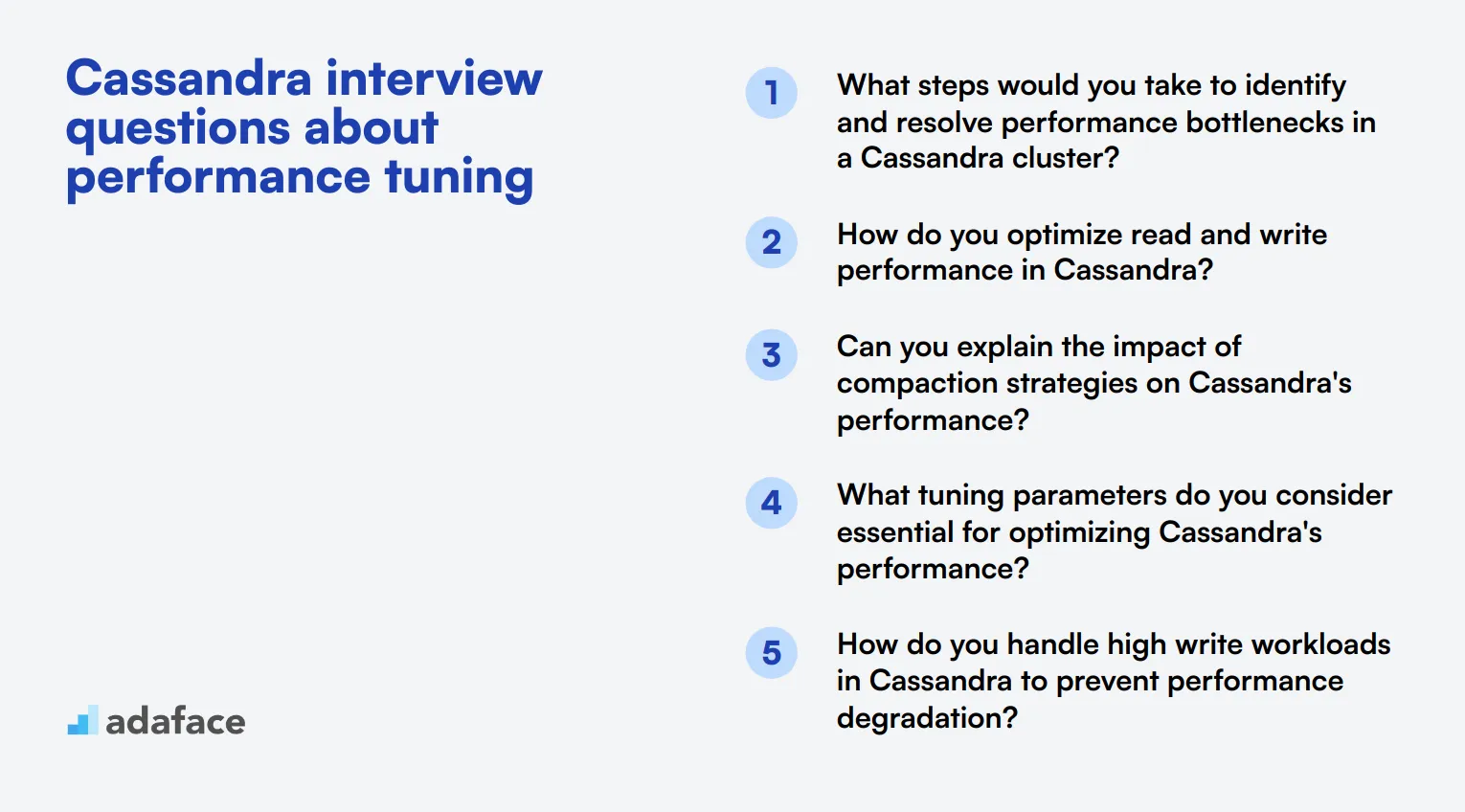

8 Cassandra interview questions about performance tuning

To determine whether your candidates have the right skills for optimizing performance in Cassandra, use these targeted interview questions. These questions will help you evaluate their expertise in performance tuning and ensure they can effectively manage and optimize your Cassandra clusters.

- What steps would you take to identify and resolve performance bottlenecks in a Cassandra cluster?

- How do you optimize read and write performance in Cassandra?

- Can you explain the impact of compaction strategies on Cassandra's performance?

- What tuning parameters do you consider essential for optimizing Cassandra's performance?

- How do you handle high write workloads in Cassandra to prevent performance degradation?

- What tools and metrics do you use to monitor the performance of a Cassandra cluster?

- How does the choice of partition key affect performance, and what strategies do you use to choose it?

- What are the best practices for configuring garbage collection in Cassandra?

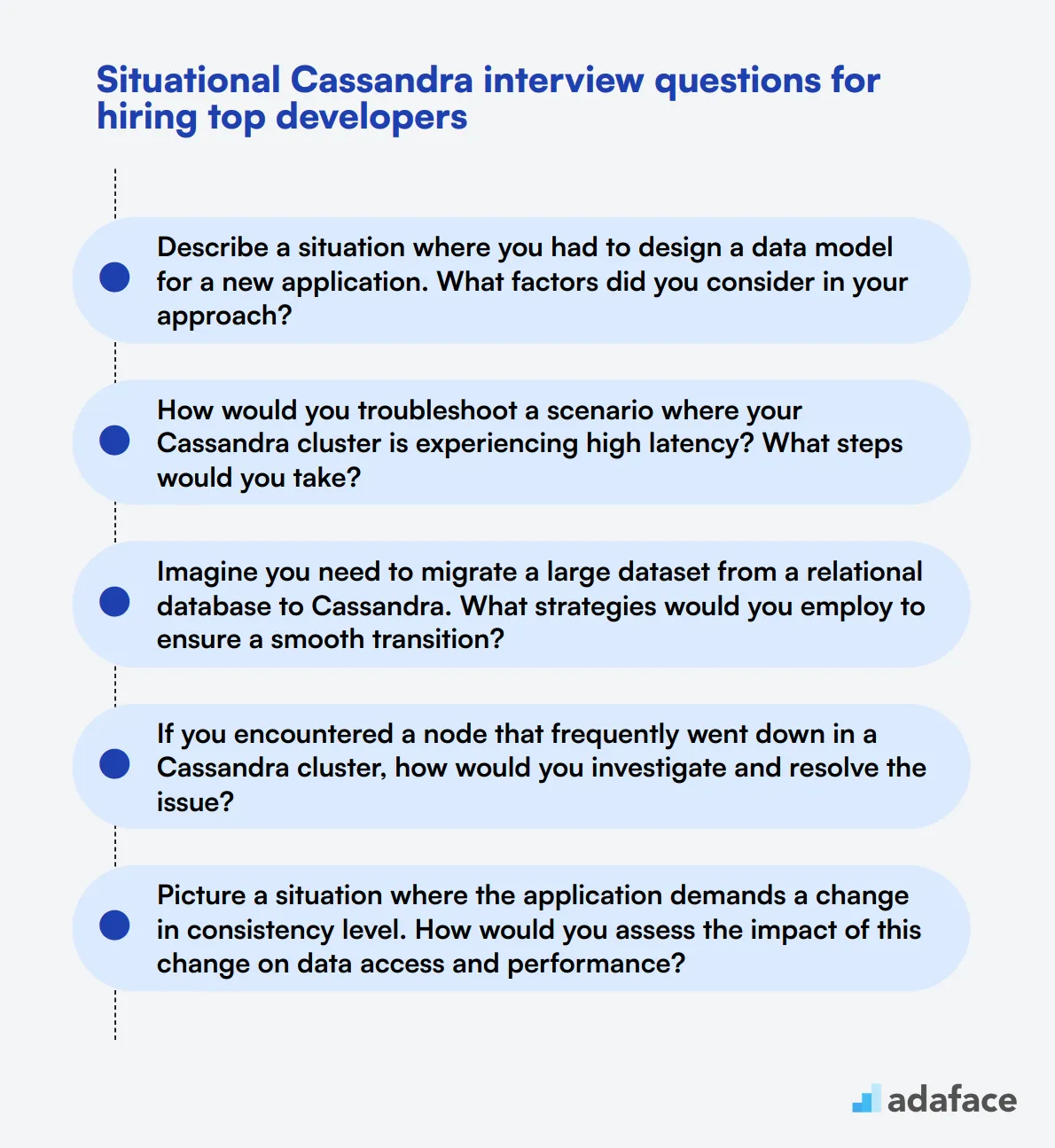

9 situational Cassandra interview questions for hiring top developers

To effectively evaluate if candidates have the right skills to tackle real-world scenarios in Cassandra, consider asking these situational questions during the interview. This approach will help you gauge their problem-solving abilities and practical knowledge in a more dynamic context, ultimately leading to better hiring decisions.

- Describe a situation where you had to design a data model for a new application. What factors did you consider in your approach?

- How would you troubleshoot a scenario where your Cassandra cluster is experiencing high latency? What steps would you take?

- Imagine you need to migrate a large dataset from a relational database to Cassandra. What strategies would you employ to ensure a smooth transition?

- If you encountered a node that frequently went down in a Cassandra cluster, how would you investigate and resolve the issue?

- Picture a situation where the application demands a change in consistency level. How would you assess the impact of this change on data access and performance?

- What steps would you take if you noticed an unexpected spike in tombstone accumulation in your Cassandra database?

- How would you handle a request for a new feature that requires significant changes to the existing data model? What considerations would you take into account?

- Suppose you have a read-heavy workload in your application. What adjustments would you make to optimize performance in your Cassandra setup?

- Describe how you would implement a disaster recovery plan in Cassandra, focusing on the steps necessary to protect data integrity.

Which Cassandra skills should you evaluate during the interview phase?

While a single interview cannot fully assess every aspect of a candidate's Cassandra expertise, focusing on key skills can provide valuable insights. The following core competencies are particularly important to evaluate during the Cassandra interview process.

Data Modeling

Data modeling is a fundamental skill for working with Cassandra. It involves designing efficient schemas that optimize query performance and data distribution across the cluster.

To assess this skill, consider using an assessment test that includes relevant multiple-choice questions (MCQs) on Cassandra data modeling concepts.

You can also ask targeted interview questions to gauge the candidate's understanding of data modeling in Cassandra. Here's an example:

Can you explain the differences between designing a schema for Cassandra versus a relational database? What considerations are specific to Cassandra?

Look for answers that demonstrate understanding of denormalization, query-driven design, and the importance of partition keys in Cassandra. The candidate should also mention how Cassandra's distributed nature influences schema design decisions.
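A time-series example shows query-driven design with a bounded partition. Suppose, hypothetically, that readings are queried per sensor per day. A composite partition key of (sensor_id, day) limits each partition to one day of data, and the reading time, as clustering column, orders rows within it. This Python sketch only computes those keys. The assumed table shape appears in a comment, and all names are illustrative.

```python
from datetime import datetime, timezone

# Assumed (illustrative) table shape:
#   PRIMARY KEY ((sensor_id, day), reading_time)
# The inner parentheses make (sensor_id, day) a composite partition key.


def primary_key(sensor_id: str, reading_time: datetime) -> tuple[tuple[str, str], datetime]:
    """Return (partition key, clustering key) for one reading."""
    day = reading_time.astimezone(timezone.utc).strftime("%Y-%m-%d")  # daily bucket
    return (sensor_id, day), reading_time


pk, ck = primary_key("sensor-7", datetime(2024, 5, 1, 23, 59, tzinfo=timezone.utc))
print(pk)  # ('sensor-7', '2024-05-01')
```

A reading one minute after midnight lands in a new partition. Partition size therefore depends on the bucket length rather than on how long the sensor has been running.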

Query Optimization

Query optimization is crucial for maintaining Cassandra's performance at scale. It involves understanding how to write efficient CQL queries and avoiding anti-patterns that can lead to poor performance.

An online test with practical CQL questions can help evaluate a candidate's query optimization skills.

To further assess this skill, consider asking a question like:

What strategies would you use to optimize a slow-performing Cassandra query?

Listen for responses that include techniques such as proper indexing, avoiding large partitions, using appropriate consistency levels, and leveraging Cassandra's strengths in data distribution. The candidate should also mention the importance of monitoring and profiling queries.
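You can often spot a partition that will grow too large with back-of-the-envelope arithmetic. This sketch estimates one partition's size from an expected row count and average row size, then compares it with a limit you choose. The 100 MB default below is a commonly cited guideline, not a hard Cassandra limit, and the workload numbers are made up.

```python
def partition_size_bytes(rows_per_partition: int, avg_row_bytes: int) -> int:
    """Rough estimate; ignores per-row and per-cell storage overhead."""
    return rows_per_partition * avg_row_bytes


def too_large(rows_per_partition: int, avg_row_bytes: int,
              limit_bytes: int = 100 * 1024 * 1024) -> bool:
    return partition_size_bytes(rows_per_partition, avg_row_bytes) > limit_bytes


# Hypothetical workload: one reading per second, about 200 bytes per row.
per_day = 24 * 60 * 60    # rows per partition if bucketed by (sensor, day)
per_year = per_day * 365  # rows per partition if keyed by sensor alone after a year
print(too_large(per_day, 200))   # False (about 17 MB)
print(too_large(per_year, 200))  # True (about 6.3 GB)
```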

Cluster Management

Effective cluster management is essential for maintaining a healthy Cassandra deployment. This includes understanding node operations, replication strategies, and consistency levels.

To evaluate a candidate's cluster management skills, you might ask:

How would you handle adding new nodes to an existing Cassandra cluster? What precautions would you take?

Look for answers that discuss proper planning, understanding of token ranges, the importance of running repairs, running nodetool cleanup on existing nodes once new nodes have joined, and strategies for minimizing impact on cluster performance during expansion. The candidate should also mention considerations for data consistency and rebalancing.

Enhance Your Team with Expert Cassandra Skills Using Adaface

If you're looking to hire someone with Cassandra skills, it's important to verify those skills accurately.

The best way to assess these skills is by using specialized skill tests. Consider utilizing the Apache Cassandra Online Test from Adaface to gauge the proficiency of your candidates effectively.

After running this test, you can efficiently shortlist the top applicants and invite them for interviews, ensuring you only spend time on the most promising candidates.

Ready to find the best talent? Begin by signing up on our dashboard or explore more about our testing options on our online assessment platform page.


Cassandra Interview Questions FAQs

Common Cassandra interview questions include topics on data modeling, performance tuning, and situational problem-solving.

They help to assess a candidate’s technical skills, problem-solving abilities, and experience with Cassandra.

Look for clarity, depth of knowledge, practical experience, and problem-solving capabilities.

Yes, questions can be categorized into basic, junior, intermediate, and advanced levels to match the candidate’s experience.

Situational questions assess how candidates apply their skills in real-world scenarios, reflecting their practical expertise.

Key topics include data modeling, performance tuning, basic and advanced concepts, and situational problem-solving.
