When evaluating candidates for AWS roles, especially those involving S3, it's important to gauge their knowledge to ensure they can manage cloud storage. Many roles like AWS Cloud Engineers require a firm understanding of S3 to store and retrieve data effectively.

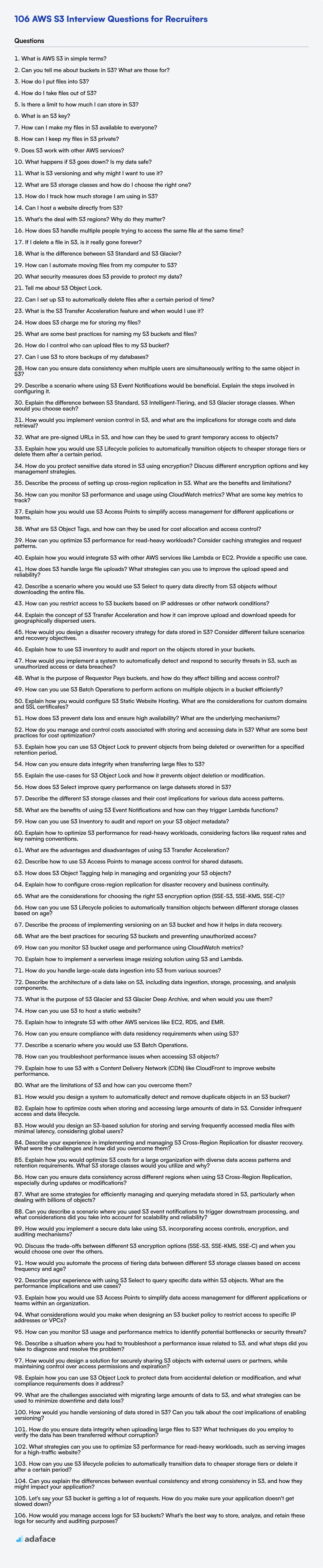

This blog post provides a curated list of AWS S3 interview questions, covering basic to advanced topics and MCQs, designed to help you assess candidates' expertise. These questions are categorized by difficulty level, including questions for experienced professionals.

By using these questions, you can streamline your interview process and make informed hiring decisions. You can further enhance candidate screening by using our AWS Online Test before interviews.


Basic AWS S3 interview questions

1. What is AWS S3 in simple terms?

AWS S3 (Simple Storage Service) is like a giant online hard drive. It's a service offered by Amazon Web Services that lets you store and retrieve any amount of data, at any time, from anywhere on the web.

Think of it as cloud-based object storage. Instead of storing files on your computer's hard drive, you store them in S3 'buckets'. You can then access these files programmatically, via a web interface, or through the AWS CLI. S3 is highly scalable, durable, and secure, making it a popular choice for storing backups, media files, application data, and more.

2. Can you tell me about buckets in S3? What are those for?

In Amazon S3, buckets are fundamental containers for storing objects (files). Think of them as the top-level directory in your S3 storage. Every object resides in a bucket.

Buckets serve several key purposes:

- Organization: They provide a way to organize your data logically.

- Access Control: Buckets are the primary unit for setting access permissions. You can control who can read, write, and manage the objects within a bucket.

- Storage Management: Buckets have configurations that you can adjust, such as versioning or Object Lock.

- Regionality: Buckets are created in a specific AWS region, which affects latency and compliance.

- Namespace: Every bucket name must be unique across all AWS accounts and regions within an AWS partition (for most users, the standard commercial regions).

3. How do I put files into S3?

You can put files into S3 using several methods:

- AWS Management Console: Upload files directly through the S3 web interface.

- AWS CLI: Use the `aws s3 cp` command (copy) or the `aws s3 sync` command (synchronize a local directory with an S3 bucket). For example:

```bash
aws s3 cp my_file.txt s3://my-bucket/path/to/file.txt
```

- AWS SDKs: Use programming languages like Python (with boto3), Java, or Go to interact with S3 programmatically. For example, in Python:

```python
import boto3

s3 = boto3.client('s3')
# upload_file(local_path, bucket, key); boto3 switches to multipart upload for large files
s3.upload_file('my_file.txt', 'my-bucket', 'path/to/file.txt')
```

- S3 API: Make direct HTTP requests to the S3 API (less common for simple uploads).

- Third-party tools: Tools like Cyberduck, FileZilla Pro, or specialized backup software can also upload files to S3.

4. How do I take files out of S3?

There are several ways to take files out of S3. The most common methods include using the AWS CLI, the AWS SDKs in various programming languages (like Python's boto3), or the S3 console.

Using the AWS CLI, you'd typically use the `aws s3 cp` command to copy files from S3 to your local machine or another location. For example, `aws s3 cp s3://your-bucket-name/path/to/your/file.txt /local/path/file.txt` downloads a file. Similarly, you can use `aws s3 sync` with the bucket as the source to download an entire folder or bucket. With SDKs, you'd use the `get_object` or `download_file` methods provided by the S3 client object. The S3 console offers a download button to retrieve files manually.
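As a minimal sketch of the SDK route, assuming boto3 is installed and credentials are configured, the helper below splits an `s3://bucket/key` URI (the same form the CLI command above uses) into bucket and key, then downloads the object with boto3's `download_file`. The function names `parse_s3_uri` and `download` are hypothetical.

```python
def parse_s3_uri(uri: str) -> tuple[str, str]:
    """Split 's3://bucket/path/to/key' into ('bucket', 'path/to/key')."""
    scheme = "s3://"
    if not uri.startswith(scheme):
        raise ValueError(f"not an s3:// URI: {uri!r}")
    # Everything before the first '/' is the bucket; the rest is the key
    bucket, _, key = uri[len(scheme):].partition("/")
    if not bucket:
        raise ValueError(f"missing bucket name in {uri!r}")
    return bucket, key


def download(uri: str, local_path: str) -> None:
    """Download one object to local_path (requires boto3 and AWS credentials)."""
    import boto3  # lazy import so parse_s3_uri works without boto3 installed

    bucket, key = parse_s3_uri(uri)
    boto3.client("s3").download_file(bucket, key, local_path)
```

Usage would look like `download("s3://your-bucket-name/path/to/your/file.txt", "/local/path/file.txt")`. Splitting on the first `/` rather than using a URL parser keeps characters such as `?` and `#`, which are legal in keys, intact.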

5. Is there a limit to how much I can store in S3?

In practice, S3 offers virtually unlimited storage. Individual objects are limited to 5 TB, but there is no limit on the total amount of data you can store in a bucket.

Keep in mind, however, that there is a quota on the number of buckets you can create per AWS account (historically 100 by default; AWS has since raised the default, and the quota can be increased on request). Request rates also scale per prefix: S3 supports at least 3,500 PUT/COPY/POST/DELETE and 5,500 GET/HEAD requests per second per prefix, so very high-throughput workloads should spread objects across multiple prefixes. Consult AWS documentation for performance best practices.

6. What is an S3 key?

An S3 key is the unique identifier for an object stored in Amazon S3 (Simple Storage Service). It's essentially the object's name within its bucket. Think of a bucket as a directory, and the key as the filename, including any directory path within that bucket.

Keys are structured and can include a prefix, mimicking a file system directory structure. For example, a key could be images/cats/fluffy.jpg, where images/cats/ is the prefix and fluffy.jpg is the object name. Keys are UTF-8 encoded strings, allowing for a wide range of characters, and they are fundamental for retrieving, updating, and deleting objects in S3.
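To make the prefix/name split concrete, here is a small sketch that separates a key at its last `/`, mirroring the `images/cats/fluffy.jpg` example, and checks the key against S3's 1,024-byte UTF-8 key length limit. The helper names are hypothetical; S3 itself has no real folders, so the split is purely a naming convention.

```python
MAX_KEY_BYTES = 1024  # S3 object keys are limited to 1,024 bytes of UTF-8


def split_key(key: str) -> tuple[str, str]:
    """Return (prefix, name); the prefix keeps its trailing '/'."""
    head, sep, name = key.rpartition("/")
    return head + sep, name


def is_valid_key_length(key: str) -> bool:
    # The limit applies to encoded bytes, not characters
    return 0 < len(key.encode("utf-8")) <= MAX_KEY_BYTES
```

When listing a bucket, passing `Delimiter='/'` to `ListObjectsV2` groups keys by these prefixes and returns them as `CommonPrefixes`, which is how the console presents "folders".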

7. How can I make my files in S3 available to everyone?

You can make your S3 files available to everyone using a few different methods:

- Making Objects Public: You can modify an object's Access Control List (ACL) to grant `public-read` permission, which makes the object publicly accessible via its S3 URL. This only works if ACLs are enabled on the bucket (new buckets have ACLs disabled by default) and Block Public Access is turned off, so weigh the security implications first.

- Using a Bucket Policy: A bucket policy lets you grant `s3:GetObject` permission to everyone (`"Principal": "*"`) for all objects in the bucket, or for a specific prefix. This is generally preferred over ACLs because a single policy is easier to manage and audit across many objects. For example:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "PublicReadGetObject",
      "Effect": "Allow",
      "Principal": "*",
      "Action": "s3:GetObject",
      "Resource": "arn:aws:s3:::your-bucket-name/*"
    }
  ]
}
```

8. How can I keep my files in S3 private?

To keep your files in S3 private, use these methods:

- IAM Roles and Policies: Grant access to S3 buckets and objects only to specific IAM roles/users. Define policies that explicitly allow or deny access based on the principle of least privilege.

- Bucket Policies: Define bucket-level policies that control access to all objects within the bucket. These policies can restrict access based on IP address, AWS account, or other conditions.

- Object ACLs (Access Control Lists): Control access to individual objects. While less scalable than bucket policies, ACLs can be useful for fine-grained access control.

- Encryption: Encrypt objects at rest using server-side encryption (SSE-S3, SSE-KMS, SSE-C) or client-side encryption. This protects data even if unauthorized access occurs.

- Block Public Access: Enable Block Public Access settings at the bucket or account level to prevent accidental public access. This overrides ACLs and bucket policies that grant public access.

- Signed URLs: Generate temporary URLs with limited validity periods to grant access to specific objects without requiring AWS credentials. This is useful for controlled sharing.
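Of the controls above, Block Public Access is the simplest safety net to turn on. A sketch that enables all four of its settings on one bucket, assuming boto3's `put_public_access_block`; the helper names are hypothetical.

```python
def block_public_access_config() -> dict:
    """All four Block Public Access settings switched on."""
    return {
        "BlockPublicAcls": True,        # reject requests that add public ACLs
        "IgnorePublicAcls": True,       # ignore public ACLs that already exist
        "BlockPublicPolicy": True,      # reject bucket policies that grant public access
        "RestrictPublicBuckets": True,  # limit access to buckets that have public policies
    }


def lock_down_bucket(bucket: str) -> None:
    """Apply the configuration to one bucket (requires boto3 and credentials)."""
    import boto3

    boto3.client("s3").put_public_access_block(
        Bucket=bucket,
        PublicAccessBlockConfiguration=block_public_access_config(),
    )
```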

9. Does S3 work with other AWS services?

Yes, S3 integrates extensively with many other AWS services. It often serves as a central storage component in AWS architectures.

Examples include:

- EC2: Storing application data, hosting static websites.

- Lambda: Storing code packages, processing data stored in S3.

- CloudFront: Caching S3 content for faster delivery.

- Athena/Redshift: Querying data directly from S3 for analytics.

- Glacier: Archiving infrequently accessed data.

- EMR: Storing input and output data for big data processing.

- CloudTrail: Storing logs.

10. What happens if S3 goes down? Is my data safe?

If S3 experiences a complete outage, applications relying on it will likely experience failures related to data retrieval and storage. The impact depends on how critical S3 is to the application's functionality. For example, a website hosting images on S3 might display broken images, or an application heavily reliant on S3 for data processing could completely fail.

Regarding data safety, Amazon S3 is designed for 99.999999999% (eleven nines) durability of objects over a given year. Total data loss is theoretically possible but extremely unlikely. Most storage classes store data redundantly across at least three Availability Zones within a region (the One Zone storage classes, such as S3 One Zone-IA, are the exception). However, it's still a best practice to implement your own backup and disaster recovery strategies, such as cross-region replication, for critical data. This provides an extra layer of protection against unforeseen circumstances.
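To put the eleven-nines figure in perspective, a quick back-of-the-envelope calculation helps. It reads the figure as an annual per-object durability and reproduces AWS's own illustration that storing 10,000,000 objects means expecting to lose a single object about once every 10,000 years:

```latex
\begin{aligned}
p_{\text{loss}} &= 1 - 0.99999999999 = 10^{-11} \text{ per object per year} \\
\mathbb{E}[\text{objects lost per year}] &= 10^{7} \times 10^{-11} = 10^{-4} \\
\text{expected time to one loss} &= 1 / 10^{-4} = 10^{4} \text{ years}
\end{aligned}
```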

11. What is S3 versioning and why might I want to use it?

S3 Versioning is a feature in AWS S3 that allows you to keep multiple versions of an object in the same bucket. When versioning is enabled, every modification (PUT, POST, COPY) creates a new version of the object, and S3 assigns a unique version ID to each version. If you delete an object, S3 inserts a delete marker, which also gets a version ID. The object is not truly deleted; previous versions are retained.

You might want to use S3 Versioning for several reasons:

- Data Recovery: Easily recover from accidental deletions or overwrites. You can simply revert to a previous version.

- Data Protection: Provides a layer of protection against unintended changes or application failures. If some application corrupts objects, previous safe versions are still available.

- Archival: Maintain a history of object changes for compliance or audit purposes.

- Undo Changes: Allows easy reverting of changes made to an S3 object.

12. What are S3 storage classes and how do I choose the right one?

S3 storage classes offer different options for data storage based on access frequency and cost. Common classes include: S3 Standard (frequent access, high availability), S3 Intelligent-Tiering (automatically moves data between frequent and infrequent access tiers), S3 Standard-IA (infrequent access), S3 One Zone-IA (infrequent access, lower cost, data stored in a single availability zone), S3 Glacier Instant Retrieval (archive data, milliseconds retrieval), S3 Glacier Flexible Retrieval (archive data, expedited, standard, and bulk retrieval options), and S3 Glacier Deep Archive (lowest cost, long-term archive).

Choosing the right class depends on your use case. If data is accessed frequently, use Standard or Intelligent-Tiering. For less frequent access, consider Standard-IA or One Zone-IA. For archival data, Glacier Instant Retrieval, Glacier Flexible Retrieval or Glacier Deep Archive are suitable. Consider factors like access frequency, data retrieval time requirements, cost, and data resilience when making your decision.

13. How do I track how much storage I am using in S3?

You can track S3 storage usage using several methods:

- S3 Storage Lens: Provides a dashboard in the AWS console with aggregated storage metrics. It offers organization-level, account-level, and bucket-level views, trends, and recommendations. You can also configure a daily metrics export to an S3 bucket, and the metrics can be accessed via the S3 console, API, or CLI.

- CloudWatch Metrics: S3 publishes daily storage metrics to CloudWatch, such as `BucketSizeBytes` and `NumberOfObjects`. These metrics can be monitored and graphed to track usage trends, and they are provided at no additional charge; only the optional request metrics incur charges.

- S3 Inventory: Generates a CSV, ORC, or Parquet file listing the objects in a bucket and their metadata (including size). You can then use this inventory to calculate total storage programmatically, for example by querying it with Amazon Athena or other data processing tools.
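For the CloudWatch route, a sketch of querying a bucket's daily `BucketSizeBytes` values, assuming boto3's `get_metric_statistics` and a bucket whose data is in S3 Standard (other storage classes use other `StorageType` values). The function names and the 14-day window are illustrative choices.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def bucket_size_query(bucket: str, days: int = 14, now: Optional[datetime] = None) -> dict:
    """Keyword arguments for CloudWatch GetMetricStatistics on BucketSizeBytes."""
    end = now or datetime.now(timezone.utc)
    return {
        "Namespace": "AWS/S3",
        "MetricName": "BucketSizeBytes",
        "Dimensions": [
            {"Name": "BucketName", "Value": bucket},
            # Sizes are reported per storage class; StandardStorage is S3 Standard
            {"Name": "StorageType", "Value": "StandardStorage"},
        ],
        "StartTime": end - timedelta(days=days),
        "EndTime": end,
        "Period": 86400,  # storage metrics are published once a day
        "Statistics": ["Average"],
    }


def bucket_size_history(bucket: str, region: str) -> list:
    """Daily size datapoints, oldest first (requires boto3 and credentials)."""
    import boto3  # lazy import so bucket_size_query works without boto3

    # S3 storage metrics are reported in CloudWatch in the bucket's own region
    cloudwatch = boto3.client("cloudwatch", region_name=region)
    response = cloudwatch.get_metric_statistics(**bucket_size_query(bucket))
    return sorted(response["Datapoints"], key=lambda point: point["Timestamp"])
```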

14. Can I host a website directly from S3?

Yes, you can host a static website directly from an S3 bucket. You need to enable static website hosting for the bucket, configure an index document (e.g., index.html), and optionally configure an error document (e.g., error.html). So that visitors can view your website, turn off Block Public Access for the bucket and add a bucket policy that allows public read access.

However, S3 is designed for static content. For dynamic content or server-side processing (like PHP or Python), you'll need a different service like EC2, Elastic Beanstalk, or a serverless solution like Lambda behind API Gateway.
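Enabling website hosting comes down to a small configuration naming the index and error documents. A sketch for boto3's `put_bucket_website`, assuming the default file names used above; the helper names are hypothetical.

```python
def website_config(index: str = "index.html", error: str = "error.html") -> dict:
    """WebsiteConfiguration with an index document and an error document."""
    return {
        # Appended to requests for "/" or for any "folder/" path
        "IndexDocument": {"Suffix": index},
        # Object returned when a 4xx error occurs
        "ErrorDocument": {"Key": error},
    }


def enable_website(bucket: str) -> None:
    """Turn on static website hosting (requires boto3 and credentials)."""
    import boto3

    boto3.client("s3").put_bucket_website(
        Bucket=bucket, WebsiteConfiguration=website_config()
    )
```

S3 website endpoints serve HTTP only, so sites that need HTTPS usually put CloudFront in front of the bucket.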

15. What's the deal with S3 regions? Why do they matter?

S3 regions are geographically isolated locations where your S3 buckets and data reside. They matter primarily for these reasons:

- Latency: Choosing a region close to your users reduces data access latency, improving application performance.

- Compliance: Regulations and data residency policies can restrict where data is stored or transferred (GDPR, for example, restricts transfers of personal data outside the EU). Choosing the right S3 region helps you meet these requirements.

- Availability: S3 is designed for high availability within a region. Using multiple regions adds another layer of redundancy for disaster recovery.

- Cost: Data transfer costs can vary between regions. Storing data in the same region as your compute resources can reduce costs. Additionally, different regions can have slightly varying storage costs.
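The region is fixed when a bucket is created. One detail that often trips people up: for any region other than us-east-1, CreateBucket must name the region in a `LocationConstraint`, while for us-east-1 it must be left out. A sketch, assuming boto3; the helper names are hypothetical.

```python
def create_bucket_kwargs(bucket: str, region: str) -> dict:
    """Arguments for CreateBucket in a given region."""
    kwargs = {"Bucket": bucket}
    # us-east-1 is the default location; every other region must be named
    # explicitly, otherwise the request is rejected
    if region != "us-east-1":
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}
    return kwargs


def create_bucket(bucket: str, region: str) -> None:
    """Create the bucket with a client pointed at the same region (requires boto3)."""
    import boto3

    boto3.client("s3", region_name=region).create_bucket(
        **create_bucket_kwargs(bucket, region)
    )
```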

16. How does S3 handle multiple people trying to access the same file at the same time?

S3 is designed to handle concurrent access to the same object by many users. Concurrent reads are not a problem, and S3 provides strong read-after-write consistency, so once a write succeeds, subsequent reads return the new data. S3 does not lock objects, however: when several clients write the same key at once, the last write wins by default.

Specifically, if multiple users attempt to write to the same object simultaneously, the request with the latest timestamp is the one that is stored. S3 provides mechanisms for more robust concurrency control. With object versioning enabled, every write is saved as a new version instead of replacing the previous one. With conditional writes, an `If-Match` header lets an update proceed only when the object's current ETag matches the expected ETag, which prevents overwrites if the object has been updated in the meantime.
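As a sketch of the conditional-update approach, the function below performs a read-modify-write and retries when S3 rejects the write because the ETag changed. It assumes a boto3 client recent enough to accept the `IfMatch` parameter on `put_object` (conditional writes are a relatively recent S3 feature); the function name and retry limit are illustrative. The client is passed in, so the logic can be exercised with a stub.

```python
from typing import Callable


def update_object(s3, bucket: str, key: str,
                  transform: Callable[[bytes], bytes], max_attempts: int = 5) -> bool:
    """Read-modify-write that only succeeds if nobody else wrote in between.

    `s3` is a boto3 S3 client (or any object with the same get_object/put_object
    interface); `transform` maps the old object bytes to the new bytes.
    """
    for _ in range(max_attempts):
        current = s3.get_object(Bucket=bucket, Key=key)
        etag = current["ETag"]
        new_body = transform(current["Body"].read())
        try:
            # S3 rejects this write (412 Precondition Failed) if the object's
            # ETag no longer matches the one we just read
            s3.put_object(Bucket=bucket, Key=key, Body=new_body, IfMatch=etag)
            return True
        except Exception as err:  # botocore's ClientError carries a .response dict
            code = getattr(err, "response", {}).get("Error", {}).get("Code")
            if code not in ("PreconditionFailed", "ConditionalRequestConflict"):
                raise
            # Another writer got there first: re-read and try again
    return False
```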

17. If I delete a file in S3, is it really gone forever?

When you delete a file (object) in S3, it's generally not immediately and permanently gone. Several factors influence whether the data is truly unrecoverable.

- Versioning: If S3 Versioning is enabled on the bucket, deleting a file simply creates a delete marker. The previous versions of the file are still stored and can be recovered. To permanently delete a file with versioning, you need to delete all its versions and the delete marker. Without versioning, a delete removes the object permanently.

- Lifecycle policies: In a versioned bucket, lifecycle rules can transition the non-current versions left behind by a delete to cheaper storage classes (like Glacier or Deep Archive) before eventually expiring them, so the data might still exist for a period.

- Legal Holds/Object Lock: If an object version is protected by an Object Lock retention period or a legal hold, it cannot be deleted until the retention period expires or the legal hold is removed.

Once an object (and, in a versioned bucket, every version of it) has been permanently deleted, treat the data as unrecoverable; do not count on AWS being able to restore it.
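To permanently remove a versioned object, every version and delete marker has to be deleted explicitly. A minimal sketch, assuming boto3 and a bucket without Object Lock retention or MFA Delete on the affected versions (either would block these deletes); the function names are hypothetical.

```python
def versions_to_delete(pages, key: str) -> list:
    """Every version and delete marker of exactly `key` in ListObjectVersions pages."""
    targets = []
    for page in pages:
        # Versions and delete markers are listed separately; both must be removed
        for entry in page.get("Versions", []) + page.get("DeleteMarkers", []):
            # Prefix=key also matches longer keys such as "report.txt.bak"
            if entry["Key"] == key:
                targets.append({"Key": key, "VersionId": entry["VersionId"]})
    return targets


def purge_object(bucket: str, key: str) -> None:
    """Permanently delete all versions of one object (requires boto3)."""
    import boto3

    s3 = boto3.client("s3")
    pages = s3.get_paginator("list_object_versions").paginate(Bucket=bucket, Prefix=key)
    targets = versions_to_delete(pages, key)
    for start in range(0, len(targets), 1000):  # DeleteObjects takes at most 1,000 keys
        result = s3.delete_objects(Bucket=bucket, Delete={"Objects": targets[start:start + 1000]})
        # Per-key failures are reported in the response rather than raised
        if result.get("Errors"):
            raise RuntimeError(f"could not delete some versions: {result['Errors']}")
```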

18. What is the difference between S3 Standard and S3 Glacier?

S3 Standard is designed for frequently accessed data, offering high availability, high throughput, and low latency. It's suitable for active workloads, websites, and mobile applications.

S3 Glacier (including Glacier Flexible Retrieval and Glacier Deep Archive) is designed for long-term archival storage of infrequently accessed data. It offers significantly lower storage costs than S3 Standard, at the expense of retrieval times that range from minutes to hours depending on the storage class and retrieval option; objects must be restored before they can be read. (S3 Glacier Instant Retrieval is the exception: it serves archive data with millisecond access.)
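Because objects in Glacier Flexible Retrieval and Deep Archive must be restored first, reading them is a two-step process. A sketch of the restore request for boto3's `restore_object`; `GLACIER` and `DEEP_ARCHIVE` are the API names of those two storage classes, the helper name is hypothetical, and Glacier Instant Retrieval objects need no restore at all.

```python
# Retrieval tiers offered per storage class (Expedited is not offered for Deep Archive)
RETRIEVAL_TIERS = {
    "GLACIER": {"Expedited", "Standard", "Bulk"},
    "DEEP_ARCHIVE": {"Standard", "Bulk"},
}


def restore_kwargs(bucket: str, key: str, storage_class: str,
                   days: int = 7, tier: str = "Standard") -> dict:
    """Arguments for RestoreObject on an archived object."""
    if tier not in RETRIEVAL_TIERS[storage_class]:
        raise ValueError(f"{tier} retrieval is not available for {storage_class}")
    return {
        "Bucket": bucket,
        "Key": key,
        "RestoreRequest": {
            "Days": days,  # how long the temporary restored copy stays readable
            "GlacierJobParameters": {"Tier": tier},
        },
    }
```

Pass the result to `s3.restore_object(**restore_kwargs(...))`, then poll `head_object`: its `Restore` field shows whether the request is still ongoing and when the temporary copy expires.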

19. How can I automate moving files from my computer to S3?

You can automate moving files from your computer to S3 using several methods. A common approach is using the AWS CLI (Command Line Interface) along with a scripting language like Python or Bash. The AWS CLI provides commands to interact with S3, such as aws s3 cp for copying files.

For automation, you can create a script that runs periodically using a task scheduler like cron (on Linux/macOS) or Task Scheduler (on Windows). The script would use the AWS CLI to sync a local directory with an S3 bucket. For example, a simple Bash script might look like this:

```bash
#!/bin/bash
# Mirror the local directory into the bucket; only new or changed files are uploaded
aws s3 sync /path/to/local/directory s3://your-bucket-name
```

Alternatively, for continuous monitoring, a file system watcher running on your computer can upload each file as soon as it is created or modified, using an SDK such as boto3 in Python. (AWS Lambda is also event-driven, but it runs in AWS and cannot read your local disk, so it is better suited to processing files after they land in S3.) You can also leverage AWS DataSync for efficient and secure data transfer over the network.
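If you prefer Python over the CLI, a minimal sketch, assuming boto3 and configured credentials, can walk the directory, derive a key for each file, and upload it. The function names and the optional key prefix are hypothetical, and unlike `aws s3 sync` this uploads every file on every run rather than only new or changed ones.

```python
from pathlib import Path


def plan_uploads(local_dir: str, prefix: str = "") -> list[tuple[Path, str]]:
    """Map every file under local_dir to an S3 key."""
    root = Path(local_dir)
    plan = []
    for path in sorted(root.rglob("*")):
        if path.is_file():
            # Keys always use forward slashes, whatever the local OS uses
            key = prefix + path.relative_to(root).as_posix()
            plan.append((path, key))
    return plan


def upload_all(local_dir: str, bucket: str, prefix: str = "") -> None:
    """Upload every planned file (requires boto3 and AWS credentials)."""
    import boto3

    s3 = boto3.client("s3")
    for path, key in plan_uploads(local_dir, prefix):
        s3.upload_file(str(path), bucket, key)
```

Scheduled with cron or Task Scheduler, `upload_all("/path/to/local/directory", "your-bucket-name")` plays the same role as the Bash script above.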

20. What security measures does S3 provide to protect my data?

S3 offers several security measures to protect your data. Access control mechanisms are central, including IAM policies to manage user permissions and bucket policies to define rules for bucket access. You can also use Access Control Lists (ACLs), although AWS recommends policies instead and disables ACLs by default on new buckets. For data protection in transit, S3 supports TLS (HTTPS) encryption. At rest, you can use server-side encryption (SSE) with S3 managed keys (SSE-S3), KMS managed keys (SSE-KMS), or customer-provided keys (SSE-C); you can also use client-side encryption. S3 also provides MFA Delete, which requires multi-factor authentication to permanently delete object versions or change a bucket's versioning state, and versioning to protect against accidental deletion or overwrites.
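For data in transit, a common pattern is a bucket policy that denies any request not made over HTTPS, using the `aws:SecureTransport` condition key. A sketch that builds such a policy; the bucket name is a placeholder and the function name is hypothetical.

```python
import json


def require_tls_policy(bucket: str) -> dict:
    """Bucket policy that denies every request not sent over HTTPS."""
    arn = f"arn:aws:s3:::{bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                # Cover both the bucket itself and every object in it
                "Resource": [arn, f"{arn}/*"],
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(require_tls_policy("your-bucket-name"), indent=2))
```

Apply it with boto3's `put_bucket_policy(Bucket=..., Policy=json.dumps(policy))`. That call replaces any existing bucket policy, so merge this statement into your current policy rather than overwriting it.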

21. Tell me about S3 Object Lock.

S3 Object Lock prevents object versions from being deleted or overwritten for a fixed amount of time or indefinitely. It adds a layer of data protection by enforcing write-once-read-many (WORM) principles, ensuring data immutability. There are two retention modes: Governance and Compliance. Governance mode lets users with the `s3:BypassGovernanceRetention` permission override or remove the lock, while Compliance mode is stricter and prevents anyone, including the root user, from removing the lock or shortening the retention period.

Object Lock helps meet compliance requirements like SEC Rule 17a-4(f) and supports legal and regulatory requirements related to data retention. You can specify a retention period (amount of time the object remains protected) or apply a legal hold (indefinitely preserves the object until the legal hold is removed).
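In API terms, a retention period and a legal hold are set with separate calls. A minimal sketch that builds the arguments for boto3's `put_object_retention` and `put_object_legal_hold`, assuming Object Lock is already enabled on the bucket; the helper names are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

MODES = ("GOVERNANCE", "COMPLIANCE")


def retention_kwargs(bucket: str, key: str, mode: str, days: int,
                     now: Optional[datetime] = None) -> dict:
    """Arguments for put_object_retention: protect the object for `days`."""
    if mode not in MODES:
        raise ValueError(f"mode must be one of {MODES}")
    start = now or datetime.now(timezone.utc)
    return {
        "Bucket": bucket,
        "Key": key,
        "Retention": {"Mode": mode, "RetainUntilDate": start + timedelta(days=days)},
    }


def legal_hold_kwargs(bucket: str, key: str, on: bool = True) -> dict:
    """Arguments for put_object_legal_hold; a hold has no expiry date."""
    return {"Bucket": bucket, "Key": key, "LegalHold": {"Status": "ON" if on else "OFF"}}
```

Both calls also accept a `VersionId` to target a specific version; without it they apply to the current version.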

22. Can I set up S3 to automatically delete files after a certain period of time?

Yes, you can configure S3 to automatically delete files after a specific period using S3 Lifecycle policies. These policies allow you to define rules for transitioning objects to different storage classes (like Glacier) or expiring them (permanently deleting them). You can set up these rules based on object age (in days), prefix/tags, and other criteria.

To configure this, you can use the AWS Management Console, AWS CLI, SDKs, or CloudFormation. You essentially create a lifecycle rule specifying the number of days after object creation before an action (transition or expiration) should be taken.

23. What is the S3 Transfer Acceleration feature and when would I use it?

S3 Transfer Acceleration enables fast, easy, and secure transfers of files over long distances between your client and your Amazon S3 bucket. It leverages Amazon CloudFront's globally distributed edge locations: when you send requests to the bucket's accelerate endpoint, your data is routed to the nearest edge location and then travels to your S3 bucket over Amazon's optimized network paths.

Use S3 Transfer Acceleration when you need to:

- Transfer files to S3 buckets that are geographically distant from your clients.

- Upload from locations with varying internet connection quality.

- Regularly transfer large files across continents.
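Once acceleration is enabled on the bucket (for example with boto3's `put_bucket_accelerate_configuration` and `AccelerateConfiguration={'Status': 'Enabled'}`), clients must actually send their requests to the accelerate endpoint. A sketch of both ways to do that, assuming boto3 is installed; the helper names are hypothetical.

```python
def accelerate_endpoint(bucket: str) -> str:
    """Transfer Acceleration endpoint for a bucket."""
    # Acceleration is not supported for bucket names that contain periods
    if "." in bucket:
        raise ValueError("Transfer Acceleration requires a bucket name without periods")
    return f"https://{bucket}.s3-accelerate.amazonaws.com"


def accelerated_client():
    """boto3 S3 client that sends its requests through the accelerate endpoint."""
    import boto3
    from botocore.config import Config

    return boto3.client("s3", config=Config(s3={"use_accelerate_endpoint": True}))
```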

24. How does S3 charge me for storing my files?

S3 charges for storage based on several factors: the storage class you choose (e.g., Standard, Intelligent-Tiering, Glacier), the amount of data stored in GB per month, the number of requests made to your data (GET, PUT, LIST, etc.), data transfer out to the internet, and optional features such as cross-region replication. Versioning has no separate fee, but every retained version is billed as stored data. Per-GB storage prices are lower for classes designed for less frequently accessed data, although those classes typically add retrieval charges.

Specifically, you pay for:

- Storage: Cost per GB per month, varying by storage class.

- Requests: Cost per 1,000 requests (GET, PUT, LIST, etc.).

- Data Transfer Out: Cost per GB for data transferred out of S3 to the internet. Ingress is generally free.

- Storage Management Features: Costs associated with features like inventory, analytics, and object tagging.
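To see how these components add up, here is a back-of-the-envelope estimator. The unit prices are placeholders chosen for easy arithmetic, not current AWS rates (real prices vary by region and storage class and change over time), and tiered pricing and free allowances are ignored. The function name is hypothetical.

```python
# Placeholder unit prices in USD, for illustration only (not current AWS rates)
EXAMPLE_PRICES = {
    "storage_gb_month": 0.02,
    "put_per_1000": 0.005,
    "get_per_1000": 0.0004,
    "transfer_out_gb": 0.09,
}


def estimate_monthly_cost(gb_stored: float, put_requests: int, get_requests: int,
                          gb_out: float, prices: dict = EXAMPLE_PRICES) -> float:
    """Storage + requests + data transfer out, rounded to cents."""
    storage = gb_stored * prices["storage_gb_month"]
    requests = (put_requests / 1000) * prices["put_per_1000"] \
        + (get_requests / 1000) * prices["get_per_1000"]
    transfer = gb_out * prices["transfer_out_gb"]  # data transfer in is generally free
    return round(storage + requests + transfer, 2)


# 100 GB stored, 10,000 PUTs, 1,000,000 GETs, 50 GB out: 2.00 + 0.45 + 4.50
print(estimate_monthly_cost(100, 10_000, 1_000_000, 50))  # 6.95
```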

25. What are some best practices for naming my S3 buckets and files?

S3 bucket names must be globally unique, so choose names that are unlikely to be taken, for example by using a naming convention that includes your company name or a project identifier. Bucket names must be between 3 and 63 characters long and can only contain lowercase letters, numbers, dots (.), and hyphens (-). They must start and end with a letter or number.

For object keys (file names), be descriptive and consistent. Use prefixes to organize objects logically; for example, project-a/images/image1.jpg. Avoid spaces and special characters; use hyphens or underscores instead. Consider including a date or timestamp for versioning if needed. Choose extensions that accurately reflect the file type for proper handling by downstream applications. For example, 2024-01-01-report.pdf.
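The bucket naming rules above are easy to check before calling CreateBucket. A sketch of a validator covering those rules plus two more documented ones (no two adjacent periods, and no names formatted like an IPv4 address). It is not exhaustive, since S3 also reserves certain prefixes and suffixes; the function name is hypothetical.

```python
import ipaddress
import re

# 3-63 characters: lowercase letters, digits, dots, hyphens; alphanumeric at both ends
_BUCKET_RE = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")


def is_valid_bucket_name(name: str) -> bool:
    if not _BUCKET_RE.fullmatch(name):
        return False
    if ".." in name:  # two adjacent periods are not allowed
        return False
    try:
        ipaddress.IPv4Address(name)  # names like 192.168.5.4 are rejected
        return False
    except ValueError:
        return True
```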

26. How do I control who can upload files to my S3 bucket?

You can control who can upload files to an S3 bucket using several methods:

- IAM Policies: Create IAM users and groups with specific permissions to `s3:PutObject` on your bucket or on specific prefixes within the bucket. This is the most granular approach. You can define conditions, for instance, restricting uploads based on IP address or requiring MFA.

- Bucket Policies: Attach a bucket policy to your S3 bucket granting `s3:PutObject` permission to specific IAM users, AWS accounts, or even anonymous users (generally not recommended for uploads). Like IAM policies, bucket policies also allow for specifying conditions.

- Pre-signed URLs: Generate pre-signed URLs that grant temporary upload access to specific objects in your bucket. You control the expiration time and who receives the URL. The user with the URL can upload the file during the validity period.

- ACLs (Access Control Lists): While still available, ACLs are less powerful and harder to manage than IAM and bucket policies. They offer basic access control for individual objects. Generally, prefer using IAM or bucket policies.
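As an illustration of the IAM-policy approach with an IP condition, the sketch below builds a policy that allows uploads only under one prefix and only from one address range. The bucket, prefix, and CIDR are placeholders and the function name is hypothetical; note that `aws:SourceIp` does not match requests that arrive through a VPC endpoint, where you would condition on the endpoint instead.

```python
def upload_only_policy(bucket: str, prefix: str, cidr: str) -> dict:
    """IAM policy allowing PutObject under one prefix, only from one IP range."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowUploadsFromOfficeNetwork",
                "Effect": "Allow",
                "Action": "s3:PutObject",
                # Only keys under the given prefix can be written
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}*",
                # aws:SourceIp is the caller's public IP address
                "Condition": {"IpAddress": {"aws:SourceIp": cidr}},
            }
        ],
    }
```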

27. Can I use S3 to store backups of my databases?

Yes, you can use S3 to store backups of your databases. S3 offers durable, highly available, and scalable object storage, making it a suitable option for storing database backups.

Consider these points:

- Cost-effectiveness: S3's storage classes (e.g., Standard, Glacier) allow you to optimize costs based on access frequency.

- Durability: S3 is designed for 99.999999999% data durability.

- Security: You can control access to your backups using IAM roles, bucket policies, and encryption.

- Lifecycle management: You can automatically transition backups to lower-cost storage tiers as they age.

- Automation: Consider using a tool or script to automate the backup and upload process; many database systems integrate directly with S3. For example, you could use `mysqldump` to create a backup and the `aws s3 cp` command to copy it to S3.

Intermediate AWS S3 interview questions

1. How can you ensure data consistency when multiple users are simultaneously writing to the same object in S3?

To ensure data consistency when multiple users are simultaneously writing to the same S3 object, you can leverage the following mechanisms:

- Object Versioning: Enable versioning on your S3 bucket. Every write then creates a new version of the object, so earlier writes are preserved instead of being silently overwritten. You can then implement a process to merge or reconcile these versions later, resolving any conflicts.

- Conditional Writes: Use conditional requests to enforce atomic updates. With an `If-Match` header, a write succeeds only if the object's current ETag matches the ETag you specify. If another user has updated the object in the meantime, the write fails (HTTP 412 Precondition Failed) and the client can retry against the latest version. The pattern is to read the object and its ETag, apply your changes, and write it back with `If-Match` set to the ETag you read, which gives check-then-update atomicity.

- Atomic Operations: If your use case involves numerical data, you can use compare-and-swap conditional updates in another store, such as DynamoDB atomic counters and condition expressions. For example, keep a counter in DynamoDB and use it to name a specific file or version; the file is only written if the conditional update succeeds, that is, if the counter still equals the expected number. This adds overhead and cost, but it helps ensure consistency at a fine-grained level.

2. Describe a scenario where using S3 Event Notifications would be beneficial. Explain the steps involved in configuring it.

S3 Event Notifications are beneficial when you need to trigger actions or workflows based on changes to objects in your S3 bucket. For example, when a new image is uploaded, you might want to automatically generate thumbnails, trigger a virus scan, or update a database. Imagine a photo-sharing application: when a user uploads a photo to S3, a notification could trigger a Lambda function that creates differently sized thumbnails for the web and mobile versions of the app.

To configure S3 Event Notifications, you would typically follow these steps:

1. Choose an event type: Select the S3 event that will trigger the notification (e.g., `s3:ObjectCreated:*`).

2. Configure the destination: Specify where to send the notification, such as an SQS queue, SNS topic, or Lambda function.

3. Define object key name filtering (optional): Apply filtering rules based on object key name prefixes or suffixes so that notifications are sent only for specific objects.

4. Set permissions: Ensure S3 is allowed to publish to the chosen destination. This is granted through a resource-based policy on the destination (a Lambda function permission, an SQS queue policy, or an SNS topic policy) rather than through an IAM role.
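For the thumbnail scenario, a sketch of steps 1 to 3 as a notification configuration, plus the first thing the triggered Lambda function typically does: pull the bucket and key out of the event. The function ARN, prefix, and suffix are placeholders, the helper names are hypothetical, and the step 4 permissions still have to be granted separately.

```python
from urllib.parse import unquote_plus


def notification_config(function_arn: str, prefix: str, suffix: str) -> dict:
    """Invoke a Lambda function for each object created under prefix with suffix."""
    return {
        "LambdaFunctionConfigurations": [
            {
                "LambdaFunctionArn": function_arn,
                "Events": ["s3:ObjectCreated:*"],
                "Filter": {
                    "Key": {
                        "FilterRules": [
                            {"Name": "prefix", "Value": prefix},
                            {"Name": "suffix", "Value": suffix},
                        ]
                    }
                },
            }
        ]
    }


def objects_from_event(event: dict) -> list[tuple[str, str]]:
    """Extract (bucket, key) pairs from an S3 event delivered to Lambda."""
    pairs = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Keys arrive URL-encoded (a space becomes '+'), so decode them first
        key = unquote_plus(record["s3"]["object"]["key"])
        pairs.append((bucket, key))
    return pairs
```

Apply the configuration with boto3's `put_bucket_notification_configuration(Bucket=..., NotificationConfiguration=...)`; that call replaces the bucket's entire existing notification configuration.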

3. Explain the difference between S3 Standard, S3 Intelligent-Tiering, and S3 Glacier storage classes. When would you choose each?

S3 Standard is designed for frequently accessed data, offering high availability and performance. It's suitable for active data used in applications, websites, and dynamic workloads. S3 Intelligent-Tiering automatically moves data between frequent, infrequent, and archive access tiers based on access patterns, optimizing storage costs. It's ideal for data with unknown or changing access patterns, where you want cost savings without operational overhead. The S3 Glacier storage classes are for long-term archival storage, offering the lowest storage cost; retrieval ranges from milliseconds (Glacier Instant Retrieval) to minutes or hours (Glacier Flexible Retrieval and Glacier Deep Archive).

You'd choose S3 Standard for frequently accessed data requiring immediate availability. S3 Intelligent-Tiering is best for data with unpredictable access patterns where cost optimization is important. S3 Glacier is the choice when data is rarely accessed and you can tolerate longer retrieval times for maximum cost savings, for example, compliance archives and backups.

4. How would you implement version control in S3, and what are the implications for storage costs and data retrieval?

To implement version control in S3, you enable the 'Versioning' setting on an S3 bucket. This setting ensures that every version of an object is preserved when it's overwritten or deleted. When you upload a new object with the same key as an existing one, S3 creates a new version instead of overwriting the original.

The implications are increased storage costs, as all versions are stored until explicitly deleted. Retrieving a specific version requires specifying its version ID in the request. Without a version ID, S3 returns the latest version. Deleting an object creates a delete marker (another version), so you must explicitly delete all versions to permanently remove the object and reduce storage costs. You can also use S3 Lifecycle policies to automatically transition older versions to cheaper storage tiers or permanently delete them.
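To see what versioning is costing you, you can add up the sizes of non-current versions. A sketch, assuming boto3's `list_object_versions` paginator; the function names are hypothetical.

```python
def noncurrent_bytes(pages) -> int:
    """Total size of non-current versions across ListObjectVersions pages."""
    total = 0
    for page in pages:
        for version in page.get("Versions", []):
            # IsLatest is False for every version that has been superseded or deleted
            if not version["IsLatest"]:
                total += version["Size"]
    return total


def noncurrent_bytes_in_bucket(bucket: str, prefix: str = "") -> int:
    """Scan a bucket (or prefix) with boto3 and sum its non-current versions."""
    import boto3

    paginator = boto3.client("s3").get_paginator("list_object_versions")
    return noncurrent_bytes(paginator.paginate(Bucket=bucket, Prefix=prefix))
```

To retrieve one of those older versions, pass its ID explicitly, e.g. `s3.get_object(Bucket=..., Key=..., VersionId=...)`.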

5. What are pre-signed URLs in S3, and how can they be used to grant temporary access to objects?

Pre-signed URLs in S3 provide a way to grant temporary access to S3 objects without requiring AWS security credentials. They are URLs that are valid for a limited time and allow anyone who possesses the URL to perform specific actions, such as reading or writing an object. The URL contains all the necessary authentication information.

To use them, you first generate the pre-signed URL using the AWS SDKs or CLI (the CLI's `aws s3 presign` command covers downloads only), specifying the bucket, object key, HTTP method (e.g., GET for reading, PUT for writing), and expiration time (up to seven days for URLs signed with Signature Version 4). You then distribute this URL to the user or application that needs access, and they can use it to access the object directly within the specified time frame. For example, in Python you can create a pre-signed URL like this:

```python
import boto3

s3 = boto3.client('s3')

# URL that allows a GET of one object for 3600 seconds (1 hour)
url = s3.generate_presigned_url(
    'get_object',
    Params={'Bucket': 'my-bucket', 'Key': 'my-object.txt'},
    ExpiresIn=3600
)

print(url)
```

6. Explain how you would use S3 Lifecycle policies to automatically transition objects to cheaper storage tiers or delete them after a certain period.

S3 Lifecycle policies automate the process of moving objects between different storage classes or deleting them after a specified duration. I would use these policies to reduce storage costs by transitioning infrequently accessed data to cheaper tiers like S3 Standard-IA, S3 Intelligent-Tiering, S3 Glacier, or S3 Glacier Deep Archive. For example, a policy can be configured to move objects to S3 Standard-IA after 30 days and then to S3 Glacier after one year.

Furthermore, I would use lifecycle policies to automatically delete objects after a certain period to comply with data retention policies or to remove outdated data. For instance, logs older than 7 years could be deleted automatically. Actions can be scheduled by object age (a number of days after creation) or for a specific date, and rules can be scoped by prefix, object tags, or object size, enabling granular control over object lifecycle management. In versioned buckets, separate actions (such as NoncurrentVersionTransition and NoncurrentVersionExpiration) apply to previous versions.
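The example policy above (Standard-IA after 30 days, Glacier after a year, deletion after about seven years) might look like this as a lifecycle configuration. A sketch, assuming boto3's `put_bucket_lifecycle_configuration`; the `logs/` prefix, rule ID, and 90-day clean-up of previous versions are illustrative choices.

```python
def lifecycle_config(prefix: str = "logs/") -> dict:
    """LifecycleConfiguration: IA at 30 days, Glacier at 1 year, delete at ~7 years."""
    return {
        "Rules": [
            {
                "ID": "tier-then-expire",
                "Filter": {"Prefix": prefix},  # only objects under this prefix
                "Status": "Enabled",
                "Transitions": [
                    {"Days": 30, "StorageClass": "STANDARD_IA"},
                    {"Days": 365, "StorageClass": "GLACIER"},
                ],
                "Expiration": {"Days": 7 * 365},
                # Versioned buckets: remove versions 90 days after they stop being current
                "NoncurrentVersionExpiration": {"NoncurrentDays": 90},
            }
        ]
    }
```

Apply it with `s3.put_bucket_lifecycle_configuration(Bucket=..., LifecycleConfiguration=lifecycle_config())`. The call replaces the bucket's existing lifecycle rules, so include every rule you want to keep.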

7. How do you protect sensitive data stored in S3 using encryption? Discuss different encryption options and key management strategies.

To protect sensitive data stored in S3 using encryption, several options are available. S3 offers server-side encryption (SSE) using keys managed by AWS (SSE-S3), keys managed by KMS (SSE-KMS), or customer-provided keys (SSE-C). Client-side encryption is another option, where data is encrypted before being uploaded to S3.

Key management strategies are crucial. For SSE-S3, AWS handles key management, offering convenience but less control. SSE-KMS allows using AWS KMS for key management, providing more control and auditing capabilities. SSE-C requires managing your own encryption keys. Client-side encryption necessitates secure key management on the client-side. AWS KMS is generally recommended for managing encryption keys, offering centralized control, auditing, and integration with other AWS services. Always consider compliance requirements and the sensitivity of the data when choosing an encryption and key management strategy.
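For SSE-KMS, a common setup is to make it the bucket's default so every new object is encrypted without clients having to ask. A sketch of the configuration for boto3's `put_bucket_encryption`; the key ARN is a placeholder and the function name is hypothetical.

```python
def default_kms_encryption(kms_key_arn: str) -> dict:
    """ServerSideEncryptionConfiguration making SSE-KMS the bucket default."""
    return {
        "Rules": [
            {
                "ApplyServerSideEncryptionByDefault": {
                    "SSEAlgorithm": "aws:kms",
                    "KMSMasterKeyID": kms_key_arn,
                },
                # S3 Bucket Keys reduce the number of requests (and cost) sent to KMS
                "BucketKeyEnabled": True,
            }
        ]
    }
```

Apply it with `s3.put_bucket_encryption(Bucket=..., ServerSideEncryptionConfiguration=...)`. Individual uploads can still request encryption explicitly, e.g. `put_object(..., ServerSideEncryption='aws:kms', SSEKMSKeyId=...)`.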

8. Describe the process of setting up cross-region replication in S3. What are the benefits and limitations?

To set up cross-region replication (CRR) in S3, you first need to enable versioning on both the source and destination buckets. Then, you create a replication rule in the source bucket's properties. This rule specifies the destination bucket, the storage class for replicated objects, and an IAM role that S3 can use to replicate objects on your behalf. Optionally, you can configure replication time control (RTC) to meet specific compliance requirements. CRR asynchronously copies objects to the destination region.

Benefits of CRR include disaster recovery, lower latency for users in other regions, and meeting compliance requirements that call for copies stored in a separate region. Limitations include replication lag, additional cost for storage and inter-region data transfer, and the fact that a replication rule applies only to objects written after it is in place; existing objects must be replicated separately with S3 Batch Replication. Delete markers are not replicated unless you enable delete marker replication on the rule, and deletions of specific object versions are never replicated, which protects the replica from malicious deletes.
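The rule described above can be sketched as the configuration document boto3's put_bucket_replication expects. The role ARN, bucket names, and STANDARD_IA storage class are placeholder assumptions; note that enabling RTC also requires replication metrics.

```python
# Sketch: replication configuration in the shape put_bucket_replication expects.
# Role ARN, bucket names, and storage class are placeholder assumptions.
def replication_config(role_arn: str, dest_bucket: str, use_rtc: bool = False) -> dict:
    destination = {
        "Bucket": f"arn:aws:s3:::{dest_bucket}",
        "StorageClass": "STANDARD_IA",  # storage class for the replicas
    }
    if use_rtc:
        # Replication Time Control must be paired with replication metrics.
        destination["ReplicationTime"] = {"Status": "Enabled", "Time": {"Minutes": 15}}
        destination["Metrics"] = {"Status": "Enabled", "EventThreshold": {"Minutes": 15}}
    return {
        "Role": role_arn,
        "Rules": [{
            "ID": "replicate-everything",
            "Priority": 1,
            "Status": "Enabled",
            "Filter": {"Prefix": ""},  # empty prefix = whole bucket
            # Delete markers are replicated only when requested explicitly.
            "DeleteMarkerReplication": {"Status": "Enabled"},
            "Destination": destination,
        }],
    }

# config = replication_config(
#     "arn:aws:iam::111122223333:role/example-replication-role",
#     "example-dest-bucket", use_rtc=True)
# boto3.client("s3").put_bucket_replication(
#     Bucket="example-source-bucket", ReplicationConfiguration=config)
```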

9. How can you monitor S3 performance and usage using CloudWatch metrics? What are some key metrics to track?

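You can monitor S3 performance and usage with CloudWatch metrics, which S3 publishes in two groups. Daily storage metrics (BucketSizeBytes and NumberOfObjects) are published automatically at no extra charge. Request and data-transfer metrics are published at one-minute granularity only after you create a metrics configuration for the bucket, optionally filtered by prefix, object tag, or access point, and they are billed as CloudWatch custom metrics. Key metrics to track include: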

- BucketSizeBytes: The total size of the objects in a bucket, reported per storage type (StorageType dimension). Useful for capacity planning and cost management.

- NumberOfObjects: The total number of objects in a bucket. Helpful for understanding data growth.

- 4xxErrors: The number of client-side errors. High values may indicate permission issues or incorrect API usage.

- 5xxErrors: The number of server-side errors. High values could point to problems on the AWS side or to throttling (503 Slow Down).

- FirstByteLatency: The time from when S3 receives the complete request until the response starts to be returned. Helps identify latency issues.

- TotalRequestLatency: The elapsed time from the first byte received to the last byte sent. Helps in debugging performance bottlenecks.

- BytesDownloaded: The amount of data downloaded from a bucket.

- BytesUploaded: The amount of data uploaded to a bucket.

These metrics can be visualized in CloudWatch dashboards, and alarms can be set to notify you when thresholds are breached.
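As a sketch of wiring this up, the following builds the arguments for enabling request metrics on one prefix and for a CloudWatch alarm on 5xxErrors. The bucket name, filter ID, uploads/ prefix, and threshold are placeholder assumptions; request metrics use the BucketName and FilterId dimensions, so the alarm only receives data once the metrics configuration exists.

```python
# Sketch: enable request metrics for a prefix and alarm on 5xx errors.
# Bucket name, filter ID, prefix, and threshold are placeholder assumptions.
BUCKET = "example-bucket"
FILTER_ID = "uploads-metrics"

# Arguments for s3.put_bucket_metrics_configuration(...)
metrics_config_args = {
    "Bucket": BUCKET,
    "Id": FILTER_ID,
    "MetricsConfiguration": {"Id": FILTER_ID, "Filter": {"Prefix": "uploads/"}},
}

# Arguments for cloudwatch.put_metric_alarm(...)
alarm_args = {
    "AlarmName": f"{BUCKET}-5xx-errors",
    "Namespace": "AWS/S3",
    "MetricName": "5xxErrors",
    "Dimensions": [
        {"Name": "BucketName", "Value": BUCKET},
        {"Name": "FilterId", "Value": FILTER_ID},
    ],
    "Statistic": "Sum",
    "Period": 300,          # 5-minute windows
    "EvaluationPeriods": 1,
    "Threshold": 10,        # illustrative threshold
    "ComparisonOperator": "GreaterThanThreshold",
}

# import boto3
# boto3.client("s3").put_bucket_metrics_configuration(**metrics_config_args)
# boto3.client("cloudwatch").put_metric_alarm(**alarm_args)
```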

10. Explain how you would use S3 Access Points to simplify access management for different applications or teams.

S3 Access Points simplify access management by allowing you to create dedicated access points with specific permissions for different applications or teams. Instead of granting broad permissions to the entire S3 bucket, each access point acts as a named network endpoint with a customized access policy that restricts access to a specific prefix or set of operations.

For example, you could create an access point for a data analytics team that only allows reads from a specific prefix within the bucket, and another access point for an application that only allows writes to a different prefix. This approach reduces the risk of accidental data exposure and makes it easier to enforce the principle of least privilege.
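Here is a minimal sketch of the read-only analytics access point policy. The region, account ID, role name, access point name, and analytics/ prefix are all placeholders; the ARN layout (accesspoint/name/object/key) is the format S3 uses for objects reached through an access point.

```python
# Sketch: a read-only access point policy scoped to one prefix.
# Region, account ID, role, access point name, and prefix are placeholders.
import json


def read_only_access_point_policy(region, account_id, ap_name, role_name, prefix):
    ap_arn = f"arn:aws:s3:{region}:{account_id}:accesspoint/{ap_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"AWS": f"arn:aws:iam::{account_id}:role/{role_name}"},
            "Action": "s3:GetObject",
            # Objects reached through an access point use the /object/ segment.
            "Resource": f"{ap_arn}/object/{prefix}*",
        }],
    }


policy = read_only_access_point_policy(
    "us-east-1", "111122223333", "analytics-ap", "analytics-team", "analytics/")
policy_json = json.dumps(policy, indent=2)
# boto3.client("s3control").put_access_point_policy(
#     AccountId="111122223333", Name="analytics-ap", Policy=policy_json)
```

An access point policy only takes effect if the bucket policy also permits the access, for example by delegating access control to access points owned by the same account.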

11. What are S3 Object Tags, and how can they be used for cost allocation and access control?

S3 Object Tags are key-value pairs that you can associate with objects stored in Amazon S3. These tags can be used for various purposes, including cost allocation and access control. Each object can have up to 10 tags.

For access control, you can use bucket policies or IAM policies with the s3:ExistingObjectTag/<key> condition key to grant or deny access based on an object's tags; for example, a policy can allow a specific IAM role to read only objects carrying a certain tag. This provides a granular way to manage access to your S3 data. For cost allocation, note that AWS Billing cost allocation tags apply to buckets, not individual objects, so object tags do not appear in Cost Explorer or Cost and Usage Reports. To attribute costs, tag the buckets; object tags are still useful for scoping lifecycle rules, replication rules, and Storage Class Analysis to subsets of objects.
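A tag-based read permission can be sketched as an IAM policy with the s3:ExistingObjectTag condition key. The bucket name and the project=alpha tag used in testing are hypothetical.

```python
# Sketch: IAM policy allowing reads only of objects with a given tag value.
# Bucket name and tag key/value are placeholder assumptions.
def tag_scoped_read_policy(bucket: str, tag_key: str, tag_value: str) -> dict:
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Action": "s3:GetObject",
            "Resource": f"arn:aws:s3:::{bucket}/*",
            "Condition": {
                # s3:ExistingObjectTag/<key> checks a tag already on the object.
                "StringEquals": {f"s3:ExistingObjectTag/{tag_key}": tag_value}
            },
        }],
    }
```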

12. How can you optimize S3 performance for read-heavy workloads? Consider caching strategies and request patterns.

For read-heavy workloads, performance work centers on caching and request patterns. Caching is the biggest lever: put CloudFront (Amazon's CDN) in front of the bucket to cache frequently accessed objects close to users, which cuts latency and S3 request load, and set TTLs (Time To Live) that match how often the content changes. S3 Transfer Acceleration is not a cache, but it can speed up transfers for clients far from the bucket's region. Consistency is not a concern here: since December 2020, S3 provides strong read-after-write consistency for GET, PUT, and LIST operations. For request patterns, avoid large numbers of tiny objects where you can (group small objects into larger ones), and spread requests across multiple key prefixes, since each prefix supports at least 5,500 GET/HEAD requests per second. Parallelize requests (for example, with threads, asynchronous calls, or byte-range fetches of large objects) to maximize throughput, and run your application in the same AWS region as the bucket to minimize network latency.
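Byte-range parallelism can be sketched as follows: split the object into HTTP Range values and fetch them concurrently. Here fetch_range is a hypothetical stand-in for a ranged GET (with boto3, s3.get_object(Bucket=..., Key=..., Range=header)["Body"].read()), which keeps the sketch runnable without AWS access; the 8 MiB part size and 8 workers are assumptions.

```python
# Sketch: split an object into byte ranges and fetch them in parallel.
# fetch_range is a stand-in for a ranged GET request.
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def byte_ranges(size: int, part_size: int) -> List[str]:
    """HTTP Range header values covering bytes 0..size-1 (end is inclusive)."""
    return [f"bytes={start}-{min(start + part_size, size) - 1}"
            for start in range(0, size, part_size)]


def parallel_get(fetch_range: Callable[[str], bytes], size: int,
                 part_size: int = 8 * 1024 * 1024, workers: int = 8) -> bytes:
    ranges = byte_ranges(size, part_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns results in input order, so parts reassemble correctly.
        parts = list(pool.map(fetch_range, ranges))
    return b"".join(parts)
```

The object size would normally come from a HEAD request (ContentLength) before the ranged GETs are issued.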

13. Explain how you would integrate S3 with other AWS services like Lambda or EC2. Provide a specific use case.

Integrating S3 with other AWS services is a common practice. For example, you can trigger an AWS Lambda function whenever a new object is uploaded to an S3 bucket. This is done using S3 event notifications. You configure the S3 bucket to send events (like s3:ObjectCreated:*) to the Lambda function. When the event occurs (an object is uploaded), Lambda is triggered, receives information about the S3 object (bucket name, object key), and can then process the file. Another approach is to use an EC2 instance to process files stored in S3, for example, for data analytics. In that case, the EC2 instance needs an IAM role, attached through an instance profile, with permission to read from the bucket.

A concrete use case is automatically resizing images uploaded to S3. A user uploads a large image to an S3 bucket. This triggers a Lambda function. The Lambda function downloads the image from S3, resizes it using a library like Pillow (in Python), and uploads the resized image to another S3 bucket or to the original bucket under a different key. Writing back to the source bucket needs care: unless the event notification is filtered by prefix or suffix so the output does not match, each resized upload triggers the function again in a loop. This allows for creating thumbnails or optimized images for different devices.
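The first step of such a handler, reading the bucket and key from the event, can be sketched as below. Object keys in S3 event notifications are URL-encoded (a space arrives as "+"), so they are decoded with unquote_plus. The resize step is left as a comment, since it would need Pillow and boto3.

```python
# Sketch: extract bucket/key pairs from an S3 event in a Lambda handler.
# Keys arrive URL-encoded (spaces become '+'), so they must be decoded.
from urllib.parse import unquote_plus


def objects_from_event(event: dict) -> list:
    return [
        (record["s3"]["bucket"]["name"], unquote_plus(record["s3"]["object"]["key"]))
        for record in event.get("Records", [])
    ]


def lambda_handler(event, context):
    objects = objects_from_event(event)
    for bucket, key in objects:
        # A real handler would download, resize (e.g. with Pillow), and upload
        # here, writing to a separate bucket or prefix to avoid re-triggering.
        print(f"would process s3://{bucket}/{key}")
    return {"processed": len(objects)}
```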

14. How does S3 handle large file uploads? What strategies can you use to improve the upload speed and reliability?

S3 handles large file uploads using a feature called Multipart Upload. This allows you to split a large file into smaller parts, which are then uploaded independently and in parallel. After all parts are uploaded, S3 reassembles them into a single object. This approach significantly improves upload speed, especially over unreliable networks, as individual parts can be retried if they fail, without restarting the entire upload.

To further improve upload speed and reliability, consider these strategies:

- Increase Parallelism: Upload multiple parts concurrently to utilize available bandwidth.

- Optimize Part Size: Choose a suitable part size (e.g., 8 MB to 128 MB) based on network conditions. Each part except the last must be at least 5 MiB, and an upload can have at most 10,000 parts. Larger parts reduce the number of requests, while smaller parts make retries cheaper.

- Use S3 Transfer Acceleration: Leverage Amazon's globally distributed edge locations to accelerate uploads.

- Ensure Network Stability: Utilize a stable network connection and retry failed uploads.

- Implement Checksums: Verify data integrity using checksums to ensure data isn't corrupted during transfer.
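Choosing a part size that respects S3's limits (at least 5 MiB per part except the last, at most 10,000 parts per upload) can be sketched as follows. The 8 MiB default is an assumption, and the function names are hypothetical.

```python
# Sketch: pick a multipart part size that respects S3's limits
# (parts >= 5 MiB except the last, at most 10,000 parts per upload).
import math

MIB = 1024 * 1024
MIN_PART = 5 * MIB
MAX_PARTS = 10_000


def choose_part_size(file_size: int, preferred: int = 8 * MIB) -> int:
    part = max(preferred, MIN_PART)
    # If the file would need more than 10,000 parts, grow the part size.
    if math.ceil(file_size / part) > MAX_PARTS:
        part = math.ceil(file_size / MAX_PARTS)
    return part


def plan_parts(file_size: int, part_size: int):
    """(part_number, offset, length) tuples; S3 part numbers start at 1."""
    return [(i + 1, off, min(part_size, file_size - off))
            for i, off in enumerate(range(0, file_size, part_size))]
```

In practice, boto3's managed transfers (upload_file) split and parallelize automatically; boto3.s3.transfer.TransferConfig(multipart_threshold=..., multipart_chunksize=..., max_concurrency=...) tunes the threshold, part size, and concurrency.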

15. Describe a scenario where you would use S3 Select to query data directly from S3 objects without downloading the entire file.

Imagine you have a large CSV file containing website clickstream data stored in S3. You only need to analyze clicks originating from a specific country (e.g., 'USA'). Downloading the entire multi-gigabyte file would be time-consuming and costly. Instead, I would use S3 Select to query the CSV file directly in S3 using a SQL-like SELECT statement. The query would filter the data based on the 'country' column and return only the relevant rows, dramatically reducing data transfer costs and processing time. The statement could be something like SELECT * FROM s3object s WHERE s.country = 'USA'. Note that AWS stopped offering S3 Select to new customers in July 2024; existing customers can keep using it, and Amazon Athena is a common alternative.
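For accounts that still have S3 Select, the request for this example can be sketched as the arguments to boto3's select_object_content, plus a helper that joins the streamed result records. The bucket, key, and country column are assumptions, and the country value is interpolated into SQL only because it is a trusted constant here.

```python
# Sketch: S3 Select request for the clickstream example, plus a helper that
# collects the streamed result. Bucket, key, and column name are assumptions.
def select_request(bucket: str, key: str, country: str) -> dict:
    return {
        "Bucket": bucket,
        "Key": key,
        "ExpressionType": "SQL",
        # country must be a trusted value; it is interpolated directly into SQL.
        "Expression": f"SELECT * FROM s3object s WHERE s.country = '{country}'",
        # FileHeaderInfo "USE" lets the query refer to columns by header name.
        "InputSerialization": {"CSV": {"FileHeaderInfo": "USE"}, "CompressionType": "NONE"},
        "OutputSerialization": {"CSV": {}},
    }


def collect_records(event_stream) -> bytes:
    """Join 'Records' payload chunks; Stats, Progress, and End events are skipped."""
    return b"".join(ev["Records"]["Payload"] for ev in event_stream if "Records" in ev)

# resp = boto3.client("s3").select_object_content(
#     **select_request("example-bucket", "clicks.csv", "USA"))
# rows = collect_records(resp["Payload"])
```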

16. How can you restrict access to S3 buckets based on IP addresses or other network conditions?

You can restrict access to S3 buckets based on IP addresses or other network conditions using Bucket Policies. These policies use JSON documents that define who has access to the bucket and what actions they can perform. To restrict access by IP address, you can use the aws:SourceIp condition. This condition allows you to specify a list of allowed IP addresses or CIDR blocks.

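Here's a simplified example of a bucket policy that denies every request to the bucket and its objects unless it comes from the 203.0.113.0/24 range. It uses Deny with NotIpAddress rather than Allow, because an Allow statement only adds permissions and would not stop principals who already have IAM access from connecting from other addresses:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "DenyRequestsFromOutsideAllowedRange",
      "Effect": "Deny",
      "Principal": "*",
      "Action": "s3:*",
      "Resource": [
        "arn:aws:s3:::your-bucket-name",
        "arn:aws:s3:::your-bucket-name/*"
      ],
      "Condition": {
        "NotIpAddress": {
          "aws:SourceIp": "203.0.113.0/24"
        }
      }
    }
  ]
}
```

Test such a policy carefully before applying it, since it also blocks console and administrative access from any other address.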

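Other network conditions work the same way with different condition keys supported by AWS. For example, aws:SourceVpce and aws:SourceVpc restrict access to requests arriving through a specific VPC endpoint or VPC. Note that aws:SourceIp does not match requests that reach S3 through a VPC endpoint; aws:VpcSourceIp covers those.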

17. Explain the concept of S3 Transfer Acceleration and how it can improve upload and download speeds for geographically dispersed users.

S3 Transfer Acceleration leverages Amazon CloudFront's globally distributed edge locations to speed up data transfers to and from S3. When you enable Transfer Acceleration on an S3 bucket, data is routed to the nearest CloudFront edge location. That edge location then uses optimized network paths to transfer the data to your S3 bucket in the AWS region. This reduces latency and improves transfer speeds, especially for users who are geographically distant from the S3 bucket's region.

In essence, instead of directly uploading to the S3 bucket, users upload to a nearby CloudFront edge, and CloudFront handles the optimized transfer to S3. This optimization includes minimizing network hops and utilizing efficient TCP connections, which leads to faster and more reliable uploads and downloads.
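Clients opt into acceleration by using a separate endpoint, which only works for bucket names without periods. The sketch below derives that endpoint with a simplified name check (lowercase letters, digits, and hyphens, 3 to 63 characters; it does not cover every S3 naming rule). The boto3 calls in the comments show how acceleration is enabled and used.

```python
# Sketch: derive the Transfer Acceleration endpoint for a bucket.
# Simplified name check: lowercase letters, digits, hyphens; 3-63 chars; no periods.
import re

_BUCKET_RE = re.compile(r"^[a-z0-9][a-z0-9-]{1,61}[a-z0-9]$")


def accelerate_endpoint(bucket: str, dualstack: bool = False) -> str:
    if not _BUCKET_RE.match(bucket):
        raise ValueError(f"{bucket!r} cannot be used with Transfer Acceleration")
    host = ("s3-accelerate.dualstack.amazonaws.com" if dualstack
            else "s3-accelerate.amazonaws.com")
    return f"https://{bucket}.{host}"

# Enable on the bucket, then opt in per client:
# s3.put_bucket_accelerate_configuration(
#     Bucket="example-bucket", AccelerateConfiguration={"Status": "Enabled"})
# from botocore.config import Config
# fast = boto3.client("s3", config=Config(s3={"use_accelerate_endpoint": True}))
```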

18. How would you design a disaster recovery strategy for data stored in S3? Consider different failure scenarios and recovery objectives.

A disaster recovery (DR) strategy for S3 should cover both region-wide outages and data corruption or loss. For region outages, cross-region replication (CRR) to a secondary AWS region is the core mechanism. Versioning must be enabled on both buckets for CRR to work, and if your Recovery Point Objective (RPO) is tight, S3 Replication Time Control gives a predictable replication window. For the Recovery Time Objective (RTO), pre-provision the necessary compute resources (such as EC2 instances or Lambda functions) in the secondary region and automate failover, for example with Route 53 health checks and failover routing or Amazon EventBridge (formerly CloudWatch Events) rules, to redirect traffic.

For data corruption or accidental deletion, S3 versioning is essential. It allows reverting to previous object versions. Implement lifecycle policies to archive older versions to cheaper storage tiers (like Glacier) for cost optimization while retaining them for a defined period. Regularly test the DR plan by simulating failures and verifying data recovery in the secondary region. Additionally, enable S3 Object Lock for WORM (Write Once Read Many) protection to prevent accidental or malicious data deletion or modification. This will help to ensure data integrity during disaster scenarios.

19. Explain how to use S3 inventory to audit and report on the objects stored in your buckets.

S3 Inventory provides a scheduled (daily or weekly) listing of your objects and their metadata. You can configure it to generate a CSV, ORC, or Parquet file containing the object metadata, which is then stored in a specified S3 bucket. To audit and report, you would:

- Enable S3 Inventory: Configure an inventory for your bucket, specifying the destination bucket, file format, and frequency.

- Process the Inventory Data: Use services like Athena, Redshift, or even simple scripts to query and analyze the inventory data. For example, you could use Athena to run SQL queries to find objects older than a certain date, or those with specific encryption settings. This allows you to create reports on object size, storage class, encryption status, and other metadata, helping ensure compliance and identify potential cost optimization opportunities.
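The "Enable S3 Inventory" step can be sketched as the configuration boto3's put_bucket_inventory_configuration accepts. The destination bucket, prefix, ID, and chosen optional fields are placeholder assumptions.

```python
# Sketch: S3 Inventory configuration for put_bucket_inventory_configuration.
# Bucket names, prefix, ID, and field choices are placeholder assumptions.
def inventory_config(dest_bucket: str, inventory_id: str = "daily-audit") -> dict:
    return {
        "Id": inventory_id,
        "IsEnabled": True,
        "IncludedObjectVersions": "Current",  # or "All"
        "Schedule": {"Frequency": "Daily"},    # or "Weekly"
        "Destination": {
            "S3BucketDestination": {
                "Bucket": f"arn:aws:s3:::{dest_bucket}",
                "Format": "Parquet",           # "CSV", "ORC", or "Parquet"
                "Prefix": "inventory",
            }
        },
        # Fields useful for compliance and cost audits.
        "OptionalFields": ["Size", "LastModifiedDate", "StorageClass", "EncryptionStatus"],
    }

# cfg = inventory_config("example-inventory-bucket")
# boto3.client("s3").put_bucket_inventory_configuration(
#     Bucket="example-source-bucket", Id=cfg["Id"], InventoryConfiguration=cfg)
```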

20. How would you implement a system to automatically detect and respond to security threats in S3, such as unauthorized access or data breaches?

To automatically detect and respond to S3 security threats, I'd combine several AWS services. CloudTrail records S3 API calls: management events (such as bucket policy changes) are logged by default, while object-level data events (GetObject, PutObject, DeleteObject) must be enabled explicitly. Sending the trail to CloudWatch Logs lets you define metric filters and alarms for suspicious activity, such as access from unknown IP addresses or regions or unusually large downloads, and Amazon GuardDuty S3 Protection analyzes the same activity for anomalies automatically. AWS Security Hub then aggregates findings from GuardDuty, Macie, and other services in one place. Furthermore, S3 Event Notifications or EventBridge rules can trigger Lambda functions on specific events (e.g., object creation, deletion). These functions can evaluate the event and take automated remediation steps, such as revoking permissions, quarantining data, or alerting security personnel.

To strengthen security further, I'd use IAM policies to enforce least privilege and bucket policies to control access based on source IP or VPC. Regularly scanning buckets with Amazon Macie helps identify sensitive data and potential compliance violations. When a threat is detected, responses could include sending an SNS notification, tightening the bucket policy (for example, denying the offending IP address), blocking the IP with AWS WAF if the content is served through CloudFront (WAF cannot be attached to a bucket directly), or starting an AWS Step Functions workflow for a more complex, multi-step remediation.
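A remediation Lambda's first check can be sketched as a function that flags sensitive S3 calls from outside an expected network. The field names (eventSource, eventName, sourceIPAddress) follow the CloudTrail record format, which also appears in the detail field of EventBridge "AWS API Call via CloudTrail" events. The allowed range and the event list are hypothetical, and treating calls made by AWS services (which show a service name instead of an IP) as benign is a design assumption.

```python
# Sketch: flag S3 API calls from outside expected networks.
# Allowed range and sensitive-event list are hypothetical choices.
import ipaddress

ALLOWED_NETWORKS = [ipaddress.ip_network("203.0.113.0/24")]
SENSITIVE_EVENTS = {"GetObject", "DeleteObject", "PutBucketPolicy", "DeleteBucketPolicy"}


def is_suspicious(record: dict) -> bool:
    if record.get("eventSource") != "s3.amazonaws.com":
        return False
    if record.get("eventName") not in SENSITIVE_EVENTS:
        return False
    try:
        ip = ipaddress.ip_address(record.get("sourceIPAddress", ""))
    except ValueError:
        # Calls made by AWS services show a service name here, not an IP.
        return False
    # Mismatched IP versions simply do not match, so IPv6 callers are flagged.
    return not any(ip in net for net in ALLOWED_NETWORKS)
```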

21. What is the purpose of Requester Pays buckets, and how do they affect billing and access control?

Requester Pays buckets (a feature of AWS S3, with an equivalent in Google Cloud Storage) let the bucket owner specify that the requester, not the owner, pays for requests and data transfer; the owner still pays for storage. This is useful when the owner wants to share large datasets widely without absorbing the cost of that access. Anonymous access is not supported, because every request must come from an authenticated identity that can be billed. To access a Requester Pays bucket, the requester must explicitly acknowledge the charges by including the x-amz-request-payer header (or the equivalent SDK or CLI parameter) in each request.

This affects billing because request and transfer costs shift from the bucket owner to the requester. Access control is affected as well: the requester needs permission to read the data, granted through bucket policies or IAM policies, and must also signal agreement to pay on each request. If the requester doesn't include that acknowledgment, S3 rejects the request with 403 Access Denied.
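Both sides of Requester Pays can be sketched as boto3 arguments: the owner enables it with put_bucket_request_payment, and each requester call carries RequestPayer="requester", which boto3 sends as the x-amz-request-payer header. The bucket and key names are placeholders.

```python
# Sketch: owner-side and requester-side settings for Requester Pays.
# Bucket and key names are placeholder assumptions.

# Owner: arguments for s3.put_bucket_request_payment(...)
enable_requester_pays = {
    "Bucket": "example-shared-dataset",
    "RequestPaymentConfiguration": {"Payer": "Requester"},
}


def requester_get_args(bucket: str, key: str) -> dict:
    # Requester: every call must acknowledge the charges, or S3 returns 403.
    return {"Bucket": bucket, "Key": key, "RequestPayer": "requester"}

# CLI equivalent for a download:
# aws s3api get-object --bucket example-shared-dataset --key data.csv \
#     --request-payer requester data.csv
```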

22. How can you use S3 Batch Operations to perform actions on multiple objects in a bucket efficiently?

S3 Batch Operations allows you to perform actions on multiple S3 objects at scale. You create a manifest file (CSV or S3 inventory) listing the objects, specify the operation (e.g., copy, delete, initiate Glacier restore, invoke a Lambda function), and S3 Batch Operations executes the action in parallel across the objects. This is more efficient than iterating through objects and performing actions individually, as it leverages S3's infrastructure for parallel processing and retry mechanisms.

Key benefits include: reduced operational overhead compared to custom scripts, built-in progress tracking and reporting, and simplified error handling via completion reports. Example actions include:

- Copying objects to a different storage class.

- Adding or modifying object tags.

- Invoking an AWS Lambda function for custom processing.
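A CSV manifest for a Batch Operations job is one bucket,key row per object (optionally with a version ID), with keys URL-encoded. A minimal sketch of generating one; uploading it to S3 and referencing it when creating the job are left out.

```python
# Sketch: write a CSV manifest for an S3 Batch Operations job.
# Each row is bucket,key (a versionId column is optional); keys are URL-encoded.
import csv
import io
from urllib.parse import quote


def build_manifest(bucket: str, keys) -> str:
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    for key in keys:
        writer.writerow([bucket, quote(key)])  # "my cat.jpg" -> "my%20cat.jpg"
    return buf.getvalue()
```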

23. Explain how you would configure S3 Static Website Hosting. What are the considerations for custom domains and SSL certificates?

To configure S3 static website hosting, you first enable the static website hosting feature on your S3 bucket, specifying an index document (like index.html) and, optionally, an error document. Then, upload your website files (HTML, CSS, JavaScript, images) to the bucket. So that users can view the site, turn off the bucket's Block Public Access settings and add a bucket policy that allows public read access (s3:GetObject). Finally, use the bucket's website endpoint (for example, http://bucket-name.s3-website-region.amazonaws.com; some regions use a dot instead of a dash before the region) to access your website.

For custom domains, you can use Route 53 to point your domain at the bucket's website endpoint with an alias record; in that setup, the bucket name must match the domain name (for example, www.example.com). S3 website endpoints do not support HTTPS at all, so to serve a custom domain over HTTPS you typically put CloudFront in front of S3. CloudFront lets you associate an SSL/TLS certificate (issued by AWS Certificate Manager or imported from another provider) with your custom domain; ACM certificates used with CloudFront must be in the us-east-1 (N. Virginia) region. CloudFront then retrieves the content from S3 and serves it over HTTPS, with the added benefit of edge caching.
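The two bucket-side pieces, the website configuration and the public-read policy, can be sketched as the payloads for put_bucket_website and put_bucket_policy. The bucket name and document names are placeholders.

```python
# Sketch: website configuration and a public-read bucket policy.
# Bucket name and document names are placeholder assumptions.
import json


def website_config(index: str = "index.html", error: str = "error.html") -> dict:
    # Shape used by s3.put_bucket_website(Bucket=..., WebsiteConfiguration=...).
    return {"IndexDocument": {"Suffix": index}, "ErrorDocument": {"Key": error}}


def public_read_policy(bucket: str) -> str:
    # Used with s3.put_bucket_policy(Bucket=..., Policy=...); the bucket's Block
    # Public Access settings must allow public policies for this to apply.
    return json.dumps({
        "Version": "2012-10-17",
        "Statement": [{
            "Sid": "PublicReadGetObject",
            "Effect": "Allow",
            "Principal": "*",
            "Action": "s3:GetObject",
            "Resource": f"arn:aws:s3:::{bucket}/*",
        }],
    })
```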

24. How does S3 prevent data loss and ensure high availability? What are the underlying mechanisms?

Amazon S3 achieves data durability and high availability through several underlying mechanisms. Primarily, it stores data redundantly across multiple devices and, for most storage classes, across at least three Availability Zones within an AWS Region. A write is acknowledged only after the data has been stored redundantly, so it stays available even if one or more devices, or an entire facility, fail. S3 also regularly verifies data integrity with checksums and automatically repairs lost redundancy.

Storage classes differ in how widely data is spread. S3 Standard, Standard-IA, Intelligent-Tiering, and the Glacier classes store data across at least three Availability Zones, while S3 One Zone-IA stores data in a single Availability Zone, which lowers cost but means data can be lost if that zone is destroyed. S3 is designed for 99.999999999% (11 nines) durability, and S3 Standard is designed for 99.99% availability. Versioning adds protection against accidental deletes and overwrites by keeping multiple versions of each object. Together with S3's highly distributed architecture, these mechanisms underpin its durability and availability targets.
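To make the 99.999999999% design target concrete, the expected annual loss for N stored objects can be worked out directly; with N = 10 million this reproduces the example AWS gives in its S3 FAQ:

$$
\mathbb{E}[\text{objects lost per year}] = N \times (1 - 0.99999999999) = 10^{7} \times 10^{-11} = 10^{-4}
$$

That is, on average one lost object every $10^{4}$ (10,000) years. This is a fleet-wide design target, not a guarantee, and it does not cover accidental deletes by users, which versioning and Object Lock address.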

25. How do you manage and control costs associated with storing and accessing data in S3? What are some best practices for cost optimization?

Managing S3 costs involves several strategies. Object lifecycle management automatically transitions data to cheaper storage classes (like Glacier or Deep Archive) based on age. S3 Intelligent-Tiering automatically moves data between frequent and infrequent access tiers based on access patterns. You can also delete obsolete data using lifecycle policies. Monitoring storage usage with S3 Storage Lens and AWS Cost Explorer is crucial for identifying cost drivers. Compressing data before storing it in S3 reduces storage footprint and transfer costs. Consider using S3 Batch Operations for large-scale management tasks.

Best practices include:

- Right Sizing: Choose the appropriate storage class based on access frequency.

- Data Compression: Compress data before uploading to S3.

- Lifecycle Policies: Implement lifecycle rules to move data to cheaper tiers or delete it.

- Monitoring: Regularly monitor storage usage and costs.

- S3 Analytics: Analyze access patterns to optimize storage tiering.

- Tagging: Use cost allocation tags on buckets to categorize and track storage costs by department, project, etc.

- Evaluate S3 Transfer Acceleration carefully: it speeds up long-distance transfers but adds a per-GB fee, so enable it only where the speedup justifies the cost.

26. Explain how you can use S3 Object Lock to prevent objects from being deleted or overwritten for a specified retention period.

S3 Object Lock prevents object versions from being deleted or overwritten during a specified retention period or until a legal hold is removed. You enable Object Lock at the bucket level, either when you create the bucket or later on an existing bucket; Object Lock requires versioning. Once enabled, Object Lock cannot be disabled, and versioning cannot be suspended.

There are two ways to protect objects: retention periods and legal holds. Retention periods specify a fixed time during which an object version cannot be deleted or overwritten. You can set a retention period in governance mode (where users with the s3:BypassGovernanceRetention permission can bypass the lock) or compliance mode (where no one, including the root user, can bypass the lock). Legal holds, on the other hand, are applied when you need to preserve objects for legal reasons, and they remain in effect until explicitly removed. You can apply retention settings or legal holds to individual object versions, or set a default retention period for the whole bucket. Object Lock ensures data immutability by enforcing a write-once-read-many (WORM) model, which is how it enforces data retention policies.
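The three controls described above map to three boto3 calls; the sketch below builds their arguments. The bucket, key, modes, and durations are placeholder assumptions.

```python
# Sketch: Object Lock request arguments (boto3 parameter shapes).
# Bucket, key, modes, and durations are placeholder assumptions.
from datetime import datetime, timedelta, timezone

BUCKET, KEY = "example-records-bucket", "statements/2024-01.pdf"

# Default retention for new object versions: s3.put_object_lock_configuration(...)
default_retention_args = {
    "Bucket": BUCKET,
    "ObjectLockConfiguration": {
        "ObjectLockEnabled": "Enabled",
        "Rule": {"DefaultRetention": {"Mode": "COMPLIANCE", "Days": 365}},
    },
}

# Per-object retention: s3.put_object_retention(...)
retention_args = {
    "Bucket": BUCKET,
    "Key": KEY,
    "Retention": {
        "Mode": "GOVERNANCE",
        "RetainUntilDate": datetime.now(timezone.utc) + timedelta(days=90),
    },
}

# Legal hold, independent of any retention period: s3.put_object_legal_hold(...)
legal_hold_args = {"Bucket": BUCKET, "Key": KEY, "LegalHold": {"Status": "ON"}}

# Deleting a GOVERNANCE-locked version needs the s3:BypassGovernanceRetention
# permission plus BypassGovernanceRetention=True on the delete_object call.
```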

Advanced AWS S3 interview questions

1. How can you ensure data integrity when transferring large files to S3?

To ensure data integrity when transferring large files to S3, several mechanisms can be employed. The primary one is checksums. Before uploading, calculate a checksum of the original file (e.g., MD5, SHA-256, or CRC32C) and send it with the request, either as the Content-MD5 header or as one of S3's additional checksums; S3 recomputes the checksum on receipt and rejects the upload if it doesn't match. After the upload, you can also compare your local value with the checksum S3 stores and returns for the object instead of downloading the file again.

Additionally, using multipart upload is beneficial for large files. Multipart upload divides the file into smaller parts, uploading each part independently. This allows for parallel uploads and retries of failed parts, increasing resilience. S3 also calculates checksums for each part and the final assembled object, further safeguarding data integrity. Utilizing S3's versioning can also help revert to a previous good state if corruption is detected.
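Two checksums you can compute locally are sketched below: the base64 MD5 for the Content-MD5 header, and the ETag S3 reports for multipart uploads. The multipart ETag formula (MD5 of the concatenated part MD5s, plus "-" and the part count) is widely observed for unencrypted and SSE-S3 objects but is not a documented contract, and it does not hold for SSE-KMS or SSE-C objects. For SHA-256 or CRC checksums, boto3's put_object also accepts ChecksumAlgorithm="SHA256".

```python
# Sketch: checksums computable locally before or after an upload.
import base64
import hashlib


def content_md5(data: bytes) -> str:
    """Base64 MD5 for the Content-MD5 header; S3 rejects the upload on mismatch."""
    return base64.b64encode(hashlib.md5(data).digest()).decode("ascii")


def multipart_etag(data: bytes, part_size: int) -> str:
    """Commonly observed multipart ETag (unencrypted/SSE-S3 objects only):
    MD5 of the concatenated binary part MD5s, then '-<number of parts>'."""
    digests = [hashlib.md5(data[i:i + part_size]).digest()
               for i in range(0, len(data), part_size)]
    return hashlib.md5(b"".join(digests)).hexdigest() + f"-{len(digests)}"
```

The part size must match the one used for the upload, otherwise the computed ETag will differ even for identical data.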

2. Explain the use-cases for S3 Object Lock and how it prevents object deletion or modification.

S3 Object Lock is used to store objects using a Write Once Read Many (WORM) model, primarily for data compliance and regulatory requirements. Common use cases include archiving financial records, storing legal documents, and retaining healthcare data. It helps organizations meet record-retention rules such as SEC Rule 17a-4(f), FINRA Rule 4511, and CFTC Rule 1.31, as well as internal retention policies.

Object Lock prevents object deletion or modification through two retention modes: Governance mode and Compliance mode. In Governance mode, users with the s3:BypassGovernanceRetention permission can override the retention settings. Compliance mode is stricter: once an object version is locked in Compliance mode, no user, including the root user, can delete it or shorten its retention period until that period expires, although the period can still be extended. Retention can be specified either as a retention period (a duration, typically in a bucket's default retention) or as an explicit retain-until date on an object version.
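The interplay of the two modes and legal holds (covered in the earlier Object Lock answer) can be summarized as a small decision model for permanently deleting one specific object version. This is an illustrative model of the rules, not an S3 API; note also that a DELETE without a version ID still just adds a delete marker, even on a locked object.

```python
# Sketch: simplified model of whether a specific locked object version can be
# permanently deleted. Illustrative only; it mirrors the rules, not an S3 API.
from datetime import datetime
from typing import Optional


def can_delete_version(mode: Optional[str], retain_until: Optional[datetime],
                       legal_hold: bool, has_bypass_permission: bool,
                       now: datetime) -> bool:
    if legal_hold:
        return False  # a legal hold blocks deletion until it is removed
    if mode is None or retain_until is None or retain_until <= now:
        return True   # no active retention period
    if mode == "COMPLIANCE":
        return False  # nobody can bypass compliance mode
    # GOVERNANCE: allowed only with s3:BypassGovernanceRetention (plus the header).
    return mode == "GOVERNANCE" and has_bypass_permission
```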

3. How does S3 Select improve query performance on large datasets stored in S3?

S3 Select dramatically improves query performance on large datasets in S3 by allowing you to retrieve only the specific data needed by a query, instead of retrieving the entire object. It works by pushing the filtering and projection operations down to S3, so only the relevant subset of data is transferred.

This is achieved through these key mechanisms:

- Data Filtering: S3 Select uses SQL expressions to filter rows based on specified criteria, reducing the amount of data returned.

- Column Projection: S3 Select allows you to select specific columns from the object, further minimizing the data transfer.

- Reduced Data Transfer: By transferring less data, S3 Select reduces network latency and processing time, resulting in faster queries and lower costs. It works on CSV, JSON, and Parquet objects; CSV and JSON objects can be GZIP- or BZIP2-compressed, while Parquet objects can use columnar compression (GZIP or Snappy).

4. Describe the different S3 storage classes and their cost implications for various data access patterns.

S3 offers several storage classes optimized for different access patterns and cost considerations:

- S3 Standard: For frequently accessed data, with high availability and performance and no retrieval fees, but the highest storage price.

- S3 Intelligent-Tiering: Automatically moves objects between frequent, infrequent, and archive instant access tiers based on usage, for a small per-object monitoring fee; optional archive tiers with slower retrieval can also be enabled.

- S3 Standard-IA (Infrequent Access): For less frequently accessed data, with lower storage costs but per-GB retrieval fees and a 30-day minimum storage duration.

- S3 One Zone-IA: Similar to Standard-IA but stores data in a single Availability Zone, further reducing storage costs at the price of lower availability and resilience.

- S3 Glacier Instant Retrieval, Glacier Flexible Retrieval, and Glacier Deep Archive: For archival data, with progressively lower storage costs but higher retrieval costs and, for Flexible Retrieval and Deep Archive, retrieval times ranging from minutes to hours.

Choosing the right class depends on how often you access your data and your tolerance for retrieval latency and cost: rarely accessed data favors the Glacier classes, frequently accessed data belongs in S3 Standard, and unpredictable patterns suit Intelligent-Tiering. There are also some related features:

- S3 Lifecycle policies: Automate the movement of objects between storage classes based on age or other criteria.

- S3 Storage Lens: Provides organization-wide visibility into object storage usage and activity trends, allowing you to make data-driven decisions about storage optimization.
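The storage-versus-retrieval trade-off can be sketched as a simple monthly cost model. The prices below are hypothetical placeholders, not AWS list prices; substitute current figures for your region. The model also ignores request fees, minimum storage durations, and minimum billable object sizes, all of which matter in practice.

```python
# Sketch: compare monthly storage + retrieval cost across storage classes.
# Prices are HYPOTHETICAL placeholders, not AWS list prices.
from dataclasses import dataclass


@dataclass
class StorageClassPrice:
    name: str
    storage_per_gb_month: float
    retrieval_per_gb: float


def monthly_cost(price: StorageClassPrice, stored_gb: float, retrieved_gb: float) -> float:
    return stored_gb * price.storage_per_gb_month + retrieved_gb * price.retrieval_per_gb


def cheapest(prices, stored_gb: float, retrieved_gb: float) -> str:
    return min(prices, key=lambda p: monthly_cost(p, stored_gb, retrieved_gb)).name


HYPOTHETICAL = [
    StorageClassPrice("STANDARD", 0.020, 0.000),
    StorageClassPrice("STANDARD_IA", 0.010, 0.010),
]
```

With these placeholder prices, 1,000 GB read back 100 GB per month is cheaper in STANDARD_IA, but reading back 2,000 GB per month flips the answer to STANDARD, which is the access-frequency trade-off described above.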

5. What are the benefits of using S3 Event Notifications and how can they trigger Lambda functions?

S3 Event Notifications offer several benefits: near-real-time processing, since events are typically delivered within seconds; decoupling, since S3 can hand work to other services without direct dependencies; and scalability, since they handle large volumes of events efficiently. Because notifications are delivered at least once, consumers should be idempotent. Together, these properties enable event-driven architectures that automate workflows based on object changes in S3.

S3 Event Notifications can trigger Lambda functions by configuring S3 to send notifications (e.g., object creation, deletion) to Lambda. You specify the event types, optional key prefix or suffix filters, and the Lambda function's ARN in the bucket's event notification settings, and the function's resource-based policy must allow s3.amazonaws.com to invoke it. When the specified event occurs (e.g., a new image is uploaded), S3 invokes the function asynchronously, passing event data (bucket name, object key, event type) as a JSON payload. The function then processes the data, such as resizing the image or generating a thumbnail.
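The configuration step can be sketched as the payload for put_bucket_notification_configuration, preceded by the Lambda permission that lets S3 invoke the function. The function ARN, bucket name, prefix, and suffix are placeholders. Note that put_bucket_notification_configuration replaces the bucket's entire notification configuration, so existing entries must be included.

```python
# Sketch: event notification configuration that invokes a Lambda function.
# Function ARN, prefix, and suffix are placeholder assumptions.
def lambda_notification(function_arn: str, prefix: str = "uploads/",
                        suffix: str = ".jpg") -> dict:
    return {
        "LambdaFunctionConfigurations": [{
            "Id": "resize-new-images",
            "LambdaFunctionArn": function_arn,
            "Events": ["s3:ObjectCreated:*"],
            "Filter": {"Key": {"FilterRules": [
                {"Name": "prefix", "Value": prefix},
                {"Name": "suffix", "Value": suffix},
            ]}},
        }]
    }

# S3 must first be allowed to invoke the function:
# boto3.client("lambda").add_permission(
#     FunctionName=arn, StatementId="s3-invoke", Action="lambda:InvokeFunction",
#     Principal="s3.amazonaws.com", SourceArn="arn:aws:s3:::example-bucket")
# boto3.client("s3").put_bucket_notification_configuration(
#     Bucket="example-bucket", NotificationConfiguration=lambda_notification(arn))
```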

6. How can you use S3 Inventory to audit and report on your S3 object metadata?

S3 Inventory provides a flat-file output (CSV, ORC, or Parquet format) listing your objects and their metadata. You configure it to run daily or weekly and store the output in a designated S3 bucket. To audit and report, you can then query this inventory data using services like:

- Athena: Directly query the inventory files using SQL to find objects matching specific criteria (e.g., encryption status, storage class, size).

- S3 Select: Queries a single inventory file in place without loading it into a database; unlike Athena, it works on one object at a time.

- QuickSight: Visualize the inventory data to create dashboards and reports on object metadata trends over time (e.g., object size distribution, storage class usage).
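For small inventories, the CSV files can also be summarized locally, as in the sketch below. CSV inventory files have no header row; the column order comes from the fileSchema field of the inventory's manifest.json, and keys are URL-encoded. The sample schema and rows used in testing are made up; Athena remains the scalable option for large inventories.

```python
# Sketch: summarize one S3 Inventory CSV file locally.
# Column order comes from manifest.json's "fileSchema"; keys are URL-encoded.
import csv
import io
from collections import Counter
from urllib.parse import unquote_plus


def summarize(csv_text: str, file_schema: str):
    columns = [c.strip() for c in file_schema.split(",")]
    bytes_by_class = Counter()
    unencrypted = []
    for row in csv.reader(io.StringIO(csv_text)):
        rec = dict(zip(columns, row))
        bytes_by_class[rec["StorageClass"]] += int(rec["Size"])
        if rec.get("EncryptionStatus") == "NOT-SSE":
            unencrypted.append(unquote_plus(rec["Key"]))
    return bytes_by_class, unencrypted
```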

7. Explain how to optimize S3 performance for read-heavy workloads, considering factors like request rates and key naming conventions.

To optimize S3 performance for read-heavy workloads, consider several factors. First, request rates: S3 scales automatically, and each key prefix supports at least 5,500 GET/HEAD requests per second, so spreading reads across multiple prefixes raises the aggregate limit. Key naming matters less than it used to: since S3's 2018 performance update, randomized or hashed prefixes are no longer needed, and sequential keys such as timestamps are not a problem in themselves; what matters is how many prefixes your traffic is spread across. For geographically dispersed users, S3 Transfer Acceleration routes transfers through CloudFront's globally distributed edge locations. Consider using S3 Select to retrieve only the required data instead of the entire object, reducing latency and cost.

Additionally, you can put a cache such as CloudFront in front of your S3 bucket to serve frequently accessed content directly from edge locations. Choose the storage class to match the access pattern: S3 Standard (or Intelligent-Tiering for unpredictable access) suits read-heavy data, whereas the Glacier classes add retrieval latency and fees. For consistent performance, make sure the application retries with exponential backoff, especially when dealing with temporary network issues or throttling (HTTP 503 Slow Down responses). This prevents failures and improves resilience.
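The retry logic can be sketched as capped exponential backoff with "full jitter". TransientError is a hypothetical stand-in for throttling or network errors (in boto3 you would inspect botocore exceptions), and the sleep function is injectable so the sketch can be exercised without waiting.

```python
# Sketch: retry with capped exponential backoff and full jitter.
# TransientError stands in for throttling (503 Slow Down) or network errors.
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    pass


def with_retries(call: Callable[[], T], max_attempts: int = 5, base: float = 0.1,
                 cap: float = 5.0, sleep: Callable[[float], None] = time.sleep) -> T:
    attempt = 0
    while True:
        try:
            return call()
        except TransientError:
            attempt += 1
            if attempt >= max_attempts:
                raise  # give up after max_attempts total calls
            # Wait a random time in [0, min(cap, base * 2^(attempt - 1))].
            sleep(random.uniform(0, min(cap, base * 2 ** (attempt - 1))))
```

The AWS SDKs already retry throttled requests; in boto3 this is tuned with botocore.config.Config(retries={"max_attempts": 10, "mode": "adaptive"}), so a hand-rolled loop like this is mainly useful around higher-level operations.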

8. What are the advantages and disadvantages of using S3 Transfer Acceleration?

S3 Transfer Acceleration leverages Amazon CloudFront's globally distributed edge locations to speed up transfers of files to and from S3.

Advantages:

- Faster uploads and downloads, especially for geographically dispersed users.

- Improved application performance.

- A simple setup: it is enabled with a single bucket setting, and clients switch to the accelerate endpoint.

Disadvantages:

- Increased cost: you pay a per-GB Transfer Acceleration fee, although AWS charges it only for transfers that are actually accelerated.

- Not always faster: performance depends on location, network conditions, and object size, and transfers within the same AWS region may see little improvement.

- Requires testing: run the S3 Transfer Acceleration Speed Comparison tool before full implementation to confirm the actual benefit and cost-effectiveness.

9. Describe how to use S3 Access Points to manage access control for shared datasets.

S3 Access Points simplify managing data access at scale, especially for shared datasets. Instead of managing a single complex bucket policy, you create multiple access points, each with its own specific policy tailored to a specific application or team. Each access point policy can grant specific permissions, such as read-only or write-only access, to a defined set of users or roles.

For shared datasets, you could create one access point for a data science team needing full access, another for an analytics application requiring read-only access, and yet another for an external auditor. This granular control reduces the risk of unintended data modification or exposure. You can restrict access points to specific VPCs, further enhancing security. Each access point has a unique ARN, making it easy to track and audit access. This approach simplifies access control, improves security, and enables more efficient data sharing.
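Restricting an access point to one VPC is done when it is created; the sketch builds the arguments for the s3control create_access_point call. The account ID, names, and VPC ID are placeholders.

```python
# Sketch: arguments for s3control.create_access_point limiting it to one VPC.
# Account ID, names, and VPC ID are placeholder assumptions.
def vpc_access_point_args(account_id: str, name: str, bucket: str, vpc_id: str) -> dict:
    return {
        "AccountId": account_id,
        "Name": name,
        "Bucket": bucket,
        # Requests through this access point are accepted only from this VPC.
        "VpcConfiguration": {"VpcId": vpc_id},
        "PublicAccessBlockConfiguration": {
            "BlockPublicAcls": True, "IgnorePublicAcls": True,
            "BlockPublicPolicy": True, "RestrictPublicBuckets": True,
        },
    }

# resp = boto3.client("s3control").create_access_point(
#     **vpc_access_point_args("111122223333", "internal-ap", "shared-data", "vpc-0abc"))
# The returned AccessPointArn can be passed as Bucket= to boto3 S3 object calls.
```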

10. How does S3 Object Tagging help in managing and organizing your S3 objects?

S3 Object Tagging allows you to assign key-value pairs (tags) to S3 objects. This helps in several ways:

- Organization: You can categorize objects based on tags, acting as metadata without altering object data itself. Think of it as adding labels to files in a folder.

- Management: Tags let you scope lifecycle rules, replication rules, and IAM or bucket policies to specific tag values. For example, objects tagged lifecycle=archive can be automatically moved to Glacier after a certain period, and access can be controlled at the object level with conditions on these tags. For cost tracking, note that cost allocation tags in AWS Billing apply to buckets rather than individual objects, so object tags won't break down costs in Cost Explorer.
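Both uses can be sketched together: a TagSet for put_object_tagging with S3's limits checked (at most 10 tags per object, keys up to 128 characters, values up to 256), and a lifecycle rule filtered on lifecycle=archive. The 90-day age is an illustrative assumption; GLACIER is the API name for Glacier Flexible Retrieval.

```python
# Sketch: validate a tag set and build a tag-filtered lifecycle rule.
# Limits: at most 10 tags per object; keys <= 128 chars, values <= 256 chars.
def tag_set(tags: dict) -> dict:
    if len(tags) > 10:
        raise ValueError("S3 objects support at most 10 tags")
    for k, v in tags.items():
        if len(k) > 128 or len(v) > 256:
            raise ValueError(f"tag {k!r} exceeds length limits")
    # Shape used by s3.put_object_tagging(Bucket=..., Key=..., Tagging=...)
    return {"TagSet": [{"Key": k, "Value": v} for k, v in tags.items()]}


# Lifecycle rule: objects tagged lifecycle=archive move to Glacier Flexible
# Retrieval ("GLACIER") after 90 days (an illustrative age).
archive_rule = {
    "ID": "archive-tagged-objects",
    "Filter": {"Tag": {"Key": "lifecycle", "Value": "archive"}},
    "Status": "Enabled",
    "Transitions": [{"Days": 90, "StorageClass": "GLACIER"}],
}
```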

11. Explain how to configure cross-region replication for disaster recovery and business continuity.

Cross-region replication is configured to maintain a copy of your data in a geographically separate region, which is crucial for disaster recovery and business continuity. The specific steps depend on the service (e.g., AWS S3, Azure Storage, Google Cloud Storage), but it generally involves enabling replication on the source bucket or storage account and specifying the destination region. Data is then automatically and asynchronously copied to the destination region.

For example, in AWS S3, you'd create a replication rule specifying the source bucket and destination bucket in a different region. In Azure, you'd configure Geo-Redundant Storage (GRS) or Geo-Zone-Redundant Storage (GZRS). Key considerations include network bandwidth between regions, replication latency, and potential costs associated with data transfer and storage in the secondary region. Choose a recovery point objective (RPO) and recovery time objective (RTO) that matches your business requirements when configuring replication.

12. What are the considerations for choosing the right S3 encryption option (SSE-S3, SSE-KMS, SSE-C)?

When choosing an S3 encryption option, consider these factors:

- SSE-S3 (Server-Side Encryption with Amazon S3-Managed Keys): Easiest to implement; AWS manages the keys. Suitable when you want basic encryption and don't need key management control.

- SSE-KMS (Server-Side Encryption with AWS KMS-Managed Keys): Provides more control over encryption keys. You manage keys in KMS, allowing for auditing and key rotation. Good for compliance and when you need key management.

- SSE-C (Server-Side Encryption with Customer-Provided Keys): You manage the encryption keys entirely. S3 only uses the key to encrypt/decrypt; it doesn't store the key. This gives you maximum control but also the most responsibility. Useful when you need to fully control the encryption keys and associated security.
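To make the differences concrete, this sketch returns the extra `put_object` arguments each option needs in boto3. The KMS key alias and bucket name in the usage comment are hypothetical.

```python
def encryption_args(mode, kms_key_id=None, customer_key=None):
    """Extra put_object arguments for each S3 server-side encryption option."""
    if mode == "SSE-S3":
        return {"ServerSideEncryption": "AES256"}
    if mode == "SSE-KMS":
        args = {"ServerSideEncryption": "aws:kms"}
        if kms_key_id:  # omit to use the AWS managed key aws/s3
            args["SSEKMSKeyId"] = kms_key_id
        return args
    if mode == "SSE-C":
        if customer_key is None or len(customer_key) != 32:
            raise ValueError("SSE-C needs a 256-bit (32-byte) key that you store yourself")
        # boto3 base64-encodes the key and adds its MD5 header for you.
        # The same key must be sent again on every get_object/head_object call.
        return {"SSECustomerAlgorithm": "AES256", "SSECustomerKey": customer_key}
    raise ValueError(f"unknown mode: {mode}")


# s3.put_object(Bucket="example-bucket", Key="report.csv", Body=data,
#               **encryption_args("SSE-KMS", kms_key_id="alias/app-data"))
```

The practical difference shows up at read time: SSE-S3 and SSE-KMS objects are decrypted transparently (SSE-KMS additionally requires `kms:Decrypt` permission on the key), whereas SSE-C objects can only be read by supplying the original key again.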

13. How can you use S3 Lifecycle policies to automatically transition objects between different storage classes based on age?

S3 Lifecycle policies allow you to define rules to automatically transition objects between different storage classes (like Standard, Intelligent-Tiering, Standard-IA, One Zone-IA, Glacier, and Deep Archive) based on their age. You configure these rules in the S3 Management Console, AWS CLI, or SDK. For example, you can transition objects to Standard-IA after 30 days, to Glacier after 90 days, and permanently delete them after 365 days.

To set up a lifecycle policy, you specify a filter that selects the objects the rule applies to (for example, a prefix such as `logs/`; object tags and object size are also supported), choose the transition actions (target storage class and age in days), and enable the rule. Once an object meets the age criteria, S3 transitions it to the specified storage class in the background. This helps optimize storage costs by moving infrequently accessed data to cheaper storage tiers.
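The 30/90/365-day example above can be sketched as a boto3 lifecycle configuration, along with a small helper that works out which class an object of a given age should be in under that rule. The `logs/` prefix is a placeholder, and the helper ignores the delay with which S3 actually applies transitions.

```python
def tiered_lifecycle(prefix):
    """LifecycleConfiguration: Standard-IA at 30 days, Glacier at 90, delete at 365."""
    return {
        "Rules": [
            {
                "ID": f"tier-{prefix.strip('/') or 'all'}",
                "Status": "Enabled",
                "Filter": {"Prefix": prefix},
                "Transitions": [
                    {"Days": 30, "StorageClass": "STANDARD_IA"},
                    {"Days": 90, "StorageClass": "GLACIER"},
                ],
                "Expiration": {"Days": 365},
            }
        ]
    }


def expected_class(age_days, rule):
    """Storage class an object of this age should be in under `rule` (None = expired)."""
    if "Expiration" in rule and age_days >= rule["Expiration"]["Days"]:
        return None
    current = "STANDARD"
    for step in sorted(rule["Transitions"], key=lambda t: t["Days"]):
        if age_days >= step["Days"]:
            current = step["StorageClass"]
    return current


# s3.put_bucket_lifecycle_configuration(
#     Bucket="example-bucket", LifecycleConfiguration=tiered_lifecycle("logs/"))
```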

14. Describe the process of implementing versioning on an S3 bucket and how it helps in data recovery.

To enable versioning on an S3 bucket, you can use the AWS Management Console, AWS CLI, or AWS SDKs. Once enabled, every object stored in the bucket receives a unique version ID. When you overwrite an object, the previous version is retained and the new one becomes the current version. A delete request that doesn't specify a version ID doesn't permanently remove the object; instead, S3 adds a delete marker, and the previous versions remain accessible. Versioning is a bucket-level setting: it applies to the whole bucket, and once enabled it can't be turned off, only suspended. Consider the cost implications, because older versions still occupy (and are billed as) storage; lifecycle rules that expire noncurrent versions can keep this in check.

Versioning significantly aids data recovery. If an object is accidentally deleted or overwritten, you can retrieve a previous version. To recover a deleted object, remove the delete marker; the most recent version then becomes current again. To recover an overwritten object, copy the desired previous version over the current one (or delete the newer versions) so that it becomes the current version. This provides a safety net against accidental deletion, application failures, and data corruption, enabling easy rollback to a previous state.
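A sketch of the delete-marker recovery described above, written against a boto3 S3 client. It assumes the key has few enough versions to fit in one `list_object_versions` page; a production version would paginate.

```python
def restore_deleted_object(s3, bucket, key):
    """Undo a simple delete by removing the latest delete marker for `key`.

    `s3` is a boto3 S3 client (or any object with the same two methods).
    Returns the removed marker's version ID, or None if the key wasn't deleted.
    """
    resp = s3.list_object_versions(Bucket=bucket, Prefix=key)
    for marker in resp.get("DeleteMarkers", []):
        # Prefix matching can also return e.g. "report.csv.bak", so compare keys exactly.
        if marker["Key"] == key and marker["IsLatest"]:
            s3.delete_object(Bucket=bucket, Key=key, VersionId=marker["VersionId"])
            return marker["VersionId"]
    return None


# Enabling versioning first (bucket name is hypothetical):
# s3.put_bucket_versioning(Bucket="example-bucket",
#                          VersioningConfiguration={"Status": "Enabled"})
```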

15. What are the best practices for securing S3 buckets and preventing unauthorized access?

Securing S3 buckets involves several best practices. First, implement strong access controls using IAM roles and policies, following the principle of least privilege, so that only authorized users and services can access specific buckets and objects. Use bucket policies to further restrict access based on IP address, AWS account, or other conditions, and keep S3 Block Public Access enabled for any bucket that doesn't need to be public.

Second, enable encryption both at rest (using SSE-S3, SSE-KMS, or SSE-C) and in transit (using HTTPS). Regularly audit your bucket configurations and access using AWS CloudTrail (including data events for object-level API calls) and S3 server access logs to identify and remediate security gaps or unauthorized access attempts. Finally, enable MFA Delete, which requires versioning, to protect against accidental or malicious deletion of object versions.
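One common way to enforce the in-transit half of this is a bucket policy that denies any request not made over HTTPS. The sketch below builds such a policy in Python; the bucket name is a placeholder.

```python
def require_tls_policy(bucket):
    """Bucket policy that denies every request to `bucket` not made over HTTPS."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                # Cover both bucket-level and object-level actions.
                "Resource": [f"arn:aws:s3:::{bucket}", f"arn:aws:s3:::{bucket}/*"],
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        ],
    }


# s3.put_bucket_policy(Bucket="example-bucket",
#                      Policy=json.dumps(require_tls_policy("example-bucket")))
```

`put_bucket_policy` replaces the existing policy, so add this statement to whatever policy the bucket already has rather than overwriting it.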

16. How can you monitor S3 bucket usage and performance using CloudWatch metrics?

You can monitor S3 bucket usage through the storage metrics that S3 sends to CloudWatch automatically, and performance by enabling request metrics for the bucket (or for a subset of objects filtered by prefix or tag). These metrics provide insights into the operational health of your S3 buckets and can be viewed in the CloudWatch console.

Specifically, you can track metrics like:

- `BucketSizeBytes`: The amount of data stored in a bucket (usage).
- `NumberOfObjects`: The total number of objects stored in the bucket (usage).
- `GetRequests`, `PutRequests`, `DeleteRequests`: The number of requests made to the bucket (performance and usage).
- `BytesDownloaded`, `BytesUploaded`: The amount of data transferred in and out of the bucket (performance).
- `4xxErrors`, `5xxErrors`: Error counts, indicating potential problems.
- `FirstByteLatency`, `TotalRequestLatency`: Measures of request latency (performance).

You can create CloudWatch dashboards and alarms based on these metrics to proactively monitor S3 bucket health and performance. Note that storage metrics (BucketSizeBytes, NumberOfObjects) are reported daily, while request metrics are reported in one-minute intervals (after enabling).
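As a sketch, the helpers below produce the boto3 arguments for enabling request metrics on a whole bucket and for an alarm on its `5xxErrors` metric. The filter ID `EntireBucket`, the 5-minute period, and the threshold of 10 errors are assumptions chosen for illustration.

```python
def request_metrics_configuration(filter_id="EntireBucket"):
    """MetricsConfiguration for put_bucket_metrics_configuration; no Filter = whole bucket."""
    return {"Id": filter_id}


def error_alarm(bucket, filter_id="EntireBucket", threshold=10.0):
    """Keyword arguments for cloudwatch.put_metric_alarm on the bucket's 5xxErrors metric."""
    return {
        "AlarmName": f"{bucket}-5xx-errors",
        "Namespace": "AWS/S3",
        "MetricName": "5xxErrors",
        # Request metrics are published per bucket and per metrics-configuration filter.
        "Dimensions": [
            {"Name": "BucketName", "Value": bucket},
            {"Name": "FilterId", "Value": filter_id},
        ],
        "Statistic": "Sum",
        "Period": 300,
        "EvaluationPeriods": 1,
        "Threshold": threshold,
        "ComparisonOperator": "GreaterThanThreshold",
        "TreatMissingData": "notBreaching",
    }


# s3.put_bucket_metrics_configuration(
#     Bucket="logs-bucket", Id="EntireBucket",
#     MetricsConfiguration=request_metrics_configuration())
# cloudwatch.put_metric_alarm(**error_alarm("logs-bucket"))
```

The alarm has no `AlarmActions`; in practice you would add an SNS topic ARN so someone is actually notified.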

17. Explain how to implement a serverless image resizing solution using S3 and Lambda.

To implement a serverless image resizing solution using S3 and Lambda, trigger a Lambda function whenever a new image is uploaded to an S3 bucket. The function downloads the image from S3, resizes it using a library like Pillow (Python) or ImageMagick, and uploads the resized image to another S3 bucket or to the same bucket under a different prefix. If you write back to the same bucket, scope the event trigger to the input prefix so the function's own output doesn't trigger it again in an endless loop. The function's execution role needs IAM permissions to read from and write to the buckets involved.

- S3 Event Trigger: Configure an S3 event notification to trigger the Lambda function on `ObjectCreated` events.
- Lambda Function: The Lambda function will:

Download the image from S3 using the bucket name and object key from the event.

Resize the image using a suitable library. For example, in Python:

```python
from PIL import Image


def resize_image(image_path, output_path, size):
    """Resize the image at image_path to size, a (width, height) tuple, and save it."""
    with Image.open(image_path) as image:
        resized = image.resize(size)  # resize() does not preserve the aspect ratio
        resized.save(output_path)
```

Upload the resized image back to S3.
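Putting the steps together, here is a sketch of a complete handler. The `resized/` output prefix and the 256×256 bounding box are hypothetical choices, and because it writes back to the same bucket, the trigger should be limited to the upload prefix; the prefix check in the code is only a second line of defense.

```python
import os
import urllib.parse

# Hypothetical settings for this sketch: where resized images go and their maximum size.
OUTPUT_PREFIX = "resized/"
TARGET_SIZE = (256, 256)


def records_from_event(event):
    """Yield (bucket, key) pairs from an S3 event; object keys arrive URL-encoded."""
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        yield bucket, key


def output_key(key, prefix=OUTPUT_PREFIX):
    """Place the resized copy under `prefix`, keeping the original file name."""
    return prefix + os.path.basename(key)


def lambda_handler(event, context):
    # Imported here so the helpers above can be used (and tested) without boto3 or Pillow.
    import boto3
    from PIL import Image

    s3 = boto3.client("s3")
    processed = 0
    for bucket, key in records_from_event(event):
        if key.startswith(OUTPUT_PREFIX):
            continue  # never resize our own output (guards against a trigger loop)
        name = os.path.basename(key)
        src, dst = f"/tmp/{name}", f"/tmp/resized-{name}"  # /tmp is Lambda's writable scratch space
        s3.download_file(bucket, key, src)
        with Image.open(src) as image:
            fmt = image.format  # keep the original format (JPEG, PNG, ...)
            image.thumbnail(TARGET_SIZE)  # shrinks in place, preserving the aspect ratio
            image.save(dst, format=fmt)
        s3.upload_file(dst, bucket, output_key(key))
        processed += 1
    return {"processed": processed}
```

The event parsing is kept separate from `lambda_handler` so it can be tested locally. Creating the boto3 client at module level instead would let warm invocations reuse it.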

18. How do you handle large-scale data ingestion into S3 from various sources?

For large-scale data ingestion into S3, I'd leverage a combination of strategies depending on the source and volume of data. For streaming data, I'd consider using AWS Kinesis Data Firehose to directly ingest into S3, handling buffering and compression automatically. For batch data, I'd opt for AWS Data Pipeline, AWS Glue, or Apache Spark on EMR to orchestrate the transfer, transformation, and loading.

For sources that are on-premises or outside AWS, I might use AWS Storage Gateway or AWS Direct Connect for faster and more secure data transfer. Optimizing for cost and performance also involves choosing the right S3 storage class (Standard, Intelligent-Tiering, Glacier, etc.), implementing partitioning and compression (e.g., Parquet or ORC), and ensuring proper IAM roles for secure access. Monitoring would involve using CloudWatch metrics for S3 and the ingestion services.
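For the streaming path, producers usually write to Firehose in batches. This sketch shows one way to do that with boto3's `put_record_batch`, which accepts at most 500 records per call; the stream name is a placeholder, and encoding records as newline-delimited JSON is an assumption that keeps the objects Firehose writes to S3 easy to query.

```python
import json

MAX_RECORDS_PER_CALL = 500  # PutRecordBatch limit on records per request


def to_firehose_records(events):
    """Encode dicts as newline-delimited JSON records."""
    return [{"Data": (json.dumps(e) + "\n").encode("utf-8")} for e in events]


def batches(records, size=MAX_RECORDS_PER_CALL):
    """Split records into chunks of at most `size`."""
    return [records[i:i + size] for i in range(0, len(records), size)]


def send(events, stream_name):
    """Send events to a Firehose delivery stream; returns how many records failed."""
    import boto3  # lazy import so the helpers above work without boto3 installed

    firehose = boto3.client("firehose")
    failed = 0
    for chunk in batches(to_firehose_records(events)):
        resp = firehose.put_record_batch(DeliveryStreamName=stream_name, Records=chunk)
        failed += resp["FailedPutCount"]  # real code should retry the failed entries
    return failed
```

Each call is also capped at 4 MiB in total, so very large records need smaller batches than the 500-record limit alone suggests.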

19. Describe the architecture of a data lake on S3, including data ingestion, storage, processing, and analysis components.

A data lake on S3 uses S3 as the central storage repository. Data ingestion can be achieved through services like AWS Glue, Kinesis, or Lambda functions, which load data from various sources (databases, streaming data, logs) in its raw format. A data lake typically follows a layered architecture: a raw layer (unprocessed data), a curated layer (transformed and cleaned data), and an application-specific layer (data prepared for specific analytical needs).

Data processing is typically handled by services like AWS Glue (for ETL), EMR (for big data processing using Spark, Hadoop), or Athena (for serverless SQL queries). Analysis can be performed using services such as Athena for ad-hoc queries, QuickSight for visualization, or SageMaker for machine learning. Data governance and security are enforced through IAM roles, S3 bucket policies, and AWS Lake Formation, ensuring controlled access and compliance.
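A layered lake is largely a naming convention on S3 keys. This sketch builds Hive-style partitioned keys (`year=/month=/day=`) under hypothetical `raw`, `curated`, and `application` prefixes; that layout lets Glue crawlers and Athena recognize partitions, so queries that filter on date scan less data.

```python
from datetime import date

LAYERS = ("raw", "curated", "application")  # hypothetical layer prefixes


def lake_key(layer, dataset, day, filename):
    """Build a Hive-style partitioned key such as curated/orders/year=2024/month=05/day=01/x."""
    if layer not in LAYERS:
        raise ValueError(f"unknown layer {layer!r}")
    return (f"{layer}/{dataset}/year={day.year}/month={day.month:02d}/"
            f"day={day.day:02d}/{filename}")


# Usage: the ETL job reads raw/ keys and writes Parquet output to curated/ keys.
# lake_key("curated", "orders", date(2024, 5, 1), "part-0.parquet")
```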

20. What is the purpose of S3 Glacier and S3 Glacier Deep Archive, and when would you use them?

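S3 Glacier and S3 Glacier Deep Archive are low-cost storage classes designed for archiving data that is infrequently accessed. S3 Glacier (now called S3 Glacier Flexible Retrieval) costs less to store than S3 Standard, but objects must be restored before they can be read, which takes from minutes (Expedited) to hours (3 to 5 hours for Standard, 5 to 12 hours for Bulk retrievals). S3 Glacier Deep Archive offers the lowest storage cost but the longest retrieval times: within 12 hours for Standard retrievals and within 48 hours for Bulk.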

You would use S3 Glacier when you need to retain data for compliance, regulatory reasons, or long-term backups, but you don't need immediate access. For example, archiving old logs, financial records, or media assets. S3 Glacier Deep Archive is suitable for data that is rarely or never accessed but still needs to be preserved, such as long-term digital preservation, or data that must be retained for many years due to strict regulatory requirements.
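Objects in Glacier Flexible Retrieval (storage class `GLACIER`) or Deep Archive must be restored before they can be read. This sketch builds the `RestoreRequest` for boto3's `restore_object` and rejects the tier combination S3 doesn't offer (Expedited retrievals from Deep Archive); the bucket and key in the usage comment are hypothetical.

```python
VALID_TIERS = {
    "GLACIER": {"Expedited", "Standard", "Bulk"},
    "DEEP_ARCHIVE": {"Standard", "Bulk"},  # no Expedited retrievals for Deep Archive
}


def restore_request(storage_class, days=7, tier="Standard"):
    """RestoreRequest for restore_object; `days` is how long the temporary copy stays readable."""
    if tier not in VALID_TIERS[storage_class]:
        raise ValueError(f"{tier} retrieval is not available for {storage_class}")
    return {"Days": days, "GlacierJobParameters": {"Tier": tier}}


# s3.restore_object(Bucket="archive-bucket", Key="2019/ledger.csv",
#                   RestoreRequest=restore_request("DEEP_ARCHIVE", days=3, tier="Bulk"))
```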

21. How can you use S3 to host a static website?

To host a static website on S3, you first upload your website files (HTML, CSS, JavaScript, images, etc.) to an S3 bucket. Then, enable static website hosting for the bucket, configuring an index document (usually index.html) and optionally an error document. Finally, allow public read access to the objects: turn off the S3 Block Public Access settings that block public bucket policies (they are on by default for new buckets), then add a bucket policy that grants public read access.

Specifically, in the S3 console, go to Properties -> Static website hosting, and enable it. Then, edit the Bucket Policy under Permissions -> Bucket Policy to allow s3:GetObject for everyone (*). For example, a bucket policy might look like this:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "PublicReadGetObject",
      "Effect": "Allow",
      "Principal": "*",
      "Action": "s3:GetObject",
      "Resource": "arn:aws:s3:::your-bucket-name/*"
    }
  ]
}
```

Remember to replace your-bucket-name with your actual bucket name.
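The same setup can be scripted. The sketch below builds the website configuration and the public-read policy for boto3; the calls are shown as comments, and `error.html` is an assumed error document name.

```python
import json


def website_configuration(index="index.html", error="error.html"):
    """WebsiteConfiguration for boto3's put_bucket_website."""
    return {"IndexDocument": {"Suffix": index}, "ErrorDocument": {"Key": error}}


def public_read_policy(bucket):
    """The public-read bucket policy for `bucket`, as a JSON string."""
    return json.dumps({
        "Version": "2012-10-17",
        "Statement": [{
            "Sid": "PublicReadGetObject",
            "Effect": "Allow",
            "Principal": "*",
            "Action": "s3:GetObject",
            "Resource": f"arn:aws:s3:::{bucket}/*",
        }],
    })


# s3.put_public_access_block(Bucket="my-site", PublicAccessBlockConfiguration={
#     "BlockPublicAcls": True, "IgnorePublicAcls": True,
#     "BlockPublicPolicy": False, "RestrictPublicBuckets": False})
# s3.put_bucket_website(Bucket="my-site", WebsiteConfiguration=website_configuration())
# s3.put_bucket_policy(Bucket="my-site", Policy=public_read_policy("my-site"))
```

Only the two policy-related Block Public Access settings are relaxed here; ACL-based public access stays blocked, since the bucket policy alone grants read access.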

22. Explain how to integrate S3 with other AWS services like EC2, RDS, and EMR.

S3 integrates with various AWS services to provide scalable storage and data management.

- EC2: Instances can access S3 buckets directly to store application data, configuration files, or backups. IAM roles, attached through an instance profile, grant EC2 instances permission to interact with S3, so no access keys need to be stored on the instance.
- RDS: RDS stores automated backups and snapshots in S3 on your behalf, which makes them durable for disaster recovery. You can also export snapshot data to your own S3 bucket in Apache Parquet format for analysis; exported data is meant for analytics and can't be restored as a database.
- EMR: Clusters use S3 as a primary data source and destination for processing large datasets. Input data for EMR jobs is often stored in S3, and the results are written back to S3 for further analysis or consumption by other applications.

AWS Glue is frequently used to catalog S3 data for use in Athena and other services.
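For the RDS case, a snapshot export can be started with boto3's `start_export_task`. This sketch only assembles the arguments; every ARN, the task ID, and the `rds-exports` prefix are placeholders.

```python
def snapshot_export_args(task_id, snapshot_arn, bucket, role_arn, kms_key_id,
                         prefix="rds-exports"):
    """Keyword arguments for rds.start_export_task (writes snapshot data to S3 as Parquet)."""
    return {
        "ExportTaskIdentifier": task_id,
        "SourceArn": snapshot_arn,
        "S3BucketName": bucket,
        "S3Prefix": prefix,
        "IamRoleArn": role_arn,  # role that RDS assumes to write into the bucket
        "KmsKeyId": kms_key_id,  # KMS key used to encrypt the exported files
    }


# rds = boto3.client("rds")
# rds.start_export_task(**snapshot_export_args(
#     "export-2024-05-01",
#     "arn:aws:rds:eu-west-1:111122223333:snapshot:mydb-snap",
#     "analytics-bucket",
#     "arn:aws:iam::111122223333:role/rds-s3-export",
#     "arn:aws:kms:eu-west-1:111122223333:key/example"))
```

On an EC2 instance with an attached role, `boto3.client("s3")` picks up the role's temporary credentials automatically, which is what makes the keyless EC2 access described above work.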

23. How can you ensure compliance with data residency requirements when using S3?

To ensure compliance with data residency requirements in S3, you can utilize several AWS features. Primarily, you should select the appropriate S3 region when creating your bucket. AWS S3 stores your data in the region you specify, meaning the data will physically reside within that geographical location.

Be careful with replication: S3 Cross-Region Replication (CRR) copies data to another region, so only replicate to destination regions that are inside your permitted residency boundary, and use Same-Region Replication (SRR) when copies must stay in the same region. Additionally, S3 Object Lambda lets you transform data as it is retrieved, which can be used to mask or redact sensitive fields before they are returned to the caller. Finally, use IAM policies to restrict access to S3 buckets based on user identity and to limit the regions in which buckets can be created.
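To stop buckets from being created outside an approved set of regions, an IAM policy or an AWS Organizations service control policy can deny `s3:CreateBucket` based on the `s3:LocationConstraint` condition key. A sketch, with hypothetical EU regions; it assumes the allowed list excludes us-east-1, where `CreateBucket` requests can omit the location constraint entirely.

```python
def restrict_bucket_regions_policy(allowed_regions):
    """Policy denying bucket creation outside `allowed_regions` (via s3:LocationConstraint)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyBucketsOutsideAllowedRegions",
                "Effect": "Deny",
                "Action": "s3:CreateBucket",
                "Resource": "*",
                "Condition": {
                    # Deny unless the requested bucket region is one of the allowed values.
                    "StringNotEquals": {"s3:LocationConstraint": list(allowed_regions)}
                },
            }
        ],
    }


# restrict_bucket_regions_policy(["eu-central-1", "eu-west-1"])
```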

24. Describe a scenario where you would use S3 Batch Operations.

I would use S3 Batch Operations when needing to perform the same action on a large number of S3 objects. For example, imagine needing to update the storage class of millions of objects from STANDARD to GLACIER for cost optimization. Doing this manually or through scripting object-by-object would be incredibly slow and inefficient.

S3 Batch Operations allows me to create a manifest of objects to be acted upon (e.g., a CSV file listing object keys). Then, I can specify the operation to perform (e.g., changing storage class) and S3 Batch Operations will handle the execution in a managed, scalable, and auditable way. Other use cases include updating object tags, copying objects to another bucket, or invoking a Lambda function on each object.
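A sketch of the two pieces you prepare yourself: the CSV manifest (one `bucket,key` row per object, with keys URL-encoded) and the operation block for `s3control.create_job` that copies objects in place into a new storage class. The bucket and keys are placeholders; `create_job` also needs the manifest location (ARN and ETag of the uploaded CSV), a completion report, a priority, and an IAM role, which are omitted here.

```python
from urllib.parse import quote


def batch_manifest_csv(bucket, keys):
    """CSV manifest for S3 Batch Operations: one `bucket,key` row per object."""
    # quote() URL-encodes each key (spaces, commas, etc.) while leaving "/" intact.
    return "".join(f"{bucket},{quote(key)}\n" for key in keys)


def storage_class_operation(target_bucket, storage_class="GLACIER"):
    """Operation block for create_job: copy each object in place with a new storage class."""
    return {
        "S3PutObjectCopy": {
            "TargetResource": f"arn:aws:s3:::{target_bucket}",
            "StorageClass": storage_class,
        }
    }
```

Note that the Batch Operations copy operation handles objects up to 5 GB; for a pure age-based storage class change, a lifecycle rule is often the simpler alternative.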

25. How can you troubleshoot performance issues when accessing S3 objects?

Troubleshooting S3 performance issues involves several areas. Start by checking network connectivity between the client and S3. High latency or packet loss can significantly impact performance. Use tools like traceroute or ping to diagnose network problems. Also, verify your S3 bucket's region matches the region of your application servers to minimize latency.

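Next, analyze S3 metrics in CloudWatch. For performance, focus on request metrics such as `GetRequests`, `PutRequests`, `FirstByteLatency`, `TotalRequestLatency`, `4xxErrors`, and `5xxErrors` (request metrics must be enabled on the bucket). A spike in 5xx errors, especially 503 Slow Down responses, usually indicates throttling, while 4xx errors such as 403 point to access or permission problems. S3 supports at least 3,500 PUT/COPY/POST/DELETE and 5,500 GET/HEAD requests per second per prefix, so spreading heavily accessed objects across multiple prefixes raises the throughput ceiling. Use request parallelism where possible, and consider S3 Transfer Acceleration for faster uploads and downloads over long distances. Also check your IAM policies for any unintended restrictions.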
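Request parallelism for large downloads usually means fetching byte ranges concurrently. A sketch using a boto3 client and a thread pool; the 8 MiB part size and 8 workers are arbitrary starting points, not tuned values.

```python
from concurrent.futures import ThreadPoolExecutor


def byte_ranges(size, part_size=8 * 1024 * 1024):
    """HTTP Range header values covering `size` bytes in `part_size` chunks."""
    return [f"bytes={start}-{min(start + part_size, size) - 1}"
            for start in range(0, size, part_size)]


def parallel_get(s3, bucket, key, part_size=8 * 1024 * 1024, max_workers=8):
    """Download one object with concurrent ranged GETs and reassemble it in order."""
    size = s3.head_object(Bucket=bucket, Key=key)["ContentLength"]

    def fetch(byte_range):
        return s3.get_object(Bucket=bucket, Key=key, Range=byte_range)["Body"].read()

    # boto3 clients are thread-safe; pool.map returns results in input order.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return b"".join(pool.map(fetch, byte_ranges(size, part_size)))
```

boto3's managed `download_file` already does this internally (tunable through `boto3.s3.transfer.TransferConfig`), so hand-rolled ranges are mainly useful when you need the bytes in memory or finer control.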

26. Explain how to use S3 with a Content Delivery Network (CDN) like CloudFront to improve website performance.

To use S3 with a CDN like CloudFront for improved website performance, you first store your website's static assets (images, CSS, JavaScript files, videos, etc.) in an S3 bucket. Then, you configure CloudFront to serve these assets from the S3 bucket. CloudFront caches these assets at edge locations globally, so when a user requests an asset, it's delivered from the nearest edge location instead of directly from S3, reducing latency and improving load times.
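A common refinement is to keep the bucket private and let only CloudFront read it, using Origin Access Control (OAC). This sketch builds a bucket policy in the shape AWS documents for that pattern; the bucket name, account ID, and distribution ID are placeholders.

```python
def cloudfront_oac_policy(bucket, account_id, distribution_id):
    """Bucket policy letting a single CloudFront distribution (via OAC) read objects."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowCloudFrontServicePrincipalReadOnly",
                "Effect": "Allow",
                "Principal": {"Service": "cloudfront.amazonaws.com"},
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/*",
                # Restrict the grant to this one distribution.
                "Condition": {
                    "StringEquals": {
                        "AWS:SourceArn": f"arn:aws:cloudfront::{account_id}:distribution/{distribution_id}"
                    }
                },
            }
        ],
    }


# s3.put_bucket_policy(Bucket="site-assets", Policy=json.dumps(
#     cloudfront_oac_policy("site-assets", "111122223333", "EDFDVBD6EXAMPLE")))
```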