Hiring AWS EC2 professionals requires a keen understanding of their skills and expertise, as a cloud engineer's skills are constantly evolving. Knowing the right questions to ask helps recruiters and hiring managers quickly assess candidates for the right fit.

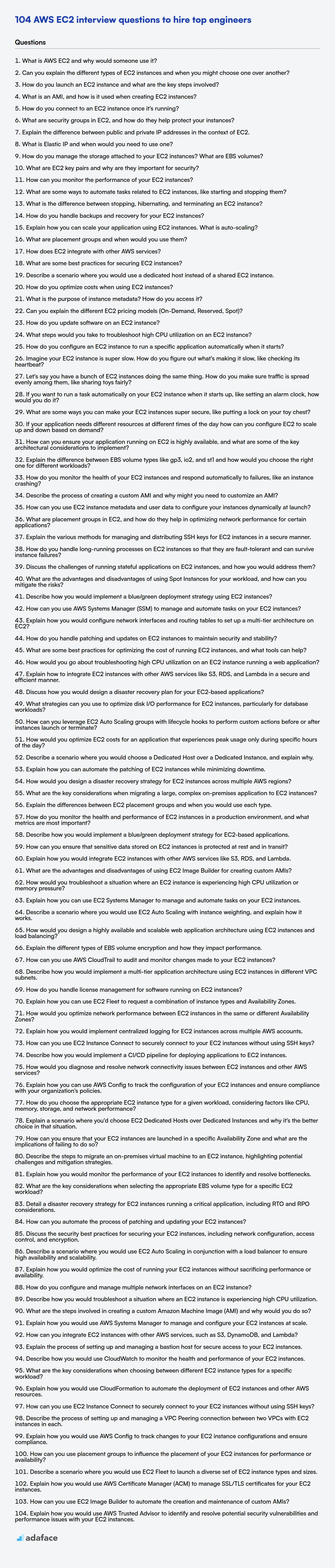

This blog post offers a curated list of AWS EC2 interview questions categorized by difficulty level: basic, intermediate, advanced, and expert, including a set of MCQs. It will equip you with the tools necessary to evaluate a candidate's knowledge and practical skills.

These questions can help you identify candidates who truly understand AWS EC2. For a more objective evaluation, consider using a skill assessment like our AWS Online Test before the interview.


Basic AWS EC2 interview questions

1. What is AWS EC2 and why would someone use it?

AWS EC2 (Elastic Compute Cloud) provides virtual servers (instances) in the cloud. It lets you rent virtual machines with various operating systems, CPUs, memory, and storage options.

People use EC2 for many reasons, including:

- Scalability: Easily increase or decrease computing capacity based on demand.

- Cost-effectiveness: Pay-as-you-go pricing, only paying for what you use.

- Flexibility: Choose from a wide range of instance types to match specific workload requirements.

- Control: Full control over the operating system, software, and networking.

- Deployment: Deploy and manage applications quickly and easily.

2. Can you explain the different types of EC2 instances and when you might choose one over another?

EC2 instances come in various types optimized for different workloads. General Purpose instances (like t3.micro, m5.large) are suitable for web servers, development environments, and small databases, offering a balance of compute, memory, and networking. Compute Optimized instances (like c5.xlarge) are ideal for CPU-intensive tasks like batch processing, media transcoding, and high-performance computing. Memory Optimized instances (like r5.large) are designed for memory-intensive applications like in-memory databases (Redis, Memcached), data analytics, and high-performance databases. Storage Optimized instances (like i3.large) provide high I/O performance for applications that require fast, sequential read and write access to large datasets, such as data warehousing, log processing, and NoSQL databases (Cassandra, MongoDB). GPU instances (like g4dn.xlarge, p3.2xlarge) are used for machine learning, video encoding, and other computationally intensive tasks requiring GPU acceleration.

The selection depends on the specific application's needs. Consider factors like CPU utilization, memory requirements, storage I/O, and network bandwidth. General Purpose is a good starting point, and then one can switch if performance bottlenecks are observed. Cost is another significant factor to consider when choosing an instance type.
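The selection logic described above can be sketched as a small decision function. This is only an illustration: the `WorkloadProfile` fields and the priority order of the checks are assumptions made for the example, not an AWS sizing rule.

```python
from dataclasses import dataclass


@dataclass
class WorkloadProfile:
    """Hypothetical summary of what a workload stresses most."""
    needs_gpu: bool = False
    io_heavy: bool = False
    memory_heavy: bool = False
    cpu_heavy: bool = False


def suggest_family(profile: WorkloadProfile) -> str:
    """Map a workload profile to one of the instance categories named above."""
    if profile.needs_gpu:
        return "GPU"
    if profile.io_heavy:
        return "Storage Optimized"
    if profile.memory_heavy:
        return "Memory Optimized"
    if profile.cpu_heavy:
        return "Compute Optimized"
    # No dominant bottleneck: start with General Purpose and revisit later.
    return "General Purpose"


print(suggest_family(WorkloadProfile(memory_heavy=True)))
```

In practice the inputs would come from observed metrics (CPU, memory, disk, and network utilization) rather than hand-set flags.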

3. How do you launch an EC2 instance and what are the key steps involved?

Launching an EC2 instance involves several key steps. First, you need to choose an AMI (Amazon Machine Image), which serves as the operating system and software configuration for your instance. Then, you select an instance type, determining the hardware resources like CPU, memory, and storage. Next, you configure network settings, including VPC (Virtual Private Cloud), subnet, and security groups to control network access. You might also want to assign an IAM role. Finally, you launch the instance. The process can be done via the AWS Management Console, AWS CLI or SDKs.

Specifically with the AWS CLI, you would use the aws ec2 run-instances command. For example:

aws ec2 run-instances --image-id ami-xxxxxxxxxxxxx --instance-type t2.micro --key-name MyKeyPair --security-group-ids sg-xxxxxxxxxxxxx --subnet-id subnet-xxxxxxxxxxxxx

This command specifies the AMI, instance type, key pair for SSH access, security group, and subnet for launching the instance.
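The same launch can be expressed through an SDK. The sketch below only builds the request parameters that boto3's `run_instances` call accepts, so it runs without AWS credentials; the helper name `build_run_instances_params` and all resource IDs are placeholders invented for the example.

```python
def build_run_instances_params(image_id, instance_type, key_name,
                               security_group_ids, subnet_id, count=1):
    """Assemble keyword arguments for the EC2 RunInstances API call."""
    return {
        "ImageId": image_id,
        "InstanceType": instance_type,
        "KeyName": key_name,
        "SecurityGroupIds": list(security_group_ids),
        "SubnetId": subnet_id,
        # Unlike the CLI, the SDK call requires explicit instance counts.
        "MinCount": count,
        "MaxCount": count,
    }


params = build_run_instances_params(
    image_id="ami-xxxxxxxxxxxxx",            # placeholder AMI ID
    instance_type="t2.micro",
    key_name="MyKeyPair",
    security_group_ids=["sg-xxxxxxxxxxxxx"],  # placeholder security group
    subnet_id="subnet-xxxxxxxxxxxxx",         # placeholder subnet
)
print(params)

# With boto3 installed and credentials configured, the launch itself would be:
#   import boto3
#   boto3.client("ec2").run_instances(**params)
```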

4. What is an AMI, and how is it used when creating EC2 instances?

An Amazon Machine Image (AMI) is a pre-configured template that contains the operating system, application server, and applications required to launch an EC2 instance. Think of it as a snapshot of a virtual machine's hard drive.

When you create an EC2 instance, you specify an AMI. This AMI is then used to launch the instance. The AMI determines the initial state of the instance - the OS, installed software, and any initial data. You can choose from a variety of AMIs provided by AWS, the AWS Marketplace, or create your own customized AMIs.

5. How do you connect to an EC2 instance once it's running?

Once an EC2 instance is running, you can connect to it using several methods, primarily SSH for Linux/macOS instances and RDP for Windows instances. For SSH, you'll need a private key file (.pem) that you specified during instance creation. You use this key with an SSH client (like OpenSSH, PuTTY on Windows) and the instance's public IP address or public DNS. The basic SSH command would look like: ssh -i "path/to/your/key.pem" username@public_ip_address. The 'username' is usually 'ec2-user' or 'ubuntu' depending on the AMI used.

For Windows instances, you'll retrieve the initial administrator password using the key pair specified at launch. Then, you use an RDP client (Remote Desktop Connection) to connect using the instance's public IP address or public DNS and the retrieved password. Ensure that the security group associated with the EC2 instance allows inbound traffic on port 22 for SSH or port 3389 for RDP from your IP address, or from a wider range if necessary, to allow the connection.

6. What are security groups in EC2, and how do they help protect your instances?

Security groups act as a virtual firewall for your EC2 instances in AWS. They control inbound and outbound traffic at the instance level. By defining rules within a security group, you specify which traffic is allowed to reach your instance and which traffic your instance is allowed to send.

They help protect your instances by:

- Controlling access: You can specify IP addresses, ports, and protocols allowed to access your instance.

- Restricting outbound traffic: Limit the types of connections your instance can make to the outside world.

- Layered security: Security groups are applied in addition to any security measures within the operating system itself, providing a defense-in-depth approach.
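A useful way to reason about inbound rules is that security groups contain only allow rules, and anything not matched is denied. The following is a simplified model of that evaluation, not the AWS implementation; the `IngressRule` type and the example addresses are assumptions for illustration, and it ignores statefulness and security-group-to-security-group references.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class IngressRule:
    """Simplified inbound rule: protocol, port range, and source CIDR."""
    protocol: str
    from_port: int
    to_port: int
    cidr: str


def is_inbound_allowed(rules, protocol, port, source_ip):
    """Return True if any rule allows the traffic; otherwise it is denied."""
    source = ipaddress.ip_address(source_ip)
    for rule in rules:
        if (rule.protocol == protocol
                and rule.from_port <= port <= rule.to_port
                and source in ipaddress.ip_network(rule.cidr)):
            return True
    return False  # implicit deny: no matching allow rule


rules = [
    IngressRule("tcp", 22, 22, "203.0.113.0/24"),  # SSH from one office range
    IngressRule("tcp", 443, 443, "0.0.0.0/0"),     # HTTPS from anywhere
]
print(is_inbound_allowed(rules, "tcp", 22, "203.0.113.7"))
print(is_inbound_allowed(rules, "tcp", 22, "198.51.100.1"))
```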

7. Explain the difference between public and private IP addresses in the context of EC2.

In the context of EC2, a public IP address is used for communication over the internet. It's directly routable and allows your EC2 instance to communicate with the outside world. AWS assigns these to instances, or you can use Elastic IPs for persistent public addresses.

A private IP address, on the other hand, is used for internal communication within the VPC (Virtual Private Cloud). It is not directly routable over the internet and allows instances within the same VPC, or peered VPCs, to communicate with each other securely without exposing traffic to the public internet. Each EC2 instance has a primary private IP address assigned during launch, and you can assign secondary private IP addresses as well. If you don't specify a private IP at launch, AWS picks one automatically; either way, the address must fall within the subnet's CIDR range.
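To check whether a private address can belong to a given subnet, Python's `ipaddress` module is enough. The sketch assumes IPv4 and relies on the fact that AWS reserves the first four addresses and the last address of every subnet; the function names are made up for the example.

```python
import ipaddress

RESERVED_AT_START = 4  # network address plus three AWS-reserved addresses
RESERVED_AT_END = 1    # last address of the subnet (broadcast)


def usable_address_count(subnet_cidr):
    """Number of addresses in the subnet that instances can actually use."""
    net = ipaddress.ip_network(subnet_cidr)
    return net.num_addresses - RESERVED_AT_START - RESERVED_AT_END


def is_assignable(subnet_cidr, ip):
    """True if `ip` is inside the subnet and not one of the reserved addresses."""
    net = ipaddress.ip_network(subnet_cidr)
    addr = ipaddress.ip_address(ip)
    if addr not in net:
        return False
    offset = int(addr) - int(net.network_address)
    return RESERVED_AT_START <= offset < net.num_addresses - RESERVED_AT_END


print(usable_address_count("10.0.1.0/24"))
print(is_assignable("10.0.1.0/24", "10.0.1.10"))
```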

8. What is Elastic IP and when would you need to use one?

An Elastic IP address is a static, public IPv4 address designed for dynamic cloud computing. It's associated with your AWS account, not a specific instance. You can quickly remap it to another instance if one fails, maintaining a consistent public IP.

You'd use an Elastic IP when you need a persistent public IP address, such as for:

- Hosting a website or application that requires a fixed IP.

- Maintaining DNS records that point to a specific IP.

- Ensuring uninterrupted service during instance failures by quickly remapping the IP to a healthy instance.

9. How do you manage the storage attached to your EC2 instances? What are EBS volumes?

I manage storage attached to EC2 instances primarily using Elastic Block Store (EBS) volumes. EBS volumes are persistent block storage devices that act like virtual hard drives. A volume is normally attached to one instance at a time, although Provisioned IOPS volumes support Multi-Attach within a single Availability Zone. I can choose different EBS volume types (SSD or HDD) based on performance and cost requirements, and I typically use Provisioned IOPS volumes for workloads needing consistent performance. EBS volumes are automatically replicated within their Availability Zone, protecting against data loss from component failure. They also support snapshots, which are incremental backups that can be used to restore volumes or create new ones.

Beyond EBS, I might use instance store (ephemeral storage) for temporary data, although this is less common due to its non-persistence. For larger data sets or shared access, I consider using Elastic File System (EFS) or S3, depending on whether I need a file system interface or object storage. I handle tasks like volume creation, attaching/detaching, resizing, and snapshot management through the AWS Management Console, CLI, or Infrastructure as Code tools such as Terraform.

10. What are EC2 key pairs and why are they important for security?

EC2 key pairs are sets of cryptographic keys, consisting of a public key and a private key, used to securely connect to EC2 instances. Amazon stores the public key, and you store the private key. When you launch an instance, you specify the key pair you want to use.

They are important for security because the private key is the only way to SSH into your instance if you're using an AMI that doesn't have password-based logins enabled (which is the recommended and more secure configuration). Without the correct private key, unauthorized users cannot gain access to the instance. Losing your private key can mean losing access to your instance.

11. How can you monitor the performance of your EC2 instances?

I can monitor EC2 instance performance using several tools and metrics. AWS CloudWatch is the primary service for monitoring. It collects metrics like CPU utilization, disk I/O, network traffic, and memory usage (though memory usage often requires a CloudWatch agent). These metrics can be visualized in dashboards and used to set alarms for proactive notifications.

Beyond CloudWatch, I can use system-level monitoring tools within the instance itself (e.g., top, htop, vmstat on Linux, or Performance Monitor on Windows). For more in-depth application monitoring, I might use tools like Prometheus, Datadog, or New Relic to collect application-specific metrics and logs.

12. What are some ways to automate tasks related to EC2 instances, like starting and stopping them?

Several AWS services and tools can automate EC2 instance tasks. AWS Systems Manager (SSM) lets you define maintenance windows to automatically start/stop instances based on schedules. You can use SSM Automation documents to create more complex workflows. AWS Lambda functions triggered by CloudWatch Events (now EventBridge) can also start/stop instances based on schedules or events. For example, you can trigger a Lambda function to stop instances every night and start them in the morning.

Another option is the AWS CLI. You can write scripts that use the aws ec2 start-instances and aws ec2 stop-instances commands and schedule them using cron jobs (on Linux/macOS) or Task Scheduler (on Windows). Infrastructure as Code (IaC) tools like Terraform or CloudFormation can also manage the lifecycle of EC2 instances, including starting and stopping them as part of deployments or infrastructure changes.
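The scheduled start/stop idea can be sketched as a function that a Lambda handler or cron-driven script might call. The client is passed in so the example runs without AWS; `RecordingClient` is a hypothetical stand-in for `boto3.client("ec2")`, and the office-hours window and instance ID are arbitrary assumptions.

```python
def apply_office_hours(ec2_client, instance_ids, hour, start_hour=8, stop_hour=20):
    """Start instances inside the window [start_hour, stop_hour), stop them otherwise."""
    if start_hour <= hour < stop_hour:
        ec2_client.start_instances(InstanceIds=instance_ids)
        return "start"
    ec2_client.stop_instances(InstanceIds=instance_ids)
    return "stop"


class RecordingClient:
    """Hypothetical stand-in for boto3.client("ec2"); it only records calls."""

    def __init__(self):
        self.calls = []

    def start_instances(self, InstanceIds):
        self.calls.append(("start", list(InstanceIds)))

    def stop_instances(self, InstanceIds):
        self.calls.append(("stop", list(InstanceIds)))


client = RecordingClient()
apply_office_hours(client, ["i-0123456789abcdef0"], hour=23)
print(client.calls)
```

With a real boto3 client, the same two method names and the `InstanceIds` argument apply; the schedule itself would come from EventBridge or cron rather than an `hour` parameter.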

13. What is the difference between stopping, hibernating, and terminating an EC2 instance?

Stopping an EC2 instance is like turning off your computer. The instance shuts down, but the EBS volumes remain attached, and you can restart it later. You stop paying instance usage fees, but you still pay for storage. When restarted, the instance moves to a new underlying host, and might get a new public IP unless you use an Elastic IP.

Hibernating is similar to stopping, but the instance's memory (RAM) is saved to the EBS volume. When you start the instance, it resumes from where it left off. The instance is also moved to a new underlying host. Not all instance types support hibernation.

Terminating an instance completely deletes it. Each attached EBS volume is deleted if its DeleteOnTermination attribute is true, which is the default for the root volume but not for additional data volumes. You stop paying for the instance and for any storage that was deleted with it; volumes that survive termination continue to incur charges. Once terminated, an instance cannot be restarted.

14. How do you handle backups and recovery for your EC2 instances?

I handle backups and recovery for EC2 instances using a combination of strategies. For critical data, I leverage EBS snapshots, creating them regularly (daily or hourly, depending on the RTO/RPO requirements). These snapshots are stored durably in S3 and can be used to restore an EBS volume to a previous state in case of failure. I also automate the snapshot creation and deletion process using AWS Data Lifecycle Manager (DLM) or custom scripts.

For application-level backups, I may use tools like rsync or specialized backup software to copy data to a separate storage location, such as S3. In the event of an instance failure, I can launch a new instance and restore the data from the backups. For instances with complex configurations, I create AMIs (Amazon Machine Images), which are essentially templates of the instance. This allows for quick recovery by launching new instances from the AMI.
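For the custom-script route to snapshot cleanup, the core of a retention policy is a date comparison. This is a minimal sketch assuming snapshots are represented as `(snapshot_id, start_time)` pairs; the IDs and the seven-day default are illustrative only, and a real script would obtain the list from the EC2 API and delete the returned IDs.

```python
from datetime import datetime, timedelta, timezone


def expired_snapshots(snapshots, now, retention_days=7):
    """Return IDs of snapshots older than the retention window."""
    cutoff = now - timedelta(days=retention_days)
    return [snap_id for snap_id, started in snapshots if started < cutoff]


now = datetime(2024, 1, 15, tzinfo=timezone.utc)
snapshots = [
    ("snap-old", datetime(2024, 1, 1, tzinfo=timezone.utc)),
    ("snap-recent", datetime(2024, 1, 14, tzinfo=timezone.utc)),
]
print(expired_snapshots(snapshots, now))
```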

15. Explain how you can scale your application using EC2 instances. What is auto-scaling?

To scale an application using EC2 instances, I can use a combination of techniques. First, I would create an Amazon Machine Image (AMI) of my application. Then, I can launch multiple EC2 instances using this AMI, distributing the load across them using a load balancer like AWS Application Load Balancer (ALB) or Network Load Balancer (NLB). This approach allows handling increased traffic by adding more instances. The load balancer directs traffic to healthy instances, ensuring high availability and responsiveness. Horizontal scaling is key here, meaning more instances are added, not making existing ones bigger.

Auto Scaling automates this process. It dynamically adjusts the number of EC2 instances based on demand. You define scaling policies (e.g., scale out when CPU utilization exceeds 70%, scale in when it drops below 30%) and Auto Scaling automatically launches or terminates instances to maintain performance and cost efficiency. This ensures the application remains available and responsive during traffic spikes while minimizing costs during periods of low demand. Auto Scaling integrates with CloudWatch for monitoring metrics and triggering scaling events.
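The threshold policy in the example (scale out above 70% CPU, scale in below 30%) can be modelled in a few lines. This is a simplified sketch of simple step scaling, not how the Auto Scaling service is implemented; the one-instance step and the function name are assumptions.

```python
def desired_capacity(current, avg_cpu, min_size, max_size,
                     scale_out_at=70.0, scale_in_at=30.0, step=1):
    """Compute the next instance count from average CPU utilization (percent)."""
    if avg_cpu > scale_out_at:
        target = current + step   # under pressure: add capacity
    elif avg_cpu < scale_in_at:
        target = current - step   # mostly idle: remove capacity
    else:
        target = current          # within the comfortable band
    # Never leave the group's configured bounds.
    return max(min_size, min(max_size, target))


print(desired_capacity(current=2, avg_cpu=85.0, min_size=1, max_size=4))
```

The gap between the two thresholds matters: if they were close together, the group could oscillate between scaling out and scaling in.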

16. What are placement groups and when would you use them?

Placement groups are an EC2 feature that influences how your instances are physically placed on the underlying hardware, so you can optimize for network performance or fault isolation. There are three strategies, each with different tradeoffs: cluster, spread, and partition.

Placement groups are useful when you need:

- Low latency and high throughput: A cluster placement group packs instances close together within a single Availability Zone, reducing network latency and increasing instance-to-instance bandwidth (e.g., for tightly coupled HPC applications).

- Reduced correlated failures: A spread placement group places each instance on distinct hardware, which suits a small number of critical instances that should not fail together.

- Fault isolation for large distributed systems: A partition placement group divides instances into partitions that do not share underlying hardware with each other, so a hardware failure affects only one partition.

- Hadoop, HBase, or Cassandra: Running these replicated workloads in a partition placement group lets them keep replicas in different partitions.

17. How does EC2 integrate with other AWS services?

EC2 integrates with many AWS services. For example, S3 can be used for storing EC2 instance backups (AMIs) or instance data. IAM controls access permissions to EC2 instances and other AWS resources. VPC provides network isolation and connectivity for EC2 instances. CloudWatch monitors EC2 instance performance metrics and logs. Auto Scaling automates the process of launching or terminating EC2 instances based on demand. RDS hosts databases that EC2 applications connect to.

Essentially, EC2 serves as the compute engine and other AWS services enhance its functionality and manageability by providing storage, networking, security, monitoring, and automation.

18. What are some best practices for securing EC2 instances?

Securing EC2 instances involves several key practices. Firstly, regularly update and patch the operating system and installed software to address known vulnerabilities. Use a configuration management tool or AWS Systems Manager for automation. Next, control network access using Security Groups and Network ACLs. Security Groups act as a virtual firewall for your instances, while Network ACLs control traffic at the subnet level. Adopt the principle of least privilege by granting only the necessary permissions to users and applications through IAM roles. Finally, consider using encryption for both data at rest (using EBS encryption or instance store encryption) and data in transit (using TLS/SSL). Regularly audit your security configurations and logs to identify and address potential issues.

19. Describe a scenario where you would use a dedicated host instead of a shared EC2 instance.

I would choose a dedicated host over a shared EC2 instance primarily for compliance or licensing reasons. For example, some software licenses are tied to physical server hardware. If the software vendor requires the license to be associated with a physical server for compliance reasons, a dedicated host would be the appropriate choice. Similarly, if a particular industry regulation dictates specific hardware isolation to meet security requirements, a dedicated host would be necessary.

Another reason would be if I wanted greater control over the placement of my EC2 instances, and needed to ensure that no other AWS customers' instances were running on the same physical hardware. This might be for performance reasons (avoiding noisy neighbors), or for security reasons beyond the scope of standard AWS isolation.

20. How do you optimize costs when using EC2 instances?

To optimize EC2 costs, consider several strategies. First, choose the right instance type and size for your workload. Over-provisioning leads to wasted resources. Utilize monitoring tools like CloudWatch to analyze CPU utilization, memory usage, and network I/O to identify underutilized instances that can be scaled down or consolidated. Leverage EC2 Instance Savings Plans or Reserved Instances for predictable workloads, offering significant discounts compared to On-Demand pricing. Spot Instances can be used for fault-tolerant workloads that can withstand interruptions.

Secondly, implement auto-scaling to automatically adjust the number of EC2 instances based on demand. This ensures that you only pay for what you use. Regularly review and delete unused EBS volumes and snapshots, and choose cost-effective storage options like S3 for less frequently accessed data. Consider using AWS Cost Explorer to gain insights into your EC2 spending and identify potential areas for optimization. Finally, review the AMI and instance generation you run on: AWS Marketplace AMIs can carry additional software charges, and newer instance generations often offer better price-performance than older ones.

21. What is the purpose of instance metadata? How do you access it?

Instance metadata provides information about an instance, such as its instance ID, public keys, network settings, and other configuration details. It's essentially data about the instance itself. It's useful for automating tasks, configuring applications, and retrieving information about the running instance dynamically.

To access instance metadata, you query the instance metadata service (IMDS) at the link-local address 169.254.169.254, which is reachable only from within the instance. You make HTTP requests to this address with specific paths to retrieve different categories of metadata. Here's an example using curl (often used in shell scripts), which works when IMDSv1 is enabled:

curl http://169.254.169.254/latest/meta-data/instance-id

Instances that require IMDSv2 reject this request unless it carries a session token obtained first from http://169.254.169.254/latest/api/token. Requesting http://169.254.169.254/latest/meta-data/ lists the available metadata categories. Other cloud providers expose similar endpoints, but their paths differ.
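On EC2, the token-based flow (IMDSv2) takes two requests: a PUT to obtain a session token, then a GET that presents it. The sketch below uses only the standard library; the helper names are made up for the example, and `fetch_metadata` can only succeed when run on an EC2 instance, so it is defined but not called here.

```python
import urllib.request

IMDS_BASE = "http://169.254.169.254/latest"


def build_token_request(ttl_seconds=21600):
    """PUT request that asks IMDSv2 for a session token."""
    return urllib.request.Request(
        f"{IMDS_BASE}/api/token",
        method="PUT",
        headers={"X-aws-ec2-metadata-token-ttl-seconds": str(ttl_seconds)},
    )


def build_metadata_request(path, token):
    """GET request for one metadata path, carrying the session token."""
    return urllib.request.Request(
        f"{IMDS_BASE}/meta-data/{path}",
        headers={"X-aws-ec2-metadata-token": token},
    )


def fetch_metadata(path, timeout=2):
    """Fetch a metadata value; works only from inside an EC2 instance."""
    with urllib.request.urlopen(build_token_request(), timeout=timeout) as resp:
        token = resp.read().decode()
    with urllib.request.urlopen(build_metadata_request(path, token), timeout=timeout) as resp:
        return resp.read().decode()


# On an instance: print(fetch_metadata("instance-id"))
```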

22. Can you explain the different EC2 pricing models (On-Demand, Reserved, Spot)?

EC2 offers several pricing models to cater to diverse needs. On-Demand instances are pay-as-you-go, billed by the second, suitable for short-term, unpredictable workloads. You pay only for what you use, with no upfront commitment.

Reserved Instances (RIs) provide significant discounts (up to 72%) compared to On-Demand, in exchange for a commitment to use the instance for 1 or 3 years. RIs are ideal for predictable, steady-state workloads. They come in Standard and Convertible classes; Convertible RIs can be exchanged for a different configuration at a smaller discount.

Spot Instances let you use spare EC2 capacity at the current Spot price, optionally capped by a maximum price you set. They offer the highest potential discounts (up to 90%), but AWS can reclaim them with a two-minute warning when it needs the capacity back or the Spot price rises above your maximum. Spot Instances are suitable for fault-tolerant, flexible workloads.
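To make the tradeoff concrete, a rough monthly comparison can be computed as follows. The hourly rate and the discount percentages are made-up illustrative numbers, not AWS prices, and 730 is used as an approximate number of hours in a month.

```python
HOURS_PER_MONTH = 730  # approximate average


def monthly_cost(on_demand_hourly, discount=0.0, hours=HOURS_PER_MONTH):
    """Monthly cost in dollars after applying a fractional discount (0.4 = 40%)."""
    if not 0.0 <= discount < 1.0:
        raise ValueError("discount must be in the range [0, 1)")
    return round(on_demand_hourly * hours * (1.0 - discount), 2)


rate = 0.10  # hypothetical On-Demand price per hour
for label, discount in [("On-Demand", 0.0), ("Reserved", 0.40), ("Spot", 0.70)]:
    print(f"{label}: ${monthly_cost(rate, discount):.2f}/month")
```

The comparison ignores what the discount costs you: Reserved pricing assumes the instance runs for the whole commitment term, and Spot pricing assumes the workload tolerates interruptions.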

23. How do you update software on an EC2 instance?

To update software on an EC2 instance, you typically connect to the instance using SSH. Then, you use the appropriate package manager for the instance's operating system. For example, on Ubuntu or Debian, you would use apt:

sudo apt update

sudo apt upgrade

On Amazon Linux or CentOS, you would use yum (Amazon Linux 2023 replaces it with dnf, which accepts the same update command):

sudo yum update

Alternatively, configuration management tools like Ansible, Chef, or Puppet can automate the software update process across multiple instances. These tools allow you to define the desired state of your systems and automatically apply the necessary updates and configurations.

24. What steps would you take to troubleshoot high CPU utilization on an EC2 instance?

First, I'd connect to the EC2 instance using SSH and use tools like top, htop, or ps to identify the process(es) consuming the most CPU. If using top, I'd look at the %CPU column. Once I identify the problematic process, I'd investigate it further. This could involve analyzing application logs, using profiling tools (like perf or a language-specific profiler), or examining code for potential infinite loops or inefficient algorithms. I would also check system metrics using CloudWatch to see if the high CPU utilization correlates with other events, such as increased network traffic or disk I/O. Based on my findings, I would then take appropriate actions such as optimizing code, scaling the instance to a larger size, or implementing caching mechanisms.

25. How do you configure an EC2 instance to run a specific application automatically when it starts?

To configure an EC2 instance to automatically run an application on startup, you can use a startup script. This script is executed when the instance boots. Here's how:

- Create a Startup Script: Write a shell script (e.g., startup.sh) that contains the commands to start your application. Ensure this script is executable (chmod +x startup.sh).

- Place the Script: Put the script on the EC2 instance. Common locations include /home/ec2-user/ or /opt/. You can use scp to transfer the file.

- Configure Startup: There are a few ways to configure the script to run on startup:

  - rc.local: Add a line to /etc/rc.local (before exit 0) that executes the script. For example: sudo /home/ec2-user/startup.sh &. Note: You might need to create /etc/rc.local and make it executable (e.g., sudo touch /etc/rc.local; sudo chmod +x /etc/rc.local).

  - Systemd: Create a systemd service file (e.g., myapp.service) in /etc/systemd/system/. The service file will define how the application should start, stop, and restart. Here's a simplified example:

        [Unit]
        Description=My Application
        After=network.target

        [Service]
        ExecStart=/home/ec2-user/startup.sh
        Restart=on-failure
        User=ec2-user

        [Install]
        WantedBy=multi-user.target

    Then, enable and start the service:

    sudo systemctl enable myapp.service; sudo systemctl start myapp.service

Choose the method that best fits your needs. Systemd is generally preferred for modern Linux distributions due to its robust management capabilities. Remember to test your script thoroughly to ensure it functions as expected.

Intermediate AWS EC2 interview questions

1. Imagine your EC2 instance is super slow. How do you figure out what's making it slow, like checking its heartbeat?

To troubleshoot a slow EC2 instance, I'd start by checking the basics:

- CPU Utilization: Use top, htop, or CloudWatch metrics to see if the CPU is maxed out. High CPU usage often indicates a resource-intensive process.

- Memory Usage: Check memory utilization with free -m or CloudWatch. Swapping can drastically slow things down.

- Disk I/O: Use iostat or CloudWatch to monitor disk read/write speeds. Bottlenecks here can be a major performance killer.

- Network I/O: Check network traffic with iftop or CloudWatch. High network latency or packet loss can cause slowdowns.

- Instance Status: Verify that it shows as healthy and reachable in the AWS Management Console.

If the instance appears healthy, I would investigate the application logs to identify slow queries, errors, or other application-level bottlenecks. Tools like tcpdump or wireshark can be utilized to inspect network traffic in detail if needed.

2. Let's say you have a bunch of EC2 instances doing the same thing. How do you make sure traffic is spread evenly among them, like sharing toys fairly?

To distribute traffic evenly across EC2 instances, I'd use a load balancer. An Application Load Balancer (ALB) is ideal for HTTP/HTTPS traffic. It automatically distributes incoming requests to healthy instances based on a defined algorithm (like round robin, least outstanding requests, etc.).

Alternatively, Network Load Balancers (NLB) can be used for TCP/UDP traffic and offer high performance with low latency. For more complex routing, a service mesh like AWS App Mesh or Istio could be employed, providing fine-grained control over traffic distribution and advanced features like traffic shaping and canary deployments. These options offer features beyond basic even distribution.

3. If you want to run a task automatically on your EC2 instance when it starts up, like setting an alarm clock, how would you do it?

To run a task automatically on an EC2 instance at startup, I would use a startup script. This script can be added to the instance's user data. User data scripts are executed by the cloud-init service during the instance's first boot cycle.

Specifically, I would create a shell script that contains the commands to set the alarm. Then I would add this script to the EC2 instance's user data, accessible through the AWS console or CLI during instance creation or modification. For example, the script could look like this:

#!/bin/bash

# Set an alarm clock

sleep 60 # Wait for 60 seconds

notify-send "Alarm!" # Send a notification (example, requires a display server)

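Note that notify-send needs a desktop session, which most EC2 instances do not have; in practice the script would write to a log, start a service, or call an API instead. Install any packages the script depends on within the script itself or bake them into the AMI.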

4. What are some ways you can make your EC2 instances super secure, like putting a lock on your toy chest?

To secure EC2 instances, think about layers of defense. First, use Security Groups as a virtual firewall to control inbound and outbound traffic, allowing only necessary ports and protocols. Always follow the principle of least privilege. Second, employ IAM roles to grant permissions to your instances, avoiding hardcoding credentials. Rotate keys regularly. Third, keep your AMI updated, and scan the instance periodically.

Further enhance security by using tools like AWS Systems Manager for patching and configuration management, enabling encryption at rest and in transit, and implementing multi-factor authentication (MFA) for all AWS accounts. Consider using a network ACL with the security groups for another layer of defense to specifically control network traffic in and out of your subnet.

5. If your application needs different resources at different times of the day how can you configure EC2 to scale up and down based on demand?

To scale EC2 instances based on time-of-day demand, I would use EC2 Auto Scaling with scheduled scaling, optionally combined with CloudWatch-driven dynamic scaling policies to absorb unexpected load. For example:

- Scheduled Actions: Define scheduled actions within the Auto Scaling group to increase the desired capacity during peak hours and decrease it during off-peak hours. These actions specify the new minimum, maximum, and desired capacity at specific times.

- CloudWatch Events (EventBridge): Use CloudWatch Events (now EventBridge) to trigger Lambda functions at specific times. These Lambda functions can then modify the Auto Scaling group's desired capacity or update scaling policies. This provides more flexibility for complex scaling scenarios.
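A scheduled action boils down to a small set of parameters. The sketch below builds the arguments that boto3's Auto Scaling `put_scheduled_update_group_action` call accepts, without calling AWS; the group name, action names, cron expressions, and sizes are invented for the example.

```python
def scheduled_action_params(group_name, action_name, recurrence,
                            min_size, max_size, desired_capacity):
    """Build arguments for a recurring scheduled scaling action."""
    if not min_size <= desired_capacity <= max_size:
        raise ValueError("desired capacity must lie between min and max size")
    return {
        "AutoScalingGroupName": group_name,
        "ScheduledActionName": action_name,
        "Recurrence": recurrence,  # cron expression, evaluated in UTC by default
        "MinSize": min_size,
        "MaxSize": max_size,
        "DesiredCapacity": desired_capacity,
    }


# Hypothetical weekday schedule: scale up at 08:00, back down at 20:00.
scale_up = scheduled_action_params("web-asg", "weekday-morning", "0 8 * * 1-5", 2, 10, 6)
scale_down = scheduled_action_params("web-asg", "weekday-evening", "0 20 * * 1-5", 1, 4, 1)
print(scale_up)
print(scale_down)

# With boto3 installed and credentials configured:
#   import boto3
#   boto3.client("autoscaling").put_scheduled_update_group_action(**scale_up)
```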

6. How can you ensure your application running on EC2 is highly available, and what are some of the key architectural considerations to implement?

To ensure high availability for an application running on EC2, several architectural considerations are crucial. First, deploy your application across multiple Availability Zones (AZs). This way, if one AZ fails, the application remains available in others. Load balancing, using services like Elastic Load Balancer (ELB), distributes traffic across these instances, preventing overload and ensuring traffic is routed to healthy instances.

Key considerations include: Auto Scaling Groups (ASG) to automatically provision and scale EC2 instances based on demand, maintaining desired capacity. Regular backups and disaster recovery plans are essential. Monitoring application health is critical, and you can use services like CloudWatch for this purpose, setting up alarms to trigger scaling or failover events. Use Route 53 for DNS failover to redirect traffic to healthy regions in case of regional failures.

7. Explain the difference between EBS volume types like gp3, io2, and st1 and how would you choose the right one for different workloads?

EBS volume types cater to different performance needs and costs. gp3 is a general-purpose SSD volume offering a balance of price and performance suitable for most workloads like boot volumes, small to medium databases, and development environments. io2 (and io1) are provisioned IOPS SSD volumes designed for I/O-intensive workloads like large databases (e.g., Oracle, SAP HANA) needing consistent high performance, offering guaranteed IOPS. st1 is a low-cost HDD volume optimized for sequential read/write operations, making it suitable for frequently accessed, large, sequential workloads like big data, data warehouses, and log processing.

Choosing the right volume depends on your workload's I/O characteristics and performance requirements. If you need high, consistent IOPS and low latency, io2 is best. For general workloads with moderate I/O, gp3 offers a good balance of price and performance. If you have large, sequential workloads where cost is a primary concern, st1 is a good choice. Consider testing your application with different volume types to identify the best option for your specific needs.

8. How do you monitor the health of your EC2 instances and respond automatically to failures, like an instance crashing?

I use a combination of AWS CloudWatch and Auto Scaling Groups (ASG) to monitor the health of my EC2 instances and automatically respond to failures.

CloudWatch metrics (CPU utilization, disk I/O, network traffic, and, with the CloudWatch agent installed, memory usage) are monitored, and alarms are configured to trigger based on specific thresholds. For example, if CPU utilization exceeds 80% for 5 minutes, an alarm can trigger a scaling policy or a notification. ASG health checks (EC2 status checks and, optionally, load balancer health checks) detect instance failures. When an instance fails a health check, the ASG automatically terminates the unhealthy instance and launches a new one, ensuring high availability. For a standalone instance outside an ASG, a CloudWatch alarm on the system status check can trigger the EC2 recover action. For more complex scenarios or application-specific health checks, a custom health check script could be integrated. This script could run on each instance and publish custom metrics to CloudWatch, which in turn trigger alarms if application health degrades below an acceptable level.
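The "exceeds 80% for 5 minutes" style of alarm can be modelled as a check over the most recent datapoints. This is a deliberately simplified sketch: it requires every datapoint in the window to breach the threshold and does not model CloudWatch's "M out of N" option or missing-data handling. The state names match the three CloudWatch alarm states.

```python
def alarm_state(datapoints, threshold, evaluation_periods):
    """Return the alarm state given metric datapoints ordered oldest to newest."""
    if len(datapoints) < evaluation_periods:
        return "INSUFFICIENT_DATA"
    recent = datapoints[-evaluation_periods:]
    # Alarm only when every datapoint in the window breaches the threshold.
    return "ALARM" if all(value > threshold for value in recent) else "OK"


# Five one-minute CPU datapoints, all above 80%.
print(alarm_state([82.0, 91.5, 88.0, 85.2, 90.1], threshold=80.0, evaluation_periods=5))
```

Requiring several consecutive breaches keeps a brief spike from replacing or scaling instances unnecessarily.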

9. Describe the process of creating a custom AMI and why might you need to customize an AMI?

Creating a custom AMI involves starting with an existing AMI (often a base OS image), customizing it with the software, configurations, and security settings you need, and then creating a new AMI from that customized instance. The general process is:

- Launch an EC2 instance from a base AMI.

- Connect to the instance (e.g., via SSH).

- Install and configure the necessary software (e.g., web servers, databases, applications), configure users and permissions, and apply security patches.

- Clean up any temporary files or sensitive data.

- Create an AMI from the instance. This takes a snapshot of the instance's volumes and registers the AMI. Stopping the instance is not required; by default EC2 reboots it first so that the file system is captured in a consistent state.

Customizing AMIs is beneficial when you want to pre-install software, apply security hardening, optimize boot times, standardize configurations across your infrastructure, reduce deployment time for new instances, and ensure consistent environments. For example, you might create a custom AMI with your application server, dependencies, and monitoring tools pre-installed, saving you time and ensuring that every instance launched from that AMI has the same setup. This contributes to Infrastructure as Code (IaC) and DevOps best practices.

10. How can you use EC2 instance metadata and user data to configure your instances dynamically at launch?

EC2 instance metadata provides information about the instance itself, such as its instance ID, AMI ID, security groups, and IAM role. User data, on the other hand, is a script or set of instructions that you provide when launching an instance. By default, this script is executed once, the first time the instance boots.

To dynamically configure instances, you can use a combination of these:

- Access metadata within the user data script: The script can query the instance metadata service (using curl http://169.254.169.254/latest/meta-data/) to retrieve instance-specific information.

- Use metadata to customize configuration: Based on the metadata retrieved (e.g., instance type, environment tag), the user data script can then install specific software, configure applications, or set environment variables.

- Leverage user data for bootstrapping: The user data can include shell scripts, cloud-init directives, or other configuration management tools (like Ansible, Chef, or Puppet) to automate the installation and configuration process.

For example, a user data script might read the instance's environment tag from metadata (exposing tags through instance metadata is an opt-in setting) and then download and configure the appropriate application version for that environment. This allows you to use the same AMI for different environments (dev, test, prod) and configure them dynamically.
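
A minimal sketch of that flow in Python, using the token-based IMDSv2 requests. The environment-to-version mapping and the helper names are hypothetical, and `fetch_metadata` only works when run on an EC2 instance, so the example does not call it.

```python
import urllib.request

IMDS_BASE = "http://169.254.169.254/latest"


def token_request(ttl_seconds: int = 300) -> urllib.request.Request:
    """IMDSv2 step 1: request a short-lived session token with a PUT."""
    return urllib.request.Request(
        f"{IMDS_BASE}/api/token",
        method="PUT",
        headers={"X-aws-ec2-metadata-token-ttl-seconds": str(ttl_seconds)},
    )


def metadata_request(path: str, token: str) -> urllib.request.Request:
    """IMDSv2 step 2: read a metadata path, presenting the token."""
    return urllib.request.Request(
        f"{IMDS_BASE}/meta-data/{path}",
        headers={"X-aws-ec2-metadata-token": token},
    )


def fetch_metadata(path: str) -> str:
    """Fetch one metadata value. Only reachable from inside an instance."""
    with urllib.request.urlopen(token_request(), timeout=2) as resp:
        token = resp.read().decode()
    with urllib.request.urlopen(metadata_request(path, token), timeout=2) as resp:
        return resp.read().decode()


# Hypothetical mapping from an "Environment" tag value to a release channel.
APP_VERSION_BY_ENV = {"dev": "latest", "test": "rc", "prod": "stable"}


def app_version_for(environment: str) -> str:
    """Pick the application version to install, defaulting to the safest one."""
    return APP_VERSION_BY_ENV.get(environment, "stable")


print(app_version_for("dev"))
```

On an instance with tags in metadata enabled, the tag value could be read with `fetch_metadata("tags/instance/Environment")` and passed to `app_version_for`.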

11. What are placement groups in EC2, and how do they help in optimizing network performance for certain applications?

Placement groups in EC2 are a way to influence the placement of a set of interdependent EC2 instances. Depending on the type, they optimize for low-latency, high-throughput networking or for reducing the risk of correlated hardware failures. There are three types:

- Cluster: Instances are placed close together inside a single Availability Zone. This provides the lowest latency and highest throughput network performance. Ideal for tightly coupled applications like HPC or MPI jobs.

- Partition: Instances are spread across logical partitions, each with its own network and power. Failure in one partition won't affect others. Good for distributed and replicated workloads like Hadoop, Cassandra, or Kafka.

- Spread: Instances are placed on distinct underlying hardware. Maximizes fault tolerance and is best for applications where a small number of instances need to be kept separate from each other.

By strategically placing instances using placement groups, you can minimize latency, maximize throughput, and enhance the resilience of your applications.

12. Explain the various methods for managing and distributing SSH keys for EC2 instances in a secure manner.

Securely managing and distributing SSH keys for EC2 instances involves several methods. One common approach is EC2 Instance Connect, which pushes a short-lived public key to the instance for a single connection, so no long-lived keys need to be stored there. AWS Systems Manager Session Manager offers a keyless way to access instances: sessions are started from the AWS console or CLI, authorized through IAM permissions, and require no open inbound SSH port. Another method is storing SSH public keys in AWS Secrets Manager and retrieving them during instance startup via user data or configuration management tools like Ansible or Chef. The Instance Metadata Service (IMDS) also exposes the public key of the key pair chosen at launch; make sure IMDSv2 is enforced for better protection against SSRF attacks. For managing authorized keys on a fleet of EC2 instances, tools like HashiCorp Vault or configuration management tools can be used to store and distribute keys securely in an automated way.

When using any method, it is crucial to rotate keys regularly and grant the least privilege necessary. Avoid storing private keys directly in user data or any publicly accessible locations. Always monitor SSH access attempts and audit key usage to detect and respond to potential security breaches. Centralized key management systems provide better control and visibility over SSH key usage across the entire infrastructure.

13. How do you handle long-running processes on EC2 instances so that they are fault-tolerant and can survive instance failures?

To handle long-running processes on EC2 instances with fault tolerance, I'd use a combination of services. Primarily, I would leverage services like AWS SQS (Simple Queue Service) to decouple the process from the EC2 instance. The EC2 instance would pick up tasks from the queue and execute them.

For fault tolerance, I would run the workers in an Auto Scaling Group (ASG), with an Elastic Load Balancer (ELB) in front of any request-serving components, so that if an instance fails, another one automatically takes its place. Because a message is only deleted from the queue after it has been processed, a task that was in flight on the failed instance becomes visible again after its visibility timeout and is picked up by another worker. Furthermore, regularly backing up the state using AWS S3 or EBS snapshots would provide an additional layer of protection against data loss. Finally, I'd monitor the processes using CloudWatch alarms to trigger actions or alerts in case of failures.
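
The reason a queue makes this fault-tolerant can be shown with a tiny in-memory stand-in for SQS. This is a simulation written for illustration, not the SQS API: the class, its method names, and the explicit `now` argument are all assumptions of the sketch. It mimics one behavior only, namely that a received message is hidden for a visibility timeout and reappears unless it is deleted.

```python
class InMemoryQueue:
    """Simplified stand-in for a queue with a visibility timeout."""

    def __init__(self, visibility_timeout: float):
        self.visibility_timeout = visibility_timeout
        self._messages = {}      # message id -> body
        self._hidden_until = {}  # message id -> time it becomes visible again
        self._next_id = 0

    def send(self, body: str) -> None:
        self._messages[self._next_id] = body
        self._next_id += 1

    def receive(self, now: float):
        """Return (id, body) of the first visible message, or None."""
        for msg_id, body in self._messages.items():
            if self._hidden_until.get(msg_id, 0.0) <= now:
                self._hidden_until[msg_id] = now + self.visibility_timeout
                return msg_id, body
        return None

    def delete(self, msg_id: int) -> None:
        """Acknowledge a message after it has been fully processed."""
        self._messages.pop(msg_id, None)
        self._hidden_until.pop(msg_id, None)


queue = InMemoryQueue(visibility_timeout=30)
queue.send("resize-image-42")

# Worker A receives the task, then its instance dies before deleting it.
first = queue.receive(now=0)

# While the message is hidden, other workers see nothing.
during_timeout = queue.receive(now=10)

# After the timeout, worker B receives the same task and completes it.
retry = queue.receive(now=31)
queue.delete(retry[0])

print(first, during_timeout, retry, queue.receive(now=100))
```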

14. Discuss the challenges of running stateful applications on EC2 instances, and how you would address them?

Running stateful applications on EC2 instances presents challenges primarily around data persistence, consistency, and recovery. Unlike stateless applications, stateful apps rely on consistent data storage that survives instance failures or scaling events. Keeping data on storage attached to a single instance is problematic: instance store volumes are ephemeral and lose their data when the instance stops or fails, while EBS volumes persist but take time to detach and reattach to another instance, and data can still be lost if snapshots aren't recent or automated. Data consistency across multiple instances also requires careful management, especially during scaling operations.

To address these challenges, I would utilize managed services like Amazon RDS, DynamoDB, or ElastiCache depending on the specific data storage needs. These services handle replication, backups, and failover automatically, simplifying data management. For applications requiring file system semantics, Amazon EFS offers a network file system that can be mounted across multiple EC2 instances, ensuring data persistence and sharing. If using EBS volumes, implementing a robust backup and recovery strategy with frequent snapshots and automated instance recovery is crucial. Containerizing the stateful application with orchestration tools such as Kubernetes or ECS can also improve manageability, automating deployment, scaling, and recovery processes.

15. What are the advantages and disadvantages of using Spot Instances for your workload, and how can you mitigate the risks?

Spot Instances offer significant cost savings compared to On-Demand Instances, often up to 90%, making them ideal for fault-tolerant, flexible workloads. They provide access to unused EC2 capacity. However, the primary disadvantage is that Spot Instances can be interrupted with a 2-minute warning when EC2 needs the capacity back, or if the Spot price rises above the maximum price you have set. This makes them unsuitable for critical, time-sensitive applications that cannot tolerate interruptions.

To mitigate the risks, use Spot Fleet or EC2 Fleet to diversify across instance types and Availability Zones, increasing the likelihood of acquiring and maintaining capacity. Implement checkpointing and state saving mechanisms to minimize data loss upon interruption. Design your application to be stateless and fault-tolerant, allowing it to handle interruptions gracefully and resume operations on new instances. Consider using a combination of On-Demand, Reserved, and Spot Instances to optimize cost and availability. Auto Scaling groups and tools like AWS Batch are well suited for managing Spot Instance workloads.
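
To act on the 2-minute warning, a process on the instance can poll the instance metadata path `spot/instance-action`, which returns a small JSON document once an interruption is scheduled (and a 404 before that). The sketch below only parses such a document and computes the time left for checkpointing; the function names and the sample timestamps are illustrative.

```python
import json
from datetime import datetime, timezone
from typing import Tuple


def parse_spot_instance_action(body: str) -> Tuple[str, datetime]:
    """Parse the interruption notice into (action, UTC deadline)."""
    notice = json.loads(body)
    deadline = datetime.strptime(
        notice["time"], "%Y-%m-%dT%H:%M:%SZ"
    ).replace(tzinfo=timezone.utc)
    return notice["action"], deadline


def seconds_remaining(deadline: datetime, now: datetime) -> float:
    """Time left to checkpoint and drain, never negative."""
    return max(0.0, (deadline - now).total_seconds())


sample = '{"action": "terminate", "time": "2017-09-18T08:22:00Z"}'
action, deadline = parse_spot_instance_action(sample)
now = datetime(2017, 9, 18, 8, 20, 30, tzinfo=timezone.utc)
print(action, seconds_remaining(deadline, now))
```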

16. Describe how you would implement a blue/green deployment strategy using EC2 instances?

Blue/Green deployment on EC2 involves running two identical environments: 'Blue' (the current production) and 'Green' (the new version). To implement this, I'd start by creating two EC2 Auto Scaling groups, one for Blue and one for Green. I would then deploy the new application version to the Green environment. After thorough testing of the Green environment, I would switch traffic from the Blue environment to the Green environment. This can be achieved using a load balancer like AWS ALB, updating the target groups associated with the ALB to point to the Green instances.

Post-switch, the Blue environment is kept idle as a rollback option. Monitoring is crucial throughout the process to detect any issues. Once the Green environment is stable and performing as expected, the Blue environment can either be updated to the Green version or decommissioned. Important considerations include database migration strategies and ensuring zero downtime during the switchover, potentially using connection draining on the load balancer.
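
One way to make the switch gradual is a weighted forward action on the ALB listener, shifting a percentage of traffic to Green before cutting over fully. The sketch below builds the action structure accepted as `DefaultActions` by the Elastic Load Balancing v2 ModifyListener API; the helper name and the target group ARNs are placeholders, and nothing is sent to AWS.

```python
def weighted_forward_action(blue_tg_arn: str, green_tg_arn: str,
                            green_percent: int) -> list:
    """Build a forward action that splits traffic between Blue and Green."""
    if not 0 <= green_percent <= 100:
        raise ValueError("green_percent must be between 0 and 100")
    return [{
        "Type": "forward",
        "ForwardConfig": {
            "TargetGroups": [
                {"TargetGroupArn": blue_tg_arn, "Weight": 100 - green_percent},
                {"TargetGroupArn": green_tg_arn, "Weight": green_percent},
            ]
        },
    }]


# Placeholder ARNs for illustration only.
canary = weighted_forward_action("arn:blue-tg", "arn:green-tg", 10)
cutover = weighted_forward_action("arn:blue-tg", "arn:green-tg", 100)
print(canary[0]["ForwardConfig"]["TargetGroups"])
```

Rolling back is the same call with `green_percent=0`, which is why keeping Blue idle for a while is cheap insurance.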

17. How can you use AWS Systems Manager (SSM) to manage and automate tasks on your EC2 instances?

AWS Systems Manager (SSM) enables managing and automating tasks on EC2 instances via several features. SSM Agent, installed on the instances, allows interaction with SSM services. One way is using Run Command which lets you remotely and securely execute commands or scripts on instances. You can specify a document (either AWS-provided or custom) containing the commands to run. Another way is using State Manager to enforce configuration consistency by associating documents with targets (instances or groups of instances). This allows you to automate tasks like installing software, patching systems, or starting services at specified schedules or events.

SSM also offers Patch Manager to automate OS and application patching for enhanced security and compliance. Furthermore, Automation simplifies complex tasks involving multiple AWS services. SSM also integrates with other AWS services such as CloudWatch, providing centralized logging and monitoring.
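
As a rough illustration of Run Command, the sketch below builds the arguments for the SSM SendCommand API using the AWS-provided AWS-RunShellScript document and tag-based targeting. The tag key, tag value, and shell commands are hypothetical, and the code only constructs the request.

```python
def run_shell_command_params(tag_key: str, tag_value: str, commands) -> dict:
    """Build SendCommand arguments targeting instances by tag."""
    return {
        "DocumentName": "AWS-RunShellScript",
        "Targets": [{"Key": f"tag:{tag_key}", "Values": [tag_value]}],
        "Parameters": {"commands": list(commands)},
    }


params = run_shell_command_params(
    "Role", "web",                      # hypothetical tag
    ["systemctl restart nginx"],        # hypothetical command
)
print(params["Targets"])
```

With boto3, these arguments could be passed as `boto3.client("ssm").send_command(**params)`, provided the instances run SSM Agent and have an instance profile that allows Systems Manager.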

18. Explain how you would configure network interfaces and routing tables to set up a multi-tier architecture on EC2?

To configure a multi-tier architecture on EC2, I'd start by creating security groups for each tier (e.g., web, application, database). Network interfaces are configured by assigning each EC2 instance in each tier a private IP address within a Virtual Private Cloud (VPC). The web tier's security group would allow inbound traffic from the internet (e.g., port 80, 443), while the application tier only allows traffic from the web tier, and the database tier only allows traffic from the application tier. This restricts lateral movement.

Routing tables are configured to control traffic flow within the VPC. A public subnet, where the web tier resides, has a route to an Internet Gateway for inbound and outbound internet access. Private subnets for the application and database tiers do not have direct internet access but can use a NAT Gateway or NAT instance in the public subnet for outbound traffic if needed (e.g., for updates). Every route table contains a local route covering the VPC's CIDR range, so the web tier can reach the application tier via its private IP addresses, and the application tier can reach the database tier, also via private IPs. Isolation between tiers therefore comes from placing them in separate subnets and from the security group rules (and, optionally, network ACLs) rather than from custom routes.
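
The tier-to-tier security group chain can be sketched as data. The structures below follow the `IpPermissions` format used by the EC2 AuthorizeSecurityGroupIngress API; the group IDs and the application and database ports are placeholders chosen for illustration.

```python
def ingress_from_internet(port: int) -> dict:
    """Allow TCP traffic on one port from any IPv4 address."""
    return {"IpProtocol": "tcp", "FromPort": port, "ToPort": port,
            "IpRanges": [{"CidrIp": "0.0.0.0/0"}]}


def ingress_from_group(port: int, source_group_id: str) -> dict:
    """Allow TCP traffic on one port only from members of another group."""
    return {"IpProtocol": "tcp", "FromPort": port, "ToPort": port,
            "UserIdGroupPairs": [{"GroupId": source_group_id}]}


# Placeholder security group IDs, one per tier.
rules = {
    "sg-web": [ingress_from_internet(80), ingress_from_internet(443)],
    "sg-app": [ingress_from_group(8080, "sg-web")],  # only the web tier
    "sg-db": [ingress_from_group(3306, "sg-app")],   # only the app tier
}

for group_id, permissions in rules.items():
    print(group_id, [p["FromPort"] for p in permissions])
```

Referencing a source security group instead of an IP range means the rule keeps working as instances in the upstream tier are replaced or scaled.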

19. How do you handle patching and updates on EC2 instances to maintain security and stability?

To handle patching and updates on EC2 instances, I typically employ a multi-layered approach. Firstly, I leverage AWS Systems Manager (SSM) Patch Manager to automate the patching process for both operating systems and applications. This involves defining patch baselines that specify which patches should be applied and scheduling maintenance windows to minimize downtime. I also use SSM Automation documents for more complex update scenarios.

Secondly, I utilize Amazon Inspector to continuously assess EC2 instances for vulnerabilities and security exposures. This helps identify missing patches and misconfigurations. Finally, I regularly review security advisories and patch notes from OS vendors and application providers to stay informed about potential security risks and proactively apply necessary updates. AMIs are periodically rebuilt with the latest patches to serve as golden images for new instances.

20. What are some best practices for optimizing the cost of running EC2 instances, and what tools can help?

To optimize EC2 costs, several best practices can be followed. Right-sizing instances based on actual resource utilization is crucial; use tools like CloudWatch to monitor CPU and network usage, and memory usage if the CloudWatch agent is installed. Consider using EC2 Instance Savings Plans or Reserved Instances for predictable workloads to significantly reduce costs compared to On-Demand pricing. Spot Instances offer substantial discounts but are suitable only for fault-tolerant applications. Also, regularly review and delete unused or underutilized instances.

Tools that help include AWS Cost Explorer for cost analysis and forecasting, AWS Trusted Advisor for recommendations on cost optimization, and AWS Compute Optimizer for instance right-sizing suggestions. CloudWatch provides metrics for monitoring resource utilization, and third-party tools like Cloudability or CloudCheckr offer comprehensive cost management features across multiple cloud environments. Consider automating instance lifecycle management using AWS Auto Scaling to scale resources dynamically based on demand.

21. How would you go about troubleshooting high CPU utilization on an EC2 instance running a web application?

To troubleshoot high CPU utilization on an EC2 instance running a web application, I would start by connecting to the instance using SSH and using tools like top, htop, or pidstat to identify the processes consuming the most CPU. Once identified, I'd analyze the application logs to check for errors or unusual activity correlated with the high CPU usage. If the application uses a database, I'd also check the database server for slow queries or locking issues.

Next, I would investigate the application code itself, looking for potential performance bottlenecks such as inefficient algorithms, excessive I/O operations, or memory leaks. Profiling tools specific to the application's language (e.g., Python's cProfile, Java's VisualVM) can help pinpoint problematic code sections. Scaling the instance vertically or horizontally might be necessary if the application is consistently under high load and optimization efforts are insufficient.
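
For a Python application, the profiling step might look like the following sketch, which uses the standard library's cProfile and pstats. The `slow_sum_of_squares` function is a made-up stand-in for a CPU-heavy request handler.

```python
import cProfile
import io
import pstats


def slow_sum_of_squares(n: int) -> int:
    """Deliberately CPU-bound stand-in for a hot code path."""
    total = 0
    for i in range(n):
        total += i * i
    return total


def profile_call(func, *args) -> str:
    """Run func under cProfile and return the top entries as text."""
    profiler = cProfile.Profile()
    profiler.enable()
    func(*args)
    profiler.disable()
    out = io.StringIO()
    stats = pstats.Stats(profiler, stream=out)
    stats.sort_stats("cumulative").print_stats(5)
    return out.getvalue()


report = profile_call(slow_sum_of_squares, 200_000)
print(report)
```

The functions at the top of the cumulative-time listing are the first candidates for optimization.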

22. Explain how to integrate EC2 instances with other AWS services like S3, RDS, and Lambda in a secure and efficient manner.

To integrate EC2 instances securely and efficiently with other AWS services, IAM roles are crucial. Assign an IAM role to your EC2 instance granting it the necessary permissions to access services like S3, RDS, or Lambda. This eliminates the need to store AWS credentials directly on the instance, enhancing security. For instance, if an EC2 instance needs to store files in S3, it can be assigned a role that has the s3:PutObject permission.

For efficient communication, consider using VPC endpoints. VPC endpoints allow your EC2 instances to access AWS services privately, without traversing the public internet, which reduces latency and improves security. For connecting to RDS from EC2 within the same VPC, security groups should be configured to allow traffic only between the EC2 instance and the RDS instance on the specific RDS port (e.g., 3306 for MySQL). For Lambda, your EC2 instance can invoke Lambda functions using the AWS SDK, provided the IAM role associated with the EC2 instance has the lambda:InvokeFunction permission.
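
A least-privilege policy for the S3 upload case could look like the sketch below, which generates the policy document as JSON. The bucket name and prefix are placeholders; the resulting document would be attached to the instance's IAM role.

```python
import json


def s3_put_only_policy(bucket: str, prefix: str = "") -> dict:
    """Policy allowing uploads to one bucket (optionally one prefix) only."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Action": ["s3:PutObject"],
            "Resource": f"arn:aws:s3:::{bucket}/{prefix}*",
        }],
    }


# Placeholder bucket and prefix for illustration.
policy = s3_put_only_policy("example-upload-bucket", "uploads/")
print(json.dumps(policy, indent=2))
```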

23. Discuss how you would design a disaster recovery plan for your EC2-based applications?

A disaster recovery (DR) plan for EC2-based applications prioritizes minimizing downtime and data loss. My approach would involve several key components. First, regularly back up critical data using services like EBS snapshots, which can be automated and stored in geographically separate regions. Second, implement infrastructure as code (IaC) using tools like Terraform or CloudFormation to easily recreate the entire environment. Third, use AWS Route 53 for DNS failover, configuring health checks to automatically redirect traffic to a backup region in case of a primary region failure.

Furthermore, the DR plan would include regular testing to ensure its effectiveness. This includes practicing failover scenarios and validating data restoration procedures. I'd also leverage auto-scaling to ensure that instances in the DR region can handle the production load and use AWS CloudWatch to monitor key metrics to detect and respond to issues quickly. The recovery time objective (RTO) and recovery point objective (RPO) will determine the specific configuration and cost trade-offs for the DR strategy.
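
Part of DR testing can be automated. As a small sketch, the check below verifies that the newest snapshot is recent enough to satisfy the RPO. The function name and the sample timestamps are illustrative; in practice the snapshot times would come from the EC2 API.

```python
from datetime import datetime, timedelta, timezone


def meets_rpo(snapshot_times, rpo: timedelta, now: datetime) -> bool:
    """True if the most recent snapshot is no older than the RPO."""
    if not snapshot_times:
        return False  # no backups means the RPO cannot be met
    return now - max(snapshot_times) <= rpo


now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
snapshots = [
    datetime(2024, 1, 1, 6, 0, tzinfo=timezone.utc),
    datetime(2024, 1, 1, 10, 30, tzinfo=timezone.utc),
]
print(meets_rpo(snapshots, timedelta(hours=2), now))
print(meets_rpo(snapshots, timedelta(hours=1), now))
```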

24. What strategies can you use to optimize disk I/O performance for EC2 instances, particularly for database workloads?

Optimizing disk I/O for EC2 instances, especially for database workloads, involves several strategies. Firstly, provisioning EBS volumes with sufficient IOPS (Input/Output Operations Per Second) is crucial. Consider using Provisioned IOPS (io1 or io2) or General Purpose SSD (gp3) volumes based on your performance needs. RAID configurations (RAID 0 for performance) can also be implemented, but with careful consideration for data redundancy. Selecting an EBS-optimized instance type ensures dedicated bandwidth between the EC2 instance and EBS volumes; most current-generation types are EBS-optimized by default, while on older types it is enabled through the instance's EbsOptimized setting.

Secondly, optimize the operating system and database configuration. This includes using appropriate file system types (e.g., XFS), tuning the database buffer pool size, and enabling caching mechanisms. Monitoring disk I/O metrics using tools like iostat or CloudWatch helps identify bottlenecks. For temporary files, consider using instance store volumes or RAM disks (tmpfs) to reduce load on EBS volumes. Also, consider using EBS snapshots for backups to avoid performance impact during the backup process.
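
When sizing a volume, it helps to remember that IOPS and throughput are linked by the I/O size, and whichever limit is reached first becomes the bottleneck. The arithmetic is sketched below; the figures of 3,000 IOPS and 125 MiB/s are used as example limits (they correspond to the gp3 baseline), and the function is a simplification that ignores instance-level limits.

```python
def effective_throughput_mib_s(iops: int, io_size_kib: int,
                               throughput_limit_mib_s: float) -> float:
    """Throughput actually achievable given an IOPS and a throughput cap."""
    iops_bound = iops * io_size_kib / 1024  # MiB/s if only IOPS limited us
    return min(iops_bound, throughput_limit_mib_s)


# Small 16 KiB I/Os: the IOPS limit binds first.
print(effective_throughput_mib_s(3000, 16, 125))   # 46.875

# Larger 64 KiB I/Os: the throughput limit binds first.
print(effective_throughput_mib_s(3000, 64, 125))   # 125
```

A database doing small random reads is therefore usually helped by more IOPS, while a workload doing large sequential scans is helped by more throughput.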

25. How can you leverage EC2 Auto Scaling groups with lifecycle hooks to perform custom actions before or after instances launch or terminate?

EC2 Auto Scaling groups, combined with lifecycle hooks, enable you to execute custom actions during instance launch or termination. Lifecycle hooks pause the Auto Scaling process, allowing you to perform tasks before an instance enters service (launching) or before it's terminated (terminating).

You can leverage this by:

- Defining Lifecycle Hooks: Create hooks specifying the event (instance launching or instance terminating) and the notification target (e.g., an SNS topic or SQS queue).

- Executing Custom Actions: When an instance enters a lifecycle state, the hook triggers. A script on the instance (using user data) or an external system (listening to the SNS/SQS notification) can then perform actions like installing software, downloading configurations, backing up data, or deregistering the instance from a load balancer.

- Completing the Lifecycle Action: After completing the actions, you must signal the Auto Scaling group to continue the lifecycle transition by explicitly completing the lifecycle action through the AWS CLI/SDK. If no signal arrives, the hook times out after a defined period and its default result is applied.
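
The completion step can be sketched as follows. The function builds the arguments for the Auto Scaling CompleteLifecycleAction API, reporting CONTINUE when the custom work succeeded and ABANDON otherwise. The group, hook, and instance names are placeholders, and no AWS call is made.

```python
def complete_lifecycle_params(asg_name: str, hook_name: str,
                              instance_id: str, succeeded: bool) -> dict:
    """Build the signal that ends a lifecycle hook's wait state."""
    return {
        "AutoScalingGroupName": asg_name,
        "LifecycleHookName": hook_name,
        "InstanceId": instance_id,
        # CONTINUE lets the transition proceed; ABANDON gives up on it.
        "LifecycleActionResult": "CONTINUE" if succeeded else "ABANDON",
    }


# Placeholder names for illustration.
params = complete_lifecycle_params(
    "web-asg", "install-agent-hook", "i-0123456789abcdef0", succeeded=True
)
print(params["LifecycleActionResult"])
```

With boto3, these arguments could be passed as `boto3.client("autoscaling").complete_lifecycle_action(**params)`.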

Advanced AWS EC2 interview questions

1. How would you optimize EC2 costs for an application that experiences peak usage only during specific hours of the day?

To optimize EC2 costs for an application with peak usage during specific hours, several strategies can be implemented. The most effective would be to use EC2 Auto Scaling with scheduled scaling. Configure the Auto Scaling group to increase the number of instances during peak hours and decrease it during off-peak hours. This ensures you only pay for the resources you need when you need them. Consider using a combination of purchasing options, where cheaper capacity (e.g., Spot Instances) is used during off-peak hours and Reserved or On-Demand Instances are used during peak hours for stability.

Another approach is to leverage AWS Lambda for certain parts of the application that are triggered by events and can scale automatically without managing EC2 instances directly. You could also explore using AWS Savings Plans or Reserved Instances for the baseline capacity required, which provides significant cost savings compared to on-demand pricing. Finally, regularly review EC2 instance utilization metrics and right-size instances to ensure you're not over-provisioning. Consider using tools like AWS Compute Optimizer to identify underutilized instances.
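
Scheduled scaling for a predictable daily peak might be described as in the sketch below, which builds arguments for the Auto Scaling PutScheduledUpdateGroupAction API. The group name, cron expressions, and capacities are hypothetical values for a weekday 08:00 to 20:00 peak; recurrence schedules are interpreted in UTC unless a time zone is specified.

```python
def scheduled_action(asg_name: str, action_name: str, cron: str,
                     min_size: int, desired: int, max_size: int) -> dict:
    """Build one recurring scheduled scaling action."""
    if not min_size <= desired <= max_size:
        raise ValueError("expected min_size <= desired <= max_size")
    return {
        "AutoScalingGroupName": asg_name,
        "ScheduledActionName": action_name,
        "Recurrence": cron,          # standard five-field cron expression
        "MinSize": min_size,
        "DesiredCapacity": desired,
        "MaxSize": max_size,
    }


# Hypothetical schedule: scale up before the peak, down after it.
actions = [
    scheduled_action("web-asg", "scale-up-for-peak", "0 8 * * 1-5", 4, 10, 12),
    scheduled_action("web-asg", "scale-down-off-peak", "0 20 * * 1-5", 2, 2, 4),
]
for action in actions:
    print(action["ScheduledActionName"], action["DesiredCapacity"])
```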

2. Describe a scenario where you would choose a Dedicated Host over a Dedicated Instance, and explain why.

I would choose a Dedicated Host over a Dedicated Instance when I need maximum control and visibility into the underlying hardware. Specifically, if I have strict compliance requirements that mandate knowing the exact physical server my workloads are running on, a Dedicated Host is the better choice. This is because Dedicated Hosts allow you to bring your own server-bound software licenses, whereas with Dedicated Instances, licensing can be more complex and less transparent.

For example, if I'm running a database that's licensed per physical core and I need to ensure full compliance to avoid audits and penalties, a Dedicated Host gives me that assurance. While a Dedicated Instance provides hardware isolation, I don't get full visibility or control over the physical server, making it difficult to comply with such stringent licensing requirements. In such scenarios, the transparency offered by a Dedicated Host outweighs the potential cost savings of a Dedicated Instance.

3. Explain how you can automate the patching of EC2 instances while minimizing downtime.

To automate patching EC2 instances with minimal downtime, I'd use AWS Systems Manager (SSM). First, create a maintenance window in SSM, defining a schedule for patching. Then, use SSM Patch Manager to define patch baselines and approval rules. Before applying patches to production instances, test them on a staging environment. For zero downtime during patching, implement a rolling deployment strategy using EC2 Auto Scaling groups and a load balancer. Patch instances in batches, ensuring the load balancer only sends traffic to healthy instances.

Specifically, I would run the AWS-RunPatchBaseline SSM document with the Install operation against one batch of instances at a time. Each batch is first taken out of rotation (for example, by deregistering the instances from the load balancer or moving them to standby in the ASG), patched while still running, rebooted if the patches require it, and then returned to service. After each batch is completed, health checks through the load balancer are performed before moving to the next batch, which ensures minimal impact on the application availability.
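
The batching itself is simple to sketch. The code below splits a fleet into fixed-size batches and builds, for each batch, SendCommand arguments that run AWS-RunPatchBaseline with the Install operation. The instance IDs and batch size are placeholders, and the draining and health-check steps between batches are left out.

```python
def rolling_batches(instance_ids, batch_size: int) -> list:
    """Split instances into consecutive batches of at most batch_size."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    ids = list(instance_ids)
    return [ids[i:i + batch_size] for i in range(0, len(ids), batch_size)]


def patch_command_params(batch) -> dict:
    """Build SendCommand arguments that install patches on one batch."""
    return {
        "DocumentName": "AWS-RunPatchBaseline",
        "InstanceIds": list(batch),
        "Parameters": {"Operation": ["Install"]},
    }


fleet = ["i-01", "i-02", "i-03", "i-04", "i-05"]  # placeholder IDs
for batch in rolling_batches(fleet, 2):
    # In a real rollout: drain the batch, send the command, wait for it
    # to finish, and confirm health checks before continuing.
    print(patch_command_params(batch)["InstanceIds"])
```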

4. How would you design a disaster recovery strategy for EC2 instances across multiple AWS regions?

A multi-region disaster recovery (DR) strategy for EC2 involves replicating instances and data to a secondary AWS region. Key components include regularly backing up EC2 instance data using EBS snapshots or AMIs and copying them to the DR region. A service such as AWS Elastic Disaster Recovery (the successor to CloudEndure Disaster Recovery) can automate the replication process. Because load balancers are regional, use Route 53 health checks with DNS failover (or AWS Global Accelerator) to route traffic to a load balancer in the DR region in case of a primary region outage.

For databases, use cross-region replication features provided by RDS or configure backups to be stored in S3 buckets replicated across regions. Define Recovery Point Objectives (RPO) and Recovery Time Objectives (RTO) to guide the backup and replication frequency. Regularly test the DR plan through failover exercises to ensure its effectiveness and identify potential issues.

5. What are the key considerations when migrating a large, complex on-premises application to EC2 instances?

Migrating a large, complex on-premises application to EC2 requires careful planning and consideration. Key areas include: Application Compatibility: Ensure your application and its dependencies are compatible with the EC2 environment (OS, libraries). Network Configuration: Design your VPC, subnets, security groups, and routing tables for optimal performance and security. Data Migration: Plan a strategy for migrating your data, whether using AWS Database Migration Service (DMS), Storage Gateway, or other methods. Consider downtime and data consistency. Performance and Scalability: Choose the right EC2 instance types based on your application's resource requirements. Implement auto-scaling to handle fluctuating workloads.

Other considerations include Security: Implement robust security measures like IAM roles, encryption, and regular security audits. Monitoring and Logging: Set up comprehensive monitoring and logging using CloudWatch to track performance and troubleshoot issues. Cost Optimization: Optimize your EC2 instance sizes and usage patterns to minimize costs. Use reserved instances or savings plans where applicable. Testing and Validation: Thoroughly test your application in the EC2 environment to ensure it functions as expected before going live. Also important is High Availability and Disaster Recovery: Design for high availability using multiple Availability Zones and implement a disaster recovery plan.

6. Explain the differences between EC2 placement groups and when you would use each type.

EC2 Placement Groups influence how instances are physically placed on underlying hardware. There are three types: Cluster, Spread, and Partition.

- Cluster Placement Groups: Instances are packed close together inside a single Availability Zone. This provides low latency and high network throughput, suitable for HPC or tightly coupled applications. However, this carries a higher risk of simultaneous failure as instances share infrastructure.

- Spread Placement Groups: Instances are spread across distinct underlying hardware. This reduces the risk of correlated failures and is suitable for critical applications needing high availability. Each group is limited to a maximum of seven running instances per Availability Zone.

- Partition Placement Groups: Instances are spread across multiple partitions within an Availability Zone. Each partition has its own set of racks. These are suitable for large distributed workloads like HDFS, Cassandra, or Kafka where you need to isolate failure domains. They offer the distribution benefits of spread groups with the ability to have more instances in a single Availability Zone and provide visibility into the partitions.

7. How do you monitor the health and performance of EC2 instances in a production environment, and what metrics are most important?

I monitor EC2 instance health and performance using a combination of CloudWatch metrics, system logs, and potentially third-party monitoring tools. Key metrics include CPU Utilization, Disk I/O (Read/Write), Network I/O (Bytes In/Out), and Status Checks (System and Instance), plus Memory Utilization, which EC2 does not report by default and which must be published by the CloudWatch agent. High CPU or memory utilization, excessive disk I/O, or network bottlenecks can indicate performance issues. Failed status checks are critical and indicate potential instance problems requiring immediate attention.

Beyond CloudWatch, I'd ship logs to a central location and review them to identify anomalies, errors, or other unexpected behavior. For a more granular view, I would use tools like Prometheus and Grafana, or Datadog or New Relic, which provide dashboards and alerting based on custom metrics. Alerts would be set up for critical thresholds on the mentioned metrics, notifying the on-call team of potential issues.
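
As an example of a custom metric, the sketch below computes memory utilization from the contents of Linux's /proc/meminfo and wraps it in the arguments for CloudWatch's PutMetricData API. The namespace and metric name are made up for this example, and the CloudWatch agent is the more common way to collect this metric in practice.

```python
def memory_used_percent(meminfo_text: str) -> float:
    """Compute used memory as a percentage from /proc/meminfo contents."""
    values = {}
    for line in meminfo_text.splitlines():
        key, _, rest = line.partition(":")
        if rest.strip():
            values[key.strip()] = int(rest.split()[0])  # first field, in kB
    total = values["MemTotal"]
    available = values["MemAvailable"]
    return 100.0 * (total - available) / total


def memory_metric_params(instance_id: str, used_percent: float) -> dict:
    """Build PutMetricData arguments for one memory data point."""
    return {
        "Namespace": "Custom/EC2",  # hypothetical namespace
        "MetricData": [{
            "MetricName": "MemoryUsedPercent",  # hypothetical metric name
            "Dimensions": [{"Name": "InstanceId", "Value": instance_id}],
            "Value": used_percent,
            "Unit": "Percent",
        }],
    }


sample = (
    "MemTotal:        8000000 kB\n"
    "MemFree:         1000000 kB\n"
    "MemAvailable:    2000000 kB\n"
)
used = memory_used_percent(sample)
print(used)  # 75.0
print(memory_metric_params("i-0123456789abcdef0", used)["MetricData"][0]["Unit"])
```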

8. Describe how you would implement a blue/green deployment strategy for EC2-based applications.

Blue/Green deployment for EC2 applications involves running two identical environments: Blue (the current production) and Green (the new version). I would create a new EC2 Auto Scaling group (Green) with the updated application version, configured behind a load balancer. After deploying the new version to the Green environment, I would thoroughly test it. Then, I would update the load balancer to direct traffic from the Blue environment to the Green environment. This could be achieved by modifying the listener rules, or changing target groups.

Finally, after verifying the Green environment is stable and performing as expected, the Blue environment can be kept on standby for potential rollback, or decommissioned. Monitoring tools would be crucial throughout the process to detect and resolve any issues immediately. CloudFormation or Terraform could be used to automate the entire process to reduce manual intervention and ensure consistency.

9. How can you ensure that sensitive data stored on EC2 instances is protected at rest and in transit?

To protect sensitive data on EC2 instances at rest, use encryption. AWS Key Management Service (KMS) can manage encryption keys. Encrypt EBS volumes using KMS keys. For data in transit, use TLS/SSL to encrypt communication between the instance and other services or users. Consider using AWS Certificate Manager to provision and manage SSL/TLS certificates. Further protect data in transit by limiting network access using Security Groups to allow only necessary ports and protocols.

Additionally, regularly audit and monitor access to sensitive data. Utilize IAM roles and policies to grant least-privilege access. Consider data masking or tokenization to further protect sensitive information when possible. Implement a robust key rotation policy for KMS keys.
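
Encryption at rest for a new instance's volume can be requested at launch time. The sketch below builds one entry of the `BlockDeviceMappings` parameter accepted by the EC2 RunInstances API, with encryption enabled under a customer-managed KMS key. The device name, size, and key alias are placeholders.

```python
def encrypted_volume_mapping(device_name: str, size_gib: int,
                             kms_key_id: str) -> dict:
    """Describe an encrypted gp3 volume to attach at instance launch."""
    return {
        "DeviceName": device_name,
        "Ebs": {
            "VolumeSize": size_gib,
            "VolumeType": "gp3",
            "Encrypted": True,
            "KmsKeyId": kms_key_id,
            "DeleteOnTermination": True,
        },
    }


# Placeholder device name and KMS key alias for illustration.
mapping = encrypted_volume_mapping("/dev/xvda", 50, "alias/example-ebs-key")
print(mapping["Ebs"]["Encrypted"])
```

Enabling EBS encryption by default for the account and region is a complementary safeguard, since it covers volumes created without an explicit setting.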

10. Explain how you would integrate EC2 instances with other AWS services like S3, RDS, and Lambda.

EC2 instances can integrate with various AWS services through IAM roles, allowing secure access without hardcoding credentials. For example, to access S3, an EC2 instance needs an IAM role with S3 permissions. The AWS SDK (e.g., boto3 for Python) can then be used to interact with S3 using the instance's IAM role credentials. For RDS, the same role can authorize the instance to retrieve database credentials from Secrets Manager, so that passwords are not stored on the instance. For integrating with Lambda, an EC2 instance can invoke Lambda functions via the AWS SDK or AWS CLI using the instance's IAM role. You can also set up EventBridge rules to trigger Lambda functions based on EC2 events, such as instance state changes.

11. What are the advantages and disadvantages of using EC2 Image Builder for creating custom AMIs?

EC2 Image Builder offers several advantages. It automates the AMI creation process, reducing manual effort and potential errors. It enhances security by allowing you to harden images using built-in security policies and compliance checks. Image Builder also simplifies patching and updates, ensuring your AMIs are always up-to-date. Version control and centralized management are also key benefits, improving consistency across your infrastructure.

However, there are some disadvantages. Initial setup can be complex, requiring you to learn the Image Builder service and its components. Customization options, while powerful, can be overwhelming for simple use cases. Debugging image build failures can be challenging, requiring you to examine logs and troubleshoot build processes. Finally, while integrated with AWS services, it might require additional configuration to integrate with existing CI/CD pipelines outside of AWS.

12. How would you troubleshoot a situation where an EC2 instance is experiencing high CPU utilization or memory pressure?

To troubleshoot high CPU utilization or memory pressure on an EC2 instance, I would start by identifying the process or processes consuming the most resources. I'd use tools like top, htop, or ps within the instance to get a real-time view of CPU and memory usage by process. For memory-specific issues, free -m or vmstat can provide insights.

Once the problematic process is identified, the next step is to analyze its behavior. This might involve examining application logs, profiling the code (if it's a custom application), or checking database queries for inefficiencies. For CPU spikes, tools like perf or specialized profilers (e.g., for Java, Python) can pinpoint bottlenecks. If the instance is undersized, scaling up to a larger instance type could provide immediate relief. Also consider autoscaling to add more instances during peak load periods. If memory pressure is due to caching, evaluate cache eviction policies and consider offloading caching to a dedicated service like ElastiCache.
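The first step above (finding the heaviest processes) can also be scripted. The sketch below parses output in the format produced by `ps -eo pid,pcpu,pmem,comm`; the sample data and function name are made up for illustration.

```python
def top_cpu_processes(ps_output: str, limit: int = 3) -> list[tuple[int, float, float, str]]:
    """Parse `ps -eo pid,pcpu,pmem,comm` output and return the heaviest CPU consumers."""
    rows = []
    for line in ps_output.strip().splitlines()[1:]:  # skip the header row
        pid, cpu, mem, command = line.split(None, 3)
        rows.append((int(pid), float(cpu), float(mem), command))
    # Sort by the %CPU column, highest first.
    return sorted(rows, key=lambda row: row[1], reverse=True)[:limit]


# Made-up sample output, for illustration only.
SAMPLE = """\
  PID %CPU %MEM COMMAND
    1  0.0  0.1 systemd
  812 91.5 12.3 java
  933  4.2 40.8 redis-server
 1201  0.3  0.5 sshd
"""

for pid, cpu, mem, command in top_cpu_processes(SAMPLE, limit=2):
    print(f"{pid:>6} cpu={cpu:5.1f}% mem={mem:5.1f}% {command}")
```

On a live instance, the input could come from `subprocess.run(["ps", "-eo", "pid,pcpu,pmem,comm"], capture_output=True, text=True).stdout` instead of the sample string.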

13. Explain how you can use EC2 Systems Manager to manage and automate tasks on your EC2 instances.

AWS Systems Manager (SSM), formerly known as EC2 Systems Manager, allows centralized management of EC2 instances. It automates tasks like patching, software installation, configuration management, and inventory collection. SSM Agent runs on each instance and communicates with the Systems Manager service.

Key capabilities include:

- Patch Manager: Automates OS and application patching.

- Automation: Creates and executes automated workflows (runbooks).

- Run Command: Remotely executes commands on instances.

- State Manager: Enforces consistent configurations.

- Inventory: Collects software and hardware inventory data.

- Session Manager: Enables secure shell access to instances without opening inbound ports.
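To make Run Command more concrete, here is a hedged sketch that builds a request targeting instances by tag. The helper name, tag values, and shell commands are hypothetical; `AWS-RunShellScript` is an AWS-provided document, and the keys follow the SSM `SendCommand` API.

```python
def run_command_request(tag_key: str, tag_value: str, commands: list[str]) -> dict:
    """Build keyword arguments for the SSM SendCommand API, targeting instances by tag."""
    return {
        "Targets": [{"Key": f"tag:{tag_key}", "Values": [tag_value]}],
        "DocumentName": "AWS-RunShellScript",  # AWS-provided document for Linux shell commands
        "Parameters": {"commands": commands},
        "Comment": "Ad hoc maintenance via Run Command",
    }


# Placeholder tag and commands, for illustration only.
request = run_command_request("Environment", "staging", ["uptime", "df -h"])
print(request)

# With boto3 installed and credentials configured, this could be sent as:
#   boto3.client("ssm").send_command(**request)
```

Targeting by tag rather than by instance ID keeps the request valid as instances are replaced by Auto Scaling.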

14. Describe a scenario where you would use EC2 Auto Scaling with instance weighting, and explain how it works.

Consider an e-commerce website experiencing fluctuating traffic. We can use EC2 Auto Scaling with instance weighting to optimize costs while maintaining performance. Let's say the Auto Scaling group is allowed to launch both larger instances (e.g., m5.2xlarge) and smaller ones (e.g., m5.large). Without instance weighting, every instance counts as one unit of capacity regardless of its size, so the group cannot account for an m5.2xlarge doing more work than an m5.large, and mixing sizes leads to over- or under-provisioning.

With instance weighting, we can configure the Auto Scaling group to treat, for example, an m5.2xlarge as having a weight of '2' and an m5.large as having a weight of '1'. (In practice, weights are often set in proportion to vCPUs or memory.) The desired capacity of the group is then defined in 'units' of weight, not instances. So, if the desired capacity is '4', the Auto Scaling group can launch two m5.2xlarge instances (2 * 2 = 4), four m5.large instances (4 * 1 = 4), or a mix such as one m5.2xlarge and two m5.large (2 + 1 + 1 = 4). We would configure the group with a mixed instances policy that lists both instance types and their weights. The allocation strategy then decides which types to launch, for example the lowest-priced ones, while scaling policies adjust the desired capacity so that total weighted capacity tracks demand.
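The capacity arithmetic can be checked with a few lines of code. This is only a sketch of the counting logic, not of how Auto Scaling chooses among the options; the function name is hypothetical and the weights are the ones from the example.

```python
from itertools import product

# Weights from the example: one m5.2xlarge counts as 2 units, one m5.large as 1.
WEIGHTS = {"m5.2xlarge": 2, "m5.large": 1}


def combinations_for_capacity(desired_capacity: int, weights: dict[str, int]) -> list[dict[str, int]]:
    """List every mix of instance counts whose total weight equals the desired capacity."""
    types = list(weights)
    # Each type can appear at most desired_capacity // weight times.
    ranges = [range(desired_capacity // weights[t] + 1) for t in types]
    results = []
    for counts in product(*ranges):
        if sum(c * weights[t] for c, t in zip(counts, types)) == desired_capacity:
            results.append(dict(zip(types, counts)))
    return results


for mix in combinations_for_capacity(4, WEIGHTS):
    print(mix)
```

For a desired capacity of 4 this yields three mixes: four m5.large, one m5.2xlarge plus two m5.large, or two m5.2xlarge.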

15. How would you design a highly available and scalable web application architecture using EC2 instances and load balancing?

To design a highly available and scalable web application architecture using EC2 instances and load balancing, I would use the following approach: Firstly, deploy multiple EC2 instances across multiple Availability Zones (AZs) to ensure redundancy. Place these instances behind an Elastic Load Balancer (ELB), which distributes incoming traffic across healthy instances. The ELB will automatically detect and remove unhealthy instances from the pool, ensuring continuous availability. For scalability, implement auto-scaling groups that dynamically adjust the number of EC2 instances based on traffic demand. Configure scaling policies based on metrics like CPU utilization or network traffic using CloudWatch alarms. The application should be designed to be stateless, storing session data in a separate, shared service like ElastiCache or DynamoDB to allow instances to be easily scaled up or down without impacting user sessions. Finally, ensure regular backups are taken and tested.

I'd also monitor the infrastructure using CloudWatch to proactively identify and address performance issues. Consider using infrastructure as code (IaC) tools like CloudFormation or Terraform to automate the deployment and management of the infrastructure, ensuring consistency and repeatability. In addition, I would configure CI/CD pipelines to automate application deployments, and expose a health check endpoint in the application so the ELB can perform application-aware health checks in addition to standard instance checks. I would also configure proper logging and alerting.
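One way to express the CPU-based scaling mentioned above is a target tracking policy, which creates and manages the underlying CloudWatch alarms itself. The sketch below only builds the request; the helper name, group name, and 50% target are hypothetical, while the keys follow the Auto Scaling `PutScalingPolicy` API.

```python
def cpu_target_tracking_policy(asg_name: str, target_cpu_percent: float) -> dict:
    """Build keyword arguments for PutScalingPolicy using target tracking on average CPU."""
    return {
        "AutoScalingGroupName": asg_name,
        "PolicyName": f"{asg_name}-cpu-target-tracking",
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingConfiguration": {
            "PredefinedMetricSpecification": {
                # Average CPU utilization across the group's instances.
                "PredefinedMetricType": "ASGAverageCPUUtilization"
            },
            "TargetValue": target_cpu_percent,
        },
    }


# Placeholder group name and target, for illustration only.
policy = cpu_target_tracking_policy("web-tier-asg", 50.0)
print(policy)

# With boto3 installed and credentials configured, this could be applied as:
#   boto3.client("autoscaling").put_scaling_policy(**policy)
```

Step scaling driven by explicitly defined alarms is the alternative when finer control over scale-out and scale-in steps is needed.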

16. Explain the different types of EBS volume encryption and how they impact performance.

EBS offers encryption at rest using AWS Key Management Service (KMS). There are essentially two options for the key: an AWS managed key (the default aws/ebs key) or a customer managed key. With the AWS managed key, AWS handles key management for you, which simplifies the process. Customer managed keys give you greater control; you manage the lifecycle, permissions, and auditing of the key. Both options encrypt the data at rest inside the volume, data in transit between the volume and the instance, and all snapshots created from the volume.

Performance impact is generally minimal for modern instance types and SSD-backed EBS volume types (gp2/gp3/io1/io2). Encryption and decryption happen on the servers that host EC2 instances, and on current-generation instances this work is offloaded to dedicated hardware, reducing CPU overhead. AWS states that encrypted volumes deliver the same IOPS as unencrypted volumes, with a minimal effect on latency, which is negligible for most applications. Note that the higher first-access latency seen on volumes restored from snapshots comes from blocks being loaded from S3 and applies to encrypted and unencrypted volumes alike.

17. How can you use AWS CloudTrail to audit and monitor changes made to your EC2 instances?

AWS CloudTrail can be used to audit and monitor changes to EC2 instances by logging API calls made to AWS services, including EC2. By enabling CloudTrail, every action taken on your EC2 instances via the AWS Management Console, AWS CLI, or AWS SDKs is recorded as an event. These events include details such as who made the API call, when it was made, and what resources were affected. These logs can be stored in an S3 bucket.

To audit changes, you can analyze these CloudTrail logs. You can filter logs based on specific EC2 instance IDs, user identities, or API actions (e.g., RunInstances, TerminateInstances, ModifyInstanceAttribute). You can also deliver the trail to CloudWatch Logs and create metric filters and alarms on it, or use EventBridge rules, to get notified of specific changes, like security group modifications or instance state changes, in near real-time, enabling proactive monitoring and security responses.
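Filtering by API action can be sketched as below. The sample log content and ARNs are made up; the field names (`Records`, `eventSource`, `eventName`, `eventTime`, `userIdentity`) follow the CloudTrail log file format.

```python
import json

WATCHED_EVENTS = {"RunInstances", "TerminateInstances", "ModifyInstanceAttribute"}


def ec2_changes(log_file_text: str, watched=WATCHED_EVENTS) -> list[dict]:
    """Return who did what and when for watched EC2 API calls in a CloudTrail log file."""
    records = json.loads(log_file_text).get("Records", [])
    return [
        {
            "time": r.get("eventTime"),
            "event": r.get("eventName"),
            "who": r.get("userIdentity", {}).get("arn"),
        }
        for r in records
        if r.get("eventSource") == "ec2.amazonaws.com" and r.get("eventName") in watched
    ]


# Made-up sample log content, for illustration only.
SAMPLE_LOG = json.dumps({"Records": [
    {"eventTime": "2024-01-01T10:00:00Z", "eventSource": "ec2.amazonaws.com",
     "eventName": "TerminateInstances",
     "userIdentity": {"arn": "arn:aws:iam::111122223333:user/example-admin"}},
    {"eventTime": "2024-01-01T10:05:00Z", "eventSource": "s3.amazonaws.com",
     "eventName": "PutObject",
     "userIdentity": {"arn": "arn:aws:iam::111122223333:user/example-dev"}},
]})

for change in ec2_changes(SAMPLE_LOG):
    print(change)
```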

18. Describe how you would implement a multi-tier application architecture using EC2 instances in different VPC subnets.

I would implement a multi-tier application architecture on EC2 instances across VPC subnets by creating separate subnets for each tier (e.g., web, application, database). The web tier would reside in public subnets with internet access via an Internet Gateway and a load balancer (like ALB) distributing traffic. The application tier would be in private subnets, accessible only from the web tier, handling business logic. The database tier would also reside in private subnets, accessible only from the application tier, and use security groups to restrict access to only the application tier's EC2 instances. Network ACLs would further control traffic at the subnet level.

Communication between tiers would be secured using security groups and potentially VPC peering or a Transit Gateway if the tiers reside in different VPCs. For example, the web tier's security group allows inbound traffic on port 80/443 from the internet and outbound traffic to the application tier's security group on a specific port (e.g., 8080). The application tier's security group allows inbound traffic from the web tier's security group and outbound traffic to the database tier's security group on the database port (e.g., 3306 for MySQL). Instance roles and IAM policies would grant necessary permissions for each tier to access other AWS services.
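The tier-to-tier rules described above can be sketched as security group ingress requests that reference the upstream tier's security group rather than IP ranges. The helper name and group IDs are placeholders; the keys follow the EC2 `AuthorizeSecurityGroupIngress` API.

```python
def allow_from_security_group(target_sg: str, source_sg: str, port: int) -> dict:
    """Build an ingress rule letting members of source_sg reach target_sg on one TCP port."""
    return {
        "GroupId": target_sg,
        "IpPermissions": [
            {
                "IpProtocol": "tcp",
                "FromPort": port,
                "ToPort": port,
                # Reference the upstream tier's security group instead of CIDR ranges.
                "UserIdGroupPairs": [{"GroupId": source_sg}],
            }
        ],
    }


# Placeholder security group IDs, for illustration only.
WEB_SG, APP_SG, DB_SG = "sg-web-example", "sg-app-example", "sg-db-example"

rules = [
    allow_from_security_group(APP_SG, WEB_SG, 8080),  # web tier -> application tier
    allow_from_security_group(DB_SG, APP_SG, 3306),   # application tier -> MySQL
]
for rule in rules:
    print(rule)

# With boto3 installed and credentials configured, each rule could be applied as:
#   boto3.client("ec2").authorize_security_group_ingress(**rule)
```

Referencing security groups means the rules keep working as instances in each tier are added or replaced.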

19. How do you handle license management for software running on EC2 instances?

License management for EC2 instances can be handled in several ways depending on the type of license and the software vendor. Common approaches include using license servers, AWS License Manager, or integrating with third-party license management tools. For floating licenses, a license server is often deployed within the VPC, and EC2 instances are configured to request licenses from it. AWS License Manager simplifies tracking and managing software licenses, controlling usage, and reducing the risk of non-compliance. It integrates with AWS Marketplace and can enforce license limits, preventing instances from launching if licenses aren't available.

For software with node-locked licenses, you might use activation keys or host IDs tied to the EC2 instance. In this case, you would need to automate the activation process as part of the instance launch using tools like AWS CloudFormation or Terraform. AWS Systems Manager can also be used to remotely execute commands on EC2 instances to activate or manage licenses. Regularly auditing your license usage is crucial, which can be achieved using AWS License Manager or custom scripts that query the license servers or instances directly. The chosen approach should align with the software vendor's licensing terms and the organization's compliance requirements.

20. Explain how you can use EC2 Fleet to request a combination of instance types and Availability Zones.

EC2 Fleet simplifies requesting a combination of instance types and Availability Zones through its configuration. You define a target capacity, split between On-Demand and Spot as needed, and reference a launch template with multiple overrides, each detailing an instance type and an Availability Zone or subnet. EC2 Fleet then attempts to fulfill the target capacity using the most cost-effective combination based on your specifications and current availability.

Specifically, you provide a list of LaunchTemplateConfigs, each containing Overrides with InstanceType, AvailabilityZone, and optionally details such as MaxPrice for Spot Instances. (LaunchSpecifications and SpotPrice belong to the older Spot Fleet API.) EC2 Fleet distributes instances across Availability Zones to increase availability and uses diverse instance types to reduce the risk of a single instance type becoming unavailable or experiencing price spikes (for Spot Instances). You can define the allocation strategy, such as lowest price, diversified, or capacity optimized.
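In the `CreateFleet` API, the instance type and Availability Zone choices are expressed as overrides on a launch template. The sketch below builds such a request; the helper name, launch template ID, instance types, zones, and capacities are hypothetical placeholders.

```python
def fleet_request(launch_template_id: str, instance_types: list[str],
                  availability_zones: list[str], total_capacity: int,
                  on_demand_capacity: int) -> dict:
    """Build keyword arguments for the EC2 CreateFleet API."""
    # One override per (instance type, Availability Zone) pair the fleet may use.
    overrides = [
        {"InstanceType": itype, "AvailabilityZone": az}
        for itype in instance_types
        for az in availability_zones
    ]
    return {
        "Type": "request",
        "LaunchTemplateConfigs": [{
            "LaunchTemplateSpecification": {
                "LaunchTemplateId": launch_template_id,
                "Version": "$Latest",
            },
            "Overrides": overrides,
        }],
        "TargetCapacitySpecification": {
            "TotalTargetCapacity": total_capacity,
            "OnDemandTargetCapacity": on_demand_capacity,
            "SpotTargetCapacity": total_capacity - on_demand_capacity,
            "DefaultTargetCapacityType": "spot",
        },
        "SpotOptions": {"AllocationStrategy": "capacity-optimized"},
    }


# Placeholder values, for illustration only.
request = fleet_request("lt-example", ["m5.large", "c5.large"],
                        ["us-east-1a", "us-east-1b"], total_capacity=10, on_demand_capacity=2)
print(len(request["LaunchTemplateConfigs"][0]["Overrides"]), "overrides")

# With boto3 installed and credentials configured, this could be submitted as:
#   boto3.client("ec2").create_fleet(**request)
```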

21. How would you optimize network performance between EC2 instances in the same or different Availability Zones?

To optimize network performance between EC2 instances, several strategies can be employed. Within the same Availability Zone (AZ), ensure Enhanced Networking is enabled using the Elastic Network Adapter (ENA) or, on older instance types, the Intel 82599 Virtual Function (VF) interface, for lower latency and higher throughput. Placement Groups (specifically Cluster Placement Groups) can further reduce latency by placing instances physically close together; however, they are limited to instances within a single AZ.

Across different AZs, instances in the same VPC can communicate directly over private IP addresses; VPC peering or Transit Gateway is only needed when they sit in different VPCs. Evaluate the data transfer costs and latency implications of cross-AZ traffic. Optimize application-level communication by compressing data, reducing the number of requests, and using efficient protocols. Tools like iperf3 can be useful for benchmarking network performance. Consider AWS Global Accelerator for globally distributed applications to optimize routing and minimize latency.

22. Explain how you would implement centralized logging for EC2 instances across multiple AWS accounts.

To implement centralized logging for EC2 instances across multiple AWS accounts, I would use AWS CloudWatch Logs and a central AWS account. First, in each source account containing the EC2 instances, I'd configure the CloudWatch agent on each instance to send logs to CloudWatch Logs within that account. Then, I'd configure a cross-account CloudWatch Logs subscription. This involves creating a CloudWatch Logs subscription filter in each source account that forwards logs to a Kinesis Data Firehose delivery stream in the central logging account.