Testing APIs can be tricky, and identifying candidates who truly grasp API testing principles can be a challenge. As with hiring software testers, it's about finding those who can ensure the reliability and performance of your applications.

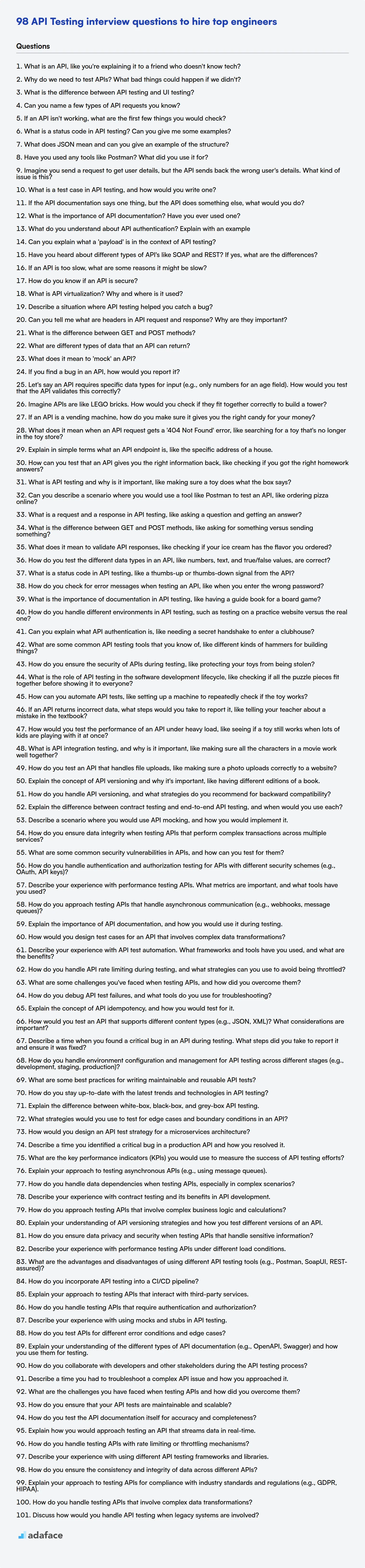

This blog post provides a compilation of API testing interview questions, categorized by experience level, to help you assess candidates effectively. You'll find questions for freshers, juniors, intermediate, and experienced testers, as well as multiple-choice questions (MCQs).

Using these questions, you can better gauge a candidate's suitability for your team and also use our REST API Test to objectively assess skills before the interview.

API Testing interview questions for freshers

1. What is an API, like you're explaining it to a friend who doesn't know tech?

Imagine you're at a restaurant. The menu lists what the kitchen (the computer system) can make, like a burger or a salad, and the waiter carries messages back and forth. Together, the menu and the waiter are like the API. You (an application) tell the waiter what you want by ordering from the menu. The waiter takes your order to the kitchen, and the kitchen prepares your food and sends it back to you through the waiter.

So, an API is basically a way for different computer programs to talk to each other and exchange information. It defines what requests one program can make, what kind of data is needed, and what responses it can expect, allowing them to work together without needing to know all the details of how the other program works.

2. Why do we need to test APIs? What bad things could happen if we didn't?

APIs are the backbone of modern applications, enabling communication and data exchange between different systems. Testing them is crucial to ensure functionality, reliability, security, and performance. Without API testing, several issues can arise, including:

- Functional errors: Incorrect data being returned or processed, leading to application failures.

- Security vulnerabilities: APIs can be exploited to gain unauthorized access to sensitive data.

- Performance bottlenecks: Slow API response times can degrade the user experience.

- Reliability issues: APIs failing under load can cause cascading failures in dependent systems.

- Data integrity problems: APIs corrupting data during transmission or storage. For instance, a buggy POST request might insert incorrect values into a database, which then propagate through the system.

3. What is the difference between API testing and UI testing?

API testing focuses on validating the backend functionality, data contracts, security, and performance of an application's interfaces (APIs) without involving the user interface. It often involves sending requests to API endpoints and verifying the responses. This may include checking status codes, data formats (e.g., JSON, XML), and error handling.

UI testing, on the other hand, concentrates on validating the application's visual elements and user interactions. It verifies that the UI components (e.g., buttons, forms, menus) are displayed correctly, respond as expected to user input, and that the overall user experience is satisfactory. UI tests often involve simulating user actions and verifying the resulting behavior and appearance. Tools like Selenium or Cypress are commonly used for UI testing.

4. Can you name a few types of API requests you know?

Common API request types include:

- GET: Retrieves data from a specified resource.

- POST: Sends data to the server to create or update a resource.

- PUT: Replaces all current representations of the target resource with the request payload.

- PATCH: Applies partial modifications to a resource.

- DELETE: Deletes the specified resource.

- HEAD: Similar to GET, but only retrieves the headers, not the body.

- OPTIONS: Describes the communication options for the target resource.

5. If an API isn't working, what are the first few things you would check?

If an API isn't working, I'd first check the basics:

- Network Connectivity: Can I even reach the server? I'd use ping or traceroute to verify network access. Then I'd use curl, wget, or Postman to send a simple request and check the response. This helps determine if the problem is with my network or the API server itself.

- API Endpoint and Method: Double-check that I'm using the correct URL and HTTP method (GET, POST, PUT, DELETE, etc.). Typos are common! Then I'd make sure that the endpoint exists and is actively maintained.

- Authentication: Am I providing the correct API key, token, or credentials? Has the authentication expired or been revoked?

- Request Parameters: Are the request parameters (query parameters or request body) correctly formatted and valid? I'll make sure the datatypes are correct, and also check if some values are out of bounds.

- API Status Page/Documentation: Check the API provider's status page or documentation for known issues or outages. Often, they will have scheduled maintenance or known errors they are actively addressing.

- Logs: Check the API provider logs if the problem is on their side, and application logs if the problem is on my side. Logs will help to isolate the errors.

6. What is a status code in API testing? Can you give me some examples?

A status code in API testing is a three-digit number returned by a server in response to a client's request. It indicates whether the request was successful, encountered an error, or requires further action. These codes help in verifying the API's behavior and identifying potential issues.

Examples include:

- 200 OK: Indicates that the request was successful.

- 201 Created: Indicates that a new resource was successfully created.

- 400 Bad Request: Indicates that the server could not understand the request due to invalid syntax.

- 401 Unauthorized: Indicates that the request requires authentication.

- 403 Forbidden: Indicates that the server understands the request, but refuses to authorize it.

- 404 Not Found: Indicates that the requested resource could not be found.

- 500 Internal Server Error: Indicates that the server encountered an unexpected condition that prevented it from fulfilling the request.
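In a test script, it can help to turn a raw status code into its reason phrase and broad category. The sketch below uses Python's standard `http.HTTPStatus` enum; the helper name `describe_status` is made up for this illustration.

```python
from http import HTTPStatus


def describe_status(code: int) -> str:
    """Return a label such as '404 Not Found (client error)'."""
    status = HTTPStatus(code)  # raises ValueError for codes Python does not know
    if 200 <= code < 300:
        category = "success"
    elif 300 <= code < 400:
        category = "redirection"
    elif 400 <= code < 500:
        category = "client error"
    elif 500 <= code < 600:
        category = "server error"
    else:
        category = "informational"
    return f"{code} {status.phrase} ({category})"


# Print a label for each code from the list above.
for code in (200, 201, 400, 401, 403, 404, 500):
    print(describe_status(code))
```

Grouping by the first digit is usually enough for a first assertion, for example "any 2xx is acceptable here", before checking the exact code.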

7. What does JSON mean and can you give an example of the structure?

JSON stands for JavaScript Object Notation. It is a lightweight data-interchange format that is easy for humans to read and write and easy for machines to parse and generate. JSON is based on a subset of the JavaScript programming language, Standard ECMA-262 3rd Edition - December 1999. JSON is a text format that is completely language-independent but uses conventions that are familiar to programmers of all languages.

Here's an example of a JSON structure:

    {
      "name": "John Doe",
      "age": 30,
      "isStudent": false,
      "address": {
        "street": "123 Main St",
        "city": "Anytown",
        "zip": "12345"
      },
      "courses": ["Math", "Science", "English"]
    }
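To show how a test script might read such a structure, here is a minimal sketch using Python's standard `json` module. The variable names are illustrative only.

```python
import json

raw = """
{
  "name": "John Doe",
  "age": 30,
  "isStudent": false,
  "address": {"street": "123 Main St", "city": "Anytown", "zip": "12345"},
  "courses": ["Math", "Science", "English"]
}
"""

person = json.loads(raw)             # JSON object becomes a Python dict
city = person["address"]["city"]     # nested object becomes a nested dict
first_course = person["courses"][0]  # JSON array becomes a Python list

print(city, first_course, person["isStudent"])
```

Note that JSON `false` is parsed as Python `False`, and the number `30` as an `int`, which makes type assertions straightforward.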

8. Have you used any tools like Postman? What did you use it for?

Yes, I have used Postman extensively. My primary use for Postman has been to test APIs during development and for integration testing.

Specifically, I've used it to:

- Send various types of HTTP requests: GET, POST, PUT, PATCH, DELETE.

- Inspect API responses: Checking status codes, headers, and response bodies (JSON, XML, etc.).

- Set up environments: Defining variables for different environments (development, staging, production).

- Automate testing: Creating collections and running tests to ensure API endpoints are working as expected. For example, I've written tests in the Postman interface using JavaScript (e.g., pm.test("Status code is 200", () => { pm.response.to.have.status(200); });) to validate response status codes and data.

- Debug API issues: Using Postman's console to examine request and response details to identify problems.

9. Imagine you send a request to get user details, but the API sends back the wrong user's details. What kind of issue is this?

This is a data integrity issue, more precisely a data mapping or retrieval defect rather than corruption of the stored data. The API is returning data that doesn't correspond to the request parameters, indicating a mismatch between the user identifier sent in the request and the user data retrieved from the database or another data source.

This could be due to several factors, including bugs in the API's data retrieval logic, incorrect indexing in the database, or issues with data caching mechanisms. It's a serious problem because it can lead to users accessing sensitive information belonging to other users, violating privacy and potentially causing security breaches. Further investigation is needed to determine the root cause.

10. What is a test case in API testing, and how would you write one?

In API testing, a test case is a specific set of conditions and inputs used to verify that an API endpoint functions correctly and meets its intended specifications. It outlines what needs to be tested, the steps to perform the test, the input data required, and the expected outcome.

To write a test case, you would first define a specific aspect of the API you want to test (e.g., creating a new user). Then, you would specify the input data needed (e.g., request body with user details), the expected HTTP status code (e.g., 201 for successful creation), and the expected response body (e.g., user ID returned). You'd also include any pre-conditions, like authentication requirements. Tools like Postman, Insomnia, or automated testing frameworks (pytest, requests in Python, or supertest in JavaScript) can be used to execute these test cases. Consider different scenarios, including valid and invalid input, edge cases, and error conditions.
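The structure described above can be sketched as data plus a small runner. Everything here is hypothetical: `create_user` is a stand-in for a real "create user" endpoint, and `ApiTestCase` is just one possible way to record name, input, and expected status.

```python
from dataclasses import dataclass


@dataclass
class ApiTestCase:
    name: str
    payload: dict
    expected_status: int


def create_user(payload: dict) -> tuple:
    """Hypothetical stand-in for POST /users; returns (status, body)."""
    if not payload.get("name") or "@" not in payload.get("email", ""):
        return 400, {"error": "invalid user data"}
    return 201, {"id": 1, "name": payload["name"]}


def run_case(case: ApiTestCase, endpoint) -> bool:
    """Send the case's payload and compare the status with the expectation."""
    status, _body = endpoint(case.payload)
    return status == case.expected_status


cases = [
    ApiTestCase("valid user", {"name": "Ana", "email": "ana@example.com"}, 201),
    ApiTestCase("missing email", {"name": "Ana"}, 400),
    ApiTestCase("malformed email", {"name": "Ana", "email": "ana.example.com"}, 400),
]

results = {case.name: run_case(case, create_user) for case in cases}
print(results)
```

Keeping cases as data makes it easy to add edge cases without touching the runner; frameworks such as pytest offer the same idea through parametrized tests.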

11. If the API documentation says one thing, but the API does something else, what would you do?

First, I would meticulously verify my understanding of the API documentation and my code implementation to rule out any potential errors on my end. This includes double-checking request parameters, data types, and the expected response format as described in the documentation. If, after thorough investigation, I'm confident the discrepancy lies with the API, I would document the observed behavior (including request payloads, actual responses, and expected responses according to the docs), then immediately contact the API provider's support team (or the team responsible for the API). I would provide them with the detailed documentation of the issue and ask for clarification or a fix. Depending on the severity and my timeline, I would consider implementing a temporary workaround in my code, such as adding conditional logic to handle the unexpected API response, while waiting for a resolution from the API provider.

12. What is the importance of API documentation? Have you ever used one?

API documentation is crucial because it serves as a comprehensive guide for developers on how to effectively use an API. It outlines the available endpoints, request parameters, response formats, authentication methods, and any other relevant information needed for seamless integration. Without proper documentation, developers would struggle to understand the API's functionality, leading to increased development time, errors, and frustration. Good documentation enables developers to quickly learn and implement the API, fostering wider adoption and reducing support costs for the API provider.

Yes, I have used API documentation extensively. For example, I have used the Stripe API documentation when building payment processing features into applications. The documentation clearly defined the necessary API calls, parameters, and authentication procedures, which allowed me to integrate their payment services efficiently. Similarly, I've referred to the Twilio API documentation for integrating SMS capabilities and used the OpenAI API documentation extensively to learn how to work with the models.

13. What do you understand about API authentication? Explain with an example

API authentication is the process of verifying the identity of a client (user, application, or device) requesting access to an API. It ensures that only authorized clients can access protected resources. It answers the question "Who are you?".

For example, imagine a weather API that provides forecast data. To access this API, a client might need to provide an API key in the request header. The API server then checks the API key against its records. If the key is valid and associated with an authorized client, the server grants access to the requested weather data. Common authentication mechanisms include API keys, OAuth 2.0, and Basic Authentication.
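A minimal sketch of the server-side check in the weather example might look like this. The header name `X-API-Key` and the key store are assumptions for illustration; real services typically keep hashed keys in secure storage.

```python
import hmac

# Hypothetical key store mapping API keys to client names.
VALID_API_KEYS = {"demo-key-123": "weather-dashboard"}


def authenticate(headers: dict) -> int:
    """Return 200 if the X-API-Key header holds a known key, else 401."""
    supplied = headers.get("X-API-Key", "")
    for known_key in VALID_API_KEYS:
        # compare_digest avoids leaking key contents through timing differences
        if hmac.compare_digest(supplied.encode(), known_key.encode()):
            return 200
    return 401


print(authenticate({"X-API-Key": "demo-key-123"}))
print(authenticate({"X-API-Key": "wrong-key"}))
print(authenticate({}))
```

From a tester's point of view, the three calls correspond to three basic cases: a valid key, an invalid key, and no key at all.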

14. Can you explain what a 'payload' is in the context of API testing?

In API testing, the 'payload' refers to the data transmitted within the body of a request message. It's the actual information you're sending to the API, such as data to create a new record, update an existing one, or parameters for a specific request. The format of the payload is usually JSON, XML, or other data serialization formats.

For example, in a POST request to create a new user, the payload would contain the user's details like name, email, and password formatted as a JSON object. Testing the payload involves validating that the API correctly processes and responds to the data it receives, ensuring it adheres to the expected structure and data types.

15. Have you heard about different types of APIs like SOAP and REST? If yes, what are the differences?

Yes, I'm familiar with SOAP and REST APIs. SOAP (Simple Object Access Protocol) is a protocol that relies on XML for message formatting and typically uses protocols like HTTP, SMTP, or TCP for message transmission. It's known for its strict standards, WS-* specifications, and built-in error handling, making it more robust but also more complex and resource-intensive.

REST (Representational State Transfer), on the other hand, is an architectural style that uses standard HTTP methods (GET, POST, PUT, DELETE) to interact with resources. It often uses JSON for message formatting (though XML is also supported), making it lighter and easier to work with. REST is stateless, meaning each request from the client to the server must contain all the information needed to understand and process the request. REST is generally favored for its simplicity, scalability, and performance.

16. If an API is too slow, what are some reasons it might be slow?

An API's slowness can stem from various issues. Server-side bottlenecks are common, including inefficient database queries, slow disk I/O, high CPU load, or insufficient memory. Network latency and bandwidth limitations can also contribute, especially if the API involves transferring large payloads or communicating over long distances. Code inefficiencies, such as poorly optimized algorithms or excessive logging, may also slow down processing.

Furthermore, the problem may originate from external dependencies or services, such as a slow third-party API the service relies on. Insufficient resources allocated to the API server (e.g., too few instances) or improperly configured caching mechanisms can also lead to delays. Finally, consider that the client-side might be to blame, for example a client making too many requests in a short timeframe or not handling the API response correctly.
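Before looking for causes, it helps to measure how slow the API actually is. The following sketch times repeated calls with `time.perf_counter`; `fake_api_call` is a hypothetical stand-in that simply sleeps, and would be replaced by a real request in practice.

```python
import statistics
import time


def measure_latency(call, runs: int = 5) -> dict:
    """Call `call()` several times and summarize elapsed time in milliseconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        samples.append((time.perf_counter() - start) * 1000)
    return {
        "min_ms": min(samples),
        "median_ms": statistics.median(samples),
        "max_ms": max(samples),
    }


def fake_api_call():
    """Hypothetical stand-in for a real request; sleeps for about 10 ms."""
    time.sleep(0.01)


stats = measure_latency(fake_api_call)
print(stats)
```

A large gap between the median and the maximum often points to intermittent causes such as cold caches or a slow dependency, while a uniformly high median suggests a systematic bottleneck.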

17. How do you know if an API is secure?

To determine if an API is secure, consider several factors. Look for HTTPS encryption (SSL/TLS) to protect data in transit. Authentication mechanisms like API keys, OAuth 2.0, or JWT should be implemented to verify the identity of the client making requests. Authorization controls determine what resources authenticated users can access; role-based access control (RBAC) is commonly used.

Furthermore, input validation and output encoding are crucial to prevent injection attacks. Rate limiting and throttling can mitigate denial-of-service (DoS) attacks. Regularly audit the API's code and infrastructure, and perform penetration testing to identify vulnerabilities. Consider using security headers like X-Frame-Options, Content-Security-Policy, and Strict-Transport-Security to enhance security posture.
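One of these checks is easy to automate: confirming that a response carries the security headers named above. This is a small sketch, with the function name `missing_security_headers` chosen for illustration; header names are compared case-insensitively because HTTP header names are not case-sensitive.

```python
REQUIRED_SECURITY_HEADERS = (
    "Strict-Transport-Security",
    "Content-Security-Policy",
    "X-Frame-Options",
)


def missing_security_headers(response_headers: dict) -> list:
    """Return the required headers that are absent from the response."""
    present = {name.lower() for name in response_headers}
    return [h for h in REQUIRED_SECURITY_HEADERS if h.lower() not in present]


sample = {
    "strict-transport-security": "max-age=31536000",
    "X-Frame-Options": "DENY",
    "Content-Type": "application/json",
}
print(missing_security_headers(sample))
```

A check like this covers only the presence of headers; whether their values are strict enough still needs a manual or tool-assisted review.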

18. What is API virtualization? Why and where is it used?

API virtualization simulates the behavior of specific components in a system, like APIs, without actually invoking the real component. It creates a virtual "stand-in" that returns predefined responses. This is particularly helpful when real components are unavailable, under development, expensive to use for testing, or have limited capacity.

API virtualization is used in several scenarios:

- Testing: Simulate API responses for quicker and more reliable testing, especially for edge cases.

- Development: Enable parallel development when real APIs are not yet ready.

- Performance testing: Simulate high API load to evaluate system performance.

- Training: Create safe environments for training without interacting with production systems.

- Integration testing: Validate integration between services by mocking dependencies.

It helps in reducing costs, speeding up development cycles, and ensuring quality.

19. Describe a situation where API testing helped you catch a bug?

During a recent project, I was testing an API endpoint responsible for updating user profiles. I used API testing to send a request with a malformed email address (e.g., missing the '@' symbol). The API should have returned a validation error, but instead, it attempted to save the invalid email to the database, which caused an exception on the backend and a 500 error.

Without API testing, this bug might have slipped through to the UI, where it would be more difficult to isolate and debug. By catching it early in the API layer, we could quickly fix the validation logic and prevent corrupted data in the database.

20. Can you tell me what headers are in an API request and response? Why are they important?

Headers in API requests and responses are key-value pairs that transmit metadata about the request or response. They provide essential information like content type, authorization details, caching instructions, and the type of server being used.

Headers are important because they enable clients and servers to correctly interpret and process the data being exchanged. Without headers, it would be difficult to handle different content types (e.g., JSON, XML), authenticate users, manage caching effectively, or handle errors gracefully. For example, the Content-Type header tells the receiver how to interpret the body, while the Authorization header provides credentials for accessing protected resources. Similarly, Cache-Control helps manage caching behavior.
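To make the role of Content-Type concrete, here is a small sketch of a client deciding how to decode a body based on that header. The function `parse_body` is hypothetical and handles only JSON and text types.

```python
import json


def parse_body(headers: dict, body: bytes):
    """Decode a response body according to its Content-Type header."""
    content_type = ""
    for name, value in headers.items():
        if name.lower() == "content-type":  # header names are case-insensitive
            content_type = value
    # Drop parameters such as "; charset=utf-8" before comparing.
    media_type = content_type.split(";")[0].strip().lower()
    if media_type == "application/json":
        return json.loads(body.decode("utf-8"))
    if media_type.startswith("text/"):
        return body.decode("utf-8")
    return body  # unknown type: leave the raw bytes untouched


print(parse_body({"Content-Type": "application/json; charset=utf-8"}, b'{"ok": true}'))
print(parse_body({"content-type": "text/plain"}, b"hello"))
```

The same bytes are interpreted differently depending on the header, which is why a wrong or missing Content-Type is a common source of API bugs.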

21. What is the difference between GET and POST methods?

GET and POST are HTTP methods used to transfer data between a client and a server, but they differ in how they transmit data and their intended use.

GET requests data from a specified resource. Data is appended to the URL as query parameters, making it visible in the browser's address bar and server logs. GET is typically used for retrieving data and should not modify server-side data. It's considered idempotent, meaning multiple identical requests should have the same effect as a single request.

POST submits data to be processed to a specified resource. Data is sent in the request body, making it less visible. POST is commonly used for creating or updating data on the server. It is not idempotent, as multiple identical requests may have different effects (e.g., creating multiple identical records).
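The idempotency difference can be illustrated with a toy in-memory store. This is only a sketch: `InMemoryUsers` is a hypothetical class whose `get` and `post` methods mimic the semantics of the two HTTP methods, with no real HTTP involved.

```python
class InMemoryUsers:
    """Hypothetical resource store used only to illustrate the semantics."""

    def __init__(self):
        self.records = {}
        self._next_id = 1

    def get(self, user_id):
        """Like GET: reads a record and changes nothing."""
        return self.records.get(user_id)

    def post(self, data):
        """Like POST: every call creates a new record."""
        user_id = self._next_id
        self._next_id += 1
        self.records[user_id] = dict(data)
        return user_id


store = InMemoryUsers()
first_id = store.post({"name": "Ana"})
second_id = store.post({"name": "Ana"})  # identical request, second record

snapshot = dict(store.records)
store.get(first_id)
store.get(first_id)  # repeating the read leaves the store as it was
unchanged_after_gets = store.records == snapshot

print(first_id, second_id, len(store.records), unchanged_after_gets)
```

This is why retrying a timed-out GET is generally safe, while retrying a POST can create duplicates unless the API offers some deduplication mechanism.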

22. What are different types of data that an API can return?

APIs can return various data types, depending on their design and purpose. Common types include:

- JSON (JavaScript Object Notation): A lightweight, human-readable format widely used for data exchange. Data is structured as key-value pairs.

- XML (Extensible Markup Language): A markup language used to encode structured data. It is more verbose than JSON.

- Plain Text: Simple text data, without any formatting or structure.

- HTML (HyperText Markup Language): Used for web content, APIs may return HTML fragments or full pages.

- Images/Videos/Audio: APIs can serve binary data representing media files.

- CSV (Comma-Separated Values): A simple text format for tabular data. Each line represents a row, and values are separated by commas.

- PDF (Portable Document Format): APIs can return PDF documents.

- Other Binary Formats: APIs can serve other binary formats such as Protocol Buffers. They are often used for performance reasons.

23. What does it mean to 'mock' an API?

To 'mock' an API means to create a simulated version of it. This simulated API behaves like the real one but doesn't rely on the actual backend systems. Instead, it provides predefined responses.

This is useful for testing, development, and situations where the real API is unavailable, unreliable, or costly to use. For example, while testing client-side code, a mocked API lets developers verify how the application handles different API responses (success, error, slow responses) without depending on the live API's status. Tools like Mockoon or libraries like Jest can be used to create and manage mock APIs.
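As a minimal sketch of the idea in Python, the standard library's `unittest.mock.Mock` can stand in for an API client. The `get_user` method and the response shape are assumptions made for this example, not a real client library.

```python
from unittest.mock import Mock


def get_display_name(client, user_id: int) -> str:
    """Code under test: formats a name from a (hypothetical) client.get_user call."""
    response = client.get_user(user_id)
    if response["status"] != 200:
        return "Unknown user"
    return response["body"]["name"].title()


# Mocked client that returns a predefined success response.
ok_client = Mock()
ok_client.get_user.return_value = {"status": 200, "body": {"name": "john doe"}}

# Mocked client that returns a predefined error response.
error_client = Mock()
error_client.get_user.return_value = {"status": 404, "body": {}}

ok_name = get_display_name(ok_client, 42)
error_name = get_display_name(error_client, 42)
ok_client.get_user.assert_called_once_with(42)  # verify how the API was called

print(ok_name, "|", error_name)
```

Both the success path and the error path are exercised without any network access, which keeps such tests fast and repeatable.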

24. If you find a bug in an API, how would you report it?

First, I would gather all relevant information. This includes the API endpoint, request parameters, request body (if applicable), the expected response, the actual response, timestamps, and any error messages. I would also try to reproduce the bug to confirm its existence and understand the steps needed to trigger it.

Then, I would create a detailed bug report. This report would include a clear and concise description of the bug, the steps to reproduce it, the expected vs. actual results, the severity and priority of the bug, and any relevant environment information (e.g., operating system, browser version if applicable). If possible, I'd include a minimal, reproducible example (e.g., a curl command or code snippet) to help the developers quickly understand and fix the issue.

25. Let's say an API requires specific data types for input (e.g., only numbers for an age field). How would you test that the API validates this correctly?

To test API input validation for data types, I would use a combination of valid and invalid input data. For example, if an 'age' field is expected to be a number, I'd send requests with:

- Valid input: Integers (e.g., 25, 0, 100) and potentially boundary values (e.g., 1, 120 if there's a reasonable age limit).

- Invalid input: Strings (e.g., "twenty-five", "abc"), special characters, null, empty strings, boolean values, and potentially large numbers if there's an implementation limit.

The tests should verify that the API returns an appropriate error code and error message when invalid data types are provided, and that the API handles the valid inputs correctly.

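The valid and invalid inputs for the age field can be sketched as a small table-driven check. The validator `validate_age` and its 0 to 120 range are assumptions for illustration; a real test would send these values to the API and inspect the returned status code.

```python
def validate_age(value) -> tuple:
    """Return (status_code, message) for a hypothetical 'age' field (0-120)."""
    # bool is a subclass of int in Python, so it must be rejected explicitly
    if isinstance(value, bool) or not isinstance(value, int):
        return 400, "age must be an integer"
    if not 0 <= value <= 120:
        return 400, "age must be between 0 and 120"
    return 200, "ok"


valid_inputs = [0, 1, 25, 100, 120]
invalid_inputs = ["twenty-five", "abc", "", None, True, 25.5, -1, 121, 10**12]

valid_ok = all(validate_age(v)[0] == 200 for v in valid_inputs)
invalid_rejected = all(validate_age(v)[0] == 400 for v in invalid_inputs)
print(valid_ok, invalid_rejected)
```

The boolean case is worth keeping in the list: in several languages `true` can silently pass a numeric check, which is exactly the kind of gap these tests are meant to expose.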
API Testing interview questions for juniors

1. Imagine APIs are like LEGO bricks. How would you check if they fit together correctly to build a tower?

To check if APIs (LEGO bricks) fit together, I'd focus on interface compatibility and expected behavior. It's about verifying that the APIs exchange data in a way that makes sense and achieves the desired outcome, like a stable tower.

Specifically, I would:

- Define Clear Specifications: Understand the input and output formats (data types, structures) of each API brick.

- Contract Testing: Verify each API adheres to a pre-defined contract, ensuring it sends and receives data as expected. This is like checking the dimensions and connecting points of LEGOs are standard.

- Integration Tests: Write tests that simulate the interaction between APIs. These tests confirm that when API A sends data to API B, API B processes it correctly and produces the expected result. Think of this as building a small section of the tower to see if the bricks stay together.

- Error Handling: Check how each API handles unexpected inputs or failures. A good API, like a sturdy LEGO, shouldn't cause the whole system to collapse if something goes wrong.

2. If an API is a vending machine, how do you make sure it gives you the right candy for your money?

To ensure an API (vending machine) delivers the correct "candy" (data) for your "money" (request), you need to focus on request and response validation. First, clearly define the input parameters (the coins you insert and button you press). Then, send well-formed requests, matching the API's expected input format, including data types and required fields. Think of this as inserting the correct denomination and pushing the right button. Next, validate the response. Check the status code. A 200 OK typically indicates success, but others may signal problems. Finally, examine the response body against the API's documented schema to confirm the correct data structure and content are returned. For example, if you expect a JSON object with a candy_name field, verify that field exists and contains the expected value, in code:

    import requests

    # Ask the example candy API for a chocolate item (placeholder URL).
    response = requests.get("https://api.example.com/candy?type=chocolate", timeout=10)

    if response.status_code == 200:
        data = response.json()
        # Check that the expected field exists and holds the expected value.
        if "candy_name" in data and data["candy_name"] == "Chocolate Bar":
            print("Got the correct candy!")
        else:
            print("Incorrect candy received.")
    else:
        print("API request failed.")

3. What does it mean when an API request gets a '404 Not Found' error, like searching for a toy that's no longer in the toy store?

A '404 Not Found' error means the server can't find the resource (like a specific webpage, image, or API endpoint) that the client (your browser or application) requested. It's like searching for a toy that used to be in the store but has since been removed.

In the context of an API, it usually indicates that the requested endpoint or resource doesn't exist at the specified URL. This could be due to a typo in the URL, the resource having been deleted, or the API's structure changing without the client being updated. The server can be reached and is running, unlike errors like DNS resolution failures, but the resource isn't available at the given path.

4. Explain in simple terms what an API endpoint is, like the specific address of a house.

Think of an API endpoint like the specific address of a house. The entire house (the API) offers different services, like a bedroom for sleeping, a kitchen for cooking, and a bathroom. The endpoint is the precise location where you can access one of those services. For example, https://example.com/users might be an endpoint that gives you a list of all users (like getting the names of everyone living in the house).

Specifically it's a URL (Uniform Resource Locator) that a server uses to receive requests from a client. When your application wants to access data or functionality from another application, it sends a request to a specific API endpoint using HTTP methods (like GET, POST, PUT, DELETE). The server at that endpoint then processes the request and sends back a response.
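To see the parts of an endpoint URL, the sketch below splits one with Python's standard `urllib.parse`. The query string `?role=admin&page=2` is added here purely for illustration.

```python
from urllib.parse import parse_qs, urlsplit

# Example endpoint; the query string is an illustrative addition.
endpoint = "https://example.com/users?role=admin&page=2"
parts = urlsplit(endpoint)

scheme = parts.scheme          # protocol used to reach the server
host = parts.netloc            # which server (the "street")
path = parts.path              # which resource (the "house number")
query = parse_qs(parts.query)  # extra instructions for the request

print(scheme, host, path, query)
```

When a request fails with 404, comparing each of these parts against the documentation is a quick way to spot a typo in the path.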

5. How can you test that an API gives you the right information back, like checking if you got the right homework answers?

To test if an API returns the correct information, like checking homework answers, you can use several methods. Primarily, you'll send requests to the API with known inputs and then verify the API's responses against expected outputs. This involves:

- Using API testing tools or libraries: Tools like Postman, curl, or programming language-specific libraries such as requests in Python or supertest in JavaScript are used to send requests and inspect responses.

- Validating the response status code: Check if the API returns the correct status code (e.g., 200 OK, 400 Bad Request).

- Verifying the response body: This is crucial. Ensure the returned data (e.g., homework answers) matches the expected values. This might involve parsing the JSON or XML response and comparing individual fields. For example, you might check if response.answer equals the correct solution.

- Checking data types: Ensure the data types of the returned fields are as expected (e.g., an answer should be a string or number if that's what's expected).

- Edge case testing: Test with different inputs, including invalid or boundary values, to ensure the API handles them correctly and returns appropriate error messages or default values.

6. What is API testing and why is it important, like making sure a toy does what the box says?

API testing is like checking if a toy does what the box says it does. Instead of a toy, we're talking about software interfaces (APIs) that allow different programs to communicate. It's about sending requests to the API, receiving responses, and validating that the responses are correct according to the API's specifications.

API testing is important because it verifies the core logic and functionality of an application. It helps ensure reliability, data integrity, and security. By testing APIs early and often, you can catch bugs before they make their way into the user interface, saving time and resources. It's also crucial for ensuring different systems can communicate seamlessly, which is essential in modern, interconnected applications.

7. Can you describe a scenario where you would use a tool like Postman to test an API, like ordering pizza online?

Imagine you're testing an online pizza ordering API. I would use Postman to simulate different user actions and verify the API's responses. For example, I would send a POST request to the /order endpoint with a JSON payload containing details like pizza type, size, toppings, and delivery address. I would then check the API's response to ensure it returns a 201 Created status code, indicating successful order placement, and a JSON body containing the order ID and estimated delivery time.

Furthermore, I'd use Postman to test edge cases and error handling. I'd send invalid data, like a negative pizza quantity or an unsupported topping, and verify that the API returns appropriate error codes (e.g., 400 Bad Request) and informative error messages. I would also check how the API handles authentication by sending requests without a valid API key or token and verifying the API returns 401 Unauthorized. This comprehensive testing would ensure the pizza ordering API functions correctly and reliably.
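The same three checks (201 on a valid order, 400 on invalid data, 401 without a token) can also be scripted. The sketch below is self-contained: it starts a tiny local server that plays the role of the pizza API, so the `/order` endpoint, the `demo-token` value, and the response fields are all invented for this illustration.

```python
import json
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class OrderHandler(BaseHTTPRequestHandler):
    """Hypothetical /order endpoint used only for this demo."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        order = json.loads(self.rfile.read(length) or b"{}")
        if self.headers.get("Authorization") != "Bearer demo-token":
            self._reply(401, {"error": "missing or invalid token"})
        elif not isinstance(order.get("quantity"), int) or order["quantity"] < 1:
            self._reply(400, {"error": "quantity must be a positive integer"})
        else:
            self._reply(201, {"order_id": 1, "eta_minutes": 30})

    def _reply(self, status, body):
        data = json.dumps(body).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def log_message(self, *args):  # keep the demo output quiet
        pass


def post_order(url, order, token=None):
    """POST an order as JSON and return (status, parsed body)."""
    headers = {"Content-Type": "application/json"}
    if token:
        headers["Authorization"] = f"Bearer {token}"
    request = urllib.request.Request(
        url, data=json.dumps(order).encode(), headers=headers, method="POST"
    )
    # Bypass any configured proxy, since the server is local.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(request, timeout=5) as response:
            return response.status, json.loads(response.read())
    except urllib.error.HTTPError as error:  # urllib raises on 4xx/5xx
        return error.code, json.loads(error.read())


def run_demo():
    """Start the local server, send three orders, return their status codes."""
    server = HTTPServer(("127.0.0.1", 0), OrderHandler)  # port 0 = any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    url = f"http://127.0.0.1:{server.server_port}/order"
    try:
        return [
            post_order(url, {"pizza": "margherita", "quantity": 1}, "demo-token")[0],
            post_order(url, {"pizza": "margherita", "quantity": -2}, "demo-token")[0],
            post_order(url, {"pizza": "margherita", "quantity": 1})[0],
        ]
    finally:
        server.shutdown()
        server.server_close()
```

Calling `run_demo()` returns the three status codes in order. Against a real API, only `post_order` and the assertions would be needed; the local server exists here just to make the example runnable.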

8. What is a request and a response in API testing, like asking a question and getting an answer?

In API testing, a request is akin to asking a question. It's a message sent to an API endpoint, containing specific data or instructions. This data could include parameters, headers, and a body (often in JSON or XML format). The request specifies what action the client wants the API to perform.

A response is like getting an answer to that question. It's the API's reply to the request, containing data, a status code (indicating success or failure), and headers. The response body typically includes the requested information or a confirmation of the action taken, again often in JSON or XML. For example, if a request asks for user data, the response would contain that user's information (or an error message if the user wasn't found).

9. What is the difference between GET and POST methods, like asking for something versus sending something?

GET and POST are HTTP methods used to transfer data between a client and a server. GET is primarily used to retrieve data from a server. Think of it as asking the server for information. The data is appended to the URL in the query string, making it visible and bookmarkable. It's generally used for read-only operations.

POST, on the other hand, is used to send data to the server to create or update a resource. Think of it as sending information to the server. The data is sent in the request body, so it does not appear in the URL. POST is suitable for operations that modify server-side data, such as submitting forms, uploading files, or creating new entries in a database. Because the body is kept out of the URL (and therefore out of browser history and bookmarks) and is not subject to URL length limits, POST is preferred for sensitive or large data. It is not encrypted by itself, though; that protection comes from HTTPS.
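Where the data travels can be seen by building both kinds of request without sending them. This sketch uses Python's standard `urllib`; the `api.example.com` URLs and field names are placeholders.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request

params = {"q": "pizza", "page": 2}

# GET: the data travels in the URL's query string.
get_request = Request(
    "https://api.example.com/search?" + urlencode(params), method="GET"
)

# POST: the data travels in the request body.
post_request = Request(
    "https://api.example.com/orders",
    data=json.dumps({"item": "pizza", "quantity": 1}).encode("utf-8"),
    headers={"Content-Type": "application/json"},
    method="POST",
)

print(get_request.full_url)   # https://api.example.com/search?q=pizza&page=2
print(get_request.data)       # None
print(post_request.full_url)  # https://api.example.com/orders
print(post_request.data)      # b'{"item": "pizza", "quantity": 1}'
```

The GET URL carries everything, which is why it can be bookmarked, while the POST URL reveals nothing about the order itself.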

10. What does it mean to validate API responses, like checking if your ice cream has the flavor you ordered?

Validating API responses is like checking if you received the ice cream flavor you actually ordered. It involves verifying that the data returned by an API meets your expectations and adheres to a predefined contract or schema. This ensures the reliability and correctness of your application by catching unexpected data formats, missing fields, incorrect data types, or error codes.

Specifically, validation may involve checking the following:

- Status code: Verifying if the API returned a successful status code (e.g., 200 OK) or an error code (e.g., 400 Bad Request, 500 Internal Server Error).

- Data type: Ensuring that each field in the response body has the correct data type (e.g., a number is actually a number, a string is a string).

- Required fields: Confirming that all mandatory fields are present in the response.

- Value constraints: Checking if the values of specific fields fall within acceptable ranges or adhere to specific patterns (e.g., a date is in the correct format, a quantity is positive).

For example, a tool like ajv in JavaScript can validate a response against a JSON schema.
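The checks above can be sketched in a few lines of Python. This is only an illustration, not a replacement for a schema validator: the `validate_user_response` helper, the field names, and the sample payloads are all assumptions made up for this example.

```python
def validate_user_response(status_code, body):
    """Return a list of problems found in a (hypothetical) user response."""
    problems = []

    # Status code: this sketch treats only 200 as success.
    if status_code != 200:
        problems.append(f"unexpected status code: {status_code}")

    # Required fields and data types (field names are illustrative).
    expected_types = {"id": int, "name": str, "age": int}
    for field, expected in expected_types.items():
        if field not in body:
            problems.append(f"missing field: {field}")
        elif isinstance(body[field], bool) or not isinstance(body[field], expected):
            # bool is a subclass of int in Python, so reject it explicitly.
            problems.append(f"wrong type for {field}")

    # Value constraint: age must not be negative.
    age = body.get("age")
    if isinstance(age, int) and not isinstance(age, bool) and age < 0:
        problems.append("age must not be negative")

    return problems


print(validate_user_response(200, {"id": 1, "name": "Ada", "age": 36}))
print(validate_user_response(200, {"id": "1", "name": "Ada", "age": -5}))
```

An empty list means every check passed; in a real test you would assert on that list rather than print it.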

11. How do you test that the different data types in an API, like numbers, text, and true/false values, are correct?

To test data types in an API, I'd use a combination of techniques. For numeric values, I'd check boundary conditions (min/max), valid ranges, and handle potential errors like non-numeric input or overflows. For text, I'd test length constraints, character encoding (UTF-8), and handle special characters or invalid formats. For booleans, I'd verify true and false are correctly interpreted and handled.

Specifically, testing involves crafting API requests with various payloads:

- Numbers: Send integers, decimals, very large/small numbers, negative numbers, and non-numeric values like strings. Verify correct parsing and error handling.

- Text: Send strings of varying lengths, special characters (e.g., <>"'&), different character encodings, and potentially SQL injection attempts (if applicable). Test for proper encoding and escaping.

- Booleans: Send true, false, and potentially strings like "true" or numbers like 1 and 0 to see how the API handles non-boolean inputs. Ensure consistent representation and interpretation.
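One way to organize such payloads is a small table of cases run against the rule being tested. The sketch below assumes a hypothetical `parse_quantity` rule (an integer from 1 to 100) standing in for the API's own validation; in a real suite each value would be sent in a request instead.

```python
def parse_quantity(raw):
    """Hypothetical server-side rule: quantity must be an integer from 1 to 100."""
    # bool is a subclass of int in Python, so True/False must be rejected first.
    if isinstance(raw, bool) or not isinstance(raw, int):
        raise ValueError("quantity must be an integer")
    if not 1 <= raw <= 100:
        raise ValueError("quantity out of range")
    return raw


def is_accepted(value):
    try:
        parse_quantity(value)
        return True
    except ValueError:
        return False


# Each case pairs a payload value with whether it should be accepted.
cases = [
    (1, True),      # lower boundary
    (100, True),    # upper boundary
    (0, False),     # just below the range
    (101, False),   # just above the range
    (-3, False),    # negative number
    (2.5, False),   # decimal
    ("5", False),   # numeric string
    (True, False),  # boolean passed where a number is expected
    (None, False),  # missing value
]

for value, should_pass in cases:
    assert is_accepted(value) == should_pass, f"unexpected result for {value!r}"
print("all payload cases behaved as expected")
```

Keeping the cases in one list makes it easy to add a new edge case without writing another test function.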

12. What is a status code in API testing, like a thumbs-up or thumbs-down signal from the API?

An API status code is a three-digit number returned by a server in response to a client's request. It indicates whether the request was successful, encountered an error, or requires further action. Think of it like a 'thumbs up' (success) or 'thumbs down' (failure) signal from the API. Common examples include:

- 200 OK: The request was successful.

- 201 Created: A new resource was successfully created.

- 400 Bad Request: The server could not understand the request due to invalid syntax.

- 404 Not Found: The requested resource was not found on the server.

- 500 Internal Server Error: A generic error occurred on the server.

Understanding these codes is crucial for debugging and validating API behavior in testing.
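The first digit of a status code tells you its broad class, which is often all a test needs to branch on. Here is a small sketch using Python's standard `http.HTTPStatus`; the `classify` helper is a name invented for this example.

```python
from http import HTTPStatus


def classify(status_code):
    """Map a status code to its broad class based on the first digit."""
    classes = {
        1: "informational",
        2: "success",
        3: "redirection",
        4: "client error",
        5: "server error",
    }
    return classes.get(status_code // 100, "unknown")


for code in (200, 201, 400, 404, 500):
    # HTTPStatus(code).phrase gives the standard reason phrase, e.g. "Not Found".
    print(code, HTTPStatus(code).phrase, "->", classify(code))
```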

13. How do you check for error messages when testing an API, like when you enter the wrong password?

When testing an API for error messages, especially in scenarios like incorrect password attempts, I focus on verifying the HTTP status code and the response body. I expect a 4xx status code (e.g., 400 Bad Request, 401 Unauthorized) indicating a client-side error. The response body should contain a structured error message, often in JSON format. I use tools like curl, Postman, or automated test frameworks (e.g., Jest with Supertest for Node.js) to send requests with invalid credentials and assert that the response matches the expected error code and message format. For example, testing a login endpoint I would expect a 401 unauthorized response, and the response body might include { "error": "Invalid credentials" }.

14. What is the importance of documentation in API testing, like having a guide book for a board game?

API documentation is crucial for successful API testing, much like a guidebook is essential for playing a board game effectively. Without clear documentation, testers struggle to understand the API's functionality, input parameters, expected outputs, and potential error codes. This leads to inefficient testing, increased development time, and a higher risk of overlooking critical bugs.

Good API documentation, like a well-written guidebook, provides testers with the necessary information to design comprehensive test cases. It clarifies authentication methods, rate limits, and data formats (e.g., JSON schemas), enabling testers to validate the API's behavior accurately and ensure it meets the defined specifications. It can even include example requests and responses, accelerating the testing process.

15. How do you handle different environments in API testing, such as testing on a practice website versus the real one?

To handle different environments in API testing, I use configuration files or environment variables to store environment-specific settings like base URLs, API keys, and database connection strings. This allows me to switch between environments (e.g., development, staging, production) without modifying the test code itself. I can use a command-line argument or environment variable to specify the target environment when running tests.

For example, in Python with pytest, I might use a pytest.ini file with different sections for each environment, or utilize environment variables accessed using os.environ. In code, I'd use these variables to dynamically construct API requests like: requests.get(f"{base_url}/users") where base_url is derived from the chosen environment's configuration. Also, tools like Postman allow defining environment variables and switching between them easily.
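A minimal sketch of the environment-variable approach might look like this. The `TEST_ENV` variable name, the `ENVIRONMENTS` table, and the hosts in it are assumptions for illustration; real values would come from your own project.

```python
import os

# Hypothetical per-environment settings; real values would come from your project.
ENVIRONMENTS = {
    "dev": {"base_url": "https://dev.api.example.com"},
    "staging": {"base_url": "https://staging.api.example.com"},
    "prod": {"base_url": "https://api.example.com"},
}


def load_config(env=None):
    """Pick settings from TEST_ENV (an assumed variable name), defaulting to dev."""
    name = env or os.environ.get("TEST_ENV", "dev")
    if name not in ENVIRONMENTS:
        raise ValueError(f"unknown environment: {name}")
    return ENVIRONMENTS[name]


def users_url(config):
    # Test code builds URLs from the config instead of hard-coding a host.
    return f"{config['base_url']}/users"


print(users_url(load_config("staging")))
```

With this shape, running the same tests against another environment only requires setting the variable before invoking the test runner.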

16. Can you explain what API authentication is, like needing a secret handshake to enter a clubhouse?

API authentication is like needing a secret handshake to get into a clubhouse. When an application (the 'person') wants to use an API (the 'clubhouse'), it needs to prove it is who it says it is. This is done by providing credentials, like a username/password, an API key, or a token, which acts as the 'secret handshake'.

Without proper authentication, anyone could access the API and potentially steal data or misuse its functions. Think of it like this: the API only lets in those who know the secret handshake, ensuring only authorized applications can use its services.

17. What are some common API testing tools that you know of, like different kinds of hammers for building things?

Some common API testing tools include: Postman, which is widely used for manual testing and exploration. Then there's curl, a command-line tool perfect for simple requests and scripting. For automated testing and CI/CD integration, tools like pytest with the requests library (in Python) or Rest-Assured (for Java) are popular. SoapUI is often used for testing SOAP-based web services.

Choosing the right tool depends on the specific needs of the project. Postman is excellent for initial exploration and manual tests, while tools like pytest and Rest-Assured are better suited for automated testing and integration into a CI/CD pipeline. curl offers flexibility for simple, scriptable tests, and SoapUI addresses the specific requirements of SOAP-based services.

18. How do you ensure the security of APIs during testing, like protecting your toys from being stolen?

API security testing is like safeguarding my toys. I focus on several key areas. Firstly, Authentication and Authorization: ensuring only verified users or services gain access and that their permissions are appropriately restricted. Think of it as checking everyone's ID before letting them play and making sure they only play with the toys they're allowed to. Secondly, Input Validation: preventing malicious data from being injected into the system. This is like making sure no one tries to break my toys by using them improperly or feeding them something dangerous. We can do this with techniques like using a Web Application Firewall (WAF) and implementing strict data type and format checks on all API requests.

Further, rate limiting is employed to prevent abuse (like someone trying to hog all the toys), and regular penetration testing helps identify vulnerabilities before malicious actors can exploit them. I also pay close attention to encryption, ensuring sensitive data is protected both in transit (using HTTPS) and at rest (using encryption algorithms). We can also leverage tools such as OWASP ZAP or Burp Suite to automate security tests during the development lifecycle.

19. What is the role of API testing in the software development lifecycle, like checking if all the puzzle pieces fit together before showing it to everyone?

API testing is crucial in the software development lifecycle because it validates the integration and functionality of different software components. It's like checking if the puzzle pieces fit together correctly before presenting the complete picture to end-users. API testing ensures that the APIs (Application Programming Interfaces) that connect different systems or microservices are working as expected, handling data correctly, and returning appropriate responses.

Specifically, API testing verifies things like:

- Data Integrity: Ensuring data is passed correctly between systems.

- Error Handling: Verifying APIs respond appropriately to invalid inputs or unexpected conditions.

- Security: Validating authentication and authorization mechanisms.

- Performance: Assessing API response times and scalability.

- Functional Correctness: Validating that the API behaves as specified.

By identifying and resolving issues early in the development cycle, API testing reduces the risk of integration problems, improves software quality, and accelerates the delivery of reliable software.

20. How can you automate API tests, like setting up a machine to repeatedly check if the toy works?

Automating API tests involves creating a process to repeatedly send requests to your API and verify the responses. Several tools and techniques can be used. A common approach is to use a test automation framework like pytest or JUnit along with a library specifically designed for making HTTP requests, such as requests in Python or RestAssured in Java.

The process generally involves the following:

- Define Test Cases: Specify the API endpoints to test, the expected inputs, and the desired outputs.

- Write Test Scripts: Use the chosen framework and library to write code that sends requests to the API with different inputs.

- Assert Responses: Verify that the API responses match the expected outputs using assertions provided by the testing framework.

- Schedule Execution: Use a Continuous Integration (CI) tool like Jenkins, GitHub Actions, or GitLab CI to run the test scripts automatically at regular intervals or upon code changes. Without a CI tool, a cron job on a single machine can serve the same purpose.

- Report Results: Configure the CI tool to generate reports indicating which tests passed or failed, allowing for quick identification of issues.

21. If an API returns incorrect data, what steps would you take to report it, like telling your teacher about a mistake in the textbook?

If an API returns incorrect data, I would first try to reproduce the issue. This involves making the same API call with the same parameters to confirm the incorrect data is consistently returned. If the problem persists, I'd document the exact steps to reproduce it, including the API endpoint, request parameters, and the incorrect response received, along with the expected response. I would also document the time the issue was observed and the environment. For example:

API Endpoint: /users/123

Request Parameters: None

Observed Response: {"name": "Jane Doe", "age": 25}

Expected Response: {"name": "John Doe", "age": 30}

Timestamp: 2024-10-27 10:00:00 UTC

Then, I'd report the issue to the appropriate team or individual, which could be the API developers or the team responsible for data integrity. The report would include the steps to reproduce, the expected vs. actual results, and any relevant context. If an internal ticketing system exists, I would file a detailed bug report there to track the progress of the resolution.

22. How would you test the performance of an API under heavy load, like seeing if a toy still works when lots of kids are playing with it at once?

To test an API's performance under heavy load, I'd use load testing tools like Apache JMeter, Gatling, or Locust. I would simulate many concurrent users (kids playing) accessing the API simultaneously. The key is to define realistic scenarios (what actions kids take with the toy). For example, if it's a toy car API (e.g., /accelerate, /brake, /turn), I'd simulate multiple users repeatedly calling these endpoints.

I would then monitor key metrics like:

- Response time: How long does it take for the API to respond?

- Error rate: How many requests fail?

- Throughput: How many requests can the API handle per second?

- Resource utilization: CPU, memory, and network usage on the server.

By observing these metrics, I can identify bottlenecks and determine whether the API can handle the expected load. It's also crucial to ramp up the load gradually to find the breaking point.
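To show how these metrics fall out of raw results, here is a rough sketch that fires concurrent calls from a thread pool and summarizes them. It is not a substitute for JMeter, Gatling, or Locust: `fake_api_call` is a made-up stand-in that sleeps instead of sending a request, and the failure rate is simulated.

```python
import random
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def fake_api_call(_):
    """Stand-in for a real HTTP request; sleeps briefly and sometimes fails."""
    started = time.perf_counter()
    time.sleep(random.uniform(0.001, 0.005))
    ok = random.random() > 0.05  # roughly 5% simulated failures
    return time.perf_counter() - started, ok


def run_load(total_requests=200, concurrency=20):
    began = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(fake_api_call, range(total_requests)))
    elapsed = time.perf_counter() - began

    durations = [d for d, _ in results]
    failures = sum(1 for _, ok in results if not ok)
    return {
        "requests": total_requests,
        "error_rate": failures / total_requests,
        "throughput_rps": total_requests / elapsed,
        # quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile.
        "p95_seconds": statistics.quantiles(durations, n=100)[94],
    }


print(run_load())
```

Raising `concurrency` step by step and watching where the error rate or the 95th percentile starts to climb is a simple way to approximate the breaking point.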

23. What is API integration testing, and why is it important, like making sure all the characters in a movie work well together?

API integration testing is a type of software testing that verifies different APIs work together correctly. Think of it as checking how well different characters in a movie interact. Each character (API) might function fine on its own (unit test), but integration testing makes sure they communicate and exchange data properly when combined. It's important because problems often arise in the connections between different components.

Without it, you might experience unexpected errors, data corruption, or system failures when APIs are combined in a real-world scenario. It ensures that the different API endpoints can handle the data and calls as intended, and validates the overall workflow among different parts of the system. For example, API_A sends data, API_B receives it, and API_C processes and updates data based on the combined output.

24. How do you test an API that handles file uploads, like making sure a photo uploads correctly to a website?

To test an API that handles file uploads, I would focus on several key areas. First, I'd verify successful uploads by checking the API's response code (expecting 200 OK or 201 Created) and confirming the file's presence in the designated storage location with the correct file name, size, and content. Error handling is also critical, so I would test various failure scenarios like:

- Uploading files exceeding the size limit.

- Uploading files with unsupported formats (e.g., a .exe file when only .jpg and .png are allowed).

- Attempting to upload without proper authentication or authorization.

- Simulating network errors during the upload process.

For each of these scenarios, I'd expect the API to return appropriate error codes and informative error messages. I will also test the API using different clients or tools to ensure consistency, for example using curl or Postman. In addition, I'd consider security implications, such as preventing malicious file uploads (e.g., files containing scripts) and ensuring proper access controls.

25. Explain the concept of API versioning and why it's important, like having different editions of a book.

API versioning is like having different editions of a book. When you update an API, you might introduce changes that break existing applications relying on the older version. Versioning allows you to release new features, fix bugs, or change the API's structure without forcing all existing users to immediately update their code. It ensures backward compatibility and provides a smoother transition path.

It's important because it prevents widespread application failures. Imagine if every website broke every time a core library was updated. Versioning offers stability, gives developers time to adapt to changes, and allows for the gradual deprecation of older versions. Examples of versioning strategies include URI versioning (/api/v1/resource), header versioning (Accept: application/vnd.example.v2+json), and query parameter versioning (/api/resource?version=2).
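To make the three strategies concrete, the sketch below pulls the version out of each style of request. The `detect_version` helper and its regular expressions are assumptions written for this example; a real API would define its own exact formats.

```python
import re
from urllib.parse import parse_qs, urlparse


def detect_version(url, headers=None):
    """Return (strategy, version) for the three styles mentioned above."""
    headers = headers or {}
    parsed = urlparse(url)

    # URI versioning: /api/v1/resource
    match = re.search(r"/v(\d+)(/|$)", parsed.path)
    if match:
        return "uri", int(match.group(1))

    # Header versioning: Accept: application/vnd.example.v2+json
    match = re.search(r"\.v(\d+)\+json", headers.get("Accept", ""))
    if match:
        return "header", int(match.group(1))

    # Query parameter versioning: /api/resource?version=2
    version = parse_qs(parsed.query).get("version")
    if version:
        return "query", int(version[0])

    return "none", None


print(detect_version("/api/v1/resource"))
print(detect_version("/api/resource", {"Accept": "application/vnd.example.v2+json"}))
print(detect_version("/api/resource?version=2"))
```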

API Testing intermediate interview questions

1. How do you handle API versioning, and what strategies do you recommend for backward compatibility?

API versioning is crucial for managing changes and ensuring existing applications continue to function. I typically use URI versioning (e.g., /v1/resource) or header-based versioning (Accept header). For backward compatibility, I recommend these strategies:

- Versioning: Introduce new versions without modifying existing ones. Deprecate older versions gracefully, providing ample notice.

- Data Transformation: Adapt data formats between API versions using transformers or adapters.

- Feature Flags: Use feature flags to selectively enable or disable new features, allowing clients to opt-in. This minimizes impact on existing clients and allows testing.

- Tolerant Reader Pattern: Design APIs to be tolerant of unexpected data. Clients should ignore unknown fields in responses.
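The tolerant reader idea is easy to show in code. In this sketch the `User` dataclass and the `nickname` field are hypothetical; the point is only that the client picks out the fields it knows and ignores the rest.

```python
from dataclasses import dataclass, fields


@dataclass
class User:
    id: int
    name: str


def parse_user(payload):
    """Build a User from a response, ignoring fields this client does not know."""
    known = {f.name for f in fields(User)}
    return User(**{k: v for k, v in payload.items() if k in known})


# A newer API version adds "nickname"; the older client keeps working.
v1_response = {"id": 7, "name": "Ada"}
v2_response = {"id": 7, "name": "Ada", "nickname": "ada_l"}

assert parse_user(v1_response) == parse_user(v2_response)
print(parse_user(v2_response))
```

A backward-compatibility test can replay old client parsers like this one against responses from the new version to confirm nothing breaks.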

2. Explain the difference between contract testing and end-to-end API testing, and when would you use each?

Contract testing verifies that two separate services (e.g., a consumer and a provider) agree on the structure and content of their interactions. It ensures the provider fulfills the consumer's specific needs, not necessarily the provider's entire specification. End-to-end (E2E) API testing, on the other hand, tests the entire API workflow, often involving multiple services or layers. It verifies that the API functions correctly from start to finish, mimicking real user scenarios.

Use contract testing when you want to isolate and verify the integration between specific services, especially in microservice architectures. It helps prevent integration issues early in the development cycle. Use E2E API testing to validate the complete API flow and ensure all components work together seamlessly. This is crucial for verifying critical user journeys and identifying system-wide issues.

3. Describe a scenario where you would use API mocking, and how you would implement it.

I would use API mocking when developing a feature that depends on an external API that is not yet available, is unstable, or has rate limits that would hinder development. For example, imagine building a UI component that displays user data fetched from a third-party service. Instead of waiting for the real API to be ready, I would create a mock API endpoint using a library like Mockoon or json-server. This mock endpoint would return predefined JSON responses that mimic the expected data structure from the real API. This allows frontend development to proceed independently and be testable without relying on the external service.

Implementation would involve defining the mock API endpoint (e.g., /users/{id}) and configuring the response data, including status codes and headers. For example, using json-server it would be as simple as writing the required json data (e.g. db.json) and running the command json-server --watch db.json --port 3001. Tests would then be written against the mock API, verifying that the UI component correctly renders the mocked data.
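For unit-level tests, the same idea works without a mock server. The sketch below swaps in Python's `unittest.mock` (a different technique from json-server) and assumes a hypothetical `render_user_card` function that receives its fetcher as an argument.

```python
from unittest.mock import Mock


def render_user_card(fetch_user, user_id):
    """Format a user for display; fetch_user is any callable returning a dict."""
    user = fetch_user(user_id)
    return f"{user['name']} <{user['email']}>"


# The mock stands in for the third-party API and returns a canned response.
fake_fetch = Mock(return_value={"id": 1, "name": "Ada", "email": "ada@example.com"})

card = render_user_card(fake_fetch, 1)
print(card)

# Verify the component asked for the right user exactly once.
fake_fetch.assert_called_once_with(1)
```

Passing the fetcher in as a parameter is a design choice that keeps the component testable without patching module internals.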

4. How do you ensure data integrity when testing APIs that perform complex transactions across multiple services?

Ensuring data integrity when testing APIs performing complex transactions across multiple services involves several key strategies. Firstly, implement end-to-end tests that simulate real-world scenarios, verifying that transactions are completed accurately and consistently across all services. Employ data validation techniques at each stage of the transaction, including checks for data type, format, and range.

Secondly, use techniques like idempotency testing (ensuring an operation performed multiple times has the same result as a single operation) and compensating transactions to handle failures gracefully. Implement robust monitoring and logging to track transactions and quickly identify any discrepancies. This can include detailed audit trails and the use of unique transaction IDs. Finally, introduce fault injection to test the system's resilience in the face of errors and network issues, ensuring that data is not corrupted or lost during unexpected events. For example, simulate a network timeout during a critical update and verify that the system correctly rolls back or retries the transaction. This might involve using tools like iptables or tc to drop or delay traffic in a controlled environment, with tcpdump to confirm what was actually sent. Also, use data comparison tools to compare the state of the system before and after the transaction.

5. What are some common security vulnerabilities in APIs, and how can you test for them?

Common API security vulnerabilities include:

- Injection flaws: SQL injection, command injection. Test with tools like OWASP ZAP or by manually crafting malicious inputs.

- Broken Authentication: Weak passwords, lack of multi-factor authentication. Test by trying default credentials, brute-force attacks, and bypassing authentication mechanisms.

- Excessive Data Exposure: APIs returning too much sensitive data. Test by analyzing API responses for unnecessary information.

- Broken Access Control: Unauthorized access to resources. Test by attempting to access resources with different user roles or without authentication.

- Security Misconfiguration: Improperly configured servers or APIs. Review configuration files and security settings.

- Cross-Site Scripting (XSS): Injecting malicious scripts into API responses. Test by injecting XSS payloads into API inputs and observing the output.

- Denial of Service (DoS): Overwhelming the API with requests. Test using load testing tools to simulate high traffic.

- Lack of rate limiting: Without limits, clients can flood or scrape the API. Verify the rate limiting implementation using automated tools.

6. How do you handle authentication and authorization testing for APIs with different security schemes (e.g., OAuth, API keys)?

To handle authentication and authorization testing for APIs with different security schemes, I first identify the specific security scheme being used (OAuth, API keys, JWT, etc.). For each scheme, I'll use different approaches and tools. For example:

For OAuth, I'd use tools like Postman or Insomnia to obtain access tokens through the appropriate grant flow. I'd then include these tokens in the Authorization header when making API requests. I'll validate both the token's validity and the scopes/permissions associated with it. For API keys, I ensure the key is included correctly (e.g., in the header X-API-Key), validate correct key placement, and test with both valid and invalid keys. When using JWTs, I might use tools like jwt.io to decode the token and inspect its claims, then assert if it matches what is expected for access to different resources. Also, testing with expired tokens is a must. For all schemes, I test positive (valid credentials) and negative (invalid credentials, expired tokens, wrong scopes) scenarios and confirm appropriate HTTP status codes (e.g., 401 Unauthorized, 403 Forbidden).
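Inspecting JWT claims, as jwt.io does, can also be done inside a test with the standard library. The sketch below decodes the payload without verifying the signature, so it is for inspection only; the token is built by hand and its claims (`sub`, `scope`, `exp`) are illustrative values.

```python
import base64
import json
import time


def b64url_encode(data):
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode()


def decode_claims(token):
    """Read the payload of a JWT WITHOUT verifying its signature (inspection only)."""
    payload_part = token.split(".")[1]
    padded = payload_part + "=" * (-len(payload_part) % 4)
    return json.loads(base64.urlsafe_b64decode(padded))


def is_expired(claims, now=None):
    # A token with no "exp" claim is treated as expired in this sketch.
    now = time.time() if now is None else now
    return claims.get("exp", 0) <= now


# Build an illustrative token by hand; the signature part is a dummy value.
header = b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
payload = b64url_encode(
    json.dumps({"sub": "user-1", "scope": "orders:read", "exp": 1000}).encode()
)
token = f"{header}.{payload}.dummy-signature"

claims = decode_claims(token)
print(claims["scope"], is_expired(claims, now=2000))
```

In a negative test you would send a token like this expired one to the API and assert that it answers 401 Unauthorized.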

7. Describe your experience with performance testing APIs. What metrics are important, and what tools have you used?

My experience with API performance testing includes using tools like JMeter and Postman (with Newman) to simulate various user loads and analyze API response times, throughput, and error rates. I've designed tests to assess performance under normal and peak conditions, identifying bottlenecks and areas for optimization. I've also used cloud-based load testing platforms like LoadView for larger scale simulations.

Key metrics I focus on are response time (average, minimum, maximum, and percentiles), error rate (number of failed requests), throughput (requests per second), CPU utilization, and memory consumption of the API server. Specifically, monitoring percentiles like the 95th or 99th helps understand the experience of the slowest requests. When dealing with database-backed APIs, I also monitor database query performance and connection pool utilization. When I suspect network-level problems, I capture traffic with tcpdump and inspect the packets in Wireshark.

8. How do you approach testing APIs that handle asynchronous communication (e.g., webhooks, message queues)?

Testing APIs with asynchronous communication requires a different approach than synchronous APIs. Since responses aren't immediate, you need to verify that the API publishes messages or triggers webhooks correctly, and that downstream systems process those messages as expected. Here's a general strategy:

- Mock downstream systems: Instead of relying on the actual systems that consume the asynchronous events, mock them to control the input and verify the output. This allows you to isolate the API you're testing. Tools like MockServer or WireMock, or libraries like unittest.mock in Python, can be useful.

- Verify message/event creation: Ensure that the API correctly creates and publishes the expected messages or triggers the correct webhooks. Check the message content (payload, headers) for accuracy. For message queues, you can often consume messages directly from the queue during testing.

- Test different scenarios: Test various scenarios, including success, failure, and edge cases. For example, what happens if the downstream system is temporarily unavailable? Does the API handle retries or dead-letter queues correctly? What if the message payload is invalid?

- Utilize correlation IDs: If applicable, use correlation IDs to track asynchronous messages across different systems. This makes it easier to trace and debug issues.

- Consider end-to-end tests: While mocking is useful, end-to-end tests that involve the actual downstream systems can provide more confidence in the overall system's behavior. However, these tests can be more complex and time-consuming.
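Because the result arrives later, asynchronous tests usually poll with a deadline instead of asserting immediately. The following sketch uses an in-process `queue.Queue` as a stand-in for a real message broker; `wait_for_message`, the event shape, and the correlation ID are all assumptions for illustration.

```python
import queue
import threading
import time


def wait_for_message(q, predicate, timeout=2.0):
    """Poll a queue until a message satisfies predicate or the timeout elapses."""
    deadline = time.monotonic() + timeout
    while True:
        remaining = deadline - time.monotonic()
        if remaining <= 0:
            raise TimeoutError("expected message did not arrive in time")
        try:
            message = q.get(timeout=remaining)
        except queue.Empty:
            continue
        if predicate(message):
            return message


# Stand-in for the system under test: it publishes an event a little later.
events = queue.Queue()


def publish_later():
    time.sleep(0.05)
    events.put({"type": "order.created", "correlation_id": "abc-123"})


threading.Thread(target=publish_later, daemon=True).start()

received = wait_for_message(events, lambda m: m.get("correlation_id") == "abc-123")
print(received["type"])
```

Matching on the correlation ID lets the test ignore unrelated messages that may share the same queue.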

9. Explain the importance of API documentation, and how you would use it during testing.

API documentation is crucial because it serves as the single source of truth for understanding how an API functions. It outlines endpoints, request/response formats, authentication methods, and potential error codes. Without proper documentation, developers and testers waste significant time reverse-engineering the API or relying on potentially inaccurate information. This leads to slower development cycles, increased bug counts, and integration challenges.

During testing, I would heavily rely on API documentation to:

- Understand expected behavior: Verify the intended functionality of each endpoint.

- Create test cases: Design tests covering various scenarios, including positive and negative cases, boundary conditions, and error handling. For example, understanding the expected data type of a parameter would allow me to create test cases with invalid data types, and confirm the correct error codes are returned.

- Validate responses: Ensure that the API returns the correct data structure and values as defined in the documentation. For example, if the API is supposed to return a JSON array of user objects with id, name, and email properties, the test can validate the presence and type of those properties for each user.

- Troubleshoot issues: Identify discrepancies between the actual API behavior and the documented behavior, aiding in debugging and bug fixing. If the response code differs from the documented one, that may indicate a bug.

10. How would you design test cases for an API that involves complex data transformations?

When designing test cases for an API involving complex data transformations, focus on validating each transformation step and the overall outcome. Start by identifying key input data scenarios, including valid, invalid, and boundary cases. Then, define the expected output for each scenario based on the documented transformations. Consider using data mocking or stubbing to isolate the API and control input data.

Specific test cases should cover: Input validation (data types, ranges), transformation logic (individual steps and combinations), error handling (invalid data, unexpected conditions), and output validation (format, accuracy, completeness). You can also use property-based testing to generate a wide range of test cases automatically. Finally, performance test the API to verify transformations don't result in slow response times. Be certain to use appropriate assertion statements to validate outputs, and use a test framework such as pytest for test execution.

11. Describe your experience with API test automation. What frameworks and tools have you used, and what are the benefits?

I have experience in API test automation using various frameworks and tools. I've primarily worked with REST Assured in Java and pytest with the requests library in Python for creating and executing API tests. I also have some experience with Postman and Newman for API testing and collection management.

Some of the benefits I've found using these tools include improved test coverage, faster test execution compared to manual testing, and early detection of defects in the API layer. For example, using REST Assured, I can easily validate response status codes, headers, and JSON schema. I've also used data-driven testing, parameterization, and assertion libraries to streamline the process. Using tools like Postman and Newman, I can easily integrate the tests into a CI/CD pipeline. Here's a small code example using requests and pytest:

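    import requests

    # Run this file with pytest; it discovers functions named test_*.
    BASE_URL = "https://api.example.com"


    def test_get_resource():
        # Fetch a single resource, then check the status code and the payload.
        response = requests.get(f"{BASE_URL}/resource/1", timeout=10)
        assert response.status_code == 200
        data = response.json()
        assert data["id"] == 1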

12. How do you handle API rate limiting during testing, and what strategies can you use to avoid being throttled?

To handle API rate limiting during testing, I employ several strategies. First, I use mock APIs or stubs to simulate the real API and avoid hitting actual rate limits. These mocks can be configured to mimic rate limiting behavior, allowing me to test my application's resilience. Second, when testing against the real API, I use a tiered approach. Start with a small number of requests and gradually increase the load to identify the threshold. If the API supports it, I use techniques like exponential backoff with jitter to retry failed requests after a delay, which increases with each attempt. This helps to avoid overwhelming the API and stay within the allowed rate limits.

To avoid throttling, I implement caching mechanisms to reduce the number of API calls. Also, I optimize the frequency and timing of API requests, perhaps using asynchronous processing or request batching where applicable to minimize the overall load. Monitoring the API response headers for rate limit information (e.g., X-RateLimit-Remaining, X-RateLimit-Limit, X-RateLimit-Reset) is also crucial. This allows me to proactively adjust the request rate and prevent throttling.
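Exponential backoff with jitter is short enough to sketch directly. In this example `RateLimited` and `flaky_call` are hypothetical stand-ins for a client that raises when the API answers 429 Too Many Requests, and the `sleep` parameter is injectable so the demo does not actually wait.

```python
import random
import time


class RateLimited(Exception):
    """Raised by the (hypothetical) client when the API answers 429."""


def call_with_backoff(func, max_attempts=5, base_delay=0.5, sleep=time.sleep):
    """Retry func on RateLimited, doubling the delay cap and adding full jitter."""
    for attempt in range(max_attempts):
        try:
            return func()
        except RateLimited:
            if attempt == max_attempts - 1:
                raise
            # Full jitter: wait a random time between 0 and base_delay * 2**attempt.
            sleep(random.uniform(0, base_delay * 2 ** attempt))


# Simulated API that is throttled twice before succeeding.
attempts = {"count": 0}


def flaky_call():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise RateLimited()
    return "ok"


delays = []
# Record the delays instead of sleeping so the example runs instantly.
result = call_with_backoff(flaky_call, sleep=delays.append)
print(result, attempts["count"], len(delays))
```

The random component spreads retries from parallel test workers over time, so they do not all hit the API again at the same moment.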

13. What are some challenges you've faced when testing APIs, and how did you overcome them?

Some challenges I've faced when testing APIs include dealing with inconsistent data formats, authentication complexities, and rate limiting. Inconsistent data formats require robust validation and transformation logic in my tests, often using tools like JSON schema validators or custom parsing functions to handle variations. Authentication challenges, such as OAuth 2.0, were addressed by implementing proper token management and refresh mechanisms, which sometimes involved scripting the authentication flow using tools like curl or specific API client libraries.

Rate limiting presented another hurdle. To overcome this, I implemented strategies like queuing requests and introducing delays between calls, effectively throttling the test execution to avoid exceeding the API's limits. I also used mocking and stubbing techniques when possible, to isolate specific parts of the API and reduce dependence on live endpoints during testing.
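One way to introduce delays between calls is a small client-side throttle. The sketch below is an assumption about how this could look, not a specific library's API; the `Throttle` class name and the injectable `clock` and `sleep` parameters are hypothetical and exist mainly to keep the example testable.

```python
import time


class Throttle:
    """Spaces calls at least min_interval seconds apart."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last_call = None  # time of the previous call, if any

    def wait(self):
        """Block until enough time has passed since the previous call."""
        now = self._clock()
        if self._last_call is not None:
            remaining = self.min_interval - (now - self._last_call)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last_call = now
```

A test helper would call `throttle.wait()` immediately before each request, so the whole suite stays under the API's limit without scattering sleep calls through the tests.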

14. How do you debug API test failures, and what tools do you use for troubleshooting?

When debugging API test failures, I typically start by examining the test logs and error messages to identify the specific endpoint, request parameters, and expected vs. actual results. I use tools like Postman or Insomnia to manually recreate the failing API calls with the same parameters to isolate the issue. I also inspect the API server logs to check for errors or unexpected behavior on the server-side.

For more in-depth troubleshooting, I use tools like Charles Proxy or Wireshark to capture and analyze the network traffic between the test client and the API server. This helps me identify issues such as incorrect request headers, invalid request bodies, or unexpected response codes. Additionally, I'll often use the browser's developer tools, specifically the 'Network' tab, to examine API calls made by a web application interacting with the API. Logging statements such as console.log() are also valuable for debugging test scripts.

15. Explain the concept of API idempotency, and how you would test for it.

API idempotency means that making the same API request multiple times has the same effect as making it only once. It ensures that even if a request is accidentally or intentionally repeated (due to network issues or client retries), the system's state remains consistent. For example, a PUT request to set a resource's value is naturally idempotent.

To test for idempotency, send the same API request multiple times (e.g., three times) and verify that the resource's state or the system's state is the same after each request. Specifically, if you're testing a payment endpoint, ensure that a user is only charged once, even if the request is sent multiple times. For example with curl: curl -X POST -d '{"amount": 100}' https://example.com/charge -H 'Content-Type: application/json' -H 'Idempotency-Key: unique_key'. You can send this multiple times with the same Idempotency-Key. The HTTP response should also reflect idempotency: for example, the first request returns 200 OK and each repeat also returns 200 OK without changing the state, or a repeat returns 204 No Content when it is processed successfully with no further change to the system state.
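The check can be sketched without a live endpoint. In the example below, `FakePaymentService` is a hypothetical in-memory stand-in for a charge endpoint that honors idempotency keys, and `check_idempotent` is an illustrative helper; against a real API the same assertions would be made on HTTP responses and on the resulting account state.

```python
class FakePaymentService:
    """In-memory stand-in for a charge endpoint that honors idempotency keys."""

    def __init__(self):
        self.charges = []   # every charge that was actually applied
        self._seen = {}     # idempotency key -> stored response

    def charge(self, idempotency_key, amount):
        if idempotency_key in self._seen:
            # Replay: return the stored response without charging again.
            return self._seen[idempotency_key]
        self.charges.append(amount)
        response = {"status": 200, "charge_id": len(self.charges), "amount": amount}
        self._seen[idempotency_key] = response
        return response


def check_idempotent(service, key, amount, repeats=3):
    """Send the same request several times and verify it only took effect once."""
    before = len(service.charges)
    responses = [service.charge(key, amount) for _ in range(repeats)]
    one_charge = len(service.charges) - before == 1
    same_response = all(r == responses[0] for r in responses)
    return one_charge and same_response
```

A new idempotency key should produce a new charge, so a complete test covers both cases: repeats with the same key, and a second request with a different key.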

16. How would you test an API that supports different content types (e.g., JSON, XML)? What considerations are important?

To test an API supporting different content types (JSON, XML), I'd focus on verifying that the API correctly handles requests and responses for each supported type. This involves sending requests with the appropriate Content-Type header and validating that the response is returned in the expected format with the correct Content-Type header. I'd also verify error handling, ensuring the API gracefully handles requests with unsupported or malformed content types, returning appropriate error codes (such as 415 Unsupported Media Type or 400 Bad Request) and messages.

Important considerations include:

- Content Negotiation: Ensuring the API correctly negotiates content based on the Accept header in the request.

- Schema Validation: Validating JSON and XML responses against predefined schemas (e.g., JSON Schema, XSD) to ensure data consistency.

- Data Serialization/Deserialization: Testing how the API serializes and deserializes data for each content type, checking for data loss or corruption.

- Error Handling: Verifying that the API returns informative error messages when it encounters invalid content types or malformed data.

- Performance: Measuring the API's performance for each content type, as serialization and deserialization can impact response times.
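To illustrate the serialization check from the list above, here is a minimal sketch that parses a body according to its Content-Type and confirms that JSON and XML representations carry the same data. The `parse_body` helper, the sample payloads, and the flat `<user>` XML layout are assumptions made for the example.

```python
import json
import xml.etree.ElementTree as ET


def parse_body(content_type, body):
    """Parse a response body according to its Content-Type header."""
    # Drop parameters such as "; charset=utf-8" before comparing.
    media_type = content_type.split(";")[0].strip().lower()
    if media_type == "application/json":
        return json.loads(body)
    if media_type in ("application/xml", "text/xml"):
        root = ET.fromstring(body)
        # Flat documents only: each child element becomes one field.
        return {child.tag: child.text for child in root}
    raise ValueError(f"unsupported content type: {media_type}")


json_user = parse_body("application/json; charset=utf-8", '{"id": "1", "name": "Ada"}')
xml_user = parse_body("application/xml", "<user><id>1</id><name>Ada</name></user>")
```

Comparing `json_user` with `xml_user` in a test catches fields that are dropped or altered in one representation but not the other.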

17. Describe a time when you found a critical bug in an API during testing. What steps did you take to report it and ensure it was fixed?

During API testing for a new user authentication service, I discovered a critical bug where the resetPassword endpoint was not properly validating the password reset token. Specifically, it allowed any arbitrary token to be used, potentially granting unauthorized access to user accounts. To report this, I immediately documented the steps to reproduce the vulnerability, including the API endpoint, request parameters, and expected vs. actual results. I then created a detailed bug report in our project management system (Jira), assigning it a high priority and severity. The report included a clear description of the issue, the impact on user security, and the reproduction steps. I also attached the relevant API request/response logs.

To ensure it was fixed, I actively tracked the bug's status and collaborated with the development team. I offered to help with debugging by providing additional test data and insights. After the developers implemented a fix (adding proper token validation), I retested the endpoint with various invalid tokens and confirmed that the vulnerability was resolved. Finally, I updated the bug report with the verification results and closed the issue, making sure to communicate the resolution to the relevant stakeholders.

18. How do you handle environment configuration and management for API testing across different stages (e.g., development, staging, production)?

I handle environment configuration and management for API testing by using environment variables and configuration files. These files (e.g., .env, config.json) store environment-specific settings like API endpoints, database connection strings, and API keys. I utilize tools like dotenv in Python or similar mechanisms in other languages to load these variables. This allows me to switch environments easily by changing the active configuration file or setting the relevant environment variables before running tests.

For different stages, I maintain separate configuration files or sets of environment variables (e.g., dev.env, staging.env, prod.env). My test scripts read these configurations to determine the appropriate environment to target. I integrate this setup into my CI/CD pipeline, so the correct configuration is automatically loaded based on the branch or environment being deployed to. This ensures that tests always run against the intended environment.
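A minimal sketch of this setup using only the standard library is shown below. The variable names (`TEST_ENV`, `API_BASE_URL`, `API_KEY`, `API_TIMEOUT`) and the example URLs are hypothetical; in practice the values would be loaded from files such as dev.env or staging.env before the tests start.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class ApiConfig:
    base_url: str
    api_key: str
    timeout_seconds: float


# Hypothetical per-stage defaults, used when API_BASE_URL is not set.
DEFAULT_BASE_URLS = {
    "dev": "https://dev.api.example.com",
    "staging": "https://staging.api.example.com",
    "prod": "https://api.example.com",
}


def load_config(environ=None):
    """Build the test configuration from environment variables."""
    environ = os.environ if environ is None else environ
    stage = environ.get("TEST_ENV", "dev")
    if stage not in DEFAULT_BASE_URLS:
        raise ValueError(f"unknown TEST_ENV: {stage!r}")
    return ApiConfig(
        base_url=environ.get("API_BASE_URL", DEFAULT_BASE_URLS[stage]),
        api_key=environ.get("API_KEY", ""),
        timeout_seconds=float(environ.get("API_TIMEOUT", "10")),
    )
```

Failing fast on an unknown stage name is a deliberate choice here: it is safer for a test run to stop than to fall back silently to the wrong environment.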

19. What are some best practices for writing maintainable and reusable API tests?

To write maintainable and reusable API tests, focus on abstraction and modularity. Use a layered approach, separating test logic from setup and assertions. This allows for easy modification and reuse of components. Avoid hardcoding values, instead use configuration files or environment variables. Employ data-driven testing to run the same test with different datasets.

Follow the DRY (Don't Repeat Yourself) principle by creating reusable functions or classes for common tasks like authentication, request construction, and response validation. Utilize a well-defined test framework and naming conventions for better organization and readability. Keep tests focused and avoid testing multiple aspects in a single test case. Use tools that support API testing, such as pytest, requests, Postman, or REST Assured.

20. How do you stay up-to-date with the latest trends and technologies in API testing?

I stay up-to-date with API testing trends and technologies through a combination of online resources and professional development. I regularly read industry blogs and articles from reputable sources, such as those published by API testing tool vendors like Postman and ReadyAPI. I also participate in online communities and forums, such as Stack Overflow and dedicated API testing groups on LinkedIn, to learn from other professionals and discuss emerging trends.

Furthermore, I follow key influencers and thought leaders in the API space on social media and subscribe to relevant newsletters to get the latest updates delivered directly. I also explore new tools and frameworks by experimenting with open-source options and utilizing free trials of commercial products. When possible, I attend webinars, workshops, and conferences focused on API testing to network with peers and learn from experts. Finally, I try to apply what I learn at work to improve our API testing suite.

21. Explain the difference between white-box, black-box, and grey-box API testing.

White-box API testing involves testing the internal structure and code of the API. Testers need knowledge of the API's implementation, data structures, and algorithms. It allows for testing of specific code paths, branches, and internal logic. Black-box API testing, on the other hand, focuses solely on the functionality of the API without any knowledge of its internal workings. Testers interact with the API through its public interfaces and validate the inputs and outputs based on the API's documentation and specifications.

Grey-box API testing is a combination of both white-box and black-box approaches. Testers have partial knowledge of the API's internal structure. This could involve knowing some data structures, algorithms or having access to design documents. This allows for designing tests that target specific areas of the API while still focusing on functional validation through public interfaces. It helps in identifying issues that might be missed by pure black-box or white-box approaches.

22. What strategies would you use to test for edge cases and boundary conditions in an API?

To test for edge cases and boundary conditions in an API, I would employ several strategies. Firstly, I'd identify potential boundary values for input parameters (e.g., minimum, maximum, zero, null, empty strings, very large strings). Then, I'd design test cases that specifically use these boundary values, as well as values slightly outside these boundaries (both above and below). For instance, if an API expects an age parameter between 18 and 65, I'd test with 17, 18, 65, and 66.

Secondly, I'd consider error handling. The API should gracefully handle invalid inputs and return informative error messages. Test cases should verify that appropriate errors are returned when boundary conditions are violated. I would use tools like Postman or automated testing frameworks built on Python and the requests library to craft and execute these tests systematically, analyzing response codes and error messages to ensure correctness. For example, I'd call requests.post(url, data={'age': 17}) and then assert that the status code is 400 or similar, and that the response body contains a helpful error message.
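The boundary cases for the age example can be generated rather than typed by hand. In this sketch, `validate_age` is a hypothetical stand-in for the API's server-side rule (18 to 65 inclusive) that returns the status code the endpoint would be expected to send; against a real API each value would be posted to the endpoint instead.

```python
def boundary_values(minimum, maximum):
    """Values just outside, on, and just inside an inclusive integer range."""
    return [minimum - 1, minimum, minimum + 1, maximum - 1, maximum, maximum + 1]


def validate_age(age):
    """Hypothetical rule: age must be an integer from 18 to 65 inclusive."""
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(age, bool) or not isinstance(age, int):
        return 400
    return 200 if 18 <= age <= 65 else 400


# Expected status code for each boundary value.
results = {age: validate_age(age) for age in boundary_values(18, 65)}
```

The same table-driven shape extends to the other edge cases mentioned above, such as null, empty strings, and very large values.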

API Testing interview questions for experienced

1. How would you design an API test strategy for a microservices architecture?

An API test strategy for a microservices architecture should focus on verifying contracts, data integrity, performance, and security across services. It involves several testing layers: first, unit tests for individual microservice components; second, integration tests to validate interactions between services, employing techniques like contract testing (e.g., using Pact) to ensure compatibility; and third, end-to-end tests (or system tests) to verify critical user flows across multiple services. API testing tools such as Postman or REST Assured can be used to implement these tests.

Key areas to test include request/response validation (status codes, data formats), authentication/authorization, rate limiting, error handling, and performance metrics (latency, throughput). Implement automated tests integrated into the CI/CD pipeline, including smoke tests for quick service health checks, and more thorough regression tests to ensure new changes don't break existing functionality. Also consider performance testing under load and security vulnerability scanning. Finally, monitoring is crucial: tools like Prometheus and Grafana give insight into API performance and help detect anomalies.
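The idea behind the contract testing mentioned above can be shown with a hand-rolled check. This is not Pact's API; it is a simplified sketch in which the consumer declares the fields and types it relies on, and the `ORDER_CONTRACT` fields and `contract_violations` helper are hypothetical.

```python
# Fields the consuming service relies on, with their expected Python types.
ORDER_CONTRACT = {"order_id": int, "status": str, "total": float}


def contract_violations(payload, contract):
    """Return a list of human-readable violations of contract found in payload."""
    problems = []
    for field, expected_type in contract.items():
        if field not in payload:
            problems.append(f"missing field: {field}")
        elif not isinstance(payload[field], expected_type):
            actual = type(payload[field]).__name__
            problems.append(f"{field}: expected {expected_type.__name__}, got {actual}")
    return problems
```

Extra fields in the provider's response are deliberately ignored, which lets the provider add data without breaking consumers; a dedicated tool adds contract sharing and verification on the provider side.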

2. Describe a time you identified a critical bug in a production API and how you resolved it.

During my work on an e-commerce platform, I noticed a significant increase in failed payment transactions reported by users. After checking the logs, I identified a bug in the payment processing API. Specifically, an unhandled exception was occurring when the API received currency symbols it wasn't explicitly coded to handle. This was happening because a recent update to the product catalog introduced products with currencies that weren't supported previously. To resolve this quickly, I implemented a try-except block to catch the exception, log the error for further analysis, and return a standardized error message to the user, preventing the application from crashing. Then, I added logic to correctly handle all the currencies specified in the product catalog.

3. What are the key performance indicators (KPIs) you would use to measure the success of API testing efforts?

Key Performance Indicators (KPIs) for API testing success include several metrics covering different aspects of quality and efficiency. Test Coverage (percentage of API endpoints and functionalities covered by tests), Defect Detection Rate (share of all known defects that were found before release), and Test Execution Time (time taken to execute the entire test suite) are important. Also, Defect Density (number of defects per API endpoint) helps assess the quality of specific APIs.

Further KPIs are Test Pass Rate (percentage of tests that pass), API Response Time (average time taken for an API to respond, indicating performance), Error Rate (percentage of API calls resulting in errors), and Security Vulnerabilities found during testing (e.g., using tools to check for OWASP vulnerabilities). Monitoring these KPIs provides a comprehensive view of the effectiveness of API testing.
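Several of these KPIs are simple ratios, so they can be computed directly from test run data. The function names and sample numbers below are illustrative assumptions, not a standard reporting format.

```python
def percentage(part, whole):
    """Return part as a percentage of whole, or 0.0 when whole is zero."""
    return 100.0 * part / whole if whole else 0.0


def endpoint_coverage(tested_endpoints, all_endpoints):
    """Percentage of known endpoints exercised by at least one test."""
    known = set(all_endpoints)
    return percentage(len(set(tested_endpoints) & known), len(known))


def defect_density(defect_count, endpoint_count):
    """Average number of defects per API endpoint."""
    return defect_count / endpoint_count if endpoint_count else 0.0


# Sample figures for one hypothetical test run.
pass_rate = percentage(45, 50)      # 45 of 50 tests passed
error_rate = percentage(3, 200)     # 3 of 200 API calls returned errors
coverage = endpoint_coverage(
    ["/users", "/orders"],
    ["/users", "/orders", "/payments", "/refunds"],
)
density = defect_density(6, 4)
```

Tracking these values per build, rather than as one-off numbers, makes trends such as a falling pass rate or rising error rate visible early.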

4. Explain your approach to testing asynchronous APIs (e.g., using message queues).