Fundamentals of Spark Core: Understanding Spark Core involves knowledge of the basic building blocks and execution model of Apache Spark, such as RDDs, transformations, and actions. This skill is necessary to develop efficient and scalable Spark applications.
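
The split between transformations and actions can be illustrated with a small sketch. This is plain Python rather than the Spark API: the `LazyDataset` class and its methods are hypothetical stand-ins that only mimic the idea that transformations record a plan and that an action is what triggers the work.

```python
class LazyDataset:
    """Toy stand-in for an RDD (hypothetical, not Spark's API)."""

    def __init__(self, source, steps=()):
        self._source = list(source)
        self._steps = tuple(steps)  # recorded transformations, in order

    # Transformations: return a new dataset and do no work yet.
    def map(self, fn):
        return LazyDataset(self._source, self._steps + (("map", fn),))

    def filter(self, pred):
        return LazyDataset(self._source, self._steps + (("filter", pred),))

    def _run(self):
        # Apply every recorded step to each item of the source.
        for item in self._source:
            keep = True
            for kind, fn in self._steps:
                if kind == "map":
                    item = fn(item)
                elif not fn(item):
                    keep = False
                    break
            if keep:
                yield item

    # Actions: execute the recorded plan and return a result.
    def collect(self):
        return list(self._run())

    def count(self):
        return sum(1 for _ in self._run())


calls = []  # records every time the mapped function really runs


def square(x):
    calls.append(x)
    return x * x


numbers = LazyDataset(range(1, 6))
evens_squared = numbers.map(square).filter(lambda x: x % 2 == 0)
work_before_action = len(calls)   # still 0: nothing has executed
result = evens_squared.collect()  # the action runs the whole plan
```

Here `work_before_action` stays at zero because `map` and `filter` only extend the plan; `square` is first called inside `collect()`.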

Developing and running Spark jobs (Java, Scala, Python): Developing and running Spark jobs requires proficiency in a language that Spark supports, such as Java, Scala, or Python. This skill is crucial for writing applications against the Spark APIs, performing data processing tasks, and leveraging Spark's distributed computing capabilities.

Spark Resilient Distributed Datasets (RDD): Spark RDDs are fundamental data structures in Spark that allow for distributed data processing and fault tolerance. Understanding RDDs is essential for efficient data manipulation, transformation, and parallel computing in Spark.
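
Fault tolerance in RDDs rests on lineage: a lost partition is recomputed from its source data and the recorded transformations instead of being restored from a replica. The sketch below models that idea in plain Python. `ToyRDD`, `make_partitions`, and the `cache` dictionary are hypothetical names for illustration only, and the "loss" is simulated by deleting one cached result.

```python
def make_partitions(data, n):
    """Split data into at most n contiguous chunks (assumes data is non-empty)."""
    data = list(data)
    size = -(-len(data) // n)  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


class ToyRDD:
    """Toy model of a partitioned dataset with lineage (not Spark's API)."""

    def __init__(self, source_partitions, lineage=()):
        self.source_partitions = source_partitions
        self.lineage = tuple(lineage)  # functions to apply, in order
        self.cache = {}                # partition index -> computed rows

    def map(self, fn):
        return ToyRDD(self.source_partitions, self.lineage + (fn,))

    def compute_partition(self, index):
        # Recompute from source + lineage whenever the result is missing.
        if index not in self.cache:
            rows = self.source_partitions[index]
            for fn in self.lineage:
                rows = [fn(r) for r in rows]
            self.cache[index] = rows
        return self.cache[index]

    def collect(self):
        out = []
        for i in range(len(self.source_partitions)):
            out.extend(self.compute_partition(i))
        return out


rdd = ToyRDD(make_partitions(range(8), 4)).map(lambda x: x + 1).map(lambda x: x * 10)
first = rdd.collect()

del rdd.cache[2]                      # simulate losing one computed partition
recovered = rdd.compute_partition(2)  # rebuilt from lineage alone
second = rdd.collect()
```

Only the missing partition is rebuilt; the other three are served from the cached results, and `second` matches `first`.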

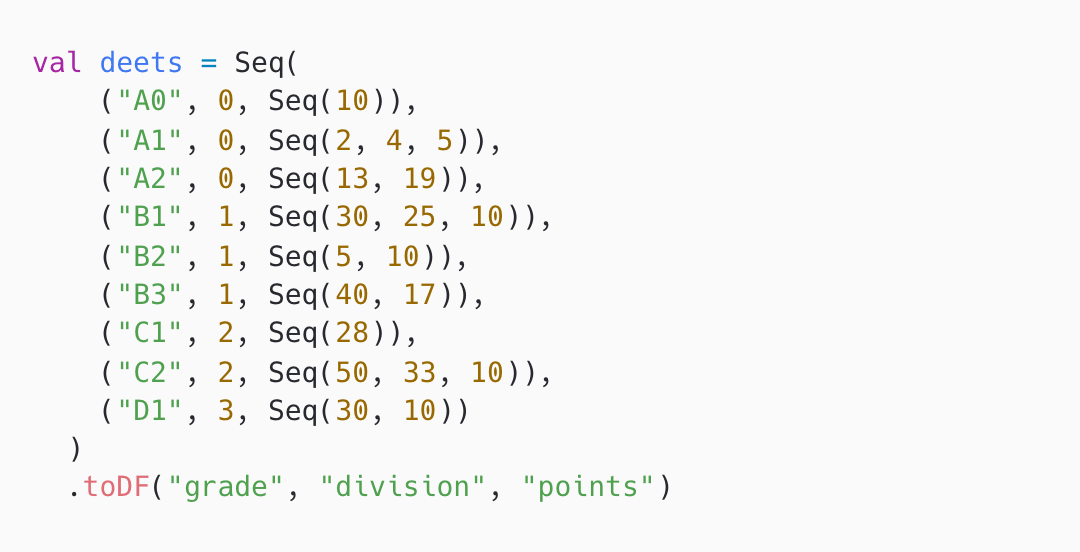

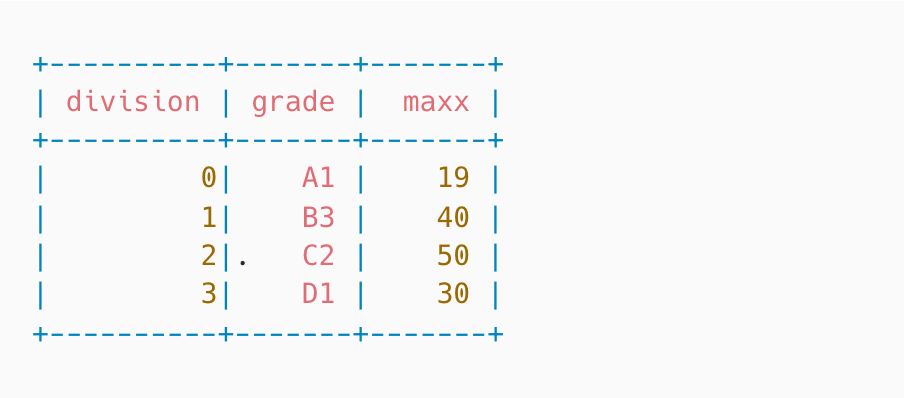

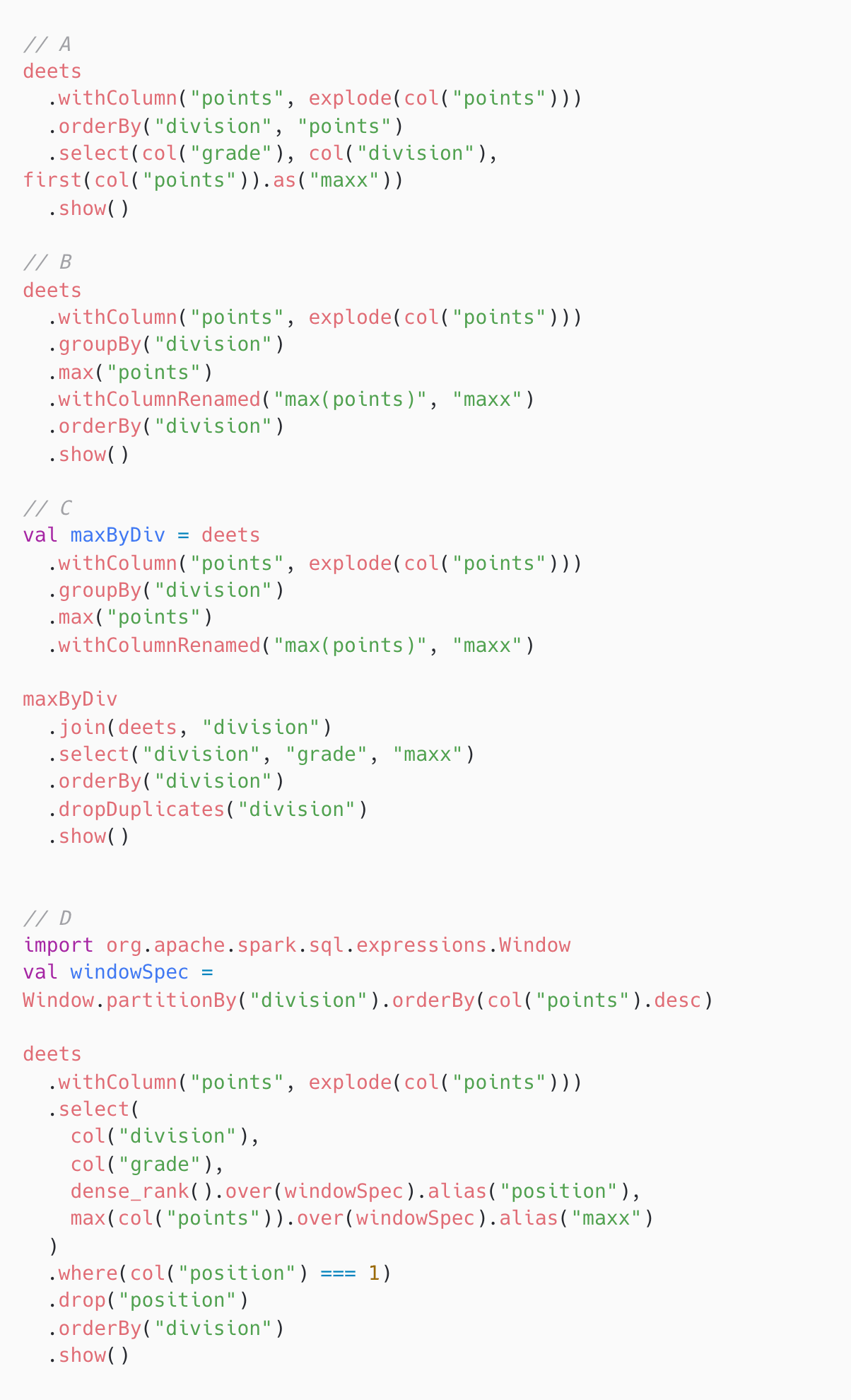

Data processing with Spark SQL: Spark SQL is a Spark module for querying structured and semi-structured data, either with SQL statements or through the DataFrame API. This skill is important for analyzing and processing structured data with SQL operations while benefiting from the optimizations applied by Spark SQL's Catalyst query optimizer.

DataFrames and Datasets: DataFrames and Datasets are higher-level abstractions built on top of RDDs in Spark. They organize data into named columns with a schema, which gives a more expressive and efficient way to work with structured and semi-structured data. Understanding DataFrames and Datasets is crucial for performing data manipulations, transformations, and aggregations efficiently in Spark.

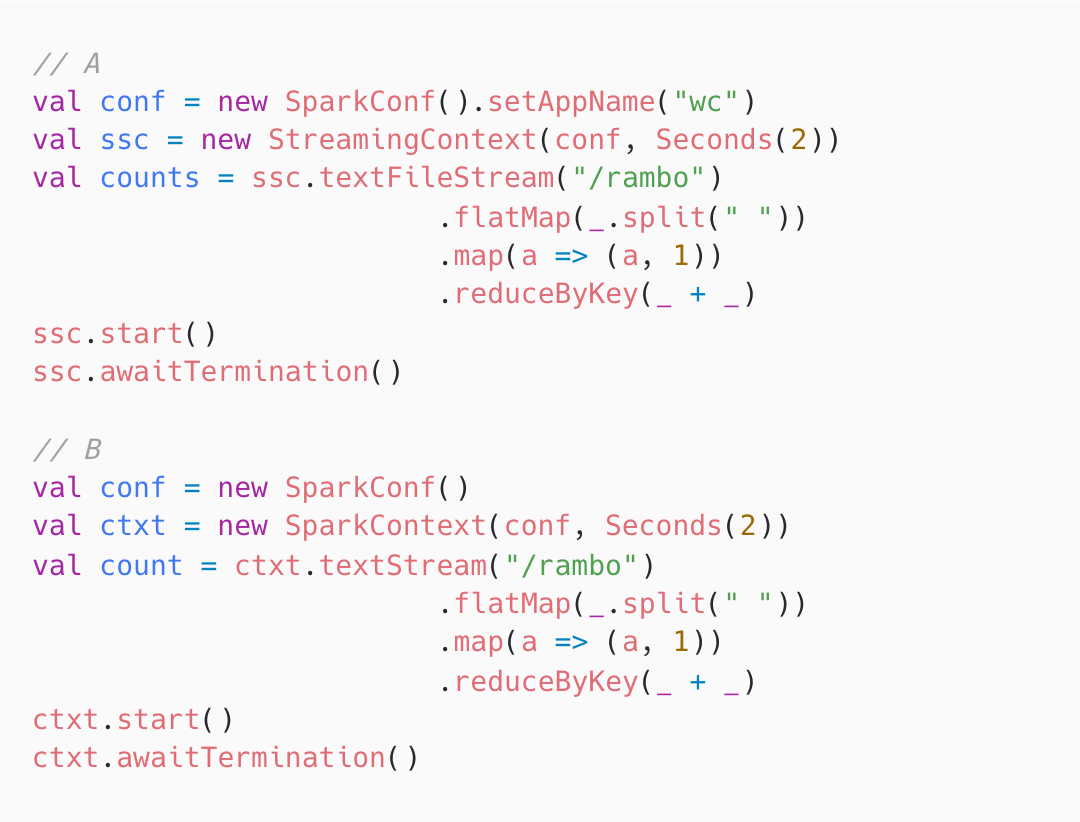

Spark Streaming to process real-time data: Spark Streaming is a scalable and fault-tolerant stream processing library in Spark that handles live data by dividing it into small batches (micro-batches) and processing each batch in turn. This skill is important for handling continuous streams of data and performing analytics on them, enabling applications to react to data changes in near real-time.
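
The micro-batch model behind Spark Streaming can be sketched in plain Python. This is not the Spark Streaming API: `micro_batches`, the sample `events`, and the one-second `batch_interval` are assumptions made for illustration. The sketch groups timestamped events into fixed-width intervals, processes each batch on its own, and keeps a running total across batches.

```python
from collections import Counter


def micro_batches(events, batch_interval):
    """Group (timestamp, value) events into consecutive fixed-width intervals.

    Intervals that contain no events are skipped in this toy version.
    """
    batches = {}
    for ts, value in events:
        batches.setdefault(int(ts // batch_interval), []).append(value)
    return [batches[k] for k in sorted(batches)]


# Hypothetical event stream: (seconds since start, log level)
events = [(0.2, "error"), (0.7, "ok"), (1.1, "ok"), (1.9, "error"), (2.5, "error")]

running = Counter()  # state carried across batches
per_batch = []       # result computed for each batch independently
for batch in micro_batches(events, batch_interval=1.0):
    counts = Counter(batch)
    per_batch.append(dict(counts))
    running.update(counts)
```

Each batch is an ordinary, finite collection, which is why batch-style operations can be reused on streaming data; latency is bounded below by the batch interval.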

Running Spark on a cluster: Running Spark on a cluster involves configuring and deploying Spark applications across a distributed cluster infrastructure. This skill is necessary to take advantage of Spark's distributed computing capabilities and ensure optimal performance and scalability.

Implementing iterative and multi-stage algorithms: Implementing iterative and multi-stage algorithms in Spark involves designing and optimizing algorithms that require multiple iterations or stages to achieve the desired output. This skill is important for tasks like machine learning and graph processing that often involve complex iterative and multi-stage computations.
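
PageRank is a common example of an iterative, multi-stage computation. The sketch below is a single-machine version in plain Python, not Spark code; the `pagerank` function, the three-page `links` graph, and the damping value of 0.85 are assumptions chosen for illustration, and every page is assumed to have at least one outgoing link. Each iteration has two stages: pages send contributions to their neighbours, then the contributions are combined into new ranks.

```python
def pagerank(links, iterations=20, damping=0.85):
    """Iterative PageRank over a dict of page -> list of outgoing links."""
    pages = sorted(links)
    n = len(pages)
    ranks = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Stage 1: every page splits its rank evenly among its out-links.
        contribs = {p: 0.0 for p in pages}
        for page, outs in links.items():
            for dest in outs:
                contribs[dest] += ranks[page] / len(outs)
        # Stage 2: combine contributions into the next round of ranks.
        ranks = {p: (1 - damping) / n + damping * contribs[p] for p in pages}
    return ranks


# Hypothetical link graph: a -> b, a -> c, b -> c, c -> a
links = {"a": ["b", "c"], "b": ["c"], "c": ["a"]}
ranks = pagerank(links)
top_page = max(ranks, key=ranks.get)
```

The `links` structure is read in every iteration while `ranks` is replaced each time. In a distributed setting that access pattern is the usual reason to keep the unchanging dataset cached in memory between iterations.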

Tuning and troubleshooting Spark jobs in a cluster: Tuning and troubleshooting Spark jobs in a cluster requires expertise in identifying and resolving performance issues, optimizing resource utilization, and ensuring fault tolerance. This skill is crucial to maximize the efficiency and reliability of Spark applications running on a distributed cluster.

Graph/network analysis with the GraphX library: GraphX is a graph computation library in Spark that provides an API for graph processing and analysis. Understanding GraphX is important for tasks like social network analysis, recommendation systems, and fraud detection that involve analyzing relationships and patterns in graph data.
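
A typical graph analysis task is finding connected components, where every vertex is labelled with the lowest vertex ID in its component. The sketch below shows the underlying idea as a plain Python label-propagation loop. It is not the GraphX API: `connected_components` and the sample `vertices` and `edges` are hypothetical, and edges are treated as undirected.

```python
def connected_components(vertices, edges):
    """Label each vertex with the smallest vertex ID reachable from it."""
    label = {v: v for v in vertices}  # every vertex starts as its own component
    changed = True
    while changed:
        changed = False
        for src, dst in edges:
            # Both endpoints of an edge adopt the smaller of their labels.
            low = min(label[src], label[dst])
            for v in (src, dst):
                if label[v] != low:
                    label[v] = low
                    changed = True
    return label


# Hypothetical graph: 1-2-3 form a chain, 4-5 are linked, 6 is isolated.
vertices = [1, 2, 3, 4, 5, 6]
edges = [(1, 2), (2, 3), (4, 5)]
components = connected_components(vertices, edges)
num_components = len(set(components.values()))
```

The loop stops once a full pass over the edges changes no label, at which point the two endpoints of every edge agree on a component.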

Migrating data from data sources/databases: Migrating data from data sources or databases to Spark involves understanding various data ingestion techniques, such as batch processing, streaming, and data connectors. This skill is necessary to efficiently transfer and process data from external sources in Spark for further analysis and computation.